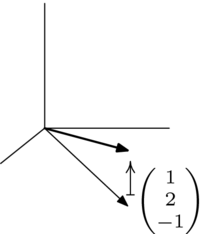

Linear Algebra/Print version/Part 2


Chapter III - Maps Between Spaces

Section I - Isomorphisms

In the examples following the definition of a vector space we developed the intuition that some spaces are "the same" as others. For instance, the space of two-tall column vectors and the space of two-wide row vectors are not equal because their elements—column vectors and row vectors—are not equal, but we have the idea that these spaces differ only in how their elements appear. We will now make this idea precise.

This section illustrates a common aspect of a mathematical investigation. With the help of some examples, we've gotten an idea. We will next give a formal definition, and then we will produce some results backing our contention that the definition captures the idea. We've seen this happen already, for instance, in the first section of the Vector Space chapter. There, the study of linear systems led us to consider collections closed under linear combinations. We defined such a collection as a vector space, and we followed it with some supporting results.

Of course, that definition wasn't an end point; instead, it led to new insights such as the idea of a basis. Here too, after producing a definition and supporting it, we will get two surprises (pleasant ones). First, we will find that the definition applies to some unforeseen, and interesting, cases. Second, the study of the definition will lead to new ideas. In this way, our investigation will build momentum.

1 - Definition and Examples

We start with two examples that suggest the right definition.

- Example 1.1

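Consider the example mentioned above, the space of two-wide row vectors and the space of two-tall column vectors. They are "the same" in that if we associate the vectors that have the same components, e.g.,

$$\begin{pmatrix}1&2\end{pmatrix}\;\longleftrightarrow\;\begin{pmatrix}1\\2\end{pmatrix}$$

then this correspondence preserves the operations, for instance this addition

$$\begin{pmatrix}1&2\end{pmatrix}+\begin{pmatrix}3&4\end{pmatrix}=\begin{pmatrix}4&6\end{pmatrix}\;\longleftrightarrow\;\begin{pmatrix}1\\2\end{pmatrix}+\begin{pmatrix}3\\4\end{pmatrix}=\begin{pmatrix}4\\6\end{pmatrix}$$

and this scalar multiplication.

$$5\cdot\begin{pmatrix}1&2\end{pmatrix}=\begin{pmatrix}5&10\end{pmatrix}\;\longleftrightarrow\;5\cdot\begin{pmatrix}1\\2\end{pmatrix}=\begin{pmatrix}5\\10\end{pmatrix}$$

More generally stated, under the correspondence

$$\begin{pmatrix}a_0&a_1\end{pmatrix}\;\longleftrightarrow\;\begin{pmatrix}a_0\\a_1\end{pmatrix}$$

both operations are preserved:

$$\begin{pmatrix}a_0&a_1\end{pmatrix}+\begin{pmatrix}b_0&b_1\end{pmatrix}=\begin{pmatrix}a_0+b_0&a_1+b_1\end{pmatrix}\;\longleftrightarrow\;\begin{pmatrix}a_0\\a_1\end{pmatrix}+\begin{pmatrix}b_0\\b_1\end{pmatrix}=\begin{pmatrix}a_0+b_0\\a_1+b_1\end{pmatrix}$$

and

$$r\cdot\begin{pmatrix}a_0&a_1\end{pmatrix}=\begin{pmatrix}ra_0&ra_1\end{pmatrix}\;\longleftrightarrow\;r\cdot\begin{pmatrix}a_0\\a_1\end{pmatrix}=\begin{pmatrix}ra_0\\ra_1\end{pmatrix}$$

(all of the variables are real numbers).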

- Example 1.2

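Another two spaces we can think of as "the same" are $\mathcal{P}_2$, the space of quadratic polynomials, and $\mathbb{R}^3$. A natural correspondence is this.

$$a_0+a_1x+a_2x^2\;\longleftrightarrow\;\begin{pmatrix}a_0\\a_1\\a_2\end{pmatrix}$$

The structure is preserved: corresponding elements add in a corresponding way

$$(a_0+a_1x+a_2x^2)+(b_0+b_1x+b_2x^2)=(a_0+b_0)+(a_1+b_1)x+(a_2+b_2)x^2\;\longleftrightarrow\;\begin{pmatrix}a_0\\a_1\\a_2\end{pmatrix}+\begin{pmatrix}b_0\\b_1\\b_2\end{pmatrix}=\begin{pmatrix}a_0+b_0\\a_1+b_1\\a_2+b_2\end{pmatrix}$$

and scalar multiplication corresponds also.

$$r\cdot(a_0+a_1x+a_2x^2)=(ra_0)+(ra_1)x+(ra_2)x^2\;\longleftrightarrow\;r\cdot\begin{pmatrix}a_0\\a_1\\a_2\end{pmatrix}=\begin{pmatrix}ra_0\\ra_1\\ra_2\end{pmatrix}$$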
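The correspondence in Example 1.2 can be spot-checked mechanically. In this minimal sketch (an illustration, not part of the original text) a quadratic is stored as a dictionary from exponent to coefficient and a member of $\mathbb{R}^3$ as a tuple; the helper names `poly`, `poly_add`, `vec_add`, and `corr` are hypothetical. The two representations hold the same three numbers laid out differently, which is the point of the example.

```python
def poly(a0, a1, a2):
    """The quadratic a0 + a1*x + a2*x^2, stored as {exponent: coefficient}."""
    return {0: a0, 1: a1, 2: a2}

def poly_add(p, q):
    """Add two quadratics coefficient by coefficient."""
    return {k: p[k] + q[k] for k in range(3)}

def poly_scale(r, p):
    """Multiply every coefficient of a quadratic by the scalar r."""
    return {k: r * p[k] for k in range(3)}

def vec_add(u, v):
    """Componentwise sum of two vectors in R^3 (stored as tuples)."""
    return tuple(a + b for a, b in zip(u, v))

def vec_scale(r, v):
    """Scalar multiple of a vector in R^3."""
    return tuple(r * a for a in v)

def corr(p):
    """The natural correspondence a0 + a1*x + a2*x^2 -> (a0, a1, a2)."""
    return (p[0], p[1], p[2])

# Spot-check that the correspondence preserves both operations.
p, q = poly(1, 2, 3), poly(4, -1, 0)
assert corr(poly_add(p, q)) == vec_add(corr(p), corr(q))
assert corr(poly_scale(5, p)) == vec_scale(5, corr(p))
```

A passing check on a few inputs is evidence, not a proof; the general computation above is what establishes the claim.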

- Definition 1.3

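An isomorphism between two vector spaces $V$ and $W$ is a map $f\colon V\to W$ that

- is a correspondence: $f$ is one-to-one and onto;[1]

- preserves structure: if $\vec v_1,\vec v_2\in V$ then $f(\vec v_1+\vec v_2)=f(\vec v_1)+f(\vec v_2)$, and if $\vec v\in V$ and $r\in\mathbb{R}$ then $f(r\vec v)=r\,f(\vec v)$

(we write $V\cong W$, read "$V$ is isomorphic to $W$", when such a map exists).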

("Morphism" means map, so "isomorphism" means a map expressing sameness.)

- Example 1.4

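The vector space $G=\{c_1\cos\theta+c_2\sin\theta \mid c_1,c_2\in\mathbb{R}\}$ of functions of $\theta$ is isomorphic to the vector space $\mathbb{R}^2$ under this map.

$$c_1\cos\theta+c_2\sin\theta\;\stackrel{f}{\longmapsto}\;\begin{pmatrix}c_1\\c_2\end{pmatrix}$$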

We will check this by going through the conditions in the definition.

We will first verify condition 1, that the map is a correspondence between the sets underlying the spaces.

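To establish that $f$ is one-to-one, we must prove that $f(\vec a)=f(\vec b)$ only when $\vec a=\vec b$. If

$$f(a_1\cos\theta+a_2\sin\theta)=f(b_1\cos\theta+b_2\sin\theta)$$

then, by the definition of $f$,

$$\begin{pmatrix}a_1\\a_2\end{pmatrix}=\begin{pmatrix}b_1\\b_2\end{pmatrix}$$

from which we can conclude that $a_1=b_1$ and $a_2=b_2$ because column vectors are equal only when they have equal components. We've proved that $f(\vec a)=f(\vec b)$ implies that $\vec a=\vec b$, which shows that $f$ is one-to-one.

To check that $f$ is onto we must check that any member of the codomain $\mathbb{R}^2$ is the image of some member of the domain $G$. But that's clear: any

$$\begin{pmatrix}x\\y\end{pmatrix}\in\mathbb{R}^2$$

is the image under $f$ of $x\cos\theta+y\sin\theta\in G$.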

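Next we will verify condition (2), that $f$ preserves structure.

This computation shows that $f$ preserves addition.

$$\begin{aligned}
f\bigl((a_1\cos\theta+a_2\sin\theta)+(b_1\cos\theta+b_2\sin\theta)\bigr) &= f\bigl((a_1+b_1)\cos\theta+(a_2+b_2)\sin\theta\bigr)\\
&= \begin{pmatrix}a_1+b_1\\a_2+b_2\end{pmatrix}
 = \begin{pmatrix}a_1\\a_2\end{pmatrix}+\begin{pmatrix}b_1\\b_2\end{pmatrix}\\
&= f(a_1\cos\theta+a_2\sin\theta)+f(b_1\cos\theta+b_2\sin\theta)
\end{aligned}$$

A similar computation shows that $f$ preserves scalar multiplication.

$$\begin{aligned}
f\bigl(r\cdot(a_1\cos\theta+a_2\sin\theta)\bigr) &= f(ra_1\cos\theta+ra_2\sin\theta)\\
&= \begin{pmatrix}ra_1\\ra_2\end{pmatrix} = r\cdot\begin{pmatrix}a_1\\a_2\end{pmatrix}\\
&= r\cdot f(a_1\cos\theta+a_2\sin\theta)
\end{aligned}$$

With that, conditions (1) and (2) are verified, so we know that $f$ is an isomorphism and we can say that the spaces are isomorphic: $G\cong\mathbb{R}^2$.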
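Here is a small numerical illustration, taking the space of Example 1.4 to be $G=\{c_1\cos\theta+c_2\sin\theta\}$ with $f$ sending $c_1\cos\theta+c_2\sin\theta$ to $(c_1,c_2)$. It is a sketch under the assumption that each member of $G$ is handed to us as a Python function of $\theta$; the names `member_of_G`, `add`, `scale`, and `close` are hypothetical. Because $\cos 0=1$, $\sin 0=0$, $\cos(\pi/2)=0$, and $\sin(\pi/2)=1$, evaluating at $\theta=0$ and $\theta=\pi/2$ reads off the two coefficients.

```python
import math

def member_of_G(c1, c2):
    """The function theta -> c1*cos(theta) + c2*sin(theta), an element of G."""
    return lambda theta: c1 * math.cos(theta) + c2 * math.sin(theta)

def f(g):
    """Send an element of G to its coefficient pair in R^2.

    Only meaningful for members of G: evaluating at 0 and pi/2 recovers
    (c1, c2), up to floating-point rounding.
    """
    return (g(0.0), g(math.pi / 2))

def add(g, h):
    """Pointwise sum of two functions (the addition in G)."""
    return lambda theta: g(theta) + h(theta)

def scale(r, g):
    """Pointwise scalar multiple of a function (the scalar multiplication in G)."""
    return lambda theta: r * g(theta)

def close(u, v, tol=1e-9):
    """Compare two pairs of floats up to a small tolerance."""
    return all(abs(a - b) <= tol for a, b in zip(u, v))

g, h = member_of_G(3.0, -2.0), member_of_G(0.5, 4.0)
assert close(f(add(g, h)), tuple(a + b for a, b in zip(f(g), f(h))))
assert close(f(scale(7.0, g)), tuple(7.0 * a for a in f(g)))
```

The tolerance in `close` is there because `math.cos(math.pi / 2)` is a tiny nonzero float rather than exactly zero.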

- Example 1.5

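Let $V$ be the space $\{c_1x+c_2y+c_3z \mid c_1,c_2,c_3\in\mathbb{R}\}$ of linear combinations of three variables $x$, $y$, and $z$, under the natural addition and scalar multiplication operations. Then $V$ is isomorphic to $\mathcal{P}_2$, the space of quadratic polynomials.

To show this we will produce an isomorphism map. There is more than one possibility; for instance, here are four.

$$\begin{aligned}
c_1x+c_2y+c_3z &\stackrel{f_1}{\longmapsto} c_1+c_2x+c_3x^2\\
c_1x+c_2y+c_3z &\stackrel{f_2}{\longmapsto} c_2+c_3x+c_1x^2\\
c_1x+c_2y+c_3z &\stackrel{f_3}{\longmapsto} -c_1-c_2x-c_3x^2\\
c_1x+c_2y+c_3z &\stackrel{f_4}{\longmapsto} c_1+(c_1+c_2)x+(c_1+c_3)x^2
\end{aligned}$$

The first map is the more natural correspondence in that it just carries the coefficients over. However, below we shall verify that the second one is an isomorphism, to underline that there are isomorphisms other than just the obvious one (showing that $f_1$ is an isomorphism is Problem 3).

To show that $f_2$ is one-to-one, we will prove that if $f_2(c_1x+c_2y+c_3z)=f_2(d_1x+d_2y+d_3z)$ then $c_1x+c_2y+c_3z=d_1x+d_2y+d_3z$. The assumption gives, by the definition of $f_2$, that $c_2+c_3x+c_1x^2=d_2+d_3x+d_1x^2$. Equal polynomials have equal coefficients, so $c_2=d_2$, $c_3=d_3$, and $c_1=d_1$. Thus $f_2(c_1x+c_2y+c_3z)=f_2(d_1x+d_2y+d_3z)$ implies that $c_1x+c_2y+c_3z=d_1x+d_2y+d_3z$ and therefore $f_2$ is one-to-one.

The map $f_2$ is onto because any member $a+bx+cx^2$ of the codomain is the image of some member of the domain, namely it is the image of $cx+ay+bz$. For instance, $2+3x-4x^2$ is $f_2(-4x+2y+3z)$.

The computations for structure preservation are like those in the prior example. This map preserves addition

$$\begin{aligned}
f_2\bigl((c_1x+c_2y+c_3z)+(d_1x+d_2y+d_3z)\bigr) &= f_2\bigl((c_1+d_1)x+(c_2+d_2)y+(c_3+d_3)z\bigr)\\
&= (c_2+d_2)+(c_3+d_3)x+(c_1+d_1)x^2\\
&= (c_2+c_3x+c_1x^2)+(d_2+d_3x+d_1x^2)\\
&= f_2(c_1x+c_2y+c_3z)+f_2(d_1x+d_2y+d_3z)
\end{aligned}$$

and scalar multiplication.

$$\begin{aligned}
f_2\bigl(r\cdot(c_1x+c_2y+c_3z)\bigr) &= f_2(rc_1x+rc_2y+rc_3z)\\
&= rc_2+rc_3x+rc_1x^2\\
&= r\cdot(c_2+c_3x+c_1x^2)\\
&= r\cdot f_2(c_1x+c_2y+c_3z)
\end{aligned}$$

Thus $f_2$ is an isomorphism and we write $V\cong\mathcal{P}_2$.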
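The argument for the second map of Example 1.5 can be mirrored on coordinates. This sketch takes that map to be $f_2\colon c_1x+c_2y+c_3z\mapsto c_2+c_3x+c_1x^2$, stores $c_1x+c_2y+c_3z$ as the triple `(c1, c2, c3)` and $a_0+a_1x+a_2x^2$ as `(a0, a1, a2)`; `f2_inverse` and `combo` are hypothetical helper names. The inverse encodes the onto argument: $a+bx+cx^2$ is the image of $cx+ay+bz$.

```python
# An element c1*x + c2*y + c3*z of V is stored as the triple (c1, c2, c3);
# a quadratic a0 + a1*x + a2*x^2 of P_2 is stored as (a0, a1, a2).

def f2(v):
    """f2: c1*x + c2*y + c3*z -> c2 + c3*x + c1*x^2."""
    c1, c2, c3 = v
    return (c2, c3, c1)

def f2_inverse(p):
    """The preimage of a + b*x + c*x^2 under f2, namely c*x + a*y + b*z."""
    a, b, c = p
    return (c, a, b)

def combo(r, u, s, w):
    """The linear combination r*u + s*w of two coordinate triples."""
    return tuple(r * a + s * b for a, b in zip(u, w))

u, w = (1, 2, 3), (-4, 0, 5)
# Structure: f2 preserves a two-term linear combination.
assert f2(combo(2, u, -3, w)) == combo(2, f2(u), -3, f2(w))
# Correspondence: f2_inverse undoes f2 in both orders.
assert f2_inverse(f2(u)) == u and f2(f2_inverse(u)) == u
```

Having a two-sided inverse is one compact way to see that $f_2$ is both one-to-one and onto.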

We are sometimes interested in an isomorphism of a space with itself, called an automorphism. An identity map is an automorphism. The next two examples show that there are others.

- Example 1.6

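A dilation map $d_s\colon\mathbb{R}^2\to\mathbb{R}^2$ that multiplies all vectors by a nonzero scalar $s$ is an automorphism of $\mathbb{R}^2$.

A rotation or turning map $t_\theta\colon\mathbb{R}^2\to\mathbb{R}^2$ that rotates all vectors through an angle $\theta$ is an automorphism.

A third type of automorphism of $\mathbb{R}^2$ is a map $f_\ell\colon\mathbb{R}^2\to\mathbb{R}^2$ that flips or reflects all vectors over a line $\ell$ through the origin.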

See Problem 20.
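As a numerical illustration for the rotation map (not a proof; Problem 20 asks for that), the sketch below assumes rotation is counterclockwise and uses the standard formula $(x,y)\mapsto(x\cos\theta-y\sin\theta,\;x\sin\theta+y\cos\theta)$. The helper `close` is hypothetical and absorbs floating-point rounding.

```python
import math

def rotate(theta, v):
    """Rotate the vector v = (x, y) counterclockwise through the angle theta."""
    x, y = v
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y, s * x + c * y)

def close(u, v, tol=1e-9):
    """Compare two pairs of floats up to a small tolerance."""
    return all(abs(a - b) <= tol for a, b in zip(u, v))

theta = 0.7
v1, v2 = (1.0, 2.0), (-3.0, 0.5)

# Structure: rotation preserves the linear combination 2*v1 - 5*v2.
combo = tuple(2 * a - 5 * b for a, b in zip(v1, v2))
lhs = rotate(theta, combo)
rhs = tuple(2 * a - 5 * b for a, b in zip(rotate(theta, v1), rotate(theta, v2)))
assert close(lhs, rhs)

# Correspondence: rotating back through -theta undoes the rotation.
assert close(rotate(-theta, rotate(theta, v1)), v1)
```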

- Example 1.7

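Consider the space $\mathcal{P}_5$ of polynomials of degree 5 or less and the map $f$ that sends a polynomial $p(x)$ to $p(x-1)$. For instance, under this map $x^2\mapsto(x-1)^2=x^2-2x+1$ and $x^3+2x\mapsto(x-1)^3+2(x-1)=x^3-3x^2+5x-3$. This map is an automorphism of this space; the check is Problem 12.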

This isomorphism of $\mathcal{P}_5$ with itself does more than just tell us that the space is "the same" as itself. It gives us some insight into the space's structure. For instance, below is shown a family of parabolas, graphs of members of $\mathcal{P}_5$. Each has a vertex at , and the left-most one has zeroes at and , the next one has zeroes at and , etc.

Geometrically, the substitution of $x-1$ for $x$ in any function's argument shifts its graph to the right by one. Thus, $f$ sends each parabola in the family to its neighbor on the right; that is, $f$'s action is to shift all of the parabolas to the right by one. Notice that the picture before $f$ is applied is the same as the picture after $f$ is applied, because while each parabola moves to the right, another one comes in from the left to take its place. This also holds true for cubics, etc. So the automorphism $f$ gives us the insight that $\mathcal{P}_5$ has a certain horizontal homogeneity; this space looks the same near $x=1$ as near $x=0$.
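The substitution $p(x)\mapsto p(x-1)$ of Example 1.7 can be computed on coefficient lists with the binomial theorem, $(x-h)^k=\sum_j\binom{k}{j}x^j(-h)^{k-j}$. In this sketch a member of $\mathcal{P}_5$ is assumed to be stored as the list `[a0, a1, ..., a5]`; the function name `shift` and its extra parameter `h` are hypothetical (the example itself uses $h=1$).

```python
from math import comb

def shift(p, h=1):
    """Coefficients of p(x - h), given the coefficient list [a0, ..., an] of p(x).

    Expands each a_k * (x - h)^k with the binomial theorem.
    """
    out = [0] * len(p)
    for k, a in enumerate(p):
        for j in range(k + 1):
            out[j] += a * comb(k, j) * (-h) ** (k - j)
    return out

x_squared = [0, 0, 1, 0, 0, 0]   # x^2 as a member of P_5
print(shift(x_squared))          # → [1, -2, 1, 0, 0, 0], that is, x^2 - 2x + 1

# Shifting by -1 (p(x) -> p(x + 1)) undoes the shift, one way to see
# that the map is one-to-one and onto.
p = [7, -1, 0, 2, 0, 4]
assert shift(shift(p, 1), -1) == p
```

The output never has more coefficients than the input, which reflects that substituting $x-1$ does not raise the degree, so the map stays inside $\mathcal{P}_5$.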

As described in the preamble to this section, we will next produce some results supporting the contention that the definition of isomorphism above captures our intuition of vector spaces being the same.

Of course the definition itself is persuasive: a vector space consists of two components, a set and some structure, and the definition simply requires that the sets correspond and that the structures correspond also. Also persuasive are the examples above. In particular, Example 1.1, which gives an isomorphism between the space of two-wide row vectors and the space of two-tall column vectors, dramatizes our intuition that isomorphic spaces are the same in all relevant respects. Sometimes people say, where $V\cong W$, that "$W$ is just $V$ painted green": any differences are merely cosmetic.

Further support for the definition, in case it is needed, is provided by the following results that, taken together, suggest that all the things of interest in a vector space correspond under an isomorphism. Since we studied vector spaces to study linear combinations, "of interest" means "pertaining to linear combinations". Not of interest is the way that the vectors are presented typographically (or their color!).

As an example, although the definition of isomorphism doesn't explicitly say that the zero vectors must correspond, it is a consequence of that definition.

- Lemma 1.8

An isomorphism maps a zero vector to a zero vector.

- Proof

Where $f\colon V\to W$ is an isomorphism, fix any $\vec v\in V$. Then $f(\vec 0_V)=f(0\cdot\vec v)=0\cdot f(\vec v)=\vec 0_W$.

The definition of isomorphism requires that sums of two vectors correspond and that so do scalar multiples. We can extend that to say that all linear combinations correspond.

- Lemma 1.9

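For any map $f\colon V\to W$ between vector spaces these statements are equivalent.

- $f$ preserves structure: $f(\vec v_1+\vec v_2)=f(\vec v_1)+f(\vec v_2)$ and $f(c\vec v)=c\,f(\vec v)$

- $f$ preserves linear combinations of two vectors: $f(c_1\vec v_1+c_2\vec v_2)=c_1f(\vec v_1)+c_2f(\vec v_2)$

- $f$ preserves linear combinations of any finite number of vectors: $f(c_1\vec v_1+\dots+c_n\vec v_n)=c_1f(\vec v_1)+\dots+c_nf(\vec v_n)$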

- Proof

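Since the implications (3) $\Rightarrow$ (2) and (2) $\Rightarrow$ (1) are clear, we need only show that (1) $\Rightarrow$ (3). Assume statement 1. We will prove statement 3 by induction on the number of summands $n$.

The one-summand base case, that $f(c\vec v_1)=c\,f(\vec v_1)$, is covered by the assumption of statement 1.

For the inductive step assume that statement 3 holds whenever there are $k$ or fewer summands, that is, whenever $n=1$, or $n=2$, ..., or $n=k$. Consider the $(k+1)$-summand case. The first half of statement 1 gives

$$f(c_1\vec v_1+\dots+c_k\vec v_k+c_{k+1}\vec v_{k+1})=f(c_1\vec v_1+\dots+c_k\vec v_k)+f(c_{k+1}\vec v_{k+1})$$

by breaking the sum along the final "$+$". Then the inductive hypothesis lets us break up the $k$-term sum.

$$=f(c_1\vec v_1)+\dots+f(c_k\vec v_k)+f(c_{k+1}\vec v_{k+1})$$

Finally, the second half of statement 1 gives

$$=c_1f(\vec v_1)+\dots+c_kf(\vec v_k)+c_{k+1}f(\vec v_{k+1})$$

when applied $k+1$ times.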

In addition to adding to the intuition that the definition of isomorphism does indeed preserve the things of interest in a vector space, that lemma's second item is an especially handy way of checking that a map preserves structure.
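Item 2 of the lemma also suggests a quick computational sanity check. The sketch below (a hypothetical helper, `preserves_two_term_combinations`, for maps on $\mathbb{R}^n$ with vectors stored as tuples) tests $f(c_1\vec v_1+c_2\vec v_2)=c_1f(\vec v_1)+c_2f(\vec v_2)$ on random integer data. Integer inputs keep the comparison exact. A `False` result exhibits a genuine failure; a `True` result is only evidence, never a proof, that the map preserves structure.

```python
import random

def preserves_two_term_combinations(f, dim, trials=200, seed=0):
    """Spot-check f(c1*v1 + c2*v2) == c1*f(v1) + c2*f(v2) on random integer inputs."""
    rng = random.Random(seed)
    for _ in range(trials):
        c1, c2 = rng.randint(-9, 9), rng.randint(-9, 9)
        v1 = tuple(rng.randint(-9, 9) for _ in range(dim))
        v2 = tuple(rng.randint(-9, 9) for _ in range(dim))
        lhs = f(tuple(c1 * a + c2 * b for a, b in zip(v1, v2)))
        rhs = tuple(c1 * a + c2 * b for a, b in zip(f(v1), f(v2)))
        if lhs != rhs:
            return False  # a concrete counterexample was found
    return True

def swap(v):
    """Exchange the two components of a vector in R^2."""
    return (v[1], v[0])

def shift_up(v):
    """Add 1 to the second component; this does not fix the zero vector."""
    return (v[0], v[1] + 1)

print(preserves_two_term_combinations(swap, 2))      # True
print(preserves_two_term_combinations(shift_up, 2))  # False
```

The second map fails for the reason given in Lemma 1.8: a structure-preserving map must send the zero vector to the zero vector.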

We close with a summary. The material in this section augments the chapter on Vector Spaces. There, after giving the definition of a vector space, we informally looked at what different things can happen. Here, we defined the relation "$\cong$" between vector spaces and we have argued that it is the right way to split the collection of vector spaces into cases because it preserves the features of interest in a vector space; in particular, it preserves linear combinations. That is, we have now said precisely what we mean by "the same", and by "different", and so we have precisely classified the vector spaces.

Exercises

- This exercise is recommended for all readers.

- Problem 1

Verify, using Example 1.4 as a model, that the two correspondences given before the definition are isomorphisms.

- This exercise is recommended for all readers.

- Problem 2

For the map given by

Find the image of each of these elements of the domain.

Show that this map is an isomorphism.

- Problem 3

Show that the natural map $f_1$ from Example 1.5 is an isomorphism.

- This exercise is recommended for all readers.

- Problem 4

Decide whether each map is an isomorphism (if it is an isomorphism then prove it and if it isn't then state a condition that it fails to satisfy).

- given by

- given by

- given by

- given by

- Problem 5

Show that the map given by is one-to-one and onto. Is it an isomorphism?

- This exercise is recommended for all readers.

- Problem 6

Refer to Example 1.1. Produce two more isomorphisms (of course, that they satisfy the conditions in the definition of isomorphism must be verified).

- Problem 7

Refer to Example 1.2. Produce two more isomorphisms (and verify that they satisfy the conditions).

- This exercise is recommended for all readers.

- Problem 8

Show that, although $\mathbb{R}^2$ is not itself a subspace of $\mathbb{R}^3$, it is isomorphic to the $xy$-plane subspace of $\mathbb{R}^3$.

- Problem 9

Find two isomorphisms between and .

- This exercise is recommended for all readers.

- Problem 10

For what is isomorphic to ?

- Problem 11

For what is isomorphic to ?

- Problem 12

Prove that the map in Example 1.7, from $\mathcal{P}_5$ to $\mathcal{P}_5$ given by $p(x)\mapsto p(x-1)$, is a vector space isomorphism.

- Problem 13

Why, in Lemma 1.8, must there be a $\vec v\in V$? That is, why must $V$ be nonempty?

- Problem 14

Are any two trivial spaces isomorphic?

- Problem 15

In the proof of Lemma 1.9, what about the zero-summands case (that is, if $n$ is zero)?

- Problem 16

Show that any isomorphism has the form for some nonzero real number .

- This exercise is recommended for all readers.

- Problem 17

These prove that isomorphism is an equivalence relation.

- Show that the identity map $\mathrm{id}\colon V\to V$ is an isomorphism. Thus, any vector space is isomorphic to itself.

- Show that if $f\colon V\to W$ is an isomorphism then so is its inverse $f^{-1}\colon W\to V$. Thus, if $V$ is isomorphic to $W$ then also $W$ is isomorphic to $V$.

- Show that a composition of isomorphisms is an isomorphism: if $f\colon V\to W$ is an isomorphism and $g\colon W\to U$ is an isomorphism then so also is $g\circ f\colon V\to U$. Thus, if $V$ is isomorphic to $W$ and $W$ is isomorphic to $U$, then also $V$ is isomorphic to $U$.

- Problem 18

Suppose that $f\colon V\to W$ preserves structure. Show that $f$ is one-to-one if and only if the unique member of $V$ mapped by $f$ to $\vec 0_W$ is $\vec 0_V$.

- Problem 19

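Suppose that $f\colon V\to W$ is an isomorphism. Prove that the set $\{\vec v_1,\dots,\vec v_k\}\subseteq V$ is linearly dependent if and only if the set of images $\{f(\vec v_1),\dots,f(\vec v_k)\}\subseteq W$ is linearly dependent.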

- This exercise is recommended for all readers.

- Problem 20

Show that each type of map from Example 1.6 is an automorphism.

- Dilation $d_s$ by a nonzero scalar $s$.

- Rotation $t_\theta$ through an angle $\theta$.

- Reflection $f_\ell$ over a line $\ell$ through the origin.

Hint. For the second and third items, polar coordinates are useful.

- Problem 21

Produce an automorphism of other than the identity map, and other than a shift map .

- Problem 22

- Show that a function is an automorphism if and only if it has the form for some .

- Let be an automorphism of such that . Find .

- Show that a function is an automorphism if and only if it has the form

- Let be an automorphism of with

- Problem 24

We show that isomorphisms can be tailored to fit in that, sometimes, given vectors in the domain and in the range we can produce an isomorphism associating those vectors.

- Let be a basis for so that any has a unique representation as , which we denote in this way.

- Show that this function is one-to-one and onto.

- Show that it preserves structure.

- Produce an isomorphism from to

that fits these specifications.

- Problem 25

Prove that a space is $n$-dimensional if and only if it is isomorphic to $\mathbb{R}^n$. Hint. Fix a basis $B$ for the space and consider the map sending a vector over to its representation with respect to $B$.

- Problem 26

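(Requires the subsection on Combining Subspaces, which is optional.) Let $U$ and $W$ be vector spaces. Define a new vector space, consisting of the set $U\times W=\{(\vec u,\vec w)\mid \vec u\in U\text{ and }\vec w\in W\}$ along with these operations.

$$(\vec u_1,\vec w_1)+(\vec u_2,\vec w_2)=(\vec u_1+\vec u_2,\;\vec w_1+\vec w_2)\qquad\text{and}\qquad r\cdot(\vec u,\vec w)=(r\vec u,\;r\vec w)$$

This is a vector space, the external direct sum of $U$ and $W$.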

- Check that it is a vector space.

- Find a basis for, and the dimension of, the external direct sum .

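- What is the relationship among $\dim(U)$, $\dim(W)$, and $\dim(U\times W)$?

- Suppose that $U$ and $W$ are subspaces of a vector space $V$ such that $V=U\oplus W$ (in this case we say that $V$ is the internal direct sum of $U$ and $W$). Show that the map $f\colon U\times W\to V$ given by $(\vec u,\vec w)\mapsto\vec u+\vec w$ is an isomorphism.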

2 - Dimension Characterizes Isomorphism

In the prior subsection, after stating the definition of an isomorphism, we gave some results supporting the intuition that such a map describes spaces as "the same". Here we will formalize this intuition. While two spaces that are isomorphic are not equal, we think of them as almost equal— as equivalent. In this subsection we shall show that the relationship "is isomorphic to" is an equivalence relation.[2]

- Theorem 2.1

Isomorphism is an equivalence relation between vector spaces.

- Proof

We must prove that this relation has the three properties of being symmetric, reflexive, and transitive. For each of the three we will use item 2 of Lemma 1.9 and show that the map preserves structure by showing that it preserves linear combinations of two members of the domain.

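To check reflexivity, that any space is isomorphic to itself, consider the identity map. It is clearly one-to-one and onto. The calculation showing that it preserves linear combinations is easy.

$$\mathrm{id}(c_1\vec v_1+c_2\vec v_2)=c_1\vec v_1+c_2\vec v_2=c_1\,\mathrm{id}(\vec v_1)+c_2\,\mathrm{id}(\vec v_2)$$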

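To check symmetry, that if $V$ is isomorphic to $W$ via some map $f\colon V\to W$ then there is an isomorphism going the other way, consider the inverse map $f^{-1}\colon W\to V$. As stated in the appendix, such an inverse function exists and it is also a correspondence. Thus we have reduced the symmetry issue to checking that, because $f$ preserves linear combinations, so also does $f^{-1}$. Assume that $\vec w_1=f(\vec v_1)$ and $\vec w_2=f(\vec v_2)$, i.e., that $f^{-1}(\vec w_1)=\vec v_1$ and $f^{-1}(\vec w_2)=\vec v_2$.

$$\begin{aligned}
f^{-1}(c_1\vec w_1+c_2\vec w_2) &= f^{-1}\bigl(c_1f(\vec v_1)+c_2f(\vec v_2)\bigr)\\
&= f^{-1}\bigl(f(c_1\vec v_1+c_2\vec v_2)\bigr)\\
&= c_1\vec v_1+c_2\vec v_2\\
&= c_1f^{-1}(\vec w_1)+c_2f^{-1}(\vec w_2)
\end{aligned}$$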

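Finally, we must check transitivity, that if $V$ is isomorphic to $W$ via some map $f$ and if $W$ is isomorphic to $U$ via some map $g$ then also $V$ is isomorphic to $U$. Consider the composition $g\circ f\colon V\to U$. The appendix notes that the composition of two correspondences is a correspondence, so we need only check that the composition preserves linear combinations.

$$\begin{aligned}
g\circ f\,(c_1\vec v_1+c_2\vec v_2) &= g\bigl(f(c_1\vec v_1+c_2\vec v_2)\bigr)\\
&= g\bigl(c_1f(\vec v_1)+c_2f(\vec v_2)\bigr)\\
&= c_1\,g(f(\vec v_1))+c_2\,g(f(\vec v_2))\\
&= c_1\,(g\circ f)(\vec v_1)+c_2\,(g\circ f)(\vec v_2)
\end{aligned}$$

Thus $g\circ f\colon V\to U$ is an isomorphism.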

As a consequence of that result, we know that the universe of vector spaces is partitioned into classes: every space is in one and only one isomorphism class.


- Theorem 2.2

Vector spaces are isomorphic if and only if they have the same dimension.

This follows from the next two lemmas.

- Lemma 2.3

If spaces are isomorphic then they have the same dimension.

- Proof

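We shall show that an isomorphism of two spaces gives a correspondence between their bases. That is, where $f\colon V\to W$ is an isomorphism and a basis for the domain $V$ is $B=\langle\vec\beta_1,\dots,\vec\beta_n\rangle$, then the image set $D=\langle f(\vec\beta_1),\dots,f(\vec\beta_n)\rangle$ is a basis for the codomain $W$. (The other half of the correspondence, that for any basis of $W$ the inverse image is a basis for $V$, follows on recalling that if $f$ is an isomorphism then $f^{-1}$ is also an isomorphism, and applying the prior sentence to $f^{-1}$.)

To see that $D$ spans $W$, fix any $\vec w\in W$, note that $f$ is onto and so there is a $\vec v\in V$ with $\vec w=f(\vec v)$, and expand $\vec v$ as a combination of basis vectors.

$$\vec w=f(\vec v)=f(v_1\vec\beta_1+\dots+v_n\vec\beta_n)=v_1\,f(\vec\beta_1)+\dots+v_n\,f(\vec\beta_n)$$

For linear independence of $D$, if

$$\vec 0_W=c_1f(\vec\beta_1)+\dots+c_nf(\vec\beta_n)=f(c_1\vec\beta_1+\dots+c_n\vec\beta_n)$$

then, since $f$ is one-to-one and so the only vector sent to $\vec 0_W$ is $\vec 0_V$, we have that $\vec 0_V=c_1\vec\beta_1+\dots+c_n\vec\beta_n$, implying that all of the $c$'s are zero.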

- Lemma 2.4

If spaces have the same dimension then they are isomorphic.

- Proof

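To show that any two spaces of dimension $n$ are isomorphic, we can simply show that any one is isomorphic to $\mathbb{R}^n$. Then we will have shown that they are isomorphic to each other, by the transitivity of isomorphism (which was established in Theorem 2.1).

Let $V$ be $n$-dimensional. Fix a basis $B=\langle\vec\beta_1,\dots,\vec\beta_n\rangle$ for the domain $V$. Consider the representation of the members of that domain with respect to the basis as a function from $V$ to $\mathbb{R}^n$

$$\vec v=v_1\vec\beta_1+\dots+v_n\vec\beta_n\;\stackrel{\mathrm{Rep}_B}{\longmapsto}\;\begin{pmatrix}v_1\\ \vdots\\ v_n\end{pmatrix}$$

(it is well-defined[3] since every $\vec v$ has one and only one such representation; see Remark 2.5 below).

This function is one-to-one because if

$$\mathrm{Rep}_B(u_1\vec\beta_1+\dots+u_n\vec\beta_n)=\mathrm{Rep}_B(v_1\vec\beta_1+\dots+v_n\vec\beta_n)$$

then

$$\begin{pmatrix}u_1\\ \vdots\\ u_n\end{pmatrix}=\begin{pmatrix}v_1\\ \vdots\\ v_n\end{pmatrix}$$

and so $u_1=v_1$, ..., $u_n=v_n$, and therefore the original arguments $u_1\vec\beta_1+\dots+u_n\vec\beta_n$ and $v_1\vec\beta_1+\dots+v_n\vec\beta_n$ are equal.

This function is onto; any $n$-tall vector

$$\vec w=\begin{pmatrix}w_1\\ \vdots\\ w_n\end{pmatrix}$$

is the image of some $\vec v\in V$, namely $\vec w=\mathrm{Rep}_B(w_1\vec\beta_1+\dots+w_n\vec\beta_n)$.

Finally, this function preserves structure. Where $\vec u=u_1\vec\beta_1+\dots+u_n\vec\beta_n$ and $\vec v=v_1\vec\beta_1+\dots+v_n\vec\beta_n$,

$$\begin{aligned}
\mathrm{Rep}_B(r\vec u+s\vec v) &= \mathrm{Rep}_B\bigl((ru_1+sv_1)\vec\beta_1+\dots+(ru_n+sv_n)\vec\beta_n\bigr)\\
&= \begin{pmatrix}ru_1+sv_1\\ \vdots\\ ru_n+sv_n\end{pmatrix}
 = r\cdot\begin{pmatrix}u_1\\ \vdots\\ u_n\end{pmatrix}+s\cdot\begin{pmatrix}v_1\\ \vdots\\ v_n\end{pmatrix}\\
&= r\cdot\mathrm{Rep}_B(\vec u)+s\cdot\mathrm{Rep}_B(\vec v)
\end{aligned}$$

Thus the $\mathrm{Rep}_B$ function is an isomorphism and thus any $n$-dimensional space is isomorphic to the $n$-dimensional space $\mathbb{R}^n$. Consequently, any two spaces with the same dimension are isomorphic.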
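To make $\mathrm{Rep}_B$ concrete, here is a minimal sketch for the special case $V=\mathbb{R}^n$ with a nonstandard basis (an assumption; the lemma covers any $n$-dimensional $V$). The hypothetical function `rep` solves $c_1\vec\beta_1+\dots+c_n\vec\beta_n=\vec v$ by Gauss-Jordan elimination over Python's `fractions.Fraction`, so the coordinates are exact, and it raises an error when the given vectors are not a basis.

```python
from fractions import Fraction

def rep(basis, v):
    """Coordinates of v with respect to basis = [b1, ..., bn], each a vector in R^n.

    Solves c1*b1 + ... + cn*bn = v by Gauss-Jordan elimination with exact
    rational arithmetic. Raises ValueError if the vectors are not a basis.
    """
    n = len(basis)
    # Row i of the augmented matrix holds the i-th components of b1..bn, then of v.
    m = [[Fraction(basis[j][i]) for j in range(n)] + [Fraction(v[i])] for i in range(n)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            raise ValueError("the given vectors are not a basis")
        m[col], m[pivot] = m[pivot], m[col]
        lead = m[col][col]
        m[col] = [x / lead for x in m[col]]
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[col])]
    return tuple(m[r][n] for r in range(n))

B = [(1, 1), (1, -1)]
u, w = (3, 1), (0, 2)
# Rep_B preserves the linear combination 2*u + 3*w.
combo = tuple(2 * a + 3 * b for a, b in zip(u, w))
assert rep(B, combo) == tuple(2 * a + 3 * b for a, b in zip(rep(B, u), rep(B, w)))
```

The uniqueness of the solution, which is what makes `rep` a function, is exactly the "one and only one representation" property discussed in Remark 2.5.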

- Remark 2.5

The parenthetical comment in that proof about the role played by the "one and only one representation" result requires some explanation. We need to show that (for a fixed $B$) each vector in the domain is associated by $\mathrm{Rep}_B$ with one and only one vector in the codomain.

A contrasting example, where an association doesn't have this property, is illuminating. Consider a four-member subset $A$ of a space of polynomials that spans the space but is not a basis.

Call those four polynomials $\vec\alpha_1$, ..., $\vec\alpha_4$. If, mimicking the above proof, we try to write the members of the space as $\vec p=c_1\vec\alpha_1+c_2\vec\alpha_2+c_3\vec\alpha_3+c_4\vec\alpha_4$, and associate $\vec p$ with the four-tall vector with components $c_1$, ..., $c_4$, then there is a problem. For, consider a polynomial $\vec p$ in the space. The set $A$ spans the space, so there is at least one four-tall vector associated with $\vec p$. But $A$ is not linearly independent and so vectors do not have unique decompositions: $\vec p$ can be written as a combination of $\vec\alpha_1$, ..., $\vec\alpha_4$ in more than one way, and so there is more than one four-tall vector associated with $\vec p$.

That is, with input $\vec p$ this association does not have a well-defined (i.e., single) output value.

Any map whose definition appears possibly ambiguous must be checked to see that it is well-defined. For $\mathrm{Rep}_B$ in the above proof, that check is Problem 11.
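A concrete instance of the failure described in Remark 2.5 can be checked directly. The four polynomials below are a hypothetical choice, not taken from the text: $1$, $x$, $x^2$, and $1+x+2x^2$, which span $\mathcal{P}_2$ but are linearly dependent. Polynomials are stored as coefficient tuples `(a0, a1, a2)`, and `combine` is a hypothetical helper name.

```python
def combine(coeffs, polys):
    """Return c1*p1 + ... + ck*pk, where each p is a coefficient tuple (a0, a1, a2)."""
    return tuple(sum(c * p[k] for c, p in zip(coeffs, polys)) for k in range(3))

# Hypothetical four-member spanning set of P_2 that is not linearly independent:
# 1, x, x^2, and 1 + x + 2x^2.
A = [(1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 2)]

target = (1, 1, 1)                     # the polynomial 1 + x + x^2
first, second = (1, 1, 1, 0), (0, 0, -1, 1)

# Two different four-tall vectors decompose the same polynomial,
# so "associate p with its coefficients" has no single output.
assert combine(first, A) == target
assert combine(second, A) == target
assert first != second
```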

That ends the proof of Theorem 2.2. We say that the isomorphism classes are characterized by dimension because we can describe each class simply by giving the number that is the dimension of all of the spaces in that class.

This subsection's results give us a collection of representatives of the isomorphism classes.[4]

- Corollary 2.6

A finite-dimensional vector space is isomorphic to one and only one of the $\mathbb{R}^n$.

The proofs above pack many ideas into a small space. Through the rest of this chapter we'll consider these ideas again, and fill them out. For a taste of this, we will expand here on the proof of Lemma 2.4.

- Example 2.7

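The space $\mathcal{M}_{2\times 2}$ of $2\times 2$ matrices is isomorphic to $\mathbb{R}^4$. With this basis for the domain

$$B=\left\langle\begin{pmatrix}1&0\\0&0\end{pmatrix},\begin{pmatrix}0&1\\0&0\end{pmatrix},\begin{pmatrix}0&0\\1&0\end{pmatrix},\begin{pmatrix}0&0\\0&1\end{pmatrix}\right\rangle$$

the isomorphism given in the lemma, the representation map $f_1=\mathrm{Rep}_B$, simply carries the entries over.

$$\begin{pmatrix}a&b\\c&d\end{pmatrix}\stackrel{f_1}{\longmapsto}\begin{pmatrix}a\\b\\c\\d\end{pmatrix}$$

One way to think of the map $f_1$ is: fix the basis $B$ for the domain and the standard basis $\mathcal{E}_4$ for the codomain, and associate $\vec\beta_1$ with $\vec e_1$, and $\vec\beta_2$ with $\vec e_2$, etc. Then extend this association to all of the members of the two spaces.

$$\begin{pmatrix}a&b\\c&d\end{pmatrix}=a\vec\beta_1+b\vec\beta_2+c\vec\beta_3+d\vec\beta_4\;\stackrel{f_1}{\longmapsto}\;a\vec e_1+b\vec e_2+c\vec e_3+d\vec e_4=\begin{pmatrix}a\\b\\c\\d\end{pmatrix}$$

We say that the map has been extended linearly from the bases to the spaces.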

We can do the same thing with different bases, for instance, taking this basis for the domain.

Associating corresponding members of and and extending linearly

gives rise to an isomorphism that is different than .

The prior map arose by changing the basis for the domain. We can also change the basis for the codomain. Starting with

associating with , etc., and then linearly extending that correspondence to all of the two spaces

gives still another isomorphism.

So there is a connection between the maps between spaces and bases for those spaces. Later sections will explore that connection.

We will close this section with a summary.

Recall that in the first chapter we defined two matrices as row equivalent if they can be derived from each other by elementary row operations (this was the meaning of same-ness that was of interest there). We showed that row equivalence is an equivalence relation and so the collection of matrices is partitioned into classes, where all the matrices that are row equivalent fall together into a single class. Then, for insight into which matrices are in each class, we gave representatives for the classes, the reduced echelon form matrices.

In this section, except that the appropriate notion of same-ness here is vector space isomorphism, we have followed much the same outline. First we defined isomorphism, saw some examples, and established some properties. Then we showed that it is an equivalence relation, and now we have a set of class representatives, the real vector spaces $\mathbb{R}^0$, $\mathbb{R}^1$, etc.


As before, the list of representatives helps us to understand the partition. It is simply a classification of spaces by dimension.

In the second chapter, with the definition of vector spaces, we seemed to have opened up our studies to many examples of new structures besides the familiar $\mathbb{R}^n$'s. We now know that isn't the case. Any finite-dimensional vector space is actually "the same" as a real space. We are thus considering exactly the structures that we need to consider.

The rest of the chapter fills out the work in this section. In particular, in the next section we will consider maps that preserve structure, but are not necessarily correspondences.

Exercises

- This exercise is recommended for all readers.

- Problem 1

Decide if the spaces are isomorphic.

- ,

- ,

- ,

- ,

- ,

- Answer

Each pair of spaces is isomorphic if and only if the two have the same dimension. We can, when there is an isomorphism, state a map, but it isn't strictly necessary.

- No, they have different dimensions.

- No, they have different dimensions.

- Yes, they have the same dimension.

One isomorphism is this.

- Yes, they have the same dimension. This is an isomorphism.

- Yes, both have dimension .

- This exercise is recommended for all readers.

- Problem 2

Consider the isomorphism where . Find the image of each of these elements of the domain.

- ;

- ;

- Answer

- This exercise is recommended for all readers.

- Problem 3

Show that if $m\neq n$ then $\mathbb{R}^m\not\cong\mathbb{R}^n$.

- Answer

They have different dimensions.

- This exercise is recommended for all readers.

- Problem 4

Is ?

- Answer

Yes, both are -dimensional.

- This exercise is recommended for all readers.

- Problem 5

Are any two planes through the origin in $\mathbb{R}^3$ isomorphic?

- Answer

Yes, any two (nondegenerate) planes are both two-dimensional vector spaces.

- Problem 6

Find a set of equivalence class representatives other than the set of $\mathbb{R}^n$'s.

- Answer

There are many answers; one is the set of $\mathcal{P}_k$ (taking $\mathcal{P}_{-1}$ to be the trivial vector space).

- Problem 7

True or false: between any $n$-dimensional space and $\mathbb{R}^n$ there is exactly one isomorphism.

- Answer

False (except when $n=0$). For instance, if $f\colon V\to\mathbb{R}^n$ is an isomorphism then multiplying by any nonzero scalar gives another, different, isomorphism. (Between trivial spaces the isomorphisms are unique; the only map possible is $\vec 0_V\mapsto\vec 0_W$.)

- Problem 8

Can a vector space be isomorphic to one of its (proper) subspaces?

- Answer

No. A proper subspace has a strictly lower dimension than its superspace; if $W$ is a proper subspace of $V$ then any linearly independent subset of $W$ must have fewer than $\dim(V)$ members or else that set would be a basis for $V$, and $W$ wouldn't be proper.

- This exercise is recommended for all readers.

- Problem 9

This subsection shows that for any isomorphism, the inverse map is also an isomorphism. This subsection also shows that for a fixed basis $B$ of an $n$-dimensional vector space $V$, the map $\mathrm{Rep}_B\colon V\to\mathbb{R}^n$ is an isomorphism. Find the inverse of this map.

- Answer

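Where $B=\langle\vec\beta_1,\dots,\vec\beta_n\rangle$, the inverse is this.

$$\begin{pmatrix}c_1\\ \vdots\\ c_n\end{pmatrix}\longmapsto c_1\vec\beta_1+\dots+c_n\vec\beta_n$$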

- This exercise is recommended for all readers.

- Problem 10

Prove these facts about matrices.

- The row space of a matrix is isomorphic to the column space of its transpose.

- The row space of a matrix is isomorphic to its column space.

- Answer

All three spaces have dimension equal to the rank of the matrix.

- Problem 11

Show that the function from Theorem 2.2 is well-defined.

- Answer

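We must show that if $\vec a=\vec b$ then $f(\vec a)=f(\vec b)$. So suppose that $a_1\vec\beta_1+\dots+a_n\vec\beta_n=b_1\vec\beta_1+\dots+b_n\vec\beta_n$. Each vector in a vector space (here, the domain space) has a unique representation as a linear combination of basis vectors, so we can conclude that $a_1=b_1$, ..., $a_n=b_n$. Thus,

$$f(a_1\vec\beta_1+\dots+a_n\vec\beta_n)=\begin{pmatrix}a_1\\ \vdots\\ a_n\end{pmatrix}=\begin{pmatrix}b_1\\ \vdots\\ b_n\end{pmatrix}=f(b_1\vec\beta_1+\dots+b_n\vec\beta_n)$$

and so the function is well-defined.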

- Problem 12

Is the proof of Theorem 2.2 valid when $n=0$?

- Answer

Yes, because a zero-dimensional space is a trivial space.

- Problem 13

For each, decide if it is a set of isomorphism class representatives.

- Answer

- No, this collection has no spaces of odd dimension.

- Yes, because .

- No, for instance, .

- Problem 14

Let $f$ be a correspondence between vector spaces $V$ and $W$ (that is, a map that is one-to-one and onto). Show that the spaces $V$ and $W$ are isomorphic via $f$ if and only if there are bases $B\subset V$ and $D\subset W$ such that corresponding vectors have the same coordinates: $\mathrm{Rep}_B(\vec v)=\mathrm{Rep}_D(f(\vec v))$.

- Answer

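One direction is easy: if the two are isomorphic via $f$ then for any basis $B\subseteq V$, the set $D=f(B)$ is also a basis (this is shown in Lemma 2.3). The check that corresponding vectors have the same coordinates, $f(c_1\vec\beta_1+\dots+c_n\vec\beta_n)=c_1f(\vec\beta_1)+\dots+c_nf(\vec\beta_n)=c_1\vec\delta_1+\dots+c_n\vec\delta_n$ where $\vec\delta_i=f(\vec\beta_i)$, is routine.

For the other half, assume that there are bases such that corresponding vectors have the same coordinates with respect to those bases. Because $f$ is a correspondence, to show that it is an isomorphism, we need only show that it preserves structure. Because $\mathrm{Rep}_B(\vec v)=\mathrm{Rep}_D(f(\vec v))$, the map $f$ preserves structure if and only if representations preserve addition: $\mathrm{Rep}_B(\vec v_1+\vec v_2)=\mathrm{Rep}_B(\vec v_1)+\mathrm{Rep}_B(\vec v_2)$ and scalar multiplication: $\mathrm{Rep}_B(r\cdot\vec v)=r\cdot\mathrm{Rep}_B(\vec v)$. The addition calculation is this: $(c_1+d_1)\vec\beta_1+\dots+(c_n+d_n)\vec\beta_n=c_1\vec\beta_1+\dots+c_n\vec\beta_n+d_1\vec\beta_1+\dots+d_n\vec\beta_n$, and the scalar multiplication calculation is similar.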

- Problem 15

Consider the isomorphism .

- Vectors in a real space are orthogonal if and only if their dot product is zero. Give a definition of orthogonality for polynomials.

- The derivative of a member of is in . Give a definition of the derivative of a vector in .

- Answer

- Pulling the definition back from to gives that is orthogonal to if and only if .

- A natural definition is this.

- This exercise is recommended for all readers.

- Problem 16

Does every correspondence between bases, when extended to the spaces, give an isomorphism?

- Answer

Yes.

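Assume that $V$ is a vector space with basis $B=\langle\vec\beta_1,\dots,\vec\beta_n\rangle$ and that $W$ is another vector space with a basis $D$ such that the map $f\colon B\to D$ is a correspondence. Consider the extension $\hat f\colon V\to W$ of $f$.

$$\hat f(c_1\vec\beta_1+\dots+c_n\vec\beta_n)=c_1f(\vec\beta_1)+\dots+c_nf(\vec\beta_n)$$

The map $\hat f$ is an isomorphism.

First, $\hat f$ is well-defined because every member of $V$ has one and only one representation as a linear combination of elements of $B$.

Second, $\hat f$ is one-to-one because every member of $W$ has only one representation as a linear combination of elements of $D$. That map $\hat f$ is onto because every member of $W$ has at least one representation as a linear combination of members of $D$.

Finally, preservation of structure is routine to check. For instance, here is the preservation of addition calculation.

$$\begin{aligned}
\hat f\bigl((c_1\vec\beta_1+\dots+c_n\vec\beta_n)+(d_1\vec\beta_1+\dots+d_n\vec\beta_n)\bigr) &= \hat f\bigl((c_1+d_1)\vec\beta_1+\dots+(c_n+d_n)\vec\beta_n\bigr)\\
&= (c_1+d_1)f(\vec\beta_1)+\dots+(c_n+d_n)f(\vec\beta_n)\\
&= c_1f(\vec\beta_1)+\dots+c_nf(\vec\beta_n)+d_1f(\vec\beta_1)+\dots+d_nf(\vec\beta_n)\\
&= \hat f(c_1\vec\beta_1+\dots+c_n\vec\beta_n)+\hat f(d_1\vec\beta_1+\dots+d_n\vec\beta_n)
\end{aligned}$$

Preservation of scalar multiplication is similar.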

- Problem 17

(Requires the subsection on Combining Subspaces, which is optional.) Suppose that $V=V_1\oplus V_2$ and that $V$ is isomorphic to the space $U$ under the map $f$. Show that $U=f(V_1)\oplus f(V_2)$.

- Answer

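Because $V_1\cap V_2=\{\vec 0_V\}$ and $f$ is one-to-one we have that $f(V_1)\cap f(V_2)=\{\vec 0_U\}$. To finish, count the dimensions: $\dim(U)=\dim(V)=\dim(V_1)+\dim(V_2)=\dim(f(V_1))+\dim(f(V_2))$, as required.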

- Problem 18

- Show that this is not a well-defined function from the rational numbers to the integers: with each fraction, associate the value of its numerator.

- Answer

Rational numbers have many representations, e.g., $1/3=3/9$, and the numerators can vary among representations.
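The failure of well-definedness in Problem 18 can be seen by treating a written fraction as a pair `(numerator, denominator)`. This is an illustrative sketch; `numerator_of` is a hypothetical name for the rule in the problem, and Python's standard `fractions.Fraction` is used only to confirm that two pairs name the same rational number.

```python
from fractions import Fraction

def numerator_of(pair):
    """The rule 'associate a fraction with its numerator', applied to a written form."""
    num, den = pair
    return num

one_third, three_ninths = (1, 3), (3, 9)

# Both written forms name the same rational number ...
assert Fraction(*one_third) == Fraction(*three_ninths)
# ... but the rule gives them different outputs, so it is not a well-defined function.
assert numerator_of(one_third) != numerator_of(three_ninths)
```

`Fraction` itself stores every rational in lowest terms, so `Fraction(3, 9).numerator` is `1`; fixing a canonical representative in this way is one standard route to a well-defined rule.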

Footnotes

- [1] More information on one-to-one and onto maps is in the appendix.

- [2] More information on equivalence relations is in the appendix.

- [3] More information on well-definedness is in the appendix.

- [4] More information on equivalence class representatives is in the appendix.

Section II - Homomorphisms

The definition of isomorphism has two conditions. In this section we will consider the second one, that the map must preserve the algebraic structure of the space. We will focus on this condition by studying maps that are required only to preserve structure; that is, maps that are not required to be correspondences.

Experience shows that this kind of map is tremendously useful in the study of vector spaces. For one thing, as we shall see in the second subsection below, while isomorphisms describe how spaces are the same, these maps describe how spaces can be thought of as alike.

1 - Definition

- Definition 1.1

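A function $h\colon V\to W$ between vector spaces that preserves the operations of addition

if $\vec v_1,\vec v_2\in V$ then $h(\vec v_1+\vec v_2)=h(\vec v_1)+h(\vec v_2)$

and scalar multiplication

if $\vec v\in V$ and $r\in\mathbb{R}$ then $h(r\cdot\vec v)=r\cdot h(\vec v)$

is a homomorphism or linear map.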

- Example 1.2

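The projection map $\pi\colon\mathbb{R}^3\to\mathbb{R}^2$

$$\begin{pmatrix}x\\y\\z\end{pmatrix}\stackrel{\pi}{\longmapsto}\begin{pmatrix}x\\y\end{pmatrix}$$

is a homomorphism.

It preserves addition

$$\pi\left(\begin{pmatrix}x_1\\y_1\\z_1\end{pmatrix}+\begin{pmatrix}x_2\\y_2\\z_2\end{pmatrix}\right)=\pi\left(\begin{pmatrix}x_1+x_2\\y_1+y_2\\z_1+z_2\end{pmatrix}\right)=\begin{pmatrix}x_1+x_2\\y_1+y_2\end{pmatrix}=\pi\left(\begin{pmatrix}x_1\\y_1\\z_1\end{pmatrix}\right)+\pi\left(\begin{pmatrix}x_2\\y_2\\z_2\end{pmatrix}\right)$$

and scalar multiplication.

$$\pi\left(r\cdot\begin{pmatrix}x_1\\y_1\\z_1\end{pmatrix}\right)=\pi\left(\begin{pmatrix}rx_1\\ry_1\\rz_1\end{pmatrix}\right)=\begin{pmatrix}rx_1\\ry_1\end{pmatrix}=r\cdot\pi\left(\begin{pmatrix}x_1\\y_1\\z_1\end{pmatrix}\right)$$

This map is not an isomorphism since it is not one-to-one. For instance, both $\vec 0$ and $\vec e_3$ in $\mathbb{R}^3$ are mapped to the zero vector in $\mathbb{R}^2$.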
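A short sketch of the projection of Example 1.2, assuming (as in the displayed formula) that it drops the third coordinate of a vector in $\mathbb{R}^3$; the name `proj` is hypothetical. It checks one two-term linear combination and exhibits two distinct vectors with the same image, which is why the map is a homomorphism but not an isomorphism.

```python
def proj(v):
    """Projection R^3 -> R^2 that drops the third coordinate."""
    x, y, z = v
    return (x, y)

u, w = (1, 2, 3), (-4, 0, 5)
r, s = 3, -2

# Structure: proj(r*u + s*w) == r*proj(u) + s*proj(w).
combo = tuple(r * a + s * b for a, b in zip(u, w))
assert proj(combo) == tuple(r * a + s * b for a, b in zip(proj(u), proj(w)))

# Not one-to-one: two different vectors share the image (0, 0).
assert proj((0, 0, 0)) == proj((0, 0, 1)) == (0, 0)
```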

- Example 1.3

Of course, the domain and codomain might be other than spaces of column vectors. Both of these are homomorphisms; the verifications are straightforward.

- given by

- given by

- Example 1.4

Between any two spaces there is a zero homomorphism, mapping every vector in the domain to the zero vector in the codomain.

- Example 1.5

These two suggest why we use the term "linear map".

- The map given by

- The first of these two maps is linear while the second is not.

What distinguishes the homomorphisms is that the coordinate functions are linear combinations of the arguments. See also Problem 7.

Obviously, any isomorphism is a homomorphism— an isomorphism is a homomorphism that is also a correspondence. So, one way to think of the "homomorphism" idea is that it is a generalization of "isomorphism", motivated by the observation that many of the properties of isomorphisms have only to do with the map's structure preservation property and not to do with it being a correspondence. As examples, these two results from the prior section do not use one-to-one-ness or onto-ness in their proof, and therefore apply to any homomorphism.

- Lemma 1.6

A homomorphism sends a zero vector to a zero vector.

- Lemma 1.7

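Each of these is a necessary and sufficient condition for $f\colon V\to W$ to be a homomorphism.

- $f(c_1\cdot\vec v_1+c_2\cdot\vec v_2)=c_1\cdot f(\vec v_1)+c_2\cdot f(\vec v_2)$ for any $c_1,c_2\in\mathbb{R}$ and $\vec v_1,\vec v_2\in V$

- $f(c_1\cdot\vec v_1+\dots+c_n\cdot\vec v_n)=c_1\cdot f(\vec v_1)+\dots+c_n\cdot f(\vec v_n)$ for any $c_1,\dots,c_n\in\mathbb{R}$ and $\vec v_1,\dots,\vec v_n\in V$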

Part 1 is often used to check that a function is linear.

- Example 1.8

The map given by

satisfies 1 of the prior result

and so it is a homomorphism.

However, some of the results that we have seen for isomorphisms fail to hold for homomorphisms in general. Consider the theorem that an isomorphism between spaces gives a correspondence between their bases. Homomorphisms do not give any such correspondence; Example 1.2 shows that there is no such correspondence, and another example is the zero map between any two nontrivial spaces. Instead, for homomorphisms a weaker but still very useful result holds.

- Theorem 1.9

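A homomorphism is determined by its action on a basis. That is, if $\langle\vec\beta_1,\dots,\vec\beta_n\rangle$ is a basis of a vector space $V$ and $\vec w_1,\dots,\vec w_n$ are (perhaps not distinct) elements of a vector space $W$ then there exists a homomorphism from $V$ to $W$ sending $\vec\beta_1$ to $\vec w_1$, ..., and $\vec\beta_n$ to $\vec w_n$, and that homomorphism is unique.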

- Proof

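We will define the map $h\colon V\to W$ by associating $\vec\beta_1$ with $\vec w_1$, etc., and then extending linearly to all of the domain. That is, where $\vec v=c_1\vec\beta_1+\dots+c_n\vec\beta_n$, the map is given by $h(\vec v)=c_1\vec w_1+\dots+c_n\vec w_n$. This is well-defined because, with respect to the basis, the representation of each domain vector $\vec v$ is unique.

This map is a homomorphism since it preserves linear combinations; where $\vec v_1=c_1\vec\beta_1+\dots+c_n\vec\beta_n$ and $\vec v_2=d_1\vec\beta_1+\dots+d_n\vec\beta_n$, we have this.

$$\begin{aligned}h(r_1\vec v_1+r_2\vec v_2)&=h\big((r_1c_1+r_2d_1)\vec\beta_1+\dots+(r_1c_n+r_2d_n)\vec\beta_n\big)\\&=(r_1c_1+r_2d_1)\vec w_1+\dots+(r_1c_n+r_2d_n)\vec w_n\\&=r_1h(\vec v_1)+r_2h(\vec v_2)\end{aligned}$$

And, this map is unique since if $\hat h\colon V\to W$ is another homomorphism such that $\hat h(\vec\beta_i)=\vec w_i$ for each $i$ then $h$ and $\hat h$ agree on all of the vectors in the domain.

$$\begin{aligned}\hat h(\vec v)&=\hat h(c_1\vec\beta_1+\dots+c_n\vec\beta_n)\\&=c_1\hat h(\vec\beta_1)+\dots+c_n\hat h(\vec\beta_n)\\&=c_1\vec w_1+\dots+c_n\vec w_n\\&=h(\vec v)\end{aligned}$$

Thus, $h$ and $\hat h$ are the same map.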

- Example 1.10

This result says that we can construct a homomorphism by fixing a basis for the domain and specifying where the map sends those basis vectors. For instance, if we specify a map that acts on the standard basis in this way

then the action of on any other member of the domain is also specified. For instance, the value of on this argument

is a direct consequence of the value of on the basis vectors.
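The construction in Theorem 1.9, "extend linearly", fits in a few lines of Python. Given the images of the standard basis vectors of $\mathbb{R}^n$, the value on any $\vec v$ with coordinates $c_1,\dots,c_n$ is $c_1h(\vec e_1)+\dots+c_nh(\vec e_n)$. This is a sketch under two assumptions of ours: the basis images below are hypothetical, chosen only for illustration, and the helper name `extend_linearly` is our own. Using the standard basis keeps it short because the coordinates of $\vec v$ are just its entries; for another basis one would first solve for the $c_i$.

```python
def extend_linearly(basis_images):
    """Return the unique linear map sending the i-th standard basis
    vector of R^n to basis_images[i] (each a tuple in R^m)."""
    m = len(basis_images[0])

    def h(v):
        # With the standard basis, the coordinates c_i of v are its entries.
        result = [0] * m
        for c, w in zip(v, basis_images):
            for j in range(m):
                result[j] += c * w[j]
        return tuple(result)

    return h

# Hypothetical specification: e1 -> (2, 1), e2 -> (-1, 3)
h = extend_linearly([(2, 1), (-1, 3)])
print(h((1, 0)))   # (2, 1): agrees with the specification on e1
print(h((3, -2)))  # 3*(2, 1) - 2*(-1, 3) = (8, -3)
```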

Later in this chapter we shall develop a scheme, using matrices, that is convenient for computations like this one.

Just as the isomorphisms of a space with itself are useful and interesting, so too are the homomorphisms of a space with itself.

- Definition 1.11

A linear map from a space into itself is a linear transformation.

- Remark 1.12

In this book we use "linear transformation" only in the case where the codomain equals the domain, but it is widely used in other texts as a general synonym for "homomorphism".

- Example 1.13

The map on $\mathbb{R}^2$ that projects all vectors down to the $x$-axis

is a linear transformation.

- Example 1.14

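The derivative map $d/dx\colon\mathcal{P}_n\to\mathcal{P}_n$

$$a_0+a_1x+\dots+a_nx^n\mapsto a_1+2a_2x+3a_3x^2+\dots+na_nx^{n-1}$$

is a linear transformation, as this result from calculus notes: $d(c_1f+c_2g)/dx=c_1\,(df/dx)+c_2\,(dg/dx)$.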

- Example 1.15

- The matrix transpose map

is a linear transformation of $\mathcal{M}_{2\times 2}$. Note that this transformation is one-to-one and onto, and so in fact it is an automorphism.

We finish this subsection about maps by recalling that we can linearly combine maps. For instance, for these maps from to itself

the linear combination is also a map from to itself.

- Lemma 1.16

For vector spaces $V$ and $W$, the set of linear functions from $V$ to $W$ is itself a vector space, a subspace of the space of all functions from $V$ to $W$. It is denoted $\mathcal{L}(V,W)$.

- Proof

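This set is non-empty because it contains the zero homomorphism. So to show that it is a subspace we need only check that it is closed under linear combinations. Let $f,g\colon V\to W$ be linear. Then their sum is linear

$$\begin{aligned}(f+g)(c_1\vec v_1+c_2\vec v_2)&=c_1f(\vec v_1)+c_2f(\vec v_2)+c_1g(\vec v_1)+c_2g(\vec v_2)\\&=c_1(f+g)(\vec v_1)+c_2(f+g)(\vec v_2)\end{aligned}$$

and any scalar multiple is also linear.

$$\begin{aligned}(r\cdot f)(c_1\vec v_1+c_2\vec v_2)&=r\big(c_1f(\vec v_1)+c_2f(\vec v_2)\big)\\&=c_1(r\cdot f)(\vec v_1)+c_2(r\cdot f)(\vec v_2)\end{aligned}$$

Hence $\mathcal{L}(V,W)$ is a subspace.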

We started this section by isolating the structure preservation property of isomorphisms. That is, we defined homomorphisms as a generalization of isomorphisms. Some of the properties that we studied for isomorphisms carried over unchanged, while others were adapted to this more general setting.

It would be a mistake, though, to view this new notion of homomorphism as derived from, or somehow secondary to, that of isomorphism. In the rest of this chapter we shall work mostly with homomorphisms, partly because any statement made about homomorphisms is automatically true about isomorphisms, but more because, while the isomorphism concept is perhaps more natural, experience shows that the homomorphism concept is actually more fruitful and more central to further progress.

Exercises

- This exercise is recommended for all readers.

- Problem 1

Decide if each is linear.

- Answer

- Yes. The verification is straightforward.

- Yes. The verification is easy.

- No. An example of an addition that is not respected is this.

- Yes. The verification is straightforward.

- This exercise is recommended for all readers.

- Problem 2

Decide if each map is linear.

- Answer

For each, we must either check that linear combinations are preserved, or give an example of a linear combination that is not.

- Yes. The check that it preserves combinations is routine.

- No. For instance, not preserved is multiplication by the scalar .

- Yes. This is the check that it preserves combinations of two members of the domain.

- No.

An example of a combination that is not preserved is this.

- This exercise is recommended for all readers.

- Problem 3

Show that these two maps are homomorphisms.

- given by maps to

- given by maps to

Are these maps inverse to each other?

- Answer

The check that each is a homomorphism is routine. Here is the check for the differentiation map.

(An alternate proof is to simply note that this is a property of differentiation that is familiar from calculus.)

These two maps are not inverses as this composition does not act as the identity map on this element of the domain.

- Problem 4

Is (perpendicular) projection from to the -plane a homomorphism? Projection to the -plane? To the -axis? The -axis? The -axis? Projection to the origin?

- Answer

Each of these projections is a homomorphism. Projection to the -plane and to the -plane are these maps.

Projection to the -axis, to the -axis, and to the -axis are these maps.

And projection to the origin is this map.

Verification that each is a homomorphism is straightforward. (The last one, of course, is the zero transformation on $\mathbb{R}^3$.)

- Problem 5

Show that, while the maps from Example 1.3 preserve linear operations, they are not isomorphisms.

- Answer

The first is not onto; for instance, there is no polynomial that is sent to the constant polynomial . The second is not one-to-one; both of these members of the domain

are mapped to the same member of the codomain, .

- Problem 6

Is an identity map a linear transformation?

- Answer

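Yes; in any space $\mathrm{id}(c\cdot\vec v+d\cdot\vec w)=c\cdot\vec v+d\cdot\vec w=c\cdot\mathrm{id}(\vec v)+d\cdot\mathrm{id}(\vec w)$.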

- This exercise is recommended for all readers.

- Problem 7

Stating that a function is "linear" is different from stating that its graph is a line.

- The function given by has a graph that is a line. Show that it is not a linear function.

- The function given by

- Answer

- This map does not preserve structure since , while .

- The check is routine.

- This exercise is recommended for all readers.

- Problem 8

Part of the definition of a linear function is that it respects addition. Does a linear function respect subtraction?

- Answer

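Yes. Where $h\colon V\to W$ is linear, $h(\vec u-\vec v)=h(\vec u+(-1)\cdot\vec v)=h(\vec u)+(-1)\cdot h(\vec v)=h(\vec u)-h(\vec v)$.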

- Problem 9

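Assume that $h$ is a linear transformation of $V$ and that $\langle\vec\beta_1,\dots,\vec\beta_n\rangle$ is a basis of $V$. Prove each statement.

- If $h(\vec\beta_i)=\vec 0$ for each basis vector then $h$ is the zero map.

- If $h(\vec\beta_i)=\vec\beta_i$ for each basis vector then $h$ is the identity map.

- If there is a scalar $r$ such that $h(\vec\beta_i)=r\cdot\vec\beta_i$ for each basis vector then $h(\vec v)=r\cdot\vec v$ for all vectors $\vec v$ in $V$.

- Answer

- Let $\vec v\in V$ be represented with respect to the basis as $\vec v=c_1\vec\beta_1+\dots+c_n\vec\beta_n$. Then $h(\vec v)=h(c_1\vec\beta_1+\dots+c_n\vec\beta_n)=c_1h(\vec\beta_1)+\dots+c_nh(\vec\beta_n)=c_1\cdot\vec 0+\dots+c_n\cdot\vec 0=\vec 0$.

- This argument is similar to the prior one. Let $\vec v\in V$ be represented with respect to the basis as $\vec v=c_1\vec\beta_1+\dots+c_n\vec\beta_n$. Then $h(c_1\vec\beta_1+\dots+c_n\vec\beta_n)=c_1h(\vec\beta_1)+\dots+c_nh(\vec\beta_n)=c_1\vec\beta_1+\dots+c_n\vec\beta_n=\vec v$.

- As above, only $c_1h(\vec\beta_1)+\dots+c_nh(\vec\beta_n)=c_1r\vec\beta_1+\dots+c_nr\vec\beta_n=r(c_1\vec\beta_1+\dots+c_n\vec\beta_n)=r\vec v$.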

- This exercise is recommended for all readers.

- Problem 10

Consider the vector space $\mathbb{R}^+$ where vector addition and scalar multiplication are not the ones inherited from $\mathbb{R}$ but rather are these: $a+b$ is the product of $a$ and $b$, and $r\cdot a$ is the $r$-th power of $a$. (This was shown to be a vector space in an earlier exercise.) Verify that the natural logarithm map $\ln\colon\mathbb{R}^+\to\mathbb{R}$ is a homomorphism between these two spaces. Is it an isomorphism?

- Answer

That it is a homomorphism follows from the familiar rules that the logarithm of a product is the sum of the logarithms, $\ln(ab)=\ln(a)+\ln(b)$, and that the logarithm of a power is the multiple of the logarithm, $\ln(a^r)=r\ln(a)$. This map is an isomorphism: it has an inverse, namely the exponential map, so it is a correspondence.
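A numerical spot-check of this answer, sketched in Python: in $\mathbb{R}^+$ the "addition" is multiplication and "scalar multiplication by $r$" is raising to the $r$-th power, so preservation of the operations becomes the two logarithm rules. The sample values and the helper names `vadd` and `smul` are our own choices; comparisons use `math.isclose` because floating-point logarithms are only approximate.

```python
import math

# The operations on the space R^+ from Problem 10.
def vadd(a, b):
    return a * b        # "a + b" in R^+ is the product

def smul(r, a):
    return a ** r       # "r . a" in R^+ is the r-th power

samples = [0.5, 1.0, 2.0, 7.25]
scalars = [-3.0, 0.0, 0.5, 2.0]

for a in samples:
    for b in samples:
        # ln preserves addition: ln(a*b) = ln a + ln b
        assert math.isclose(math.log(vadd(a, b)), math.log(a) + math.log(b), abs_tol=1e-12)
    for r in scalars:
        # ln preserves scalar multiplication: ln(a**r) = r * ln a
        assert math.isclose(math.log(smul(r, a)), r * math.log(a), abs_tol=1e-12)

# exp undoes ln on the samples, consistent with ln being a correspondence.
assert all(math.isclose(math.exp(math.log(a)), a) for a in samples)
print("ln respects the operations on all samples")
```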

- This exercise is recommended for all readers.

- Problem 11

Consider this transformation of .

Find the image under this map of this ellipse.

- Answer

Where and , the image set is

the unit circle in the -plane.

- This exercise is recommended for all readers.

- Problem 12

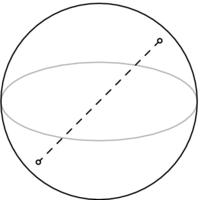

Imagine a rope wound around the earth's equator so that it fits snugly (suppose that the earth is a sphere). How much extra rope must be added to raise the circle to a constant six feet off the ground?

- Answer

The circumference function $r\mapsto 2\pi r$ is linear. Thus we have $2\pi\cdot(r_{\text{earth}}+6)-2\pi\cdot r_{\text{earth}}=12\pi$, about $37.7$ feet. Observe that it takes the same amount of extra rope to raise the circle from tightly wound around a basketball to six feet above that basketball as it does to raise it from tightly wound around the earth to six feet above the earth.
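The point of the answer is that the extra rope, $2\pi(r+6)-2\pi r=12\pi$ feet, does not depend on $r$. A short Python check, where the radii are rough illustrative assumptions of ours (about 20.9 million feet for the earth and 0.39 feet for a basketball):

```python
import math

def circumference(r):
    return 2 * math.pi * r   # linear in r

def extra_rope(r, lift=6.0):
    # Rope needed to raise a snug circle of radius r by `lift` feet.
    return circumference(r + lift) - circumference(r)

earth_radius_ft = 20.9e6      # rough assumption
basketball_radius_ft = 0.39   # rough assumption

print(extra_rope(earth_radius_ft))       # about 37.70, up to float rounding
print(extra_rope(basketball_radius_ft))  # about 37.70
print(12 * math.pi)                      # 37.699...
```

For the earth the subtraction cancels two numbers near $1.3\times 10^8$, so the printed value may differ from $12\pi$ in the last few digits.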

- This exercise is recommended for all readers.

- Problem 13

Verify that this map

is linear. Generalize.

- Answer

Verifying that it is linear is routine.

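The natural guess at a generalization is that for any fixed $\vec k\in\mathbb{R}^3$ the map $\vec v\mapsto\vec v\cdot\vec k$ is linear. This statement is true. It follows from properties of the dot product we have seen earlier: $(\vec v+\vec u)\cdot\vec k=\vec v\cdot\vec k+\vec u\cdot\vec k$ and $(r\vec v)\cdot\vec k=r(\vec v\cdot\vec k)$. (The natural guess at a generalization of this generalization, that the map from $\mathbb{R}^n$ to $\mathbb{R}$ whose action consists of taking the dot product of its argument with a fixed vector $\vec k\in\mathbb{R}^n$ is linear, is also true.)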
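The general claim can be illustrated in Python: for a fixed $\vec k$, the map $\vec v\mapsto\vec v\cdot\vec k$ preserves a linear combination exactly. The vector $\vec k$, the sample vectors, and the scalars are arbitrary choices of ours, and `dot_with` is a hypothetical helper name; `Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def dot_with(k):
    """The map v |-> v . k for a fixed vector k in R^n."""
    return lambda v: dot(v, k)

k = (Fraction(3), Fraction(-1), Fraction(2))   # arbitrary fixed vector
h = dot_with(k)

v1 = (Fraction(1), Fraction(2), Fraction(0))
v2 = (Fraction(-4), Fraction(1, 2), Fraction(5))
c1, c2 = Fraction(7), Fraction(-3, 4)

combo = tuple(c1 * a + c2 * b for a, b in zip(v1, v2))
assert h(combo) == c1 * h(v1) + c2 * h(v2)   # combination preserved
print(h(combo))                              # 71/8
```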

- Problem 14

Show that every homomorphism from $\mathbb{R}^1$ to $\mathbb{R}^1$ acts via multiplication by a scalar. Conclude that every nontrivial linear transformation of $\mathbb{R}^1$ is an isomorphism. Is that true for transformations of $\mathbb{R}^2$? $\mathbb{R}^n$?

- Answer

Let $h\colon\mathbb{R}^1\to\mathbb{R}^1$ be linear. A linear map is determined by its action on a basis, so fix the basis $\langle 1\rangle$ for $\mathbb{R}^1$. For any $r\in\mathbb{R}^1$ we have that $h(r)=h(r\cdot 1)=r\cdot h(1)$ and so $h$ acts on any argument $r$ by multiplying it by the constant $h(1)$. If $h(1)$ is not zero then the map is a correspondence (its inverse is division by $h(1)$), so any nontrivial transformation of $\mathbb{R}^1$ is an isomorphism.

This projection map is an example that shows that not every transformation of $\mathbb{R}^n$ acts via multiplication by a constant when $n>1$, including when $n=2$.

- Problem 15

- Show that for any scalars this map is a homomorphism.

- Show that for each , the -th derivative operator is a linear transformation of . Conclude that for any scalars this map is a linear transformation of that space.

- Answer

- Where and are scalars, we have this.

- Each power of the derivative operator is linear because of these rules familiar from calculus.

- Problem 16

Lemma 1.16 shows that a sum of linear functions is linear and that a scalar multiple of a linear function is linear. Show also that a composition of linear functions is linear.

- Answer

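(This argument has already appeared, as part of the proof that isomorphism is an equivalence.) Let $f\colon U\to V$ and $g\colon V\to W$ be linear. For any $\vec u_1,\vec u_2\in U$ and scalars $c_1,c_2$ combinations are preserved.

$$\begin{aligned}g\circ f(c_1\vec u_1+c_2\vec u_2)&=g\big(c_1f(\vec u_1)+c_2f(\vec u_2)\big)\\&=c_1g(f(\vec u_1))+c_2g(f(\vec u_2))\\&=c_1\,g\circ f(\vec u_1)+c_2\,g\circ f(\vec u_2)\end{aligned}$$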

- This exercise is recommended for all readers.

- Problem 17

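Where $h\colon V\to W$ is linear, suppose that $h(\vec v_1)=\vec w_1$, ..., $h(\vec v_k)=\vec w_k$ for some vectors $\vec w_1$, ..., $\vec w_k$ from $W$.

- If the set of $\vec w$'s is independent, must the set of $\vec v$'s also be independent?

- If the set of $\vec v$'s is independent, must the set of $\vec w$'s also be independent?

- If the set of $\vec w$'s spans $W$, must the set of $\vec v$'s span $V$?

- If the set of $\vec v$'s spans $V$, must the set of $\vec w$'s span $W$?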

- Answer

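- Yes. The set of $\vec w$'s cannot be linearly independent if the set of $\vec v$'s is linearly dependent because any nontrivial relationship in the domain $\vec 0_V=c_1\vec v_1+\dots+c_k\vec v_k$ would give a nontrivial relationship in the range $\vec 0_W=h(\vec 0_V)=c_1h(\vec v_1)+\dots+c_kh(\vec v_k)=c_1\vec w_1+\dots+c_k\vec w_k$.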

- Not necessarily. For instance, the transformation of given by

- Not necessarily. An example is the projection map

- Not necessarily. For instance, the injection map sends the standard basis for the domain to a set that does not span the codomain. (Remark. However, the set of $\vec w$'s does span the range. A proof is easy.)

- Problem 18

Generalize Example 1.15 by proving that the matrix transpose map is linear. What is the domain and codomain?

- Answer

Recall that the entry in row $i$ and column $j$ of the transpose of $M$ is the entry $m_{j,i}$ from row $j$ and column $i$ of $M$. Now, the check is routine.

The domain is $\mathcal{M}_{m\times n}$ while the codomain is $\mathcal{M}_{n\times m}$.
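To make the routine check concrete, here is a Python sketch of the transpose on $m\times n$ matrices stored as lists of rows: entry $(i,j)$ of the transpose is entry $(j,i)$ of the original. It tests that one linear combination is preserved and that a $2\times 3$ matrix goes to a $3\times 2$ one. The matrices, scalars, and helper names are arbitrary choices of ours; a single test illustrates the claim but does not prove it.

```python
def transpose(M):
    """Entry (i, j) of the result is entry (j, i) of M; m x n becomes n x m."""
    rows, cols = len(M), len(M[0])
    return [[M[j][i] for j in range(rows)] for i in range(cols)]

def combine(c1, A, c2, B):
    # Entrywise c1*A + c2*B for matrices of the same size.
    return [[c1 * a + c2 * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

A = [[1, 2, 3],
     [4, 5, 6]]          # 2 x 3
B = [[0, -1, 2],
     [7, 1, -3]]         # 2 x 3
c1, c2 = 2, -5

lhs = transpose(combine(c1, A, c2, B))
rhs = combine(c1, transpose(A), c2, transpose(B))
print(lhs == rhs)                                  # True
print(len(transpose(A)), len(transpose(A)[0]))     # 3 2
```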

- Problem 19

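- Where $\vec u,\vec v\in\mathbb{R}^n$, the line segment connecting them is defined to be the set $\ell=\{t\cdot\vec u+(1-t)\cdot\vec v\mid t\in[0,1]\}$. Show that the image, under a homomorphism $h$, of the segment between $\vec u$ and $\vec v$ is the segment between $h(\vec u)$ and $h(\vec v)$.

- A subset of $\mathbb{R}^n$ is convex if, for any two points in that set, the line segment joining them lies entirely in that set. (The inside of a sphere is convex while the skin of a sphere is not.) Prove that linear maps from $\mathbb{R}^n$ to $\mathbb{R}^m$ preserve the property of set convexity.

- Answer

- For any homomorphism $h\colon\mathbb{R}^n\to\mathbb{R}^m$ we have $h(\ell)=\{h(t\cdot\vec u+(1-t)\cdot\vec v)\mid t\in[0,1]\}=\{t\cdot h(\vec u)+(1-t)\cdot h(\vec v)\mid t\in[0,1]\}$, which is the segment between $h(\vec u)$ and $h(\vec v)$.

- We must show that if a subset of the domain is convex then its image, as a subset of the range, is also convex. Suppose that $C\subseteq\mathbb{R}^n$ is convex and consider its image $h(C)$. To show $h(C)$ is convex we must show that for any two of its members, $\vec d_1$ and $\vec d_2$, the line segment connecting them, $\{t\cdot\vec d_1+(1-t)\cdot\vec d_2\mid t\in[0,1]\}$, is a subset of $h(C)$. Fix any $t\in[0,1]$. Because $\vec d_1,\vec d_2\in h(C)$, there are $\vec c_1,\vec c_2\in C$ with $h(\vec c_1)=\vec d_1$ and $h(\vec c_2)=\vec d_2$. As $C$ is convex, $t\cdot\vec c_1+(1-t)\cdot\vec c_2\in C$, and $h(t\cdot\vec c_1+(1-t)\cdot\vec c_2)=t\cdot\vec d_1+(1-t)\cdot\vec d_2$. So every point of the segment lies in $h(C)$, and $h(C)$ is convex.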
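The first item can be spot-checked numerically: for a linear map $h$, the segment point $t\vec u+(1-t)\vec v$ lands on $t\,h(\vec u)+(1-t)\,h(\vec v)$, the matching point of the image segment. In this Python sketch the map is given by a hypothetical $2\times 3$ matrix from $\mathbb{R}^3$ to $\mathbb{R}^2$, and the endpoints and sampled values of $t$ are arbitrary choices of ours.

```python
from fractions import Fraction

# Hypothetical linear map R^3 -> R^2, given by the rows of a matrix.
MATRIX = [[1, 0, 2],
          [-1, 3, 1]]

def h(v):
    return tuple(sum(a * x for a, x in zip(row, v)) for row in MATRIX)

def segment_point(t, u, v):
    """The point t*u + (1 - t)*v, running from v (t = 0) to u (t = 1)."""
    return tuple(t * a + (1 - t) * b for a, b in zip(u, v))

u = (Fraction(1), Fraction(2), Fraction(0))
v = (Fraction(-3), Fraction(0), Fraction(4))

for k in range(11):
    t = Fraction(k, 10)
    # The image of a segment point is the matching point of the image segment.
    assert h(segment_point(t, u, v)) == segment_point(t, h(u), h(v))
print("all sampled segment points map onto the image segment")
```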

- This exercise is recommended for all readers.

- Problem 20

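Let $h\colon\mathbb{R}^n\to\mathbb{R}^m$ be a homomorphism.

- Show that the image under $h$ of a line in $\mathbb{R}^n$ is a (possibly degenerate) line in $\mathbb{R}^m$.

- What happens to a $k$-dimensional linear surface?

- Answer

- For $\vec v_0,\vec v_1\in\mathbb{R}^n$, the line through $\vec v_0$ with direction $\vec v_1$ is the set $\{\vec v_0+t\cdot\vec v_1\mid t\in\mathbb{R}\}$. The image under $h$ of that line, $\{h(\vec v_0+t\cdot\vec v_1)\mid t\in\mathbb{R}\}=\{h(\vec v_0)+t\cdot h(\vec v_1)\mid t\in\mathbb{R}\}$, is the line through $h(\vec v_0)$ with direction $h(\vec v_1)$. If $h(\vec v_1)$ is the zero vector then this line is degenerate.

- A $k$-dimensional linear surface in $\mathbb{R}^n$ maps to a (possibly degenerate) $k$-dimensional linear surface in $\mathbb{R}^m$. The proof is just like the one for the line.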

- Problem 21

Prove that the restriction of a homomorphism to a subspace of its domain is another homomorphism.

- Answer

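Suppose that $h\colon V\to W$ is a homomorphism and suppose that $S$ is a subspace of $V$. Consider the map $\hat h\colon S\to W$ defined by $\hat h(\vec s)=h(\vec s)$. (The only difference between $\hat h$ and $h$ is the difference in domain.) Then this new map is linear: $\hat h(c_1\vec s_1+c_2\vec s_2)=h(c_1\vec s_1+c_2\vec s_2)=c_1h(\vec s_1)+c_2h(\vec s_2)=c_1\hat h(\vec s_1)+c_2\hat h(\vec s_2)$.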

- Problem 22

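Assume that $h\colon V\to W$ is linear.

- Show that the rangespace of this map $\{h(\vec v)\mid\vec v\in V\}$ is a subspace of the codomain $W$.

- Show that the nullspace of this map $\{\vec v\in V\mid h(\vec v)=\vec 0_W\}$ is a subspace of the domain $V$.

- Show that if $U$ is a subspace of the domain $V$ then its image $\{h(\vec u)\mid\vec u\in U\}$ is a subspace of the codomain $W$. This generalizes the first item.

- Generalize the second item.

- Answer

This will appear as a lemma in the next subsection.

- The range is nonempty because $V$ is nonempty. To finish we need to show that it is closed under combinations. A combination of range vectors has the form, where $\vec v_1,\dots,\vec v_n\in V$, $c_1h(\vec v_1)+\dots+c_nh(\vec v_n)=h(c_1\vec v_1)+\dots+h(c_n\vec v_n)=h(c_1\vec v_1+\dots+c_n\vec v_n)$, which is itself in the range as $c_1\vec v_1+\dots+c_n\vec v_n$ is a member of the domain $V$. Therefore the range is a subspace.

- The nullspace is nonempty since it contains $\vec 0_V$, as $\vec 0_V$ maps to $\vec 0_W$. It is closed under linear combinations because, where $\vec v_1,\dots,\vec v_n\in V$ are elements of the inverse image set $\{\vec v\in V\mid h(\vec v)=\vec 0_W\}$, for $c_1,\dots,c_n\in\mathbb{R}$ we have $h(c_1\vec v_1+\dots+c_n\vec v_n)=c_1h(\vec v_1)+\dots+c_nh(\vec v_n)=\vec 0_W$, and so $c_1\vec v_1+\dots+c_n\vec v_n$ is also in the inverse image of $\vec 0_W$.

- This image is nonempty because $U$ is nonempty. For closure under combinations, where $\vec u_1,\dots,\vec u_n\in U$, $c_1h(\vec u_1)+\dots+c_nh(\vec u_n)=h(c_1\vec u_1+\dots+c_n\vec u_n)$, which is itself in $h(U)$ as $c_1\vec u_1+\dots+c_n\vec u_n$ is in $U$. Thus this set is a subspace.

- The natural generalization is that the inverse image of a subspace of $W$ is a subspace of $V$.

Suppose that $X$ is a subspace of $W$. Note that $h(\vec 0_V)=\vec 0_W\in X$ so the set $h^{-1}(X)=\{\vec v\in V\mid h(\vec v)\in X\}$ is not empty. To show that this set is closed under combinations, let $\vec v_1,\dots,\vec v_n$ be elements of $V$ such that $h(\vec v_1)=\vec x_1$, ..., $h(\vec v_n)=\vec x_n$ with each $\vec x_i\in X$, and note that $h(c_1\vec v_1+\dots+c_n\vec v_n)=c_1\vec x_1+\dots+c_n\vec x_n\in X$, so a linear combination of elements of $h^{-1}(X)$ is also in $h^{-1}(X)$.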

- Problem 23

Consider the set of isomorphisms from a vector space to itself. Is this a subspace of the space of homomorphisms from the space to itself?

- Answer

No; the set of isomorphisms does not contain the zero map (unless the space is trivial).

- Problem 24

Does Theorem 1.9 need that $\langle\vec\beta_1,\dots,\vec\beta_n\rangle$ is a basis? That is, can we still get a well-defined and unique homomorphism if we drop either the condition that the set of $\vec\beta$'s be linearly independent, or the condition that it span the domain?

- Answer

If $\langle\vec\beta_1,\dots,\vec\beta_n\rangle$ doesn't span the space then the map needn't be unique. For instance, if we try to define a map from $\mathbb{R}^2$ to itself by specifying only that $\vec e_1$ is sent to itself, then there is more than one homomorphism possible; both the identity map and the projection map onto the first component fit this condition.

If we drop the condition that $\langle\vec\beta_1,\dots,\vec\beta_n\rangle$ is linearly independent then we risk an inconsistent specification (i.e., there could be no such map). An example is if we consider , and try to define a map from to itself that sends to itself, and sends both and to . No homomorphism can satisfy these three conditions.

- Problem 25

Let $V$ be a vector space and assume that the maps $f_1,f_2\colon V\to\mathbb{R}^1$ are linear.

- Define a map whose component functions are the given linear ones.

- Does the converse hold: is any linear map from $V$ to $\mathbb{R}^2$ made up of two linear component maps to $\mathbb{R}^1$?

- Generalize.

- Answer

- Briefly, the check of linearity is this.

- Yes. Let and be the projections

- In general, a map from a vector space $V$ to an $\mathbb{R}^n$ is linear if and only if each of the component functions is linear. The verification is as in the prior item.

2 - Rangespace and Nullspace

Isomorphisms and homomorphisms both preserve structure. The difference is that homomorphisms needn't be onto and needn't be one-to-one. This means that homomorphisms are a more general kind of map, subject to fewer restrictions than isomorphisms. We will examine what can happen with homomorphisms that is prevented by the extra restrictions satisfied by isomorphisms.

We first consider the effect of dropping the onto requirement, of not requiring as part of the definition that a homomorphism be onto its codomain. For instance, the injection map $\iota\colon\mathbb{R}^2\to\mathbb{R}^3$

$$\begin{pmatrix}x\\y\end{pmatrix}\mapsto\begin{pmatrix}x\\y\\0\end{pmatrix}$$

is not an isomorphism because it is not onto. Of course, being a function, a homomorphism is onto some set, namely its range; the map $\iota$ is onto the $xy$-plane subset of $\mathbb{R}^3$.

- Lemma 2.1

Under a homomorphism, the image of any subspace of the domain is a subspace of the codomain. In particular, the image of the entire space, the range of the homomorphism, is a subspace of the codomain.

- Proof

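Let $h\colon V\to W$ be linear and let $S$ be a subspace of the domain $V$. The image $h(S)$ is a subset of the codomain $W$. It is nonempty because $S$ is nonempty and thus to show that $h(S)$ is a subspace of $W$ we need only show that it is closed under linear combinations of two vectors. If $h(\vec s_1)$ and $h(\vec s_2)$ are members of $h(S)$ then $c_1h(\vec s_1)+c_2h(\vec s_2)=h(c_1\vec s_1)+h(c_2\vec s_2)=h(c_1\vec s_1+c_2\vec s_2)$ is also a member of $h(S)$ because it is the image of $c_1\vec s_1+c_2\vec s_2$ from $S$.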

- Definition 2.2

The rangespace of a homomorphism $h\colon V\to W$ is

$$\mathscr{R}(h)=\{h(\vec v)\mid\vec v\in V\}$$

sometimes denoted $h(V)$. The dimension of the rangespace is the map's rank.

(We shall soon see the connection between the rank of a map and the rank of a matrix.)

- Example 2.3

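Recall that the derivative map $d/dx\colon\mathcal{P}_3\to\mathcal{P}_3$ given by $a_0+a_1x+a_2x^2+a_3x^3\mapsto a_1+2a_2x+3a_3x^2$ is linear. The rangespace $\mathscr{R}(d/dx)$ is the set of quadratic polynomials $\{r+sx+tx^2\mid r,s,t\in\mathbb{R}\}$. Thus, the rank of this map is three.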

- Example 2.4

With this homomorphism

an image vector in the range can have any constant term, must have an $x$ coefficient of zero, and must have the same coefficient of $x^2$ as of $x^3$. That is, the rangespace is $\mathscr{R}(h)=\{r+0x+sx^2+sx^3\mid r,s\in\mathbb{R}\}$ and so the rank is two.

The prior result shows that, in passing from the definition of isomorphism to the more general definition of homomorphism, omitting the "onto" requirement doesn't make an essential difference. Any homomorphism is onto its rangespace.

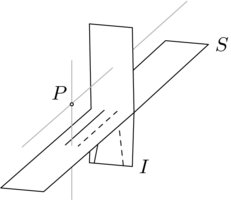

However, omitting the "one-to-one" condition does make a difference. A homomorphism may have many elements of the domain that map to one element of the codomain. The figure above is a "bean" sketch of a many-to-one map between sets.[1] It shows three elements of the codomain that are each the image of many members of the domain.

Recall that for any function $h\colon V\to W$, the set of elements of $V$ that are mapped to $\vec w\in W$ is the inverse image $h^{-1}(\vec w)=\{\vec v\in V\mid h(\vec v)=\vec w\}$. Above, the three sets of many elements on the left are inverse images.

- Example 2.5

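Consider the projection $\pi\colon\mathbb{R}^3\to\mathbb{R}^2$

$$\begin{pmatrix}x\\y\\z\end{pmatrix}\mapsto\begin{pmatrix}x\\y\end{pmatrix}$$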

which is a homomorphism that is many-to-one. In this instance, an inverse image set is a vertical line of vectors in the domain.

- Example 2.6

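This homomorphism

$$h\colon\mathbb{R}^2\to\mathbb{R}^1,\qquad\begin{pmatrix}x\\y\end{pmatrix}\mapsto x+y$$

is also many-to-one; for a fixed $w\in\mathbb{R}^1$, the inverse image

$$h^{-1}(w)=\left\{\begin{pmatrix}x\\y\end{pmatrix}\,\middle|\,x+y=w\right\}$$

is the set of plane vectors whose components add to $w$.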

The above examples have only to do with the fact that we are considering functions, specifically, many-to-one functions. They show the inverse images as sets of vectors that are related to the image vector $\vec w$. But these are more than just arbitrary functions, they are homomorphisms; what do the two preservation conditions say about the relationships?

In generalizing from isomorphisms to homomorphisms by dropping the one-to-one condition, we lose the property that we've stated intuitively as: the domain is "the same as" the range. That is, we lose that the domain corresponds perfectly to the range in a one-vector-by-one-vector way.

What we shall keep, as the examples below illustrate, is that a homomorphism describes a way in which the domain is "like", or "analogous to", the range.

- Example 2.7

We think of $\mathbb{R}^3$ as being like $\mathbb{R}^2$, except that vectors have an extra component. That is, we think of the vector with components $x$, $y$, and $z$ as like the vector with components $x$ and $y$. In defining the projection map $\pi$, we make precise which members of the domain we are thinking of as related to which members of the codomain.

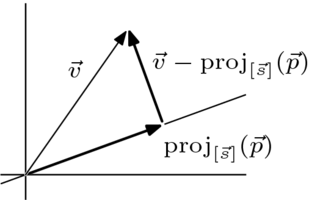

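Understanding in what way the preservation conditions in the definition of homomorphism show that the domain elements are like the codomain elements is easiest if we draw $\mathbb{R}^2$ as the $xy$-plane inside of $\mathbb{R}^3$. (Of course, $\mathbb{R}^2$ is a set of two-tall vectors while the $xy$-plane is a set of three-tall vectors with a third component of zero, but there is an obvious correspondence.) Then, $\pi(\vec v)$ is the "shadow" of $\vec v$ in the plane and the preservation of addition property says that

$$\begin{array}{ccccc}
\begin{pmatrix}x_1\\y_1\\z_1\end{pmatrix} & & \begin{pmatrix}x_2\\y_2\\z_2\end{pmatrix} & & \begin{pmatrix}x_1+x_2\\y_1+y_2\\z_1+z_2\end{pmatrix}\\
\text{above }\begin{pmatrix}x_1\\y_1\end{pmatrix} & \text{plus} & \text{above }\begin{pmatrix}x_2\\y_2\end{pmatrix} & \text{equals} & \text{above }\begin{pmatrix}x_1+x_2\\y_1+y_2\end{pmatrix}
\end{array}$$

Briefly, the shadow of a sum $\pi(\vec v_1+\vec v_2)$ equals the sum of the shadows $\pi(\vec v_1)+\pi(\vec v_2)$. (Preservation of scalar multiplication has a similar interpretation.)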

Redrawing by separating the two spaces, moving the codomain to the right, gives an uglier picture but one that is more faithful to the "bean" sketch.

Again in this drawing, the vectors that map to $\vec w$ lie in the domain in a vertical line (only one such vector is shown, in gray). Call any such member of this inverse image a "$\vec w$ vector". Similarly, there is a vertical line of "$\vec u$ vectors" and a vertical line of "$\vec w+\vec u$ vectors". Now, $\pi$ has the property that if $\pi(\vec v)=\vec w$ and $\pi(\hat v)=\vec u$ then $\pi(\vec v+\hat v)=\pi(\vec v)+\pi(\hat v)=\vec w+\vec u$. This says that the vector classes add, in the sense that any $\vec w$ vector plus any $\vec u$ vector equals a $\vec w+\vec u$ vector. (A similar statement holds about the classes under scalar multiplication.)

Thus, although the two spaces $\mathbb{R}^3$ and $\mathbb{R}^2$ are not isomorphic, $\pi$ describes a way in which they are alike: vectors in $\mathbb{R}^3$ add as do the associated vectors in $\mathbb{R}^2$; vectors add as their shadows add.

- Example 2.8

A homomorphism can be used to express an analogy between spaces that is more subtle than the prior one. For the map

$$h\colon\mathbb{R}^2\to\mathbb{R}^1,\qquad\begin{pmatrix}x\\y\end{pmatrix}\mapsto x+y$$

from Example 2.6 fix two numbers $w_1,w_2$ in the range $\mathbb{R}^1$. A $\vec v_1$ that maps to $w_1$ has components that add to $w_1$, that is, the inverse image $h^{-1}(w_1)$ is the set of vectors with endpoint on the diagonal line $x+y=w_1$. Call these the "$w_1$ vectors". Similarly, we have the "$w_2$ vectors" and the "$w_1+w_2$ vectors". Then the addition preservation property says that

a "$w_1$ vector" plus a "$w_2$ vector" equals a "$w_1+w_2$ vector".

Restated, if a $w_1$ vector is added to a $w_2$ vector then the result is mapped by $h$ to a $w_1+w_2$ vector. Briefly, the image of a sum is the sum of the images. Even more briefly, $h(\vec v_1+\vec v_2)=h(\vec v_1)+h(\vec v_2)$. (The preservation of scalar multiplication condition has a similar restatement.)
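The class behavior in this example is easy to spot-check in Python with the map $(x,y)\mapsto x+y$: take any vector whose components add to $w_1$ and any whose components add to $w_2$, and their sum has components adding to $w_1+w_2$. The random sampling, the seed, the values $w_1=2$ and $w_2=-5$, and the helper `random_preimage` are illustrative choices of ours.

```python
import random
from fractions import Fraction

def h(v):
    x, y = v
    return x + y          # the map (x, y) |-> x + y

def random_preimage(w, rng):
    """A random vector (x, y) with x + y = w, i.e. a 'w vector'."""
    x = Fraction(rng.randint(-100, 100), rng.randint(1, 9))
    return (x, w - x)

rng = random.Random(0)
w1, w2 = Fraction(2), Fraction(-5)
for _ in range(1000):
    a = random_preimage(w1, rng)      # a "w1 vector"
    b = random_preimage(w2, rng)      # a "w2 vector"
    s = (a[0] + b[0], a[1] + b[1])
    assert h(s) == w1 + w2            # s is a "w1 + w2 vector"
print("every sampled w1 vector plus w2 vector is a (w1 + w2) vector")
```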

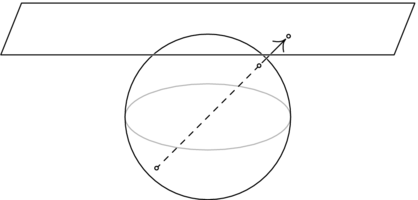

- Example 2.9

The inverse images can be structures other than lines. For the linear map

the inverse image sets are planes , , etc., perpendicular to the -axis.

We won't describe how every homomorphism that we will use is an analogy because the formal sense that we make of "alike in that ..." is "a homomorphism exists such that ...". Nonetheless, the idea that a homomorphism between two spaces expresses how the domain's vectors fall into classes that act like the range's vectors is a good way to view homomorphisms.

Another reason that we won't treat all of the homomorphisms that we see as above is that many vector spaces are hard to draw (e.g., a space of polynomials). However, there is nothing bad about gaining insights from those spaces that we are able to draw, especially when those insights extend to all vector spaces. We derive two such insights from the three Examples 2.7, 2.8, and 2.9.

First, in all three examples, the inverse images are lines or planes, that is, linear surfaces. In particular, the inverse image of the range's zero vector is a line or plane through the origin, which is a subspace of the domain.

- Lemma 2.10

For any homomorphism, the inverse image of a subspace of the range is a subspace of the domain. In particular, the inverse image of the trivial subspace of the range is a subspace of the domain.

- Proof

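Let $h\colon V\to W$ be a homomorphism and let $S$ be a subspace of the rangespace $h(V)$. Consider $h^{-1}(S)=\{\vec v\in V\mid h(\vec v)\in S\}$, the inverse image of the set $S$. It is nonempty because it contains $\vec 0_V$, since $h(\vec 0_V)=\vec 0_W$, which is an element of $S$, as $S$ is a subspace. To show that $h^{-1}(S)$ is closed under linear combinations, let $\vec v_1$ and $\vec v_2$ be elements, so that $h(\vec v_1)$ and $h(\vec v_2)$ are elements of $S$, and then $c_1\vec v_1+c_2\vec v_2$ is also in the inverse image because $h(c_1\vec v_1+c_2\vec v_2)=c_1h(\vec v_1)+c_2h(\vec v_2)$ is a member of the subspace $S$.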

- Definition 2.11

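The nullspace or kernel of a linear map $h\colon V\to W$ is the inverse image of $\vec 0_W$

$$\mathscr{N}(h)=h^{-1}(\vec 0_W)=\{\vec v\in V\mid h(\vec v)=\vec 0_W\}$$

The dimension of the nullspace is the map's nullity.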

- Example 2.12

The map from Example 2.3 has this nullspace $\mathscr{N}(d/dx)=\{a_0+0x+0x^2+0x^3\mid a_0\in\mathbb{R}\}$.

Now for the second insight from the above pictures. In Example 2.7, each of the vertical lines is squashed down to a single point: $\pi$, in passing from the domain to the range, takes all of these one-dimensional vertical lines and "zeroes them out", leaving the range one dimension smaller than the domain. Similarly, in Example 2.8, the two-dimensional domain is mapped to a one-dimensional range by breaking the domain into lines (here, they are diagonal lines), and compressing each of those lines to a single member of the range. Finally, in Example 2.9, the domain breaks into planes which get "zeroed out", and so the map starts with a three-dimensional domain but ends with a one-dimensional range; this map "subtracts" two from the dimension. (Notice that, in this third example, the codomain is two-dimensional but the range of the map is only one-dimensional, and it is the dimension of the range that is of interest.)

- Theorem 2.14

A linear map's rank plus its nullity equals the dimension of its domain.

- Proof

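Let $h\colon V\to W$ be linear and let $B_N=\langle\vec\beta_1,\dots,\vec\beta_k\rangle$ be a basis for the nullspace. Extend that to a basis $B_V=\langle\vec\beta_1,\dots,\vec\beta_k,\vec\beta_{k+1},\dots,\vec\beta_n\rangle$ for the entire domain. We shall show that $B_R=\langle h(\vec\beta_{k+1}),\dots,h(\vec\beta_n)\rangle$ is a basis for the rangespace. Then counting the size of these bases gives the result.

To see that $B_R$ is linearly independent, consider the equation $c_{k+1}h(\vec\beta_{k+1})+\dots+c_nh(\vec\beta_n)=\vec 0_W$. This gives that $h(c_{k+1}\vec\beta_{k+1}+\dots+c_n\vec\beta_n)=\vec 0_W$ and so $c_{k+1}\vec\beta_{k+1}+\dots+c_n\vec\beta_n$ is in the nullspace of $h$. As $B_N$ is a basis for this nullspace, there are scalars $c_1,\dots,c_k\in\mathbb{R}$ satisfying this relationship.

$$c_1\vec\beta_1+\dots+c_k\vec\beta_k=c_{k+1}\vec\beta_{k+1}+\dots+c_n\vec\beta_n$$

But $B_V$ is a basis for $V$ so each scalar equals zero. Therefore $B_R$ is linearly independent.

To show that $B_R$ spans the rangespace, consider $h(\vec v)\in\mathscr{R}(h)$ and write $\vec v$ as a linear combination $\vec v=c_1\vec\beta_1+\dots+c_n\vec\beta_n$ of members of $B_V$. This gives $h(\vec v)=c_1h(\vec\beta_1)+\dots+c_kh(\vec\beta_k)+c_{k+1}h(\vec\beta_{k+1})+\dots+c_nh(\vec\beta_n)$ and since $\vec\beta_1$, ..., $\vec\beta_k$ are in the nullspace, we have that $h(\vec v)=\vec 0+\dots+\vec 0+c_{k+1}h(\vec\beta_{k+1})+\dots+c_nh(\vec\beta_n)$. Thus, $h(\vec v)$ is a linear combination of members of $B_R$, and so $B_R$ spans the space.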
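As a concrete instance of the theorem, the Python sketch below takes the derivative $d/dx\colon\mathcal{P}_3\to\mathcal{P}_3$ of Examples 2.3 and 2.12, acting on coefficient vectors $(a_0,a_1,a_2,a_3)$, finds the rank by Gaussian elimination over exact fractions, builds a nullspace basis from the free columns, and checks that those vectors really map to zero. Storing the images of the basis $1,x,x^2,x^3$ as columns anticipates the matrix scheme of the next section; the helper names are our own, and elimination is just one convenient way to do the count.

```python
from fractions import Fraction

def derivative(coeffs):
    """d/dx on P_3, acting on coefficient vectors (a0, a1, a2, a3)."""
    a0, a1, a2, a3 = coeffs
    return (a1, 2 * a2, 3 * a3, 0)

def rref(A):
    """Reduced row echelon form over Fractions; returns (rows, pivot columns)."""
    A = [row[:] for row in A]
    pivots, r = [], 0
    for c in range(len(A[0])):
        p = next((i for i in range(r, len(A)) if A[i][c] != 0), None)
        if p is None:
            continue                      # no pivot in this column
        A[r], A[p] = A[p], A[r]
        lead = A[r][c]
        A[r] = [x / lead for x in A[r]]
        for i in range(len(A)):
            factor = A[i][c]
            if i != r and factor != 0:
                A[i] = [x - factor * y for x, y in zip(A[i], A[r])]
        pivots.append(c)
        r += 1
        if r == len(A):
            break
    return A, pivots

n = 4  # dim P_3, with basis 1, x, x^2, x^3
basis = [tuple(1 if i == j else 0 for i in range(n)) for j in range(n)]
images = [derivative(b) for b in basis]
# Column j of M holds the coordinates of d/dx applied to the j-th basis vector.
M = [[Fraction(images[j][i]) for j in range(n)] for i in range(n)]

R, pivots = rref(M)
rank = len(pivots)

# Each free (non-pivot) column gives one basis vector of the nullspace.
null_basis = []
for f in range(n):
    if f in pivots:
        continue
    v = [Fraction(0)] * n
    v[f] = Fraction(1)
    for row, pc in zip(R, pivots):
        v[pc] = -row[f]
    null_basis.append(tuple(v))

assert all(derivative(v) == (0, 0, 0, 0) for v in null_basis)
print("rank:", rank, "nullity:", len(null_basis), "dim of domain:", n)
```

It reports rank $3$ and nullity $1$, summing to $4=\dim\mathcal{P}_3$; the single nullspace vector is the constant polynomial $1$.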

- Example 2.15

Where is

the rangespace and nullspace are

and so the rank of is two while the nullity is one.

- Example 2.16

If is the linear transformation then the range is , and so the rank of is one and the nullity is zero.

- Corollary 2.17

The rank of a linear map is less than or equal to the dimension of the domain. Equality holds if and only if the nullity of the map is zero.

We know that an isomorphism exists between two spaces if and only if their dimensions are equal. Here we see that for a homomorphism to exist, the dimension of the range must be less than or equal to the dimension of the domain. For instance, there is no homomorphism from $\mathbb{R}^2$ onto $\mathbb{R}^3$. There are many homomorphisms from $\mathbb{R}^2$ into $\mathbb{R}^3$, but none is onto all of three-space.

The rangespace of a linear map can be of dimension strictly less than the dimension of the domain (Example 2.3's derivative transformation on $\mathcal{P}_3$ has a domain of dimension four but a range of dimension three). Thus, under a homomorphism, linearly independent sets in the domain may map to linearly dependent sets in the range (for instance, the derivative sends the independent set $\{1,x,x^2,x^3\}$ to $\{0,1,2x,3x^2\}$, which is dependent because it contains the zero polynomial). That is, under a homomorphism, independence may be lost. In contrast, dependence stays.

- Lemma 2.18

Under a linear map, the image of a linearly dependent set is linearly dependent.

- Proof

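Suppose that $\vec 0_V=c_1\vec v_1+\dots+c_n\vec v_n$, with some $c_i$ nonzero. Then, because $h(\vec 0_V)=\vec 0_W$ and because $h(c_1\vec v_1+\dots+c_n\vec v_n)=c_1h(\vec v_1)+\dots+c_nh(\vec v_n)$, we have that $\vec 0_W=c_1h(\vec v_1)+\dots+c_nh(\vec v_n)$ with some nonzero $c_i$.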

When is independence not lost? One obvious sufficient condition is when the homomorphism is an isomorphism. This condition is also necessary; see Problem 14. We will finish this subsection comparing homomorphisms with isomorphisms by observing that a one-to-one homomorphism is an isomorphism from its domain onto its range.

- Definition 2.19

A linear map that is one-to-one is nonsingular.

(In the next section we will see the connection between this use of "nonsingular" for maps and its familiar use for matrices.)

- Example 2.20

This nonsingular homomorphism

gives the obvious correspondence between and the -plane inside of .

The prior observation allows us to adapt some results about isomorphisms to this setting.

- Theorem 2.21

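In an $n$-dimensional vector space $V$, these:

- (1) $h$ is nonsingular, that is, one-to-one

- (2) $h$ has a linear inverse

- (3) $\mathscr{N}(h)=\{\vec 0\}$, that is, $\operatorname{nullity}(h)=0$

- (4) $\operatorname{rank}(h)=n$

- (5) if $\langle\vec\beta_1,\dots,\vec\beta_n\rangle$ is a basis for $V$ then $\langle h(\vec\beta_1),\dots,h(\vec\beta_n)\rangle$ is a basis for $\mathscr{R}(h)$

are equivalent statements about a linear map $h\colon V\to W$.

- Proof

We will first show that (1) $\iff$ (2). We will then show that (1) $\implies$ (3) $\implies$ (4) $\implies$ (5) $\implies$ (2).

For (1) $\implies$ (2), suppose that the linear map $h$ is one-to-one, and so has an inverse $h^{-1}\colon\mathscr{R}(h)\to V$. The domain of that inverse is the range of $h$ and so a linear combination of two members of that domain has the form $c_1h(\vec v_1)+c_2h(\vec v_2)$. On that combination, the inverse $h^{-1}$ gives this.

$$\begin{aligned}h^{-1}\big(c_1h(\vec v_1)+c_2h(\vec v_2)\big)&=h^{-1}\big(h(c_1\vec v_1+c_2\vec v_2)\big)\\&=c_1\vec v_1+c_2\vec v_2\\&=c_1h^{-1}(h(\vec v_1))+c_2h^{-1}(h(\vec v_2))\end{aligned}$$

Thus the inverse of a one-to-one linear map is automatically linear. But this also gives the (2) $\implies$ (1) implication, because the inverse itself must be one-to-one.

Of the remaining implications, (1) $\implies$ (3) holds because any homomorphism maps $\vec 0_V$ to $\vec 0_W$, but a one-to-one map sends at most one member of $V$ to $\vec 0_W$.

Next, (3) $\implies$ (4) is true since rank plus nullity equals the dimension of the domain.

For (4) $\implies$ (5), to show that $\langle h(\vec\beta_1),\dots,h(\vec\beta_n)\rangle$ is a basis for the rangespace we need only show that it is a spanning set, because by assumption the range has dimension $n$. Consider $h(\vec v)\in\mathscr{R}(h)$. Expressing $\vec v$ as a linear combination of basis elements produces $h(\vec v)=h(c_1\vec\beta_1+\dots+c_n\vec\beta_n)$, which gives that $h(\vec v)=c_1h(\vec\beta_1)+\dots+c_nh(\vec\beta_n)$, as desired.

Finally, for the (5) $\implies$ (2) implication, assume that $\langle\vec\beta_1,\dots,\vec\beta_n\rangle$ is a basis for $V$ so that $\langle h(\vec\beta_1),\dots,h(\vec\beta_n)\rangle$ is a basis for $\mathscr{R}(h)$. Then every $\vec w\in\mathscr{R}(h)$ has the unique representation $\vec w=c_1h(\vec\beta_1)+\dots+c_nh(\vec\beta_n)$. Define a map from $\mathscr{R}(h)$ to $V$ by

$$\vec w\mapsto c_1\vec\beta_1+\dots+c_n\vec\beta_n$$

(uniqueness of the representation makes this well-defined). Checking that it is linear and that it is the inverse of $h$ is easy.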
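The last step of the proof builds $h^{-1}$ by writing each $\vec w$ in terms of the basis $\langle h(\vec\beta_1),\dots,h(\vec\beta_n)\rangle$ and sending it to the same combination of the $\vec\beta$'s. Here is that construction in Python for a hypothetical nonsingular map of $\mathbb{R}^2$, using the standard basis. Solving for $c_1,c_2$ by Cramer's rule is our own shortcut for the $2\times 2$ case, not a method from the text, and the names `h_e1`, `h_e2`, and `h_inverse` are illustrative.

```python
from fractions import Fraction

# Hypothetical nonsingular h on R^2, fixed by the images of e1 and e2.
h_e1 = (Fraction(2), Fraction(1))
h_e2 = (Fraction(1), Fraction(1))

def h(v):
    x, y = v
    return (x * h_e1[0] + y * h_e2[0], x * h_e1[1] + y * h_e2[1])

def h_inverse(w):
    """Write w = c1*h(e1) + c2*h(e2) and return c1*e1 + c2*e2 = (c1, c2)."""
    det = h_e1[0] * h_e2[1] - h_e2[0] * h_e1[1]
    if det == 0:
        raise ValueError("h(e1), h(e2) is not a basis, so h is singular")
    c1 = (w[0] * h_e2[1] - h_e2[0] * w[1]) / det   # Cramer's rule
    c2 = (h_e1[0] * w[1] - w[0] * h_e1[1]) / det
    return (c1, c2)

v = (Fraction(3), Fraction(-4))
w = h(v)
assert w == (2, -1)
assert h_inverse(w) == v                                   # h_inverse undoes h
assert h(h_inverse((Fraction(5), Fraction(7)))) == (5, 7)  # h undoes h_inverse
print("h_inverse inverts h on the samples")
```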

We've now seen that a linear map shows how the structure of the domain is like that of the range. Such a map can be thought to organize the domain space into inverse images of points in the range. In the special case that the map is one-to-one, each inverse image is a single point and the map is an isomorphism between the domain and the range.

Exercises

- This exercise is recommended for all readers.

- Problem 1

Let be given by . Which of these are in the nullspace? Which are in the rangespace?

- This exercise is recommended for all readers.

- Problem 2

Find the nullspace, nullity, rangespace, and rank of each map.

- given by

- given by

- given by

- the zero map

- This exercise is recommended for all readers.

- Problem 3

Find the nullity of each map.

- of rank five

- of rank one

- , an onto map

- , onto

- This exercise is recommended for all readers.

- Problem 4

What is the nullspace of the differentiation transformation $d/dx\colon\mathcal{P}_n\to\mathcal{P}_n$? What is the nullspace of the second derivative, as a transformation of $\mathcal{P}_n$? The $k$-th derivative?

- Problem 5

Example 2.7 restates the first condition in the definition of homomorphism as "the shadow of a sum is the sum of the shadows". Restate the second condition in the same style.

- Problem 6

For the homomorphism given by find these.

- This exercise is recommended for all readers.

- Problem 7

For the map given by

sketch these inverse image sets: , , and .

- This exercise is recommended for all readers.

- Problem 8

Each of these transformations of is nonsingular. Find the inverse function of each.

- Problem 9

Describe the nullspace and rangespace of a transformation given by .

- Problem 10

List all pairs that are possible for linear maps from to .

- Problem 11

Does the differentiation map have an inverse?

- This exercise is recommended for all readers.

- Problem 12

Find the nullity of the map given by

- Problem 13

- Prove that a homomorphism is onto if and only if its rank equals the dimension of its codomain.

- Conclude that a homomorphism between vector spaces with the same dimension is one-to-one if and only if it is onto.

- Problem 14

Show that a linear map is nonsingular if and only if it preserves linear independence.

- Problem 15

Corollary 2.17 says that for there to be an onto homomorphism from a vector space $V$ to a vector space $W$, it is necessary that the dimension of $W$ be less than or equal to the dimension of $V$. Prove that this condition is also sufficient; use Theorem 1.9 to show that if the dimension of $W$ is less than or equal to the dimension of $V$, then there is a homomorphism from $V$ to $W$ that is onto.

- Problem 16

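Let $h\colon V\to\mathbb{R}$ be a homomorphism, but not the zero homomorphism. Prove that if $\langle\vec\beta_1,\dots,\vec\beta_n\rangle$ is a basis for the nullspace and if $\vec v\in V$ is not in the nullspace then $\langle\vec v,\vec\beta_1,\dots,\vec\beta_n\rangle$ is a basis for the entire domain $V$.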

- This exercise is recommended for all readers.

- Problem 17

Recall that the nullspace is a subset of the domain and the rangespace is a subset of the codomain. Are they necessarily distinct? Is there a homomorphism that has a nontrivial intersection of its nullspace and its rangespace?

- Problem 18

Prove that the image of a span equals the span of the images. That is, where is linear, prove that if is a subset of then equals . This generalizes Lemma 2.1 since it shows that if is any subspace of then its image is a subspace of , because the span of the set is .

- This exercise is recommended for all readers.

- Problem 19

- Prove that for any linear map and any , the set has the form

- Consider the map given by

- Conclude from the prior two items that for any linear system of the form

- Show that this map is linear

- Show that the -th derivative map is a linear transformation of

for each . Prove that this map is a linear transformation of that space.

- Problem 20

Prove that for any transformation that is rank one, the map given by composing the operator with itself satisfies for some real number .

- Problem 21

Show that for any space of dimension , the dual space

is isomorphic to . It is often denoted . Conclude that .

- Problem 22

Show that any linear map is the sum of maps of rank one.

- Problem 23

Is "is homomorphic to" an equivalence relation? (Hint: the difficulty is to decide on an appropriate meaning for the quoted phrase.)

- Problem 24

Show that the rangespaces and nullspaces of powers of linear maps form descending

and ascending

chains. Also show that if is such that then all following rangespaces are equal: . Similarly, if then .

Footnotes

- More information on many-to-one maps is in the appendix.

Section III - Computing Linear Maps

The prior section shows that a linear map is determined by its action on a basis. In fact, the equation

$h(\vec{v}) = h(c_1\vec{\beta}_1+\cdots+c_n\vec{\beta}_n) = c_1h(\vec{\beta}_1)+\cdots+c_nh(\vec{\beta}_n)$

shows that, if we know the value of the map on the vectors in a basis, then we can compute the value of the map on any vector at all. We just need to find the $c$'s to express $\vec{v}$ with respect to the basis.
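A minimal Python sketch of this computation follows. It assumes a hypothetical map from the plane to three-space whose values on the basis (1, 1), (1, -1) are (2, 0, 1) and (0, 2, 1); these values happen to come from h(x, y) = (x + y, x - y, x), so the answer can be checked by hand. The helper names `coords_2d` and `apply_from_basis_values` are illustrative, and Cramer's rule is used to find the coefficients only because the domain is two-dimensional.

```python
from fractions import Fraction


def coords_2d(basis, v):
    """Return (c1, c2) with v = c1*b1 + c2*b2, using Cramer's rule."""
    (p, r), (q, s) = basis  # b1 = (p, r), b2 = (q, s)
    det = p * s - q * r
    if det == 0:
        raise ValueError("the two vectors do not form a basis")
    x, y = v
    return Fraction(x * s - q * y, det), Fraction(p * y - x * r, det)


def apply_from_basis_values(basis, images, v):
    """Compute h(v) = c1*h(b1) + c2*h(b2) from the images of the basis vectors."""
    c1, c2 = coords_2d(basis, v)
    return tuple(c1 * u + c2 * w for u, w in zip(*images))


# Hypothetical data: a basis of the plane and the values of h on it.
basis = [(1, 1), (1, -1)]
images = [(2, 0, 1), (0, 2, 1)]  # h(b1), h(b2)

result = apply_from_basis_values(basis, images, (3, 1))
# Here c1 = 2 and c2 = 1, so result == (4, 2, 3), matching h(3, 1) directly.
```

Only the two images and the coefficients are used; the formula for h is never consulted inside `apply_from_basis_values`.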

This section gives the scheme that computes, from the representation of a vector $\vec{v}$ in the domain, the representation of that vector's image $h(\vec{v})$ in the codomain, using the representations of $h(\vec{\beta}_1)$, ..., $h(\vec{\beta}_n)$.

1 - Representing Linear Maps with Matrices

- Example 1.1

Consider a map with domain and codomain (fixing

as the bases for these spaces) that is determined by this action on the vectors in the domain's basis.

To compute the action of this map on any vector at all from the domain, we first express and with respect to the codomain's basis:

and

(these are easy to check). Then, as described in the preamble, for any member of the domain, we can express the image in terms of the 's.

Thus,

with then .

For instance,

with then .

We will express computations like the one above with a matrix notation.

In the middle is the argument to the map, represented with respect to the domain's basis by a column vector with components and . On the right is the value of the map on that argument, represented with respect to the codomain's basis by a column vector with components , etc. The matrix on the left is the new thing. It consists of the coefficients from the vector on the right, and from the first row, and from the second row, and and from the third row.

This notation simply breaks the parts from the right, the coefficients and the 's, out separately on the left, into a vector that represents the map's argument and a matrix that we will take to represent the map itself.

- Definition 1.2

Suppose that and are vector spaces of dimensions and with bases and , and that is a linear map. If

then

is the matrix representation of with respect to .

Briefly, the vectors representing the 's are adjoined to make the matrix representing the map.

Observe that the number of columns of the matrix is the dimension of the domain of the map, and the number of rows is the dimension of the codomain.
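To make the adjoining concrete, here is a small Python sketch that stacks given representation vectors as the columns of a matrix stored as a list of rows. The column vectors are hypothetical stand-ins for the representations of the images of two domain basis vectors with respect to a three-dimensional codomain's basis, and the function name `adjoin_columns` is an assumption for illustration. The shape checks at the end restate the observation above.

```python
def adjoin_columns(columns):
    """Return the matrix (as a list of rows) whose j-th column is columns[j]."""
    if not columns:
        raise ValueError("need at least one column")
    height = len(columns[0])
    if any(len(col) != height for col in columns):
        raise ValueError("all representations must have the same height")
    return [[col[i] for col in columns] for i in range(height)]


# Hypothetical representations of h(beta_1) and h(beta_2) in a 3-dim codomain.
rep_images = [(1, 0, 2), (3, -1, 0)]
H = adjoin_columns(rep_images)
# H == [[1, 3], [0, -1], [2, 0]]

rows, cols = len(H), len(H[0])
assert cols == len(rep_images)  # one column per domain basis vector
assert rows == 3                # one row per codomain basis vector
```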

- Example 1.3

If is given by

then where

the action of on is given by

and a simple calculation gives

showing that this is the matrix representing with respect to the bases.

We will use lower case letters for a map, upper case for the matrix, and lower case again for the entries of the matrix. Thus for the map , the matrix representing it is , with entries .

- Theorem 1.4

Assume that and are vector spaces of dimensions and with bases and , and that is a linear map. If is represented by

and is represented by

then the representation of the image of is this.

- Proof

We will think of the matrix and the vector as combining to make the vector .

- Definition 1.5

The matrix-vector product of a matrix and a vector is this.
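As a sketch of this definition, the Python below computes entry i of the product of an m-by-n matrix with entries h_{i,j} and an n-tall vector with entries c_j as h_{i,1}c_1 + ... + h_{i,n}c_n, which is the usual entrywise rule. The function name `mat_vec` is illustrative; it refuses inputs whose sizes do not match, in line with the size requirement discussed in Example 1.9.

```python
def mat_vec(H, c):
    """Matrix-vector product: entry i is H[i][0]*c[0] + ... + H[i][n-1]*c[n-1]."""
    n = len(c)
    if any(len(row) != n for row in H):
        raise ValueError("the width of the matrix must equal the height of the vector")
    product = []
    for i in range(len(H)):   # one entry per row of H
        entry = 0
        for j in range(n):    # sum across the i-th row
            entry += H[i][j] * c[j]
        product.append(entry)
    return product


print(mat_vec([[1, 3], [0, -1], [2, 0]], [4, 5]))  # [19, -5, 8]
```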

The point of Definition 1.2 is to generalize Example 1.1; that is, the point of the definition is Theorem 1.4: the matrix describes how to get from the representation of a domain vector with respect to the domain's basis to the representation of its image in the codomain with respect to the codomain's basis. With Definition 1.5, we can restate this as follows: applying a linear map is represented by the matrix-vector product of the map's representative and the vector's representative.
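The following Python sketch checks that restatement on one hypothetical example: the map h(x, y) = (x + 2y, 3x) on the plane, with domain basis (1, 1), (0, 1) and codomain basis (1, 0), (1, 1). It represents the image of each domain basis vector, adjoins those columns, and then compares representing h(v) directly against multiplying the matrix by the representation of v. The map, the bases, and the helper names are assumptions chosen for illustration.

```python
from fractions import Fraction


def coords_2d(basis, v):
    """Return (c1, c2) with v = c1*b1 + c2*b2, using Cramer's rule."""
    (p, r), (q, s) = basis
    det = p * s - q * r
    if det == 0:
        raise ValueError("the two vectors do not form a basis")
    x, y = v
    return Fraction(x * s - q * y, det), Fraction(p * y - x * r, det)


def h(v):
    """Hypothetical linear map of the plane: h(x, y) = (x + 2y, 3x)."""
    x, y = v
    return (x + 2 * y, 3 * x)


def mat_vec(H, c):
    """Entry i is the sum over j of H[i][j] * c[j]."""
    return [sum(a * b for a, b in zip(row, c)) for row in H]


B = [(1, 1), (0, 1)]  # hypothetical domain basis
D = [(1, 0), (1, 1)]  # hypothetical codomain basis

# Column j is the representation of h(beta_j) with respect to D.
columns = [coords_2d(D, h(beta)) for beta in B]
H = [list(row) for row in zip(*columns)]  # H == [[0, 2], [3, 0]]

v = (2, 5)
lhs = list(coords_2d(D, h(v)))     # represent the image directly
rhs = mat_vec(H, coords_2d(B, v))  # multiply the two representations
assert lhs == rhs                  # both are [6, 6]
```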

- Example 1.6

With the matrix from Example 1.3 we can calculate where that map sends this vector.

This vector is represented, with respect to the domain basis , by

and so this is the representation of the value with respect to the codomain basis .

To find itself, not its representation, take .
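Recovering a vector from its representation amounts to forming a linear combination of the basis vectors, with the representation's entries as coefficients. A minimal Python sketch, using a hypothetical basis of three-space, a hypothetical representation, and an illustrative helper name `from_rep`:

```python
def from_rep(rep, basis):
    """Return rep[0]*basis[0] + ... + rep[k-1]*basis[k-1], componentwise."""
    if len(rep) != len(basis):
        raise ValueError("need exactly one coefficient per basis vector")
    dim = len(basis[0])
    return tuple(sum(coef * b[i] for coef, b in zip(rep, basis)) for i in range(dim))


# Hypothetical basis of R^3 and a hypothetical representation.
D = [(1, 1, 0), (0, 1, 1), (1, 0, 1)]
print(from_rep((2, -1, 3), D))  # (5, 1, 2)
```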

- Example 1.7

Let be projection onto the -plane. To give a matrix representing this map, we first fix bases.

For each vector in the domain's basis, we find its image under the map.

Then we find the representation of each image with respect to the codomain's basis

(these are easily checked). Finally, adjoining these representations gives the matrix representing with respect to .

We can illustrate Theorem 1.4 by computing the matrix-vector product representing the following statement about the projection map.

Representing this vector from the domain with respect to the domain's basis

gives this matrix-vector product.

Expanding this representation into a linear combination of vectors from

checks that the map's action is indeed reflected in the operation of the matrix. (We will sometimes compress these three displayed equations into one

in the course of a calculation.)

We now have two ways to compute the effect of projection, the straightforward formula that drops each three-tall vector's third component to make a two-tall vector, and the above formula that uses representations and matrix-vector multiplication. Compared to the first way, the second way might seem complicated. However, it has advantages. The next example shows that giving a formula for some maps is simplified by this new scheme.
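The two ways can be compared directly in a short Python sketch. To keep it short, the sketch uses the standard bases of three-space and the plane rather than the bases fixed in Example 1.7; with standard bases every vector represents itself, and the matrix simply has the images of the standard basis vectors as its columns. Function names are illustrative.

```python
def project_directly(v):
    """The straightforward formula: drop the third component."""
    x, y, _z = v
    return (x, y)


def mat_vec(H, c):
    """Entry i is the dot product of row i of H with c."""
    return [sum(h * x for h, x in zip(row, c)) for row in H]


# Columns are the images of e1, e2, e3 under projection, in standard coordinates.
P = [[1, 0, 0],
     [0, 1, 0]]

for v in [(1, 2, 3), (-4, 0, 7), (0, 0, 5)]:
    assert project_directly(v) == tuple(mat_vec(P, v))
```

With other bases the matrix changes, but the same comparison holds once each side is expressed in the same coordinates.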

- Example 1.8

To represent a rotation map that turns all vectors in the plane counterclockwise through an angle

we start by fixing bases. Using the standard basis both as a domain basis and as a codomain basis is natural. Now, we find the image under the map of each vector in the domain's basis.

Then we represent these images with respect to the codomain's basis. Because this basis is the standard basis, vectors are represented by themselves. Finally, adjoining the representations gives the matrix representing the map.

The advantage of this scheme is that just by knowing how to represent the image of the two basis vectors, we get a formula that tells us the image of any vector at all; here a vector rotated by .

(Again, we are using the fact that, with respect to the standard basis, vectors represent themselves.)
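In Python, the recipe of this example might be sketched as follows. The columns of the matrix are the images of the two standard basis vectors under counterclockwise rotation by theta, namely (cos theta, sin theta) and (-sin theta, cos theta). The function names are illustrative, and the floating-point results are only approximate.

```python
import math


def rotation_matrix(theta):
    """Adjoin the images of e1 and e2 under counterclockwise rotation by theta."""
    e1_image = (math.cos(theta), math.sin(theta))
    e2_image = (-math.sin(theta), math.cos(theta))
    return [[e1_image[0], e2_image[0]],
            [e1_image[1], e2_image[1]]]


def rotate(theta, v):
    """Apply the rotation by multiplying its matrix by v (standard basis)."""
    R = rotation_matrix(theta)
    x, y = v
    return (R[0][0] * x + R[0][1] * y,
            R[1][0] * x + R[1][1] * y)


x, y = rotate(math.pi / 2, (2, 1))
# A quarter turn sends (2, 1) to approximately (-1, 2).
assert math.isclose(x, -1) and math.isclose(y, 2)
```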

We have already seen the addition and scalar multiplication operations of matrices and the dot product operation of vectors. Matrix-vector multiplication is a new operation in the arithmetic of vectors and matrices. Nothing in Definition 1.5 requires us to view it in terms of representations. We can get some insight into this operation by turning away from what is being represented, and instead focusing on how the entries combine.

- Example 1.9

In the definition the width of the matrix equals the height of the vector. Hence, the first product below is defined while the second is not.

One reason that this product is not defined is purely formal: the definition requires that the sizes match, and these sizes don't match. Behind the formality, though, is a reason why we will leave it undefined: the matrix represents a map with a three-dimensional domain while the vector represents a member of a two-dimensional space.

A good way to view a matrix-vector product is as the dot products of the rows of the matrix with the column vector.

Looked at in this row-by-row way, this new operation generalizes dot product.
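A sketch of the row-by-row view in Python: each entry of the product is a dot product, and a matrix with a single row gives back the ordinary dot product as a one-entry vector. The function names and data are illustrative.

```python
def dot(u, w):
    """Dot product of two vectors of the same length."""
    if len(u) != len(w):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, w))


def mat_vec_by_rows(H, c):
    """Entry i of the product is the dot product of row i of H with c."""
    return [dot(row, c) for row in H]


H = [[1, 0, 2],
     [3, -1, 1]]
print(mat_vec_by_rows(H, [1, 2, 3]))            # [7, 4]
print(mat_vec_by_rows([[1, 2, 3]], [4, 5, 6]))  # [32], the dot product
```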

Matrix-vector product can also be viewed column-by-column.

- Example 1.10

The result has the columns of the matrix weighted by the entries of the vector. This way of looking at it brings us back to the objective stated at the start of this section, to compute as .
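The column-by-column view can be sketched the same way: start from the zero vector and add each column of the matrix scaled by the matching entry of the vector. On the illustrative data below, 1(1, 3) + 2(0, -1) + 3(2, 1) = (7, 4), which is the same result as taking the dot products of the rows with the vector.

```python
def mat_vec_by_columns(H, c):
    """Return c[0]*(column 0 of H) + ... + c[n-1]*(column n-1 of H)."""
    if any(len(row) != len(c) for row in H):
        raise ValueError("the width of the matrix must equal the height of the vector")
    result = [0] * len(H)
    for j, weight in enumerate(c):  # weight column j by c[j]
        for i in range(len(H)):
            result[i] += weight * H[i][j]
    return result


H = [[1, 0, 2],
     [3, -1, 1]]
print(mat_vec_by_columns(H, [1, 2, 3]))  # [7, 4]
```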

We began this section by noting that the equality of these two enables us to compute the action of on any argument knowing only , ..., . We have developed this into a scheme to compute the action of the map by taking the matrix-vector product of the matrix representing the map and the vector representing the argument. In this way, any linear map is represented with respect to some bases by a matrix. In the next subsection, we will show the converse, that any matrix represents a linear map.

Exercises

- This exercise is recommended for all readers.

- Problem 1

Multiply the matrix

by each vector (or state "not defined").

- Problem 2

Perform, if possible, each matrix-vector multiplication.

- This exercise is recommended for all readers.

- Problem 3

Solve this matrix equation.

- This exercise is recommended for all readers.

- Problem 4

For a homomorphism from to that sends

where does go?

- This exercise is recommended for all readers.

- Problem 5

Assume that is determined by this action.

Using the standard bases, find

- the matrix representing this map;

- a general formula for .

- This exercise is recommended for all readers.

- Problem 6

Let be the derivative transformation.

- Represent with respect to where .

- Represent with respect to where .

- This exercise is recommended for all readers.

- Problem 7

Represent each linear map with respect to each pair of bases.

- with respect to

where , given by

- with respect to

where , given by

- with respect to

where

and , given by

- with respect to

where

and , given by

- with respect to

where , given by

- Problem 8

Represent the identity map on any nontrivial space with respect to , where is any basis.

- Problem 9

Represent, with respect to the natural basis, the transpose transformation on the space of matrices.

- Problem 10

Assume that is a basis for a vector space. Represent with respect to the transformation that is determined by each.

- , , ,

- , , ,

- , , ,

- Problem 11

Example 1.8 shows how to represent the rotation transformation of the plane with respect to the standard basis. Express these other transformations also with respect to the standard basis.

- the dilation map , which multiplies all vectors by the same scalar

- the reflection map , which reflects all vectors across a line through the origin

- This exercise is recommended for all readers.

- Problem 12

Consider a linear transformation of determined by these two.