Systems Theory/Printable version


The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/Systems_Theory

Introduction

Systems theory, also called general systems theory or systemics, is an interdisciplinary field that studies systems as a whole. Systems theory was founded by Ludwig von Bertalanffy, William Ross Ashby and others between the 1940s and the 1970s on principles from physics, biology and engineering. It later grew into numerous fields, including philosophy, sociology, organizational theory, management, psychotherapy (within family systems therapy) and economics. Cybernetics is a closely related field. In recent times, "complex systems" has increasingly been used as a synonym.

Overview

Systems theory focuses on complexity and interdependence. A system is composed of regularly interacting or interdependent groups of activities/parts that form a whole.

System dynamics, part of systems theory, is a method for understanding the dynamic behavior of complex systems. The method rests on the recognition that the structure of a system (the many circular, interlocking, sometimes time-delayed relationships among its components) is often just as important in determining its behavior as the individual components themselves. Chaos theory and social dynamics are examples of fields that study such behavior.
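The effect of a time-delayed relationship can be seen in a very small feedback loop. The sketch below is a hypothetical stock-adjustment model, not one taken from this text: each step, a stock is corrected toward a target by a fraction `gain` of the perceived gap, but the stock is perceived `delay` steps late. The function name and all parameter values are illustrative assumptions.

```python
def simulate_stock(target: float, gain: float, delay: int, steps: int) -> list[float]:
    """Hypothetical feedback loop: correct a stock toward `target`.

    Each step the stock changes by gain * (target - observed), where `observed`
    is the stock as it was `delay` steps earlier (delay=0 means no lag).
    Returns the stock at times 0, 1, ..., steps.
    """
    # Pad the history so that "delay steps ago" exists at t = 0; the stock starts at 0.
    history = [0.0] * (delay + 1)
    for _ in range(steps):
        current = history[-1]
        observed = history[-1 - delay]  # the (possibly stale) information the decision uses
        history.append(current + gain * (target - observed))
    return history[delay:]  # drop the padding so index 0 is time 0


smooth = simulate_stock(target=100.0, gain=0.2, delay=0, steps=30)
delayed = simulate_stock(target=100.0, gain=0.2, delay=3, steps=30)
print(max(smooth))   # stays below 100: the gap closes smoothly
print(max(delayed))  # rises above 100 (to roughly 121) because the loop acts on old data
```

The components and the correction rule are identical in both runs; only the structure of the loop (the delay) differs, and that alone produces overshoot and oscillation.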

Systems theory has also been developed within sociology, where the most notable scientist is Niklas Luhmann (see Luhmann 1994). The systems framework is also fundamental to organizational theory, as organizations are dynamic, living entities that are goal-oriented. The systems approach to organizations relies heavily on achieving negative entropy through openness and feedback.

In recent years, the field of systems thinking has been developed to provide techniques for studying systems holistically, supplementing more traditional reductionist methods. In this more recent tradition, some consider systems theory to be a humanistic counterpart to the natural sciences.

History

Subjects like complexity, self-organization, connectionism and adaptive systems had already been studied in the 1940s and 1950s in fields like cybernetics, by researchers such as Norbert Wiener, William Ross Ashby, John von Neumann and Heinz von Foerster. They lacked only the right tools, and tackled complex systems with mathematics, pencil and paper. John von Neumann discovered cellular automata and self-reproducing systems without computers, using only pencil and paper. Aleksandr Lyapunov and Jules Henri Poincaré worked on the foundations of chaos theory without any computer at all.

All of the "C"-theories below (cybernetics, catastrophe theory, chaos theory, and so on) share the goal of explaining complex systems that consist of a large number of mutually interacting and interwoven parts. Cellular automata (CA), neural networks (NN), artificial intelligence (AI) and artificial life (ALife) are related fields, but they do not try to describe general complex systems. The different "C"-theories are best compared historically, which highlights their different tools and methodologies, from pure mathematics at the beginning to pure computer science today. Since the beginnings of chaos theory, when Edward Lorenz accidentally discovered a strange attractor with his computer, computers have become an indispensable source of information; the study of complex systems today is hard to imagine without them.

Timeline

- 1960 cybernetics (W. Ross Ashby, Norbert Wiener): mathematical theory of the communication and control of systems through regulatory feedback. Closely related: "control theory" and "general systems theory", founded by Ludwig von Bertalanffy and W. Ross Ashby.

- 1970 catastrophe theory (René Thom, E.C. Zeeman): branch of mathematics that deals with bifurcations in dynamical systems and classifies phenomena characterized by sudden shifts in behavior arising from small changes in circumstances.

- 1980 chaos theory (David Ruelle, Edward Lorenz, Mitchell Feigenbaum, Steve Smale, James A. Yorke, ...): mathematical theory of nonlinear dynamical systems that describes bifurcations, strange attractors and chaotic motions.

- 1990 complex adaptive systems (CAS) (John H. Holland, Murray Gell-Mann, Harold Morowitz, W. Brian Arthur, ...): the "new" science of complexity, which describes emergence, adaptation and self-organization. It was established mainly by researchers at the Santa Fe Institute (SFI), is based on agents and computer simulations, and includes multi-agent systems (MAS), which have become an important tool for studying social and complex systems. CAS remain an active field of research.

References

- Daniel Durand (1979). La systémique. Presses Universitaires de France.

- Ludwig von Bertalanffy (1968). General System Theory: Foundations, Development, Applications. New York: George Braziller.

- Gerald M. Weinberg (1975). An Introduction to General Systems Thinking. Wiley-Interscience (2001 ed., Dorset House).

- Niklas Luhmann (1994). Soziale Systeme. Grundriss einer allgemeinen Theorie. Frankfurt: Suhrkamp.

- Herman Kahn. Techniques of System Analysis.

See also

- William Ross Ashby

- autopoiesis

- cybernetics

- Buckminster Fuller

- system

- systems theory in archaeology

- systems thinking

- systemantics

- Important publications in systems theory

- Tectology

- Hierarchical system theory

External links

- Principia Cybernetica Web

- International Society for the System Sciences

- Autopoiesis at the ACM website

- Systems theory

- Le Village Systémique

Un-annotated external links

- http://mvhs1.mbhs.edu/mvhsproj/project2.html

- http://www.geom.umn.edu/education/math5337/ds/

- http://www.albany.edu/cpr/sds/

- http://www.uni-klu.ac.at/users/gossimit/links/bookmksd.htm

- http://www.wkap.nl/journalhome.htm/0924-6703

- http://www.wkap.nl/jrnltoc.htm/0924-6703

Systems Approach to Instruction

What is a System

Figure: entities and connection

A system, S, is viewed as a whole made up of many interconnected parts, or subsystems. To be considered a system, S must have one or more objectives. In turn, each subsystem may itself be viewed as a system, leading to a hierarchy of systems (or subsystems). The system's parts work together as a whole to accomplish the system's objective(s) by performing certain tasks; a computer is one example. A system can also be defined as separate bodies coming together to form a whole.

Keywords: system, subsystem, interconnection, objective
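The definition above (objectives, interconnected subsystems, and subsystems that are themselves systems) maps naturally onto a recursive data structure. The sketch below is one possible encoding under that reading; the `System` class, its field names and the breakdown of a computer are all hypothetical illustrations, not taken from the text.

```python
from dataclasses import dataclass, field


@dataclass
class System:
    """Hypothetical encoding: a whole made of interconnected subsystems with objectives."""
    name: str
    objectives: list[str]
    subsystems: list["System"] = field(default_factory=list)
    # Interconnections between subsystems, as (from_name, to_name) pairs.
    connections: list[tuple[str, str]] = field(default_factory=list)

    def __post_init__(self) -> None:
        # The definition requires at least one objective.
        if not self.objectives:
            raise ValueError(f"{self.name!r} has no objective, so it is not a system")
        names = {s.name for s in self.subsystems}
        for a, b in self.connections:
            if a not in names or b not in names:
                raise ValueError(f"connection {a}->{b} refers to an unknown subsystem")

    def depth(self) -> int:
        """Number of levels in the hierarchy rooted at this system."""
        return 1 + max((s.depth() for s in self.subsystems), default=0)


# A computer viewed as a system of systems (a hypothetical breakdown).
cpu = System("CPU", ["execute instructions"], [System("ALU", ["do arithmetic"])])
memory = System("memory", ["store data"])
computer = System(
    "computer",
    ["process information"],
    subsystems=[cpu, memory],
    connections=[("CPU", "memory"), ("memory", "CPU")],
)
print(computer.depth())  # 3: computer -> CPU -> ALU
```

Because each subsystem is itself a `System`, the hierarchy can be nested to any depth, and the constructor rejects anything without an objective.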

Systems Approach

The systems approach grew out of the 1950s and 1960s focus on language laboratories, teaching machines, programmed instruction, multimedia presentations and the use of the computer in instruction. Most systems approaches resemble computer flow charts, with steps that the designer moves through while developing instruction. Rooted in the military and business world, the systems approach involved setting goals and objectives, analyzing resources, devising a plan of action, and continuously evaluating and modifying the program (Saettler, 1990).

Who This Book is For

How This Book is Organized


Order-Chaos

Order and Chaos

Systems exhibit behaviors. These behaviors result from the parameters applied to the system’s elements, that is, the rules. The resulting behavior is interpreted based on knowledge of the current system and the anticipated results of various inputs and processes. When systems behave in the expected manner, they are generally regarded as stable and thus in order. The term “order” is not absolute, however. Terms such as “stable”, “balanced” and “in order” generally describe all known (considered) inputs and outputs of a system: based on those factors alone, the system appears to be exhibiting the desired behaviors.

Order describes the history of a system or system segment. Order illustrates that a system has responded to a rule or rules that have made the system behave in a manner that is expected. Specific forms of order exist in many systems: homeostasis, autonomy and chaos. These forms of order describe the system’s inherent behavior and how fluctuations in a system can occur.

Homeostasis

When systems respond to external forces by eventually returning to their starting points, they are considered homeostatic. Living systems offer an example of this form of order. A population of animals supported by an island will, over time, maintain a certain number. An external disease, predator or other force may diminish the number, but eventually the population will increase again (all other forces being equal). If the population spikes, food shortages will eventually lead to fewer animals. These natural laws work to create homeostatic order. Systems do not require external forces to create fluctuations, though. Often, systems that maintain the same inputs and processes over time experience diminishing desired outputs due to entropy (see the Wikipedia article on entropy).
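The island example can be imitated with the discrete logistic growth model, a standard textbook model swapped in here for illustration (the text does not name one). The carrying capacity of 1000 animals, the growth rate and the function name are hypothetical; the point is only that a population pushed below or above the capacity drifts back to it.

```python
def island_population(start: float, capacity: float = 1000.0,
                      rate: float = 0.5, years: int = 60) -> list[float]:
    """Hypothetical logistic model: growth below `capacity`, shrinkage above it."""
    path = [start]
    for _ in range(years):
        p = path[-1]
        # Births outpace deaths while p < capacity; food shortage reverses this above it.
        path.append(p + rate * p * (1 - p / capacity))
    return path


after_disease = island_population(200.0)  # population knocked down
after_spike = island_population(1500.0)   # population overshoots the food supply
print(round(after_disease[-1]), round(after_spike[-1]))  # both end at about 1000
```

In this particular model, raising `rate` above 2 makes the population stop settling and begin to oscillate instead, which is one way a parameter change can turn homeostatic order into a fluctuating one.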

Autonomy

Systems that fluctuate or change continually but follow an average output exhibit autonomy. Autonomy is best described as oscillation in a system over a period of time. Although this oscillation is not necessarily harmonic motion, it does tend to stay around a general mean. A small change in the input parameters of a homeostatic system can create autonomic order.
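A common way to see a small parameter change turn a settling system into an oscillating one is the logistic map x → r·x·(1 − x), used here as an assumed stand-in (the text gives no specific model); `r` plays the role of the input parameter. The helper name and the chosen values of `r` are illustrative.

```python
def long_run_values(r: float, x0: float = 0.2, transient: int = 1000,
                    keep: int = 8) -> list[float]:
    """Iterate x -> r*x*(1-x), discard the transient, return the next `keep` values."""
    x = x0
    for _ in range(transient):
        x = r * x * (1 - x)
    values = []
    for _ in range(keep):
        x = r * x * (1 - x)
        values.append(round(x, 6))  # rounding hides tiny floating-point jitter
    return values


settled = long_run_values(2.8)      # homeostatic: one repeated value
oscillating = long_run_values(3.2)  # autonomic: alternates between two values
print(sorted(set(settled)))
print(sorted(set(oscillating)), sum(oscillating) / len(oscillating))
```

Moving `r` from 2.8 to 3.2 replaces a single resting value with a steady oscillation between two values, and the oscillation keeps a stable mean.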

Chaos

If the system fluctuates further, it can become unpredictable. This does not indicate system failure; rather, the behavior suggests “deterministic unpredictability”: the concept that nearly identical inputs can generate very different results, even though the system follows fixed rules. This unpredictability is often considered chaotic. The term “chaos” does not mean that the system is failing or will fail; rather, it describes a system that cannot be predicted with full certainty. In practice, systems are often labeled chaotic simply because their results cannot be known in advance. However, unpredictable or complex output does not by itself make a system chaotic: a chaotic system is still governed by underlying rules. When no underlying rule governs an unpredictable system, it is considered non-deterministic.
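Deterministic unpredictability can be demonstrated with the logistic map at r = 3.9, a widely used textbook example of chaos that is assumed here for illustration. The rule is fixed, so identical inputs always give identical outputs; an input that differs only in the tenth decimal place, however, soon produces a completely different trajectory. The function name and the values chosen are illustrative.

```python
def logistic_trajectory(x0: float, r: float = 3.9, steps: int = 200) -> list[float]:
    """Iterate the deterministic rule x -> r*x*(1-x) starting from x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2)          # exactly the same input
c = logistic_trajectory(0.2 + 1e-10)  # an input differing by one part in ten billion

print(a == b)  # True: same rule and same input give the same output
gaps = [abs(x - y) for x, y in zip(a, c)]
first_big = next(i for i, g in enumerate(gaps) if g > 0.1)
print(first_big)  # step at which the tiny input difference has grown past 0.1
```

Predicting `c` from `a` would require knowing the starting value to ever more decimal places, which is why such a system cannot be predicted with full certainty even though nothing in it is random.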

An example of a non-deterministic system is a coin flip. Although the coin has only two sides, predicting which side will land face-up is quite difficult. There is no underlying rule that governs the coin flip; rather, the outcome results from the interaction of several inputs: the force of the flip, the original position, friction of fingernails, wind speed and direction, height of the flip, rotational velocity, the gravity of the body on which the person is standing, and so on. Unpredictability results from not being able to consider all of these factors. Although we may assume that after many flips each side will land face-up equally often, this is not a definite prediction. This system is not considered autonomic, since it does not internally correct itself, nor does its small number of possible outcomes make it homeostatic.
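The difference between "each side lands face-up equally often" and a definite prediction can be checked with a quick simulation. Here a seeded pseudo-random generator stands in for the many unmeasured physical factors; that is a modeling shortcut assumed for this sketch, not a model of the physics, and `flip_coins` is a hypothetical helper.

```python
import random


def flip_coins(n: int, seed: int) -> tuple[int, int]:
    """Simulate n fair coin flips and return (heads, tails).

    The seeded pseudo-random generator is a stand-in for the unmeasured
    physical factors; it is an assumption, not a model of the coin.
    """
    rng = random.Random(seed)
    heads = sum(rng.random() < 0.5 for _ in range(n))
    return heads, n - heads


for n in (10, 1_000, 100_000):
    heads, tails = flip_coins(n, seed=42)
    # The share of heads drifts toward 0.5, but the counts are rarely exactly equal.
    print(n, heads / n, abs(heads - tails))
```

As `n` grows, the proportion of heads typically approaches one half while the absolute gap between heads and tails does not vanish, which is why equal counts remain an expectation rather than a prediction.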

Thus, chaotic systems can be considered to have a highly complex order, sometimes too complex to understand without the aid of analytical tools or advanced mathematics. Most approaches to studying complex and chaotic systems involve interpreting graphical plots of a fractal nature and bifurcation diagrams. These models illustrate very complex recurrences of outputs directly related to inputs. Hence, complex order can arise from chaotic systems.
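A bifurcation diagram plots the long-run values of a system against one of its parameters. The sketch below computes the raw material for such a diagram for the logistic map (an assumed example, not one named in the text) by counting how many distinct long-run values appear for several values of `r`; the function name and sample values are illustrative.

```python
def attractor_size(r: float, x0: float = 0.2, transient: int = 2000, keep: int = 64) -> int:
    """Count the distinct long-run values of the logistic map x -> r*x*(1-x).

    A count of 1 is a steady state, small counts such as 2, 4 or 8 are periodic
    oscillations, and a count close to `keep` means the orbit did not repeat
    within the sample, as expected for chaos.
    """
    x = x0
    for _ in range(transient):
        x = r * x * (1 - x)
    seen = set()
    for _ in range(keep):
        x = r * x * (1 - x)
        seen.add(round(x, 6))
    return len(seen)


# Each r value corresponds to one vertical slice of a bifurcation diagram.
for r in (2.8, 3.2, 3.5, 3.55, 3.9):
    print(r, attractor_size(r))
```

Plotting the collected values (rather than just counting them) for many closely spaced `r` values produces the familiar period-doubling cascade that ends in chaos.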

References

- Serendip. On Beyond Newton: From Simple Rules to Stability, Fluctuation, and Chaos. http://serendip.brynmawr.edu/complexity/newton

- Principles of Systems and Cybernetics: An Evolutionary Perspective.

- John Sterman (2000). Business Dynamics: Systems Thinking and Modeling for a Complex World.

Holism

Holistic View

A holistic view of a system encompasses the complete view of that system. Holism emphasizes that the state of a system must be assessed in its entirety and cannot be assessed through its independent member parts. Dividing a system into its separate parts is considered destructive to that system, and single parts within a system should not be prioritized. Holistic views therefore regard atomism as ultimately a threat to the health of a system. Holism addresses problems within a system by emphasizing the system as a whole and recognizing that member parts ultimately aggregate to create that whole.

Currently

Today, many industries are integrating holistic practices into their management and operations. Western medical practitioners in the U.S., as well as corporate leaders, are becoming aware of the dangers of treating humans or complex corporations as entities made up of isolated, unrelated parts. Understanding the larger system and how individual parts contribute to it is key. Leaders must grasp the paradigm that the “whole is more than the sum of its parts”: the whole of a complex system can take on a behavior all its own, and understanding this independent behavior is key in holism. Powerful, effective decisions can be made once a holistic view of a system is acquired. Poor, inappropriate decisions can result when a limited view is formed by assessing separate parts without regard to how those parts contribute to the whole. Management practices emphasizing holism seek to eliminate the risk of such poor decisions.

The Goal

Increasing holistic awareness allows leaders to create and implement successful management policies, a goal highlighted by the International Organization for Standardization (ISO) for quality management. The ISO 9001 standard describes improved quality management in which acquiring complete knowledge of an organization, in all its complexity, is essential to implementing effective management practices. Operations and structures within an organization must be placed in the full system context, and these definitions must be pooled together so that a complete, sophisticated perspective of the organization's behavior is acquired. Implementing holistic practices within an organization improves the chances of bringing that system to a stable state. Under holistic management practices, decisions are never made about one isolated function within the system. This reduces unknown outcomes caused by underestimating the behavior of the system as a whole. Without holistic principles, an entire system’s function can be threatened, and ultimately its individual constituent parts can be put in peril.

Places that use Holism

Holistic practices are critical within the IT industry as data networks and complete intelligent systems are designed and built. Treating the independent components of a communications network or intelligent system as stand-alone entities ultimately harms the overall quality of that system. In networking, care is taken to view a LAN, MANET or WAN as an entire entity: small iterative changes to parts of a network are not considered in isolation, so that their downstream and upstream impacts can be anticipated. Seeing a data network as a whole living system increases the chances of building a stable, reliable system. In building intelligent IT systems composed of interacting smaller software and hardware modules, retaining a systems view is necessary so that the complete product can be troubleshot and debugged successfully. Myopic views focusing only on a single subsystem, where the performance of the entire system is not considered, can create an unpredictable and unreliable final product. If analysts building or maintaining IT systems retain a holistic view, those systems are more likely to succeed. As an analyst's understanding of the entire system increases, he or she is more capable of initiating complex changes while protecting the global health of the system.
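Anticipating the downstream impact of a change can be made concrete by treating the network as a dependency graph and walking it from the changed component. The sketch below uses a breadth-first search; the component names and the dependency map are hypothetical, and a real network would need its own inventory of dependencies.

```python
from collections import deque


def affected_components(depends_on: dict[str, list[str]], changed: str) -> set[str]:
    """Return every component that directly or indirectly depends on `changed`."""
    # Invert the "A depends on B" edges so we can walk downstream from B.
    dependents: dict[str, set[str]] = {}
    for component, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(component)
    seen: set[str] = set()
    queue = deque([changed])
    while queue:  # breadth-first search over the dependents graph
        node = queue.popleft()
        for nxt in dependents.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


network = {  # hypothetical map of "component: components it depends on"
    "web_app": ["load_balancer", "database"],
    "load_balancer": ["core_switch"],
    "database": ["core_switch", "storage"],
    "monitoring": ["web_app"],
}
print(sorted(affected_components(network, "storage")))
# ['database', 'monitoring', 'web_app']
```

A change to `storage` looks local, yet it reaches the monitoring service two hops away. Walking `depends_on` directly instead of the inverted map would give the upstream components in the same way.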

A Final Example

A final illustration of how essential holism is comes from our world's ongoing adaptation to a new global economy. It is imperative that we understand how our global world operates and how we all participate within it. The global economy has been made possible by readily available international travel and instant, personal communication. As international parties intermix, the world will grow into a very sophisticated and complex system. Retaining a concise and objective perspective of our world and understanding its complexity is critical. Powerful internal influences will begin to affect our global system’s behavior. Every individual is gaining the power to make a fuller impact on his or her environment and to voice an opinion on the state of the world. As more individual voices and wills are exercised, the stability and balance of our global system will be harder to maintain. We must persistently view our world from a holistic perspective to gain in-depth awareness of its behavior as an independent entity. Gaining this holistic view is essential to understanding our future evolution.

Goal Seeking (Intrinsic & Extrinsic)

Goal Seeking

Control systems, or cybernetic systems, are characterized by the fact that they have goals: states of affairs that they try to achieve and maintain in spite of obstacles or perturbations.

Control systems are combinations of components (electrical, mechanical, thermal or hydraulic) that act together to keep actual system performance close to a specified set of performance specifications. Open-loop control systems (e.g. alarm clocks) are those in which the output has no effect on the input. Closed-loop control systems (e.g. automotive cruise-control systems) are those in which the output affects the input in such a way as to maintain the specified output value. A closed-loop system must include some way to measure its output and sense changes so that corrective action can be taken. How quickly a simple closed-loop control system moves to correct its output is described by its natural frequency and damping ratio. A system with a small damping ratio overshoots the desired output and oscillates before settling down. As the damping ratio grows, the overshoot shrinks; systems with a damping ratio of one or more do not overshoot the desired output at all, but they respond more slowly.
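The effect of the damping ratio can be checked numerically. The sketch below assumes the closed loop behaves like the standard second-order model y'' + 2ζω_n·y' + ω_n²·y = ω_n²·u with a unit step input u = 1, a textbook idealization rather than a model of any particular device, and integrates it with a simple semi-implicit Euler scheme. The function names and step sizes are illustrative choices.

```python
import math


def step_response(zeta: float, omega_n: float = 1.0, dt: float = 0.001,
                  t_end: float = 30.0) -> list[tuple[float, float]]:
    """Unit-step response of y'' + 2*zeta*omega_n*y' + omega_n**2 * y = omega_n**2.

    The output y is fed back and compared with the set point 1.0 at every step,
    which is what makes the loop "closed". Returns (time, output) samples.
    """
    y, v = 0.0, 0.0  # output and its rate of change, starting at rest
    samples = []
    for k in range(1, int(round(t_end / dt)) + 1):
        error = 1.0 - y  # measured deviation from the desired output
        accel = omega_n**2 * error - 2.0 * zeta * omega_n * v
        v += accel * dt  # semi-implicit Euler: update the rate first...
        y += v * dt      # ...then the output, using the new rate
        samples.append((k * dt, y))
    return samples


def overshoot(samples: list[tuple[float, float]], target: float = 1.0) -> float:
    """How far the peak output exceeds the target, as a fraction of the target."""
    peak = max(y for _, y in samples)
    return max(0.0, peak - target) / target


def time_to_reach(samples: list[tuple[float, float]], level: float = 0.9) -> float:
    """First time the output reaches `level` (math.inf if it never does)."""
    return next((t for t, y in samples if y >= level), math.inf)


for zeta in (0.2, 0.7, 1.0, 2.0):
    s = step_response(zeta)
    print(f"zeta={zeta}: overshoot={overshoot(s):.1%}, time to 90%={time_to_reach(s):.2f}")
```

For ζ < 1 the simulated overshoot should come out close to the textbook value exp(−πζ/√(1−ζ²)), about 53% for ζ = 0.2, while ζ = 2 shows no overshoot but takes several times longer to reach 90% of the target.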

Mechanistic World View

In the mechanistic world view, there is no place for goal-directedness or purpose. All mechanical processes are determined by their cause, which lies in the past. A goal, on the other hand, is something that determines a process, yet lies in the future.

The thesis that natural processes are determined by their future purpose is called teleology. It is closely associated with vitalism, the belief that life is animated by a vital force outside the material realm. Our mind is not a goalless mechanism; it is constantly planning ahead, solving problems, trying to achieve goals. How can we understand such goal-directedness without recourse to the doctrine of teleology?

Cybernetics

Probably the most important innovation of cybernetics is its explanation of goal-directedness. An autonomous system, such as a person or an organism, can be characterized by the fact that it pursues its own goals, resisting obstructions from the environment that would make it deviate from its preferred state of affairs. Thus, goal-directedness implies regulation of, or control over, perturbations.

A good example is a room in which the temperature is controlled by a thermostat. The setting of the thermostat determines the desired temperature, or goal state. Perturbations may be caused by changes in the outside temperature, opening of windows or doors, drafts, etc. The task of the thermostat is to minimize the effects of such influences, and thus to keep the temperature as close as possible to the specified value.
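The thermostat loop can be sketched as a small simulation. Everything numeric here is a hypothetical assumption: a room that loses 10% of its inside/outside temperature gap per hour, a heater that adds 3 °C per hour, a 20 °C setting with a ±0.5 °C switching band, and a cold snap at hour 24 that plays the role of the perturbation.

```python
def simulate_room(hours: float = 48.0, dt: float = 0.1, setpoint: float = 20.0,
                  band: float = 0.5, thermostat: bool = True) -> list[tuple[float, float]]:
    """Simulate room temperature (°C) under a hypothetical on/off thermostat.

    The outside temperature drops from 5 °C to -5 °C at hour 24, acting as a
    perturbation the thermostat must counter. Returns (hour, temperature) pairs.
    """
    loss = 0.1  # hypothetical: fraction of the inside/outside gap lost per hour
    heat = 3.0  # hypothetical: warming rate with the heater on, °C per hour
    temp, heater_on = 10.0, False
    log = []
    for i in range(int(round(hours / dt))):
        t = i * dt
        outside = 5.0 if t < 24.0 else -5.0
        if thermostat:
            # Negative feedback with a small dead band to avoid rapid switching.
            if temp <= setpoint - band:
                heater_on = True
            elif temp >= setpoint + band:
                heater_on = False
        rate = loss * (outside - temp) + (heat if heater_on else 0.0)
        temp += rate * dt
        log.append((t + dt, temp))
    return log


log = simulate_room()
steady = [temp for t, temp in log if t >= 8.0]  # skip the initial warm-up
print(min(steady), max(steady))  # stays near 20 °C despite the cold snap
print(simulate_room(thermostat=False)[-1][1])  # without feedback the room drifts below 0 °C
```

With the feedback loop switched off, the same perturbation pulls the room toward the outside temperature; with it on, the deviation from the goal state is suppressed to within a fraction of a degree.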

Survival

On the most fundamental level, the goal of an autopoietic or autonomous system is survival, that is, maintenance of its essential organization. This goal has been built into all living organisms by natural selection: those that were not focused on survival have simply been eliminated. In addition to this main goal, the system will have various subsidiary goals, such as keeping warm or finding food, that indirectly contribute to its survival. Artificial systems, such as automatic pilots and thermostats, are not autonomous: their primary goals are built into them by their designers. They are allopoietic, which means that their function is to produce something other ("allo") than themselves.

Goals as States

Goal-directedness can be defined most simply as suppression of deviations from an invariant goal state. In that respect, a goal is similar to a stable equilibrium, to which the system returns after any disturbance. Both goal-directedness and stability are characterized by equifinality: different initial states lead to the same final state, implying the destruction of variety. What distinguishes them is that a stable system automatically returns to its equilibrium state without performing any work or effort, whereas a goal-directed system must actively work to achieve and maintain its goal, which would not otherwise be an equilibrium. Control may appear essentially conservative, resisting all departures from a preferred state. But the net effect can be very progressive or dynamic, depending on the complexity of the goal. For example, if the goal is defined as the rate of increase of some quantity, or as the distance relative to a moving target, then suppressing deviation from the goal implies constant change. A simple example is the heat-seeking head of a Stinger missile attempting to reach a fast-moving enemy jet, cruise missile or helicopter.
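Both equifinality and the "constant change" of a moving goal show up in a very simple proportional regulator, assumed here for illustration: at each step the system removes a fixed fraction of its current deviation from the goal. The function names, the gain and the goals are hypothetical.

```python
from typing import Callable


def regulate(initial: float, goal_at: Callable[[int], float],
             gain: float = 0.5, steps: int = 60) -> list[float]:
    """Each step, act to remove a fraction `gain` of the current deviation from the goal."""
    x = initial
    path = [x]
    for t in range(steps):
        x += gain * (goal_at(t) - x)
        path.append(x)
    return path


def fixed_goal(t: int) -> float:
    """An invariant goal state."""
    return 10.0


def moving_goal(t: int) -> float:
    """A goal that moves 2 units per step (a hypothetical moving target)."""
    return 2.0 * t


# Equifinality: very different starting states end in the same goal state.
print([round(regulate(x0, fixed_goal)[-1], 6) for x0 in (-20.0, 0.0, 50.0)])  # [10.0, 10.0, 10.0]

# A moving goal: suppressing the deviation now requires constant change.
chase = regulate(0.0, moving_goal)
print(chase[-1] - chase[-2])  # the state keeps moving, about 2 units per step
```

With this purely proportional rule the state follows the moving goal at its speed but trails it by a constant lag (4 units with these numbers); more elaborate controllers are needed to remove that lag.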

A system's "goal" can also be a range of acceptable states, similar to an attractor. The dimensions defining these states are called the essential variables, and they must be kept within a limited range compatible with the survival of the system. For example, a person's body temperature must be kept within a range of approximately 35 to 40 °C. Even more generally, the goal can be seen as a gradient, or "fitness" function, defined on the state space, which specifies the degree of "preference" or "value" of one state relative to another. In the latter case, the problem of control becomes one of ongoing optimization or maximization of fitness.
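Both views of a goal can be sketched in a few lines: a range check on an essential variable, and gradient ascent on a fitness function. The fitness function below, peaking at 37 °C, is a hypothetical stand-in for illustration only; the text specifies only the 35 to 40 °C range, and the helper names and step sizes are assumptions.

```python
def in_essential_range(value: float, low: float = 35.0, high: float = 40.0) -> bool:
    """Check an essential variable (here body temperature in °C) against its viable range."""
    return low <= value <= high


def fitness(temp_c: float) -> float:
    """Hypothetical fitness: highest at 37 °C, falling off on either side."""
    return -(temp_c - 37.0) ** 2


def climb(f, state: float, step: float = 0.1, iterations: int = 200, h: float = 1e-5) -> float:
    """Gradient ascent: repeatedly move the state uphill on the fitness function f."""
    for _ in range(iterations):
        slope = (f(state + h) - f(state - h)) / (2 * h)  # central-difference gradient
        state += step * slope
    return state


start = 39.5
print(in_essential_range(start), in_essential_range(41.0))  # True False
best = climb(fitness, start)
print(round(best, 4))  # 37.0, the peak of the hypothetical fitness function
```

The range check only says whether the state is acceptable, while the fitness gradient also says which direction is better, which is what turns control into ongoing optimization.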

Reference

- Heylighen, F. & Joslyn, C. (2001). "Cybernetics and Second Order Cybernetics", in: R.A. Meyers (ed.), Encyclopedia of Physical Science & Technology, Vol. 4 (3rd ed.), Academic Press, New York, pp. 155-170.

- http://www.answers.com/main/ntquery?method=4&dsid=2040&dekey=controlsy&gwp=8&curtab=2040_1

- http://pespmc1.vub.ac.be/GOAL.html

Wiki-Resources


Goal Structure (Teleological Behavior)

Four Teleological Orders

According to Quentin Smith (1981), the four teleological orders are the relations of one aim to another as (1) a “means to” it, (2) a “part of” it, (3) a “concretion” of it, and (4) “subsumed” under it. These teleological orders interconnect three different types of aims: (1) ends, (2) goals and (3) purposes. Smith uses the term “ends” to refer to those aims that are directly pursued in voluntary actions. Ends are terms of one or both of the teleological relationships designated here as the “means to” relationship and the “part of” relationship. The ends that are terms of these relationships are physical ends, mental ends or interpersonal ends:

1. Physical ends are either to alter the physical structure of my surroundings (such as to saw a branch in half), or to alter and move my body for its own sake (as is the case when I engage in exercise).

2. Mental ends are not to change the physical environment or my body, but to “bring to my mind” ideas, images or memories. Examples of mental ends are “to solve a mathematical problem,” “to recall a person’s name,” “to read a poem,” and “to conceptualize in an accurate manner a vague insight.”

3. Interpersonal ends are to influence or affect the consciousness of another person.

Goals are formally defined as aims that are constituted by two or more ends. The smallest-scale goals are constituted by the fewest ends, and the largest-scale goals by thousands of ends. Goals differ in essence from ends in that they are mediate aims of voluntary actions, whereas ends are immediate aims of these actions. An end is immediately and directly attained by an action: it is the volitional activity and striving that either is the end itself or brings about the end as its direct result. A voluntary action, however, cannot immediately attain a goal.
A goal must be attained by a series of voluntary actions, so that it is attained only through the attainment of a series of complete actional ends. In this sense the attainment of the goal is mediate: it is mediated by the attainment of a series of actional ends. Explaining this definition of goals requires distinguishing goals from complete actional ends, which differ from goals in two respects. Complete actional ends (1) are continually posited from the moment they are willed by a volition until the moment they have been completely attained by the subsequent voluntary action. This entails that these ends (2) are pursued in a continuous and uninterrupted action and striving, such that I act and strive without intermission from the moment I will the end to be attained until the moment I attain it.

Goals

Goals, on the other hand, (1) are not continually posited from the moment they are willed until the moment they are attained. Rather, they are posited as my aims at different times. The pursuit of a goal is interspersed with modes of behavior that do not aim at attaining the goal; these may be actions that aim to attain other goals, or non-actional modes of behavior, such as passions, moods, periods of day-dreaming, sleeping, etc. (2) Corresponding to this discontinuous positing of the goal, the voluntary activities and strivings that aim to realize the goal are engaged in at different times. This definition indicates that goals differ from ends in the manner in which they are posited and pursued. They do not necessarily differ in their contents (although they usually do). This occasional similarity of content is apparent in the smallest-scale goals and the complete actional ends. At one time I may pursue and attain one aim in a single voluntary action, and at another time I may pursue and attain a similar aim in two or three different actions. In the first case, since the aim is attained in a single action, it is nothing more than the complete end of this action. In the second case, it is the mediate aim of several actions: it is the goal to which the several actional ends are ordered.

This distinction between ends and goals reveals a fundamental difference in kind among the various aims of actions, a difference that is usually overlooked. For example, Sartre (L’Être et le Néant; Paris: Librairie Gallimard, 1943) does not distinguish between the different kinds of aims we empirically desire: for him, “drinking a glass of water” and “conquering Gaul” are undifferentiated instances of such aims. However, we believe there is a fundamental difference in kind between these two aims.
The aim of “drinking a glass of water” is achieved in a single and continuous action, whereas the aim of “conquering Gaul” is achieved by a vast number of such actions. The former aim is an end, the immediate aim of one action, whereas the latter is a goal, the mediate aim of a number of actions. The relation of goals to purposes differs in kind from the relation of ends to goals. Goals are neither “means to” nor “parts of” purposes, but “concretions” of purposes: goals “make concrete” the “abstractions” that are purposes. Specifically, goals are the concrete determinations of undetermined instances of universals. It is the nature of purposes to be undetermined instances of universals, and it is the nature of goals to be the particular determinations of these instances. Whereas ends and goals constitute the unique individuality of our actions, purposes are the unconditioned meanings of our actions. They are the ultimate “reason” or “what for” of our actions. If it is asked why, or “what for”, a person is engaging in an action, the final reason he can give is that he is doing it in order to realize a purpose. While goals are the meaning of ends, in that ends are pursued because they realize goals, purposes are the meaning of goals, for purposes are the “reason why” we pursue goals.

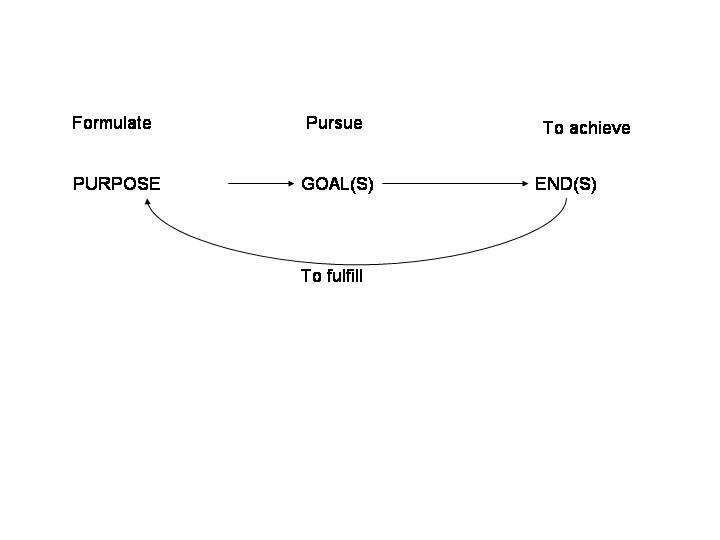

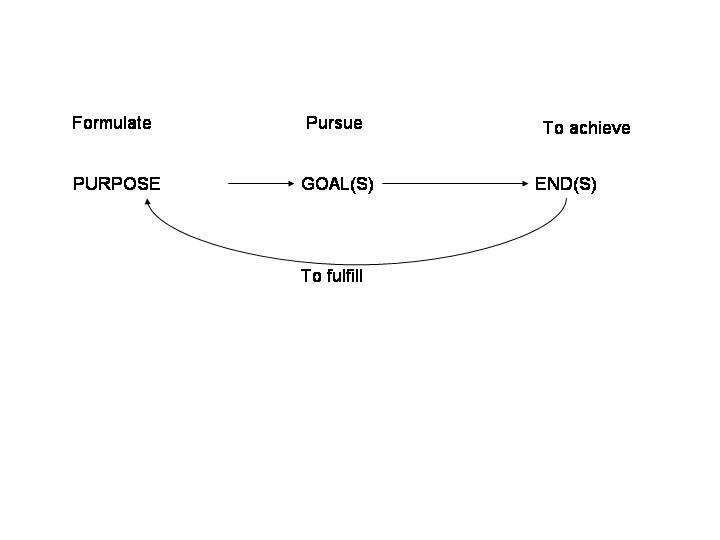

If we consider this order and the distinctions between these terms and their relationships, the order of things may be presented in a way similar to the graph above. Once the purpose of an action is formulated in a person's mind, he or she pursues the goal(s) that must be accomplished to achieve definite (and desired) end(s), with the ultimate result of fulfilling this purpose. Ends, goals and purposes, as they are interrelated by the four teleological orders, constitute a teleological structure of voluntary actions. According to this interpretation of goal structure, the goal is the mediate aim of a number of actions. Hence, it consists of the series of actions intended to achieve proximal and distal effects. In Smith’s example, adapted from Sartre, the aim of “conquering Gaul” requires a series of actions. To achieve this military aim, Julius Caesar might have devised this series of actions:

- Divide the territory into areas of responsibility between legions, in order to prevent communication between Gallic tribes.

- Ascertain key terrain features that need to be controlled by legions, to establish camps and uninterrupted lines of communication and supplies for the troops, etc.

These hypothetical actions of Caesar would represent the series of activities designed to achieve proximal effects. The distal effect of this series of actions would still be, of course, “conquering Gaul.” The structure above has five levels, each level being an interrelationship between some of these aims. The relationship between ends and other ends constitutes the level that lies at the basis of this structure: the “part of” and “means to” order between ends is the irreducible foundation of voluntary and aim-directed action. Founded upon this basic level of the interconnection of ends, there is a second level consisting of the “part of” and “means to” relations between ends and goals.
And upon this level there is based a third level: the “part of” and “means to” relations that connect goals to other goals. These relations serve as the foundation for a fourth level of this teleological structure: the “concretion” order that links goals to purposes. Upon this level there is built the uppermost level, which is the “subsumption” order that connects purposes to other purposes. The final purpose in this “subsumption” order is the empty purpose of “attaining some purpose.” This is the most general aim of voluntary action, and with it the teleological relationships terminate. Any phenomenon that is to become an aim of human action can only do so through being integrated in an immanent fashion within this interconnected teleological structure.

References

- Smith, Quentin (1981). “Four Teleological Orders of Human Action.” Philosophical Topics, Vol. 12, No. 3, Winter 1981, pp. 312-335.

Inputs-Outputs

Inputs and Outputs

Input is something put into a system or expended in its operation to achieve an output or result. In a computer system, input is the information entered into the system; examples include typed text and mouse clicks. Output is the information produced by a system or process from a specific input. In a computer, output includes the visual, auditory, or tactile perceptions the computer provides after processing the information it was given; examples include text, images, sound, or video presented on a monitor or through speakers, as well as text or Braille from printers or embossers. Within the context of systems theory, inputs are what is put into a system, and outputs are the results obtained after running an entire process or just a small part of one. Because outputs can be the results of an individual unit of a larger process, the outputs of one part of a process can be the inputs to another part. The inputs and outputs of a system have been classified by (i) their dynamic nature, (ii) their temporal nature, distinguishing (a) planned and (b) unplanned inputs, (iii) periodicity, (iv) controllability, and (v) duration of application (Hariharan et al., 2021).
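The point that the outputs of one part of a process can be the inputs to another can be sketched in Python. This is a minimal illustration, assuming each part of a process is a function from one numeric input to one numeric output; the bakery stages and their conversion factors are hypothetical.

```python
from typing import Callable, List

# A stage is one part of a process: it turns an input quantity into an output quantity.
Stage = Callable[[float], float]


def run_process(stages: List[Stage], initial_input: float) -> List[float]:
    """Run the stages in order, feeding each stage's output to the next stage as input.

    Returns the output of every stage, so intermediate results stay visible.
    """
    outputs: List[float] = []
    value = initial_input
    for stage in stages:
        value = stage(value)   # this stage's output ...
        outputs.append(value)  # ... becomes the next stage's input
    return outputs


# Hypothetical bakery process; the conversion factors are made up for illustration.
def mix(flour_kg: float) -> float:
    return flour_kg * 1.5      # kg of dough per kg of flour


def bake(dough_kg: float) -> float:
    return dough_kg / 0.5      # loaves, at 0.5 kg of dough per loaf


def pack(loaves: float) -> float:
    return loaves / 10         # boxes, at 10 loaves per box


print(run_process([mix, bake, pack], 10.0))  # [15.0, 30.0, 3.0]
```

Returning every intermediate output, rather than only the last one, keeps each sub-process's result available for inspection, which matches the idea that outputs can be taken from a small part of a process as well as from the whole.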

Input-Output Analysis

Input-output analysis is “a technique used in economics for tracing resources and products within an economy. The system of producers and consumers is divided into different branches, which are defined in terms of the resources they require as inputs and what they produce as outputs. The quantities of input and output for a given time period, usually expressed in monetary terms, are entered into an input-output matrix within which one can analyze what happens within and across various sectors of an economy where growth and decline takes place and what effects various subsidies may have” (Krippendorf).
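The quotation does not prescribe a particular calculation, but one standard use of an input-output matrix is the Leontief model, sketched below under stated assumptions: the two sectors and all transaction values are hypothetical, each sector's input needs are assumed to scale in fixed proportion to its output (the "technical coefficients" A), and the total output x needed to meet a final demand d is found by solving (I - A)x = d.

```python
from typing import List

Matrix = List[List[float]]


def total_output(transactions: Matrix, final_demand: List[float]) -> List[float]:
    """Each sector's total output: sales to other sectors (row sum) plus final demand."""
    return [sum(row) + d for row, d in zip(transactions, final_demand)]


def technical_coefficients(transactions: Matrix, output: List[float]) -> Matrix:
    """a[i][j]: input from sector i needed per unit of sector j's output."""
    n = len(output)
    return [[transactions[i][j] / output[j] for j in range(n)] for i in range(n)]


def required_output(a: Matrix, demand: List[float]) -> List[float]:
    """Solve (I - A) x = demand by Gaussian elimination with partial pivoting."""
    n = len(demand)
    m = [[(1.0 if i == j else 0.0) - a[i][j] for j in range(n)] + [demand[i]]
         for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


# Hypothetical two-sector economy (agriculture, manufacturing), in monetary units.
# transactions[i][j] = value of sector i's output bought by sector j as an input.
transactions = [[20.0, 30.0],
                [10.0, 40.0]]
final_demand = [50.0, 50.0]

output = total_output(transactions, final_demand)  # [100.0, 100.0]
a = technical_coefficients(transactions, output)   # [[0.2, 0.3], [0.1, 0.4]]
print(required_output(a, [60.0, 50.0]))            # about [113.33, 102.22]
```

With these made-up numbers, raising final demand for agriculture by 10 raises the required agricultural output by about 13.3 and manufacturing output by about 2.2, an example of the cross-sector effects the quotation mentions.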

Areas of Consideration

Systems theory is the “transdisciplinary study of the abstract organization of phenomena, independent of their substance, type, or spatial or temporal scale of existence” (Universiteit). It can be applied to general systems that exist in nature or, in a business context, to organizational or economic systems. In studying systems theory, there are a few common, major aspects to consider.

One must look at the individual objects that compose a system. The objects consist of the parts, elements, or variables that make up the system. The objects that make up a system can be physical objects that actually exist in the world, or they can be abstract objects or ideas that cannot be found physically in the world.

One must also consider the attributes of a system. The attributes consist of the qualities and properties of the aforementioned objects of the system. The attributes may also describe the entire system itself.

A third consideration would be the internal relationships among the objects of a system.

The fourth consideration would be the environment in which the system exists.

All of these aspects of a system play an important role. Using these four characteristics, a system can then be defined as “a set of things that affect one another within an environment and form a larger pattern that is different from any of the parts.” Furthermore, “The fundamental systems-interactive paradigm of organizational analysis features the continual stages of input, throughput (processing), and output, which demonstrate the concept of openness/closedness” (Universiteit).
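The four aspects can be recorded in a simple data structure. The sketch below is one possible representation, not a standard one: the `System` class, its field names, and the shop example are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class System:
    """Hypothetical record of the four aspects: objects, attributes, relationships, environment."""
    objects: dict = field(default_factory=dict)      # object name -> that object's attributes
    attributes: dict = field(default_factory=dict)   # attributes of the system as a whole
    relationships: set = field(default_factory=set)  # (source, target): source affects target
    environment: set = field(default_factory=set)    # things outside the system's boundary

    def add_object(self, name: str, **props) -> None:
        self.objects[name] = props

    def relate(self, source: str, target: str) -> None:
        # Internal relationships may only link objects that belong to the system.
        if source not in self.objects or target not in self.objects:
            raise KeyError(f"{source!r} and {target!r} must both be objects of the system")
        self.relationships.add((source, target))

    def influences_on(self, name: str) -> set:
        """Objects inside the system that affect `name`."""
        return {src for src, dst in self.relationships if dst == name}


shop = System(attributes={"purpose": "sell goods"}, environment={"customers", "suppliers"})
shop.add_object("staff", headcount=3)
shop.add_object("inventory", items=120)
shop.add_object("cash_register", balance=500)
shop.relate("staff", "inventory")
shop.relate("staff", "cash_register")
shop.relate("inventory", "cash_register")
print(sorted(shop.influences_on("cash_register")))  # ['inventory', 'staff']
```

Keeping the environment as a separate field means that an attempt to record an internal relationship with, say, `customers` raises an error, which mirrors the distinction between the system's internal relationships and the environment it exists in.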

Systems Defined

A system can be defined by working backward from the desired outputs to determine which inputs are necessary. The following questions can be used for this method:

- What essential outputs must the system produce in order to satisfy the system users’ requirements?

- What transformations are necessary to produce these outputs?

- What inputs are necessary for these transformations to produce the desired outputs?

- What types of information does the system need to retain?
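The output-driven method amounts to working backward from the outputs through the transformations until only inputs that come from outside the system remain. A minimal sketch, assuming each transformation can be listed as "output: inputs it consumes"; the order-processing names are hypothetical.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical order-processing system: each output -> inputs its transformation consumes.
TRANSFORMATIONS: Dict[str, List[str]] = {
    "invoice": ["order", "price_list"],
    "shipping_label": ["order", "customer_address"],
    "order": ["customer_request", "inventory_status"],
}


def required_inputs(outputs: Iterable[str], transformations: Dict[str, List[str]]) -> Set[str]:
    """Work backward from the desired outputs to the inputs that must enter from outside."""
    needed: Set[str] = set()
    seen: Set[str] = set()
    stack = list(outputs)
    while stack:
        item = stack.pop()
        if item in seen:
            continue
        seen.add(item)
        if item in transformations:
            stack.extend(transformations[item])  # produced inside: trace what it consumes
        else:
            needed.add(item)                     # produced by nothing: an external input
    return needed


print(sorted(required_inputs(["invoice", "shipping_label"], TRANSFORMATIONS)))
# ['customer_address', 'customer_request', 'inventory_status', 'price_list']
```

Note that `order` is both an output of one transformation and an input to others, so it is not reported as an external input.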

Another way of defining a system is to work forward, using a stimulus-response method of definition. The following questions can be asked in conjunction with this method in order to define the system:

- What are the stimuli, and what are the responses to each stimulus?

- For each stimulus-response pair, what transformations are necessary?

- What are the essential data that must be maintained?
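For the stimulus-response method, a system can be described as a table pairing each stimulus with the transformation that produces its response, plus the data those transformations must maintain. The library lending system below is a hypothetical illustration of that structure, not a prescribed design.

```python
class LendingSystem:
    """Hypothetical library lending system defined by stimulus-response pairs."""

    def __init__(self):
        self.loans = {}  # essential data to maintain: book title -> borrower
        # Each stimulus is paired with the transformation that produces its response.
        self.transformations = {"borrow": self._borrow, "return": self._return}

    def stimulus(self, kind: str, book: str, borrower: str) -> str:
        return self.transformations[kind](book, borrower)

    def _borrow(self, book: str, borrower: str) -> str:
        if book in self.loans:
            return "refused: already on loan"
        self.loans[book] = borrower
        return "lent"

    def _return(self, book: str, borrower: str) -> str:
        if self.loans.get(book) != borrower:
            return "refused: no matching loan"
        del self.loans[book]
        return "returned"


library = LendingSystem()
print(library.stimulus("borrow", "Dune", "ana"))  # lent
print(library.stimulus("borrow", "Dune", "ben"))  # refused: already on loan
print(library.stimulus("return", "Dune", "ana"))  # returned
```

The three questions map onto the three parts of the class: the keys of `transformations` are the stimuli, the methods are the transformations producing each response, and `loans` is the essential data that must be maintained between stimuli.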

These methods can be used to define not just the general system in question, but also the subsystems which compose the larger system as a whole (Sauter).

References

Hariharan, T. S., Ganesh, L. S., Venkatraman, V., Sharma, P., & Potdar, V. (2021). Morphological Analysis of general system–environment complexes: Representation and application. Systems Research and Behavioral Science, 1-23.

Krippendorf (Accessed 2005): "Input-Output Analysis", in: F. Heylighen, C. Joslyn and V. Turchin (editors): Principia Cybernetica Web (Principia Cybernetica, Brussels), URL: http://pespmc1.vub.ac.be/ASC/INPUT-_ANALY.html.

Universiteit Twente de ondernemende universiteit (Accessed 2005): “System Theory”. URL: http://www.tcw.utwente.nl/theorieenoverzicht/Theory%20clusters/Communication%20Processes/System_Theory.doc/.

Sauter, Vicki L. (Accessed 2005): “Systems Theory”. Last Modified: 2000. URL: http://www.umsl.edu/~sauter/analysis/intro/system.htm.

Transformation Processes

Transformation Processes

Transformation processes can be described as changes in behavior intended to bring about a desired outcome. Individuals can go through a transformation process that involves their intellect as well as their overall persona. Organizations can also go through transformation processes. Usually, a change in an organization's goals or mission triggers a transformation process, whether a major downsizing or the implementation of new policies and procedures that directly affect the collective behavior of the organization as a whole. Research on transformation processes to date has explored different models for making these processes as efficient as possible. For instance, Creative Dimensions in Management (CDM), a consulting firm, presented corporate transformation processes based on one-on-one mentoring to a succession of UK banking organizations in the late 1980s and 1990s. Models drawn from Comprehensive Family Therapy and Progressive Abreactive Regression (PAR) predict that when people attempt to significantly change their performance, they are likely to follow a zig-zag path to growth, alternately progressing and regressing, as in the following diagram:

In concluding its study, CDM suggested that “The key to managing these regressions lies in increased self-awareness. As the growth goal increases, awareness and self-consciousness must deepen in order to manage the regressive trends that occur. These trends include moving beyond one's illusions about oneself and one's potential; moving beyond the defenses that protect the self from the anxieties of growth; examining and resolving the ambivalence that prevents a total commitment to achieving one's vision; embracing fears and terrors associated with failure and success including shame and abandonment; and, ultimately, discovering one's will - an energy source that can fuel the activation and achievement of any vision.”

Continuous Transformations

Another frequently researched realm is socio-ecological systems, which are described in terms of the society-environment relations relevant to sustainable development within a particular problem area. Problem areas may be the supply of human needs, economic sectors, geographic regions, etc. Energy, substance flows, technical structures, institutions, and ideas influence the transformation processes involved in these socio-ecological systems. Research has also found that this type of system continuously undergoes transformation because of its underlying nature. The following chart depicts the study of this system and how it relates to transformation processes:

[Chart: socio-ecological systems and their transformation processes]

Further research in this realm suggests that “The challenge of sustainable transformation is to understand the complex interactions which underlie the dynamics of structural change, to assess and evaluate the impacts of specific paths of transformation, and to shape transformation processes in order to bring about desired outcomes.”

Sustainable Transformation

Sustainable transformation has three specific features:

- 1. Uncertainty about system behavior, because of the unpredictability of the complex interactions underlying transformation processes.

- 2. Divergent social goals and differing evaluations of the impact of transformation, with social values being endogenous to the transformation process.

- 3. Distribution of control capacities among a broad range of social actors with specific interests and resources to influence transformation paths.


Entropy

Energy – From Physics to Organizations

The First Law of Thermodynamics states that energy cannot be created or destroyed. All energy present in the universe (the largest system we know) simply changes form throughout the cycles and phases of the system. When we observe a component of the system losing energy, we are observing a change in the energy's location. The energy that was once localized in a specific entity, group, business, or person will eventually disperse into the surrounding system.

Example: A hammer is swung with kinetic energy to drive a nail into a piece of wood. When the nail is struck, the hammer's energy is transferred to the nail. The nail then uses its kinetic energy to move into the wood. The wood pushes back against the nail through friction and deformation until the nail no longer continues to move. If the nail had been driven into a perfectly elastic substance, it would simply have bounced back. But the wood fibres irreversibly dissipate the lost kinetic energy as heat. Excess energy produces heat and sound during the hammering.

In this small system, energy only changes form and location; it is never created or destroyed. If we were to expand the system, we would see that the kinetic energy in the hammer came from the kinetic energy in the hammerer's arm. This kinetic energy came from the chemical energy gained from ingesting food, and the food's chemical energy came from chemical bonds formed in the presence of solar energy. In physics, the changes in the location and form of energy are the mechanism that connects the universe as a very large system. Energy flows through other systems as well. In social organizations, energy is often described in other terms (people, money, products, information, or capital) but still flows in a similar manner.*

Example: A company's success in bill collecting is declining because the number of collection calls per week has diminished: the bill collector has been searching for a new job during working hours. The energy that the collector normally put into calling clients is now being used to conduct personal business. In this example, the energy inputs (40 hours per week from the collector) have remained the same; however, the energy is being dispersed from the company's system into the systems of the collector and other businesses.

Entropy Defined

Entropy is the tendency of a system's outputs to decline when its inputs have remained the same. Most often associated with the Second Law of Thermodynamics, entropy measures changes in the type and dispersion of energy within an observable system. In systems thinking, we measure entropy by the change in outputs when the inputs have remained the same. Thus, entropy is a direct function of time (temporal).**
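The systems-thinking measure described here (the change in outputs while inputs stay the same) can be written as a short function. This is a sketch under assumptions: outputs are compared between equal time periods, any change in inputs is treated as making the measure inapplicable, and the weekly figures for the bill collector are hypothetical.

```python
from typing import List, Tuple


def output_changes(periods: List[Tuple[float, float]]) -> List[float]:
    """Change in output from each period to the next, given (input, output) pairs.

    The measure only applies when inputs are unchanged, so varying inputs raise ValueError.
    """
    if len({inp for inp, _ in periods}) > 1:
        raise ValueError("inputs changed; the decline cannot be read as entropy")
    outputs = [out for _, out in periods]
    return [later - earlier for earlier, later in zip(outputs, outputs[1:])]


# Hypothetical bill-collector data: 40 input hours every week, declining number of calls.
weeks = [(40, 200), (40, 190), (40, 175), (40, 150)]
changes = output_changes(weeks)
print(changes)                      # [-10, -15, -25]
print(sum(changes) / len(changes))  # average change in output per week
```

Refusing to compute the measure when inputs vary reflects the definition above: a decline in outputs only counts as entropy when the inputs have remained the same.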

Closed Systems and Scope

Entropy occurs in closed systems, in which outputs decline while inputs remain unchanged. The system appears closed because, within its observed scope, the normal processes and actions continue to take place without change.

Example: An organization manufactures ocean-worthy sailing ships for transportation. The organization has reported lower revenues every year since the late 1800s. The inputs (labor, skill, tools, and capital) have remained the same throughout the years; however, sales have dropped (no pun intended) due to entropy. Without changes in inputs (creativity, modernization, or attention to the market), the system is considered closed, and entropy becomes inevitable.

In this example, the success of the transportation industry is not declining, only the success of one organization. With more convenient transportation available, the success once enjoyed by large sailing ships is being transferred from the local environment to the wider global market. Trans-oceanic travel is still needed, but because its system is closed and entropic, the sailing ship organization will no longer be successful. This organization failed to consider that it is part of a larger system and must evolve over time. The scope and complexity of a system greatly affect the length of time over which entropy typically occurs.

Example: A neighborhood child opens a lemonade stand. The lemonade stays the same, and after one month sales decline because neighborhood consumers want variety. Entropy within this system sets in quickly. An international soft-drink company that chose to manufacture only one type of beverage could similarly fall victim to entropy.

Combating Entropy

Living organisms are often affected by diseases. These diseases represent external and internal threats that degrade the organism until it can no longer sustain life. We challenge this form of organic entropy by adding inputs (white blood cells, medications, nutrients, etc.). Thus, organisms continue to exist through spontaneous changes in the inputs and structures of their processes.***

Once social organizations reach a state of static equilibrium, entropy begins to occur. The degradation of a system unit, or of the entire system, is then only a function of time. This unintended process continues until the system is thrown out of its static state by new inputs or process changes, or until the system fails. To challenge the onslaught of entropy, system thinkers must continuously expand their knowledge of the scope and complexity of their system. Once they can identify a way for a component or process to evolve, the risk of entropy is lessened. After new actions are taken or inputs are changed, the process of avoiding system decline due to entropy begins again. Once growth has stopped, the decline of the system is inevitable.

* Although people, money, products, information, or capital can be created and destroyed, the flow, organization, and displacement of these components are treated like energy in this discussion of organizational entropy.

** To avoid confusing physics and systems science: in systems science, entropy is measured by the change in outputs over time. In physics, a change in entropy is measured as the heat transferred reversibly divided by the absolute temperature at which the transfer occurs, not as a change in temperature over time.

*** Nobel laureate Ilya Prigogine first described how living systems continuously renew themselves through a process of "spontaneous structuration" that occurs when they are jarred out of a state of equilibrium.


Open/Closed System Structure

Open Systems

A system that interfaces and interacts with its environment, receiving inputs from and delivering outputs to the outside, is called an open system. Open systems have permeable boundaries that permit interaction across them; through these boundaries new information and ideas are readily absorbed, so viable new ideas can be incorporated and diffused. Because of this, open systems can adapt more quickly to changes in the external environment in which they operate. Just as the environment influences the system, the system also influences the environment. Openness ultimately sustains a system's growth and lets it serve its parent environment, so both have a better chance of survival.

Examples of open systems: Business organization, Hospital system, College or University system.

Conversely, a closed system is more prone to resist incorporating new ideas; it may come to be deemed unnecessary by its parent environment and risks atrophy. By not adopting new ideas, a closed system ceases to properly serve the environment it lives in.

Adaptability and Survival

This adaptability and survival of open systems has been exhibited in society's recent global information age. Information technologies that have absorbed new technologies and approaches have sustained long-term success. Systems that incorporate efficient data representation, storage, and transfer, good operating system design, and effective use of processor power have retained their public user base. Information technology initiatives that adapted slowly to their rapidly changing environment ultimately lost significance in the industry and disappeared.

Technologies such as the Network File System (NFS), initiated by Sun Microsystems, and the Netscape web browser, designed by Marc Andreessen, are examples of effective open IT systems. These technologies established the backbone of information technology within society. Both represent open systems with the common aim of sharing data. NFS lets computers on a network access files stored on shared servers as if the files were on a local disk. Netscape served as a portal to the volumes of data on the world's Internet. Both technologies fostered further growth within the environments in which they were introduced. The NFS standard created synergy for the growth of Sun Microsystems and has been adopted by other operating system designers. Netscape ultimately fostered growth in the amount and types of data made available through the Internet. These technologies foster an open paradigm in which data can be absorbed by an effectively unlimited number of participants.

Increase Chances

The rate of change within the technology sector is extremely rapid, and systems groups have consciously adopted an “open systems” approach to increase their chance of survival. Within the IT industry, prioritizing a system's adaptability to different operating system platforms, database designs, and communication protocols has been seen as the wisest approach. Any technologies designed solely to propagate the success of one platform or technology have been methodically eliminated. Microsoft's closed design, which embedded the company's Internet browser in core operating system modules and risked dominating the user's desktop, was halted by an antitrust investigation into monopolistic practices. Microsoft's initiative to shape technology so that the primary benefits went to its own product alone was halted by the natural tensions that this unhealthy closed system created. The system did not serve the environment in which it lived, because it threatened to limit users' access to the Internet. Outcry from users and from judicial bodies forced Microsoft to evolve its operating system into a more accommodating, dynamic, and viable system.

Isomorphic Systems

Isomorphism

Isomorphism is a formal mapping between two complex structures that pairs each part of one with exactly one part of the other while preserving the relationships between the parts, showing that the two structures have equal form. This formal mapping is a fundamental concept in mathematics; the term derives from the Greek words isos, meaning equal, and morphe, meaning shape. Identifying isomorphic structures in science is a powerful analytical tool for gaining deeper knowledge of complex objects. Isomorphic mapping aids biological and mathematical studies, where the structural mapping of complex cells and subgraphs is used to understand objects that share the same structure.

Isomorphic Mapping

Isomorphic mapping is applied in systems theory to gain advanced knowledge of the behavior of phenomena in our world. Finding isomorphism between systems opens up a wealth of knowledge that can be shared between the analyzed systems. Systems theorists further extend isomorphism to include equal behavior between two objects: isomorphic systems behave similarly when presented with the same set of inputs. As in scientific analysis, systems theorists seek out isomorphism in systems so as to create a synergetic understanding of their intrinsic behavior. Mastering how one system works and successfully mapping that system's intrinsic structure onto another releases a flow of knowledge between two critical knowledge domains. Discovering isomorphism between a well-understood system and a less well-known, newly defined one can have a powerful impact in science, medicine, or business, because the future, complex behavior of the less understood system may then be revealed.
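Behavioral isomorphism can be checked when each system is modeled as a table of how its state changes in response to an input. The sketch below assumes that representation; the traffic light and wash cycle are hypothetical systems made of different parts that, under the right pairing of states, respond identically to the same input.

```python
from typing import Dict, Set, Tuple

# A system as a transition table: (state, input) -> next state.
Transitions = Dict[Tuple[str, str], str]


def states(system: Transitions) -> Set[str]:
    return {s for s, _ in system} | set(system.values())


def behaves_isomorphically(sys_a: Transitions, sys_b: Transitions,
                           mapping: Dict[str, str]) -> bool:
    """True if `mapping` pairs the states one-to-one and, for every state and input,
    the mapped next state of A equals B's next state from the mapped state."""
    if set(mapping) != states(sys_a) or set(mapping.values()) != states(sys_b):
        return False
    if len(mapping) != len(set(mapping.values())) or len(sys_a) != len(sys_b):
        return False
    return all(sys_b.get((mapping[s], inp)) == mapping[nxt]
               for (s, inp), nxt in sys_a.items())


# Hypothetical systems with different parts but the same behavior on the input "tick".
traffic_light = {("green", "tick"): "yellow", ("yellow", "tick"): "red", ("red", "tick"): "green"}
wash_cycle = {("wash", "tick"): "rinse", ("rinse", "tick"): "spin", ("spin", "tick"): "wash"}

print(behaves_isomorphically(traffic_light, wash_cycle,
                             {"green": "wash", "yellow": "rinse", "red": "spin"}))  # True
print(behaves_isomorphically(traffic_light, wash_cycle,
                             {"green": "wash", "yellow": "spin", "red": "rinse"}))  # False
```

Once such a mapping is confirmed, anything learned about how one system responds to a sequence of inputs can be translated to the other through the same mapping.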

Methods

General systems theorists strive to find concepts, principles, and patterns shared by differing systems so that they can be readily applied and transferred from one system to another. Systems are mathematically modeled so that the degree of isomorphism can be determined. Event graphs and data flow graphs are created to represent the behavior of a system, and correspondences between the vertices and edges of these graphs are sought to identify equal structure between systems. Identifying this isomorphism between modeled systems allows shared abstract patterns and principles to be discovered and applied to both systems. Thus, isomorphism is a powerful element of systems theory that propagates knowledge and understanding between different groups, and the archive of knowledge obtained for each system grows. This empowers decision makers and leaders to make critical choices concerning the systems in which they participate: as the future behavior of a system becomes better understood, good decisions about its balance and operation become easier to make.
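Structural isomorphism between two small graph models can be tested by brute force: try every one-to-one pairing of vertices and keep one that maps every edge onto an edge. This is a simple sketch, practical only for small graphs because it may try n! pairings; the office and road graphs are hypothetical.

```python
from itertools import permutations
from typing import Dict, FrozenSet, Optional, Set

Graph = Dict[str, Set[str]]  # vertex -> neighbours (undirected; every vertex is a key)


def edge_set(graph: Graph) -> Set[FrozenSet[str]]:
    return {frozenset((u, v)) for u in graph for v in graph[u]}


def find_isomorphism(g1: Graph, g2: Graph) -> Optional[Dict[str, str]]:
    """Brute-force search for a vertex mapping g1 -> g2 that maps edges onto edges."""
    e1, e2 = edge_set(g1), edge_set(g2)
    if len(g1) != len(g2) or len(e1) != len(e2):
        return None  # different numbers of parts or relations: cannot be isomorphic
    v1 = list(g1)
    for image in permutations(g2):
        mapping = dict(zip(v1, image))
        # One-to-one mapping plus equal edge counts: every edge must land on an edge.
        if all(frozenset(mapping[x] for x in edge) in e2 for edge in e1):
            return mapping
    return None


# Hypothetical systems: reporting lines in an office, and a chain of road junctions.
office = {"clerk": {"manager"}, "manager": {"clerk", "analyst"},
          "analyst": {"manager", "intern"}, "intern": {"analyst"}}
roads = {"a": {"b"}, "b": {"a", "c"}, "c": {"b", "d"}, "d": {"c"}}
hub = {"hub": {"x", "y", "z"}, "x": {"hub"}, "y": {"hub"}, "z": {"hub"}}

print(find_isomorphism(office, roads) is not None)  # True: both are a chain of four
print(find_isomorphism(office, hub))                # None: same counts, different shape
```

The `hub` case shows why counting vertices and edges is not enough: both graphs have four vertices and three edges, yet no pairing of vertices preserves the relationships.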

Uses

Isomorphism has been used extensively in information technology as computers have evolved from simple low-level circuitry with a minimal external interface to highly distributed clusters of dedicated application servers. Computer science concepts are grounded in fundamental mathematical theory, so isomorphic theory is readily applied within the computer science domain. Finding isomorphism between undeveloped and currently existing technologies is a powerful goal within the IT industry as scientists determine the proper path for implementing new technologies. Modeling an abstract dedicated computer or large application on paper is much less costly than building the actual instance with hardware components, and finding isomorphism within these modeled, potential technologies allows scientists to gain an understanding of the potential performance, drawbacks, and behavior of emerging technologies.

Isomorphic theory is also critical in discovering “design patterns” within applications. Computer scientists recognized similar abstract data structures and architecture types within software as programs migrated from low-level assembly language to today's higher-level languages. Patterns of equivalent technical solution architectures have been documented in detail, and modularization, functionality, interfacing, optimization, and platform-related issues are identified for each common architecture to further assist developers implementing today's applications. Examples of common patterns include the “proxy” and “adapter” patterns. The proxy pattern supplies a surrogate that controls access to another object, such as a remote object, while the adapter pattern wraps an existing object to convert its interface into the one its clients expect. Current research into powerful new abstract solutions to industry-specific applications, and into the protection of user security and privacy, will further benefit from implementing isomorphic principles.
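The two patterns named above can be sketched in a few lines of Python. This is an illustrative sketch, not a canonical implementation: the sensor classes are hypothetical, and caching is only one of several ways a proxy can control access to the object it stands in for.

```python
class FahrenheitSensor:
    """An existing component whose interface the client does not expect (hypothetical)."""

    def __init__(self, reading_f: float):
        self.reading_f = reading_f

    def read_fahrenheit(self) -> float:
        return self.reading_f


class FahrenheitToCelsiusAdapter:
    """Adapter: wraps a FahrenheitSensor and exposes the read_celsius() interface clients expect."""

    def __init__(self, sensor: FahrenheitSensor):
        self._sensor = sensor

    def read_celsius(self) -> float:
        return (self._sensor.read_fahrenheit() - 32) * 5 / 9


class CachingSensorProxy:
    """Proxy: offers the same read_celsius() interface as the object it stands in for,
    but controls access to it; here it contacts the real object once and reuses the result."""

    def __init__(self, real):
        self._real = real
        self._cached = None
        self.real_calls = 0  # how many times the real object was contacted

    def read_celsius(self) -> float:
        if self._cached is None:
            self.real_calls += 1
            self._cached = self._real.read_celsius()
        return self._cached


def report(sensor) -> str:
    """Client code: works with anything that has read_celsius()."""
    return f"{sensor.read_celsius():.1f} C"


proxy = CachingSensorProxy(FahrenheitToCelsiusAdapter(FahrenheitSensor(212.0)))
print(report(proxy), report(proxy))  # 100.0 C 100.0 C
print(proxy.real_calls)              # 1
```

The client function `report` relies only on `read_celsius()`, so it works unchanged whether it is given the adapter or the proxy.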

Comparing real vs model

The most powerful use of isomorphic research occurs when comparing a synthetic model of a natural system with the real existence of that system in nature. System theorists build models to potentially solve business, engineering, and scientific problems and to gain a valid representation of the natural world. These models facilitate understanding of natural phenomena. Theorists work to build powerful isomorphic properties between the synthetic models they create and real-world phenomena, and discovering significant isomorphism between the model and the real world facilitates our understanding of our own world. Equal structure must exist between the man-made model and the natural system to ensure an isomorphic link between the two systems: the behavior and principles defined inside the synthetic model must directly parallel the natural world. Success in this analytical and philosophical drive leads man to gain a deeper understanding of himself and the natural world he lives in.

Evolution & Growth

Instability: Not Always Bad?

Instability in systems is not always bad. Growth and evolution are both potential properties of unstable systems and can occur only when there is a net change in one or more system stocks.

- Growth

- Growth, found in many forms, is essentially the change in the quantities of stocks (levels) within a system. Mostly thought of as a positive increase, growth is the change in the quantities of the system given the normal system structures, i.e. without changing the behavior of the system or the interaction of its components. Different forms of growth exist based on the system’s complexity. All that is required for the positive feedback loops to gain dominance is to have a very small net increase in the system.

- Exponential Growth

- The result of a dominance of positive feedback loops in a system is the exponential growth of its quantities. In exponential growth, the stock grows each period by a constant fraction of its current size, so it doubles over a fixed interval (the doubling time), regardless of the system's size or complexity. This growth continues until the system reaches its “carrying capacity”: the natural limit on what the system can sustain. As the limits are met, the negative feedback loops tend to gain dominance over the positive feedback loops. This slows the growth and can even cause the system to decline if the carrying-capacity limits were exceeded.
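At a constant fractional growth rate r per period, a stock doubles every ln 2 / ln(1 + r) periods; doubling every single period is the special case r = 1 (100% growth). A sketch, assuming growth compounds once per discrete period; the 7% rate and the starting stock of 100 are hypothetical.

```python
import math
from typing import List


def exponential_growth(initial: float, rate: float, periods: int) -> List[float]:
    """Stock levels when each period adds a constant fraction `rate` of the current stock."""
    levels = [initial]
    for _ in range(periods):
        levels.append(levels[-1] * (1 + rate))
    return levels


def doubling_time(rate: float) -> float:
    """Number of periods for the stock to double at a constant fractional growth rate."""
    return math.log(2) / math.log(1 + rate)


levels = exponential_growth(100.0, 0.07, 22)  # hypothetical 7% growth per period
print(round(doubling_time(0.07), 2))          # 10.24
print(round(doubling_time(1.0), 2))           # 1.0: doubling every period means 100% growth
# The absolute increase per period keeps growing, which is why early growth is easy to miss.
print([round(b - a, 1) for a, b in zip(levels, levels[1:])][:3])
```

With these numbers the stock passes 200 between periods 10 and 11 and passes 400 about ten periods later, so each doubling takes the same time even though the absolute increase grows.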

Limitations

Resources are not infinite, however. Eventually, real-world systems must run out of the resources used to grow exponentially, and may do so after only a few doublings of their stocks. Exponential growth in a system may be difficult to notice over only one or two periods. As time passes, however, the difference from the previous system state (i.e., the ability to notice change) also doubles with each doubling time.

Note: To gain a conceptual idea of the true nature of exponential growth, see examples and explanations in pages 268-272 in Business Dynamics: Systems Thinking and Modeling for a Complex World, Sterman, 2000.

- S-Shaped Growth

- Exponential growth continues until negative feedback loops (possibly in the form of resource restrictions) slow that growth. As the growth slows and the system returns to stability, it can produce the common pattern of “S-shaped growth.” This pattern depends on the responsiveness of the negative feedback loop. Delays in the negative feedback loop allow the exponential growth to overshoot the equilibrium goal and then produce an oscillating pattern. Additionally, if this delay in negative feedback causes the carrying capacity of the system to be overshot, the result will be a decline (collapse) of the system's stock(s). The decline often continues until the stock returns to the carrying capacity and the system regains equilibrium.
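The effect of a delay in the negative feedback loop can be seen in a small simulation. The sketch assumes a discrete logistic growth rule in which the limiting feedback reacts to the stock level `delay` steps earlier; the rate, capacity, and starting stock are hypothetical. Without delay the stock rises in an S shape and approaches the capacity from below; with a three-step delay it overshoots the capacity, falls back well below it, and oscillates.

```python
from typing import List


def logistic_with_delay(rate: float, capacity: float, initial: float,
                        steps: int, delay: int = 0) -> List[float]:
    """Growth limited by a negative feedback loop that reacts to a stale stock level.

    Each step the stock grows by rate * stock * (1 - perceived / capacity), where
    `perceived` is the stock level `delay` steps earlier (delay=0 means no delay).
    """
    history = [initial]
    for t in range(steps):
        current = history[-1]
        perceived = history[max(0, t - delay)]
        history.append(current + rate * current * (1 - perceived / capacity))
    return history


smooth = logistic_with_delay(0.5, 1000.0, 10.0, 60)            # S-shaped approach
delayed = logistic_with_delay(0.5, 1000.0, 10.0, 60, delay=3)  # overshoot, then decline
print(round(max(smooth)), round(max(delayed)))
```

The only difference between the two runs is the delay, which supports the point above that the shape of growth depends on how responsive the negative feedback loop is.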

Patterns of Growth

Growth in a system can occur in patterns other than the standard exponential or S-shaped models. Linear growth, however, is rare. What is thought to be linear is often just a narrow view of a system's growth; if widened, this view often turns out to show exponential growth.

- Evolution

- When the growth of a system changes the structure or behaviors of a system, instability will occur, if only briefly. Spontaneous change in the system, or simply the release of non-advantageous components, makes the system evolve. This evolution is the creation of a new generation of the previous system. The evolution of a system is driven by selection processes that affect its growth and stability. These selection processes select against disadvantages in the system, rather than selecting for processes thought to be advantageous.

Example

Examine the growth of a plant over time. Eventually, the plant's root system will expand to meet the needs of the plant. However, this root system does not grow in a direct manner. Rather, roots grow in various directions, with some roots finding fewer nutrients and less water than others. Thus, the plant naturally selects against underproductive roots, and the productive roots in nutrient-rich areas thrive.

The example illustrates the process of evolution throughout the plant’s root system (a sub-system of its own). Evolution is a by-product of the system’s growth and periodic instability. As the system experiences periods of growth, the evolutionary choices allow the system to remain productive. Without evolution, the system would eventually be unable to compete with other systems for resources.

References

- Cybernetics: Principles of Systems and cybernetics: an evolutionary perspective

- Evolutionary Cybernetics. http://pespmc1.vub.ac.be/EVOLCYB.html

- Lucas, Chris. Emergence and Evolution - Constraints on Form. http://www.calresco.org/emerge.htm

- Sterman, John. Business Dynamics: Systems Thinking and Modeling for a Complex World. 2000.

Creativity

Creativity

The key work on systems theory in creativity was done by Mihaly Csikszentmihalyi (1988, 1999), who provided a confluence model explaining how creative artifacts emerge from the system. Csikszentmihalyi's model incorporates three entities: the Individual, the Field, and the Domain.

Creativity is a multidisciplinary, multifaceted concept that has held the interest of both theorists and practitioners over many years. It is perhaps more important today than ever before (Runco, 2004) because of the fast and complex changes that characterize the environment in which we live and operate. Some of the more fundamental changes are: a) globalization which, among other things, has introduced diversity in cultures and markets and exposed organizations to increased competitive pressure; b) technology advancements, particularly in communication, that have changed the means and the pace of information flow; c) organizational structures that are leaner and flatter, sometimes with part of the operations physically located on other continents; d) shift from manufacturing dominated to service dominated economies; e) markets that are more informed about products, available choices and civil rights.

All these changes make the traditional ways of doing business obsolete and pose new challenges for decision makers. Individuals, firms and governments alike must seek novel solutions to the challenges posed by the increasing dynamism and complexity of their environment. Creativity is the first step in formulating the novel solutions needed to meet these equally new situations. Indeed, since Graham Wallas published The Art of Thought in 1926, creativity has been recognized as a useful and effective response to evolutionary changes.

Meaning and Scope

Being a multidisciplinary concept, creativity means different things to different people and is expressed by them in different ways. Organizational creativity differs from artistic creativity, and both differ from clinical creativity. Emphases also differ from one discipline to another: a psychiatrist's interest in creativity will differ from a mathematician's, and both will differ from that of an organizational behaviorist. Consequently, debates abound as to the origins, boundaries, processes and importance of creativity. For organizations and businesses, however, these debates are largely academic; what matters to them is the role that creativity plays in innovation and entrepreneurship.

In organizations and businesses, creativity is the process through which the new ideas that make innovation possible are developed (Paulus & Nijstad, 2003). Additionally, at least for business organizations, creative ideas must have utility: they must constitute an appropriate response to a gap in the production, marketing or administrative processes of the organization. In this sense, creativity may be defined as the development of original ideas that have functional utility. The generation and development of original ideas is a complex process that has attracted research interest in several disciplines. The model first developed by Wallas in 1926 seems to have gained general acceptance, albeit with modifications over time. Without going into detail, the model breaks the creative process down into five stages: idea germination (or problem perception), knowledge accumulation (also called preparation or immersion), incubation, illumination (also called revelation or the Eureka stage) and verification (or evaluation).

There are other interesting perspectives on the meaning and scope of creativity; only a few of them are mentioned here. First, understanding creativity as an aspect of problem solving seems to suggest that creativity is reactive. While this is true (creativity responds to new demands occasioned by changes that have become part of organizational life), creativity is also proactive (Heinzen, 1994). Understanding creativity as the development of new ideas that have utility casts the concept in a dual role of problem solving and problem finding (Runco, 2004). As developments in the communication industry demonstrate, creativity has a role to play in initiating change and evolution in organizations. Second, originality is necessary but not sufficient for creativity. Unless an idea is useful to someone and can be replicated, it remains just that: a bright idea (Drucker, 1994). In addition, originality does not imply that creative ideas are always radical departures from present-day applications. Radical inventions are momentous when they are introduced to the market, but they are few and far between. In many cases, the creative ideas that result in innovations are reformulations of existing ideas that make them more versatile, more user-friendly or simply less costly to produce and deliver. Third, creativity is intricately linked to innovation and entrepreneurship (Kao, 1989). Creativity envisions what is possible and is more conceptual than practical; innovation and entrepreneurship complete the cycle by applying the results of the creative process to economic or social advantage. Fourth, a distinction needs to be made between talent creativity, like that possessed by artists and performers, and self-actualizing creativity (Maslow, 1971), which is deliberately developed for problem solving and competence enhancement. The point here is that creative ability is a product of both nature and nurture: some people have a natural flair for creative thinking, while in others it lies latent and must be deliberately developed. Fifth, apart from categorization along disciplinary lines, creativity may also be discussed under an alliterative scheme adopted by Runco from Rhodes (1987). The scheme distinguishes between the creative person, process, product and the pressures (press) on creative persons or creative processes.

Drivers

Factors that enhance creativity in individuals or organizations may be divided into two categories: personal and environmental. Personal characteristics that enhance creative ability include a high regard for aesthetic qualities; broad interests; curiosity and a penchant for discovery; openness to suggestion; attraction to complexity; independence of judgment, thought and action; a love of autonomy; intuition; self-confidence; the ability to accommodate ambiguity and to resolve antinomies; intrinsic motivation; and a firm belief in oneself as a creative person.

Environmental factors concern the context in which creative ability is nurtured and in which creative action is required. In an individual's formative stages, family background and structure (Sulloway, 1996), societal norms and values, and social institutions such as schools, religion, role models and peer groups all play a role in nurturing creative ability. Contexts that are flexible about rules and regulations, permitting experimentation and independent choices, will enhance creativity; those that are rigid will inhibit it. In the organizational context, creativity is enhanced by situations that provide time to think, resources to spend, encouragement and reward for original solutions, and freedom from criticism, and that offer good role models and norms under which innovation is prized and failure is not fatal.

Inhibitors

Among the factors that inhibit creative ability in individuals and organizations are: a population that is not curious or inquisitive and is unwilling or unable to question assumptions; resistance to change and a tendency to conform; fear of failure and criticism; red tape; time pressure (new ideas need time to incubate); lack of feedback; inappropriate norms and values; strict adherence to rules, regulations and budgets; an atmosphere of constraint and lack of autonomy; blinkered thinking and unrealistic expectations; over-analysis of phenomena, resulting in paralysis; organizational structures that constrain the flow of ideas; lack of recognition and reward for successful ideas; short-term orientation; and departmental actions that fail to take into account the bigger picture (functional myopia).

A number of factors may also work both ways, either stimulating or inhibiting creativity. Among these are lack of resources and competition. Developing ideas requires extensive resources, yet scarcity may in itself be an incentive for creativity (Runco, 2004). Competition may stimulate creativity as a way to maintain a competitive edge, but it may also discourage creative effort by making innovations obsolete before they can recoup the expenditure on their development.

The Dark Side

While creativity has been associated with problem solving and value enhancement through proactive action, it has also been linked with potential costs to the individual and to society at large. For example, creative geniuses have been associated with various disorders, including madness and eccentricity (Ludwig, 1995), alcoholism (Noble et al., 1993) and stress (Carson and Runco, 1999). Runco (1999) suggested that because creativity is strongly linked to originality, it is a kind of social deviance. Plucker & Runco (1999) and Runco (1999) observed that a stigma is frequently attached to creativity. Before them, McLaren (1993) observed that it is the dark side of creativity that has given the world weapons of mass destruction and other evil inventions and techniques. It would, of course, be naïve to conclude from such correlations that creative persons are mad, social deviants or terrorists.

References

- Carson D.K., Runco M.A. 1999. Creative problem solving and problem finding in young adults: Interconnections with stress, hassles and coping abilities. Journal of Creative Behavior 33: 167-190.

- Drucker P. 1994. Innovation and Entrepreneurship: Practice and Principles. Harper Business.

- Heinzen T. 1994. Situational affect: proactive and reactive creativity. In Shaw and Runco eds. 1994. Creativity and Affect. NJ: Ablex, pp. 127-146.

- Kao J. 1989. Creativity, Entrepreneurship and Organization: Texts, Cases and Readings. Sage, Thousand Oaks.

- Ludwig A. 1995. The Price of Greatness. New York: Guilford.

- Maslow A.H. 1971. The Farther Reaches of Human Nature. New York: Viking Press.

- McLaren R. 1993. The dark side of creativity. Creativity Research Journal 6: 137-144.

- Paulus P.B. and Nijstad B.A. eds. 2003. Group Creativity. New York: OUP.

- Plucker J and Runco M.A. 1999. Deviance. In Runco and Pritzker eds. 1999. Encyclopedia of Creativity. San Diego, CA: Academic.

- Rhodes M. 1987. An analysis of creativity. In Isaksen S.G. ed. Frontiers of Creativity Research: Beyond the Basics. Buffalo, NY: Bearly, pp. 216-222.

- Runco M.A. 2004. Creativity. In Annual Review of Psychology 55: 657-687.

- Runco M.A. 1999. Time for Creativity. In Runco and Pritzker eds. 1999. Encyclopedia of Creativity. San Diego, CA: Academic.

- Runco and Pritzker eds. 1999. Encyclopedia of Creativity. San Diego, CA: Academic.

- Sulloway F. 1996. Born to Rebel. New York: Pantheon.

Coordination

Coordination may be defined as the process of managing dependencies between activities (Malone & Crowston, 1994). The need for coordination arises from the fact that virtually all organizations are complex aggregations of diverse systems, which need to work or be operated in concert to produce desired outcomes. To simplify the picture, one could decompose an organization into three broad components: actors, goals and resources. The actors, comprising entities such as management, employees, customers, suppliers and other stakeholders, perform interdependent activities aimed at achieving certain goals. To perform these activities, the actors require various types of inputs, or resources. As explained later, the inputs may themselves be interdependent in the ways that they are acquired, created or used. The goals to which the actors aspire are also diverse in nature: some are personal while others are corporate. Even where the goals are corporate, they address different sets of stakeholders and may be in conflict.

Calls for coordination are evident in situations where a) temporality is a factor, so that the effects of delays or the future consequences of today's decisions are not immediately apparent; b) there is a large number of actors; c) there is a large number of interactions between actors or tasks in the system; or d) combinations or occurrences in the system involve an element of probability (stochastic variability). In summary, the more complex the system (and organizations are complex aggregations), the more coordination is necessary.

Multiple actors, interactions, resources and goals need to be coordinated if common desired outcomes are to be achieved. Viewed as a means of maintaining perspective and solving the problems that might arise from these multiplicities, coordination goes hand in glove with the concept of systems thinking.

In contrast to traditional methods of problem analysis, systems thinking focuses on how a component of the system under study interacts with the other constituents of the same system (Aronson, 1998). Organizations are systems in the sense that they consist of elements that interact to produce a predetermined behavior or output. Traditional analytical approaches focus on isolating individual parts; the systems thinking approach instead expands the scope of analysis to take into account the broader picture of how the constituent parts of the system interact with each other. A change in one constituent part of a system may constrain the efficient functioning of other parts of the same system or alter their required input or output specifications. Other parts, especially resources, may need to be used in combination to achieve desired changes. The point here is that looking only at small parts of an interacting system involving multiple actors, resources and goals may accentuate the very problem that the analysis seeks to solve. What is called for is coordination in the manner of a systems thinking approach.

Crowston (1998) refers to coordination theory as “a still developing body of theories about how coordination can occur in diverse kinds of systems.” According to this theory, actors in organizations face coordination problems. Coordination problems are a consequence of dependencies in the organization that constrain the efficiency of task performance. Dependencies may be inherent in the structure of the organization (for example, the departments of a university college interact with each other, which constrains the changes that can be made to a single department without interfering with the efficient functioning of the other departments), or they may result from processes such as task decomposition or the allocation of tasks to actors and resources (for example, professors teaching complementary courses face constraints on the kinds of changes they can make without interfering with each other's teaching).
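To make the idea of dependencies concrete, the following is a minimal sketch, not part of Crowston's theory itself, that models activities as a directed dependency graph and finds every activity that would be constrained by a change to one of them. The activity names, the `DEPENDENTS` map and the `affected_by` helper are hypothetical, chosen only to echo the complementary-courses example above.

```python
from collections import deque

# Hypothetical dependency map: each activity lists the activities that depend
# on it, i.e. those that would be constrained if it changed. Illustrative only.
DEPENDENTS: dict[str, list[str]] = {
    "intro_statistics": ["econometrics", "research_methods"],
    "econometrics": ["thesis_supervision"],
    "research_methods": ["thesis_supervision"],
    "thesis_supervision": [],
}


def affected_by(changed: str, dependents: dict[str, list[str]]) -> set[str]:
    """Return every activity reachable from `changed` via dependency links.

    These are the activities whose actors would need to coordinate before
    `changed` could be altered without disrupting their work. A breadth-first
    search with a `seen` set keeps the walk finite even if the graph has cycles.
    """
    seen: set[str] = set()
    queue = deque(dependents.get(changed, []))
    while queue:
        activity = queue.popleft()
        if activity in seen:
            continue
        seen.add(activity)
        queue.extend(dependents.get(activity, []))
    return seen


if __name__ == "__main__":
    # Changing the introductory course ripples through to thesis supervision.
    print(sorted(affected_by("intro_statistics", DEPENDENTS)))
    # prints ['econometrics', 'research_methods', 'thesis_supervision']
```

The sketch captures only structural dependencies; the probabilistic and time-delayed interactions described earlier would need a richer model than a static graph.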