Cryptography/Print version


The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/Cryptography

Introduction

Cryptography is the study of information hiding and verification. It includes the protocols, algorithms and strategies to securely and consistently prevent or delay unauthorized access to sensitive information and enable verifiability of every component in a communication.

Cryptography is derived from the Greek words kryptós, "hidden", and gráphein, "to write": literally, "hidden writing". People who study and develop cryptography are called cryptographers. The study of how unintended recipients can circumvent the use of cryptography is called cryptanalysis, or codebreaking. Cryptography and cryptanalysis are sometimes grouped together under the umbrella term cryptology, encompassing the entire subject. In practice, "cryptography" is also often used to refer to the field as a whole, especially as an applied science. At the dawn of the 21st century, in an ever more interconnected and technological world, cryptography became ubiquitous, as did reliance on the benefits it brings, especially increased security and verifiability.

Cryptography is an interdisciplinary subject, drawing from several fields. Before the time of computers, it was closely related to linguistics. Nowadays the emphasis has shifted, and cryptography makes extensive use of technical areas of mathematics, especially those areas collectively known as discrete mathematics. This includes topics from number theory, information theory, computational complexity, statistics and combinatorics. It is also a branch of engineering, but an unusual one as it must deal with active, intelligent and malevolent opposition.

Sub-fields related to cryptography include steganography, the study of hiding the very existence of a message rather than necessarily its contents (for example, microdots or invisible ink), and traffic analysis, which is the analysis of patterns of communication in order to learn secret information.

When information is transformed from a useful, understandable form into an opaque form, this is called encryption. When the information is restored to a useful form, it is called decryption. Whether someone is an intended recipient or authorized user of the information is determined by whether they hold a certain piece of secret knowledge. Only users with the secret knowledge can transform the opaque information back into its useful form. The secret knowledge is commonly called the key, though the secret knowledge may include the entire process or algorithm that is used in the encryption/decryption. The information in its useful form is called plaintext (or cleartext); in its encrypted form it is called ciphertext. The algorithm used for encryption and decryption is called a cipher (or cypher).

Common goals in cryptography

In essence, cryptography concerns four main goals. They are:

- message confidentiality (or privacy): Only an authorized recipient should be able to extract the contents of the message from its encrypted form. This results from steps taken to hide, stop or delay free access to the encrypted information.

- message integrity: The recipient should be able to determine if the message has been altered.

- sender authentication: The recipient should be able to verify, from the message, the identity of the sender, the origin of the message or the path it traveled (or some combination of these), so as to validate the sender's claims or the recipient's expectations.

- sender non-repudiation: The sender should not be able to deny sending the message.

Not all cryptographic systems achieve all of the above goals. Some applications of cryptography have different goals; for example, some situations require repudiation, where a participant can plausibly deny being a sender or receiver of a message. Others extend these goals to include variations like:

- message access control: Who are the valid recipients of the message.

- message availability: Providing means to limit the validity of the message, channel, sender or recipient in time or space.

Common forms of cryptography

Cryptography involves all legitimate users of information having the keys required to access that information.

- If the sender and recipient must have the same key in order to encode or decode the protected information, then the cipher is a symmetric key cipher since everyone uses the same key for the same message. The main problem is that the secret key must somehow be given to both the sender and recipient privately. For this reason, symmetric key ciphers are also called private key (or secret key) ciphers.

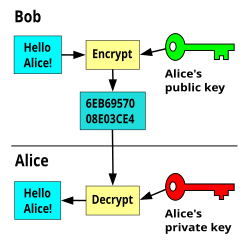

- If the sender and recipient have different keys respective to the communication roles they play, then the cipher is an asymmetric key cipher as different keys exist for encoding and decoding the same message. It is also called public key encryption as the user publicly distributes one of the keys without a care for secrecy. In the case of confidential messages to the user, they distribute the encryption key. Asymmetric encryption relies on the fact that possession of the encryption key will not reveal the decryption key.

- Digital Signatures are a form of authentication with some parallels to public-key encryption. The two keys are the public verification key and the secret signature key. As in public-key encryption, the verification key can be distributed to other people, with the same caveat that the distribution process should in some way authenticate the owner of the secret key. Security relies on the fact that possession of the verification key will not reveal the signature key.

- Hash Functions are unkeyed message digests with special properties.
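As a rough illustration of the last item, the sketch below computes message digests with SHA-256 from Python's standard `hashlib` module. SHA-256 is chosen here only as an example; the text above does not name a particular hash function. It shows two of the "special properties": the digest has a fixed length, and a one-letter change in the message gives a different digest.

```python
import hashlib


def digest(message: bytes) -> str:
    """Return the SHA-256 digest of a message as a hex string (no key involved)."""
    return hashlib.sha256(message).hexdigest()


original = digest(b"THE PANEL IN THE WALL MOVES")
altered = digest(b"THE PANEL IN THE WALL MOVED")  # last letter changed

print(len(original))        # 64 hex characters = 256 bits, whatever the input length
print(original == altered)  # False
```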

Other:

Poorly designed, or poorly implemented, crypto systems achieve these goals only by accident or bluff or lack of interest on the part of the opposition. Users can, and regularly do, find weaknesses even in well-designed cryptographic schemes from designers of high reputation.

Even with well designed, well implemented, and properly used crypto systems, some goals aren't practical (or desirable) in some contexts. For example, the sender of the message may wish to be anonymous, and would therefore deliberately choose not to bother with non-repudiation. Alternatively, the system may be intended for an environment with limited computing resources, or message confidentiality might not be an issue.

In classical cryptography, messages are typically enciphered and transmitted from one person or group to some other person or group. In modern cryptography, there are many possible options for "sender" or "recipient". Some examples, for real crypto systems in the modern world, include:

- a computer program running on a local computer,

- a computer program running on a 'nearby' computer which 'provides security services' for users on other nearby systems,

- a human being (usually understood as 'at the keyboard'). However, even in this example, the presumed human is not generally taken to actually encrypt or sign or decrypt or authenticate anything. Rather, he or she instructs a computer program to perform these actions. This 'blurred separation' of human action from actions which are presumed (without much consideration) to have 'been done by a human' is a source of problems in crypto system design, implementation, and use. Such problems are often quite subtle and correspondingly obscure; indeed, generally so, even to practicing cryptographers with knowledge, skill, and good engineering sense.

When confusion on these points is present (e.g., at the design stage, during implementation, by a user after installation, or ...), failures in reaching each of the stated goals can occur quite easily—often without notice to any human involved, and even given a perfect cryptosystem. Such failures are most often due to extra-cryptographic issues; each such failure demonstrates that good algorithms, good protocols, good system design, and good implementation do not alone, nor even in combination, provide 'security'. Instead, careful thought is required regarding the entire crypto system design and its use in actual production by real people on actual equipment running 'production' system software (e.g., operating systems) -- too often, this is absent or insufficient in practice with real-world crypto systems.

Although cryptography has a long and complex history, it wasn't until the 19th century that it developed anything more than ad hoc approaches to either encryption or cryptanalysis (the science of finding weaknesses in crypto systems). Examples of the latter include Charles Babbage's Crimean War era work on mathematical cryptanalysis of polyalphabetic ciphers, repeated publicly rather later by the Prussian Kasiski. During this time, there was little theoretical foundation for cryptography; rather, understanding of cryptography generally consisted of hard-won fragments of knowledge and rules of thumb; see, for example, Auguste Kerckhoffs' crypto writings in the latter nineteenth century. An increasingly mathematical trend accelerated up to World War II (notably in William F. Friedman's application of statistical techniques to cryptography and in Marian Rejewski's initial break into the German Army's version of the Enigma system). Both cryptography and cryptanalysis have become far more mathematical since WWII. Even then, it has taken the wide availability of computers, and the Internet as a communications medium, to bring effective cryptography into common use by anyone other than national governments or similarly large enterprise.


History

Classical Cryptography

The earliest known use of cryptography is found in non-standard hieroglyphs carved into monuments from Egypt's Old Kingdom (ca 4500 years ago). These are not thought to be serious attempts at secret communications, however, but rather to have been attempts at mystery, intrigue, or even amusement for literate onlookers. These are examples of still another use of cryptography, or of something that looks (impressively if misleadingly) like it. Later, Hebrew scholars made use of simple Substitution ciphers (such as the Atbash cipher) beginning perhaps around 500 to 600 BCE. Cryptography has a long tradition in religious writing likely to offend the dominant culture or political authorities.

The Greeks of Classical times are said to have known of ciphers (e.g., the scytale transposition cypher claimed to have been used by the Spartan military). Herodotus tells us of secret messages physically concealed beneath wax on wooden tablets or as a tattoo on a slave's head concealed by regrown hair (these are not properly examples of cryptography per se; see secret writing). The Romans certainly knew of ciphers (e.g., the Caesar cipher and its variations). There is ancient mention of a book about Roman military cryptography (especially Julius Caesar's); it has been, unfortunately, lost.

In India, cryptography was apparently well known. It is recommended in the Kama Sutra as a technique by which lovers can communicate without being discovered. This may imply that cryptanalytic techniques were less than well developed in India ca 500 CE.

Cryptography became (secretly) important still later as a consequence of political competition and religious analysis. For instance, in Europe during and after the Renaissance, citizens of the various Italian states, including the Papacy, were responsible for substantial improvements in cryptographic practice (e.g., polyalphabetic ciphers invented by Leon Alberti ca 1465). And in the Arab world, religiously motivated textual analysis of the Koran led to the invention of the frequency analysis technique for breaking monoalphabetic substitution cyphers sometime around 1000 CE.

Cryptography, cryptanalysis, and secret agent betrayal featured in the Babington plot during the reign of Queen Elizabeth I which led to the execution of Mary, Queen of Scots. And an encrypted message from the time of the Man in the Iron Mask (decrypted around 1900 by Étienne Bazeries) has shed some, regrettably non-definitive, light on the identity of that legendary, and unfortunate, prisoner. Cryptography, and its misuse, was involved in the plotting which led to the execution of Mata Hari in 1917 and, even more reprehensibly, if possible, in the travesty which led to Dreyfus' conviction and imprisonment in 1894. Fortunately, cryptographers were also involved in setting Dreyfus free; Mata Hari, in contrast, was shot.

Mathematical cryptography leapt ahead (also secretly) after World War I. Marian Rejewski, in Poland, attacked and 'broke' the early German Army Enigma system (an electromechanical rotor cypher machine) using theoretical mathematics in 1932. The break continued up to '39, when changes in the way the German Army's Enigma machines were used required more resources than the Poles could deploy. His work was extended by Alan Turing, Gordon Welchman, and others at Bletchley Park beginning in 1939, leading to sustained breaks into several other of the Enigma variants and the assorted networks for which they were used. US Navy cryptographers (with cooperation from British and Dutch cryptographers after 1940) broke into several Japanese Navy crypto systems. The break into one of them famously led to the US victory in the Battle of Midway. A US Army group, the SIS, managed to break the highest security Japanese diplomatic cipher system (an electromechanical 'stepping switch' machine called Purple by the Americans) even before WWII began. The Americans referred to the intelligence resulting from cryptanalysis, perhaps especially that from the Purple machine, as 'Magic'. The British eventually settled on 'Ultra' for intelligence resulting from cryptanalysis, particularly that from message traffic enciphered by the various Enigmas. An earlier British term for Ultra had been 'Boniface'.

World War II Cryptography

By World War II, mechanical and electromechanical cipher machines were in wide use, although manual systems continued to be used where such machines were impractical. Great advances were made in both practical and mathematical cryptography in this period, all in secrecy. Information about this period has begun to be declassified in recent years as the official 50-year (British) secrecy period has come to an end, as the relevant US archives have slowly opened, and as assorted memoirs and articles have been published.

The Germans made heavy use (in several variants) of an electromechanical rotor based cypher system known as Enigma. The German military also deployed several mechanical attempts at a one-time pad. Bletchley Park called them the Fish cyphers, and Max Newman and colleagues designed and deployed the world's first programmable digital electronic computer, the Colossus, to help with their cryptanalysis. The German Foreign Office began to use the one-time pad in 1919; some of this traffic was read in WWII partly as the result of recovery of some key material in South America that was insufficiently carefully discarded by a German courier.

The Japanese Foreign Office used a locally developed electrical stepping switch based system (called Purple by the US), and also used several similar machines for attaches in some Japanese embassies. One of these was called the 'M-machine' by the US; another was referred to as 'Red'. All were broken, to one degree or another, by the Allies.

Other cipher machines used in WWII included the British Typex and the American SIGABA; both were electromechanical rotor designs similar in spirit to the Enigma.

Modern Cryptography

The era of modern cryptography really begins with Claude Shannon, arguably the father of mathematical cryptography. In 1949 he published the paper Communication Theory of Secrecy Systems in the Bell System Technical Journal, and a little later the book Mathematical Theory of Communication with Warren Weaver. These, in addition to his other works on information and communication theory, established a solid theoretical basis for cryptography and for cryptanalysis. And with that, cryptography more or less disappeared into secret government communications organizations such as the NSA. Very little work was made public again until the mid '70s, when everything changed.

The mid-1970s saw two major public (i.e., non-secret) advances. First was the DES (Data Encryption Standard), submitted by IBM at the invitation of the National Bureau of Standards (now NIST) in an effort to develop secure electronic communication facilities for businesses such as banks and other large financial organizations. After 'advice' and modification by the NSA, it was adopted and published as a FIPS Publication (Federal Information Processing Standard) in 1977 (last revised as FIPS 46-3). DES was the first publicly accessible cypher algorithm to be 'blessed' by a national crypto agency such as NSA. The release of its design details by NBS stimulated an explosion of public and academic interest in cryptography.

DES was officially supplanted by the Advanced Encryption Standard (AES), published as FIPS 197 in 2001 after NIST selected Rijndael, designed by two Belgian cryptographers, in an open competition. DES and more secure variants of it (such as 3DES or TDES; see FIPS 46-3) nonetheless remained in wide use, having been incorporated into many national and organizational standards. However, the 56-bit key size of DES has been shown to be insufficient to guard against brute-force attacks (one such attack, undertaken by the cyber civil-rights group the Electronic Frontier Foundation, succeeded in 56 hours; the story is told in Cracking DES, published by O'Reilly and Associates). As a result, straight DES encryption is now without doubt insecure for use in new crypto system designs, and messages protected by older crypto systems using DES should also be regarded as insecure. The DES key size was thought to be too small by some even in 1976, perhaps most publicly by Whitfield Diffie. There was suspicion that government organizations even then had sufficient computing power to break DES messages, and that there might be a back door due to the lack of randomness in the 'S' boxes.

Second was the publication of the paper New Directions in Cryptography by Whitfield Diffie and Martin Hellman. This paper introduced a radically new method of distributing cryptographic keys, which went far toward solving one of the fundamental problems of cryptography, key distribution. It has become known as Diffie-Hellman key exchange. The article also stimulated the almost immediate public development of a new class of enciphering algorithms, the asymmetric key algorithms.
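The idea behind the key exchange can be sketched with toy numbers. In the snippet below the prime `p = 23`, the generator `g = 5` and the two private values are illustrative assumptions, far too small for real use; real systems use very large, carefully chosen parameters. Each party publishes only `g` raised to its private value modulo `p`, yet both arrive at the same shared value.

```python
# Toy Diffie-Hellman exchange. All numbers are illustrative assumptions,
# chosen small enough to check by hand; they offer no security at all.
p, g = 23, 5                 # public: prime modulus and generator

a_secret = 6                 # first party's private value (never sent)
b_secret = 15                # second party's private value (never sent)

a_public = pow(g, a_secret, p)   # sent in the clear
b_public = pow(g, b_secret, p)   # sent in the clear

# Each side combines its own secret with the other side's public value.
a_shared = pow(b_public, a_secret, p)
b_shared = pow(a_public, b_secret, p)

print(a_public, b_public)    # 8 19
print(a_shared, b_shared)    # 2 2
```

An eavesdropper sees `p`, `g`, 8 and 19; recovering the shared value from these is the discrete logarithm problem, which is trivial at this size and believed hard for large parameters.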

Prior to that time, all useful modern encryption algorithms had been symmetric key algorithms, in which the same cryptographic key is used with the underlying algorithm by both the sender and the recipient who must both keep it secret. All of the electromechanical machines used in WWII were of this logical class, as were the Caesar and Atbash cyphers and essentially all cypher and code systems throughout history. The 'key' for a code is, of course, the codebook, which must likewise be distributed and kept secret.

Of necessity, the key in every such system had to be exchanged between the communicating parties in some secure way prior to any use of the system (the term usually used is 'via a secure channel'), such as a trustworthy courier with a briefcase handcuffed to a wrist, or face-to-face contact, or a loyal carrier pigeon. This requirement rapidly becomes unmanageable when the number of participants increases beyond some (very!) small number, or when (really) secure channels aren't available for key exchange, or when, as is sensible crypto practice, keys are changed frequently. In particular, a separate key is required for each communicating pair if no third party is to be able to decrypt their messages. A system of this kind is also known as a private key, secret key, or conventional key cryptosystem. D-H key exchange (and succeeding improvements) made operation of these systems much easier, and more secure, than had ever been possible before.
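To see how quickly "a separate key for each communicating pair" gets out of hand, the number of pairs among n participants is n(n-1)/2. A small sketch of that arithmetic (the function name is just for illustration):

```python
def pairwise_keys(participants: int) -> int:
    """Number of distinct secret keys needed if every pair shares its own key."""
    return participants * (participants - 1) // 2


for n in (2, 10, 100, 1000):
    print(n, pairwise_keys(n))
# 2 1
# 10 45
# 100 4950
# 1000 499500
```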

In contrast, with asymmetric key encryption, there is a pair of mathematically related keys for the algorithm, one of which is used for encryption and the other for decryption. Some, but not all, of these algorithms have the additional property that one of the keys may be made public since the other cannot be (by any currently known method) deduced from the 'public' key. The other key in these systems is kept secret and is usually called, somewhat confusingly, the 'private' key. An algorithm of this kind is known as a public key / private key algorithm, although the term asymmetric key cryptography is preferred by those who wish to avoid the ambiguity of using that term for all such algorithms, and to stress that there are two distinct keys with different secrecy requirements.

As a result, for those using such algorithms, only one key pair is now needed per recipient (regardless of the number of senders), as possession of a recipient's public key (by anyone whomsoever) does not compromise the 'security' of messages so long as the corresponding private key is not known to any attacker (effectively, this means not known to anyone except the recipient). This unanticipated, and quite surprising, property of some of these algorithms made possible, and made practical, widespread deployment of high quality crypto systems which could be used by anyone at all. This in turn gave government crypto organizations worldwide a severe case of heartburn; for the first time ever, those outside that fraternity had access to cryptography that wasn't readily breakable by the 'snooper' side of those organizations. Considerable controversy, and conflict, began immediately. It has not yet subsided. In the US, for example, cryptographic methods and techniques were classified as munitions and the export of strong cryptography was tightly restricted; for years 'strong' crypto was defined as anything using keys longer than 40 bits. The rules were substantially relaxed around 2000, although some export controls remain. (See S Levy's Crypto for a journalistic account of the policy controversy in the US).

Note, however, that it has NOT been proven impossible, for any of the good public/private asymmetric key algorithms, that a private key (regardless of length) can be deduced from a public key (or vice versa). Informed observers believe it to be currently impossible (and perhaps forever impossible) for the 'good' asymmetric algorithms; no workable 'companion key deduction' techniques have been publicly shown for any of them. Note also that some asymmetric key algorithms have been quite thoroughly broken, just as many symmetric key algorithms have. There is no special magic attached to using algorithms which require two keys.

In fact, some of the well respected, and most widely used, public key / private key algorithms can be broken by one or another cryptanalytic attack and so, like other encryption algorithms, the protocols within which they are used must be chosen and implemented carefully to block such attacks. Indeed, all can be broken if the key length used is short enough to permit practical brute force key search; this is inherently true of all encryption algorithms using keys, including both symmetric and asymmetric algorithms.
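The effect of key length on brute force key search is simple arithmetic: each extra bit doubles the number of keys to try. The sketch below assumes a purely hypothetical attacker who can test 10^12 keys per second; that rate is an assumption made for illustration, not a measured figure.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def keyspace(bits: int) -> int:
    """Number of possible keys of the given length."""
    return 2 ** bits


def years_to_exhaust(bits: int, keys_per_second: float) -> float:
    """Years needed to try every key at the given (assumed) search rate."""
    return keyspace(bits) / keys_per_second / SECONDS_PER_YEAR


ASSUMED_RATE = 1e12  # hypothetical keys tested per second

for bits in (40, 56, 128):
    print(bits, keyspace(bits), years_to_exhaust(bits, ASSUMED_RATE))
```

On average an attacker finds the key after searching about half the key space, so the expected time is roughly half the figure printed.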

This is an example of the most fundamental problem for those who wish to keep their communications secure; they must choose a crypto system (algorithms + protocols + operation) that resists all attack from any attacker. There being no way to know who those attackers might be, nor what resources they might be able to deploy, nor what advances in cryptanalysis (or its associated mathematics) might in future occur, users may ONLY do the best they know how, and then hope. In practice, for well designed / implemented / used crypto systems, this is believed by informed observers to be enough, and possibly even enough for all(?) future attackers. Distinguishing between well designed / implemented / used crypto systems and crypto trash is another, quite difficult, problem for those who are not themselves expert cryptographers. It is even quite difficult for those who are.

Revision of modern history

In recent years public disclosure of secret documents held by the UK government has shown that asymmetric key cryptography, D-H key exchange, and the best known of the public key / private key algorithms (i.e., what is usually called the RSA algorithm), all seem to have been developed at a UK intelligence agency before the public announcement by Diffie and Hellman in '76. GCHQ has released documents claiming that they had developed public key cryptography before the publication of Diffie and Hellman's paper. Various classified papers were written at GCHQ during the 1960s and 1970s which eventually led to schemes essentially identical to RSA encryption and to Diffie-Hellman key exchange in 1973 and 1974. Some of these have now been published, and the inventors (James Ellis, Clifford Cocks, and Malcolm Williamson) have made public (some of) their work.

Classical Cryptography

Cryptography has a long and colorful history from Caesar's encryption in first century BC to the 20th century.

There are two major principles in classical cryptography: transposition and substitution.

Transposition ciphers

Let's look first at transposition, which is changing the position of the letters in the message, such as simply writing each word backwards:

Plaintext: THE PANEL IN THE WALL MOVES

Encrypted: EHT LENAP NI EHT LLAW SEVOM

or as in a more complex transposition such as:

THEPAN

ELINTH

EWALLM

OVESAA

then take the columns:

TEEO HLWV EIAE PNLS ATLA NHMA

(The extra letters are called space fillers.) The idea in transposition is NOT to randomize the message but to transform it into something unrecognizable using a reversible algorithm (an algorithm is just a procedure; it must be reversible so your correspondent can read the message).

We discuss transposition ciphers in much more detail in a later chapter, Cryptography/Transposition ciphers.
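Both transpositions above can be sketched in a few lines of Python. The function names are made up for this illustration, and the filler letter 'A' follows the grid example above.

```python
def reverse_words(text: str) -> str:
    """Write each word backwards, keeping the word order."""
    return " ".join(word[::-1] for word in text.split(" "))


def columnar_encrypt(text: str, width: int, filler: str = "A") -> list[str]:
    """Write the letters in rows of `width`, pad with fillers, read off the columns."""
    letters = [ch for ch in text.upper() if ch.isalpha()]
    while len(letters) % width:
        letters.append(filler)
    rows = [letters[i:i + width] for i in range(0, len(letters), width)]
    return ["".join(row[col] for row in rows) for col in range(width)]


def columnar_decrypt(columns: list[str]) -> str:
    """Reverse the procedure: rebuild the rows from the columns."""
    return "".join("".join(row) for row in zip(*columns))


message = "THE PANEL IN THE WALL MOVES"
print(reverse_words(message))            # EHT LENAP NI EHT LLAW SEVOM
columns = columnar_encrypt(message, width=6)
print(" ".join(columns))                 # TEEO HLWV EIAE PNLS ATLA NHMA
print(columnar_decrypt(columns))         # THEPANELINTHEWALLMOVESAA
```

Note that decryption returns the fillers and loses the word spacing; the recipient is expected to restore those by reading.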

Substitution ciphers

The second most important principle is substitution, that is, substituting a symbol for a letter (or a word, or even a sentence) of your plaintext. Slang can sometimes even be a form of cipher (the symbols replacing your plaintext); ever wonder why your parents never understood you? Slang, though, is not something you would want to store a secret in for a long time. In WWII, Navajo code talkers passed information from unit to unit. The Navajo language was known to very few outsiders and was largely unwritten, so the Japanese were not able to decipher it.

Even though this is a very loose example of substitution, whatever works works.

Caesar Cipher

One of the most basic methods of encryption is the Caesar cipher. It simply consists of shifting the alphabet over a few characters and matching up the letters.

The classical example of a substitution cipher is a shifted alphabet cipher

Alphabet: ABCDEFGHIJKLMNOPQRSTUVWXYZ

Cipher: BCDEFGHIJKLMNOPQRSTUVWXYZA

Cipher2: CDEFGHIJKLMNOPQRSTUVWXYZAB

etc...

Example (using Cipher2):

Plaintext: THE PANEL IN THE WALL MOVES

Encrypted: VJG RCPGN KP VJG YCNN OQXGU
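The shifted alphabet can be sketched in Python with a translation table. The function name `caesar` and the choice to leave spaces and other non-letters untouched are assumptions made for this illustration; a shift of 2 corresponds to the "Cipher2" row above.

```python
import string

ALPHABET = string.ascii_uppercase


def caesar(text: str, shift: int) -> str:
    """Shift every letter by `shift` places; other characters pass through unchanged."""
    shift %= 26
    shifted = ALPHABET[shift:] + ALPHABET[:shift]
    return text.upper().translate(str.maketrans(ALPHABET, shifted))


ciphertext = caesar("THE PANEL IN THE WALL MOVES", 2)
print(ciphertext)               # VJG RCPGN KP VJG YCNN OQXGU
print(caesar(ciphertext, -2))   # THE PANEL IN THE WALL MOVES
```

Decryption is the same operation with the opposite shift, which is what makes the procedure reversible.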

If this is the first time you've seen this, it may seem like a rather secure cipher, but it's not. In fact, by itself it is very insecure. Ciphers like this offered some protection for a long time (mainly because many people were illiterate), but every cipher of this type can be cracked (that is, the hidden message can be found) with a technique called frequency analysis, which was in use in the Arab world by around 1000 CE.

We discuss substitution ciphers in much more detail in a later chapter, Cryptography/Substitution ciphers.

Frequency Analysis

In the shifted alphabet cipher, or any simple randomized substitution cipher, the same cipher letter replaces every occurrence of a given letter in your message (e.g. 'A' replaces all 'D's in the plaintext, etc.). The weakness is that English uses certain letters more than others; 'E' is the most common, and so on. Here's an exercise: count all of each letter in this article. You'll find that in the previous sentence there are 3 'H's, 9 'E's, 4 'R's, 3 'S's, etc. By far 'E' is the most common letter; the other frequencies can be found in frequency tables (http://rinkworks.com/words/letterfreq.shtml). Basically you experiment with replacing different symbols with letters (the most common with 'E', etc.).
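Counting letters is easy to automate. The sketch below uses `collections.Counter` from the Python standard library on the shifted-alphabet ciphertext from the Caesar cipher example, and then guesses the shift by assuming that the most common ciphertext letter stands for 'E'. That assumption often fails on short messages; it happens to work here.

```python
from collections import Counter


def letter_counts(text: str) -> Counter:
    """Count how often each letter occurs, ignoring spaces and punctuation."""
    return Counter(ch for ch in text.upper() if ch.isalpha())


def guess_shift(ciphertext: str, assumed_plain: str = "E") -> int:
    """Guess a Caesar shift by assuming the most common letter is `assumed_plain`."""
    most_common_letter, _ = letter_counts(ciphertext).most_common(1)[0]
    return (ord(most_common_letter) - ord(assumed_plain)) % 26


ciphertext = "VJG RCPGN KP VJG YCNN OQXGU"
print(letter_counts(ciphertext).most_common(2))   # [('G', 4), ('N', 3)]
print(guess_shift(ciphertext))                    # 2
```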

Encrypted: VJG TCKP KP URCKP

First look for short words with limited choices, such as 'KP'; this may be at, in, to, or, by, etc. Let us select in. Replace 'K' with 'I' and 'P' with 'N'.

Encrypted: VJG TCIN IN URCIN

Next select 'VJG'; this is most likely 'the' (given the huge frequency of 'the' in normal sentences; check a couple of the preceding sentences).

Encrypted: THE TCIN IN URCIN

Generally this is much easier with long messages. The plaintext is 'THE RAIN IN SPAIN'.
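The trial-and-error steps above amount to applying a growing table of guesses to the ciphertext. A minimal sketch follows; the helper name `apply_guesses` is made up for this illustration. Guesses are always applied to the original ciphertext, so a letter that has already been replaced is never replaced a second time.

```python
def apply_guesses(ciphertext: str, guesses: dict[str, str]) -> str:
    """Replace each ciphertext letter we have a guess for; leave the rest as-is."""
    return "".join(guesses.get(ch, ch) for ch in ciphertext)


ciphertext = "VJG TCKP KP URCKP"

guesses = {"K": "I", "P": "N"}                  # 'KP' is probably 'IN'
print(apply_guesses(ciphertext, guesses))       # VJG TCIN IN URCIN

guesses.update({"V": "T", "J": "H", "G": "E"})  # 'VJG' is probably 'THE'
print(apply_guesses(ciphertext, guesses))       # THE TCIN IN URCIN

guesses.update({"T": "R", "C": "A", "U": "S", "R": "P"})
print(apply_guesses(ciphertext, guesses))       # THE RAIN IN SPAIN
```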

We discuss many different ways to "attack", "break", and "solve" encrypted messages in a later section of this book, "Part III: Cryptanalysis", which includes a much more detailed section on Cryptography/Frequency analysis.

Combining transposition and substitution

A more secure encryption is a transposed substitution cipher.

Take the above message in encrypted form

Encrypted: VJG RCPGN KP VJG YCNN OQXGU

Now spiral transpose it:

VJGRC

NNOQP

CAAXG

YAUGN

GJVPK

The message starts in the upper left corner and spirals clockwise to the center (again, the AAA is a filler). Now take the columns:

VNCYG JNAAJ GOAUV RQXGP CPGNK

This is more resistant to frequency analysis. Applying the same kind of substitutions that produced recognizable patterns before (V, J, G, K, P replaced by T, H, E, I, N) now results in:

TNCYE HNAAH EOAUT RQXEN CNENI

A problem for people who crack codes.
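A spiral transposition can be sketched as follows. The details are assumptions made for this illustration: a square grid, a clockwise spiral that starts in the top-left cell, and 'A' as the filler for unused cells. Other conventions (different start corner, direction, or filler) give different grids.

```python
def spiral_cells(size: int) -> list[tuple[int, int]]:
    """(row, col) positions of a size x size grid in clockwise spiral order from the top-left."""
    cells = []
    top, bottom, left, right = 0, size - 1, 0, size - 1
    while top <= bottom and left <= right:
        cells += [(top, col) for col in range(left, right + 1)]
        cells += [(row, right) for row in range(top + 1, bottom + 1)]
        if top < bottom:
            cells += [(bottom, col) for col in range(right - 1, left - 1, -1)]
        if left < right:
            cells += [(row, left) for row in range(bottom - 1, top, -1)]
        top, bottom, left, right = top + 1, bottom - 1, left + 1, right - 1
    return cells


def spiral_grid(text: str, size: int, filler: str = "A") -> list[str]:
    """Write the letters of `text` along the spiral and return the grid rows."""
    letters = [ch for ch in text.upper() if ch.isalpha()]
    if len(letters) > size * size:
        raise ValueError("message does not fit in the grid")
    letters += [filler] * (size * size - len(letters))
    grid = [[""] * size for _ in range(size)]
    for (row, col), letter in zip(spiral_cells(size), letters):
        grid[row][col] = letter
    return ["".join(row) for row in grid]


def read_columns(rows: list[str]) -> list[str]:
    """Read the grid column by column, top to bottom."""
    return ["".join(col) for col in zip(*rows)]


rows = spiral_grid("VJG RCPGN KP VJG YCNN OQXGU", 5)
print("\n".join(rows))
print(" ".join(read_columns(rows)))
```

To decrypt, the recipient rebuilds the grid from the columns and reads the cells back in the same spiral order.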

Multiliteral systems

The vast majority of classical ciphers are "uniliteral": they encrypt a plaintext one letter at a time, and each plaintext letter is encrypted to a single corresponding ciphertext letter.

A multiliteral system is one where the ciphertext unit is more than one character in length. The major types of multiliteral systems are:[1]

- biliteral systems: 2 letters of ciphertext per letter of plaintext

- dinomic systems: 2 digits of ciphertext per letter of plaintext

- triliteral systems: 3 letters of ciphertext per letter of plaintext

- trinomic systems: 3 digits of ciphertext per letter of plaintext

- monome-dinome systems, also called straddling checkerboard systems: 1 digit of ciphertext for some plaintext letters, 2 digits of ciphertext for the remaining plaintext letters.

- biliteral with variants and dinomic with variants systems: several ciphertext values decode to the same plaintext letter (homophonic substitution cipher)

- syllabary square systems: 2 letters or 2 digits of ciphertext decode to an entire syllable or a single character of plaintext.[2]
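As one concrete instance of a dinomic system, the sketch below uses a 5 x 5 square in the style of the Polybius square, with J folded into I so that 25 letters fit. The square layout and function names are choices made for this illustration; the list above does not prescribe any particular table. Each plaintext letter becomes two digits: its row, then its column.

```python
SQUARE = "ABCDEFGHIKLMNOPQRSTUVWXYZ"  # 25 letters in row order; J is written as I


def dinomic_encrypt(text: str) -> str:
    """Encode each letter as two digits: row (1-5) followed by column (1-5)."""
    pairs = []
    for ch in text.upper():
        if ch == "J":
            ch = "I"
        if ch in SQUARE:
            index = SQUARE.index(ch)
            pairs.append(f"{index // 5 + 1}{index % 5 + 1}")
    return " ".join(pairs)


def dinomic_decrypt(digits: str) -> str:
    """Decode space-separated two-digit groups back to letters."""
    return "".join(
        SQUARE[(int(pair[0]) - 1) * 5 + int(pair[1]) - 1] for pair in digits.split()
    )


encoded = dinomic_encrypt("THE WALL")
print(encoded)                    # 44 23 15 52 11 31 31
print(dinomic_decrypt(encoded))   # THEWALL
```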

Quantum Cryptography

While in the year 2001, Quantum Cryptography was only a future concept. Since not much was known of how capable a quantum computer would be, but even then it was understood that if at all cost-effective, the technology would have only niche applications. By 2024 the technology is yet to prove itself usable in practical terms. Specific algorithms have to be created for yet to be standardized hardware.

Quantum cryptography deals with three distinct issues:

1 - Since quantum machines will not be available or standardized in the very near future (say, before 2035), theoretical efforts are being made to proof standard cryptographic practices against attacks that use these new systems. This area is often referred to as post-quantum cryptography (PQC). Cryptographers have raised concerns about the potential vulnerability of general-use cryptographic systems to quantum attacks, particularly those facilitated by Shor's algorithm, which can efficiently factor large numbers and compute discrete logarithms, the mathematical foundations of many public-key cryptosystems. Such systems rely on the expectation that available technology cannot solve certain mathematical problems on a time scale short enough to recover the secret while it is still useful. That expectation is eroded by quantum parallelism and Grover's algorithm, which creates the need to develop PQC algorithms.

2 - It is also crucial to develop new algorithms that not only work on the new quantum computers but make use of their specifications. As of 2024, advances are being made especially in hardware for secure signal transmission, protection, and speed using quantum properties.

3 - Lastly, the development of quantum computing technology and of quantum-resistant algorithms must be followed closely, to prevent these machines from breaking the security expectations of legacy systems (much as computers in general made easy work of non-digital cryptography, as we saw with the German Enigma machines).

With the new technology comes new terminology, such as qubit cryptanalysis and Quantum Key Exchange (the latter is the most common use of the term "quantum cryptography", and is the one discussed here).

Quantum Key Exchange

With Quantum Key Exchange, also called quantum key distribution (QKD),[3] you use through-air free-space optical links[4][5] or a single optical fiber to send one photon at a time, oriented at one of four angles. We can describe them as horizontally polarized ( - ), vertically polarized ( | ), Ordinary ( \ ) or Sinister ( / ). To detect these photons, you can use either an ordinary filter ( \ slot) or a vertical filter ( | slot).

An ordinary filter has the property that it will always pass an ordinary polarized photon and always block a sinister polarized photon (the photon is correctly aligned with the slot in the Ordinary case, and at right angles to the slot in the Sinister case).

A vertical filter has similar properties for its two associated photons: it will always pass a vertical photon, and always block a horizontal one.

This leaves four cases: '|' and '-' photons meeting an ordinary filter, and '\' and '/' photons meeting a vertical one. In these cases nobody knows the outcome in advance: the photon might make it through the slot or it might not, and the result is entirely random.

For this reason, the sender will send 'n' photons to the recipient, and the recipient will then report back which of the two possible filters (chosen at random) he tried for each photon.

If the recipient guessed the right filter, he now knows which of two possible orientations the photon was in. If he guessed wrong, he has no idea. The sender therefore responds to the recipient's list with a second list: the decisions the recipient got right. By discarding the "wrong" filter choices, both sender and recipient now know which of two possible orientations each of the remaining photons had, and can convert pass/fail into logic 1 or 0 to build a binary string (which can then be used as an encryption key).
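The exchange described so far can be sketched as a small simulation. It is a simplified model under stated assumptions: the channel is ideal, there is no eavesdropper, the two filter families are written as "+" (sorting '|' from '-') and "x" (sorting '\' from '/'), and the function name `exchange` is made up for this example.

```python
import random


def exchange(n, rng):
    """Simulate n photons and return the sender's and recipient's sifted keys."""
    sender_bits, sender_filters = [], []
    recipient_bits, recipient_filters = [], []
    for _ in range(n):
        bit = rng.randint(0, 1)            # which of the two orientations
        s_filter = rng.choice("+x")        # which family the photon belongs to
        r_filter = rng.choice("+x")        # recipient's random filter choice
        if r_filter == s_filter:
            measured = bit                 # matching filter: certain result
        else:
            measured = rng.randint(0, 1)   # wrong filter: entirely random
        sender_bits.append(bit)
        sender_filters.append(s_filter)
        recipient_bits.append(measured)
        recipient_filters.append(r_filter)

    # Public discussion: keep only positions where the filter was right.
    keep = [i for i in range(n) if sender_filters[i] == recipient_filters[i]]
    sender_key = [sender_bits[i] for i in keep]
    recipient_key = [recipient_bits[i] for i in keep]
    return sender_key, recipient_key


sender_key, recipient_key = exchange(200, random.Random(7))
print(len(sender_key), sender_key == recipient_key)
```

On average about half of the photons survive the discussion, since the recipient picks the right filter half of the time.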

However, this *only* works if the cable between the sender and the recipient is completely unbroken - because it is impossible to route, regenerate or otherwise manipulate the photons sent without losing the delicate orientation information that is the hub of the scheme.

Anybody who tries to measure the photons en route must pick the correct filter. If he picks the wrong one, he is unable to tell the difference between a genuine pass and a misaligned photon that happens to have gotten through the slot, or between a genuine block and a misaligned photon that was blocked. He therefore cannot tell what orientation the photon was in, and the eventual conversation between the recipient and sender about the orientation of filters will show differences between the two sets of data, revealing that an eavesdropper has intercepted photons.
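A rough simulation shows how such an eavesdropper reveals himself. The model below is an assumption-laden sketch: the eavesdropper measures every photon with a randomly chosen filter and sends on a fresh photon matching what he saw, the channel is otherwise ideal, and the names `measure` and `sifted_error_rate` are hypothetical.

```python
import random


def measure(bit, photon_filter, chosen_filter, rng):
    """A matching filter reads the bit; a mismatched one gives a random result."""
    return bit if photon_filter == chosen_filter else rng.randint(0, 1)


def sifted_error_rate(n, eavesdrop, rng):
    """Fraction of kept positions where sender and recipient disagree."""
    errors = kept = 0
    for _ in range(n):
        bit, s_filter = rng.randint(0, 1), rng.choice("+x")
        photon_bit, photon_filter = bit, s_filter
        if eavesdrop:
            e_filter = rng.choice("+x")
            photon_bit = measure(photon_bit, photon_filter, e_filter, rng)
            photon_filter = e_filter       # the re-sent photon matches his filter
        r_filter = rng.choice("+x")
        r_bit = measure(photon_bit, photon_filter, r_filter, rng)
        if r_filter == s_filter:           # position survives the discussion
            kept += 1
            errors += r_bit != bit
    return errors / kept if kept else 0.0


print(sifted_error_rate(2000, False, random.Random(1)))
print(sifted_error_rate(2000, True, random.Random(1)))
```

Without interception the kept positions always agree. With interception, roughly a quarter of them differ in this model, which sender and recipient can detect by comparing a sample of their bits.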

There are obvious problems with this scheme. The first is that sending a single photon down a light pipe can be unreliable: sometimes photons fail to reach the recipient and are read as a false "block". The second is that the obvious attack is a man-in-the-middle one: the attacker intercepts both the light pipe and the out-of-band data channel used for the discussion of filters and acceptance lists, then negotiates different Quantum Key Exchange keysets with the sender and the recipient independently. By converting the encrypted data between the keys each is expecting to see, he can read the message en route (provided, of course, that neither party can verify the transmissions in a way the man in the middle cannot intercept and replace with his own doctored version).

However, the problems have not stopped a commercial company selling a product which relies on QKE for its operation.

For further reading

- [1] Field Manual 34-40-2 "Chapter 5: Monoalphabetic Multiliteral Substitution Systems".

- [2] "The Syllabary Cipher".

- [3] Mart Haitjema. "A Survey of the Prominent Quantum Key Distribution Protocols". 2007.

- [4] Jian-Yu Wang et al. "Direct and full-scale experimental verifications towards ground–satellite quantum key distribution". 2012.

- [5] Sebastian Nauerth et al. "Air-to-ground quantum communication". 2013.

- Cryptodox: quantum cryptography

- Serious Flaw Emerges In Quantum Cryptography

- Quantiki: quantum key distribution

- "Commercial Quantum Cryptography Satellites Coming"

- "Breaking Quantum Cryptography's 150-Kilometer Limit: Scientists want to put an unbreakable-code generator on the International Space Station"

- "Is quantum key distribution safe against MITM attacks too?"

- "Quantum key distribution simulation"

Timeline of Notable Events

The desire to keep stored or sent information secret dates back to antiquity. As society developed, so did the application of cryptography. Below is a timeline of notable events related to cryptography.

BCE

- 3500s - The Sumerians develop cuneiform writing and the Egyptians develop hieroglyphic writing.

- 1500s - The Phoenicians develop an alphabet

- 600-500 - Hebrew scholars make use of simple monoalphabetic substitution ciphers (such as the Atbash cipher)

- c. 400 - Spartan use of scytale (alleged)

- c. 400BCE - Herodotus reports use of steganography in reports to Greece from Persia (tattoo on shaved head)

- 100-0 - Notable Roman ciphers such as the Caesar cipher.

1 - 1799 CE

- ca 1000 - Frequency analysis leading to techniques for breaking monoalphabetic substitution ciphers. It was probably developed among the Arabs, and was likely motivated by textual analysis of the Koran.

- 1450 - The Chinese develop wooden block movable type printing

- 1450-1520 - The Voynich manuscript, an example of a possibly encrypted illustrated book, is written.

- 1466 - Leon Battista Alberti invents polyalphabetic cipher, also the first known mechanical cipher machine

- 1518 - Johannes Trithemius' book on cryptology

- 1553 - Bellaso invents the (misnamed) Vigenère cipher

- 1585 - Vigenère's book on ciphers

- 1586 - Cryptanalysis used by spy master Sir Francis Walsingham to implicate Mary Queen of Scots in the Babington Plot to murder Queen Elizabeth I of England. Queen Mary was eventually executed.

- 1614 - Scotsman John Napier (1550-1617) published a paper outlining his discovery of the logarithm. Napier also invented an ingenious system of moveable rods (referred to as Napier's Rods or Napier's bones) which were a precursor of the slide rule. These were based on logarithms and allowed the operator to multiply, divide and calculate square and cube roots by moving the rods around and placing them in specially constructed boards.

- 1641 - Wilkins' Mercury (English book on cryptography)

- 1793 - Claude Chappe establishes the first long-distance semaphore "telegraph" line

- 1795 - Thomas Jefferson invents the Jefferson disk cipher, reinvented over 100 years later by Etienne Bazeries and widely used as a tactical cypher by the US Army.

1800-1899

- 1809-14 - George Scovell's work on Napoleonic ciphers during the Peninsular War

- 1831 - Joseph Henry proposes and builds an electric telegraph

- 1835 - Samuel Morse develops the Morse code.

- c. 1854 - Babbage's method for breaking polyalphabetic cyphers (pub 1863 by Kasiski); the first known break of a polyalphabetic cypher. Done for the English during the Crimean War, a general attack on Vigenère's autokey cipher (the 'unbreakable cypher' of its time) as well as the much weaker cypher that is today termed "the Vigenère cypher". The advance was kept secret and was, in essence, reinvented somewhat later by the Prussian Friedrich Kasiski, after whom it is named.

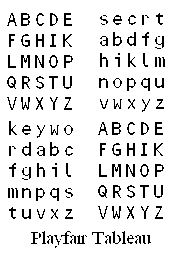

- 1854 - Wheatstone invents Playfair cipher

- 1883 - Auguste Kerckhoffs publishes La Cryptographie militaire, containing his celebrated "laws" of cryptography

- 1885 - Beale ciphers published

- 1894 - The Dreyfus Affair in France involves the use of cryptography, and its misuse, re: false documents.

1900 - 1949

- c 1915 - William Friedman applies statistics to cryptanalysis (coincidence counting, etc.)

- 1917 - Gilbert Vernam develops first practical implementation of a teletype cipher, now known as a stream cipher and, later, with Mauborgne the one-time pad

- 1917 - Zimmermann telegram intercepted and decrypted, advancing U.S. entry into World War I

- 1919 - Weimar Germany Foreign Office adopts (a manual) one-time pad for some traffic

- 1919 - Hebern invents/patents first rotor machine design -- Damm, Scherbius and Koch follow with patents the same year

- 1921 - Washington Naval Conference - U.S. negotiating team aided by decryption of Japanese diplomatic telegrams

- c. 1924 - MI8 (Yardley, et al.) provide breaks of assorted traffic in support of US position at Washington Naval Conference

- 1929 - U.S. Secretary of State Henry L. Stimson shuts down State Department cryptanalysis "Black Chamber", saying "Gentlemen do not read each other's mail."

- 1931 - The American Black Chamber by Herbert O. Yardley is published, revealing much about American cryptography

- c. 1932 - first break of German Army Enigma machine by Rejewski in Poland

- 1940 - break of Japan's Purple machine cipher by SIS team

- December 7, 1941 - U.S. Naval base at Pearl Harbor surprised by Japanese attack, despite U.S. breaks into several Japanese cyphers. U.S. enters World War II

- June 1942 - Battle of Midway. Partial break into Dec 41 edition of JN-25 leads to successful ambush of Japanese carriers and to the momentum killing victory.

- April 1943 - Admiral Yamamoto, architect of Pearl Harbor attack, is assassinated by U.S. forces who know his itinerary from decrypted messages

- April 1943 - Max Newman, Wynn-Williams, and their team (including Alan Turing) at the secret Government Code and Cypher School ('Station X'), Bletchley Park, Bletchley, England, complete the "Heath Robinson". This is a specialized machine for cypher-breaking, not a general-purpose calculator or computer.

- December 1943 - The Colossus was built by Dr Thomas Flowers at The Post Office Research Laboratories in London to crack the German Lorenz cipher (SZ42). Colossus was used at Bletchley Park during WW II as a successor to April's 'Robinson's. Although 10 were eventually built, they were unfortunately destroyed immediately after they had finished their work: the design was so advanced that there was to be no possibility of it falling into the wrong hands. The Colossus design was the first electronic digital computer and was somewhat programmable. An epoch in machine capability.

- 1944 - patent application filed on SIGABA code machine used by U.S. in WW II. Kept secret, finally issued in 2001

- 1946 - VENONA's first break into Soviet espionage traffic from early 1940s

- 1948 - Claude Shannon writes a paper that establishes the mathematical basis of information theory

- 1949 - Shannon's Communication Theory of Secrecy Systems pub in Bell Labs Technical Journal, based on work done during WWII.

1950 - 1999

- 1952 - U.S. National Security Agency founded, subsuming the US Army and US Navy 'girls school' departments

- 1967 - David Kahn's The Codebreakers is published

- June 8, 1967 - USS Liberty incident in which a U.S. SIGINT ship is attacked by Israel, apparently by mistake, though some continue to dispute this

- 1968 - John Anthony Walker walks into the Soviet Union's embassy in Washington and sells information on KL-7 cipher machine. The Walker spy ring operates until 1985

- January 23, 1968 - USS Pueblo, another SIGINT ship, is captured by North Korea

- 1969 - The first hosts of ARPANET, Internet's ancestor, are connected

- 1974? - Horst Feistel develops the Feistel network block cipher design at IBM

- 1976 - the Data Encryption Standard was published as an official Federal Information Processing Standard (FIPS) for the US

- 1976 - Diffie and Hellman publish New Directions in Cryptography article

- 1977 - RSA public key encryption invented at MIT

- 1981 - Richard Feynman proposes quantum computers. The main application he had in mind was the simulation of quantum systems, but he also mentioned the possibility of solving other problems.

- 1986 - In the wake of an increasing number of break-ins to government and corporate computers, the US Congress passes the Computer Fraud and Abuse Act, which makes it a crime to break into computer systems. The law, however, does not cover juveniles.

- 1988 - First optical chip developed, it uses light instead of electricity to increase processing speed.

- 1989 - Tim Berners-Lee and Robert Cailliau built the prototype system which became the World Wide Web at CERN

- 1991 - Phil Zimmermann releases the public key encryption program PGP along with its source code, which quickly appears on the Internet.

- 1992 - Release of the movie Sneakers, in which security experts are blackmailed into stealing a universal decoder for encryption systems (no such decoder is known, likely because it is impossible).

- 1994 - 1st ed of Bruce Schneier's Applied Cryptography is published

- 1994 - Secure Sockets Layer (SSL) encryption protocol released by Netscape

- 1994 - Peter Shor devises an algorithm which lets quantum computers determine the factorization of large integers quickly. This is the first interesting problem for which quantum computers promise a significant speed-up, and it therefore generates a lot of interest in quantum computers.

- 1994 - DNA computing proof of concept on toy traveling salesman problem; a method for input/output still to be determined.

- 1994 - Russian crackers siphon $10 million from Citibank and transfer the money to bank accounts around the world. Vladimir Levin, the 30-year-old ringleader, uses his work laptop after hours to transfer the funds to accounts in Finland and Israel. Levin stands trial in the United States and is sentenced to three years in prison. Authorities recover all but $400,000 of the stolen money.

- 1994 - Formerly proprietary trade secret, but not patented, RC4 cipher algorithm is published on the Internet

- 1994 - first RSA Factoring Challenge from 1977 is decrypted as The Magic Words are Squeamish Ossifrage

- 1995 - NSA publishes the SHA1 hash algorithm as part of its Digital Signature Standard; SHA0 had a flaw corrected by SHA1

- 1997 - Ciphersaber, an encryption system based on RC4 that is simple enough to be reconstructed from memory, is published on Usenet

- 1998 - RIPE project releases final report

- October 1998 - Digital Millennium Copyright Act (DMCA) becomes law in U.S., criminalizing production and dissemination of technology that can circumvent measures taken to protect copyright

- October 1999 - DeCSS, a computer program capable of decrypting content on a DVD, is published on the Internet

- 1999 - Bruce Schneier develops the Solitaire cipher, a way to allow field agents to communicate securely without having to rely on electronics or having to carry incriminating tools like a one-time pad. Unlike all previous manual encryption techniques -- except the one-time pad -- this one is resistant to automated cryptanalysis. It is published in Neal Stephenson's Cryptonomicon (1999).

2000 and beyond

- January 14, 2000 - U.S. Government announces that restrictions on export of cryptography are relaxed (although not removed). This allows many US companies to stop the long-running, and rather ridiculous, process of having to create US and international copies of their software.

- March 2000 - President Clinton says he doesn't use e-mail to communicate with his daughter, Chelsea Clinton, at college because he doesn't think the medium is secure.

- September 6, 2000 - RSA Security Inc. released their RSA algorithm into the public domain, a few days in advance of their US patent 4405829 expiring. Following the relaxation of the U.S. government export restrictions, this removed one of the last barriers to the world-wide distribution of much software based on cryptographic systems. It should be noted that the IDEA algorithm is still under patent and also that government restrictions still apply in some places.

- 2000 - U.K. Regulation of Investigatory Powers Act requires anyone to supply their cryptographic key to a duly authorized person on request

- 2001 - Belgian Rijndael algorithm selected as the U.S. Advanced Encryption Standard after a 5 year public search process by National Institute for Standards and Technology (NIST)

- September 11, 2001 - U.S. response to terrorist attacks hampered by lack of secure communications

- November 2001 - Microsoft and its allies vow to end "full disclosure" of security vulnerabilities by replacing it with "responsible" disclosure guidelines.

- 2002 - NESSIE project releases final report / selections

- 2003 - CRYPTREC project releases 2003 report / recommendations

- 2004 - the hash MD5 is shown to be vulnerable to practical collision attack

- 2005 - potential for attacks on SHA1 demonstrated

- 2005 - agents from the U.S. FBI demonstrate their ability to crack WEP using publicly available tools

- 2007 - NIST announces the NIST hash function competition

- 2012 - NIST announces Keccak as the winner of the NIST hash function competition

- 2015 - year by which NIST suggests that 80-bit keys for symmetric key cyphers be phased out. Asymmetric key cyphers require longer keys which have different vulnerability parameters.

Goals of Cryptography

Cryptography is the science of secure communication in the presence of third parties (sometimes called "adversaries").

Modern cryptographers and cryptanalysts work in many areas including

- data confidentiality

- data integrity

- authentication

- forward secrecy

- end-to-end auditable voting systems

- digital currency

Classical cryptography focused on "data confidentiality": keeping pieces of information secret, i.e. designing technical systems such that an observer can infer as little information as possible (ideally none) from observing the system. The motivation for this is that the owner of the system wants to prevent the observer from taking advantage (e.g. monetary, influential, emotional) of the possible intelligence.

This secrecy or hiding is achieved by removing contextual information from the system's observable state and/or behaviour, without which the observer cannot gain intelligence about the system.

Examples

The term is very often used in the context of message exchange between two entities, but is of course not restricted to this case.

Hiding System State Alone

It may be advantageous for an ATM to hide the information as to how much cash is still available in the machine. It may, e.g., only disclose to the holder of a valid debit card the information that no more bank notes are available from it.

Hiding Communication Content

Two companies doing business with each other may not wish to disclose the information on pricing of their products to third parties tapping into their communications.

Hiding the Fact of Communicating

Well known entities with well known fields of activity may wish to hide the fact that they are communicating at all, since an observer aware of their fields of activity may be able to infer information from the mere fact that some communication is happening.

Symmetric Ciphers

A symmetric key cipher (also called a secret-key cipher, a one-key cipher, a private-key cipher, or a shared-key cipher) is one that uses the same (necessarily secret) key to encrypt messages as it does to decrypt messages.

Until the invention of asymmetric key cryptography (commonly termed "public key / private key" crypto) in the 1970s, all ciphers were symmetric. Each party to the communication needed a key to encrypt a message; and a recipient needed a copy of the same key to decrypt the message. This presented a significant problem, as it required all parties to have a secure communication system (e.g. face-to-face meeting or secure courier) in order to distribute the required keys. The number of secure transfers required rises impossibly, and wholly impractically, quickly with the number of participants.
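To put a number on that growth, assume every pair of participants needs its own secret key (an assumption made for this illustration; other arrangements are possible). Then n participants need n(n-1)/2 keys, each of which must be transferred securely. The helper name below is made up for the example.

```python
def pairwise_keys(participants):
    """Number of distinct keys if every pair of participants shares one key."""
    return participants * (participants - 1) // 2


for n in (2, 10, 100, 1000):
    print(n, "participants need", pairwise_keys(n), "keys")
```

Ten participants already need 45 keys, and a thousand need 499,500.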

Formal Definition

Any cryptosystem based on a symmetric key cipher conforms to the following definition:

- M : message to be enciphered

- K : a secret key

- E : enciphering function

- D : deciphering function

- C : enciphered message. C := E(M, K)

- For all M, C, and K, M = D(C,K) = D(E(M,K),K)
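The definition can be checked against a deliberately weak toy cipher. The byte-shift cipher below is an assumption chosen only because it is short; it offers no real security. The names `E`, `D`, `M`, `K` and `C` follow the definition above.

```python
def E(M: bytes, K: int) -> bytes:
    """Encipher: add the key to every byte, wrapping around at 256."""
    return bytes((b + K) % 256 for b in M)


def D(C: bytes, K: int) -> bytes:
    """Decipher: subtract the same key from every byte."""
    return bytes((b - K) % 256 for b in C)


M = b"attack at dawn"
K = 42
C = E(M, K)
print(C != M, D(C, K) == M)
```

The same key K is used in both directions, and D undoes E for every message, which is exactly the last line of the definition.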

Reciprocal Ciphers

Some shared-key ciphers are also "reciprocal ciphers." A reciprocal cipher applies the same transformation to decrypt a message as the one used to encrypt it. In the language of the formal definition above, E = D for a reciprocal cipher.

An example of a reciprocal cipher is Rot 13, in which the same alphabetic shift is used in both cases.

The xor-cipher (often used with one-time-pads) is another reciprocal cipher.[1]

Reciprocal ciphers have the advantage that the decoding machine can be set up exactly the same as the encoding machine -- reciprocal ciphers do not require the operator to remember to switch between "decoding" and "encoding".[2]
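Both examples can be tried in a few lines. ROT13 is available through Python's standard `codecs` module; the XOR helper is a hypothetical sketch that repeats a short key, which makes it a toy and not a one-time pad.

```python
import codecs


def rot13(text: str) -> str:
    """Shift each letter 13 places; applying it twice restores the text."""
    return codecs.encode(text, "rot_13")


def xor_cipher(data: bytes, key: bytes) -> bytes:
    """XOR each byte with the key (repeated as needed)."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


secret = rot13("Hello")
print(secret, rot13(secret))

key = b"\x13\x37\xc0\xde"
hidden = xor_cipher(b"reciprocal", key)
print(xor_cipher(hidden, key))
```

In both cases the decrypting call is the very same function as the encrypting call, which is the E = D property.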

Symmetric Cypher Advantages

Symmetric key ciphers typically have much less computational overhead than asymmetric ciphers; the difference in computing overhead per character can sometimes be several orders of magnitude[3]. As such they are still used for bulk encryption of files and data streams for online applications.

Use

To set up a secure communication session between two parties, the following actions take place:

- Alice tells Bob (in cleartext) that she wants a secure connection.

- Bob generates a single use (session), public/private (asymmetric) key pair (Kpb, Kpr).

- Alice generates a single use (session) symmetric key. This will be the shared secret (Ks).

- Bob sends Alice the public key (Kpb).

- Alice encrypts her shared session key Ks with the public key Kpb, Ck := E(Ks, Kpb), and sends it to Bob.

- Bob decrypts the message with his private key to obtain the shared session key, Ks := D(Ck, Kpr).

- Now Alice and Bob have a shared secret (symmetric key) to secure communication on this connection for this session.

- Either party can encrypt a message simply by C := E(M, Ks) and decrypt it by M = D(C, Ks) = D(E(M, Ks), Ks).

This is the basis for Diffie–Hellman key exchange and its more advanced successors (see Transport Layer Security).
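The steps above can be walked through with toy components. Everything in this sketch is an assumption made for illustration: the asymmetric step is textbook RSA with tiny primes, the symmetric step XORs the message with a SHA-256 digest of Ks, and none of it is secure. It needs Python 3.8 or later for the modular inverse via `pow`.

```python
import hashlib
import secrets

# Bob: single-use key pair (textbook RSA, tiny primes, illustration only).
p, q, e = 61, 53, 17
n = p * q
d = pow(e, -1, (p - 1) * (q - 1))      # private exponent
Kpb, Kpr = (e, n), (d, n)


def E_sym(M: bytes, Ks: int) -> bytes:
    """Toy symmetric cipher: XOR with a keystream derived from Ks."""
    stream = hashlib.sha256(str(Ks).encode()).digest()
    return bytes(b ^ stream[i % len(stream)] for i, b in enumerate(M))


D_sym = E_sym                           # XOR is its own inverse

# Alice: picks a session key and encrypts it with Bob's public key.
Ks_alice = secrets.randbelow(n - 2) + 2
Ck = pow(Ks_alice, Kpb[0], Kpb[1])      # Ck := E(Ks, Kpb)

# Bob: recovers the session key with his private key.
Ks_bob = pow(Ck, Kpr[0], Kpr[1])        # Ks := D(Ck, Kpr)

# Both sides now use the shared key for the bulk data.
C = E_sym(b"hello Bob", Ks_alice)
M = D_sym(C, Ks_bob)
print(Ks_alice == Ks_bob, M)
```

Only Ck and C cross the wire; the session key Ks itself is never sent in the clear.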


Asymmetric Ciphers

In cryptography, an asymmetric key algorithm uses a pair of different, though related, cryptographic keys to encrypt and decrypt. The two keys are related mathematically; a message encrypted by the algorithm using one key can be decrypted by the same algorithm using the other. In a sense, one key "locks" a lock (encrypts); but a different key is required to unlock it (decrypt).

Some, but not all, asymmetric key cyphers have the "public key" property, which means that there is no known effective method of finding the other key in a key pair, given knowledge of one of them. This group of algorithms is very useful, as it entirely evades the key distribution problem inherent in all symmetric key cyphers and some of the asymmetric key cyphers. One may simply publish one key while keeping the other secret. They form the basis of much of modern cryptographic practice.

A Postal Analogy

An analogy which can be used to understand the advantages of an asymmetric system is to imagine two people, Alice and Bob, sending a secret message through the public mail. In this example, Alice has the secret message and wants to send it to Bob, after which Bob sends a secret reply.

With a symmetric key system, Alice first puts the secret message in a box, and then locks the box using a padlock to which she has a key. She then sends the box to Bob through regular mail. When Bob receives the box, he uses an identical copy of Alice's key (which he has somehow obtained previously) to open the box, and reads the message. Bob can then use the same padlock to send his secret reply.

In an asymmetric key system, Bob and Alice have separate padlocks. Firstly, Alice asks Bob to send his open padlock to her through regular mail, keeping his key to himself. When Alice receives it she uses it to lock a box containing her message, and sends the locked box to Bob. Bob can then unlock the box with his key and read the message from Alice. To reply, Bob must similarly get Alice's open padlock to lock the box before sending it back to her. The critical advantage in an asymmetric key system is that Bob and Alice never need send a copy of their keys to each other. This substantially reduces the chance that a third party (perhaps, in the example, a corrupt postal worker) will copy a key while it is in transit, allowing said third party to spy on all future messages sent between Alice and Bob. In addition, if Bob were to be careless and allow someone else to copy his key, Alice's messages to Bob will be compromised, but Alice's messages to other people would remain secret, since the other people would be providing different padlocks for Alice to use...

Actual Algorithms - Two Linked Keys

Fortunately cryptography is not concerned with actual padlocks, but with encryption algorithms which aren't vulnerable to hacksaws, bolt cutters, or liquid nitrogen attacks.

Not all asymmetric key algorithms operate in precisely this fashion. The most common have the property that Alice and Bob own two keys; neither of which is (so far as is known) deducible from the other. This is known as public-key cryptography, since one key of the pair can be published without affecting message security. In the analogy above, Bob might publish instructions on how to make a lock ("public key"), but the lock is such that it is impossible (so far as is known) to deduce from these instructions how to make a key which will open that lock ("private key"). Those wishing to send messages to Bob use the public key to encrypt the message; Bob uses his private key to decrypt it.

Weaknesses

Of course, there is the possibility that someone could "pick" Bob's or Alice's lock. Unlike the case of the one-time pad or its equivalents, there is no currently known asymmetric key algorithm which has been proven to be secure against a mathematical attack. That is, it is not known to be impossible that some relation between the keys in a key pair, or a weakness in an algorithm's operation, might be found which would allow decryption without either key, or using only the encryption key. The security of asymmetric key algorithms is based on estimates of how difficult the underlying mathematical problem is to solve. Such estimates have changed both with the decreasing cost of computer power, and with new mathematical discoveries.

Weaknesses have been found in promising asymmetric key algorithms in the past. The 'knapsack packing' algorithm was found to be insecure when an unsuspected attack came to light. Recently, some attacks based on careful measurements of the exact amount of time it takes known hardware to encrypt plain text have been used to simplify the search for likely decryption keys. Thus, use of asymmetric key algorithms does not ensure security; it is an area of active research to discover and protect against new and unexpected attacks.

Another potential weakness in the process of using asymmetric keys is the possibility of a 'Man in the Middle' attack, whereby the communication of public keys is intercepted by a third party and modified to provide the third party's own public keys instead. To avoid suspicion, however, every encrypted response must also be intercepted, decrypted and re-encrypted using the correct public key, which makes this attack difficult to implement in practice.

A Brief History

The first known asymmetric key algorithm was invented by Clifford Cocks of GCHQ in the UK. It was not made public at the time, and was reinvented by Rivest, Shamir, and Adleman at MIT in 1977. It is usually referred to as RSA as a result. RSA relies for its security on the difficulty of factoring very large integers. A breakthrough in that field would cause considerable problems for RSA's security. Currently, RSA is vulnerable to an attack by factoring the 'modulus' part of the public key, even when keys are properly chosen, for keys shorter than perhaps 700 bits. Most authorities suggest that 1024 bit keys will be secure for some time, barring a fundamental breakthrough in factoring practice or practical quantum computers, but others favor longer keys.
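The link between factoring and RSA's security can be seen with a deliberately tiny key. The sketch below assumes a textbook RSA public key (e = 17, modulus 3233) made up for the example; real moduli are far too large for trial division, which is the whole point. It needs Python 3.8 or later for the modular inverse via `pow`.

```python
def factor(n):
    """Find two factors of n by trial division (feasible only for tiny n)."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n has no non-trivial factors")


# Public information only: exponent, modulus, and an intercepted ciphertext.
e, n = 17, 3233
ciphertext = pow(65, e, n)             # someone encrypted the number 65

# The attacker factors the modulus and rebuilds the private exponent.
p, q = factor(n)
d = pow(e, -1, (p - 1) * (q - 1))
recovered = pow(ciphertext, d, n)
print(p, q, d, recovered)
```

Once the modulus is factored, the private key follows immediately, so the key is only as strong as the modulus is hard to factor.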

At least two other asymmetric algorithms were invented after the GCHQ work, but before the RSA publication. These were the Ralph Merkle puzzle cryptographic system and the Diffie-Hellman system. Well after RSA's publication, Taher Elgamal invented the Elgamal discrete log cryptosystem which relies on the difficulty of inverting logs in a finite field. It is used in the SSL, TLS, and DSA protocols.

A relatively new addition to the class of asymmetric key algorithms is elliptic curve cryptography. While it is more complex computationally, many believe it to represent a more difficult mathematical problem than either the factorisation or discrete logarithm problems.

Practical limitations and hybrid cryptosystems

One drawback of asymmetric key algorithms is that they are much slower (factors of 1000+ are typical) than 'comparably' secure symmetric key algorithms. In many quality crypto systems, both algorithm types are used; they are termed 'hybrid systems'. PGP is an early and well-known hybrid system. The receiver's public key encrypts a symmetric algorithm key which is used to encrypt the main message. This combines the virtues of both algorithm types when properly done.

We discuss asymmetric ciphers in much more detail later in the Public Key Overview and following sections of this book.

Random number generation

The generation of random numbers is essential to cryptography. One of the most difficult aspects of cryptographic algorithms is depending on, or generating, truly random information. This is problematic, since a deterministic computation cannot produce truly random data; in particular, there is no way to do so on a finite state machine such as a computer without an external source of randomness.

There are generally two kinds of random number generators: non-deterministic random number generators, sometimes called "true random number generators" (TRNG), and deterministic random number generators, also called pseudorandom number generators (PRNG).[8]

Many high-quality cryptosystems use both: a hardware random-number generator periodically re-seeds a deterministic random number generator.

Quantum mechanical theory suggests that some physical processes are inherently random (though collecting and using such data presents problems), but deterministic mechanisms, such as computers, cannot be. Any stochastic process (generation of random numbers) simulated on a computer, however, is not truly random, but only pseudorandom.

Within the limitations of pseudorandom generators, any quality pseudorandom number generator must:

- have a uniform distribution of values, in all dimensions

- have no detectable pattern, i.e. generate numbers with no correlations between successive numbers

- have a very long cycle length

- have no, or easily avoidable, weak initial conditions which produce patterns or short cycles
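Cycle length and weak initial conditions are easy to see in a deliberately tiny generator. The sketch below uses a linear congruential generator with a modulus of 16; the parameters and the helper names `lcg` and `cycle_length` are chosen purely for illustration and do not correspond to any real library.

```python
def lcg(seed, a, c, m):
    """Linear congruential generator: state -> (a * state + c) mod m."""
    state = seed
    while True:
        state = (a * state + c) % m
        yield state


def cycle_length(seed, a, c, m):
    """Count how many steps the generator takes before it repeats a value."""
    first_seen = {}
    for step, value in enumerate(lcg(seed, a, c, m)):
        if value in first_seen:
            return step - first_seen[value]
        first_seen[value] = step


full = cycle_length(seed=1, a=5, c=3, m=16)       # visits all 16 states
short = cycle_length(seed=1, a=3, c=0, m=16)      # poor parameters: short cycle
stuck = cycle_length(seed=0, a=3, c=0, m=16)      # weak seed: never leaves 0
```

With a modulus this small even the best parameters repeat after 16 outputs; a real generator needs the same properties at a vastly larger scale.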

Methods of Pseudorandom Number Generation

Keeping in mind that we are dealing with pseudorandom number generation (i.e. numbers generated by a finite state machine, such as a computer), there are various ways to generate numbers that appear random.

In C and C++ the function rand() returns a pseudo-random integer between zero and RAND_MAX (an implementation-defined constant). The generator is seeded with the srand() function; if srand() is never called, rand() behaves as if it had been seeded with 1 and returns the same sequence of numbers every time the program is restarted. Many such library generators have short cycle lengths and predictable output, and are not usable for cryptographic purposes.
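The same behaviour can be observed in Python, used here instead of C only to keep the example short. The standard `random` module is a seeded deterministic generator in the same spirit as rand(), while the `secrets` module draws from the operating system's random source. The helper name `first_values` is hypothetical.

```python
import random
import secrets


def first_values(seed, count=5):
    """Return the first few outputs of a generator started from `seed`."""
    rng = random.Random(seed)
    return [rng.randrange(2**32) for _ in range(count)]


# Same seed, same sequence: anyone who learns the seed can replay the output.
run_a = first_values(1)
run_b = first_values(1)

# For keys and nonces, ask the operating system instead of a seeded PRNG.
key = secrets.token_bytes(16)
```

Here `run_a` and `run_b` are identical lists, which is exactly why a seeded general-purpose generator is unsuitable for producing secrets.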

"Numerical Recipes in C" reviews several random number generators and recommends a modified version of the DES cipher as its highest-quality generator. "Practical Cryptography" (Ferguson and Schneier) recommends a design the authors named Fortuna; it supersedes their earlier design called Yarrow.

Methods of nondeterministic number generation

As of 2004, the best random number generators have 3 parts: an unpredictable nondeterministic mechanism, entropy assessment, and conditioner. The nondeterministic mechanism (also called the entropy source) generates blocks of raw biased bits. The entropy assessment part produces a conservative estimate of the min-entropy of some block of raw biased bits. The conditioner (also called a whitener, an unbiasing algorithm, or a randomness extractor) distills the block of raw bits into a much smaller block of conditioned output bits, eliminating any systematic bias; the output block has half as many bits as the estimated entropy (in bits) of the raw biased bits. If the estimate is good, the conditioned output bits are unbiased full-entropy bits even if the nondeterministic mechanism degrades over time. In practice, the entropy assessment is the difficult part.[9]
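The assessment and conditioning steps can be sketched as follows. This is a rough illustration under loud assumptions: the entropy estimate is a naive most-common-byte calculation, which is far less conservative than a real assessment, the conditioner is simply SHA-256, and the function names are hypothetical. The entropy source itself is left out; `raw` stands for whatever block of biased bytes it produced.

```python
import hashlib
import math
from collections import Counter


def estimate_min_entropy_bits(raw: bytes) -> float:
    """Naive estimate: -log2(frequency of the most common byte) per byte."""
    if not raw:
        return 0.0
    p_max = max(Counter(raw).values()) / len(raw)
    return -math.log2(p_max) * len(raw)


def condition(raw: bytes) -> bytes:
    """Hash the raw block, keeping half as many bits as the entropy estimate."""
    estimated_bits = estimate_min_entropy_bits(raw)
    out_bytes = int(estimated_bits // 2) // 8
    out_bytes = min(out_bytes, hashlib.sha256().digest_size)  # cap at 32 bytes
    return hashlib.sha256(raw).digest()[:out_bytes]


biased = (b"\x00\x00\x00\x01") * 16   # 64 bytes, three quarters of them zero
constant = b"\x00" * 100              # no variability at all
```

For the `biased` block the estimate is only about 26 bits, so the conditioner emits a single byte, and for the `constant` block it emits nothing. A source that looks busy may still contain very little entropy.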

References

- ↑ Wikipedia: Symmetric-key algorithm#Reciprocal cipher

- ↑ Greg Goebel. "The Mechanization of Ciphers". 2018.

- ↑ http://s3.amazonaws.com/academia.edu.documents/33551175/IJETAE_1211_02.pdf?AWSAccessKeyId=AKIAIWOWYYGZ2Y53UL3A&Expires=1499689317&Signature=EavACFmNNlzcPXDa5kVZS9rh7Yw%3D&response-content-disposition=inline%3B%20filename%3DDES_AES_and_Blowfish_Symmetric_Key_Crypt.pdf

- ↑ https://www.schneier.com/blog/archives/2004/10/the_legacy_of_d.html

- ↑ https://www.schneier.com/academic/blowfish/

- ↑ https://books.google.com/books?hl=en&lr=&id=fNaoCAAAQBAJ&oi=fnd&pg=PA1&dq=speed+advantages+in+symmetric+ciphers+aes&ots=7hPPzLQjm5&sig=kaBEJW49jdonFWKin0-p6ZhROCA#v=onepage&q=speed%20advantages%20in%20symmetric%20ciphers%20aes&f=false

- ↑ http://s3.amazonaws.com/academia.edu.documents/33551175/IJETAE_1211_02.pdf?AWSAccessKeyId=AKIAIWOWYYGZ2Y53UL3A&Expires=1499689317&Signature=EavACFmNNlzcPXDa5kVZS9rh7Yw%3D&response-content-disposition=inline%3B%20filename%3DDES_AES_and_Blowfish_Symmetric_Key_Crypt.pdf

- ↑ NIST. "Random number generation".

- ↑ John Kelsey. "Entropy and Entropy Sources in X9.82" NIST. 2004. "Are you measuring what you think you're measuring?" "How much of sample variability is entropy, how much is just complexity?"

Further Reading

- Cryptography/Random Quality

- RFC 4086 "Randomness Requirements for Security"

- Random Number—from MathWorld

- Statistics/Numerical Methods/Random Number Generation

- Algorithms/Randomization

- Random number generation standards development bodies

Hashes

A digest, sometimes simply called a hash, is the result of a hash function, a specific mathematical function or algorithm that can be described as H = hash(M), where M is the input message and H is the digest. "Hashing" is required to be a deterministic process, so every time the same input block is "hashed" by the same hash function, the resulting digest or hash is the same, maintaining a verifiable relation with the input data. This makes this type of algorithm useful for information security.

Other processes, called cryptographic hashes, function similarly to hashing but require added security, in the form of a level of guarantee that the input data cannot feasibly be recovered from the generated hash value, i.e. that there is no useful inverse hash function.

This property can be formally expanded to provide the following properties of a secure hash:

- Preimage resistant: given H, it should be hard to find M such that H = hash(M).

- Second preimage resistant: given an input m1, it should be hard to find another input, m2 (not equal to m1), such that hash(m1) = hash(m2).

- Collision-resistant: it should be hard to find two different messages m1 and m2 such that hash(m1) = hash(m2). Because of the birthday paradox, a collision among n-bit digests is expected after only about 2^(n/2) attempts, so the hash function must have a larger image (roughly twice as many output bits) than is required for preimage resistance.
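The birthday effect mentioned in the last property can be observed directly by truncating a real hash to a few bits. The sketch below keeps only the top bits of SHA-256 so that a collision is cheap to find; the function names and the choice of counter strings as messages are assumptions made for illustration.

```python
import hashlib


def truncated_hash(message: bytes, bits: int) -> int:
    """Keep only the top `bits` bits of the SHA-256 digest."""
    digest = hashlib.sha256(message).digest()
    return int.from_bytes(digest, "big") >> (256 - bits)


def find_collision(bits: int):
    """Hash successive counter strings until two share a truncated digest."""
    seen = {}
    counter = 0
    while True:
        message = str(counter).encode()
        h = truncated_hash(message, bits)
        if h in seen:
            return seen[h], message, counter + 1
        seen[h] = message
        counter += 1


m1, m2, attempts = find_collision(bits=20)
```

For a 20-bit digest there are about a million possible values, yet the number of attempts needed is typically on the order of a thousand (around the square root of the image size) rather than anywhere near a million.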

A hash function is the implementation of an algorithm that, given some data as input, will generate a short result called a digest. A useful hash function generates a hash value of fixed length, whatever the size of the input.

For example, if our hash function is 'X' and our input is 'wiki', then X('wiki') = a5g78, i.e. some hash value.
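With a real hash function the same idea looks like this. SHA-256 from Python's standard `hashlib` module stands in for the abstract function 'X'; that choice, and the helper name `digest_hex`, are assumptions made for the example.

```python
import hashlib


def digest_hex(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a hexadecimal string."""
    return hashlib.sha256(data).hexdigest()


short_input = digest_hex(b"wiki")
long_input = digest_hex(b"wiki" * 1000)
changed_input = digest_hex(b"wikj")
```

Both `short_input` and `long_input` are 64 hexadecimal characters long, the digest of `b"wiki"` is the same every time it is computed, and changing a single character of the input gives an unrelated-looking digest.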

Applications of hash functions

Non-cryptographic hash functions have many applications,[1] but in this section we focus on applications that specifically require cryptographic hash functions:

A typical use of a cryptographic hash would be as follows: Alice poses to Bob a tough math problem and claims she has solved it. Bob would like to try it himself, but would also like to be sure that Alice is not bluffing. Therefore, Alice writes down her solution, appends a random nonce, computes the hash of the result and tells Bob the hash value (whilst keeping the solution and nonce secret). This way, when Bob comes up with the solution himself a few days later, Alice can verify his solution and still prove that she had the solution earlier, by revealing her solution and nonce so that Bob can recompute the hash.
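The exchange between Alice and Bob can be sketched as two small functions. SHA-256 and a 16-byte nonce are assumptions made for the example, and the names `commit` and `verify` are hypothetical.

```python
import hashlib
import hmac
import secrets


def commit(solution: bytes):
    """Alice: hash the solution with a random nonce appended.

    Returns (commitment, nonce). Only the commitment is sent to Bob now.
    """
    nonce = secrets.token_bytes(16)
    commitment = hashlib.sha256(solution + nonce).digest()
    return commitment, nonce


def verify(commitment: bytes, solution: bytes, nonce: bytes) -> bool:
    """Bob: once Alice reveals solution and nonce, recompute and compare."""
    expected = hashlib.sha256(solution + nonce).digest()
    return hmac.compare_digest(commitment, expected)


commitment, nonce = commit(b"x = 42")
```

The nonce matters because a solution drawn from a small set of candidates could otherwise be found by hashing every candidate and comparing against the commitment.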

In actual practice, Alice and Bob will often be computer programs, and the secret would be something less easily spoofed than a claimed puzzle solution. The above application is called a commitment scheme. Another important application of secure hashes is verification of message integrity. Determination of whether or not any changes have been made to a message (or a file), for example, can be accomplished by comparing message digests calculated before, and after, transmission (or any other event) (see Tripwire, a system using this property as a defense against malware and malfeasance). A message digest can also serve as a means of reliably identifying a file.

A related application is password verification. Passwords should not be stored in clear text, for obvious reasons, but instead in digest form. In a later chapter, Password handling will be discussed in more detail—in particular, why hashing the password once is inadequate.

A hash function is a key part of message authentication (HMAC).
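As a brief illustration, Python's standard `hmac` module builds a message authentication code from a hash function and a shared secret key. The key, the message and the helper names below are made up for the example.

```python
import hashlib
import hmac


def make_tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message with the shared key."""
    return hmac.new(key, message, hashlib.sha256).digest()


def check_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(make_tag(key, message), tag)


shared_key = b"example shared secret"
tag = make_tag(shared_key, b"transfer 10 coins to Bob")
```

Unlike a plain digest, the tag cannot be recomputed by someone who alters the message but does not know the key.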

Most distributed version control systems (DVCSs) use cryptographic hashes.[2]

For both security and performance reasons, most digital signature algorithms specify that only the digest of the message be "signed", not the entire message. Hash functions can also be used in the generation of pseudo-random bits.

SHA-1, MD5, and RIPEMD-160 were among the most commonly used message digest algorithms as of 2004. In August 2004, researchers found weaknesses in a number of hash functions, including MD5, SHA-0 and RIPEMD. This called into question the long-term security of later algorithms derived from these hash functions, in particular SHA-1 (a strengthened version of SHA-0), RIPEMD-128 and RIPEMD-160 (strengthened versions of RIPEMD). Neither SHA-0 nor RIPEMD is widely used, since they were replaced by their strengthened versions. Practical collisions have since been demonstrated for both MD5 and SHA-1, so neither should be relied on where collision resistance matters.

Other common cryptographic hashes include SHA-2 and Tiger.

Later we will discuss the "birthday attack" and other techniques people use for Breaking Hash Algorithms.

Hash speed

When using hashes for file verification, people prefer hash functions that run very fast. They want a corrupted file to be detected as soon as possible (and queued for retransmission, quarantined, etc.). Some popular hash functions in this category are:

- BLAKE2b

- SHA-3

In addition, both SHA-256 (SHA-2) and SHA-1 have seen hardware support in some CPU instruction sets.

When using hashes for password verification, people prefer hash functions that take a long time to run. If/when a password verification database (the /etc/passwd file, the /etc/shadow file, etc.) is accidentally leaked, they want to force a brute-force attacker to take a long time to test each guess.[3] Some popular hash functions in this category are:

- Argon2

- scrypt

- bcrypt

- PBKDF2
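Of the functions listed, PBKDF2 is available in Python's standard `hashlib` module, so it serves here as a small sketch of deliberately slow password hashing. The iteration count, salt length and function names are assumptions chosen for illustration, not recommendations taken from this book.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 600_000  # assumed work factor; tune to the hardware in use


def hash_password(password: str, salt: bytes = None, iterations: int = ITERATIONS):
    """Derive a slow, salted digest from the password with PBKDF2-HMAC-SHA256."""
    if salt is None:
        salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes,
                    iterations: int = ITERATIONS) -> bool:
    """Repeat the derivation with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt, iterations)
    return hmac.compare_digest(digest, expected)
```

Raising the iteration count slows down a legitimate login only slightly, but multiplies the cost of every guess an attacker makes against a leaked database. The per-password salt prevents one computation from being reused across many accounts.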

We talk more about password hashing in the Cryptography/Secure Passwords section.

Further reading

- see Algorithm Implementation/Hashing for more about non-cryptographic hash functions and their applications.

- see Data Structures/Hash Tables for the most common application of non-cryptographic hash functions

- Rosetta Code : Cryptographic hash function has implementations of cryptographic hash functions in many programming languages.

- ↑ applications of non-cryptographic hash functions are described in Data Structures/Hash Tables and Algorithm Implementation/Hashing.

- ↑ Eric Sink. "Version Control by Example". Chapter 12: "Git: Cryptographic Hashes".

- ↑ "Speed Hashing"

Common flaws and weaknesses

Cryptography relies on puzzles. A puzzle that cannot be solved without more information than the cryptanalyst has or can feasibly acquire is an unsolvable puzzle for the attacker. If the puzzle can be understood in a way that circumvents the secret information the cryptanalyst does not have, then the puzzle is breakable. In this sense cryptography relies on an implicit form of security through obscurity: a puzzle is considered secure only as long as no one is known to have found a way of understanding it that breaks it. The increasing complexity and subtlety of the mathematical puzzles used in cryptography creates a situation where neither cryptographers nor cryptanalysts can be sure of all facets of the puzzle and its security.

Like any puzzle, cryptography algorithms are based on assumptions - if these assumptions are flawed then the underlying puzzle may be flawed.

Secret knowledge assumption - Certain secret knowledge is not available to unauthorised people. Attacks such as packet sniffing, keylogging and man-in-the-middle attacks try to breach this assumption.

Secret knowledge masks plaintext - The secret knowledge is applied to the plaintext so that the nature of the message is no longer obvious. In general the secret knowledge hides the message in such a way that the secret knowledge is required in order to rediscover the message. Attacks such as chosen plaintext, brute force and frequency analysis try to breach this assumption.

Secure Passwords

Passwords

A serious cryptographic system should not be based on a hidden algorithm, but rather on a hidden password that is hard to guess (see Kerckhoffs's law in the Basic Design Principles section). Passwords today are very important because access to a very large number of portals on the Internet, or even your email account, is restricted to those who can produce the correct password. This usually involves humans in choosing, remembering, and using passwords. All three aspects are commonly weaknesses: humans are notoriously bad at choosing hard-to-break passwords,[1] do not easily remember strong passwords, and are sloppy and too trusting in their use of passwords when they do remember them. It is almost overwhelmingly tempting to base passwords on already known items. Likewise, we can remember simple (e.g. short) or familiar (e.g. a telephone number) passwords fairly well, but stronger passwords are more than most of us can reliably remember. This leads to insecurity, because easy methods of password recovery, or even password bypass, are then required, and these are universally insecure. Finally, humans fall prey too easily to phishing scams, to shoulder surfing, to helping out a friend who has forgotten their own password, etc.

Security