Sensory Systems/Print version


Table of contents

Introduction

Sensory Systems in Humans

- Visual System

- Auditory System

- Vestibular System

- Somatosensory System

- Olfactory System

- Gustatory System

Sensory Systems in Non-Primates

Appendix

The Wikibook of

Biological Organisms, an Engineer's Point of View.

From Wikibooks: The Free Library

Introduction

In order to survive - at least on the species level - we continually need to make decisions:

- "Should I cross the road?"

- "Should I run away from the creature in front of me?"

- "Should I eat the thing in front of me?"

- "Or should I try to mate with it?"

To help us make the right decision, and make that decision quickly, we have developed an elaborate system: a sensory system to notice what's going on around us, and a nervous system to handle all that information. And this system is big. Very big. Our nervous system contains about $10^{11}$ nerve cells (or neurons), and about 10-50 times as many supporting cells. These supporting cells, called glial cells, include oligodendrocytes, Schwann cells, and astrocytes. But do we really need all these cells?

Keep it simple: Unicellular Creatures

The answer is, "No!" We do not need that many cells in order to survive. Creatures consisting of a single cell can be large, can respond to multiple stimuli, and can also be remarkably smart!


We often think of cells as really small things. But Xenophyophores (see image) are unicellular organisms that are found throughout the world's oceans and can get as large as 20 centimetres in diameter.

And even with this single cell, those organisms can respond to a number of stimuli. Consider, for example, Paramecium: a genus of unicellular ciliate protozoa formerly known as slipper animalcules, from their slipper shape. (The corresponding word in German is Pantoffeltierchen.) Despite the fact that these creatures consist of only one cell, they are able to respond to different environmental stimuli, e.g. to light or to touch.

And such unicellular organisms can be amazingly smart: the plasmodium of the slime mould Physarum polycephalum is a large amoeba-like cell consisting of a dendritic network of tube-like structures. This single-cell creature manages to connect food sources by finding the shortest connections (Nakagaki et al. 2000), and can even build efficient, robust and optimized network structures that resemble the Tokyo underground system (Tero et al. 2010). In addition, it has somehow developed the ability to read its tracks and tell whether it has been in a place before: this way it can save energy and not forage through locations where effort has already been spent (Reid et al. 2012).

On the one hand, the approach used by the paramecium cannot be too bad, as they have been around for a long time. On the other hand, a single-cell mechanism cannot be as flexible and as accurate in its responses as that of more complex creatures, which use a dedicated, specialized system just for the registration of the environment: a Sensory System.

Not so simple: Three-hundred-and-two Neurons

While humans have hundreds of millions of sensory nerve cells, and about $10^{11}$ nerve cells in total, other creatures get away with significantly fewer. A famous one is Caenorhabditis elegans, a nematode with a total of 302 neurons.

C. elegans is one of the simplest organisms with a nervous system, and it was the first multicellular organism to have its genome completely sequenced. (The sequence was published in 1998.) And not only do we know its complete genome, we also know the connectivity between all 302 of its neurons. In fact, the developmental fate of every single somatic cell (959 in the adult hermaphrodite; 1031 in the adult male) has been mapped out. We know, for example, that only 2 of the 302 neurons are responsible for chemotaxis (“movement guided by chemical cues”, i.e. essentially smelling). Nevertheless, there is still a lot of research conducted—also on its smelling—in order to understand how its nervous system works.

General principles of Sensory Systems

Based on the example of the visual system, the general principle underlying our neuro-sensory system can be described as below:

All sensory systems are based on

- a Signal, i.e. a physical stimulus that provides information about our surroundings.

- the Collection of this signal, e.g. by using an ear or the lens of an eye.

- the Transduction of this stimulus into a nerve signal.

- the Processing of this information by our nervous system.

- And the generation of a resulting Action.

While the underlying physiology restricts the maximum frequency of our nerve cells to about 1 kHz, more than one million times slower than modern computers, our nervous system still manages to perform stunningly difficult tasks with apparent ease. The trick is that there are lots of nerve cells (about $10^{11}$), and they are massively connected (one nerve cell can have up to 150,000 connections with other nerve cells).

Transduction

The role of our "senses" is to transduce relevant information from the world surrounding us into a type of signal that is understood by the next cells receiving that signal: the "Nervous System". (The sensory system is often regarded as part of the nervous system. Here I will try to keep these two apart, with the expression Sensory System referring to the stimulus transduction, and the Nervous System referring to the subsequent signal processing.)

Note here that only relevant information is to be transduced by the sensory system. The task of our senses is not to show us everything that is happening around us. Instead, their task is to filter out the important bits of the signals around us: electromagnetic signals, chemical signals, and mechanical ones. Our Sensory Systems transduce those environmental variables that are (probably) important to us. And the Nervous System propagates them in such a way that the responses that we take help us to survive, and to pass on our genes.

Types of sensory transducers

- Mechanical receptors
  - Balance system (vestibular system)
  - Hearing (auditory system)
  - Pressure:
    - Fast adaptation (Meissner's corpuscle, Pacinian corpuscle) → movement
    - Slow adaptation (Merkel disks, Ruffini endings) → shape
    - Comment: these signals are transferred fast
  - Muscle spindles
  - Golgi organs: in the tendons
  - Joint-receptors

- Chemical receptors
  - Smell (olfactory system)
  - Taste

- Light-receptors (visual system): here we have light-dark receptors (rods), and three different color receptors (cones)

- Thermo-receptors
  - Heat-sensors (maximum sensitivity at ~ 45°C, signal temperatures < 50°C)
  - Cold-sensors (maximum sensitivity at ~ 25°C, signal temperatures > 5°C)
  - Comment: The information processing of these signals is similar to that of visual color signals, and is based on differential activity of the two sensors; these signals are slow

- Electro-receptors: for example in the bill of the platypus

- Magneto-receptors

- Pain receptors (nociceptors): pain receptors are also responsible for itching; these signals are passed on slowly.

Neurons

What distinguishes neurons from other cells in the human body, like liver cells or fat cells? Neurons are unique, in that they:

- can switch quickly between two states (which can also be done by muscle cells);

- can propagate this change into a specified direction and over longer distances (which cannot be done by muscle cells);

- and this state-change can be signaled effectively to other connected neurons.

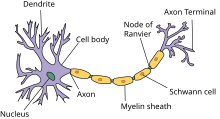

While there are more than 50 distinctly different types of neurons, they all share the same structure:

- An input stage, often called dendrites, as the input-area often spreads out like the branches of a tree. Input can come from sensory cells or from other neurons; it can come from a single cell (e.g. a bipolar cell in the retina receives input from a single cone), or from up to 150’000 other neurons (e.g. Purkinje cells in the Cerebellum); and it can be positive (excitatory) or negative (inhibitory).

- An integrative stage: the cell body does the household chores (generating the energy, cleaning up, generating the required chemical substances, etc), combines the incoming signals, and determines when to pass a signal on down the line.

- A conductile stage, the axon: once the cell body has decided to send out a signal, an action potential propagates along the axon, away from the cell body. An action potential is a quick change in the state of a neuron, which lasts for about 1 msec. Note that this defines a clear direction in the signal propagation, from the cell body, to the:

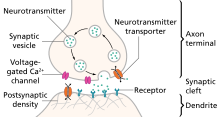

- output stage: The output is provided by synapses, i.e. the points where a neuron contacts the next neuron down the line, most often by the emission of neurotransmitters (i.e. chemicals that affect other neurons) which then provide an input to the next neuron.

Principles of Information Processing in the Nervous System

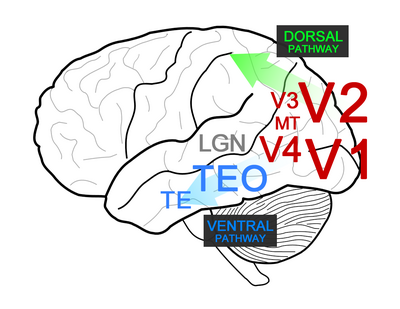

Parallel processing

An important principle in the processing of neural signals is parallelism. Signals from different locations have different meaning. This feature, sometimes also referred to as line labeling, is used by the

- Auditory system - to signal frequency

- Gustatory system - to signal sweet or sour

- Visual system - to signal the location of a visual signal

- Vestibular system - to signal different orientations and movements

Population Coding

Sensory information is rarely based on the signal of a single nerve cell. It is typically coded by different patterns of activity in a population of neurons. This principle can be found in all our sensory systems.
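As an illustration of population coding, the following minimal sketch decodes a stimulus direction from the joint activity of a few broadly tuned units. The cosine tuning curve, the population size, and the "population vector" read-out are assumptions chosen for illustration; they are not taken from this text.

```python
import numpy as np

def population_vector(rates, preferred):
    """Decode an angle (in radians) from firing rates via the population vector."""
    x = np.sum(rates * np.cos(preferred))
    y = np.sum(rates * np.sin(preferred))
    return np.arctan2(y, x)

n_neurons = 8                                               # hypothetical population size
preferred = np.arange(n_neurons) * 2 * np.pi / n_neurons    # evenly spaced preferred directions
stimulus = np.deg2rad(100.0)                                # direction to be encoded

# Assumed tuning curve: baseline activity plus cosine modulation (never negative)
rates = 1.0 + np.cos(stimulus - preferred)

decoded = population_vector(rates, preferred)
print(f"true: {np.rad2deg(stimulus):.1f} deg, decoded: {np.rad2deg(decoded):.1f} deg")
```

No single unit prefers the stimulus direction, yet the pattern of activity across all units recovers it.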

Learning

The structure of the connections between nerve cells is not static. Instead it can be modified, to incorporate experiences that we have made. Thereby nature walks a thin line:

- If we learn too slowly, we might not make it. One example is the "Passenger Pigeon", an American bird which is extinct by now. In the 19th and early 20th century, this bird was shot in large numbers. The mistake of the bird was: when some of them were shot, the others turned around, maybe to see what's up. So they were shot in turn, until the birds were essentially gone. The lesson: if you learn too slowly (i.e. to run away when all your mates are killed), your species might not make it.

- On the other hand, we must not learn too fast, either. For example, the monarch butterfly migrates. But it takes them so long to get from "start" to "finish" that the migration cannot be done by one butterfly alone. In other words, no single butterfly makes the whole journey. Nevertheless, the genetic disposition still tells the butterflies where to go, and when they are there. If they learned any faster, they could never store the necessary information in their genes. In contrast to other cells in the human body, nerve cells are not regenerated.

Simulation of Neural Systems

Simulating Action Potentials

Action Potential

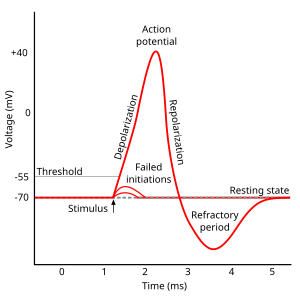

The "action potential" is the stereotypical voltage change that is used to propagate signals in the nervous system.

With the mechanisms described below, an incoming stimulus (of any sort) can lead to a change in the voltage potential of a nerve cell. Up to a certain threshold, that's all there is to it ("Failed initiations" in Fig. 4). But when the threshold of the voltage-gated ion channels is reached, a feedback reaction sets in that almost immediately opens the Na+ ion channels completely ("Depolarization" below). This reaches a point where the permeability for Na+ (which in the resting state is about 1% of the permeability of K+) is 20 times larger than that of K+. As a result, the voltage rises from about -60 mV to about +50 mV. At that point internal reactions start to close (and block) the Na+ channels, and open the K+ channels to restore the equilibrium state. During this "Refractory period" of about 1 ms, no depolarization can elicit an action potential. Only when the resting state is reached can new action potentials be triggered.

To simulate an action potential, we first have to define the different elements of the cell membrane, and how to describe them analytically.

Cell Membrane

The cell membrane is made up of a water-repelling, almost impermeable double layer of lipids, the lipid bilayer. The real power in processing signals does not come from this bilayer, but from ion channels that are embedded into it. Ion channels are proteins which can selectively be opened for certain types of ions. (This selectivity is achieved by the geometrical arrangement of the amino acids which make up the ion channels.) In addition to the Na+ and K+ ions mentioned above, ions that are typically found in the nervous system are the cations Ca2+ and Mg2+, and the anion Cl-.

States of ion channels

Ion channels can take on one of three states:

- Open (For example, an open Na-channel lets Na+ ions pass, but blocks all other types of ions).

- Closed, with the option to open up.

- Closed, unconditionally.

Resting state

The typical default situation, when nothing is happening, is characterized by K+ channels that are open and all other channels closed. In that case two forces determine the cell voltage:

- The (chemical) concentration difference between the intra-cellular and extra-cellular concentration of K+, which is created by the continuous activity of ion pumps.

- The (electrical) voltage difference between the inside and outside of the cell.

The equilibrium is defined by the Nernst equation:

$$E_X = \frac{RT}{zF} \ln \frac{[X]_o}{[X]_i}$$

R ... gas constant, T ... temperature, z ... ion valence, F ... Faraday constant, $[X]_{o/i}$ ... ion concentration outside/inside. At 25 °C, RT/F is about 25 mV, which leads to a resting voltage of

$$E_X = \frac{58\,\mathrm{mV}}{z} \log_{10} \frac{[X]_o}{[X]_i}$$

With typical K+ concentrations inside and outside of neurons, this yields a K+ equilibrium potential of roughly -75 mV. If the ion channels for K+, Na+ and Cl- are considered simultaneously, the equilibrium situation is characterized by the Goldman equation

$$V_m = \frac{RT}{F} \ln \frac{P_{K}[K^+]_o + P_{Na}[Na^+]_o + P_{Cl}[Cl^-]_i}{P_{K}[K^+]_i + P_{Na}[Na^+]_i + P_{Cl}[Cl^-]_o}$$

where $P_i$ denotes the permeability of ion "i", and $[I]$ its concentration. Using typical ion concentrations, the cell has in its resting state a negative polarity of about -60 mV.
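The Nernst and Goldman relations can be evaluated numerically. The sketch below assumes textbook concentrations for the squid giant axon and assumed relative resting permeabilities; none of these numbers come from this text, and the function names are hypothetical.

```python
import numpy as np

R = 8.314      # gas constant, J/(mol K)
F = 96485.0    # Faraday constant, C/mol

def nernst(c_out, c_in, z=1, temp_c=25.0):
    """Equilibrium potential (mV) of a single ion species."""
    temp_k = temp_c + 273.15
    return 1000.0 * R * temp_k / (z * F) * np.log(c_out / c_in)

def goldman(p, c_out, c_in, temp_c=25.0):
    """Resting potential (mV) for K+, Na+ and Cl-.
    For the anion Cl-, inside and outside concentrations swap places."""
    temp_k = temp_c + 273.15
    num = p["K"] * c_out["K"] + p["Na"] * c_out["Na"] + p["Cl"] * c_in["Cl"]
    den = p["K"] * c_in["K"] + p["Na"] * c_in["Na"] + p["Cl"] * c_out["Cl"]
    return 1000.0 * R * temp_k / F * np.log(num / den)

# Assumed concentrations in mM (squid giant axon, textbook values)
c_out = {"K": 20.0, "Na": 440.0, "Cl": 560.0}
c_in = {"K": 400.0, "Na": 50.0, "Cl": 52.0}
# Assumed relative permeabilities in the resting state
p_rest = {"K": 1.0, "Na": 0.04, "Cl": 0.45}

e_k = nernst(c_out["K"], c_in["K"])
e_na = nernst(c_out["Na"], c_in["Na"])
v_rest = goldman(p_rest, c_out, c_in)
print(f"E_K = {e_k:.1f} mV, E_Na = {e_na:.1f} mV, V_rest = {v_rest:.1f} mV")
```

With these assumptions the resting potential lies between the K+ and Na+ equilibrium potentials, close to the K+ value because the K+ permeability dominates at rest.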

Activation of Ion Channels

The nifty feature of the ion channels is the fact that their permeability can be changed by

- A mechanical stimulus (mechanically activated ion channels)

- A chemical stimulus (ligand activated ion channels)

- Or by an external voltage (voltage gated ion channels)

- Occasionally ion channels directly connect two cells, in which case they are called gap junction channels.

Important

- Sensory systems are essentially based on ion channels, which are activated by a mechanical stimulus (pressure, sound, movement), a chemical stimulus (taste, smell), or an electromagnetic stimulus (light), and produce a "neural signal", i.e. a voltage change in a nerve cell.

- Action potentials use voltage gated ion channels, to change the "state" of the neuron quickly and reliably.

- The communication between nerve cells predominantly uses ion channels that are activated by neurotransmitters, i.e. chemicals emitted at a synapse by the preceding neuron. This provides the maximum flexibility in the processing of neural signals.

Modeling a voltage dependent ion channel

Ohm's law relates the resistance of a resistor, R, to the current it passes, I, and the voltage drop across the resistor, V:

$$V = I R$$

or

$$I = g V$$

where $g = 1/R$ is the conductance of the resistor. If you now suppose that the conductance is directly proportional to the probability that the channel is in the open conformation, then this equation becomes

$$I = g_{max} \, n \, V$$

where $g_{max}$ is the maximum conductance of the channel, and $n$ is the probability that the channel is in the open conformation.

Example: the K-channel

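Voltage gated potassium channels (Kv) can be only open or closed. Let α be the rate at which the channel goes from closed to open, and β the rate at which the channel goes from open to closed:

$$(K_v)_{closed} \underset{\beta}{\overset{\alpha}{\rightleftharpoons}} (K_v)_{open}$$

Since n is the probability that the channel is open, the probability that the channel is closed has to be (1-n), since all channels are either open or closed. Changes in the conformation of the channel can therefore be described by the formula

$$\frac{dn}{dt} = \alpha \, (1-n) - \beta \, n$$

Note that α and β are voltage dependent! With a technique called "voltage-clamping", Hodgkin and Huxley determined these rates in 1952, and they came up with something like

$$\alpha(V) = \frac{0.01 \, (10 - V)}{\exp\left(\frac{10 - V}{10}\right) - 1}, \qquad \beta(V) = 0.125 \, \exp\left(-\frac{V}{80}\right)$$

Here the rates are given in 1/ms, and V is written in the commonly used convention of the depolarization from the resting potential in mV.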

If you only want to model a voltage-dependent potassium channel, these would be the equations to start from. (For voltage gated Na channels, the equations are a bit more difficult, since those channels have three possible conformations: open, closed, and inactive.)
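A minimal sketch of such a potassium-channel model under voltage clamp is shown below. It assumes the commonly quoted Hodgkin-Huxley rate functions, with V as depolarization from rest in mV and rates in 1/ms; the clamp voltage, step size, and function names are arbitrary choices for illustration.

```python
import numpy as np

def alpha_n(v):
    """Opening rate (1/ms); v is the depolarization from rest in mV.
    Note: the expression is singular at exactly v = 10 mV."""
    return 0.01 * (10.0 - v) / (np.exp((10.0 - v) / 10.0) - 1.0)

def beta_n(v):
    """Closing rate (1/ms)."""
    return 0.125 * np.exp(-v / 80.0)

def n_inf(v):
    """Steady-state open probability at a fixed voltage."""
    return alpha_n(v) / (alpha_n(v) + beta_n(v))

dt = 0.01                       # time step (ms)
t = np.arange(0.0, 20.0, dt)    # 20 ms of simulated time
v_clamp = 50.0                  # assumed clamp voltage (mV above rest)

n = np.zeros_like(t)
n[0] = n_inf(0.0)               # start from the resting steady state
a, b = alpha_n(v_clamp), beta_n(v_clamp)
for i in range(1, len(t)):
    # dn/dt = alpha * (1 - n) - beta * n, integrated with forward Euler
    n[i] = n[i - 1] + dt * (a * (1.0 - n[i - 1]) - b * n[i - 1])

print(f"n at rest: {n[0]:.3f}, n after clamp: {n[-1]:.3f}")
```

After the voltage step, the open probability relaxes exponentially towards its new steady-state value with time constant 1/(α + β).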

Hodgkin Huxley equation

The feedback loop of voltage-gated ion channels mentioned above made it difficult to determine their exact behaviour. In a first approximation, the shape of the action potential can be explained by analyzing the electrical circuit of a single axonal compartment of a neuron, consisting of the following components: 1) Na channel, 2) K channel, 3) Cl channel, 4) leakage current, 5) membrane capacitance, $C_m$.

The final equations in the original Hodgkin-Huxley model, where the currents of chloride ions and other leakage currents were combined, were as follows:

$$C_m \frac{dV}{dt} = I_{ext} - g_{Na} \, m^3 h \, (V - E_{Na}) - g_K \, n^4 \, (V - E_K) - g_L \, (V - E_L)$$

where $m$, $h$, and $n$ are time- and voltage-dependent functions which describe the membrane permeability. For example, for the K channels n obeys the equations described above, which were determined experimentally with voltage-clamping. These equations describe the shape and propagation of the action potential with high accuracy! The model can be solved easily with open source tools, e.g. the Python Dynamical Systems Toolbox PyDSTool. A simple solution file is available under [1], and the output is shown below.
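As an alternative to a dedicated toolbox, the model can also be integrated directly with NumPy. The sketch below is not the solution file referenced above; it assumes the standard textbook parameter set (conductances in mS/cm², voltages in mV relative to rest, time in ms), a simple forward-Euler scheme, and an arbitrarily chosen current step.

```python
import numpy as np

def vtrap(x):
    """Return x / (exp(x) - 1), using the limit value 1 at x = 0."""
    return 1.0 if abs(x) < 1e-7 else x / np.expm1(x)

# Rate functions (1/ms); v is the depolarization from rest in mV
def alpha_n(v): return 0.1 * vtrap((10.0 - v) / 10.0)
def beta_n(v):  return 0.125 * np.exp(-v / 80.0)
def alpha_m(v): return vtrap((25.0 - v) / 10.0)
def beta_m(v):  return 4.0 * np.exp(-v / 18.0)
def alpha_h(v): return 0.07 * np.exp(-v / 20.0)
def beta_h(v):  return 1.0 / (np.exp((30.0 - v) / 10.0) + 1.0)

# Assumed standard parameters (squid giant axon)
C_M = 1.0                                  # membrane capacitance (uF/cm^2)
G_NA, G_K, G_L = 120.0, 36.0, 0.3          # maximum conductances (mS/cm^2)
E_NA, E_K, E_L = 115.0, -12.0, 10.613      # reversal potentials (mV)

dt = 0.01                                  # time step (ms)
t = np.arange(0.0, 60.0, dt)
i_ext = np.where((t >= 5.0) & (t < 50.0), 10.0, 0.0)   # current step (uA/cm^2)

# Start all gating variables at their resting steady state
v = np.zeros_like(t)
n = alpha_n(0.0) / (alpha_n(0.0) + beta_n(0.0))
m = alpha_m(0.0) / (alpha_m(0.0) + beta_m(0.0))
h = alpha_h(0.0) / (alpha_h(0.0) + beta_h(0.0))

for k in range(1, len(t)):
    vk = v[k - 1]
    i_ion = (G_NA * m**3 * h * (vk - E_NA)
             + G_K * n**4 * (vk - E_K)
             + G_L * (vk - E_L))
    v[k] = vk + dt * (i_ext[k - 1] - i_ion) / C_M
    n += dt * (alpha_n(vk) * (1.0 - n) - beta_n(vk) * n)
    m += dt * (alpha_m(vk) * (1.0 - m) - beta_m(vk) * m)
    h += dt * (alpha_h(vk) * (1.0 - h) - beta_h(vk) * h)

# Count upward crossings of 50 mV as action potentials
n_spikes = int(np.sum((v[1:] >= 50.0) & (v[:-1] < 50.0)))
print(f"peak depolarization: {v.max():.1f} mV, spikes: {n_spikes}")
```

Forward Euler is used here only for brevity; an adaptive solver would be a more robust choice for longer simulations.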

Links to full Hodgkin-Huxley model

Modeling the Action Potential Generation: The Fitzhugh-Nagumo model

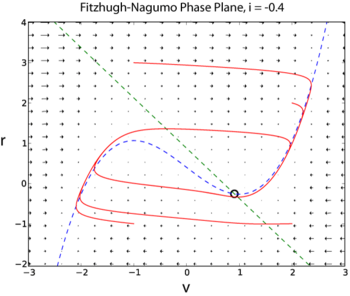

The Hodgkin-Huxley model has four dynamical variables: the voltage $V$, the probability that the K channel is open, $n$, the probability that the Na channel is open given that it was closed previously, $m$, and the probability that the Na channel is open given that it was inactive previously, $h$. A simplified model of action potential generation in neurons is the Fitzhugh-Nagumo (FN) model. Unlike the Hodgkin-Huxley model, the FN model has only two dynamic variables, by combining the variables $V$ and $m$ into a single variable $v$, and combining the variables $n$ and $h$ into a single variable $r$. In FitzHugh's original formulation, the model reads

$$\frac{dv}{dt} = c \left( v - \frac{v^3}{3} + r + I \right)$$

$$\frac{dr}{dt} = -\frac{1}{c} \left( v - a + b \, r \right)$$

$I$ is an external current injected into the neuron, and $a$, $b$ and $c$ are constant parameters. Since the FN model has only two dynamic variables, its full dynamics can be explored using phase plane methods (Sample solution in Python here [2])
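The behaviour of the FN model can be sketched with a few lines of NumPy. The example below is not the sample solution referenced above. It assumes FitzHugh's original formulation, dv/dt = c(v - v³/3 + r + I) and dr/dt = -(v - a + b r)/c, with the parameter values a = 0.7, b = 0.8, c = 3 that are commonly quoted for it; in this sign convention a negative I is excitatory. The function name and integration settings are hypothetical.

```python
import numpy as np

def simulate_fn(i_ext, a=0.7, b=0.8, c=3.0, v0=1.2, r0=-0.625,
                dt=0.01, t_end=100.0):
    """Integrate the FN equations with forward Euler for a constant input."""
    n_steps = int(t_end / dt)
    v = np.empty(n_steps)
    r = np.empty(n_steps)
    v[0], r[0] = v0, r0
    for k in range(1, n_steps):
        dv = c * (v[k - 1] - v[k - 1]**3 / 3.0 + r[k - 1] + i_ext)
        dr = -(v[k - 1] - a + b * r[k - 1]) / c
        v[k] = v[k - 1] + dt * dv
        r[k] = r[k - 1] + dt * dr
    return v, r

# Without input the system settles at its resting point
v_rest, r_rest = simulate_fn(i_ext=0.0)
# With a constant (excitatory, i.e. negative) input it fires repetitively
v_osc, r_osc = simulate_fn(i_ext=-0.6)

half = len(v_osc) // 2
print(f"rest: v = {v_rest[-1]:.3f}")
print(f"stimulated: v swings over {np.ptp(v_osc[half:]):.2f}")
```

Plotting `r` against `v` for both runs gives the phase-plane picture: a stable fixed point without input, and a limit cycle around the unstable fixed point with input.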

Simulating a Single Neuron with Positive Feedback

The following two examples are taken from [3] . This book provides a fantastic introduction into modeling simple neural systems, and gives a good understanding of the underlying information processing.

Let us first look at the response of a single neuron, with an input $x(t)$, and with feedback onto itself. The weight of the input is $v$, and the weight of the feedback $w$. The response $y(t)$ of the neuron is given by

$$y(t) = w \, y(t-1) + v \, x(t-1)$$

This shows how even very simple simulations can capture signal processing properties of real neurons.

```python
""" Simulation of the effect of feedback on a single neuron """

import numpy as np
import matplotlib.pyplot as plt

# System configuration
is_impulse = True       # 'True' for impulse, 'False' for step
t_start = 11            # set a start time for the input
n_steps = 100
v = 1                   # input weight
w = 0.95                # feedback weight

# Stimulus
x = np.zeros(n_steps)
if is_impulse:
    x[t_start] = 1      # impulse: set the input at only one time point
else:
    x[t_start:] = 1     # step input

# Response
y = np.zeros(n_steps)               # allocate output vector
for t in range(1, n_steps):         # at every time step (skipping first)
    y[t] = w*y[t-1] + v*x[t-1]      # compute the output

# Plot the results
time = np.arange(n_steps)
fig, axs = plt.subplots(2, 1, sharex=True)

axs[0].plot(time, x)
axs[0].set_ylabel('Input')
axs[0].margins(x=0)

axs[1].plot(time, y)
axs[1].set_xlabel('Time Step')
axs[1].set_ylabel('Output')

plt.show()
```
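For this linear unit the response can also be written in closed form, which gives a quick check of the simulation: after an impulse the output decays geometrically as $v \, w^k$, and for a step input it approaches $v / (1 - w)$. The sketch below re-implements the update rule in a hypothetical helper function `simulate` and compares it with these expressions; the longer simulation length is chosen only so that the step response has time to settle.

```python
import numpy as np

def simulate(x, v=1.0, w=0.95):
    """Response of a single unit with input weight v and feedback weight w."""
    y = np.zeros(len(x))
    for t in range(1, len(x)):
        y[t] = w * y[t - 1] + v * x[t - 1]
    return y

n_steps, t_start = 400, 11
v, w = 1.0, 0.95

impulse = np.zeros(n_steps)
impulse[t_start] = 1
step = np.zeros(n_steps)
step[t_start:] = 1

y_impulse = simulate(impulse, v, w)
y_step = simulate(step, v, w)

# Closed-form impulse response: v * w**k, starting one step after the impulse
k = np.arange(n_steps - t_start - 1)
y_impulse_theory = v * w**k

print("max. deviation from geometric decay:",
      np.abs(y_impulse[t_start + 1:] - y_impulse_theory).max())
print("step response settles at", y_step[-1], "; theory:", v / (1 - w))
```

A feedback weight closer to 1 makes the unit "remember" its input for longer, at the price of a larger steady-state gain.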

Simulating a Simple Neural System

Even very simple neural systems can display a surprisingly versatile set of behaviors. An example is Wilson's model of the locust-flight central pattern generator. Here the system is described by

$$\vec{y}(t) = W \cdot \vec{y}(t-1) + \vec{v} \, x(t-1)$$

W is the connection matrix describing the recurrent connections of the neurons, $\vec{v}$ contains the input weights, and $x$ describes the input to the system.

```python
""" 'Recurrent' feedback in simple network:
linear version of Wilson's locust flight central pattern generator (CPG) """

import numpy as np
import matplotlib.pyplot as plt

# System configuration
v = np.zeros(4)                     # input weight matrix (vector)
v[1] = 1

w1 = [ 0.9,   0.2,  0,    0   ]     # feedback weights to unit one
w2 = [-0.95,  0.4, -0.5,  0   ]     # ... to unit two
w3 = [ 0,    -0.5,  0.4, -0.95]     # ... to unit three
w4 = [ 0,     0,    0.2,  0.9 ]     # ... to unit four
W = np.vstack([w1, w2, w3, w4])
n_steps = 100

# Initial kick, to start the system
x = np.zeros(n_steps)               # zero input vector
t_kick = 11
x[t_kick] = 1

# Simulation
y = np.zeros((4, n_steps))          # zero output vector
for t in range(1, n_steps):         # for each time step
    y[:, t] = W @ y[:, t-1] + v * x[t-1]    # compute output

# Show the result
time = np.arange(n_steps)
plt.plot(time, x, '-', label='Input')
plt.plot(time, y[1, :], ls='dashed', label='Left Motorneuron')
plt.plot(time, y[2, :], ls='dotted', label='Right Motorneuron')

# Format the plot
plt.xlabel('Time Step')
plt.ylabel('Input and Unit Responses')
plt.gca().margins(x=0)
plt.tight_layout()
plt.legend()

plt.show()
```
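Why a single kick produces a sustained rhythm in this linear network can be read off the eigenvalues of the connection matrix: a complex-conjugate pair with magnitude 1 corresponds to an oscillation that neither grows nor decays. The following sketch is an added analysis and not part of the original example; it uses the weights from the code above.

```python
import numpy as np

W = np.array([[ 0.9,   0.2,  0.0,  0.0 ],
              [-0.95,  0.4, -0.5,  0.0 ],
              [ 0.0,  -0.5,  0.4, -0.95],
              [ 0.0,   0.0,  0.2,  0.9 ]])

eigvals = np.linalg.eigvals(W)

# Complex eigenvalues come in conjugate pairs and describe oscillatory modes
oscillatory = eigvals[np.abs(eigvals.imag) > 1e-9]
magnitude = np.abs(oscillatory[0])                        # growth factor per time step
period = 2 * np.pi / np.abs(np.angle(oscillatory[0]))     # oscillation period in time steps

print("eigenvalues:", np.round(eigvals, 3))
print(f"oscillatory mode: magnitude {magnitude:.3f}, period {period:.1f} steps")
```

The remaining two eigenvalues are real with magnitude below 1, so the corresponding components of the response die out and only the alternating left/right rhythm remains.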

The Development and Theory of Neuromorphic Circuits

Introduction

Neuromorphic engineering uses very-large-scale-integration (VLSI) systems to build analog and digital circuits, emulating neuro-biological architecture and behavior. Most modern circuitry primarily utilizes digital circuit components because they are fast, precise, and insensitive to noise. Unlike more biologically relevant analog circuits, digital circuits require higher power supplies and are not capable of parallel computing. Biological neuron behaviors, such as membrane leakage and threshold constraints, are functions of material substrate parameters, and require analog systems to model and fine tune beyond digital 0/1. This paper will briefly summarize such neuromorphic circuits, and the theory behind their analog circuit components.

Current Events in Neuromorphic Engineering

In the last 10 years, the field of neuromorphic engineering has experienced a rapid upswing and received strong attention from the press and the scientific community.

After this topic came to the attention of the EU Commission, the Human Brain Project was launched in 2013, which was funded with 600 million euros over a period of ten years. The aim of the project is to simulate the human brain from the level of molecules and neurons to neural circuits. It is scheduled to end in September 2023, and although the main goal has not been achieved, it is providing detailed 3D maps of at least 200 brain regions and supercomputers to model functions such as memory and consciousness, among other things [4]. Another major deliverable is the EBRAINS virtual platform, launched in 2019. It provides a set of tools and image data that scientists worldwide can use for simulations and digital experiments.

Also in 2013, the U.S. National Institutes of Health announced funding for the BRAIN project, aimed at reconstructing the activity of large populations of neurons. It is ongoing, and $680 million in funding has been allocated through 2023.

Other major accomplishments in recent years are listed below

1. TrueNorth Chip (IBM, 2014): with a total number of 268 million programmable synapses

2. Loihi Chip (Intel, 2017): based on an asynchronous spiking neural network (SNN) for adaptive event-driven parallel computing. It was superseded by Loihi 2 in 2021.

3. prototype chip (IMEC, 2017): first self-learning neuromorphic chip based on OxRAM technology

4. Akida AI Processor Development Kits (Brainchip, 2021): 1st commercially available neuromorphic processor

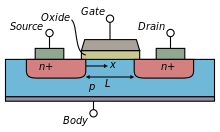

Transistor Structure & Physics

Metal-oxide-semiconductor field-effect transistors (MOSFETs) are common components of modern integrated circuits. MOSFETs are classified as unipolar devices because each transistor utilizes only one carrier type; negative-type MOSFETs (nFETs) have electrons as carriers and positive-type MOSFETs (pFETs) have holes as carriers.

The general MOSFET has a metal gate (G), and two pn junction diodes known as the source (S) and the drain (D), as shown in the figure. There is an insulating oxide layer that separates the gate from the silicon bulk (B). The channel that carries the charge runs directly below this oxide layer. The current is a function of the gate dimensions.

The source and the drain are symmetric and differ only in the biases applied to them. In a nFET device, the wells that form the source and drain are n-type and sit in a p-type substrate. The substrate is biased through the bulk p-type well contact. The positive current flows below the gate in the channel from the drain to the source. The source is called as such because it is the source of the electrons. Conversely, in a pFET device, the p-type source and drain are in a bulk n-well that is in a p-type substrate; current flows from the source to the drain.

When the carriers move due to a concentration gradient, this is called diffusion. If the carriers are swept due to an electric field, this is called drift. By convention, the nFET drain is biased at a higher potential than the source, whereas the source is biased higher in a pFET.

In a nFET, when a positive voltage is applied to the gate, positive charge accumulates on the metal contact. This draws electrons from the bulk to the silicon-oxide interface, creating a negatively charged channel between the source and the drain. The larger the gate voltage, the thicker the channel becomes, which reduces the internal resistance and thus increases the current. For small gate voltages, typically below the threshold voltage, $V_{th}$, the channel is not yet fully conducting and the current from the drain to the source increases linearly on a logarithmic scale, i.e. exponentially with the gate voltage. This regime, when $V_g < V_{th}$, is called the subthreshold region. Beyond this threshold voltage, $V_g > V_{th}$, the channel is fully conducting between the source and drain, and the current is in the superthreshold regime.

For current to flow from the drain to the source, there must initially be an electric field to sweep the carriers across the channel. The strength of this electric field is a function of the applied potential difference between the source and the drain ($V_{ds}$), and thus controls the drain-source current. For small values of $V_{ds}$, the current increases linearly as a function of $V_{ds}$ for constant $V_g$ values. As $V_{ds}$ increases beyond a saturation voltage, the current saturates.
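The subthreshold behavior described above can be made concrete with the standard textbook expression for the subthreshold current of an nFET, $I = I_0 \, e^{\kappa V_g / U_T} \left( e^{-V_s / U_T} - e^{-V_d / U_T} \right)$, with all voltages measured relative to the bulk. This expression and all parameter values in the sketch below ($I_0$, $\kappa$, $U_T$) are assumptions for illustration and are not given in this text.

```python
import numpy as np

U_T = 0.025     # thermal voltage (V), roughly 25 mV at room temperature
KAPPA = 0.7     # assumed subthreshold slope factor
I_0 = 1e-15     # assumed leakage-current scale (A)

def nfet_subthreshold(v_g, v_s, v_d):
    """Subthreshold drain-source current (A); voltages relative to the bulk."""
    return I_0 * np.exp(KAPPA * v_g / U_T) * (np.exp(-v_s / U_T) - np.exp(-v_d / U_T))

# Exponential dependence on the gate voltage:
# raising V_g by U_T * ln(10) / kappa multiplies the current by 10
decade_step = U_T * np.log(10.0) / KAPPA
ratio = nfet_subthreshold(0.3 + decade_step, 0.0, 1.0) / nfet_subthreshold(0.3, 0.0, 1.0)

# Saturation: beyond a drain-source voltage of a few U_T the current barely changes
i_sat = nfet_subthreshold(0.3, 0.0, 1.0)
fraction_at_4ut = nfet_subthreshold(0.3, 0.0, 4 * U_T) / i_sat

print(f"gate step per decade: {1000 * decade_step:.1f} mV, current ratio: {ratio:.2f}")
print(f"current at V_ds = 4 U_T relative to saturation: {fraction_at_4ut:.3f}")
```

In this model the current depends exponentially on the gate voltage and becomes almost independent of the drain voltage once $V_{ds}$ exceeds about $4 U_T$, i.e. roughly 100 mV.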

pFETs behave similarly to nFET except that the carriers are holes, and the contact biases are negated.

In digital applications, transistors either operate in their saturation region (on) or are off. This large range in potential differences between the on and off modes is why digital circuits have such a high power demand. In contrast, analog circuits take advantage of the linear region of transistors to produce continuous signals with a lower power demand. However, because small changes in gate or source-drain voltages can create a large change in current, analog systems are prone to noise.

The field of neuromorphic engineering takes advantage of the noisy nature of analog circuits to replicate stochastic neuronal behavior [5] [6]. Unlike clocked digital circuits, analog circuits are capable of creating action potentials with temporal dynamics similar to biological time scales. The potentials are slowed down and firing rates are controlled by lengthening time constants through leaking biases and variable resistive transistors. Analog circuits have been created that are capable of emulating biological action potentials with varying temporal dynamics, thus allowing silicon circuits to mimic neuronal spike-based learning behavior [7]. Whereas digital circuits can only contain binary synaptic weights [0,1], analog circuits are capable of maintaining synaptic weights within a continuous range of values, making analog circuits particularly advantageous for neuromorphic circuits.

Basic static circuits

With an understanding of how transistors work and how they are biased, basic static analog circuits can be reasoned through. Afterward, these basic static circuits will be combined to create neuromorphic circuits. In the following circuit examples, the source, drain, and gate voltages are fixed, and the current is the output. In practice, the bias gate voltage is fixed to a subthreshold value ($V_g < V_{th}$), the drain is held in saturation, and the source and bulk are tied to ground ($V_s = 0$, $V_b = 0$). All non-idealities are ignored.

Diode-Connected Transistor

A diode-connected nFET has its gate tied to the drain. Since the floating drain controls the gate voltage, the drain-gate voltages will self-regulate so the device will always sink the input current, $I_{in}$. Beyond several microvolts, the transistor will run in saturation. Similarly, a diode-connected pFET has its gate tied to the source. Though this simple device seems to merely function as a short circuit, it is commonly used in analog circuits for copying and regulating current. Particularly in neuromorphic circuits, they are used to slow current sinks, to increase circuit time constants to biologically plausible time regimes.

Current Mirror

A current mirror takes advantage of the diode-connected transistor's ability to sink current. When an input current is forced through the diode-connected input transistor, the floating drain and gate are regulated to the appropriate voltage that allows the input current to pass. Since the two transistors share a common gate node, the output transistor will also sink the same current. This forces the output transistor to duplicate the input current. The output will mirror the input current as long as:

- the two transistors have the same width-to-length ratio, $W_{in} / L_{in} = W_{out} / L_{out}$.

The current mirror gain can be controlled by adjusting these two parameters. When using transistors with different dimensions, otherwise known as a tilted mirror, the gain is:

$$\frac{I_{out}}{I_{in}} = \frac{W_{out} / L_{out}}{W_{in} / L_{in}}$$

A pFET current mirror is simply a flipped nFET mirror, where the diode-connected pFET mirrors the input current, and forces the other pFET to source output current.

Current mirrors are commonly used to copy currents without draining the input current. This is especially essential for feedback loops, such as the one used to accelerate action potentials, and for summing input currents at a synapse.

Source Follower

A source follower consists of an input transistor stacked on top of a bias transistor. The fixed subthreshold bias voltage, $V_b$, controls the gate of the bias transistor, forcing it to sink a constant current, $I_b$. The input transistor is thus also forced to sink the same current, regardless of the input voltage, $V_{in}$.

A source follower is called so because the output, $V_{out}$, will follow $V_{in}$ with a slight offset described by:

$$V_{out} = \kappa \, (V_{in} - V_b)$$

where $\kappa$ is the subthreshold slope factor, typically less than one.
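The source-follower relation can be checked numerically. The sketch below assumes the standard subthreshold model for a saturated nFET, $I = I_0 \, e^{(\kappa V_g - V_s) / U_T}$, identical input and bias transistors, and a grounded bulk; these assumptions, the parameter values, and the function names are illustrative and not taken from this text. The output voltage is found as the source voltage at which the input transistor carries exactly the bias current.

```python
import numpy as np

U_T = 0.025     # thermal voltage (V)
KAPPA = 0.7     # assumed subthreshold slope factor
I_0 = 1e-15     # assumed leakage-current scale (A)

def i_saturated(v_g, v_s):
    """Subthreshold current (A) of a saturated nFET with grounded bulk."""
    return I_0 * np.exp((KAPPA * v_g - v_s) / U_T)

def source_follower_out(v_in, v_b):
    """Find V_out by bisection: the input transistor must carry the bias current."""
    i_bias = i_saturated(v_b, 0.0)      # bias transistor: source at ground
    low, high = -1.0, 2.0               # search interval for V_out (V)
    for _ in range(100):
        mid = 0.5 * (low + high)
        if i_saturated(v_in, mid) > i_bias:
            low = mid                   # too much current: the source must rise
        else:
            high = mid
    return 0.5 * (low + high)

v_b = 0.4
for v_in in (0.6, 0.8, 1.0):
    v_out = source_follower_out(v_in, v_b)
    print(f"V_in = {v_in:.1f} V -> V_out = {v_out:.3f} V, "
          f"kappa * (V_in - V_b) = {KAPPA * (v_in - v_b):.3f} V")
```

Under these assumptions the numerically found output matches $\kappa \, (V_{in} - V_b)$: the output follows the input with a slope of $\kappa$ and an offset set by the bias voltage.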

This simple circuit is often used as a buffer. Since no current can flow through the gate, this circuit will not draw current from the input, an important trait for low-power circuits. Source followers can also isolate circuits, protecting them from power surges or static. A pFET source follower only differs from an nFET source follower in that the bias pFET has its bulk tied to $V_{dd}$.

In neuromorphic circuits, source followers and the like are used as simple current integrators which behave like post-synaptic neurons collecting current from many pre-synaptic neurons.

Inverter

An inverter consists of a pFET stacked on top of an nFET, with their gates tied to the input and the output taken from their common drain node. When a high signal is input, the pFET is off but the nFET is on, effectively draining the output node and inverting the signal. Conversely, when the input signal is low, the nFET is off but the pFET is on, charging up the output node.

This simple circuit is effective as a quick switch. The inverter is also commonly used as a buffer because an output current can be produced without drawing current from the input, as no current can flow through the gate. When two inverters are used in series, they act as a non-inverting amplifier. This was used in the original Integrate-and-Fire silicon neuron by Mead et al., 1989 to create a fast depolarizing spike similar to that of a biological action potential [8]. However, when the input fluctuates between high and low signals, both transistors are in superthreshold saturation and drain current, making this a very power-hungry circuit.

Current Conveyor

The current conveyor is also commonly known as a buffered current mirror. Consisting of two transistors, each with its gate tied to a node of the other, the current conveyor self-regulates so that the output current matches the input current, in a manner similar to the current mirror.

The current conveyor is often used in place of current mirrors for large serially repetitious arrays. This is because the current of a current mirror is controlled through the gate, whose oxide capacitance delays the output. Though this lag is negligible for a single-output current mirror, long mirroring arrays will accumulate significant output delays. Such delays would greatly hinder large parallel processes such as those that try to emulate the computational strategies of biological neural networks.

Differential Pair

The differential pair is a comparative circuit composed of two source followers with a common bias, which forces the current of the weaker input to be silenced. The bias transistor forces the bias current to remain constant, tying the common node to a fixed voltage. Both input transistors will want to drain current proportional to their respective input voltages. However, since the common node must remain fixed, the drains of the input transistors must rise in proportion to the gate voltages. The transistor with the lower input voltage will act as a choke and allow less current through its drain. The losing transistor will see its source voltage increase and thus fall out of saturation.
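How the bias current divides between the two branches can be sketched numerically. The block below assumes the standard subthreshold expression, in which each branch carries a share of the bias current weighted by the exponential of its gate voltage. The names, the kappa value and the thermal voltage of 25 mV are illustrative assumptions, not values from the source.

```python
import math


def differential_pair_currents(v1, v2, i_bias, kappa=0.7, u_t=0.025):
    """Split a bias current between two subthreshold input transistors.

    Assumption: I1 = I_b * e^(k*V1/U_T) / (e^(k*V1/U_T) + e^(k*V2/U_T)),
    written here in its equivalent logistic form.
    """
    x = kappa * (v1 - v2) / u_t
    i1 = i_bias / (1.0 + math.exp(-x))
    i2 = i_bias - i1  # the two branches always sum to the bias current
    return i1, i2


# Equal inputs share the bias current equally.
balanced = differential_pair_currents(0.5, 0.5, 1e-9)
# A 200 mV advantage lets the first branch take almost all of the current.
skewed = differential_pair_currents(0.6, 0.4, 1e-9)
```

In this model a difference of a few hundred millivolts is enough for the stronger input to take nearly the whole bias current, which matches the "silencing" of the weaker input described above.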

The differential pair, in the setting of a neuronal circuit, can function as an activation threshold of an ion channel below which the voltage-gated ion channel will not open, preventing the neuron from spiking [9].

Silicon neurons

Winner-Take-All

The Winner-Take-All (WTA) circuit, originally designed by Lazzaro et al. [10], is a continuous time, analog circuit. It compares the outputs of an array of cells, and only allows the cell with the highest output current to be on, inhibiting all other competing cells.

Each cell comprises a current-controlled conveyor, receives an input current, and outputs into a common line controlling a bias transistor. The cell with the largest input current will also output the largest current, increasing the voltage of the common node. This forces the weaker cells to turn off. The WTA circuit can be extended to include a large network of competing cells. A soft WTA also has its output current mirrored back to the input, effectively increasing the cell gain. This is necessary to reduce noise and random switching if the cell array has a small dynamic range.
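The selection behaviour can be summarised in a purely behavioural sketch that ignores all circuit dynamics. It assumes that the winning cell ends up carrying the whole bias current while every other cell is switched off, and that ties go to the first cell; both are simplifications, and the names are illustrative.

```python
def winner_take_all(input_currents, i_bias):
    """Behavioural model of a hard winner-take-all stage.

    Assumption: the cell with the largest input current carries the
    whole bias current and all other cells are turned off.
    """
    if not input_currents:
        return []
    winner = max(range(len(input_currents)), key=lambda k: input_currents[k])
    return [i_bias if k == winner else 0.0 for k in range(len(input_currents))]


# Three competing cells; the second one has the largest input.
outputs = winner_take_all([1e-9, 3e-9, 2e-9], i_bias=5e-9)
```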

WTA networks are commonly used as a form of competitive learning in computational neural networks that involve distributed decision making. In particular, WTA networks have been used to perform low level recognition and classification tasks that more closely resemble cortical activity during visual selection tasks [11].

Integrate & Fire Neuron

The Integrate & Fire Neuron, also known as the Axon-Hillock Neuron, is the most commonly used spiking neuron model [8]. Elements common to most Axon-Hillock circuits include a node that holds a memory of the membrane potential, an amplifier, a positive feedback loop, and a mechanism to reset the membrane potential to its resting state.

The input current charges the membrane potential, which is stored on a capacitor, C. This capacitor is analogous to the lipid cellular membrane, which prevents free ionic diffusion and creates the membrane potential from the accumulated charge difference on either side of the membrane. The input is amplified to output a voltage spike. A change in membrane potential is positively fed back through the feedback loop to the membrane node, producing a faster spike. This closely resembles how a biological axon hillock, which is densely packed with voltage-gated sodium channels, amplifies the summed potentials to produce an action potential. When a voltage spike is produced, the reset bias begins to drain the membrane node. This is similar to sodium-potassium pumps, which actively pump sodium and potassium ions against the concentration gradient to maintain the resting membrane potential.
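The charge, spike and reset cycle can be illustrated with a small simulation. This is a behavioural sketch, not a model of the silicon circuit: it assumes an ideal capacitor, an instantaneous reset, and no leak or positive feedback, and all names and parameter values are illustrative.

```python
def simulate_integrate_and_fire(i_in, c_mem=1e-12, v_thr=1.0, v_reset=0.0,
                                dt=1e-5, t_stop=0.01):
    """Forward-Euler simulation of an ideal integrate-and-fire neuron.

    The membrane capacitor integrates the input current; when the
    membrane voltage reaches the threshold a spike time is recorded
    and the voltage is reset.
    """
    v = v_reset
    spike_times = []
    n_steps = int(round(t_stop / dt))
    for step in range(n_steps):
        v += i_in / c_mem * dt          # dV = I * dt / C
        if v >= v_thr:
            spike_times.append((step + 1) * dt)
            v = v_reset                 # reset after the spike
    return spike_times


spikes_low = simulate_integrate_and_fire(1e-9)
spikes_high = simulate_integrate_and_fire(2e-9)
```

In this idealised model, doubling the input current roughly doubles the firing rate, because the capacitor reaches the threshold in half the time.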

The original Axon Hillock silicon neuron has been adapted to include an activation threshold with the addition of a Differential Pair comparing the input to a set threshold bias [9]. This conductance-based silicon neuron utilizes a differential-pair integrator (DPI) with a leaky transistor to compare the input to the threshold. The leak bias, refractory period bias, adaptation bias, and positive feedback gain all independently control the spiking frequency. Research has focused on implementing spike frequency adaptation to set refractory periods and modulate thresholds [12]. Adaptation allows the neuron to modulate its output firing rate as a function of its input. If there is a constant high-frequency input, the neuron will be desensitized to the input and the output will steadily diminish over time. The adaptive component of the conductance-based neuron circuit is modeled on calcium flux and stores the memory of past activity on the adaptive capacitor. The advent of spike frequency adaptation allowed changes at the neuron level to control adaptive learning mechanisms at the synapse level. This model of neuronal learning is taken from biology [13] and will be further discussed in Silicon Synapses.

Silicon Synapses

The most basic silicon synapse, originally used by Mead et al., 1989 [8], simply consists of a pFET source follower that receives a low signal pulse input and outputs a unidirectional current [14].

The amplitude of the spike is controlled by the weight bias, and the pulse width is directly correlated with the input pulse width, which is set by $V_{\tau}$. The capacitor in the Lazzaro et al. (1993) synapse circuit was added to increase the spike time constant to a biologically plausible value. This slows the rate at which the pulse hyperpolarizes and depolarizes, by an amount that is a function of the capacitance.

For multiple inputs depicting competitive excitatory and inhibitory behavior, the log-domain integrator uses two control parameters to regulate the output current magnitude as a function of the input current, according to:

One parameter controls the rate at which the output transistor gate is charged; the other governs the rate at which the output is sunk. This competitive nature is necessary to mimic the biological behavior of neurotransmitters that either promote or depress neuronal firing.

Synaptic models have also been developed with first-order linear integrators using log-domain filters capable of modeling the exponential decay of excitatory post-synaptic current (EPSC) [15]. This is necessary to have biologically plausible spike contours and time constants. The gain is also controlled independently of the synapse time constant, which is necessary for spike-rate and spike-timing dependent learning mechanisms.
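The exponential EPSC decay of a first-order linear integrator can be sketched as follows. This is a behavioural illustration rather than a description of the log-domain circuit in [15]: each presynaptic spike is assumed to add a fixed amount (the gain) to the synaptic current, which then decays with a single time constant. All names and values are illustrative.

```python
import math


def epsc_trace(spike_times, gain=1.0, tau=5e-3, dt=1e-4, t_stop=0.05):
    """Synaptic current of a first-order linear integrator.

    Each spike adds `gain` to the current; between spikes the current
    decays exponentially with time constant `tau`. The gain and the
    time constant are independent parameters.
    """
    decay = math.exp(-dt / tau)       # exact decay factor per time step
    pending = sorted(spike_times)
    i_syn = 0.0
    idx = 0
    trace = []
    n_steps = int(round(t_stop / dt))
    for step in range(n_steps):
        t = step * dt
        i_syn *= decay
        while idx < len(pending) and pending[idx] <= t + 1e-12:
            i_syn += gain             # instantaneous jump on each spike
            idx += 1
        trace.append(i_syn)
    return trace


single = epsc_trace([0.0])            # one spike at t = 0
```

With the defaults, the sample 50 steps after the spike lies exactly one time constant later, so the current has fallen to 1/e of its peak.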

The aforementioned synapses simply relay currents from the pre-synaptic sources, varying the shape of the pulse spike along the way. They do not, however, contain any memory of previous spikes, nor are they capable of adapting their behavior according to temporal dynamics. These abilities, however, are necessary if neuromorphic circuits are to learn like biological neural networks.

According to Hebb's postulate, behaviors like learning and memory are hypothesized to occur on the synaptic level [16]. It attributes the learning process to long-term neuronal adaptation in which pre- and post-synaptic contributions are strengthened or weakened by biochemical modifications. This theory is often summarized in the saying, "Neurons that fire together, wire together." Artificial neural networks model learning through these biochemical "wiring" modifications with a single parameter, the synaptic weight. A synaptic weight is a state variable that quantifies how a presynaptic neuron spike affects a postsynaptic neuron output. Two models of Hebbian synaptic weight plasticity are spike-rate-dependent plasticity (SRDP) and spike-timing-dependent plasticity (STDP). Since the conception of this theory, biological neuron activity has been shown to exhibit behavior closely matching Hebbian learning. One such example is the plastic modification of synaptic NMDA and AMPA receptors, which leads to calcium flux induced adaptation [17].

Learning and long-term memory of information in biological neurons are attributed to NMDA channel induced adaptation. These NMDA receptors are voltage dependent and control intracellular calcium ion flux. Animal studies have shown that neuronal desensitization is diminished when extracellular calcium is reduced [17].

Since calcium concentration decays exponentially, this behavior is easily implemented in hardware using subthreshold transistors. A circuit model demonstrating calcium-dependent biological behavior is shown by Rachmuth et al. (2011) [18]. The calcium signal regulates AMPA and NMDA channel activity according to calcium-dependent STDP and SRDP learning rules. The output of these learning rules is the synaptic weight, which is proportional to the number of active AMPA and NMDA channels. The SRDP model describes the weight in terms of two state variables, one that controls the update rule and one that controls the learning rate.

where is the synaptic weight, is the update rule, is the learning rate, and is a constant that allows the weight to drift out of saturation in absence of an input.
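The weight equation is missing from this text. As a hedged sketch only, the block below assumes one form commonly used for calcium-dependent plasticity, in which the weight relaxes towards the update-rule target at a speed set by the learning rate, with a decay constant acting on the weight itself: dw/dt = eta * (omega - lambda * w). The symbol names omega, eta and lam are assumptions chosen to match the four quantities listed above, not notation recovered from the source.

```python
def update_weight(w, omega, eta, lam=1.0, dt=1e-3):
    """One forward-Euler step of the assumed rule dw/dt = eta*(omega - lam*w).

    w     : synaptic weight
    omega : update-rule value (target the weight is pulled towards)
    eta   : learning rate (zero freezes the weight)
    lam   : decay constant acting on the weight
    """
    return w + dt * eta * (omega - lam * w)


# With a non-zero learning rate the weight relaxes towards omega / lam.
w = 0.2
for _ in range(5000):
    w = update_weight(w, omega=1.0, eta=10.0)

# With a zero learning rate no update takes place.
frozen = update_weight(0.2, omega=1.0, eta=0.0)
```

In the circuit described here both the update rule and the learning rate depend on the calcium signal; the sketch treats them as plain numbers to keep the update step visible.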

The NMDA channel circuit controls the calcium influx. It models the voltage dependency of the NMDA receptor, and the channel mechanics are controlled with a large capacitor to increase the calcium time constant. The output is copied via current mirrors into the update-rule and learning-rate circuits to perform downstream learning functions.

The update-rule circuit compares the calcium signal to two threshold biases that respectively control long-term potentiation and long-term depression through a series of differential pair circuits. The output of the differential pairs determines the update rule. This circuit has been demonstrated to exhibit various Hebbian learning rules as observed in the hippocampus, and anti-Hebbian learning rules used in the cerebellum.

The learning-rate circuit controls when synaptic learning can occur by only allowing updates when the calcium signal is above a threshold set by a differential pair. The learning rate (LR) is modeled according to:

where is a function of and controls the learning rate, is the capacitance of the circuit, and is the threshold voltage of the comparator. This function demonstrates that must be biased to maintain an elevated in order to simulate SRDT. A leakage current, , was included to drain to during inactivity.

The NEURON simulation environment

Introduction

NEURON is a simulation environment with which you can simulate the propagation of ions and action potentials in biological and artificial neurons as well as in networks of neurons [19]. The user can specify a model geometry by defining and connecting neuron cell parts, which can be equipped with various mechanisms such as ion channels, clamps and synapses. To interact with NEURON the user can either use the graphical user interface (GUI) or one of the programming languages hoc (a language with a C-like syntax) or Python as an interpreter. The GUI contains a wide selection of the most-used features; an example screenshot is shown in the Figure on the right. The programming languages, on the other hand, can be exploited to add more specific mechanisms to the model and for automation purposes. Furthermore, custom mechanisms can be created with the programming language NMODL, which is an extension to MODL, a model description language developed by the NBSR (National Biomedical Simulation Resource). These new mechanisms can then be compiled and added to models through the GUI or interpreters.

NEURON was initially developed by John W. Moore at Duke University in collaboration with Michael Hines. It is currently used in numerous institutes and universities for educational and research purposes. There is an extensive amount of information available, including the official website containing the documentation, the NEURON forum and various tutorials and guides. Furthermore, in 2006 the authoritative reference book for NEURON, "The NEURON Book", was published [20]. To read the following chapters and to work with NEURON, some background knowledge on the physiology of neurons is recommended. Some examples of information sources about neurons are the WikiBook chapter, or the videos in the introduction of the Advanced Nervous System Physiology chapter on Khan Academy. We will not cover specific commands or details about how to perform the actions mentioned here with NEURON, since this document is not intended to be a tutorial but only an overview of the possibilities and model structure within NEURON. For more practical information on the implementation with NEURON we recommend the tutorials which are linked below and the documentation on the official webpage [19].

Model creation

Single Cell Geometry

First we will discuss the creation of a model geometry that consists of a single biological neuron. A schematic representation of a neuron is shown in the Figure on the right. The following Listing shows an example code snippet in which a multi-compartment cell with one soma and two dendrites is specified using hoc.

load_file("nrngui.hoc")

// Create a soma object and an array containing 2 dendrite objects
ndend = 2
create soma, dend[ndend]
access soma

// Initialize the soma and the dendrites
soma {
    nseg = 1        // number of segments
    diam = 18.8     // diameter (um)
    L = 18.8        // length (um)
    Ra = 123.0      // axial resistivity (ohm cm)
    insert hh       // Hodgkin-Huxley membrane mechanism
}

dend[0] {
    nseg = 5
    diam = 3.18
    L = 701.9
    Ra = 123
    insert pas      // passive membrane mechanism
}

dend[1] {
    nseg = 5
    diam = 2.0
    L = 549.1
    Ra = 123
    insert pas
}

// Connect the dendrites to the soma
connect dend[0](0), soma(0)
connect dend[1](0), soma(1)

// Create an electrode in the soma
objectvar stim
stim = new IClamp(0.5)

// Set stimulation parameters delay (ms), duration (ms) and amplitude (nA)
stim.del = 100
stim.dur = 100
stim.amp = 0.1

// Set the simulation end time (ms)
tstop = 300

Sections

The basic building blocks in NEURON are called “sections”. Initially a section only represents a cylindrical tube with individual properties such as the length and the diameter. A section can be used to represent different neuron parts, such as a soma, a dendrite or an axon, by equipping it with the corresponding mechanisms such as ion channels or synapse connections with other cells or artificial stimuli. A neuron cell can then be created by connecting the ends of the sections together in any arrangement, for example in a tree-like structure, as long as there are no loops. The neuron specified in the Listing above is visualized in the Figure on the right.

Segments

In order to model the propagation of action potentials through the sections more accurately, the sections can be divided into smaller parts called “segments”. A model in which the sections are split into multiple segments is called a “multi-compartment” model. Increasing the number of segments can be seen as increasing the granularity of the spatial discretization, which leads to more accurate results when for example the membrane properties are not uniform along the section. By default, a section consists of one segment.
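The spatial discretization can be made concrete with a small sketch. It assumes that a section is split into segments of equal length and that quantities are evaluated at the centre of each segment, expressed as a normalised position between 0 and 1 along the section. The helper name is hypothetical and is not part of the NEURON API.

```python
def segment_centres(nseg, length=1.0):
    """Positions of the segment centres along a section.

    Assumption: `nseg` equal-length segments, each evaluated at its
    midpoint. With length=1.0 the result is the normalised position
    along the section; pass a length in micrometres for distances.
    """
    return [(2 * i + 1) / (2 * nseg) * length for i in range(nseg)]


# A single segment is evaluated at the middle of the section.
one = segment_centres(1)
# Five segments, as used for the dendrites in the Listing above.
five = segment_centres(5)
# The same centres as distances along a 701.9 um dendrite.
five_um = segment_centres(5, length=701.9)
```

Raising the number of segments places the evaluation points closer together, which is the finer granularity the paragraph refers to.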

Membrane Mechanisms

The default settings of a section do not contain any ion channels, but the user can add them [21]. There are two types of built-in ion channel membrane mechanisms available, namely a passive ion channel membrane model and a Hodgkin-Huxley membrane model, which represents a combination of passive and voltage-gated ion channels. If this is not sufficient, users can define their own membrane mechanisms using the programming language NMODL.

Point Processes

Besides membrane mechanisms which are defined on membrane areas, there are also local mechanisms known as “Point processes” that can be added to the sections. Some examples are synapses, as shown in the Figure on the right, and voltage- and current clamps. Again, users are free to implement their own mechanisms with the programming language NMODL. One key difference between point processes and membrane mechanisms is that the user can specify the location where the point process should be added onto the section, because it is a local mechanism [21].

Output and Visualizations

The computed quantities can be tracked over time and plotted, to create for example a graph of voltage versus time within a specific segment, as shown in the GUI screenshot above. It is also possible to make animations, to show for example how the voltage distribution within the axon develops over time. Note that the quantities are only computed at the centre of each segment and at the boundaries of each section.

Creating a Cell Network

Besides modeling the ion concentrations within single cells, it is also possible to connect the cells and to simulate networks of neurons. To do so the user has to attach synapses, which are point processes, to the postsynaptic neurons and then create “NetCon” objects which will act as the connection between the presynaptic neuron and the postsynaptic neuron. There are different types of synapses that the user can attach to neurons, such as AlphaSynapse, in which the synaptic conductance follows an alpha function, and ExpSyn, in which the synaptic conductance decays exponentially. As with other mechanisms, it is also possible to create custom synapses using NMODL. For the NetCon object it is possible to specify several parameters, such as the threshold and the delay, which determine the required conditions for the presynaptic neuron to cause a postsynaptic potential.
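The two conductance time courses can be compared with a short sketch. The functions below are stand-alone illustrations written in plain Python; they do not call NEURON, and their names and arguments are assumptions. The alpha function is written so that the conductance peaks at gmax one time constant after onset, and the exponential synapse jumps by a weight at the event time and then decays.

```python
import math


def alpha_conductance(t, onset, tau, gmax):
    """Alpha-function conductance: rises, peaks at onset + tau, then decays."""
    if t <= onset:
        return 0.0
    x = (t - onset) / tau
    return gmax * x * math.exp(1.0 - x)


def exp_conductance(t, t_event, tau, weight):
    """Exponential conductance: jumps by `weight` at the event, then decays."""
    if t < t_event:
        return 0.0
    return weight * math.exp(-(t - t_event) / tau)


# Peak of the alpha function, one time constant after onset.
g_peak = alpha_conductance(1.5, onset=0.5, tau=1.0, gmax=0.05)
# Exponential synapse one time constant after the event.
g_exp = exp_conductance(2.0, t_event=0.0, tau=2.0, weight=1.0)
```

The alpha function has a finite rise time, whereas the exponential synapse reaches its maximum instantly, which is the main practical difference between the two.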

Artificial Neurons

Besides the biological neurons that we have discussed up until now, there is also another type of neuron that can be simulated with NEURON, known as an “artificial” neuron. The difference between the biological and artificial neurons in NEURON is that the artificial neuron does not have a spatial extent and that its kinetics are highly simplified. There are several integrators available to model the behaviour of artificial cells in NEURON, which distinguish themselves by the extent to which they are simplifications of the dynamics of biological neurons [22]. To reduce the computation time for models of artificial spiking neuron cells and networks, the developers of NEURON have chosen to support event-driven simulations. This substantially reduces the computational burden of simulating spike-triggered synaptic transmissions. Although modelling conductance-based neuron cells requires a continuous system simulation, NEURON can still exploit the benefits of event-driven methods for networks that contain biological and artificial neurons by fully supporting hybrid simulations. This way any combination of artificial and conductance-based neuron cells can be simulated while still achieving the reduced computation time that results from event-driven simulation of artificial cells [23]. The user can also add other artificial neuron classes with the language NMODL.
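The idea behind event-driven simulation can be sketched in a few lines. The example is a generic illustration, not NEURON's implementation: it assumes a leaky integrator whose state decays exponentially between input events, so the state only needs to be updated when an event arrives rather than at every time step. All names and parameter values are hypothetical.

```python
import heapq
import math


def run_event_driven(events, tau=10.0, threshold=1.0):
    """Process (time_ms, weight) input events for one artificial neuron.

    Between events the state decays analytically, so no fixed time step
    is needed. Returns the times at which the neuron fires.
    """
    queue = list(events)
    heapq.heapify(queue)               # earliest event is handled first
    state = 0.0
    t_last = 0.0
    spikes = []
    while queue:
        t, weight = heapq.heappop(queue)
        state *= math.exp(-(t - t_last) / tau)   # decay since last event
        t_last = t
        state += weight
        if state >= threshold:
            spikes.append(t)
            state = 0.0                # reset after firing
    return spikes


# Two inputs close together sum to a spike; far apart they do not.
close = run_event_driven([(2.0, 0.6), (1.0, 0.6)])
apart = run_event_driven([(1.0, 0.6), (50.0, 0.6)])
```

The cost of such a simulation grows with the number of events rather than with the simulated time, which is where the reduction in computation time comes from.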

Neuron with Python

Since 1984 NEURON has provided the hoc interpreter for the creation and execution of simulations. The hoc language has been extended and maintained for use with NEURON up until now, but because this maintenance takes a lot of time and because hoc has turned out to be an orphan language limited to NEURON users, the developers of NEURON desired a more modern programming language as an interpreter for NEURON. Because Python has become widely used within the area of scientific computing, with many users creating packages containing reusable code, it is now more attractive as an interpreter than hoc [24]. There are three ways to use NEURON with Python. The first way is to run NEURON with the terminal accepting interactive Python commands. The second way is to run NEURON with the interpreter hoc, and to access Python commands through special commands in hoc. The third way is to use NEURON as an extension module for Python, such that a NEURON module can be imported into Python or IPython scripts.

Installation

To use the first and second modes, that is, NEURON with Python embedded, it is sufficient to complete the straightforward installation. To use the third mode, NEURON as an extension module for Python, it is necessary to build NEURON from the source code and install the NEURON shared library for Python, as explained in the installation guide.

NEURON commands in Python

Because NEURON was originally developed with hoc as an interpreter, the user still has to explicitly call hoc functions and classes from within Python. All functions and classes that have existed for hoc are accessible in Python through the module “neuron”, both when using Python embedded in NEURON and when using NEURON as an extension module. There are only some minor differences between the NEURON commands in hoc and Python, so there should not be many complications for users when changing from one to the other [24]. There are a couple of advantages of using NEURON with Python instead of hoc. One of the primary advantages is that Python offers a lot more functionality because it is a complete object-oriented language and because there is an extensive suite of analysis tools available for science and engineering. Also, loading user-defined mechanisms from NMODL scripts has become easier, which makes NEURON more attractive for simulations of very specific mechanisms [24]. More details on NEURON in combination with Python can be found here.

Tutorials

There are multiple tutorials available online for getting started with NEURON and two of them are listed below.

In the first tutorial you will start with learning how to create a single compartment cell and finish with creating a network of neurons as shown in the Figure on the right, containing custom cell mechanisms. Meanwhile you will be guided through the NEURON features for templates, automation, computation time optimization and extraction of resulting data. The tutorial uses hoc commands but the procedures are almost the same within Python.

The second tutorial shows how to create a cell with a passive cell membrane and a synaptic stimulus, and how to visualize the results with the Python module matplotlib.

Further Reading

Besides what is mentioned in this introduction to NEURON, there are many more options available which are continuously extended and improved by the developers. An extensive explanation of NEURON can be found in “The NEURON book” [20], which is the official reference book. Furthermore the official website contains a lot of information and links to other sources as well.

References

Two additional sources are "An adaptive silicon synapse", by Chicca et al. [25] and "Analog VLSI: Circuits and Principles", by Liu et al. [26].

- [1] T. Haslwanter (2012), "Hodgkin-Huxley Simulations [Python]", private communications.

- [2] T. Haslwanter (2012), "Fitzhugh-Nagumo Model [Python]", private communications.

- [3] T. Anastasio (2010), "Tutorial on Neural systems Modeling".

- [4] Europe spent €600 million to recreate the human brain in a computer. How did it go?

- [5] E Aydiner, AM Vural, B Ozcelik, K Kiymac, U Tan (2003), A simple chaotic neuron model: stochastic behavior of neural networks

- [6] WM Siebert (1965), Some implications of the stochastic behavior of primary auditory neurons

- [7] G Indiveri, F Stefanini, E Chicca (2010), Spike-based learning with a generalized integrate and fire silicon neuron

- [8] CA Mead (1989), Analog VLSI and Neural Systems

- [9] RJ Douglas, MA Mahowald (2003), Silicon Neuron

- [10] J Lazzaro, S Ryckebusch, MA Mahowald, CA Mead (1989), Winner-Take-All Networks of O(N) Complexity

- [11] M Riesenhuber, T Poggio (1999), Hierarchical models of object recognition in cortex

- [12] E Chicca, G Indiveri, R Douglas (2004), An event-based VLSI network of Integrate-and-Fire Neurons

- [13] G Indiveri, E Chicca, R Douglas (2004), A VLSI reconfigurable network of integrate-and-fire neurons with spike-based learning synapses

- [14] J Lazzaro, J Wawrzynek (1993), Low-Power Silicon Neurons, Axons, and Synapses

- [15] S Mitra, G Indiveri, RE Cummings (2010), Synthesis of log-domain integrators for silicon synapses with global parametric control

- [16] DO Hebb (1949), The organization of behavior

- [17] PA Koplas, RL Rosenberg, GS Oxford (1997), The role of calcium in the desensitization of capsaicin responses in rat dorsal root ganglion neurons

- [18] G Rachmuth, HZ Shouval, MF Bear, CS Poon (2011), A biophysically-based neuromorphic model of spike rate-timing-dependent plasticity

- [19] Neuron, for empirically-based simulations of neurons and networks of neurons

- [20] Nicholas T. Carnevale, Michael L. Hines (2009), The NEURON book

- [21] NEURON Tutorial 1

- [22] M.L. Hines and N.T. Carnevale (2002), The NEURON Simulation Environment

- [23] Romain Brette, Michelle Rudolph, Ted Carnevale, Michael Hines, David Beeman, James M. Bower, Markus Diesmann, Abigail Morrison, Philip H. Goodman, Frederick C. Harris, Jr., Milind Zirpe, Thomas Natschläger, Dejan Pecevski, Bard Ermentrout, Mikael Djurfeldt, Anders Lansner, Olivier Rochel, Thierry Vieville, Eilif Muller, Andrew P. Davison, Sami El Boustani, Alain Destexhe (2002), Simulation of networks of spiking neurons: A review of tools and strategies

- [24] ML Hines, AP Davison, E Muller (2009), NEURON and Python. Frontiers in Neuroinformatics 3:1. doi:10.3389/neuro.11.001.2009

- [25] E Chicca, G Indiveri, R Douglas (2003), An adaptive silicon synapse

- [26] SC Liu, J Kramer, T Delbrück, G Indiveri, R Douglas (2002), Analog VLSI: Circuits and Principles

Visual System

Introduction

Generally speaking, visual systems rely on electromagnetic (EM) waves to give an organism more information about its surroundings. This information could be regarding potential mates, dangers and sources of sustenance. Different organisms have different constituents that make up what is referred to as a visual system.

The complexity of eyes ranges from something as simple as an eye spot, which is nothing more than a collection of photosensitive cells, to a fully fledged camera eye. If an organism has different types of photosensitive cells, or cells sensitive to different wavelength ranges, the organism would theoretically be able to perceive colour or at the very least colour differences. Polarisation, another property of EM radiation, can be detected by some organisms, with insects and cephalopods having the highest accuracy.

Please note that this text focuses on using EM waves to see. Granted, some organisms have evolved alternative ways of obtaining sight, or at the very least of supplementing what they see with extra-sensory information; whales and bats, for example, use echo-location. This may be seeing in some sense of the word, but it is not entirely correct. Additionally, vision and visual are words most often associated with EM waves in the visual wavelength range, which is normally defined by the wavelength limits of human vision.

Since some organisms detect EM waves with frequencies below and above those detected by humans, a better definition must be made. We therefore define the visual wavelength range as EM wavelengths between 300nm and 800nm. This may seem arbitrary to some, but selecting the wrong limits would render parts of some birds' vision as non-vision. With this range of wavelengths we have also defined the thermal-vision of certain organisms, such as snakes, as non-vision. Therefore snakes using their pit organs, which are sensitive to EM between 5000nm and 30,000nm (IR), do not "see", but somehow "feel" from afar, even though blind specimens have been documented targeting and attacking particular body parts.

First, the different types of visual system sensory organs are briefly described. This is followed by a thorough explanation of the components in human vision and of the signal processing of the visual pathway in humans, and finally by an example of the perceptual outcome of these stages.

Sensory Organs

Vision, or the ability to see, depends on visual system sensory organs, or eyes. There are many different constructions of eyes, ranging in complexity depending on the requirements of the organism. The different constructions have different capabilities, are sensitive to different wavelengths and have differing degrees of acuity. They also require different processing to make sense of the input, and different numbers of eyes to work optimally. The ability to detect and decipher EM has proved to be a valuable asset to most forms of life, leading to an increased chance of survival for organisms that utilise it. In environments with insufficient light, or a complete lack of it, lifeforms gain no advantage from vision, which ultimately has resulted in atrophy of visual sensory organs with subsequent increased reliance on other senses (e.g. some cave dwelling animals, bats etc.). Interestingly enough, it appears that visual sensory organs are tuned to the optical window, which is defined as the EM wavelengths (between 300nm and 1100nm) that pass through the atmosphere and reach the ground. This is shown in the figure below. You may notice that there exist other "windows": an IR window, which explains to some extent the thermal-"vision" of snakes, and a radiofrequency (RF) window, which no known lifeforms are able to detect.

Through time evolution has yielded many eye constructions, and some of them have evolved multiple times, yielding similarities for organisms that have similar niches. There is one underlying aspect that is essentially identical regardless of species or complexity of sensory organ type: the universal usage of light-sensitive proteins called opsins. Without focusing too much on the molecular basis, though, the various constructions can be categorised into distinct groups:

- Spot Eyes

- Pit Eyes

- Pinhole Eyes

- Lens Eyes

- Refractive Cornea Eyes

- Reflector Eyes

- Compound Eyes

The least complicated configuration of eyes enables an organism simply to sense the ambient light, that is, to know whether there is light or not. It is normally just a cluster of photosensitive cells in the same spot, and is thus sometimes referred to as a spot eye, eye spot or stemma. By either adding more angular structures or recessing the spot eyes, an organism gains access to directional information as well, which is a vital requirement for image formation. These so-called pit eyes are by far the most common type of visual sensory organ, and can be found in over 95% of all known species.

Taking this approach to the obvious extreme leads to the pit becoming a cavernous structure, which increases the sharpness of the image, albeit at a loss in intensity. In other words, there is a trade-off between intensity (brightness) and sharpness. An example of this can be found in the Nautilus, species belonging to the family Nautilidae, organisms considered to be living fossils. They are the only known species that have this type of eye, referred to as the pinhole eye, and it is completely analogous to the pinhole camera or the camera obscura. In addition, like more advanced cameras, Nautili are able to adjust the size of the aperture, thereby increasing or decreasing the resolution of the eye at a respective decrease or increase in image brightness.

As in the camera, the way to alleviate the intensity/resolution trade-off is to include a lens, a structure that focuses the light onto a central area, which most often has a higher density of photosensors. By adjusting the shape of the lens and moving it around, and by controlling the size of the aperture or pupil, organisms can adapt to different conditions and focus on particular regions of interest in any visual scene.

The last upgrade to the eye constructions already mentioned is the inclusion of a refractive cornea. Eyes with this structure have delegated two thirds of the total optical power of the eye to the cornea and the high refractive index liquid behind it, enabling very high resolution vision. Most land animals, including humans, have eyes of this particular construction. Additionally, many variations of lens structure, lens number, photosensor density, fovea shape, fovea number, pupil shape etc. exist, always to increase the chances of survival of the organism in question. These variations lead to a varied outward appearance of eyes, even within a single eye construction category. Demonstrating this point, a collection of photographs of animals with the same eye category (refractive cornea eyes) is shown below.

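The intensity/sharpness trade-off of the pinhole eye described above can be illustrated with a small numerical sketch. It assumes a purely geometric model (a distant point of light is smeared over a spot about as wide as the aperture, and the light gathered scales with the aperture area). The function name, the eye depth of 10 mm and the reference aperture are hypothetical values chosen for illustration, not measurements of a Nautilus eye.

```python
import math

def pinhole_tradeoff(aperture_mm, depth_mm=10.0, reference_aperture_mm=1.0):
    """Return (angular blur in degrees, brightness relative to the reference aperture).

    Geometric model only; depth_mm and reference_aperture_mm are assumed values.
    """
    # A distant point projects to a spot roughly as wide as the aperture,
    # so the angular blur is about aperture / depth (small-angle approximation).
    blur_rad = aperture_mm / depth_mm
    # The amount of light admitted scales with the aperture area (diameter squared).
    brightness = (aperture_mm / reference_aperture_mm) ** 2
    return math.degrees(blur_rad), brightness

for d in (0.5, 1.0, 2.0):
    blur, light = pinhole_tradeoff(d)
    print(f"aperture {d:.1f} mm: blur ~{blur:.1f} deg, relative brightness {light:.2f}")
```

In this model, halving the aperture halves the blur but leaves only a quarter of the light, which is the trade-off that a lens alleviates.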

An alternative to the lens approach, called reflector eyes, can be found in, for example, mollusks. Instead of the conventional way of focusing light onto a single point at the back of the eye using a lens or a system of lenses, these organisms have mirror-like structures inside the chamber of the eye that reflect the light onto a central portion, much like a parabolic dish. Although there are no known examples of organisms with reflector eyes capable of image formation, at least one species of fish, the spookfish (Dolichopteryx longipes), uses them in combination with "normal" lensed eyes.

The last group of eyes, found in insects and crustaceans, is called compound eyes. These eyes consist of a number of functional sub-units called ommatidia, each consisting of a facet, or front surface, a transparent crystalline cone and photosensitive cells for detection. In addition, each ommatidium is separated from its neighbours by pigment cells, ensuring that the incoming light is as parallel as possible. The combination of the outputs of the ommatidia forms a mosaic image, with a resolution proportional to the number of ommatidia. For example, if humans had compound eyes, the eyes would have to cover our entire faces to retain the same resolution. As a note, there are many types of compound eyes, but delving too deep into this topic is beyond the scope of this text.

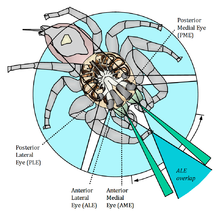

Not only the type of eye varies, but also the number of eyes. As you are well aware, humans usually have two eyes; spiders, on the other hand, have a varying number of eyes, with most species having eight. Spiders normally also have pairs of eyes of different sizes, and the differing sizes have different functions. For example, in jumping spiders two larger front-facing eyes give the spider excellent visual acuity, which is used mainly to target prey. Six smaller eyes have much poorer resolution, but help the spider to avoid potential dangers. Two photographs, of the eyes of a jumping spider and the eyes of a wolf spider, are shown to demonstrate the variability in the eye topologies of arachnids.

Eye Topologies of Spiders

- Wolf Spider

- Jumping Spider

Anatomy of the Visual System

We humans are visual creatures, and our eyes are correspondingly complicated, with many components. This chapter attempts to describe these components, giving some insight into the properties and functionality of human vision.

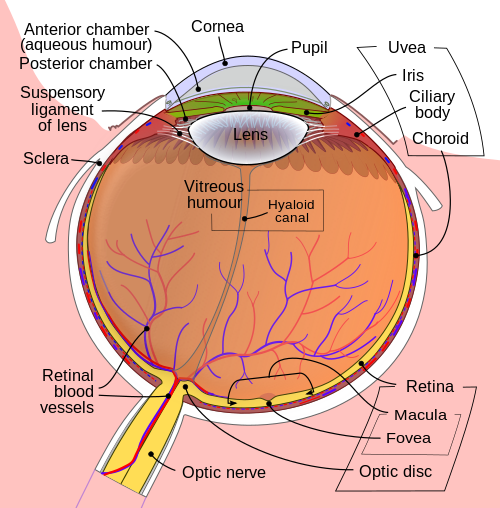

Getting inside of the eyeball - Pupil, iris and the lens

Light rays enter the eye through the black aperture, or pupil, at the front of the eye. The black appearance is due to the light being almost fully absorbed by the tissue inside the eye. Light can enter the eye only through this pupil, which means that the amount of incoming light is effectively determined by the size of the pupil. The iris, a pigmented structure surrounding the pupil, functions as the eye's aperture stop. It is the amount of pigment in the iris that gives rise to the various eye colours found in humans.

In addition to this layer of pigment, the iris has two layers of muscle. One layer contains a circular muscle called the pupillary sphincter, which contracts to make the pupil smaller. The other layer contains a smooth muscle called the pupillary dilator, which contracts to dilate the pupil. Together, these muscles dilate or constrict the pupil depending on the requirements or conditions of the person. The lens, in turn, is held in place by the ciliary zonules, fibres that connect it to the ciliary muscle; contraction of the ciliary muscle changes the tension in the zonules and thereby the shape of the lens.

The lens is situated immediately behind the pupil. Its shape and characteristics reveal a purpose similar to that of camera lenses, but the two function in slightly different ways. The shape of the lens is adjusted by the pull of the ciliary zonules, which consequently changes the focal length. Together with the cornea, the lens can change the focus, which makes it a very important structure indeed; however, only one third of the total optical power of the eye is due to the lens itself. It is also the eye's main filter. Most of the lens material consists of lens fibres, long and thin cells that lack most of the cell machinery, which promotes transparency. Together with water-soluble proteins called crystallins, they increase the refractive index of the lens. The fibres also play a part in the structure and shape of the lens itself.
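To make the one-third share of the lens concrete, the following sketch splits a total optical power between lens and cornea. The total of 60 dioptres is an assumed, commonly quoted round figure for the human eye and is not taken from this text. The focal length is computed with the thin-lens relation f = 1/P in air, which is a simplification, since the real eye is filled with fluid.

```python
def split_optical_power(total_dioptres=60.0, lens_fraction=1.0 / 3.0):
    """Return (cornea power, lens power) in dioptres.

    total_dioptres is an assumed round figure; lens_fraction follows the text.
    """
    lens = total_dioptres * lens_fraction
    cornea = total_dioptres - lens
    return cornea, lens

def focal_length_mm(power_dioptres):
    """Thin-lens focal length in air: f = 1 / P, converted from metres to mm."""
    return 1000.0 / power_dioptres

cornea, lens = split_optical_power()
print(f"cornea: {cornea:.1f} D, lens: {lens:.1f} D")
print(f"focal length of the whole system (in air): {focal_length_mm(cornea + lens):.1f} mm")
```

Under these assumptions the cornea contributes about 40 dioptres and the lens about 20, and the combined focal length of roughly 17 mm is of the same order as the size of the eyeball.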

Beamforming in the eye – Cornea and its protecting agent - Sclera

The cornea, responsible for the remaining 2/3 of the total optical power of the eye, covers the iris, pupil and lens. It focuses the rays before they pass through the pupil and the lens. The cornea is only 0.5mm thick and consists of 5 layers:

- Epithelium: A layer of epithelial tissue covering the surface of the cornea.

- Bowman's membrane: A tough protective layer composed of strong collagen fibres that maintain the overall shape of the cornea.

- Stroma: A layer composed of parallel collagen fibrils. This layer makes up 90% of the cornea's thickness.

- Descemet's membrane and Endothelium: Two layers adjacent to the anterior chamber of the eye, which is filled with aqueous humor produced by the ciliary body. This fluid moisturises the lens, cleans it and maintains the pressure in the eyeball. The chamber, positioned between the cornea and the iris, contains a trabecular meshwork through which the fluid, having arrived from the posterior chamber, drains into Schlemm's canal.

The cornea is continuous with the sclera, which together with Tenon's capsule forms a protective envelope around the rest of the eyeball. The sclera is built from collagen and elastic fibres, which protect the eye from external damage; this layer also gives rise to the white of the eye. It is pierced by nerves and vessels, with the largest hole reserved for the optic nerve. Moreover, it is covered by the conjunctiva, a clear mucous membrane on the surface of the eyeball. This membrane also lines the inside of the eyelid. It works as a lubricant and, together with the lacrimal gland, produces tears that lubricate and protect the eye. The remaining protective layer, the eyelid, also functions to spread this lubricant around.

Moving the eyes – extra-ocular muscles

The eyeball is moved by a complicated structure of extra-ocular muscles consisting of four rectus muscles (inferior, medial, lateral and superior) and two oblique muscles (inferior and superior). The positioning of these muscles is presented below, along with their functions:

As you can see, the extra-ocular muscles (2,3,4,5,6,8) are attached to the sclera of the eyeball and originate in the annulus of Zinn, a fibrous tendon surrounding the optic nerve. A pulley system is created with the trochlea acting as the pulley and the superior oblique muscle as the rope; this is required to redirect the muscle force in the correct direction. The remaining extra-ocular muscles have a direct path to the eye and therefore do not form such pulley systems. Using these extra-ocular muscles, the eye can rotate up, down, left and right, and other movements are possible as combinations of these.

Other movements are also very important for us to be able to see. Vergence movements enable the proper function of binocular vision. Unconscious fast movements called saccades are essential for people to keep an object in focus. The saccade is a sort of jittery movement performed when the eyes are scanning the visual field, in order to displace the point of fixation slightly. When you follow a moving object with your gaze, your eyes perform what is referred to as smooth pursuit. Additional involuntary movements called nystagmus are caused by signals from the vestibular system; together they make up the vestibulo-ocular reflexes.

The brain stem controls all of the movements of the eyes, with different areas responsible for different movements.

- Pons: Rapid horizontal movements, such as saccades or nystagmus

- Mesencephalon: Vertical and torsional movements

- Cerebellum: Fine tuning

- Edinger-Westphal nucleus: Vergence movements

Where the vision reception occurs – The retina

Before being transduced, incoming EM passes through the cornea, lens and the macula. These structures also act as filters that reduce unwanted EM, thereby protecting the eye from harmful radiation. The filtering response of each of these elements can be seen in the figure "Filtering of the light performed by cornea, lens and pigment epithelium". As one may observe, the cornea attenuates the shorter wavelengths, leaving the longer wavelengths nearly untouched. The lens blocks around 25% of the EM below 400nm and more than 50% below 430nm. Finally, the pigment epithelium, the last stage of filtering before photoreception, affects around 30% of the EM between 430nm and 500nm.
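Because these filters act one after another, the fraction of light that survives the whole chain is the product of the fractions each stage lets through. The sketch below shows this cascade for a single wavelength. The blocked fractions are hypothetical round numbers loosely inspired by the percentages above (the cornea value in particular is invented for illustration), and the helper name is not taken from any library.

```python
from math import prod

def transmitted_fraction(blocked_fractions):
    """Fraction of the incoming light remaining after a series of filters.

    Each entry is the fraction of light a stage blocks (0.0 to 1.0).
    """
    return prod(1.0 - blocked for blocked in blocked_fractions)

# Hypothetical blocked fractions for one short wavelength (illustrative only).
stages = {"cornea": 0.10, "lens": 0.50, "pigment epithelium": 0.30}

remaining = transmitted_fraction(stages.values())
print(f"light reaching the photoreceptors: {remaining:.1%}")
```

The multiplication shows why several moderate filters in series can remove most of the short-wavelength light before it reaches the photoreceptors.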

The part of the eye that marks the transition from the non-photosensitive region to the photosensitive region is called the ora serrata. The photosensitive region is referred to as the retina, which is the sensory structure at the back of the eye. The retina consists of multiple layers, presented below, with millions of photoreceptors called rods and cones, which capture the light rays and convert them into electrical impulses. Transmission of these impulses is initiated by the ganglion cells and conducted through the optic nerve, the single route by which information leaves the eye.

A conceptual illustration of the structure of the retina is shown on the right. As we can see, there are five main cell types:

- photoreceptor cells

- horizontal cells

- bipolar cells

- amacrine cells

- ganglion cells

Photoreceptor cells can be further subdivided into two main types called rods and cones. Cones are much less numerous than rods in most parts of the retina, but there is an enormous aggregation of them in the macula, especially in its central part, called the fovea. In this central region, each photosensitive cone is connected to one ganglion cell. In addition, the cones in this region are slightly smaller than the average cone size, meaning that there are more cones per unit area. Because of this one-to-one ratio and the high density of cones, this is where we have the highest visual acuity.

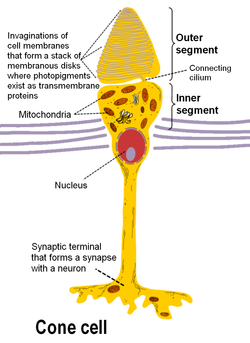

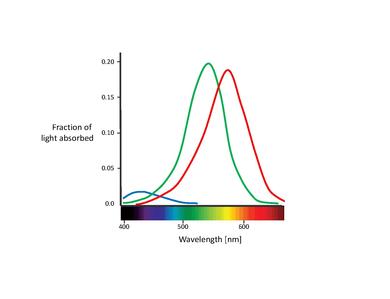

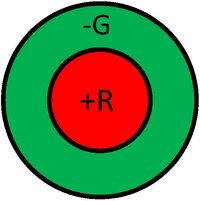

There are 3 types of human cones, each responding to a specific range of wavelengths because each contains one of three types of a pigment called photopsin. Each pigment is most sensitive to red, green or blue wavelengths of light, so we have blue, green and red cones, also called S-, M- and L-cones for their sensitivity to short, medium and long wavelengths respectively. Each photopsin consists of a protein called opsin and a bound chromophore called retinal. The main building blocks of the cone cell are the synaptic terminal, the inner and outer segments, the interior nucleus and the mitochondria.

The spectral sensitivities of the 3 types of cones:

- 1. S-cones absorb short-wave light, i.e. blue-violet light. The maximum absorption wavelength for the S-cones is 420nm

- 2. M-cones absorb blue-green to yellow light. In this case The maximum absorption wavelength is 535nm

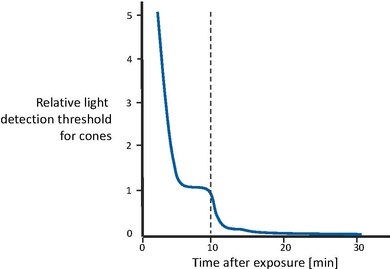

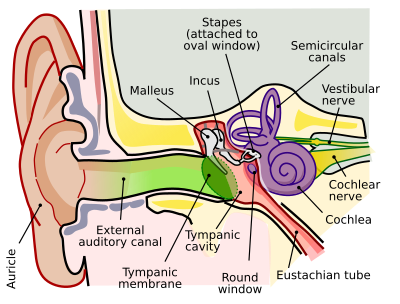

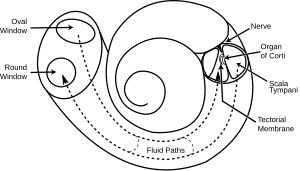

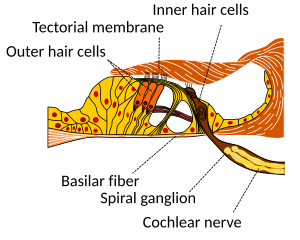

- 3. L-cones absorb yellow to red light. The maximum absorption wavelength is 565nm
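The three peak wavelengths listed above can be used in a toy model of how a single wavelength excites the three cone types. The sketch assumes that each sensitivity curve is a Gaussian centred on its peak with a common width of 40 nm. Both the Gaussian shape and the width are simplifying assumptions made here; real absorption curves are broader and asymmetric.

```python
import math

# Peak absorption wavelengths from the list above, in nanometres.
CONE_PEAKS_NM = {"S": 420.0, "M": 535.0, "L": 565.0}

def cone_responses(wavelength_nm, bandwidth_nm=40.0):
    """Relative response (0 to 1) of each cone type to monochromatic light.

    Gaussian curves with a shared bandwidth are an assumption, not measured data.
    """
    return {
        name: math.exp(-0.5 * ((wavelength_nm - peak) / bandwidth_nm) ** 2)
        for name, peak in CONE_PEAKS_NM.items()
    }

for wavelength in (450, 540, 600):
    responses = cone_responses(wavelength)
    strongest = max(responses, key=responses.get)
    summary = ", ".join(f"{name}={value:.2f}" for name, value in responses.items())
    print(f"{wavelength} nm: {summary} (strongest: {strongest})")
```

Even in this crude model, the M- and L-curves overlap strongly because their peaks are only 30nm apart, so colour has to be inferred from the ratio of the responses rather than from any single cone type.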