Digital Signal Processing/Printable version

| This is the print version of Digital Signal Processing You won't see this message or any elements not part of the book's content when you print or preview this page. |

The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/Digital_Signal_Processing

Introduction

Digital Signal Processing (DSP) is the new technological revolution.[edit | edit source]

Who is This Book For?[edit | edit source]

What Will This Book Cover?[edit | edit source]

How is This Book Arranged[edit | edit source]

Software tool

Digital Signal Processing is a field of study that combines both mathematical theory and physical implementation. It makes no sense to consider a digital system without asking oneself how it will be implemented. In the design and analysis phase, some general-purpose signal processing tools are available.

Matlab[edit | edit source]

MATLAB is an excellent (although expensive) tool for simulating systems, and for creating the ever-valuable "proof of concept". This book will make several references to MATLAB, but don't get confused: This book will not teach how to program in MATLAB. If you would like to learn MATLAB, check out the book MATLAB Programming.

There are other free alternatives to MATLAB, with varying degrees of code compatibility.

Octave[edit | edit source]

GNU Octave is a free and Open Source alternative to MATLAB. Octave can be obtained from http://www.octave.org. It endeavors to be MATLAB-compatible and largely it is (a lot of MATLAB code can be run using Octave, and vice versa), though some functionality is missing. For more information on Octave, see the Wikibook Octave Programming.

SciPy[edit | edit source]

SciPy is a Python-based set of libraries which allow to perform numeric calculations. As the preceding tools, it features a signal processing toolbox. Also, the python scripts can make use of matplotlib, a plotting library whose basic commands are very similar to MATLAB's.

Discrete Data

Continuous data is something that most people are familiar with. A quick analogy: when an analog data sensor (e.g., your eye) becomes active (you blink it open), it starts receiving input immediately (you see sun-shine), it converts the input (optical rays) to a desired output (optic nerve signals), and sends the data off to its destination (your brain). It does this without hesitation, and continues doing so until the sensor turns off (you blink your eyes closed). The output is often called a data "stream"; once started, it might run forever, unless something tells it to stop. Now, instead of a physical sensor, if we're able to define our data mathematically in terms of a continuous function, we can calculate our data value at any point along the data stream. It's important to realize that this provides the possibility of an infinite (∞) number of data points, no matter how small the interval might be between the start and stop limits of the data stream.

This brings us to the related concept of Discrete data. Discrete data is non-continuous, only existing at certain points along an input interval, and thereby giving us a finite number of data points to deal with. It is impossible to take the value of a discrete data set at a time point where there is no data.

The analogy with the vision would be the illumination with a stroboscope. The scene viewed consists of a series of images. As all information between two images is lost, the frequency of the stroboscopic illumination should be high enough not to miss the movements of a moving object. This data can also be defined by a mathematical function, but one that is limited and can only be evaluated at the discrete points of input. These are called "discrete functions" to distinguish them from the continuous variety.

Discrete functions and data give us the advantage of being able to deal with a finite number of data points.

Sets and Series[edit | edit source]

Discrete data is displayed in sets such as:

X[n] = [1 2 3& 4 5 6]

We will be using the "&" symbol to denote the data item that occurs at point zero. Now, by filling in values for n, we can select different values from our series:

X[0] = 3 X[-2] = 1 X[3] = 6

We can move the zero point anywhere in the set that we want. It is also important to note that we can pad a series with zeros on either side, so long as we keep track of the zero-point:

X[n] = [0 0 0 0 1 2 3& 4 5 6] = [1 2 3& 4 5 6]

In fact, we assume that any point in our series without an explicit value is equal to zero. So if we have the same set:

X[n] = [1 2 3& 4 5 6]

We know that every value outside of our given range is zero:

X[100] = 0 X[-100] = 0

Stem Plots[edit | edit source]

Discrete data is frequently represented with a stem plot. Stem plots mark data points with dots, and draw a vertical line between the t-axis (the horizontal time axis) and the dot:

F[n] = [5& 4 3 2 1]

About the Notation[edit | edit source]

The notation we use to denote the zero point of a discrete set was chosen arbitrarily. Textbooks on the subject will frequently use arrows, periods, or underscores to denote the zero position of a set. Here are some examples:

|

v

Y[n] = [1 2 3 4 5]

. Y[n] = [1 2 3 4 5]

_ Y[n] = [1 2 3 4 5]

All of these things are too tricky to write in wikibooks, so we have decided to use an ampersand (&) to denote the zeropoint. The ampersand is not used for any other purpose in this book, so hopefully we can avoid some confusion.

Sampling[edit | edit source]

Sampling is the process of converting continuous data into discrete data. The sampling process takes a snapshot of the value of a given input signal, rounds if necessary (for discrete-in-value systems), and outputs the discrete data. A common example of a sampler is an Analog to Digital Converter (ADC).

Let's say we have a function based on time (t). We will call this continuous-time function f(t):

Where u(t) is the unit step function. Now, if we want to sample this function, mathematically, we can plug in discrete values for t, and read the output. We will denote our sampled output as F[n]:

F[n] = 0 : n ≤ 0 F[1] = f(1) = 2 F[2] = f(2) = 4 F[100] = f(100) = 200

This means that our output series for F[n] is the following:

F[n] = [0& 2 4 6 8 10 12 ...]

Reconstruction[edit | edit source]

We digitize (sample) our signal, we do some magical digital signal processing on that signal, and then what do we do with the results? Frequently, we want to convert that digital result back into an analog signal. The problem is that the sampling process can't perfectly represent every signal. Specifically, the Nyquist-Shannon sampling theorem states that the largest frequency that can be perfectly reconstructed by a sampled signal with a sample rate of is . To give an example, audio CDs are sampled at a rate of 44100 samples per second. This means that the largest frequency that can be represented on an audio CD is Hz. Humans can hear frequencies up to around 20000 Hz. From this, we can conclude that the audio CD is well-suited to storing audio data for humans, at least from a sample rate standpoint. A device that converts from a digital representation to an analog one is called a Digital-to-Analog converter (DAC).

Discrete Operations

There are a number of different operations that can be performed on discrete data sets.

Arithmetic[edit | edit source]

Let's say that we have 2 data sets, A[n] and B[n]. We will define these two sets to have the following values:

A[n] = [W X& Y Z] B[n] = [I J& K L]

Arithmetic operations on discrete data sets are performed on an item-by-item basis. Here are some examples:

Addition[edit | edit source]

A[n] + B[n] = [(W+I) (X+J)& (Y+K) (Z+L)]

If the zero positions don't line up (like they conveniently do in our example), we need to manually line up the zero positions before we add.

Subtraction[edit | edit source]

A[n] - B[n] = [(W-I) (X-J)& (Y-K) (Z-L)]

Same as addition, only we subtract.

Multiplication[edit | edit source]

In this situation, we are using the "asterisk" (*) to denote multiplication. Realistically, we should use the "" symbol for multiplication. In later examples, we will use the asterisk for other operations.

A[n] * B[n] = [(W*I) (X*J)& (Y*K) (Z*L)]

Division[edit | edit source]

A[n] / B[n] = [(W/I) (X/J)& (Y/K) (Z/L)]

Even though we are using the square brackets for discrete data sets, they should NOT be confused with matrices. These are not matrices, and we do not perform matrix operations on these sets.

Time-Shifting[edit | edit source]

If we time-shift a discrete data set, we essentially are moving the zero-time reference point of the set. The zero point represents "now", creates the starting point of our view of the data, and the point's location is typically established by a need of the processing we're involved in.

Let's say we have the data set F[n] with the values:

F[n] = [1 2 3& 4 5 ]

Then we can shift this set backwards by 1 data point as such:

F[n-1] = [1 2& 3 4 5]

We can shift the data forward by 1 data point in a similar manner:

F[n+1] = [1 2 3 4& 5]

Discrete data values are time oriented. Values to the right of the zero point are called "future values", and values to the left are "past values". When the data set is populated on both sides of the zero reference, "future" and "past" are synthetic terms relative to the zero point "now", and don't refer to current physical time. In this context, the data values have already been collected and, as such, can only come from our past.

It is important to understand that a physically-realizable digital system that is still receiving data cannot perform operations on future values. It makes no sense to require a future value in order to make a calculation, because such systems are often getting input from a sensor in real-time, and future values simply don't exist.

Time Inversion[edit | edit source]

Let's say that we have the same data set, F[n]:

F[n] = [1 2 3& 4 5]

We can invert the data set as such:

F[-n] = [5 4 3& 2 1]

We keep the zero point in the same place, and we flip all the data items around. in essence, we are taking a mirror image of the data set, and the zero point is the mirror.

Convolution[edit | edit source]

Convolution is a much easier operation in the discrete time domain than in the continuous time domain. Let's say we have two data sets, A[n] and B[n]:

A[n] = [1& 0 1 2] B[n] = [2& 2 2 1]

We will denote convolution with the asterisk (*) in this section; it isn't "multiplication" here. Our convolution function is shown like this:

Y[n] = A[n] * B[n]

and it specifies that we will store our convolution values in the set named Y[n].

Convolution is performed by following a series of steps involving both sets of data points. First, we time invert one of the data sets. It doesn't matter which one, so we can pick the easiest choice:

A[n] = [1& 0 1 2] B[-n] = [1 2 2 2&]

Next, we line up the data vertically so that only one data item overlaps:

A[n] -> [1& 0 1 2] B[-n] -> [1 2 2 2&]

Now, we are going to zero-pad both sets, making them equal length, putting zeros in open positions as needed:

A[n] -> [0 0 0 1& 0 1 2] B[-n] -> [1 2 2 2& 0 0 0]

Now, we will multiply the contents of each column, and add them together:

A[n] -> [0 0 0 1& 0 1 2]

B[-n] -> [1 2 2 2& 0 0 0]

Y[m] =

This gives us the first data point: Y[m] = [2].

Next, we need to time shift B[-n] forward in time by one point, and do the same process: multiply the columns, and add:

A[n] -> [0 0 0 1& 0 1 2]

B[-n-1] -> [1 2 2 2& 0 0 0]

Y[m+1] =

Repeat the "time shift, multiply, and add" steps until no more data points overlap.

A[n] -> [0 0 0 1& 0 1 2]

B[-n-2] -> [1 2 2 2& 0 0 0]

Y[m+2] =

A[n] -> [0 0 0 1& 0 1 2]

B[-n-3] -> [1 2 2 2& 0 0 0]

Y[m+3] =

A[n] -> [0 0 0 1& 0 1 2]

B[-n-4] -> [1 2 2 2& 0 0 0]

Y[m+4] =

A[n] -> [0 0 0 1& 0 1 2]

B[-n] -> [1 2 2 2& 0 0 0]

Y[m+5] =

A[n] -> [0 0 0 1& 0 1 2]

B[-n+6] -> [1 2 2 2& 0 0 0]

Y[m+6] =

Now we have our full set of data points, Y[m]:

Y[m] = [2 2 4 7 6 5 2]

We have our values, but where do we put the zero point? It turns out that the zero point of the result occurs where the zero points of the two operands overlapped in our shift calculations. The result set becomes:

Y[n] = [2& 2 4 7 6 5 2]

It is important to note that the length of the result set is the sum of the lengths of the unpadded operands, minus 1. Or, if you prefer, the length of the result set is the same as either of the zero-padded sets (since they are equal length).

Discrete Operations in Matlab/Octave[edit | edit source]

These discrete operations can all be performed in Matlab, using special operators.

- Division and Multiplication

- Matlab is matrix-based, and therefore the normal multiplication and division operations will be the matrix operations, which are not what we want to do in DSP. To multiply items on a per-item basis, we need to prepend the operators with a period. For instance:

Y = X .* H %X times H Y = X ./ H %X divided by H

If we forget the period, Matlab will attempt a matrix operation, and will alert you that the dimensions of the matrixes are incompatible with the operator.

- Convolution

- The convolution operation can be performed with the conv command in Matlab. For instance, if we wanted to compute the convolution of X[n] and H[n], we would use the following Matlab code:

Y = conv(X, H);

or

Y = conv(H, X);

Difference Calculus[edit | edit source]

When working with discrete sets, you might wonder exactly how we would perform calculus in the discrete time domain. In fact, we should wonder if calculus as we know it is possible at all! It turns out that in the discrete time domain, we can use several techniques to approximate the calculus operations of differentiation and integration. We call these techniques Difference Calculus.

Derivatives[edit | edit source]

What is differentiation exactly? In the continuous time domain, the derivative of a function is the slope of the function at any given point in time. To find the derivative, then, of a discrete time signal, we need to find the slope of the discrete data set.

The data points in the discrete time domain can be treated as geometrical points, and we know that any two points define a unique line. We can then use the algebraic equation to solve for the slope, m, of the line:

Now, in the discrete time domain, f(t) is sampled and replaced by F[n]. We also know that in discrete time, the difference in time between any two points is exactly 1 unit of time! With this mind, we can plug these values into our above equation:

or simply:

We can then time-shift the entire equation one point to the left, so that our equation doesn't require any future values:

The derivative can be found by subtracting time-shifted (delayed) versions of the function from itself.

Difference Calculus Equations[edit | edit source]

Difference calculus equations are arithmetic equations, with a few key components. Let's take an example:

We can see in this equation that X[n-1] has a coefficient of 2, and X[n-2] has a coefficient of 5. In difference Calculus, the coefficients are known as "tap weights". We will see why they are called tap weights in later chapters about digital filters.

Digital Systems

Systems Introduction[edit | edit source]

Digital systems can be conceptually very difficult to understand at first. Let's start out with a block diagram:

We have a digital system, h[n], that is going to alter the input (x[n]) in some way, and produce an output (y[n]). At each integer value for discrete time, n, we will feed 1 value of x[n] into the machine, turn the crank, and create 1 output value for y[n].

Basic Example[edit | edit source]

Let's say that h[n] is a multiplier circuit with a value of 5. Every input value is multiplied by 5, and that is the output. In other words, we can show our difference calculus equation as such:

now, for each successive value for n, we can calculate our output value, y[n]. As an example, using the above difference equation, we can feed in an experimental input:

x[n] = [1 0 1 2]

And by multiplying each data item by 5, we get the following result:

y[n] = [5 0 5 10]

Properties of Digital systems[edit | edit source]

A loose definition of a causal system is a system in which the output does not change before the input. All real systems are causal, and it is impossible to create a system that is not causal.

Circuit Symbols[edit | edit source]

- wires

- pickoff nodes

- adders

- multipliers

Physically Realizeable Systems[edit | edit source]

There is a distinction to be made between systems which are possible mathematically, and systems that can be physically implemented in digital hardware. Some systems might look good on paper, but they are difficult, if not impossible, to actually build. One criterion we have already seen is that a physically realizable digital system must not rely on future values, only on past values. We will discuss other criteria in future chapters, as the concepts involved become more familiar.

Impulse Response

Let's say that we have the following block diagram:

h[n] is known as the 'Impulse Response of the digital system. We will talk about impulse responses here in this chapter.

Impulse Function[edit | edit source]

In digital circuits, we use a variant of the continuous-time delta function. This delta function (δ[n]) has a value of 1 at n = 0, and a value of 0 everywhere else.

δ[n] = [... 0 0 0 0 1& 0 0 0 0 ...]

If we set x[n] = δ[n], then the output y[n] will be the response of the system to an impulse, or simply, the "Impulse Response".

We can time-shift the impulse function as such:

δ[n-1] = [... 0 0 0 0& 1 0 0 0 0 ...] δ[n+1] = [... 0 0 0 0 1 0& 0 0 0 ...]

We can add time-shifted values of the impulse function to create an Impulse Train:

y[n] = δ[n] + δ[n-1] + δ[n-2] + [n-4] y[n] = [1& 1 1 0 1]

Impulse Trains[edit | edit source]

Impulse Response[edit | edit source]

If we have a difference equation relating y[n] to x[n], we can find the impulse response difference equation by replacing every y with an h, and every x with a δ:

y[n] = 2x[n] + 3x[n-1] + 4x[n-2] h[n] = 2δ[n] + 3δ[n-1] + 4δ[n-2]

And by plugging in successive values for n, we can calculate the impulse response to be:

h[n] = [2& 3 4]

Output[edit | edit source]

Now, let's say that we have a given impulse response, h[n], and we have a given input x[n] as such:

x[n] = [1& 0 1 2] h[n] = [2& 2 2 1]

We can calculate the output, y[n], as the convolution of those 2 quantities:

x[n] -> 1& 0 1 2 h[-n] -> 1 2 2 2& h[-n-3] -> 1 2 2 2& -> y[m-3] = 2& h[-n-2] -> 1 2 2 2& -> y[m-2] = 2 h[-n-1] -> 1 2 2 2& -> y[m-1] = 4 h[-n] -> 1 2 2 2& -> y[m] = 7 h[-n+1] -> 1 2 2 2& -> y[m+1] = 6 h[-n+2] -> 1 2 2 2& -> y[m+2] = 5 h[-n+3] -> 1 2 2 2& -> y[m+3] = 2 y[n] = [2& 2 4 7 6 5 2]

Sampling and Reconstruction

digital pro{sampling}[edit | edit source]

Sampling is the process of recording the values of a signal at given points in time. For A/D converters, these points in time are equidistant. The number of samples taken during one second is called the sample rate. Keep in mind that these samples are still analogue values. The mathematic description of the ideal sampling is the multiplication of the signal with a sequence of direct pulses. In real A/D converters the sampling is carried out by a sample-and-hold buffer. The sample-and-hold buffer splits the sample period in a sample time and a hold time. In case of a voltage being sampled, a capacitor is switched to the input line during the sample time. During the hold time it is detached from the line and keeps its voltage.

Quantization[edit | edit source]

Quantization is the process of representing the analog voltage from the sample-and-hold circuit by a fixed number of bits. The input analog voltage (or current) is compared to a set of pre-defined voltage (or current) levels. Each of the levels is represented by a unique binary number, and the binary number that corresponds to the level that is closest to the analog voltage is chosen to represent that sample. This process rounds the analog voltage to the nearest level, which means that the digital representation is an approximation to the analog voltage. There are a few methods for quantizing samples. The most commonly used ones are the dual slope method and the successive approximation.

Dual Slope[edit | edit source]

Successive Approximation[edit | edit source]

Reconstruction[edit | edit source]

Reconstruction is the process of creating an analog voltage (or current) from samples. A digital-to-analog converter takes a series of binary numbers and recreates the voltage (or current) levels that corresponds to that binary number. Then this signal is filtered by a lowpass filter. This process is analogous to interpolating between points on a graph, but it can be shown that under certain conditions the original analog signal can be reconstructed exactly from its samples. Unfortunately, the conditions for exact reconstruction cannot be achieved in practice, and so in practice the reconstruction is an approximation to the original analog signal.

Aliasing[edit | edit source]

Aliasing is a common problem in digital media processing applications. Many readers have heard of "anti-aliasing" features in high-quality video cards. This page will explain what Aliasing is, and how it can be avoided.

Aliasing is an effect of violating the Nyquist-Shannon sampling theory. During sampling the base band spectrum of the sampled signal is mirrored to every multifold of the sampling frequency. These mirrored spectra are called alias. If the signal spectrum reaches farther than half the sampling frequency base band spectrum and aliases touch each other and the base band spectrum gets superimposed by the first alias spectrum. The easiest way to prevent aliasing is the application of a steep sloped low-pass filter with half the sampling frequency before the conversion. Aliasing can be avoided by keeping Fs>2Fmax.

Nyquist Sampling Rate[edit | edit source]

The Nyquist Sampling Rate is the lowest sampling rate that can be used without having aliasing. The sampling rate for an analog signal must be at least two times the bandwidth of the signal. So, for example, an audio signal with a bandwidth of 20 kHz must be sampled at least at 40 kHz to avoid aliasing. In audio CD's, the sampling rate is 44.1 kHz, which is about 10% higher than the Nyquist Sampling Rate to allow cheaper reconstruction filters to be used. The Nyquist Sampling Rate is the lowest sampling rate that can be used without having aliasing.

Anti-Aliasing[edit | edit source]

The sampling rate for an analog signal must be at least two times as high as the highest frequency in the analog signal in order to avoid aliasing. Conversely, for a fixed sampling rate, the highest frequency in the analog signal can be no higher than a half of the sampling rate. Any part of the signal or noise that is higher than a half of the sampling rate will cause aliasing. In order to avoid this problem, the analog signal is usually filtered by a low pass filter prior to being sampled, and this filter is called an anti-aliasing filter. Sometimes the reconstruction filter after a digital-to-analog converter is also called an anti-aliasing filter.

Converters[edit | edit source]

As a matter of professional interest, we will use this page to briefly discuss Analog-to-Digital (A2D) and Digital-to-Analog (D2A) converters, and how they are related to the field of digital signal processing. Strictly speaking, this page is not important to the core field of DSP, so it can be skipped at the reader's leisure.

A2D converters are the bread and butter of DSP systems. A2D converters change analog electrical data into digital signals through a process called sampling.

D2A converters attempt to create an analog output waveform from a digital signal. However, it is nearly impossible to create a smooth output curve in this manner, so the output waveform is going to have a wide bandwidth, and will have jagged edges. Some techniques, such as interpolation can be used to improve these output waveforms.

Continuous-Time Fourier Transform

The Continuous-Time Fourier Transform (CTFT) is the version of the fourier transform that is most common, and is the only fourier transform so far discussed in EE wikibooks such as Signals and Systems, or Communication Systems. This transform is mentioned here as a stepping stone for further discussions of the Discrete-Time Fourier Transform (DTFT), and the Discrete Fourier Transform (DFT). The CTFT itself is not useful in digital signal processing applications, so it will not appear much in the rest of this book

CTFT Definition[edit | edit source]

The CTFT is defined as such:

[CTFT]

CTFT Use[edit | edit source]

Frequency Domain[edit | edit source]

Convolution Theorem[edit | edit source]

Multiplication in the time domain becomes convolution in the frequency domain. Convolution in the time domain becomes multiplication in the frequency domain. This is an example of the property of duality. The convolution theorem is a very important theorem, and every transform has a version of it, so it is a good idea to become familiar with it now (if you aren't previously familiar with it).

Further reading[edit | edit source]

For more information about the Fourier Transform, see Signals and Systems.

Discrete-Time Fourier Transform

The Discrete-Time Fourier Transform (DTFT) is the cornerstone of all DSP, because it tells us that from a discrete set of samples of a continuous function, we can create a periodic summation of that function's Fourier transform. At the very least, we can recreate an approximation of the actual transform and its inverse, the original continuous function. And under certain idealized conditions, we can recreate the original function with no distortion at all. That famous theorem is called the Nyquist-Shannon sampling theorem.

DTFT[edit | edit source]

[DTFT]

The resulting function, is a continuous function that is interesting for analysis. It can be used in programs, such as Matlab, to design filters and obtain the corresponding time-domain filter values.

DTFT Convolution Theorem[edit | edit source]

Like the CTFT, the DTFT has a convolution theorem associated with it. However, since the DTFT results in discrete-frequency values, the convolution theorem needs to be modified as such:

- DTFT Convolution Theorem

- Multiplication in the continuous time domain becomes discrete convolution in the discrete frequency domain. Convolution in the continuous time domain becomes multiplication in the discrete frequency domain.

Energy[edit | edit source]

It is sometimes helpful to calculate the amount of energy that exists in a certain set. These calculations are based off the assumption that the different values in a set are voltage values, however this doesn't necessarily need to be the case to employ these operations.

We can show that the energy of a given set can be given by the following equation:

Energy in Frequency[edit | edit source]

Likewise, we can make a formula that represents the power in the continuous-frequency output of the DTFT:

Parseval's Theorem[edit | edit source]

Parseval's theorem states that the energy amounts found in the time domain must be equal to the energy amounts found in the frequency domain:

Power Density Spectrum[edit | edit source]

We can define the power density spectrum of the continuous-time frequency output of the DTFT as follows:

The area under the power density spectrum curve is the total energy of the signal.

Discrete Fourier Transform

As the name implies, the Discrete Fourier Transform (DFT) is purely discrete: discrete-time data sets are converted into a discrete-frequency representation. This is in contrast to the DTFT that uses discrete time, but converts to continuous frequency. Since the resulting frequency information is discrete in nature, it is very common for computers to use DFT(Discrete fourier Transform) calculations when frequency information is needed.

Using a series of mathematical tricks and generalizations, there is an algorithm for computing the DFT that is very fast on modern computers. This algorithm is known as the Fast Fourier Transform (FFT), and produces the same results as the normal DFT, in a fraction of the computational time as ordinary DFT calculations. The FFT will be discussed in detail in later chapters.

DFT[edit | edit source]

The Discrete Fourier Transform is a numerical variant of the Fourier Transform. Specifically, given a vector of n input amplitudes such as {f0, f1, f2, ... , fn-2, fn-1}, the Discrete Fourier Transform yields a set of n frequency magnitudes.

The DFT is defined as such:

here, k is used to denote the frequency domain ordinal, and n is used to represent the time-domain ordinal. The big "N" is the length of the sequence to be transformed.

The Inverse DFT (IDFT) is given by the following equation:

Where is defined as:

Again, "N" is the length of the transformed sequence.

Matrix Calculations[edit | edit source]

The DFT can be calculated much more easily using a matrix equation:

The little "x" is the time domain sequence arranged as a Nx1 vertical vector. The big "X" is the resulting frequency information, that will be arranged as a vertical vector (as per the rules of matrix multiplication). The "DN" term is a matrix defined as such:

In a similar way, the IDFT can be calculated using matrices as follows:

And we will define as the following:

Visualization of the Discrete Fourier Transform[edit | edit source]

It may be easily seen that the term

represents a unit vector in the complex plane, for any value of j and k. The angle of the vector is initially 0 radians (along the real axis) for or . As j and k increase, the angle is increased in units of 1/nth of a circle. The total angle therefore becomes radians.

To understand the meaning of the Discrete Fourier Transform, it becomes effective to write the transform in matrix form, depicting the complex terms pictorally.

For example, with , the fourier transform can be written:

The top row (or the left-hand column) changes not at all; therefore, F0 is the resultant when the amplitudes augment each other. The second row changes by 1/nth of a circle, going from left to right. The third row changes by 2/nths of a circle; the fourth row changes by 3/nths of a circle; the fifth row, here, changes by 4/nths = 4/8 = 1/2 a circle. Therefore, F4 is the resultant when the even terms are set at odds with the odd terms.

We therefore see from the square matrix that the DFT is the resultant of all 2-D vectoral combinations of the input amplitudes.

Relation to DTFT[edit | edit source]

The DFT and the DTFT are related to each other in a very simple manner. If we take the DTFT of a given time sequence, x[n], we will get a continuous-frequency function called . If we sample at N equally-spaced locations, the result will be the DFT, X[k]

Circular Time Shifting[edit | edit source]

Circular Time Shifting is very similar to regular, linear time shifting, except that as the items are shifted past a certain point, they are looped around to the other end of the sequence. This subject may seem like a bit of a tangent, but the importance of this topic will become apparent when we discuss the Circular Convolution operation in the next chapter.

Let's say we have a given sequence, x[n], as such:

x[n] = [1& 2 3 4]

As we know, we can time-shift this sequence to the left or the right by subtracting or adding numbers to the argument:

x[n+1] = [1 2& 3 4 0]

x[n-1] = [0& 1 2 3 4]

Here, we've padded both sequences with zeros to make it obvious where the next item is coming in from. Now, let's say that when we shift a set, instead of padding with zeros, we loop the first number around to fill the hole. We will use the notation x[<n+A>] to denote an A-point circular shift:

x[<n+1>] = [2& 3 4 1]

Notice how the length of the sequence started as 4, and ended as 4, and we didn't need to pad with zeros. Also notice that the 1 "fell off" the left side of the set, and "reappeared" on the right side. It's as if the set is a circle, and objects that rotate off one direction will come back from the other direction. Likewise, let us rotate in the other direction:

x[<n-1>] = [4& 1 2 3]

If we rotate around by N points, where N is the length of the set, we get an important identity:

x[<n+N>] = x[<n-N>] = x[n]

Time Inversion[edit | edit source]

Now, let's look at a more complicated case: mixing circular shifting with time inversion. Let's define our example set x[n]:

x[n] = [1& 2 3 4]

we can define our time-inverted set as such:

x[n] = [4 3 2 1&]

notice that the "4" went from n=3 to n=-3, but the "1" stayed at the zero point. Now, let's try to combine this with our circular shift operation, to produce a result x[<-n>]. We know that the "1" (in the zero position) has not moved in either direction, nor have we shifted to the left or right, because we aren't adding or subtracting anything to our argument (n). So we can put 1 back in the same place as it was. All the rest of the numbers (2 3 and 4) all shifted to the right of the sequence, and therefore we will loop them around to the other side:

x[<-n>] = [1& 4 3 2]

Here is a general rule for circular time-inversion:

Circular Convolution[edit | edit source]

Circular Convolution is an important operation to learn, because it plays an important role in using the DFT.

Let's say we have 2 discrete sequences both of length N, x[n] and h[n]. We can define a circular convolution operation as such:

notice how we are using a circular time-shifting operation, instead of the linear time-shift used in regular, linear convolution.

Circular Convolution Theorem[edit | edit source]

The DFT has certain properties that make it incompatible with the regular convolution theorem. However, the DFT does have a similar relation with the Circular Convolution operation that is very helpful. We call this relation the Circular Convolution Theorem, and we state it as such:

- Circular Convolution Theorem

- Multiplication in the discrete-time domain becomes circular convolution in the discrete-frequency domain. Circular convolution in the discrete-time domain becomes multiplication in the discrete-frequency domain.

Relation to Linear Convolution[edit | edit source]

Circular Convolution is related to linear convolution, and we can use the circular convolution operation to compute the linear convolution result. Let's say we have 2 sets, x[n] and h[n], both of length N:

- pad both sets with N-1 zeros on the right side.

- perform the circular convolution on the new sets of length N+(N-1)

- The result is the linear convolution result of the original two sets.

Fast Fourier Transform (FFT)[edit | edit source]

The Fast Fourier Transform refers to algorithms that compute the DFT in a numerically efficient manner.Algorithms like:-decimation in time and decimation in frequency. This is a algorithm for computing the DFT that is very fast on modern computers.

Z Transform

The Z Transform has a strong relationship to the DTFT, and is incredibly useful in transforming, analyzing, and manipulating discrete calculus equations. The Z transform is named such because the letter 'z' (a lower-case Z) is used as the transformation variable.

z Transform Definition[edit | edit source]

For a given sequence x[n], we can define the z-transform X(z) as such:

[Z Transform]

- it is important to note that z is a continuous complex variable defined as such:

- where is the imaginary unit.

There can be several sequences which will generate the same z-transform with the different functions being differentiated by the convergence region of for which the summation in the z-transform will converge. These convergence regions are annuli centered at the orgin. In a given convergence region, only one will converge to a given .

Example 1[edit | edit source]

- for

Example 2[edit | edit source]

- for

Note that both examples have the same function as their z-transforms but with different convergence regions needed for the respective infinite summations in their z-transforms to converge. Many textbooks on z-transforms are only concerned with so-called right-handed functions which is to say functions for which for all less than some initial start point ; that is, for all . So long as the function grows at most exponentially after the start point, the z-transform of these so-called right-handed functions will converge in an open annulus going to infinity, for some positive real .

It is important to note that the z-transform rarely needs to be computed manually, because many common results have already been tabulated extensively in tables, and control system software includes it (MatLab,Octave,SciLab).

The z-transform is actually a special case of the so-called Laurent series, which is a special case of the commonly used Taylor series.

The Inverse Z-Transform[edit | edit source]

The inverse z-transform can be defined as such:

[Inverse Z Transform]

Where C is a closed-contour that lies inside the unit circle on the z-plane, and encircles the point z = {0, 0}.

The inverse z-transform is mathematically very complicated, but luckily—like the z-transform itself—the results are extensively tabulated in tables.

Equivalence to DTFT[edit | edit source]

If we substitute , where is the frequency in radians per second, into the Z-transform, we get

which is equivalent to the definition of the Discrete-Time Fourier Transform. In other words, to convert from the Z-transform to the DTFT, we need to evaluate the Z-transform around the unit circle.

Properties[edit | edit source]

Since the z-transform is equivalent to the DTFT, the z-transform has many of the same properties. Specifically, the z-transform has the property of duality, and it also has a version of the convolution theorem (discussed later).

The z-transform is a linear operator.

Convolution Theorem[edit | edit source]

Since the Z-transform is equivalent to the DTFT, it also has a convolution theorem that is worth stating explicitly:

- Convolution Theorem

- Multiplication in the discrete-time domain becomes convolution in the z-domain. Multiplication in the z-domain becomes convolution in the discrete-time domain.

Y(s)=X(s).H(s)

Z-Plane[edit | edit source]

Since the variable z is a continuous, complex variable, we can map the z variable to a complex plane as such:

Transfer Function[edit | edit source]

Let's say we have a system with an input/output relationship defined as such:

Y(z) = H(z)X(z)

We can define the transfer function of the system as being the term H(z). If we have a basic transfer function, we can break it down into parts:

Where H(z) is the transfer function, N(z) is the numerator of H(z) and D(z) is the denominator of H(z). If we set N(z)=0, the solutions to that equation are called the zeros of the transfer function. If we set D(z)=0, the solutions to that equation are called the poles of the transfer function.

The poles of the transfer function amplify the frequency response while the zero's attenuate it. This is important because when you design a filter you can place poles and zero's on the unit circle and quickly evaluate your filters frequency response.

Example[edit | edit source]

Here is an example:

So by dividing through by X(z), we can show that the transfer function is defined as such:

We can also find the D(z) and N(z) equations as such:

And from those equations, we can find the poles and zeros:

- Zeros

- z → 0

- Poles

- z → -1/2

Stability[edit | edit source]

It can be shown that for any causal system with a transfer function H(z), all the poles of H(z) must lie within the unit-circle on the z-plane for the system to be stable. Zeros of the transfer function may lie inside or outside the circle. See Control Systems/Jurys Test.

Gain[edit | edit source]

Gain is the factor by which the output magnitude is different from the input magnitude. If the input magnitude is the same as the output magnitude at a given frequency, the filter is said to have "unity gain".

Properties[edit | edit source]

Here is a listing of the most common properties of the Z transform.

| Time domain | Z-domain | ROC | |

|---|---|---|---|

| Notation | ROC: | ||

| Linearity | At least the intersection of ROC1 and ROC2 | ||

| Time shifting | ROC, except if and if | ||

| Scaling in the z-domain | |||

| Time reversal | |||

| Conjugation | ROC | ||

| Real part | ROC | ||

| Imaginary part | ROC | ||

| Differentiation | ROC | ||

| Convolution | At least the intersection of ROC1 and ROC2 | ||

| Correlation | At least the intersection of ROC of X1(z) and X2() | ||

| Multiplication | At least | ||

| Parseval's relation |

- Initial value theorem

- , If causal

- Final value theorem

- , Only if poles of are inside unit circle

Phase Shift[edit | edit source]

Further reading[edit | edit source]

Hilbert Transform

Definition[edit | edit source]

The Hilbert transform is used to generate a complex signal from a real signal. The Hilbert transform is characterized by the impulse response:

The Hilbert Transform of a function x(t) is the convolution of x(t) with the function h(t), above. The Hilbert Transform is defined as such:

[Hilbert Transform]

We use the notation to denote the Hilbert transformation of x(t).

Fast Fourier Transform (FFT) Algorithm

The Fourier Transform (FFT) is an algorithm used to calculate a Discrete Fourier Transform (DFT) using a smaller number of multiplications.

Cooley–Tukey algorithm[edit | edit source]

The Cooley–Tukey algorithm is the most common fast Fourier transform (FFT) algorithm. It breaks down a larger DFT into smaller DFTs. The best known use of the Cooley–Tukey algorithm is to divide a N point transform into two N/2 point transforms, and is therefore limited to power-of-two sizes.

The algorithm can be implemented in two different ways, using the so-called:

- Decimation In Time (DIT)

- Decimation In Frequency (DIF)

Circuit diagram[edit | edit source]

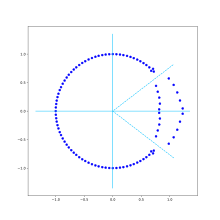

The following picture represents a 16-point DIF FFT circuit diagram:

In the picture, the coefficients are given by:

where N is the number of points of the FFT. They are evenly spaced on the lower half of the unit circle and .

Test script[edit | edit source]

The following python script allows to test the algorithm illustrated above:

#!/usr/bin/python3

import math

import cmath

# ------------------------------------------------------------------------------

# Parameters

#

coefficientBitNb = 8 # 0 for working with reals

# ------------------------------------------------------------------------------

# Functions

#

# ..............................................................................

def bit_reversed(n, bit_nb):

binary_number = bin(n)

reverse_binary_number = binary_number[-1:1:-1]

reverse_binary_number = reverse_binary_number + \

(bit_nb - len(reverse_binary_number))*'0'

reverse_number = int(reverse_binary_number, bit_nb-1)

return(reverse_number)

# ..............................................................................

def fft(x):

j = complex(0, 1)

# sizes

point_nb = len(x)

stage_nb = int(math.log2(point_nb))

# vectors

stored = x.copy()

for index in range(point_nb):

stored[index] = complex(stored[index], 0)

calculated = [complex(0, 0)] * point_nb

# coefficients

coefficients = [complex(0, 0)] * (point_nb//2)

for index in range(len(coefficients)):

coefficients[index] = cmath.exp(-j * index/point_nb * 2*cmath.pi)

coefficients[index] = coefficients[index] * 2**coefficientBitNb

# loop

for stage_index in range(stage_nb):

# print([stored[i].real for i in range(point_nb)])

index_offset = 2**(stage_nb-stage_index-1)

# butterfly additions

for vector_index in range(point_nb):

isEven = (vector_index // index_offset) % 2 == 0

if isEven:

operand_a = stored[vector_index]

operand_b = stored[vector_index + index_offset]

else:

operand_a = - stored[vector_index]

operand_b = stored[vector_index - index_offset]

calculated[vector_index] = operand_a + operand_b

# coefficient multiplications

for vector_index in range(point_nb):

isEven = (vector_index // index_offset) % 2 == 0

if not isEven:

coefficient_index = (vector_index % index_offset) \

* (stage_index+1)

# print( \

# "(%d, %d) -> %d" \

# % (stage_index, vector_index, coefficient_index) \

# )

calculated[vector_index] = \

coefficients[coefficient_index] * calculated[vector_index] \

/ 2**coefficientBitNb

if coefficientBitNb > 0:

calculated[vector_index] = complex( \

math.floor(calculated[vector_index].real), \

math.floor(calculated[vector_index].imag) \

)

# storage

stored = calculated.copy()

# reorder results

for index in range(point_nb):

calculated[bit_reversed(index, stage_nb)] = stored[index]

return calculated

# ------------------------------------------------------------------------------

# Main program

#

source = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]

transformed = fft(source)

amplitude = [0.0] * len(source)

amplitude_print = ''

for index in range(len(transformed)):

amplitude[index] = abs(transformed[index])

amplitude_print = amplitude_print + "%5.3f " % amplitude[index]

print()

print(amplitude_print)

It has a special parameter, coefficientBitNb, which allows to determine the calculation results

for a circuit using only integer numbers.

Digital Filters

Digital Filters can be very complicated devices, but they must be able to map to the difference equations of the filter design. This means that since difference equations only have a limited number of operations available (addition and multiplication), digital filters only have limited operations that they need to handle as well. There are only a handful of basic components to a digital filter, although these few components can be arranged in complex ways to make complicated filters.

Digital Filter Types[edit | edit source]

There are two types of filters in the digital realm: Finite Impulse Response (FIR) filters and Infinite Impulse Response (IIR) filters. They are very different in essence.

FIR Filters[edit | edit source]

FIR filters are specific to sampled systems. There is no equivalent in continuous-time systems. Therefore, only very specific analog filters are capable of implementing an FIR filter.

If we define the discrete time impulse function as

the response of an FIR filter to δ[n], denoted as h[n], will decay to zero after a finite number of samples. The transfer function of an FIR filter contains only zeros and either no poles or poles only at the origin.

An FIR filter with symmetric coefficients is guaranteed to provide a linear phase response, which can be very important in some applications. However, FIR filters suffer from low efficiency, and creating an FIR to meet a given spec requires much more hardware than an equivalent IIR filter.

IIR Filters (infinite impulse response filter)[edit | edit source]

IIR filters are typically designed basing on continuous-time transfer functions. IIR filters differ from FIR filters because they always contain feedback elements in the circuit, which can make the transfer functions more complicated to work with.

The transfer function of an IIR filter contains both poles and zeros. Its impulse response never decays to zero (though it may get so close to zero that the response cannot be represented with the number of bits available in the system).

IIR filters provide extraordinary benefits in terms of computing: IIR filters are more than an order of magnitude more efficient than an equivalent FIR filter. Even though FIR is easier to design, IIR will do the same work with fewer components, and fewer components translate directly to less money.

Filtering a time-series in Octave/Sciplot[edit | edit source]

First create some points for a time series. In this case we'll create one second of random data sampled at 44 kHz.

sampling_t = 1/44000; t = 0:sampling_t:1; x = rand(size(t));

Have a look at the time series

plot(t,x);

Have a look at its spectrum (it is mostly uniform, what we would expect from noise)

specgram(x)

Now we'll use a built-in function to create a third order Butterworth low-pass filter with cutoff frequency pi*0.1 radians

[b,a] = butter(3,0.1)

Now filter the time series.

y = filter(b,a,x);

Have a look at the first 100 points of the filtered data.

hold on plot(y(1:100)) plot(x(1:100)) hold off

Check its spectrogram

specgram(y)

Now look at the magnitude of the FFT

plot(log(abs(fft(y))))

Filter response Types[edit | edit source]

High-pass and Low-pass[edit | edit source]

High-Pass and Low-Pass filters are the simplest forms of digital filters, and they are relatively easy to design to specifications. This page will discuss high-pass and low-pass transfer functions, and the implementations of each as FIR and IIR designs.

Band-pass[edit | edit source]

Band-pass Filters are like a combination of a high-pass and a low-pass filter. Only specific bands are allowed to pass through the filter. Frequencies that are too high or too low will be rejected by the filter.

Stop-band[edit | edit source]

Notch filters are the complement of Band-pass filters in that they only stop a certain narrow band of frequency information, and allow all other data to pass without problem.

Notch[edit | edit source]

The direct complement of a bandpass filter is called a bandstop filter. A notch filter is essentially a very narrow bandstop filter.

Comb Filters[edit | edit source]

Comb Filters, as their name implies, look like a hair comb. They have many "teeth", which in essence are notches in the transfer function where information is removed. These notches are spaced evenly across the spectrum, so they are only useful for removing noise that appear at regular frequency intervals.

All-pass[edit | edit source]

The All-pass filter is a filter that has a unity magnitude response for all frequencies. It does not affect the gain response of a system. The All-pass filter does affect the phase response of the system. For example, if an IIR filter is designed to meet a prescribed magnitude response often the phase response of the system will become non-linear. To correct for this non-linearity an All-pass filter is cascaded with the IIR filter so that the overall response(IIR+All-pass) has a constant group delay.

Canonic and Non-Canonic[edit | edit source]

Canonic filters are filters where the order of the transfer function matches the number of delay units in the filter. Conversely, Noncanonic filters are when the filter has more delay units than the order of the transfer function. In general, FIR filters are canonic. Some IIR filter implementations are noncanonic.

Here is an example of a canonical, 2nd order filter:

Here is an example of a non-canonical 2nd order filter:

Notice that in the canonical filter, the system order (here: 2) equals the number of delay units in the filter (2). In the non-canonical version, the system order is not equal to the number of delays (4 delay units). Both filters perform the same task. The canonical version results in smaller digital hardware, because it uses fewer delay units. However, this drawback nearly disappears if several second order IIRs are cascaded, as they can share delay elements. In this case, the only "extra" delay elements are those on the input side of the first section.

Notations[edit | edit source]

There are some basic notations that we will be using:

- ωp Pass-band cut off frequency

- ωs Stop-band cut off frequency

- δp Pass-band ripple peak magnitude

- δs Stop-band ripple peak magnitude

FIR Filter Design

Filter design[edit | edit source]

The design procedure most frequently starts from the desired transfer function amplitude. The inverse Laplace transform provides the impulse response in the form of sampled values.

The length of the impulse response is also called the filter order.

Ideal lowpass filter[edit | edit source]

The ideal (brickwall or sinc filter) lowpass filter has an impulse response in the form of a sinc function:

This function is of infinite length. As such it can not be implemented in practice. Yet it can be approximated via truncation. The corresponding transfer function ripples on the sides of the cutoff frequency transition step. This is known as the Gibbs phenomenon.

Window design method[edit | edit source]

In order to smooth-out the transfer function ripples close to the cutoff frequency, one can replace the brickwall shape by a continuous function such as the Hamming, Hanning, Blackman and further shapes. These functions are also called window functions and are also used for smoothing a set of samples before processing it.

The following code illustrates the design of a Hamming window FIR:

#!/usr/bin/python3

import math

import numpy as np

import scipy.signal as sig

import matplotlib.pyplot as plt

# ------------------------------------------------------------------------------

# Constants

#

# filter

signal_bit_nb = 16

coefficient_bit_nb = 16

sampling_rate = 48E3

cutoff_frequency = 5E3

filter_order = 100

# time signal

input_signal_duration = 10E-3

frequency_1 = 1E3

amplitude_1 = 0.5

frequency_2 = 8E3

amplitude_2 = 0.25

# display

plot_time_signals = False

plot_transfer_function = False

plot_zeros = True

input_input_signal_color = 'blue'

filtered_input_signal_color = 'red'

transfer_function_amplitude_color = 'blue'

transfer_function_phase_color = 'red'

locus_axes_color = 'deepskyblue'

locus_zeroes_color = 'blue'

locus_cutoff_frequency_color = 'deepskyblue'

#-------------------------------------------------------------------------------

# Filter design

#

Nyquist_rate = sampling_rate / 2

sampling_period = 1/sampling_rate

coefficient_amplitude = 2**(coefficient_bit_nb-1)

FIR_coefficients = sig.firwin(filter_order, cutoff_frequency/Nyquist_rate)

FIR_coefficients = np.round(coefficient_amplitude * FIR_coefficients) \

/ coefficient_amplitude

transfer_function = sig.TransferFunction(

FIR_coefficients, [1],

dt=sampling_period

)

# ------------------------------------------------------------------------------

# Time filtering

#

sample_nb = round(input_signal_duration * sampling_rate)

# time signal

time = np.arange(sample_nb) / sampling_rate

# input signal

input_signal = amplitude_1 * np.sin(2*np.pi*frequency_1*time) \

+ amplitude_2*np.sin(2*np.pi*frequency_2*time)

input_signal = np.round(2**(signal_bit_nb-1) * input_signal)

# filtered signal

filtered_input_signal = sig.lfilter(FIR_coefficients, 1.0, input_signal)

# plot signals

if plot_time_signals :

x_ticks_range = np.arange(

0, (sample_nb+1)/sampling_rate, sample_nb/sampling_rate/10

)

y_ticks_range = np.arange(

-2**(signal_bit_nb-1), 2**(signal_bit_nb-1)+1, 2**(signal_bit_nb-4)

)

plt.figure("Time signals", figsize=(12, 9))

plt.subplot(2, 1, 1)

plt.title('input signal')

plt.step(time, input_signal, input_input_signal_color)

plt.xticks(x_ticks_range)

plt.yticks(y_ticks_range)

plt.grid()

plt.subplot(2, 1, 2)

plt.title('filtered signal')

plt.step(time, filtered_input_signal, filtered_input_signal_color)

plt.xticks(x_ticks_range)

plt.yticks(y_ticks_range)

plt.grid()

#-------------------------------------------------------------------------------

# Transfer function

#

# transfer function

(w, amplitude, phase) = transfer_function.bode(n=filter_order)

frequency = w / (2*np.pi)

# plot transfer function

if plot_transfer_function :

amplitude = np.clip(amplitude, -6*signal_bit_nb, 6)

log_range = np.arange(1, 10)

x_ticks_range = np.concatenate((1E2*log_range, 1E3*log_range, [2E4]))

amplitude_y_ticks_range = np.concatenate((

[-6*signal_bit_nb],

np.arange(-20*math.floor(6*signal_bit_nb/20), 1, 20)

))

phase_y_ticks_range = np.concatenate((

1E1*log_range, 1E2*log_range, 1E3*log_range

))

plt.figure("Transfer function", figsize=(12, 9))

plt.subplot(2, 1, 1)

plt.title('amplitude')

plt.semilogx(frequency, amplitude, transfer_function_amplitude_color)

plt.xticks(x_ticks_range)

plt.yticks(amplitude_y_ticks_range)

plt.grid()

plt.subplot(2, 1, 2)

plt.title('phase')

plt.loglog(frequency, phase, transfer_function_phase_color)

plt.xticks(x_ticks_range)

plt.yticks(phase_y_ticks_range)

plt.grid()

#-------------------------------------------------------------------------------

# Zeros

#

(zeroes, poles, gain) = sig.tf2zpk(FIR_coefficients, [1])

#print(zeroes)

# plot location of zeroes

if plot_zeros :

max_amplitude = 1.1 * max(abs(zeroes))

cutoff_angle = cutoff_frequency/sampling_rate*2*math.pi

cutoff_x = max_amplitude * math.cos(cutoff_angle)

cutoff_y = max_amplitude * math.sin(cutoff_angle)

plt.figure("Zeros", figsize=(9, 9))

plt.plot([-max_amplitude, max_amplitude], [0, 0], locus_axes_color)

plt.plot([0, 0], [-max_amplitude, max_amplitude], locus_axes_color)

plt.scatter(

np.real(zeroes), np.imag(zeroes),

marker='o', c=locus_zeroes_color)

plt.plot(

[cutoff_x, 0, cutoff_x], [-cutoff_y, 0, cutoff_y],

locus_cutoff_frequency_color, linestyle='dashed'

)

plt.axis('square')

#-------------------------------------------------------------------------------

# Show plots

#

plt.show()

Filter structure[edit | edit source]

A Finite Impulse Response (FIR) filter's output is given by the following sequence:

The following figure shows the direct implementation of the above formula, for a 4th order filter (N = 4):

With this structure, the filter coefficients are equal to the sample values of the impulse response.

IIR Filter Design

IIR filters are typically designed basing on continuous-time filter functions. Once the transfer function has been chosen, different filter structures allow to implement the filter, be it in hardware or in software.

Transfer function[edit | edit source]

The classical design technique is to start from a well known lowpass filter function and to transform it to a highpass, a bandpass or a bandstop function if required. Classical transfer functions are:

- Butterworth

- Chebyshev

- Elliptic or Cauer

The general form of the transfer function is given by the ratio of 2 polynoms:

Filter structures[edit | edit source]

The most straightforward digital filter implementation would be done by implementing its difference equation which is given by:

The following circuit shows a possible implementation of the difference equation for a 4th order filter:

Nevertheless, this form is not used in practice. Indeed, the first who tried to implement a filter in that way have found out that this circuit is very sensitive to the coefficient values: a small change in one coefficient can have dramatic effects on the shape of the filter's transfer function. So rounding the coefficients to integer or fixed-point values resulted into a design nightmare. Because of this, engineers turned over to one of the following approaches:

- simulating analog filter structures which had shown not to suffer of this acute sensitivity

- breaking the total transfer function into a chain of second order sections, with an additional first order section for even-order filters

All-Pole Filters[edit | edit source]

All-pole filters are limited to lowpass devices. The fact that they do not have zeros makes their structure much simpler. Indeed, their transfer function is written as:

Some filter structures based on the simulation of analog filters are used for the implementation of all-pole filters.

Butterworth and Chebyshev Type I functions are of all-pole kind.

Chains of Integrators[edit | edit source]

A Chain of Integrators with Feedback (CIF) allows a straightforward implementation of an all-pole transfer function.

This circuit, with to being the multiplier ouputs, can be described by the following equations:

which can be iteratively solved as:

From this, the time constants can be iteratively retrieved from the transfer function's coefficients.

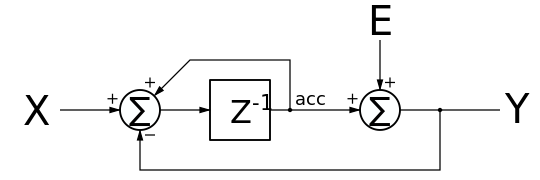

The digital structure corresponding to the analog CIF is given in the following figure:

If the sampling rate is much higher than the time constants, the filter coefficients can be obtained directly from the CIF in the analog domain. Else, they can be found from the sampled system transfer function:

with .

Leapfrog Filters[edit | edit source]

A leapfrog filter simulates the functionality of an analog ladder filter and provides a robust implementation of an all-pole transfer function. It can be shown that a large variation of coefficient values only leads to small variations of the transfer function, especially in the passband.

Taking the example of the following 4th order all-pole lowpass ladder filter,

one can write the following equations:

Rewriting these equations in order to have only voltages on the left side gives:

This new set of equations shows that the filter can be implemented with a chain of 4 integrators with:

- state variables having the form of or

- time constants having the form of or

The resulting circuit is an integrator chain where each integrator output is brought back to the preceeding integrator input. Hence the name leapfrog.

This analog device can be implemented as a digital circuit by replacing the analog integrators with accumulators:

The filter coefficients can be derived from tables providing the , and values for the selected filter functions and from the sampling period . An interesting point is that the relative values of the coefficients set the shape of the transfer function (Butterworth, Chebyshev, …), whereas their amplitudes set the cutoff frequency. Dividing all coefficients by a factor of two shifts the cutoff frequency down by one octave, which corresponds to a factor of two.

Let us note that the replacement of the analog integrators with accumulators corresponds is a very primitive design technique. In terms of signal processing, this corresponds to simplify the Z-transform to , which are the two first terms of the Taylor series of . Nevertheless, this approximation is good enough for filters where the sampling frequency is much higher than the signal bandwidth.

The state space representation of the preceeding filtre can be written as:

From this equation set, one can write the A, B, C, D matrices as:

From this representation, signal processing tools such as SciPy, Octave or Matlab allow to plot the filter's frequency response or to examine its zeroes and poles.

A special case is the Butterworth 3rd order filter which has time constants with relative values of 1, 1/2 and 1. Due to that, this filter can be implemented in hardware without any multiplier, but using shifts instead.

Filters with poles and zeroes[edit | edit source]

Filters with poles and zeroes are best implemented in the form of a chain of biquadratic cells or by the parallel connection of two all-pass filters.

Chain of Second Order Sections[edit | edit source]

A second order section, often referred as biquad, implements a second order transfer function. The transfer function of a filter can be split into a product of transfer functions each associated to a pair of poles and possibly a pair of zeroes. If the transfer function's order is odd, then a first order section has to be added to the chain. This section is associated to the real pole and to the real zero if there is one.

The second order section's transfer function is given by:

The most known biquad structures are shown next.

Direct-form 1 biquad[edit | edit source]

The following figure shows a direct form 1 biquad:

Direct-form 1 transposed biquad[edit | edit source]

The following figure shows a direct form 1 transposed biquad:

Direct-form 2 biquad[edit | edit source]

The following figure shows a direct form 2 biquad:

Direct-form 2 transposed biquad[edit | edit source]

The following figure shows a direct form 2 transposed biquad:

Obviously, the corresponding first order sections are done by removing the multipliers for coefficients and , together with the associated delay element(s).

Parallel connection of allpass filters[edit | edit source]

Allpass filters are used to modify the phase of a signal's frequency components without altering their amplitude. They can be used to compensate for other undesired phase shifts, as for example in the design of close-to-linear phase IIR filters.

Additionally, the parallel connection of two allpass filters allows to implement any kind of transfer function. Indeed, at frequencies where the two branches show the same phase shift, the output will follow the input, but at the frequencies where the relative phase shift is 180°, the output will be zero. An example of this functionality is given in the family of Wave Digital Filters (WDFs).

Filter Representation

Direct Form I[edit | edit source]

Direct Form II[edit | edit source]

Transpose[edit | edit source]

Cascade Form[edit | edit source]

Polyphase Form[edit | edit source]

Parallel Form[edit | edit source]

Tunable Filters

We have high-pass and lowpass filters, and it turns out that we can create both filter effects using a single piece of hardware called a tunable filter. Tunable filters rely on a device called an allpass filter to create other types of filter outputs.

Allpass Filters[edit | edit source]

A common, although unintuitive, tool in the DSP toolbox is the all-pass filter. As the name implies, the all-pass filter passes all frequency components without attenuation.

Why Allpass?[edit | edit source]

The question comes up, if an allpass filter doesn't actually filter anything, why do we bother to study it? The answer is that even though the allpass filter doesn't affect the magnitude response of the system, it will affect the phase response of the system.

Allpass filters have the property that cascading an allpass filter with another IIR filter will produce a linear output for the whole system.

Designing an Allpass[edit | edit source]

Tunable Filter Concept[edit | edit source]

Tunable Filter Design[edit | edit source]

Infinite Input Filtering

Filtering a data input that is infinite in length can be a tricky task for any computer to manage, and DSP engineers have identified two techniques for breaking down an infinite input into manageable chunks for filtering.

"Infinite Input"[edit | edit source]

infinite input response (IIR) filters are structures that use a feedback element to help filter data. An IIR filter has a transfer function of the form:

The constants an are known as the zeros of the transfer function, and the bm terms are known as the poles of the transfer function. FIR filters, by comparison do not have poles.

IIR filters are named as such because the filter does not stop responding once the input has stopped, but instead the feedback element will continue to input values into the filter structure for processing after the input has stopped.

IIR vs FIR Filters[edit | edit source]

| FIR | IIR | |

|---|---|---|

| Stability | Always BIBO Stable | May not be stable |

| Efficiency | Not efficient | Very Efficient |

| Phase | May have linear phase | phase not linear |

An FIR filter will have an order that is a significantly higher than the order of an equivalent IIR filter. This means that FIR filters need more complexity and more components then IIR filters to complete the same task. However, IIR filters have a potential for instability, which requires more analysis.

Overlap and Add[edit | edit source]

Overlap and Save[edit | edit source]

Comparison of Results[edit | edit source]

Ideal Filters

IDEAL DIGITAL SIGNALS[edit | edit source]

Filter Realizations[edit | edit source]

Realization Effects[edit | edit source]

Gibbs Phenomenon[edit | edit source]

Least Squares Design

The mathematical technique known as least squares can be used to design digital filters. This method works by creating the polynomial (transfer function) that is the "best fit" to a set of desired data (magnitude response).

Analog Filter Design

It may seem strange to have a section devoted to analog filter design in a digital signal processing book. However, there is a method to the madness. It turns out that digital filters can be modeled using analog filters, and analog techniques—which have been studied in depth for many years prior to digital systems—can be utilized to quickly and efficiently design digital filters.

The following chapters will show some of the techniques involved in designing analog filters, and transforming analog filters from one type to another. The section will culminate on a chapter on the bilinear transform, which transforms the mathematical representation for an analog filter into the equations for an equivalent digital filter.

Analog Design Process[edit | edit source]

Digital filters can be designed using analog design methods by following these steps:

- Filter specifications are specified in the digital domain. The filter type (highpass, lowpass,bandpass etc.) is specified.

- An equivalent lowpass filter is designed that meets these specifications.

- The analog lowpass filter is transformed using spectral transformations into the correct type of filter.

- The analog filter is transformed into a digital filter using a particular mapping.

There are many different types of spectral transformations and there are many mappings from analog to digital filters. the most famous mapping is known as the bilinear transform, and we will discuss that in a different chapter.

Butterworth[edit | edit source]

Butterworth ensures a flat response in the passband and an adequate rate of rolloff. A good "all rounder," the Butterworth filter is simple to understand and suitable for applications such as audio processing.

Chebyshev I[edit | edit source]

The Chebyshev 1 filter has ripple in the passband of the filter.

Chebyshev II[edit | edit source]

Chebyshev 2 has ripple in the stopband.

Elliptic[edit | edit source]

This filter has equiripple (the same amount of ripple in the passband and stopband).

Further reading[edit | edit source]

Spectral Transforms

When designing a particular filter, it is common practice to first design a Low-pass filter, and then using a spectral transform to convert that lowpass filter equation into the equation of a different type of filter. This is done because many common values for butterworth, cheybyshev and elliptical low-pass filters are already extensively tabulated, and designing a filter in this manner rarely requires more effort then looking values up in a table, and applying the correct transformations. This page will enumerate some of the common transformations for filter design.

It is important to note that spectral transformations are not exclusively used for analog filters. There are digital variants of these transformations that can be applied directly to digital filters to transform them into a different type. This page will only talk about the analog filter transforms, and the digital filter transforms will be discussed elsewhere.

Low-Pass to Low-Pass[edit | edit source]

This spectral transform is used to convert between two lowpass filters with different cutoff frequencies.

Low-Pass to High-Pass[edit | edit source]

Low-Pass to Band-Pass[edit | edit source]

Low-Pass to Band-Stop[edit | edit source]

Analog Mappings

There are a number of different mappings from the analog domain to the digital domain, and vice versa. All mappings must satisfy the following rules:

- Stability must be preserved in both domains. This means that the interior of the unit circle in the digital domain must correspond to the left-half plane in the analog plane.

- The mapping must be one-to-one. every point in one domain must correspond to a unique point in the other domain. This relationship should hold both ways.

The relationship between the analog and digital domains is highly nonlinear, and there is no way to perfectly reproduce an analog filter in a digital system, or to reproduce a digital filter in an analog system. However, it is possible to faithfully reproduce (within a certain tolerance) the magnitude response of the filter. It is rarely possible to reproduce the phase response of a filter across the transformation, however.

Bilinear Transform

The Bilinear Transform[edit | edit source]

The Bilinear transform is a mathematical relationship which can be used to convert the transfer function of a particular filter in the complex Laplace domain into the z-domain, and vice-versa. The resulting filter will have the same characteristics of the original filter, but can be implemented using different techniques. The Laplace Domain is better suited for designing analog filter components, while the Z-Transform is better suited for designing digital filter components.

The bilinear transform is the result of a numerical integration of the analog transfer function into the digital domain. We can define the bilinear transform as:

The bilinear transform can be used to produce a piecewise constant magnitude response that approximates the magnitude response of an equivalent analog filter. The bilinear transform does not faithfully reproduce the analog filters phase response, however.

As example, the phase response for the bilinear equivalent to an analog system is shown next, using a sampling frequency of 40 rad/sec. Compare the phase responses in the plot, realizing the limit is Ws/2=20 rad/s - the clear divergence is evident.

- num = [1 11.21 116.242 372.601 561.589 363.528 ];

- den= [1 26.489 340.47 2461.61 10433.1 23363.9 19049. 4981.82 ];

- C=tf(num,den); % GNU Octave command

- D=c2d(C,2*pi/40,'bi'); % A/D via Octave

Note that two zeros have been added so that zero and pole counts match.

-

Phase discrep approaching Ws/2

W Domain[edit | edit source]

The bilinear transform is a one-to-one mapping, that is that a unique point in one domain will be transformed into a unique point in the other domain. However, the transformation is not a linear transformation, and is not an exact equivalency between Laplace and Z domains. If a digital filter is designed and transformed into an analog filter, the resulting poles and zeros are not located on the s-plane, but instead on the w-plane that has similar properties, but with a nonlinear correspondence.

In the bilinear transform, the positive imaginary axis in the s-domain is transformed into the upper-half unit circle in the z-domain. Likewise, the negative imaginary axis in the s-domain is transformed into the lower-half unit circle in the z-domain. This mapping is highly nonlinear, however, resulting in a phenomena known as "frequency warping".

Prewarping[edit | edit source]

Frequency warping follows a known pattern, and there is a known relationship between the warped frequency and the known frequency. We can use a technique called prewarping to account for the nonlinearity, and produce a more faithful mapping.

The p subscript denotes the prewarped version of the same frequency. Note that in the limit , the continuous solution is .

Phase[edit | edit source]

The bilinear transform does not maintain phase characteristics of the analog filter, and there is no way to correct the phase response to match.

Filter Design Steps[edit | edit source]

When designing a digital filter using an analog approximation and the bilinear transform, we follow these steps:

- Prewarp the cutoff frequencies

- Design the necessary analog filter

- Apply the bilinear transform to the transfer function

- Normalize the resultant transfer function to be monotonic and have a unity passband gain (0dB).

Alternatively, if we have an inverse bilinear transform, we can follow these steps: