R Programming/Nonparametric Methods

Appearance

This page deals with a set of non-parametric methods including the estimation of a cumulative distribution function (CDF), the estimation of probability density function (PDF) with histograms and kernel methods and the estimation of flexible regression models such as local regressions and generalized additive models.

For an introduction to nonparametric methods you can have a look at the following books or handout :

- Nonparametric Econometrics: A Primer by Jeffrey S. Racine[1].

- Li and Racine's handbook, Nonparametric econometrics[2].

- Larry Wasserman All of Nonparamatric Statistics[3]

Empirical distribution function

[edit | edit source]- The easiest way to estimate the empirical CDF uses the rank() and the length() functions.

- ecdf() computes the empirical cumulative distribution function.

- ecdf.ksCI() (sfsmisc) plots the empirical distribution function with confidence intervals.

> N <- 1000

> x <- rnorm(N)

> edf <- rank(x)/length(x)

> plot(x,edf)

> plot(ecdf(x),xlab = "x",ylab = "Distribution of x")

> grid()

> library("sfsmisc")

> ecdf.ksCI(x1)

Density Estimation

[edit | edit source]Histogram

[edit | edit source]- hist() is the standard function for drawing histograms. If you store the histogram as an object the estimated parameters are returned in this object.

> x <- rnorm(1000)

> hist(x, probability = T) # The default uses Sturges method.

> # Sturges, H. A. (1926) The choice of a class interval.

> # Journal of the American Statistical Association 21, 65–66.

> hist(x, breaks = "Sturges", probability = T)

>

> # Freedman, D. and Diaconis, P. (1981) On the histogram as a density estimator: L_2 theory.

> # Zeitschrift für Wahrscheinlichkeitstheorie und verwandte Gebiete 57, 453–476.

> # (n^1/3 * range)/(2 * IQR).

> hist(x, breaks = "FD", probability = T)

>

> # Scott, D. W. (1979). On optimal and data-based histograms. Biometrika, 66, 605–610.

> # ceiling[n^1/3 * range/(3.5 * s)].

> hist(x, breaks = "scott", probability = T)

>

> # Wand, M. P. (1995). Data-based choice of histogram binwidth.

> # The American Statistician, 51, 59–64.

> library("KernSmooth")

> h <- dpih(x)

> bins <- seq(min(x)-h, max(x)+h, by=h)

> hist(x, breaks=bins, probability = T)

It is also possible to choose the break points.

> x <- rnorm(1000)

> hist(x, breaks = seq(-4,4,.1))

- n.bins() (car package) includes several methods to compute the number of bins for an histogram.

- histogram() (lattice)

- truehist() (MASS)

- hist.scott() (MASS) plot a histogram with automatic bin width selection, using the Scott or Freedman–Diaconis formulae.

- histogram package.

Kernel Density Estimation

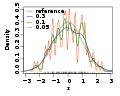

[edit | edit source]- density() estimates the kernel density of a vector.

- Choose the bandwidth selection method with bw.

- Check the sensitivity of the bandwidth choice using adjust. The default is one. It is good practice to look at adjust=.5 and adjust=2.

> x <- rnorm(10^3)

> plot(density(x,bw = "nrd0", adjust = 1, kernel = "gaussian"), col = 1)

> lines(density(x,bw = "nrd0", adjust = .5, kernel = "gaussian"), col = 2)

> lines(density(x,bw = "nrd0", adjust = 2, kernel = "gaussian"), col = 3)

> legend("topright", legend = c("adjust = 1", "adjust = .5", "adjust = 2"), col = 1:3, lty = 1)

- Choose the kernel function with kernel : "gaussian", "epanechnikov", "rectangular", "triangular", "biweight", "cosine", "optcosine".

> x <- rnorm(10^3)

> plot(density(x,bw = "nrd0", adjust = 1, kernel = "gaussian"), col = 1)

> lines(density(x,bw = "nrd0", adjust = 1, kernel = "epanechnikov"), col = 2)

> lines(density(x,bw = "nrd0", adjust = 1, kernel = "rectangular"), col = 3)

> lines(density(x,bw = "nrd0", adjust = 1, kernel = "triangular"), col = 3)

> legend("topright", legend = c("gaussian", "epanechnikov", "rectangular", "triangular"), col = 1:4, lty = 1)

- tkdensity() (sfsmisc) is a nice function which allow to dynamically choose the kernel and the bandwidth with a handy graphical user interface. This is a good way to check the sensitivity of the bandwidth and/or kernel choice on the density estimation.

> x <- rnorm(10^3)

> library("sfsmisc")

> tkdensity(x)

- kde2d() (MASS) estimates a bivariate kernel density.

> N <- 1000

> x <- rnorm(N)

> y <- 1 + x^2 + rnorm(N)

> dd <- kde2d(y,x) # estimate the bivariate kernel

> contour(dd) # plot the bivariate density

> image(dd) # another plot the bivariate density

Examples

[edit | edit source]Local Regression

[edit | edit source]- loess() is the standard function for local linear regression.

- lowess() is similar to loess() but does not have a standard syntax for regression

y ~ x.This is the ancestor of loess (with different defaults!). - ksmooth() (stats) computes the Nadaraya–Watson kernel regression estimate.

- locpoly() (KernSmooth package)

- npreg() (np package)

- locpol computes local polynomial estimators

- locfit local regression, likelihood and density estimation

Examples

[edit | edit source]Generalized additive semiparametric models (GAM)

[edit | edit source]- gam() (gam)

- gam() (mgcv)

> N <- 10^3

> u <- rnorm(N)

> x1 <- rnorm(N)

> x2 <- rnorm(N) + x1

> y <- 1 + x1^2 + x2^3 + u

>

> library(gam)

> g1 <- gam(y ~ x1 + x2 ) # Standard linear model

> par(mfrow=c(1,2))

> plot(g1, se = T)

>

> g1 <- gam(y ~ s(x1) + x2 ) # x1 is locally estimated

> par(mfrow=c(1,2))

> plot(g1, se = T)

>

> g1 <- gam(y ~ s(x1) + s(x2) ) # x1 and x2 are locally estimated

> par(mfrow=c(1,2))

> plot(g1, se = T)

>

> library(mgcv)

> g1 <- gam(y ~ s(x1) + s(x2) ) # x1 and x2 are locally estimated

> par(mfrow=c(1,2))

> plot(g1, se = T)

References

[edit | edit source]- ↑ Jeffrey S. Racine Nonparametric Econometrics: A Primer http://socserv.mcmaster.ca/racine/ECO0301.pdf and at the R code examples http://socserv.mcmaster.ca/racine/primer_code.zip

- ↑ Qi Li, Jeffrey S. Racine, Nonparametric econometrics, Princeton University Press - 2007

- ↑ Wasserman, Larry, "All of nonparametric statistics", Springer (2007) (ISBN: 0387251456)