Blender 3D: Noob to Pro/Advanced Tutorials/Advanced Animation/Guided tour/Mesh/Shape/Sync

Applicable Blender version: 2.4x.

Lip-Sync with Shape Keys

Here I will attempt to explain my recent experience with using Blender Shape Keys to produce convincing lip-sync (lip synchronisation, i.e. "speech") for simple humanoid characters.

This is aimed at people with an understanding of Blender fundamentals like vertex loops, face loops, sequencer and of course, Blender's new Shape Key system. If these terms mean nothing to you, then you may well struggle to keep up. If you're familiar with them then I hope this tutorial will prove to be a breeze and your characters will be speaking so fluently you'll have trouble shutting them up!

Other lip-sync tutorials, if you can find them, recommend dedicated software such as Magpie or Papagayo. While I've no doubt these tools provide a valuable service and may make syncing easier, this tutorial uses only Blender. I haven't tried dedicated lip-sync software yet, so I can't really say whether it's easier.

You will find links to interesting audio files you can use for testing animation and lip-sync near the bottom of the page.

The hard work first

Setting up for Lip-Sync

First, set up your Blender screen so you have everything you need for this project. You'll need a front 3D Window, an Action Window, the Buttons Window (showing the Editing panels, F9) and a Timeline Window. If you've got the room, a side-view 3D Window would be handy too.

The basic sound units are called phonemes, and the mouth shapes we use to represent these phonemes for lip-sync are called visemes (or sometimes phoneme shapes). There are many references for these around the web. Legendary animator Preston Blair created one of the most popular viseme sets, which is great for cartoon-style characters. Other viseme sets are aimed at more realistic, humanoid characters. The shapes you choose depend on the style of your model. (I'll try to provide some useful links later.)

The first and most difficult step in good lip-sync is making the shape keys for these visemes. How well these are made ultimately determines the success of the animation and it is worth spending time getting them right, although they can be modified and tweaked later. So choose your favourite set of visemes (or even look in a mirror and use your own face as a reference) and start setting your keys. A model with good topology - especially well formed edge loops around the mouth area - will be a big help here!

What on Earth are topology and edge loops?!?!

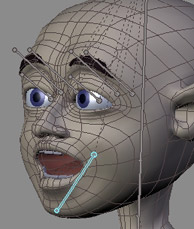

Topology refers to the way your 3D mesh defines the object, in this case your character's head. Edge loops are runs of consecutive edges that flow around the character's major features. Typically, good edge loops flow around the eyes and around the mouth in a roughly circular, somewhat deformed manner. Selecting a single loop of edges and vertices surrounding a facial feature is much quicker than individually selecting lots of edges that don't flow naturally around that feature. Similarly, deforming a single loop, or a collection of related, nested loops, is much easier and quicker than trying to deform a seemingly random set of edges. You can easily see these loops in the character screenshots above. The series of edges surrounding the mouth can simply be stretched from wide ellipses to near-circles to create a useful variety of mouth shapes. The faces defined by pairs of adjacent edge loops are referred to as face loops. The close-up image below shows selected face loops.

There are many tutorials on the web about these topics so if you need more information before proceeding then a quick search is probably a good idea.

Setting the basic viseme shape keys

First, select your undeformed mesh and create your basis key: go to the Editing buttons (F9), find the "Shapes" panel and press the "Add Shape Key" button. Enter and exit Edit mode to set the basis key. Then press "Add Shape Key" again to create your first key, Key 1, and name it "M". Enter Edit mode and, if your character's mouth isn't already closed (some people model it that way), close it by carefully selecting the faces and loops around the mouth and moving them into place. You will usually scale along Z (Size-Z) plus a bit of grabbing and shifting to achieve this. Don't forget to include the faces on the inside of the lips, or the deformation will be unpleasant. When you're happy with the shape, exit Edit mode and there you have it: your character can now say "Mmmmmm" whenever you like. Test it by moving the Shape Key slider back and forth, but make sure to leave it at zero before making more keys. (If you modelled your character with a closed mouth, you can just add the new "M" key, then enter and exit Edit mode to set it.)

For most new keys, you will select the basis key first, press "Add Shape Key", then make the required shape from scratch in Edit mode. However, some mouth shapes are very similar, like "OH" and "OOO" or "EE" and "S", and it is easier to start with something close to what you want rather than shifting everything from scratch every time. Luckily, Blender allows you to do just this. Once you've made your "EE" key, for example, you can select it and immediately press "Add Shape Key"; when you enter Edit mode, the mouth will already be deformed and you only need to make subtle changes to it to make your "S" shape.

Remember that the keys you make are for sounds, not letters.

In general, you'll need shape keys for the following sounds:

- M, B, P

- EEE (long "E" sound as in "Sheep")

- Err (As in "Earth", "Fur", "Long-er"; also covers phonemes like the "H" in "Hello")

- Eye, Ay (As in "Fly" and "Stay")

- i (Short "I" as in "it", "Fit")

- Oh (As in "Slow")

- OOO, W (As in "Moo" and "Went")

- Y, Ch, J (As in "You", "Chew", "Jalopy")

- F, V
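Because the keys are for sounds rather than letters, it can help to keep a written table mapping each sound you hear to the shape key that covers it. The plain-Python sketch below is only a planning aid outside Blender; the sound labels and key names are assumptions based on the list above, not names Blender defines.

```python
# Hypothetical lookup table: sound labels (as you might write them on your
# timeline markers) mapped to the shape key names from the list above.
VISEME_FOR_SOUND = {
    "M": "M", "B": "M", "P": "M",
    "EE": "EEE",
    "ERR": "Err", "H": "Err",
    "EYE": "Eye", "AY": "Eye",
    "I": "i",
    "OH": "Oh",
    "OO": "OOO", "W": "OOO",
    "Y": "Y", "CH": "Y", "J": "Y",
    "F": "F", "V": "F",
}


def shapes_for(sounds):
    """Return the shape key for each sound label, or None when the sound has
    no dedicated key and must be built from a combination of shapes."""
    return [VISEME_FOR_SOUND.get(sound.upper()) for sound in sounds]


# "Moo" is the sounds M + OO, not the letters M-O-O.
print(shapes_for(["M", "OO"]))
# "S" has no key of its own in this set, so it comes back as None.
print(shapes_for(["S"]))
```

A None result is a reminder that the sound has to be made by combining shapes, jaw and tongue, as described below.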

These can often be used in combination with each other and with jaw bone rotations and tongue actions to also produce other sounds like "S", "R", "T", "Th" and a short "O" as in "Hot" - or these can also be specifically made with their own shapes. This decision depends largely on your character. Start with the essentials and make others if you need them.

NOTE: I use one jawbone in my current character, and it also helps to form the mouth shapes. It doesn't drive the shape keys, but it moves the bottom teeth and tongue (which can also be controlled separately) and the faces that make up the chin part of the character mesh. For some visemes, I move the jawbone into a logical position before adding the shape key. For example, I open the jaw for the "OH" key and close it for the "M" key. Later, when animating, the jawbone is animated along with the shapes for a very convincing result.

Load the audio

Once all your viseme shapes are set, it's time to get your character to speak. (Normally you would animate the body first and leave the lip-sync until near the end.)

If you haven't already done so, load your audio file into a Blender Video Sequence Editor Window and position it where you want it. Currently, Blender only supports 16-bit WAV audio files, so you may need editing or conversion software to process the file if it isn't in this format. Audacity is a good, open-source (free) editor that will suit this purpose and much more.
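To check whether a file already meets the 16-bit requirement before loading it, Python's standard `wave` module can read the WAV header. This is a small sketch; the function name is illustrative, and the 16-bit test simply reflects the requirement stated above. Note that `wave` only reads uncompressed PCM files; anything else raises `wave.Error`, which is itself a sign the file needs converting.

```python
import wave


def check_wav(source):
    """Read a WAV header (path or binary file object) and report whether it
    uses 16-bit samples, the format this tutorial says Blender expects."""
    with wave.open(source, "rb") as wav:
        bits = wav.getsampwidth() * 8  # sample width is given in bytes
        rate = wav.getframerate()
        return {
            "bits": bits,
            "channels": wav.getnchannels(),
            "rate": rate,
            "seconds": wav.getnframes() / rate,
            "ok_for_blender": bits == 16,
        }


# Example (hypothetical file name):
# print(check_wav("speech.wav"))
```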

Blender Video Sequence Editor Window showing loaded Audio Strip

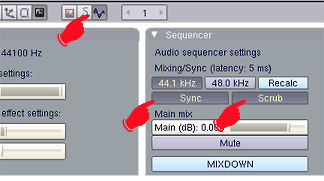

Go to the Scene buttons (F10) and press the "Sound block buttons" button (one of the three buttons near the frame counter; its icon looks like an audio wave). Press the "Sync" and "Scrub" buttons. "Sync" makes playback in the 3D window closely match the audio when you press Alt-A; it does this by dropping image frames if necessary, so it provides only a rough guide to how things match up. "Scrub" is important for lip-sync because whenever you change frames, Blender plays the audio at that point. (Currently, in some Blender builds you have to press Alt-A at least once before scrubbing starts working.)

Select your character; its shape keys should be listed in the Action Window in the order in which you made them (I don't think they can be reordered). At the top of the list is a small triangle button labelled "Sliders"; press it to show the slider for each shape. If you drag the mouse back and forth in this window, you should hear the audio play as you cross frames. This is how you will identify the main viseme/phoneme key frames.

Getting down and dirty

Before you begin your lip-sync, you obviously need to know what your character says. It might be worthwhile writing it down and even speaking it over and over, in time with the audio, to get a feel for the sounds you'll be dealing with. Some sounds are what I'll call "key sounds"; others are almost dead, nondescript "in-between" sounds that fill in the gaps between the key sounds. Obvious key sounds are those where the lips close and those where they are tightly pursed or wide and round; other key sounds will differ with every piece of audio. Don't make assumptions about the shapes you'll use based on the words you know are there. What you are animating are the actual sounds, not letters or words. (Keith Lango has much to say about this on his website and I recommend reading it.)

The Timeline Window

Go to the Timeline Window. This window seems to have been largely overlooked in previous documentation, yet it provides the basics for timed animation plus a few other goodies to make your animation life a little easier. Here you can turn auto-keying on and off at will (otherwise you'd have to go to the hidden User Preferences window, which is a pain and easy to forget), navigate frames, play and pause the animation in the 3D window, turn audio Sync on and off, set the start and end frames for the animation and set frame markers. This last feature is the key to our project.

In recent Blender releases, the audio "Scrub" feature works in most windows. As you scrub through the audio, listen for where the key sounds occur. When you hit a key sound, scrub back and forth to find where that sound starts. In the Timeline Window, press M to set a frame marker at this frame. While the marker is selected (yellow), press Ctrl-M in the Timeline Window to give it a label. Use a sensible name that indicates what the marker is for (such as the phoneme sound and/or the word it starts or ends). Markers can be selected, moved and deleted just like other Blender items.
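Scrubbing by ear is the method used here. If you also have times for the key sounds in seconds (for example, read off the waveform in Audacity or taken from a pre-marked phoneme sheet), you can convert them to frame numbers before placing markers. A plain-Python sketch, assuming 25 fps and an audio strip that starts at frame 1; both are assumptions to change to match your Scene settings and strip position.

```python
import math


def marker_frame(seconds, fps=25, start_frame=1):
    """Frame on which a sound starting `seconds` into the audio falls,
    assuming the audio strip starts at `start_frame`."""
    # The tiny epsilon guards against float results like 2.9999999999 frames.
    return start_frame + math.floor(seconds * fps + 1e-9)


# Hypothetical key sounds noted in an audio editor: (label, seconds).
key_sounds = [("M of 'moo'", 0.40), ("OO of 'moo'", 0.64)]
for label, t in key_sounds:
    print(marker_frame(t), label)
```

Each printed frame is where you would press M in the Timeline Window and then label the marker with Ctrl-M.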

NOTE: Depending on the speed of your system, you may find you get more pleasing audio scrubbing and better 3D window playback if you turn off subsurf for your model and hide any unimportant parts of the scene on different layers. The fewer things Blender has to calculate as you scrub or play-back, the faster it can draw the frames to maintain sync with the audio.

Turn off these buttons to disable subsurf setting in 3D window

Scrub through the whole audio - or sections of it in a long piece - marking and labelling all the key sounds.

Setting the keys

Now you have everything you need for your first lip-sync pass. Start at the first frame and click once on all your Shape Key sliders in the Action Window to set them all to zero. Move through the frames from start to finish setting shape keys where you marked the key sounds in the Timeline Window. If your character has a jaw bone and tongue bone(s), you will need to set these where required as you go.

Trouble in paradise? A quick lesson in handling Shape Keys

If you haven't set shape keys before, you might notice one interesting dilemma: they have great memories! Once you set a slider to any value, it stays at that value until you key another value somewhere. The shapes change in a linear fashion between keys. At first, this appears to be a problem if you want to key "MOO": after you set the "M" slider to 1 (one), it will stay there, making it impossible for your character to say "OO" while his lips are trying to stay shut. So you have to set the "M" slider on the "M" sound, then, as the audio moves into the "OO" sound, set the "M" slider back to zero and set the "OO" slider to its full value.

This introduces another problem. After you set the "OO" key, your "M" sound is messed up because the mouth is now also being affected by the "OO" shape that follows it. So, you must make sure the "OO" sound is set to zero when you want the lips closed in the "M" position.
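Both "memory" problems can be sketched in plain Python (this is not Blender's API). The sketch assumes relative shape keys, where each slider moves the mesh from the basis toward its key and the offsets of all active keys are added together, and linear interpolation between keys as described above; if your curves use Bezier interpolation instead, the in-between values differ but the holding behaviour is the same. The vertex coordinates and frame numbers are made up for illustration.

```python
def slider_value(keys, frame):
    """Value of one shape key slider at `frame`, from (frame, value) keys.

    Assumes linear interpolation between keys and that the value holds
    flat before the first key and after the last one.
    """
    keys = sorted(keys)
    if frame <= keys[0][0]:
        return keys[0][1]
    for (f0, v0), (f1, v1) in zip(keys, keys[1:]):
        if frame <= f1:
            return v0 + (frame - f0) / (f1 - f0) * (v1 - v0)
    return keys[-1][1]  # after the last key the slider "remembers" its value


def blend(basis, targets, weights):
    """Relative shape keys: each key adds (target - basis) scaled by its
    slider value, and the offsets of all keys are summed."""
    return tuple(
        b + sum(w * (targets[name][axis] - b) for name, w in weights.items())
        for axis, b in enumerate(basis)
    )


# One hypothetical lower-lip vertex (x, y, z) and where each key moves it.
basis = (0.0, 0.0, -1.0)
targets = {"M": (0.0, 0.0, 0.0), "OOO": (0.0, 0.5, -1.5)}
oo_keys = [(10, 0.0), (16, 1.0)]

# First attempt at "MOO": M keyed to 1 on frame 10 and never keyed back down.
m_keys = [(1, 0.0), (10, 1.0)]
stuck = blend(basis, targets, {"M": slider_value(m_keys, 16),
                               "OOO": slider_value(oo_keys, 16)})
# stuck == (0.0, 0.5, -0.5): M and OO fight, so the lip is neither shape.

# Fixed: M returns to zero on frame 13, and OO is zero on the M frame.
m_fixed = [(1, 0.0), (10, 1.0), (13, 0.0)]
closed = blend(basis, targets, {"M": slider_value(m_fixed, 10),
                                "OOO": slider_value(oo_keys, 10)})
open_oo = blend(basis, targets, {"M": slider_value(m_fixed, 16),
                                 "OOO": slider_value(oo_keys, 16)})
print(stuck, closed, open_oo)
```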

In general, you'll find yourself setting each shape three times:

- once to determine where you want the mouth to begin moving to this shape (slider set to zero)

- once to set the slider at the desired level for this phoneme

- and once more to end this shape and move into the next one (slider set to zero)

The same principle applies to the jaw bone and tongue: three keys for each move.
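The three-key pattern can be written down as a small planning helper. This is a hypothetical Python function, not part of Blender; it only produces the (frame, value) pairs you would then set by hand with the sliders, or with the jaw and tongue bones. The frame numbers in the example are made up.

```python
def three_keys(start, peak, end, value=1.0):
    """Return the three (frame, value) keys for one shape, jaw or tongue move:
    zero where the mouth starts moving toward it, `value` on the sound itself,
    and zero again where the mouth moves on to the next shape."""
    if not start < peak < end:
        raise ValueError("expected start < peak < end")
    return [(start, 0.0), (peak, value), (end, 0.0)]


# Hypothetical frames for "MOO": lips shut on frame 10, OO peaks on frame 16.
# OO's first (zero) key sits on the M frame, so the lips can fully close there.
plan = {
    "M": three_keys(6, 10, 13),
    "OOO": three_keys(10, 16, 22, value=0.9),
}
for shape, keys in plan.items():
    print(shape, keys)
```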

As you get more comfortable with this procedure, you'll find you can leave some shapes set longer or adjust them to different levels as they can provide some interesting mouth shapes when combined with other shapes.

Setting the in-between sounds

Once the key sounds are properly set, you should be able to scrub slowly through the Action Window and watch your character speak in time with the audio. It won't be perfect but it's a start. To fix his speech impediment, you now have to fill in the sounds between the key sounds. Mostly these will be dull vowel sounds ("err, uh, ah") and silence. These shapes are set in exactly the same way but here you'll have to really watch the 3D view as you set the sliders. Try combinations of sliders like "EE" and "OH" to get the perfect shape for each individual sound. You have to be guided by your character. Does he look like he's saying the sound you're hearing? (Remember that exactly what's being said is not important - it's only the sound that matters). Test each sound as you set it by scrubbing a few frames over and over and watching your character mouth the sounds.

All that's left, hopefully, is some polishing and tweaking. If it's not perfect then don't despair. Like other areas of animation, it can take a while to get a feeling for lip-sync and as the tools and workflow become more familiar you can pay more attention to the important work.

Putting it all together

When you're reasonably happy, it's time to combine the audio and video and watch the result. Unless you use Blender's FFmpeg output (described at the end of this page), Blender won't mux them (combine audio and video into one file), so you'll need to combine them with the editing software of your choice (OS X users can use recent versions of iMovie, VirtualDub is often recommended for Windows users and Avidemux2 for Linux users).

What Blender can do is provide a fully synced version of the audio file, the same length as the animation, even adding silence at the start and end if need be to maintain the synchronisation. To make this synced audio file, go back to the "Sound block buttons" panel and press "MIXDOWN". This saves a .wav file using the filename and location you entered in your output box (you did, didn't you?) plus a frame count (something like 'speech.avi0001_0400.wav'). Then render your animation by pressing the "ANIM" button.
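If you have the command-line `ffmpeg` tool installed, it can also do the muxing. The Python sketch below only builds the argument list (the file names are placeholders based on the example above); you would run it with `subprocess.run(cmd, check=True)`. It copies the video stream unchanged, encodes the audio as AAC and writes a Matroska (.mkv) file, which accepts most video codecs. The optional `-itsoffset` delays the audio input, which is one way to try the small offset discussed next.

```python
import shlex


def mux_command(video, audio, output, offset_seconds=0.0):
    """Build an ffmpeg argument list that copies the video stream, encodes
    the audio as AAC and optionally delays the audio by offset_seconds."""
    cmd = ["ffmpeg", "-i", video]
    if offset_seconds:
        # -itsoffset applies to the input that follows it (the audio here).
        cmd += ["-itsoffset", str(offset_seconds)]
    cmd += ["-i", audio,
            "-map", "0:v", "-map", "1:a",  # video from input 0, audio from 1
            "-c:v", "copy", "-c:a", "aac",
            "-shortest", output]
    return cmd


cmd = mux_command("speech.avi", "speech.avi0001_0400.wav", "speech_muxed.mkv")
print(shlex.join(cmd))
```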

Combine the audio and video in your video editor and export a muxed file. When you play it back, you may find that the mouth seems to be slightly out of sync. There are two likely reasons. First, you're syncing per frame, but a sound rarely starts exactly on a frame. Second, there's the way the brain processes faces and expressions and combines them with the sounds it hears to comprehend speech. This phenomenon is not well understood and is a common challenge in animation; some physiologists think our brains read lips and facial expressions as a way of preparing to understand the context of the sounds we hear and the meaning behind the language. To fix it, go back into Blender, select the audio strip in the Sequencer Window and move it one or two frames later, then press "MIXDOWN" again to create a new .wav file with a split second of silence at the start. Remix this with your video and watch the results.
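Moving the strip and pressing MIXDOWN again is the clean way to do this. If you just want to audition an offset quickly, you could instead pad the existing mixdown file with silence. Here is a hypothetical helper using Python's standard `wave` module; it assumes 16-bit PCM (the format Blender requires), where zero-valued samples are silence, and 25 fps by default.

```python
import wave


def delay_wav(src, dst, frames=2, fps=25):
    """Copy a 16-bit PCM WAV from src to dst with `frames` video frames of
    silence added at the start; returns the number of padded samples."""
    with wave.open(src, "rb") as inp:
        params = inp.getparams()
        audio = inp.readframes(inp.getnframes())
    pad = round(params.framerate * frames / fps)
    # For signed 16-bit PCM, zero-valued samples are silence.
    silence = b"\x00" * (pad * params.sampwidth * params.nchannels)
    with wave.open(dst, "wb") as out:
        out.setparams(params)  # the frame count in the header is corrected on close
        out.writeframes(silence + audio)
    return pad


# Example (hypothetical file names): 2 frames at 25 fps = 80 ms of silence.
# delay_wav("speech.avi0001_0400.wav", "speech_delayed.wav")
```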

From here on it's all a matter of testing and tweaking until you're happy!

Using Blender to render Audio AND Video TOGETHER.

Ever since Blender 2.3, it has been possible to render video with the audio attached. Once your animation is done and you're ready to render, click Scene (F10) in the Buttons Window. In the Format tab, choose FFMpeg; there will now be three tabs. In the Audio tab, click Multiplex and choose your audio codec. In the Video tab, choose your video format (AVI is one of the options) and codec. Then, in the Anim tab in another section of the window, click Do Sequence and then click ANIM. When you watch the video, you'll notice that it has sound.

Note: The Mac version of Blender doesn't include FFmpeg by default.

Audio Files

You can find some interesting audio files selected for animators at animationmeat [1]. These files come complete with a pre-marked phoneme sheet.

Note

One final note: watch how the pros do this. When actors record voice-overs for major releases, their actions are recorded and even integrated into the final animation. If you have a digital camera, you could also try recording your own mouth performing your dialogue and approximating its positions in your animation. This can give you a great visual reference, possibly frame for frame if your frame rates match, and save you time.
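If your camera's frame rate differs from your animation's, the footage can still serve as a reference by mapping each camera frame to the animation frame that covers the same moment. A plain-Python sketch, assuming 30 fps footage, a 25 fps animation starting at frame 1, and that the recording and the animation start together; all of these are assumptions to adjust.

```python
def camera_to_anim_frame(camera_frame, camera_fps=30.0, anim_fps=25.0,
                         anim_start=1):
    """Animation frame showing the same moment as reference-video frame
    `camera_frame` (counted from 0), assuming both start at the same instant."""
    seconds = camera_frame / camera_fps
    # The tiny epsilon guards against float results like 4.9999999999.
    return anim_start + int(seconds * anim_fps + 1e-9)


# Every 6th frame of 30 fps footage lines up exactly with a 25 fps frame.
for cam in range(0, 31, 6):
    print(cam, "->", camera_to_anim_frame(cam))
```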