Basic Physics of Digital Radiography/The Basics

Basic physical features of Digital Radiography are presented in this chapter. The chapter starts with a consideration of the atomic environment, specifically at the level of the electron shells, and then describes how X-rays are produced and detected. Their attenuation by different materials is treated mathematically and the design of modern radiographic instrumentation is overviewed. The Fourier Transform is also introduced from a conceptual perspective.

Atomic Structure

The atom can be considered to be one of the fundamental building blocks of all matter. It is a very complex entity which consists, according to a simplified Bohr model, of a central nucleus orbited by electrons, somewhat similar to planets orbiting the sun - see Figure 1.1. The nucleus consists of two types of particle - neutrons and protons. Protons have a positive electric charge while neutrons have no charge. The electrons have a negative electric charge.

Electrons are rather tiny, having a mass of about 0.05% of that of a proton or neutron. The proton mass itself is minuscule at about 10⁻²⁴ g, and is similar to that of the neutron. Irrespective of the actual value, it can nevertheless be inferred that most of the mass of the atom resides in the nucleus.

The number of protons in a nucleus is called the Atomic Number (Z), which also indicates the element's place in the Periodic Table of Elements.

The nucleus is the source of radioactive emissions, which are exploited in Nuclear Medicine and Molecular Imaging. It is also the source of the nuclear magnetic resonance (NMR) phenomena which are exploited in Magnetic Resonance Imaging (MRI). In contrast, Diagnostic Radiography is fundamentally based on changes which occur in the orbiting electrons.

Let's look at the situation in more detail by considering a few common examples of atoms. The simplest one is hydrogen - the first entry in the Periodic Table. In its most common form, the nucleus consists of one proton which is orbited by a single electron - see Figure 1.2. Helium, the second entry in the Periodic Table, has a nucleus which consists of two protons and two neutrons and is orbited by two electrons.

The most abundant form of carbon, the sixth entry, has a nucleus consisting of six protons and six neutrons and is orbited by six electrons. And finally oxygen, the eighth entry in the Periodic Table, has eight protons and eight neutrons in its nucleus, and is orbited by eight electrons. Note that the number of protons and the number of electrons are equal so that in isolation the atom is electrically neutral.

This situation is more or less repeated throughout the Periodic Table so that an element such as calcium, the 20th entry, is orbited by 20 electrons, molybdenum, the 42nd entry, is orbited by 42 electrons and tungsten, the 74th entry, is orbited by 74 electrons.

There are a couple of additional points to note about the above figure. Firstly, note that the electrons occupy defined orbits around the nucleus in this simplified view. These orbits are sometimes called shells and are labelled K, for the innermost one, L, M, N etc.

Secondly, note that these shells can be occupied only by certain numbers of electrons, e.g. the maximum number able to occupy the K-shell is 2, for the L-shell it is 8, for the M-shell it is 18, for the N-shell it is 32 and for the O-shell it is 50. Finally, note that when an electron is removed from a shell, a positive ion results.
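
The occupancy limits quoted above follow the 2n² rule, where n is the shell number (K = 1, L = 2 and so on). The rule is not stated in the text, so it is treated here as an assumption; the short sketch below simply checks that it reproduces the quoted numbers.

```python
# Maximum electron occupancy of each shell, assuming the 2n^2 rule,
# where n is the shell number (K = 1, L = 2, ...).
SHELLS = ["K", "L", "M", "N", "O"]


def shell_capacity(n: int) -> int:
    """Return the maximum number of electrons in shell number n."""
    if n < 1:
        raise ValueError("shell number must be 1 or greater")
    return 2 * n ** 2


capacities = {name: shell_capacity(i) for i, name in enumerate(SHELLS, start=1)}
print(capacities)
```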

Electron Binding

The electrons are bound to the atom by what is called their Binding Energy. This is strongest for the K-shell and gets weaker the further an electron is from the nucleus. K-shell binding energies increase with the atomic number of the nucleus, as shown in the following table:

| Element | Atomic Number (Z) | K-shell Binding Energy (keV) |
|---|---|---|
| Oxygen | 8 | 0.5 |
| Calcium | 20 | 4.0 |
| Iodine | 53 | 33 |
| Barium | 56 | 37 |
| Tungsten | 74 | 69.5 |
| Lead | 82 | 88 |

The situation can be illustrated using an energy level diagram, an example of which for tungsten is shown in Figure 1.3.

The figure shows the K-shell at the bottom with an occupancy of 2 electrons and with the greatest binding energy. Above it is the L-shell with an occupancy of 8 electrons and a lower binding energy - and so on for the other shells.

Note firstly that the binding energy is expressed in keV, i.e. kiloelectronvolts. This is a measurement unit that is used to express the tiny amounts of energy involved at the atomic level. Here, the joule (the SI unit of energy) is far too big. It is possible to derive that:

$$1\ \text{eV} = 1.6 \times 10^{-19}\ \text{J}$$

In other words, an electronvolt is less than one millionth, millionth, millionth of a joule - it is quite small!

Note from the diagram above that the binding energy of the K-shell electrons in tungsten atoms is 69.5 keV. What this means is that if we want to remove one of those electrons from the atom, we would need to give it an energy in excess of 69.5 keV. To remove an L-shell electron, a lower energy of just 10.2 keV would be required.

Remember from before that an ion results following the removal of an electron from an atom. We can say from this perspective that the Ionization Potential of the K-shell is 69.5 keV, while that of the L-shell is 10.2 keV - and so on. What is of interest to us here is what happens following such an ionization process.

When an electron is removed from the K-shell, for instance, an electron from an outer shell can drop down to fill the vacant spot that results. This process is accompanied by the emission of electromagnetic radiation from the atom with an energy equivalent to the difference in the binding energies of the two shells involved. For instance in tungsten, if a K-shell vacancy is filled by an L-shell electron, the energy of the emitted radiation is:

$$E = 69.5 - 10.2 = 59.3\ \text{keV}$$

and it is 69.0 keV when the vacancy is filled by an N-shell electron.

Electromagnetic Radiation

We've just considered how electromagnetic radiation can be produced when electrons inside materials transit between energy levels. The radiation consists of transverse waves which can be described by their wavelength (λ), frequency (f) and radiant energy (E). These variables are inter-related through the following two equations:

$$E = h f$$

and

$$c = f \lambda$$

where h is Planck's Constant (6.63 × 10⁻³⁴ m² kg/s) and c is the wave velocity (e.g. 3 × 10⁸ m/s). Thus high frequencies equate with relatively energetic, shorter wavelength waves, and, conversely, low energy radiation equates with lower frequencies and longer wavelengths.
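
As a rough numerical check of these two relations, the sketch below converts a few wavelengths into frequency and photon energy. The constants are rounded as in the text, the eV-to-joule factor is the usual rounded value of 1.6 × 10⁻¹⁹ J, and the function names and example wavelengths are chosen here purely for illustration.

```python
# Sketch: convert photon wavelength to frequency and energy using
# E = h f and c = f * lambda. Constants are rounded, so results are approximate.
H = 6.63e-34   # Planck's constant, J s
C = 3.0e8      # wave velocity in vacuum, m/s
EV = 1.6e-19   # joules per electronvolt (rounded)


def frequency_hz(wavelength_m: float) -> float:
    """Frequency from c = f * lambda."""
    return C / wavelength_m


def energy_kev(wavelength_m: float) -> float:
    """Photon energy from E = h f, converted from joules to keV."""
    return H * frequency_hz(wavelength_m) / EV / 1e3


for label, wavelength in [("X-ray, 0.1 nm", 0.1e-9),
                          ("X-ray, 0.01 nm", 0.01e-9),
                          ("violet light, 400 nm", 400e-9)]:
    print(f"{label}: f = {frequency_hz(wavelength):.2e} Hz, "
          f"E = {energy_kev(wavelength):.3g} keV")
```

Shortening the wavelength by a factor of ten raises the photon energy by the same factor, which is the inverse relationship described in the paragraph above.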

The energies of these emitted radiations depend on the particular electronic arrangements involved in their production and together form a broad spectrum, which is called the Electromagnetic Spectrum - see Figure 1.4.

The spectrum is the broadest ranging physical phenomenon known to science. It can be seen in the figure to extend from waves with a wavelength of much greater than 1 meter to wavelengths less than a millionth, millionth, millionth of a meter. At these tiny wavelengths, the radiation can be considered to be small wave packets, which are also called photons. The spectrum can also be seen to encompass frequencies from less than a kHz to over 10²⁴ Hz. Correspondingly, the energies range from less than 1 μeV to over 10 MeV.

Electromagnetic radiation is further classified depending on how the radiation interacts with matter. At the lower end of the spectrum the radiation manifests itself as radio waves, which have found wide application in the communications field. Above this region is a broad band of radiations which are known to cause heating and are called Infrared (IR) waves. Slightly higher than this is a very narrow band, which the retinas of our eyes respond to, called the Visible light region. Above this are the more energetic Ultraviolet (UV) rays, some of which are known to cause skin damage. The most energetic of these waves are called X-Rays, which are known to penetrate optically-opaque materials. Within this very broad band are waves called Gamma Rays, which are exactly the same as X-rays but originate from inside the nuclei of atoms rather than from the electron shells. The X-ray region can also be divided into diagnostic and therapeutic radiations with the lower energy (20 - 150 keV) X-rays being used for Diagnostic Radiography and higher energy rays (1 - 25 MeV) being used in Radiation Therapy. Diagnostic X-ray wavelengths range from roughly 0.1 nm to 0.01 nm, while those of visible light in comparison are between about 400 nm and 650 nm (colors violet to red).

Finally the radiation can be classified depending on whether or not it produces ions in the material it irradiates. Energetic radiations such as some ultraviolet, X-rays and gamma rays generate ions when they interact with matter and are referred to as Ionizing Radiation, while lower energies give rise to Non-Ionizing Radiation.

Diagnostic X-Rays

This form of electromagnetic radiation was originally discovered by Wilhelm Röntgen at the end of the 19th century[1]. He was using a Crookes tube to experiment with cathode rays and noticed that a nearby piece of cardboard which was coated with barium platinocyanide crystals began to fluoresce. Shortly after his discovery he noticed the X-ray shadow of his hand on this screen and later produced the first radiograph, that of his wife’s hand. The rest, as they say, is history!

Röntgen himself used to refer to the radiation as X-rays - the ‘X’ implying unknown. In some parts of the world, they are known as Röntgen-Rays, in memory of their discoverer. In fact, a leading society is known as the American Roentgen Ray Society and has published the American Journal of Roentgenology for many years.

Today we know that X-rays can be produced by a whole range of materials when we fire an energetic beam of electrons at them. Tungsten is a very common metal used for X-ray production in medicine partly because its electron binding energies are such that quite penetrating radiation can be produced. What happens is illustrated in Figure 1.5.

An electric current can be passed through a small filament inside a vacuum tube to produce electrons. These are formed into an electron cloud and accelerated across the tube to hit a tungsten anode. The acceleration can be provided by hooking the anode and cathode up to a high voltage supply, which can typically generate voltages in the region of 20 to 150 kV. X-rays are then produced within the tungsten target, as indicated by the short arrows in the figure.

X-rays are produced within the tungsten by two processes: electron ejection and electron deceleration. In the former process, X-rays can be produced when electrons in the electron beam collide with and eject electrons from the tungsten atoms - as illustrated in Figure 1.6.

Panel (a) of the figure illustrates four electron shells (K, L, M and some N) of a tungsten atom. The other electrons aren’t shown so as to simplify the figure. When an incoming electron from the electron beam collides with a K-shell electron with sufficient energy, it can eject the electron from the atom and leave a vacant position in that shell, as shown in panel (b). This vacancy is then filled by an electron dropping from an outer shell - a process which is accompanied by the emission of an X-ray. The dropping electron can come from any of the outer shells - the N-shell is shown as an example in panel (c), but it could be the L- or M-shells, or indeed from the P- or O-shells (which if you remember aren’t shown in the figure).

In the case where an L-shell electron fills a K-shell vacancy, the L-shell is subsequently left with its own vacancy, which can then be filled by an electron from an outer shell resulting in a cascading-type effect until the vacancy is transferred to the outer shell.

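The X-rays emitted in this type of process have an energy equivalent to the difference in binding energies of the electron shells involved in the transitions. In the case of tungsten, for an N-shell to K-shell transition (Figure 1.3), the energy of the X-ray is given by

$$E = E_K - E_N = 69.0\ \text{keV}$$

For an L-shell to K-shell transition, the X-ray energy is

$$E = E_K - E_L = 69.5 - 10.2 = 59.3\ \text{keV}$$

where $E_K$, $E_L$ and $E_N$ are the binding energies of the respective shells.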

The X-rays produced by these transitions are called Characteristic Radiation because they are characteristic of the element which produces them. Thus, for example, the principal transitions in copper, whose energy level diagram is shown in Figure 1.8, are 8.97 keV (N-shell to K-shell), 8.91 keV (M-shell to K-shell) and 8.05 keV (L-shell to K-shell).

The cascaded refilling of a vacancy in the K-shell of copper is illustrated in Figure 1.9 for the case where the incoming photon transfers 30 keV to a K-shell electron. Note (see the left side of the figure) that the ejected electron has an energy of 30 keV minus the binding energy of the K-shell (8.98 keV), i.e. 21.02 keV. Note also that the subsequent (see the right side of the diagram) L-shell to K-shell transition will generate an X-ray with an energy of 8.05 keV, that an M-shell to L-shell transition will generate one with an energy of 0.86 keV and that an N-shell to M-shell transition will generate a 0.06 keV X-ray.
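
The arithmetic of this cascade can be sketched in a few lines. Only the K-shell binding energy of copper (8.98 keV) is stated in the text; the L-, M- and N-shell values used below are inferred from the quoted transition energies and should be read as assumptions, as should the function names.

```python
# Sketch of the copper cascade. Only the K-shell binding energy is given
# in the text; the L, M and N values are inferred from the quoted
# transition energies and are assumptions.
BINDING_KEV = {"K": 8.98, "L": 0.93, "M": 0.07, "N": 0.01}


def transition_energy(outer: str, inner: str) -> float:
    """Energy (keV) of the X-ray emitted when an electron drops from
    the outer shell into a vacancy in the inner shell."""
    return round(BINDING_KEV[inner] - BINDING_KEV[outer], 2)


def ejected_electron_energy(transferred_kev: float, shell: str) -> float:
    """Kinetic energy (keV) of an electron ejected from the given shell."""
    if transferred_kev < BINDING_KEV[shell]:
        raise ValueError("not enough energy to ionize this shell")
    return round(transferred_kev - BINDING_KEV[shell], 2)


cascade = [("L", "K"), ("M", "L"), ("N", "M")]
print("ejected electron:", ejected_electron_energy(30.0, "K"), "keV")
for outer, inner in cascade:
    print(f"{outer} -> {inner}:", transition_energy(outer, inner), "keV")
```

The same function applied to the direct M-shell to K-shell and N-shell to K-shell transitions gives the 8.91 keV and 8.97 keV lines quoted earlier for copper.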

The second process by which X-rays are generated when an energetic electron beam is fired at a dense material like tungsten is due to the deceleration of these electrons as they get deflected by the electrostatic fields of the material’s atoms. This process results in what is called braking radiation - also more commonly known as Bremsstrahlung, a German word meaning the same thing.

The electrons in the beam experience the positive and negative electrostatic forces of the material’s atomic nuclei and orbiting electrons. An incoming electron can be deflected from its original direction by these forces and can lose energy which is emitted in the form of an X-ray photon. Typically, the electrons in the beam experience many such interactions before coming to rest. Occasionally, some of them may collide with atomic nuclei, which can give rise to the generation of an X-ray photon whose energy is equal to that of the incoming electron.

A broad spectrum of X-ray energies results, with a maximum energy equal to the energy of the electrons in the beam.

The combined effect of these two processes is illustrated in Figure 1.10. It is seen that the X-ray spectrum generated when a beam of electrons accelerated through 100 kV collides with a tungsten anode consists of a broad Bremsstrahlung spectrum, whose intensity decreases with energy, with lines from the energies of tungsten’s Characteristic Radiation superimposed. Note that the maximum energy of the X-rays generated by the 100 kV electron beam is 100 keV. Similarly, if the electron beam had a different energy, then the maximum X-ray energy would be at that different energy (the Duane-Hunt Law). Also note that if an electron beam energy lower than the K-shell binding energy of tungsten is used, e.g. 60 kV, then only a Bremsstrahlung spectrum would be obtained without any lines from the K-Characteristic Radiation. The Characteristic lines for shells other than the K-shell are not shown in the figure because for tungsten they have such a low energy (around 7.5 to 10 keV in the case of the L-Characteristic Radiation) that they are of little interest to us here.
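
The two observations above (the spectrum ends at the tube voltage, and K lines appear only above the K-shell binding energy) can be sketched as follows. The constants are rounded, the function names are illustrative, and the minimum wavelength is obtained from E = hc/λ, which is an added step not spelled out in the text.

```python
# Sketch: maximum photon energy and minimum wavelength for a given tube
# voltage (Duane-Hunt Law), plus a check on whether tungsten K lines can appear.
H = 6.63e-34            # Planck's constant, J s (rounded)
C = 3.0e8               # speed of light, m/s (rounded)
EV = 1.6e-19            # joules per electronvolt (rounded)
TUNGSTEN_K_KEV = 69.5   # K-shell binding energy of tungsten, from the text


def max_photon_energy_kev(tube_kv: float) -> float:
    """An electron accelerated through V kilovolts gains V keV."""
    return tube_kv


def min_wavelength_nm(tube_kv: float) -> float:
    """Shortest wavelength in the spectrum, from E = h c / lambda."""
    energy_j = max_photon_energy_kev(tube_kv) * 1e3 * EV
    return H * C / energy_j * 1e9


def k_lines_possible(tube_kv: float) -> bool:
    """K-Characteristic lines need electrons above the K binding energy."""
    return max_photon_energy_kev(tube_kv) > TUNGSTEN_K_KEV


for kv in (60, 100):
    kind = "K lines possible" if k_lines_possible(kv) else "Bremsstrahlung only"
    print(f"{kv} kV: minimum wavelength {min_wavelength_nm(kv):.4f} nm, {kind}")
```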

Finally, note that the bulk of the radiation in the X-ray spectrum for a tungsten anode is seen in Figure 1.10 to result from Bremsstrahlung.

X-Ray Detection

X-rays were first detected by Röntgen himself using the fluorescence generated within barium platinocyanide crystals. These fluorescent crystals produce scintillations (i.e. minute flashes of light) when they absorb X-rays. Numerous other materials were also found to scintillate, e.g. calcium tungstate, gadolinium oxysulfide, cesium iodide and sodium iodide to name just four which have been widely used in Diagnostic Radiography. Figure 1.11 illustrates the use of an intensifying screen in the early 20th century.

The fluorescence of these materials following X-ray exposure can be explained using an Energy Band Theory. Here the electron environment in certain crystalline materials can be considered to be represented by two energy bands - the Valence Band and the Conduction Band. These are separated by a forbidden energy zone known as the Energy Gap, as shown in Figure 1.12.

The ‘Increasing Energy’ arrow in the figure refers to the energy of the electrons. The outer-shell electrons of the crystal’s atoms are considered to occupy the valence band (V). When they leave the atom they are considered to join the conduction band (C). An energy gap exists between these two bands which normally cannot be occupied by electrons. However, impurities and lattice defects in the crystal may generate intermediate energy levels (T) which can trap electrons within the energy gap. These traps may result from the manufacturing process, for instance, or they may be designed into the crystal structure.

The process of X-ray absorption is shown in Figure 1.13. X-ray energy absorption can cause an electron from the valence band to be elevated to the conduction band, once it is given sufficient energy to overcome the energy gap (Process #1). Electrons from such stimulation can be collected directly (Process #2), as in materials such as amorphous selenium, for the detection of X-rays. This process is exploited in direct Digital Radiography (DR) image receptors.

Alternatively, the stimulated electron may immediately drop back down to the valence band (Process #3) - an event which is accompanied by the emission of visible and/or ultraviolet radiation. This is the process followed in fluorescent materials and which is exploited in radiographic intensifying screens for applications such as film/screen radiography, fluoroscopy and indirect Digital Radiography. It is also the process exploited by scintillation-based radiation detectors.

Another alternative is that the electron dropping from the conduction band gets trapped in an intermediate energy level (Process #4). The electron stays in this trap until something happens to release it - an event which is accompanied by the emission of light in some crystals. This ‘something’ can generally be provided by another form of energy, such as heat - as in thermoluminescence - or light - as in photo-stimulable luminescence. The thermoluminescent process is exploited in the thermoluminescent dosimeter (TLD) which is widely used in personal radiation monitoring. Photo-stimulable luminescence (PSL) is exploited for X-ray imaging purposes in Computed Radiography (CR).

The Detection Efficiency expresses the ability of radiation detectors and image receptors to absorb radiation while the Conversion Efficiency is used to express the conversion of that absorbed energy into a measurable quantity, e.g. light or electric current. Note that both quantities are needed to express detector performance. For instance, if lead were to be used as a radiation detector, it could have a detection efficiency of close to 100% depending on its thickness, but a conversion efficiency close to 0% since a measurable light or electric current signal would not be generated.

Materials used for X-ray image reception are generally of three types:

- Unstructured Phosphors, such as gadolinium oxysulfide (Gd₂O₂S:Tb) and barium fluorobromide (BaFBr:Eu), in a powder form distributed within a transparent binding substrate;

- Structured Phosphors such as cesium iodide (CsI:Tl), which consist of dense, narrow crystals each ~5 μm in diameter; and

- Photoconductors, such as amorphous selenium (a-Se), which generate electric signals directly in response to X-ray exposure.

Detection efficiency increases with larger thicknesses for all these materials. However, a thicker phosphor causes a loss in spatial resolution with unstructured phosphors because of diffusion of light photons produced within the phosphor substrate. This is the case for early CR and some DR imagers. Thinner phosphors will reduce the amount of this diffusion but their lower detection efficiency will substantially increase the exposure required for adequate images.

In contrast, structured phosphors, used in some CR, many indirect DR receptors and in X-ray image intensifiers, are made from microscopic columnar crystalline structures that internally reflect light in a fiber optic-like manner with minimal diffusion. This allows a relatively thick phosphor to be used that has superior detection and conversion efficiencies.

Photoconductors, used in direct DR receptors, generate electrons directly which are collected with minimal spread by electrodes and as a result give excellent conversion efficiency. The semiconducting material can be made relatively thick which improves the detection ability. However, the X-ray absorption characteristics of these materials tend to be poor relative to phosphorescent materials and their detection efficiency is therefore lower. Their detection efficiency for visible light, however, is rather high, as evidenced by their widespread use in photocopiers, laser printers etc., which leads to the concept of a hybrid detector consisting of a phosphor screen coupled to a photoconductor.

The ionization of atoms, illustrated in Figure 1.13 (Process #2), can also occur in other materials, such as gases. Here we consider the ionization process to result in the creation of an ion-pair, i.e. a positive ion and an electron. Following creation by X-ray absorption, ion pairs are swept away by an applied electric field to be collected by electrodes and measured as an electric current. This is what happens in radiation detectors such as Ionization Chambers and Geiger Counters.

Attenuation of X-Rays

The intensity of an X-ray beam is reduced as it passes through a material because of collisions between the X-ray photons and the atoms of the material. The beam is said to be attenuated because the collisions result in both the absorption and the scattering of photons. The main absorption process (called the Photoelectric Effect) totally removes photons from the radiation beam. The main scattering process (called the Compton Effect) results in a reduction in the energy of the photons and a change in their direction. We will consider these processes in more detail in a later chapter, and for the time being will develop a mathematical description of the phenomenon.

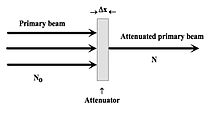

For simplicity, consider what happens when a narrow X-ray beam strikes an attenuating material, as shown in Figure 1.14. Let's assume that the primary beam consists of photons of a single X-ray energy, i.e. monoenergetic radiation, and that no scattering occurs. The X-ray attenuation, i.e. the reduction in the number of photons in the beam, can therefore be represented by:

$$N - N_0 = -\mu N_0 \Delta x$$

where N₀ and N are the number of X-ray photons in the incident and transmitted radiation beams, Δx is the thickness of the attenuator and μ is a constant called the Linear Attenuation Coefficient, which is characteristic of the attenuating material. The more attenuating a material is, the greater the value of μ.

By integrating this expression for all attenuator thicknesses, we can write:

$$N = N_0 e^{-\mu x}$$

where x is the total thickness of the attenuator. The number of X-ray photons can be multiplied by their energy to express the above relationship in terms of radiation intensities, i.e.

$$I = I_0 e^{-\mu x}$$

where I and I₀ are the transmitted and incident radiation intensities. This equation is called the Beer-Lambert Law.

Thus a value of μ = 0.01 cm⁻¹ implies that ~1% of X-ray photons incident on an absorber of thickness 1 cm will be attenuated and 99% will be transmitted through. When μ increases to 0.5 cm⁻¹ for an absorber of the same thickness, the transmission is ~60% (i.e. $e^{-0.5}$) and the attenuation provided is therefore ~40%.

The transmissions calculated for 1 cm thickness of soft tissue and bone, for instance, are shown in the following table at X-ray energies of 30 and 60 keV. Note that the typical transmission for a chest radiograph is about 10%, for the skull it is about 1% while for the abdomen it is about 0.5%.

| Material | μ at 30 keV (cm⁻¹) | Transmission at 30 keV | μ at 60 keV (cm⁻¹) | Transmission at 60 keV |
|---|---|---|---|---|
| Soft Tissue | 0.38 | 68% | 0.21 | 81% |
| Bone | 1.6 | 20% | 0.45 | 64% |
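
The transmissions in this table follow directly from the Beer-Lambert Law for a 1 cm thick absorber. The sketch below recomputes them from the tabulated attenuation coefficients, under the same assumptions as the text (a monoenergetic beam with no scatter); the function and variable names are illustrative.

```python
import math

# Linear attenuation coefficients (cm^-1) taken from the table above.
MU = {
    ("Soft Tissue", 30): 0.38,
    ("Soft Tissue", 60): 0.21,
    ("Bone", 30): 1.6,
    ("Bone", 60): 0.45,
}


def transmission(mu_per_cm: float, thickness_cm: float) -> float:
    """Fraction of a monoenergetic, scatter-free beam transmitted
    through the given thickness (Beer-Lambert Law)."""
    return math.exp(-mu_per_cm * thickness_cm)


for (material, kev), mu in MU.items():
    print(f"{material} at {kev} keV: {transmission(mu, 1.0):.0%}")
```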

To include the density, ρ, of the attenuating material, the Mass Attenuation Coefficient, μm, is defined as:

$$\mu_m = \frac{\mu}{\rho}$$

This parameter is used to account for the different material phases of different absorbers, e.g. for water, μm is theoretically the same whether the substance is in its liquid, ice or vapour phase. The Beer-Lambert Law can be re-written on this basis as:

$$I = I_0 e^{-\mu_m \rho x}$$

with the product ρx being referred to as an absorber’s projected thickness.

This equation can be plotted on linear/linear and log/linear axes - see Figure 1.15. An exponential decrease in intensity can be seen in panel (a), in contrast to the equivalent linear decrease in log intensity shown in panel (b). Note that the slope of this linear decrease is given by -μm.

A useful practical parameter can be derived from the linear attenuation coefficient called the Half Value Layer (HVL). This expresses the thickness of absorber required to halve the beam intensity. It is possible to show that:

$$\text{HVL} = \frac{\ln 2}{\mu} \approx \frac{0.693}{\mu}$$

Calculated HVLs for soft tissue and bone at 30 and 60 keV are shown in the following table:

| Material | HVL at 30 keV (cm) | HVL at 60 keV (cm) |
|---|---|---|
| Soft Tissue | 1.82 | 3.3 |
| Bone | 0.43 | 1.54 |
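
These HVLs can be checked from the linear attenuation coefficients quoted earlier for soft tissue and bone, using HVL = ln 2 / μ for a monoenergetic beam. The second helper, which counts how many HVLs are needed to reach a given transmission, is an illustrative addition and not part of the original text.

```python
import math


def half_value_layer(mu_per_cm: float) -> float:
    """Thickness (cm) that halves a monoenergetic beam: ln(2) / mu."""
    if mu_per_cm <= 0:
        raise ValueError("attenuation coefficient must be positive")
    return math.log(2) / mu_per_cm


def hvls_for_transmission(target_fraction: float) -> float:
    """Number of HVLs needed to reduce the beam to the target fraction."""
    if not 0 < target_fraction <= 1:
        raise ValueError("target fraction must be in (0, 1]")
    return math.log2(1 / target_fraction)


for material, mu30, mu60 in [("Soft Tissue", 0.38, 0.21), ("Bone", 1.6, 0.45)]:
    print(f"{material}: {half_value_layer(mu30):.2f} cm at 30 keV, "
          f"{half_value_layer(mu60):.2f} cm at 60 keV")

print(f"HVLs for 10% transmission: {hvls_for_transmission(0.10):.2f}")
```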

The HVL is a concept widely applied in radiological protection and is generally expressed in terms of the thickness of the material used for attenuation, e.g. mm of aluminium, lead, copper etc.

In the case of polychromatic radiation, in contrast to the monoenergetic situation considered above, the effect of beam hardening, where lower energy photons are preferentially removed from the beam as it passes through the attenuator, effectively reduces the slope of the log intensity/projected thickness plot and a pure linear relationship is no longer valid - see Figure 1.16. From a practical point of view, this implies that the second HVL of an attenuator is greater than the first HVL, for instance, when polychromatic X-rays are used.
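
Beam hardening can be illustrated with a toy beam made of just two energies. The sketch below assumes equal incident intensities at 30 and 60 keV (an arbitrary choice made for illustration) and uses the soft-tissue attenuation coefficients quoted earlier; a real diagnostic spectrum is continuous, so the numbers are indicative only.

```python
import math

# Two-energy toy beam: equal incident intensities (an assumption) at
# 30 and 60 keV, with the soft-tissue coefficients quoted earlier.
COMPONENTS = [(0.5, 0.38), (0.5, 0.21)]   # (relative intensity, mu in cm^-1)


def transmitted_fraction(thickness_cm: float) -> float:
    """Sum of the Beer-Lambert transmissions of each energy component."""
    return sum(w * math.exp(-mu * thickness_cm) for w, mu in COMPONENTS)


def thickness_for_fraction(target: float, upper_cm: float = 100.0) -> float:
    """Bisection search for the thickness giving the target transmission."""
    low, high = 0.0, upper_cm
    for _ in range(100):
        middle = (low + high) / 2
        if transmitted_fraction(middle) > target:
            low = middle    # still too much transmitted: go thicker
        else:
            high = middle
    return (low + high) / 2


first_hvl = thickness_for_fraction(0.5)
second_hvl = thickness_for_fraction(0.25) - first_hvl
print(f"first HVL  = {first_hvl:.2f} cm")
print(f"second HVL = {second_hvl:.2f} cm")
```

Because the 30 keV component is removed faster than the 60 keV component, the remaining beam is more penetrating and the second HVL comes out larger than the first.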

A consequence of using polychromatic X-rays is that the HVL for the range of energies needs consideration. As a result, the HVL for soft tissue in diagnostic radiography is different from that calculated in the table above, and is generally about 2.5 to 3 cm.

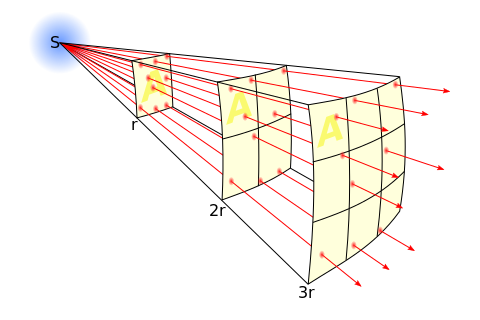

A related effect has to do with the divergent nature of X-ray beams and results in the Inverse Square Law - see Figure 1.17. Note that this divergence reduces the intensity of the X-ray beam in inverse proportion to the square of the distance, r, from the radiation source, S. Therefore, if we re-position a patient at three times the distance from the source, the radiation intensity will be reduced by a factor of nine and exposure factors ideally need to be increased by this factor to compensate. This law is also a fundamental tenet of radiation protection along with exposure duration and radiation shielding. We will consider such protective measures in a later chapter.

In addition, note that the product of the beam intensity and the beam area is independent of the distance, r, from the source. This occurs because the reduction in intensity given by the inverse square law is counterbalanced by the increase in beam area as r increases. This is the basis of operation for a radiation dosemeter called the Dose-Area Product (DAP) meter. It typically consists of an Ionization Chamber which is located in the X-ray beam close to the source and can be used for the estimation of patient dose - as discussed in a later chapter.
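
Both points can be put into numbers with a minimal sketch. It assumes a point source and ignores attenuation in air, and all quantities are expressed relative to a reference distance; the function names are illustrative.

```python
def relative_intensity(distance: float, reference: float = 1.0) -> float:
    """Beam intensity relative to its value at the reference distance
    (Inverse Square Law, point source, no attenuation in air)."""
    return (reference / distance) ** 2


def relative_beam_area(distance: float, reference: float = 1.0) -> float:
    """Cross-sectional area of a divergent beam relative to the reference."""
    return (distance / reference) ** 2


def relative_dose_area_product(distance: float, reference: float = 1.0) -> float:
    """Intensity multiplied by area: this stays constant with distance."""
    return relative_intensity(distance, reference) * relative_beam_area(distance, reference)


for r in (1.0, 2.0, 3.0):
    print(f"r = {r}: intensity x{relative_intensity(r):.3f}, "
          f"area x{relative_beam_area(r):.0f}, "
          f"product x{relative_dose_area_product(r):.2f}")
```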

Radiography Systems

An X-ray imaging system consists basically of an X-ray tube (XRT), an image receptor and patient positioning devices. Additional components are required to supply electrical power to the XRT, to shape the radiation beam to the region of interest and to position the XRT relative to the patient. A computer is also needed in modern systems for displaying the radiographic image, annotating it and for communicating with a Picture Archiving and Communication System (PACS). The computer can also be used for providing a user interface to the X-ray system and for controlling radiation exposures.

The basic set-up is shown in Figure 1.18. An XRT is seen to fire a defined X-ray beam at a patient, where the radiation is attenuated by absorption and scattering before an X-ray shadow is recorded by a digital image receptor. The scattering is in all directions (indicated by the grey haze around the patient in the figure). X-ray absorption gives rise to the total removal of X-rays from the beam depending on constituents of the patient’s anatomy, so that it is the X-rays which are not absorbed which reach the image receptor to record the X-ray shadow. We refer to these X-rays as Primary Radiation. It can be seen in the diagram that some of the scattered radiation also reaches the image receptor.

This simple set-up is referred to as a Radiography system and diagnostic applications include thoracic, abdominal and skeletal imaging. Typical systems use XRTs powered with generators rated at up to 80 kW. The exposure factors are selected on a console according to the specific body part being imaged. Given internal body motions, it may be necessary to use X-ray exposures of very short duration (e.g. 1 ms) to avoid image blurring.

The digital image receptor, be it Computed Radiography (CR) or Digital Radiography (DR), has replaced the traditional film-screen receptor in many environments. The maximum size is typically 43 cm by 43 cm and images are digitized at a resolution up to 3k x 3k pixels. Each pixel can therefore have a size of about 140 μm which generates reasonably sharp X-ray images. In addition, digital filters can be applied to enhance image quality. Furthermore, radiation detectors can be mounted at the image receptor to provide automatic exposure control (AEC), as we will describe in more detail in the next chapter.
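
The pixel size quoted above is simply the receptor width divided by the number of pixels across it. In the sketch below "3k" is taken to mean 3000 pixels, which is an assumption; the function name is illustrative.

```python
def pixel_pitch_um(receptor_size_cm: float, pixels_across: int) -> float:
    """Pixel pitch in micrometres for a receptor of the given width."""
    if pixels_across <= 0:
        raise ValueError("pixel count must be positive")
    return receptor_size_cm * 1e4 / pixels_across   # 1 cm = 10,000 um


pitch = pixel_pitch_um(43, 3000)   # "3k" assumed to mean 3000 pixels
print(f"pixel pitch: {pitch:.0f} um")
```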

The XRT and image receptor can be deployed in a number of mechanical configurations. A broad range of positions can be provided using ceiling-supported XRTs - see Figure 1.19 - although wall and floor-mounted configurations, for example, are also in use. This type of system can also be adapted for mobile radiography so that a console/generator supported XRT can be used. As a final point of note, modern radiography systems are capable of cataloging the XRT and image receptor locations, the exposure settings as well as post-processing functions in body part-specific acquisition protocols, which makes for easy and efficient operation.

While fluoroscopy systems are generally used to record X-ray movies, they can also be used to snapshot individual exposures. Hence, they are referred to as Radiography/Fluoroscopy (R/F) systems. In addition, while some fluoroscopy systems employ the traditional X-ray image intensifier (XII) as image receptor, many modern systems are based solely on digital image receptors. Furthermore, in XII-based systems, radiographs can be obtained with the addition of a DR, CR or film/screen receptor.

Application areas include the oesophagus, stomach and colon (using barium-based contrast media) as well as phlebography, myelography and vascular studies (using iodine-based contrast media), urology and weight-bearing skeletal studies. Generally, the XRT, image receptor and patient table are attached to each other mechanically - see Figure 1.20 - although it is also possible for the XRT and receptor to be mounted on a structure such as a C-arm to allow improved flexibility.

The table can also be tilted in many designs so as to allow gravity to encourage the flow of contrast media into a body part, for instance. Many conventional systems use an under-table XRT with the XII suspended above the patient table, while remotely-controlled systems use an over-table XRT with the image receptor mounted under the patient table - see Figure 1.21 for an example. Furthermore, mobile fluoroscopy systems are available, where a small C-arm holding the XRT and image receptor is mounted on the generator/console - Figure 1.22 - for use in operating theaters and emergency departments, for instance.

The size of the image receptor depends on the primary application of the system. Mobile fluoroscopy systems generally have smaller receptors, e.g. 23 cm, while fixed systems have detectors with sizes up to 40 cm. These larger devices can also be used to provide electronically-magnified images.

Angiography systems are specially-adapted fluoroscopy systems designed specifically for vascular studies and interventional radiology, e.g. percutaneous transluminal angioplasty, repair of vascular stenoses and aneurysms using stents and coils, and transjugular intrahepatic portosystemic shunt (TIPS) procedures. Angiography systems used in neuroradiology can have a biplane design where two intersecting C-arms (each with its own XRT and image receptor) are used to acquire images simultaneously from two different projections. This arrangement is particularly useful because of the complexity of the cerebrovascular tree. In addition, by rotating the C-arm around the patient during exposure it is possible to perform Cone-Beam Computed Tomography (CT) and to reconstruct three-dimensional (3D) images of the vasculature.

These sophisticated systems typically digitize images up to 2k x 2k pixel resolution and can generate frame rates of up to 30 images per second using continuous exposures, although pulsed acquisitions at lower frame rates can also be used. They can be powered by high-voltage generators of up to 80 kW rating. Older system designs are based on XIIs for image reception, which can be up to 40 cm in diameter. More modern designs incorporate digital detectors with a similar field of view. In addition, some specialized systems can incorporate an ultrasound scanner which allows for the imaging of arterial punctures and biopsies, for instance.

Cardiology systems are designed exclusively for the diagnosis and treatment of cardiac diseases and are housed in facilities called Catheter Laboratories, or Cath Labs for short. A standard procedure might involve imaging of the coronary tree, following selective injection of contrast medium using a catheter, and analysis of left ventricular function. The analysis might involve, for instance, calculation of ejection fractions and assessment of regional ventricular wall motion. In addition, the internal electrophysiological state of the heart can be monitored using special catheters. Instrumentation for displaying vital signs, such as blood pressure, oxygen saturation and ECG, can also be incorporated into these systems. Typical therapeutic applications include percutaneous transluminal coronary angioplasty (PTCA) to open stenotic lesions (and possible subsequent stenting), ablation for atrial fibrillation and the placement of intra-cardiac devices.

Cardiac angiography systems can generate frame rates of up to 50/60 images per second, in order to effectively capture cardiac motion. They typically use image receptors of about 25 cm in size.

The Fourier Transform

An elegant approach to the assessment of detail in radiographic images can be provided by Fourier methods. These methods can be used, for instance, in the mathematical analysis of factors which contribute to and detract from the generation of images with excellent quality, and for the computer manipulation of subtle details within images. The basis of these methods is described conceptually in this section.

The conventional way of interpreting radiographic images in medicine is to regard them as representations of human anatomy. In the mathematical analysis of these images, however, we can interpret them as fluctuations of signal amplitude in space, as demonstrated in Figure 1.23.

A far simpler image with which to start our consideration is provided by a resolution grating - see Figure 1.24. Note that the width of each slit in the grating is the same as that of the adjacent piece of lead, so that the radiation intensity transmitted through it can be considered in profile to be represented by a square wave - see panel (b). A spatial period (usually measured in mm) can be used to characterize this square wave and is equal to the width of one line pair, i.e. the width of a slit plus its adjacent piece of lead. Its reciprocal is called the Spatial Frequency, which is generally expressed in line pairs/mm (LP/mm); the equivalent unit cycles/mm is also in common use. The spatial frequency in the figure increases from left to right, eventually exceeding what the imaging system can resolve. This spatial frequency perspective is generally adopted in Fourier methods, and such frequencies are said to occupy the spatial frequency domain.
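The reciprocal relationship between spatial period and spatial frequency can be illustrated with a short calculation. This is a minimal sketch: the function name and the slit widths are hypothetical values chosen for illustration and are not taken from Figure 1.24.

```python
def spatial_frequency_lp_per_mm(slit_width_mm, lead_width_mm=None):
    """Return the spatial frequency (LP/mm) for one section of a grating.

    One line pair is a slit plus its adjacent piece of lead. If no lead
    width is given, it is assumed equal to the slit width, as in the text.
    """
    if lead_width_mm is None:
        lead_width_mm = slit_width_mm
    period_mm = slit_width_mm + lead_width_mm  # width of one line pair
    return 1.0 / period_mm                     # reciprocal of the period


# Hypothetical slit widths, becoming narrower from left to right.
for slit_mm in (0.5, 0.25, 0.1):
    frequency = spatial_frequency_lp_per_mm(slit_mm)
    print(f"slit {slit_mm} mm -> {frequency:.1f} LP/mm")
```

Narrower slits give shorter periods and therefore higher spatial frequencies, which is why the finest section of a grating is the first to become unresolvable.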

A fundamental feature of Fourier methods is that they can be used to demonstrate that any waveform can be approximated by the sum of a large number of sine waves of different frequencies and amplitudes. The converse is also true, i.e. a composite waveform can be broken into an infinite number of constituent sine waves. This is demonstrated in Figure 1.25, where the process of adding sine waves can be seen to generate reasonable approximations to a square wave. The mathematical process for identifying constituent sine waves in a composite waveform is called the Fourier Transform. It is generally calculated using a computer algorithm called the Fast Fourier Transform (FFT). The reverse process of summing such sine waves to generate a composite waveform is called the Inverse Fourier Transform - see Figure 1.26.
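The summation of sine waves described above can be sketched numerically. The example below assumes an idealized square wave that swings between -1 and +1, for which the standard Fourier series contains only odd harmonics with amplitudes falling as 1/k; a real grating profile would also have a constant offset. The function name and the 1 mm period are illustrative assumptions.

```python
import math


def square_wave_partial_sum(x_mm, period_mm, n_terms):
    """Sum the first n_terms odd harmonics of a +/-1 square wave at x_mm."""
    total = 0.0
    for i in range(n_terms):
        k = 2 * i + 1  # odd harmonics only: 1, 3, 5, ...
        total += math.sin(2 * math.pi * k * x_mm / period_mm) / k
    return 4.0 / math.pi * total


period_mm = 1.0  # assumed spatial period, i.e. 1 LP/mm
for n_terms in (1, 3, 10, 100):
    # Evaluate at the middle of the "slit" half of the period,
    # where the ideal square wave equals +1.
    value = square_wave_partial_sum(0.25 * period_mm, period_mm, n_terms)
    print(f"{n_terms:3d} sine waves -> {value:.4f}")
```

With a single sine wave the value overshoots the plateau, and as more harmonics are added the sum settles towards +1, mirroring the progression of approximations the text describes.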

The Fourier Transform can therefore be used to convert image data from the spatial domain into the spatial frequency domain and the inverse transform can be used to reconvert such frequency information back into spatial dimensions. We can see in Figure 1.25 that a large number of sine waves can be summed together to form a square wave. It can therefore be concluded that when a square wave is presented to an imaging system, the equivalent of an infinite number of sine waves is also being presented. Furthermore, the response of an imaging system to a square wave input is equivalent to its response to an input of an infinite number of sine waves, i.e. the response of the imaging system to all spatial frequencies. Fourier methods are therefore widely used in the assessment of the spatial resolving capabilities of different imaging systems.

In addition, while in the frequency domain, image data can be manipulated with sophisticated methods not available to spatial domain data. This is the basis of its use in digital image processing to enhance subtle features in images. We will consider this feature in more detail when we consider the processing of digital radiography images and in image reconstruction for 3D radiographic imaging in later chapters.

FFTs can also be calculated in two dimensions to give results such as those in Figure 1.27. Since Fourier analysis generates results in terms of both positive and negative spatial frequencies, these can be plotted in the form of a 2D image so that the zero frequency lies at the origin and those for the horizontal and vertical directions are shown increasing away from that origin. The modulation at different spatial frequencies is represented using a grey-scale. Low frequency bands can be seen along the horizontal axis in the figure, for example, representing the horizontal periodicity of image data from the fingers, while finer bands along the y-dimension are indicative of a periodicity of image data from the various metacarpophalangeal joints. Higher frequency features can also be seen running diagonally in this 2D-FFT, representative of the trabecular structure of the bones, for example.
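The layout of such a 2D plot can be illustrated with a small sketch. It uses a naive discrete Fourier transform written with the Python standard library so that it is self-contained; in practice a library FFT would be used instead (for example NumPy's `numpy.fft.fft2` together with `numpy.fft.fftshift`). The 8 x 8 striped test image and the function names are assumptions made for illustration and are unrelated to the hand radiograph in Figure 1.27.

```python
import cmath
import math


def dft2(image):
    """Naive 2D discrete Fourier transform of a list-of-lists image."""
    rows, cols = len(image), len(image[0])
    spectrum = [[0j] * cols for _ in range(rows)]
    for u in range(rows):          # vertical frequency index
        for v in range(cols):      # horizontal frequency index
            acc = 0j
            for y in range(rows):
                for x in range(cols):
                    angle = -2 * math.pi * (u * y / rows + v * x / cols)
                    acc += image[y][x] * cmath.exp(1j * angle)
            spectrum[u][v] = acc
    return spectrum


def center_zero_frequency(spectrum):
    """Rearrange the spectrum so the zero-frequency term sits at the centre."""
    rows, cols = len(spectrum), len(spectrum[0])
    return [
        [spectrum[(u - rows // 2) % rows][(v - cols // 2) % cols]
         for v in range(cols)]
        for u in range(rows)
    ]


# Test image: vertical stripes, 2 cycles across the width, plus a constant.
size = 8
image = [[1 + math.cos(2 * math.pi * 2 * x / size) for x in range(size)]
         for _ in range(size)]

centred = center_zero_frequency(dft2(image))
magnitude = [[abs(value) for value in row] for row in centred]

for row in magnitude:
    print(" ".join(f"{m:5.1f}" for m in row))
```

The printed grid has its largest value at the centre (the zero-frequency term) and two symmetric peaks on the horizontal axis, two samples to either side, corresponding to the positive and negative frequencies of the horizontally repeating stripes.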

The essence of this approach is that it can be used to produce a range of image processing effects by enhancing and/or suppressing features in the 2D-FFT and then converting the result back into the spatial domain using the IFT, as illustrated in Figure 1.28. Such image manipulations are considered in more detail in a later chapter. Note that the form of image processing demonstrated in the figure is for purely illustrative purposes and bears no direct medical significance.
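The principle of suppressing features in the frequency domain and then applying the inverse transform can be sketched in one dimension. This is a hedged illustration only: the signal profile, the cutoff value and the function names are assumptions, the transform is a naive DFT rather than an FFT, and the low-pass operation shown is just one of many possible manipulations, not the processing used for Figure 1.28.

```python
import cmath
import math


def dft(values, inverse=False):
    """Naive 1D discrete Fourier transform (or its inverse)."""
    n = len(values)
    sign = 1 if inverse else -1
    result = []
    for k in range(n):
        acc = 0j
        for m in range(n):
            acc += values[m] * cmath.exp(sign * 2j * math.pi * k * m / n)
        result.append(acc / n if inverse else acc)
    return result


def low_pass(profile, cutoff):
    """Zero every frequency above `cutoff` cycles per profile length."""
    n = len(profile)
    spectrum = dft(profile)
    for k in range(n):
        frequency = min(k, n - k)  # treats negative frequencies symmetrically
        if frequency > cutoff:
            spectrum[k] = 0j       # suppress this component
    # Back to the spatial domain; the imaginary parts are rounding residue.
    return [value.real for value in dft(spectrum, inverse=True)]


n = 32
coarse = [math.cos(2 * math.pi * 2 * m / n) for m in range(n)]       # 2 cycles
fine = [0.5 * math.cos(2 * math.pi * 10 * m / n) for m in range(n)]  # 10 cycles
profile = [c + f for c, f in zip(coarse, fine)]

smoothed = low_pass(profile, cutoff=4)
largest_difference = max(abs(s - c) for s, c in zip(smoothed, coarse))
print(f"largest difference from coarse component: {largest_difference:.2e}")
```

Here the fine 10-cycle detail is removed entirely and only the coarse 2-cycle component survives. Replacing the zeroing step with a gain greater than one for selected frequencies would instead enhance those features.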

Section added by Gil Zweig of Glenbrook Technologies Inc.

Current fluoroscopic imaging devices

Presently, two types of fluoroscopic imaging devices are commonly used: the cesium iodide (CsI) image intensifier, and the digital flat-panel radiographic imager. The intrinsic spatial resolution of an imaging device is, in effect, the resolution of the shadow plane. This value can be observed on the video display of the X-ray image of a radiographic resolution target when it’s placed in contact with the input window of the imaging device. As such, the commercial CsI intensifier displays a spatial resolution of three to four line pairs per millimeter (lp/mm). This resolution limits magnification. That’s due, in part, to limitations of the CsI input scintillator caused by cross-talk and scatter and, additionally, to the demagnification of the photoelectron image.

As for the digital flat-panel imager, it isn’t a true, real-time fluoroscopic imaging device. It’s more like digital film. Unlike the CsI image intensifier, which operates in real time and is capable of limited optical magnification, the flat-panel imager consists of a charge-coupled device (CCD) array coated with an X-ray-sensitive scintillation layer. The visual image formed by the X-rays striking the scintillation layer is converted into a digital image by the CCD sensors. A control computer composites the CCD signal into a video image. Magnification is determined by the ratio of the size of the CCD array to the video monitor. Flat-panel imagers resolve at 4 lp/mm.

Recently awarded U.S. Patent 7,426,258 describes a fluoroscopic camera which can achieve an intrinsic resolution of 18 lp/mm and is capable of magnifying the fluoroscopic image optically up to 40 times without resorting to geometric or digital pixel magnification. See: "Finger Tip" Fluoroscopic Image. This performance is achieved, in effect, by creating a very smooth shadow plane, positioning the specimen in close proximity to it, and then viewing the intensified shadow image optically with a zooming video camera. When the specimen is in close proximity to the shadow plane, the shadow sharpness is maximized and less influenced by the size of the focal spot. The closer the object is to its shadow, the sharper the shadow. The video camera employs a high-resolution X-ray scintillator coating coupled to the input window of a non-demagnifying night-vision image intensifier. The fine detail from the scintillator image is intensified 30,000 times at the output window. That image is viewed optically with an auto-focusing, analog CCD camera capable of programmable zoom. The first important advancement realized from this development is a magnified, real-time fluoroscopic X-ray imaging system that isn’t dependent on micro-focal spot size and the high costs associated with it. In addition, these new systems are compact and can record the fluoroscopic images as either dynamic video or static JPG.

Improved applications

The unique capability of this fluoroscopic camera has allowed the development of X-ray inspection systems for medical device applications. Because of the camera’s compact size and the increased sensitivity it exhibits, fluoroscopic imaging can be achieved at radiation levels lower than normally required for X-ray inspection. The X-ray image can be magnified without moving the specimen toward the X-ray source. A desktop X-ray inspection system produces static and dynamic fluoroscopic images with variable magnification up to 40 times, at power levels of less than 5 watts. This system is presently in use by injection molders to inspect for voids and flaws in catheter hubs as well as in low X-ray opacity PEEK (the trade name for polyetheretherketone, a polymer used frequently in molded orthopedic implants, but whose low radiopacity makes detection of voids difficult with standard X-ray methods). The system is portable and can be moved to various areas of a production facility. These features are useful for monitoring the quality of a stent’s production and development. Because the MXRA fluoroscopic camera requires relatively low exposure levels of radiation, thereby eliminating radiation scatter, access ports to the X-ray chamber permit fluoroscopic video recording of the stent’s deployment from its catheter. Rotating the stent with magnified fluoroscopy reveals wire breaks that couldn’t be detected with static imaging. To evaluate a particular stent design, the device is often held in a curved, flexible fixture and subjected to high-frequency flexing by machines designed for this purpose. Afterward, to determine if the particular stent design resulted in fatigue breaks, the fixture containing the stent is placed in the X-ray chamber and slowly rotated. The resulting video can be carefully studied for any evidence of wire breaks.

References

- Rosenow UF, 1995. Notes on the legacy of the Röntgen rays. Med Phys, 22(11 Pt 2):1855-67.