User:Arch dude/Using Materials and Textures

The geometry of an object is defined by the location of its faces, as described by the location of its vertices. The description can be further refined by subsurfing and smoothing. The geometry tells us where the object's faces are in space, but it does not describe what the object looks like. This overview describes how to define the visual appearance of the object using a material.

We describe the look of each face by assigning a material to it. The material describes how light interacts with each point on the face. There are many ways in which light interacts with a surface in the real world, and Blender permits us to describe many of them for our material. We start by defining a base material: a single set of interactions that will apply to all the points on the face. We then use textures to modify these interactions for individual points.

Finally, there must be a way to describe how a non-uniform material, which is described in two dimensions, is to be applied to our three-dimensional object: that is, which points on the two-dimensional plane of the material map onto which parts of the object.

Base material


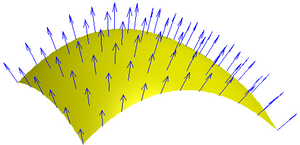

The base material settings describe how light reacts to a point on the surface given the surface normal at that point. The "surface normal" is the direction that is straight out from the tiny area surrounding the point. For a completely flat smooth surface, the surface normals for all points on the surface point in the same direction. For a rough surface, the normals vary a lot.
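As a minimal sketch of what "straight out from the surface" means, the normal of a flat triangular face can be computed from its three corners with a cross product. This is plain Python for illustration only, not Blender's own code, and the function name `face_normal` is hypothetical.

```python
import math

def face_normal(a, b, c):
    """Unit normal of the triangle a, b, c (each an (x, y, z) tuple)."""
    # Two edge vectors that lie in the plane of the face.
    ux, uy, uz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    vx, vy, vz = c[0] - a[0], c[1] - a[1], c[2] - a[2]
    # The cross product is perpendicular to both edges.
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        raise ValueError("degenerate triangle has no normal")
    return (nx / length, ny / length, nz / length)

# A face lying flat in the XY plane points straight up the Z axis.
flat = face_normal((0, 0, 0), (1, 0, 0), (0, 1, 0))
```

Every point on this flat face shares the same normal, which is why a flat smooth surface reacts to light uniformly.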

Material textures

When applied to a material, a texture is a description of how to vary one or more parameters at each location on the surface. (Blender also uses textures for world, light, and brush, but those uses are different and will be covered in a different chapter.)

A texture consists of a texture map, an effect, and a point mapping. The texture map is either an arbitrary image or one of a number of procedural maps. The texture map provides a value at each point of the texture. The effect tells Blender what to do with that value. You can create a texture with any map combined with any effect, but some combinations make more sense than others. The point mapping tells Blender how to determine which point of the texture map is to be applied to each point of the object.

Texture Map

A Map is not a Picture


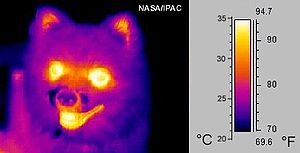

To describe the points, we use a 2-dimensional array of numbers called a "map." Each texture has a map. The map may be "procedural" or it may be an "image." It is imperative to understand that an "image map" is NOT a picture of what the observer will see when looking at the surface. Rather, it is a collection of numbers, each of which tells the renderer something about how light is affected at a point on the surface. Here is a real-world example of the difference between an image map and a picture. This temperature map, or thermogram, conveys information about the temperature at each point. It is not a picture of something the observer will actually see.

As it happens, there are several well-defined formats for conveying 2-dimensional map information for use by computer programs. These formats were developed to store images such as drawings and photographs, so we call them image formats and we manipulate them and view them as images. This is unfortunate, because when we use them as textures, we can easily fall into the trap of thinking of them as images rather than maps.

There are dozens of separate parameters needed to describe the interactions of light at a surface point, but our commonly accepted map formats ("image formats") only provide one (or three, or four) numbers per point. We get around this problem by using multiple textures on one material, with each texture providing numbers for different parameters. For the curious, an image file for a greyscale image has one value per point: intensity. A file with a photograph has three values per point: Red intensity, Green intensity, and Blue intensity. A computer-generated image file intended for compositing can have an additional number per point called "Alpha" to describe how transparent the point is.

As with the thermogram above, the human visual system can recognize and interpret patterns in two-dimensional maps that are presented as pictures. Furthermore, the visible patterns in the map are recognizably related to the visible patterns produced when the map is used in a texture. Take advantage of this, but do not be fooled by it.

An Exception: The Colormap

In one important case, a texture map really is an image ... almost.

When the texture is being used as a colormap, the four numbers per point in the map (Red, Green, Blue, and Alpha) are used to specify the Red, Green, Blue, and Alpha of the point on the surface. In this one case, the map really is an image. Unfortunately, this is frequently the very first use of texturing a Noob is exposed to, and it is therefore hard to understand that it is a special case.

Furthermore, not all real-world photographs are useful as colormaps, because a typical photograph is not a simple one-to-one description of the colors of the points on a surface. One obvious difference is the shadows in a photograph. To be generally useful as a colormap, a photograph should be an orthogonal shot of a surface with uniform illumination. Of course, a Blender artist can make good use of a photograph that does not meet these conditions, but simply ignoring this distinction is likely to yield unexpected results.

Procedural maps

It is often possible to describe a recipe for determining the number at each point in a map. In this case, there is no need to provide an image at all: we just provide the recipe, or procedure. For example, the procedure for a particular type of gradient map is: "use the number 0 on the left and the number 1 on the right and interpolate between them." Blender 2.49 is delivered with eleven procedural texture types. These are in a list that also includes "none," "image," and "plugin." As you can guess, "image" lets you select an image, not a procedure, and "none" specifies that there is no map (Noob: what?), while "plugin" allows you to specify an external script as your procedure. Each procedural map is generated by an algorithm, and many of the algorithms have parameters. When you select a procedure with parameters, a panel is added to the buttons window to let you set the parameters. The panel's contents vary depending on the type of the procedural texture.
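The gradient recipe quoted above can be sketched in a few lines of plain Python. The names `gradient` and `bake` are hypothetical and this is not how Blender implements its procedural textures; the sketch only shows that a procedure can produce the same kind of grid of numbers that an image file stores.

```python
def gradient(u, v):
    """Procedural rule: 0 at the left edge (u = 0), 1 at the right edge (u = 1)."""
    return u

def bake(procedure, width, height):
    """Sample a procedure on a width x height grid, as an image file would store it."""
    rows = []
    for y in range(height):
        v = y / (height - 1) if height > 1 else 0.0
        row = []
        for x in range(width):
            u = x / (width - 1) if width > 1 else 0.0
            row.append(procedure(u, v))
        rows.append(row)
    return rows

# Every row runs 0.0, 0.25, 0.5, 0.75, 1.0 from left to right.
grid = bake(gradient, 5, 2)
```

Because the procedure can be evaluated at any (u, v), it never needs to be stored at a fixed resolution the way an image does.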

Effects

Outside of Blender, image files are used to describe images to be viewed by humans. When an image file is used for its intended purpose (e.g., to print a photograph), there is a defined meaning for the numbers in the file: when the file has three numbers per point, the numbers specify the Red, Green, and Blue intensities, respectively, and this tells the printer exactly how much ink of each color to use at each point. When we use an image file for a different purpose, there is no accepted interpretation for the numbers. Therefore, we must provide information to Blender to tell it how to use each number. For texture maps, the way this is done varies in detail depending on which parameters are being affected by that particular texture, but the overall idea is the same: you must tell Blender how to interpret the map. Each effect controls how the map numbers are mapped to Blender parameters.

Blender provides thirteen different effects. Any texture may implement any combination of these effects. The effects are selected in the Map To tab. (As a Noob, I understand how to use a texture with exactly one effect. I do not understand what happens when no effects are selected, and I do not understand what happens when multiple effects are selected.) The effects are: Col, Nor, Csp, Cmir, Ref, Spec, Amb, Hard, RayMir, Alpha, Emit, TransLu, and Disp. These can be divided into categories:

- Color effects: Col, Csp, and Cmir affect the Diffuse, Specular, and mirror colors of the material, respectively. Each of these effects uses three values per point from the image: R, G, and B.

- Scalar parameters: Ref, Spec, Amb, Hard, RayMir, Alpha, Emit, and TransLu affect specific "scalar" values of the point. A scalar value is represented by a single number, not three different numbers. For these effects, we must describe how to reduce the R, G, and B values from a point in the map to a single number.

- Normal or bump: a Nor effect (which is either a "normal map" or a "bump map") does not affect a scalar value of the base material. Instead, it tells Blender how to recompute the surface normal at the point. A "normal map" uses the three map values at the point (R, G, B) as the offset of the normal in three dimensions (X, Y, Z). A "bump map" uses a single number per point to specify an offset to be applied to the point for purposes of computing the normal.

- Displacement: Disp is conceptually very different from the other types. It provides a scalar that tells Blender to actually move the point.
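For the scalar effects in the list above, the three map numbers at a point must be reduced to one. The sketch below assumes a plain average of R, G, and B, which is only one possible reduction and is not claimed to be the rule Blender uses. The material is modelled as a hypothetical dictionary of parameter names.

```python
def to_scalar(rgb):
    """Reduce an (R, G, B) map value to one number by plain averaging (an assumption)."""
    r, g, b = rgb
    return (r + g + b) / 3.0

def apply_scalar_effect(material, parameter, rgb):
    """Return a copy of the material with one scalar parameter replaced at this point."""
    changed = dict(material)
    changed[parameter] = to_scalar(rgb)
    return changed

base = {"Ref": 0.8, "Spec": 0.5}
# Only Spec changes at this point; Ref keeps the base material's value.
point = apply_scalar_effect(base, "Spec", (0.2, 0.4, 0.6))
```

The base material itself is left untouched, which mirrors the idea that a texture modifies parameters point by point rather than changing the material as a whole.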

Color and scalar effects are relatively easy to understand: after an effect is applied, the affected point will react exactly as if you had made the same change to the untextured material, but only for that point. You can in theory subdivide the mesh into enough faces to provide one face per texture point, and then replace the texture with a separate untextured material per point, to achieve the same effect. This is of course completely impractical, but it aids in understanding the way color and scalar textures work.

The Displacement effect is also easy to understand: the effect tells Blender to actually offset the points and create new vertices. Again, it is clear that the same result can theoretically be achieved by actually subdividing the original mesh and adding the vertices. This is also impractical, but it aids in understanding how a displacement effect works.
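A minimal sketch of the idea, assuming the point is moved along its surface normal by the map value times a hypothetical `strength` factor (the direction and the scaling are assumptions made for illustration, not a description of Blender's internals):

```python
def displace(point, normal, value, strength=1.0):
    """Move a point along its unit normal by strength * value."""
    return tuple(p + n * strength * value for p, n in zip(point, normal))

# A map value of 0.5 with strength 2.0 lifts the point one unit along +Z.
moved = displace((1.0, 2.0, 0.0), (0.0, 0.0, 1.0), 0.5, strength=2.0)
```

Unlike the color and scalar effects, the result here is a new position, so the geometry itself changes.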

Normal maps (and bump maps) are very different. They describe how to "tilt" each point on a face. It is not conceptually possible to achieve the same effect by replacing the mesh with a higher-resolution mesh, and in fact you cannot construct a real-world object that affects light in the same way as a bump-mapped or normal-mapped texture on a mesh. These maps are a very valuable way to dramatically reduce the computational complexity of rendering a model by making certain tradeoffs. The Noob should consider these to be advanced techniques to be used only when optimization becomes necessary.
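One common way to turn a bump map into a tilt is to treat each number as a height and look at how the height changes between neighbouring map points. The sketch below follows that approach as an assumption; the function name `bump_normal` and the `strength` parameter are hypothetical, and Blender's renderer may compute the tilt differently.

```python
import math

def bump_normal(height, x, y, strength=1.0):
    """Tilted unit normal at (x, y) of a 2D height map given as a list of rows."""
    rows, cols = len(height), len(height[0])
    # Slope estimated from the neighbouring map values, clamped at the borders.
    left, right = height[y][max(x - 1, 0)], height[y][min(x + 1, cols - 1)]
    down, up = height[max(y - 1, 0)][x], height[min(y + 1, rows - 1)][x]
    dx = (right - left) * strength
    dy = (up - down) * strength
    # The normal leans away from the uphill direction; the face itself never moves.
    length = math.sqrt(dx * dx + dy * dy + 1.0)
    return (-dx / length, -dy / length, 1.0 / length)

ramp = [[0.0, 1.0, 2.0],
        [0.0, 1.0, 2.0],
        [0.0, 1.0, 2.0]]
tilted = bump_normal(ramp, 1, 1)
```

Note that only the normal changes. The point stays where it is, which is why the same lighting cannot be reproduced by a real object.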

Applying the Effect

We now know how to derive a value, and what parameter is to be affected, but we still need to know how the value is to affect the parameter. Blender allows us to specify one of fourteen different texture blending modes to apply the value to the parameter. The modes are called color, value, saturation, hue, lighten, darken, divide, difference, overlay, screen, multiply, subtract, add, and mix. Nine of these are described in this Yafaray tutorial and summarized below.

Remember that in general, order is important. When a material has one texture, the values of all parameters are set by the material, and then the texture is applied. When there is a "stack" of textures, the topmost is applied, and then the next one, and then the next, until finally the texture at the bottom is applied. This means that the last texture in the stack generally has the most obvious effect.

A mode is either "commutative" or not. If it is commutative, then the two textures may be applied in either order to achieve an identical result. If the mode is not commutative, then order is important.

Material parameters are all numeric values that are scaled to be between 0 and 1: think of them as ratios, or values on a slider from "black" to "white." When a mathematical operation causes a value to be below 0 or above 1, the parameter is adjusted: below 0 becomes 0, and above 1 becomes 1.
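The clamping rule and the importance of order can be sketched together. The helper names below (`clamp`, `apply_stack`) are hypothetical, and the stack is modelled simply as a list of (mode, value) pairs applied from top to bottom. This is an illustration of the reasoning, not Blender's code.

```python
def clamp(value):
    """Force a parameter back into the 0..1 range."""
    return max(0.0, min(1.0, value))

def multiply(a, b):
    return clamp(a * b)

def subtract(a, b):
    return clamp(a - b)

def apply_stack(base, stack):
    """Apply (mode, value) pairs from the top of the stack to the bottom."""
    result = base
    for mode, value in stack:
        result = mode(result, value)
    return result

# Same two textures, opposite order: the results differ.
first = apply_stack(0.9, [(subtract, 0.5), (multiply, 0.5)])   # about 0.2
second = apply_stack(0.9, [(multiply, 0.5), (subtract, 0.5)])  # clamped to 0.0
```

Swapping the two inputs of `multiply` never changes its result, whereas swapping the inputs of `subtract` usually does, which is what the commutativity distinction above describes.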

| Mode | Rule | Commutes | Comment |
|---|---|---|---|
| color | ? | ? | ? |
| value | ? | ? | ? |
| saturation | ? | ? | ? |
| hue | ? | ? | ? |
| lighten | max(a,b) | yes | pick the greater (lighter) of a and b |
| darken | min(a,b) | yes | pick the smaller (darker) of a and b |
| divide | min(a/b,1) | no | divide a by b; if >1, set to 1 |
| difference | abs(a-b) | yes | absolute value of the difference |
| overlay | ? | ? | ? |
| screen | 1-(1-a)*(1-b) | yes | visual "opposite" of multiply: invert both inputs, multiply them, and invert the result |
| multiply | a*b | yes | multiply the values; since inputs are between 0 and 1, the result can never be whiter than either input |
| subtract | max(a-b,0) | no | subtract; if <0, set to 0 |
| add | min(a+b,1) | yes | add the values; if >1, set to 1 |
| mix | a*alpha+b*(1-alpha) | no | use the alpha channel to decide the proportion of a and b (?) |
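The rows of the table that have a known rule can be written out as small functions. This sketch follows the comments in the table (results are clamped into the 0 to 1 range) and leaves out the modes whose rules are marked "?". The dictionary name `BLEND_MODES` is hypothetical, and treating division by zero as "set to 1" is an assumption added here.

```python
def clamp(x):
    """Keep a result inside the 0..1 range used by material parameters."""
    return max(0.0, min(1.0, x))

BLEND_MODES = {
    "lighten":    lambda a, b: max(a, b),
    "darken":     lambda a, b: min(a, b),
    # Assumption: dividing by zero is treated as "above 1", so it becomes 1.
    "divide":     lambda a, b: clamp(a / b) if b > 0.0 else 1.0,
    "difference": lambda a, b: abs(a - b),
    "multiply":   lambda a, b: a * b,
    "subtract":   lambda a, b: clamp(a - b),
    "add":        lambda a, b: clamp(a + b),
}

def mix(a, b, alpha):
    """Blend a and b in the proportion given by alpha."""
    return a * alpha + b * (1.0 - alpha)

# Adding two bright values saturates at white instead of exceeding 1.
saturated = BLEND_MODES["add"](0.7, 0.6)
```

Here `a` and `b` stand for the two values being combined, as in the table.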

Stencils

It is sometimes useful to apply two different maps to the same parameter in a controlled fashion. You could do this by using an external program to create a new map from the two original maps, and then apply the new map to the parameter. However, there is a simpler way to do this within Blender: the stencil. You can tell Blender to use a map to apply an effect to another map, instead of applying it to a parameter. The resulting map is then applied to a parameter.
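As a rough sketch of the idea, assume the stencil map simply scales another map point by point before the result reaches the parameter. This is a simplification made for illustration, the function name `stencil` is hypothetical, and Blender's actual stencil behaviour has more detail than this.

```python
def stencil(mask, texture):
    """Scale each value of texture by the matching value of mask (both lists of rows)."""
    return [[m * t for m, t in zip(mask_row, tex_row)]
            for mask_row, tex_row in zip(mask, texture)]

mask = [[1.0, 0.0],
        [0.5, 1.0]]
texture = [[0.8, 0.8],
           [0.8, 0.8]]
# Where the mask is 0 the texture has no influence; where it is 1 it passes unchanged.
combined = stencil(mask, texture)
```

The combined map is then used like any other map, which is the "resulting map" described above.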

Point Mapping

A map is flat, but an object is not. A classic example of this problem is that you cannot glue a rectangular paper map of the world onto a basketball to make a globe. To use a flat material on a 3D object, we must specify the relationship of the points on the object to the points on the material. There is no feasible way for a user to specify the mapping for each point individually, and there is no reason to do so. Instead, we tell Blender to use one of its simple internal point-mapping algorithms, or we supply a UV map.
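To make the idea of a simple point-mapping algorithm concrete, here is a sketch of one possible rule for the globe example: use longitude and latitude as the two map coordinates. The function name `sphere_map` is hypothetical, and this is not claimed to match any of Blender's built-in mappings exactly.

```python
import math

def sphere_map(x, y, z):
    """Map a point on a sphere centred at the origin to (u, v) in the 0..1 square."""
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        raise ValueError("the centre of the sphere has no direction")
    longitude = math.atan2(y, x)                      # -pi .. pi around the Z axis
    latitude = math.asin(max(-1.0, min(1.0, z / r)))  # -pi/2 .. pi/2 from the equator
    u = (longitude + math.pi) / (2.0 * math.pi)
    v = (latitude + math.pi / 2.0) / math.pi
    return (u, v)

# A point on the equator lands in the middle of the map's height.
equator = sphere_map(1.0, 0.0, 0.0)
```

Near the poles a whole row of the flat map collapses onto a single point, which is the same distortion that stops a paper map from fitting a basketball.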

UV mapping

A UV map is a description of which points of the material are to be used for each point of the object. Rather than specifying every point individually, we create a 2D layout of the object's mesh. Each face of the mesh is represented in this layout. The layout explicitly identifies the mapping for each vertex, and the points on a face are interpolated based on the vertices for that face. Blender has tools that simplify the process of making a UV map from a mesh.
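The interpolation step can be sketched for a single triangular face. The sketch assumes the position inside the face is given as three barycentric weights (one per vertex, summing to 1), and the function name `interpolate_uv` is hypothetical.

```python
def interpolate_uv(weights, uvs):
    """Blend the three vertex UVs of a triangle using barycentric weights that sum to 1."""
    w0, w1, w2 = weights
    (u0, v0), (u1, v1), (u2, v2) = uvs
    return (w0 * u0 + w1 * u1 + w2 * u2,
            w0 * v0 + w1 * v1 + w2 * v2)

# UV coordinates assigned to the three vertices of one face in the layout.
face_uvs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
# A point halfway along the edge between the first two vertices.
midpoint = interpolate_uv((0.5, 0.5, 0.0), face_uvs)
```

Only the vertices are stored in the UV map. Every other point on the face gets its map coordinates from a blend like this one.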

The easiest way to think about the UV map is to think in terms of cutting seams in the mesh, laying it as flat as possible, and then stretching it until it is flat.