Intellectual Property and the Internet/Streaming media


Streaming media is multimedia that is constantly received by and presented to an end-user while being delivered by a streaming provider. With streaming, the client browser or plug-in can start displaying the data before the entire file has been transmitted.[note 1] The name refers to the delivery method of the medium rather than to the medium itself. The distinction is usually applied to media that are distributed over telecommunications networks, as most other delivery systems are either inherently streaming (e.g., radio, television) or inherently non-streaming (e.g., books, video cassettes, audio CDs). The verb 'to stream' is also derived from this term, meaning to deliver media in this manner. Internet television is a commonly streamed medium. Streaming media can be something other than video and audio: live closed captioning and stock tickers are considered streaming text, as is Real-Time Text.

Live streaming, which delivers content live over the Internet, involves a camera to capture the media, an encoder to digitize the content, a media publisher, and a content delivery network to distribute and deliver the content.

History

Attempts to display media on computers date back to the earliest days of computing in the mid-20th century. However, little progress was made for several decades, primarily due to the high cost and limited capabilities of computer hardware.

From the late 1980s through the 1990s, consumer-grade personal computers became powerful enough to display various media. The primary technical issues related to streaming were:

- having enough CPU power and bus bandwidth to support the required data rates

- creating low-latency interrupt paths in the operating system (OS) to prevent buffer underrun.
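Buffer underrun can be illustrated with a toy model. The sketch below is a hypothetical tick-based simulation, not how any particular operating system or player works: data arrives in varying amounts per tick, the player consumes a fixed amount per tick, and a tick with too little buffered data counts as an underrun (a stall). The function `simulate_playback` and its `startup_buffer` parameter are illustrative assumptions.

```python
from typing import List


def simulate_playback(arrivals: List[int], consume_per_tick: int,
                      startup_buffer: int = 0) -> List[str]:
    """Hypothetical tick-based model of a media player's buffer.

    arrivals[i] is the amount of data (e.g. kilobits) delivered during tick i.
    Playback begins once `startup_buffer` units are buffered; after that, each
    tick either plays (consuming `consume_per_tick` units) or records an
    underrun because the buffer holds too little data.
    """
    buffered = 0
    started = False
    events = []
    for arrived in arrivals:
        buffered += arrived
        if not started:
            if buffered >= startup_buffer:
                started = True
            else:
                events.append("waiting")  # still filling the startup buffer
                continue
        if buffered >= consume_per_tick:
            buffered -= consume_per_tick
            events.append("play")
        else:
            events.append("underrun")  # not enough data: playback stalls
    return events
```

For example, `simulate_playback([100, 100, 0, 0, 100, 100], 100)` stalls twice, while passing `startup_buffer=200` delays the start by one tick and stalls only once. This trade-off between startup delay and smooth playback is the same reason streaming clients buffer data before display.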

However, computer networks were still limited, so media was usually delivered over non-streaming channels: a digital file was either downloaded from a remote server and saved to a local drive on the end user's computer, or distributed on CD-ROM and played back from the disc.

During the late 1990s and early 2000s, Internet users saw:

- greater network bandwidth, especially in the last mile

- increased access to networks, especially the Internet

- use of standard protocols and formats, such as TCP/IP, HTTP, and HTML

- commercialization of the Internet.

Severe Tire Damage was the first band to perform live on the Internet. On June 24, 1993, the band was playing a gig at Xerox PARC while, elsewhere in the building, scientists were discussing a new technology (the Mbone) for broadcasting on the Internet using multicasting. As proof of their technology, the scientists broadcast the band's performance, which could be seen live in Australia and elsewhere.

RealNetworks was also a pioneer in the streaming media market and broadcast one of the earliest audio events over the Internet, a baseball game between the New York Yankees and the Seattle Mariners, in 1995.[1] It went on to launch the first streaming video technology in 1997 with RealPlayer.

When Word Magazine launched in 1995, it featured the first-ever streaming soundtracks on the Internet, performed by local downtown musicians. The first music stream was 'Big Wheel' by Karthik Swaminathan, and the second was 'When We Were Poor' by Karthik Swaminathan with Marc Ribot and Christine Bard.[citation needed]

Shortly afterward, in early 1996, Microsoft developed a media player known as ActiveMovie that allowed streaming media and included a proprietary streaming format; this was the precursor to the streaming feature later added to Windows Media Player 6.4 in 1999.

In June 1999, Apple also introduced a streaming media format in its QuickTime 4 application. It was later widely adopted on websites alongside the RealPlayer and Windows Media streaming formats. Because each competing format required its own player application, many users had to install all three on their computers for general compatibility.

Around 2002, interest in a single, unified streaming format and the widespread adoption of Adobe Flash on computers prompted the development of a video streaming format through Flash, which became the format used in Flash-based players on many popular video hosting sites, such as YouTube.

Increasing consumer demand for live streaming prompted YouTube to offer a live streaming service to its users.[2]

These advances in computer networking, combined with powerful home computers and modern operating systems, made streaming media practical and affordable for ordinary consumers. Stand-alone Internet radio devices emerged to let listeners hear audio streams without a computer.

In general, multimedia content has a large volume, so media storage and transmission costs are still significant. To offset this somewhat, media are generally compressed for both storage and streaming.

Increasing consumer demand for streaming of high definition (HD) content to different devices in the home has led the industry to develop a number of technologies, such as Wireless HD or ITU-T G.hn, which are optimized for streaming HD content without forcing the user to install new networking cables.

A media stream can be delivered either live or on demand. Live streams are generally provided by a method called true streaming, which sends the information straight to the computer or device without saving the file to a hard disk. On-demand streaming is provided by a method called progressive streaming or progressive download, which saves the file to a hard disk and plays it from that location. On-demand streams are often kept on hard disks and servers for extended periods, while live streams are available only at one time (e.g., during a football game).[3]
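The difference between true streaming and progressive download can be sketched under simplifying assumptions: the network is modelled as an iterable of byte chunks, and "playing" a chunk just means passing it to a callback. The function names `true_stream`, `progressive_download`, and `demo` are hypothetical. True streaming hands each chunk to the player as it arrives and keeps nothing; progressive download appends each chunk to a local file and the player reads it back from that file, which leaves a complete copy on disk.

```python
import os
import tempfile
from typing import Callable, Iterable, List, Tuple


def true_stream(chunks: Iterable[bytes], play: Callable[[bytes], None]) -> None:
    """Hand each chunk straight to the player; nothing is written to disk."""
    for chunk in chunks:
        play(chunk)


def progressive_download(chunks: Iterable[bytes], play: Callable[[bytes], None],
                         path: str) -> None:
    """Save each chunk to a local file, then play it back from that file."""
    read_pos = 0
    with open(path, "w+b") as local_copy:
        for chunk in chunks:
            local_copy.seek(0, os.SEEK_END)  # append the new chunk
            local_copy.write(chunk)
            local_copy.flush()
            local_copy.seek(read_pos)        # read back only the unplayed bytes
            data = local_copy.read()
            read_pos += len(data)
            play(data)


def demo() -> Tuple[List[bytes], bytes]:
    """Run both methods on the same two chunks; return what played and what was saved."""
    played: List[bytes] = []
    true_stream([b"ab", b"cd"], played.append)
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "media.bin")
        progressive_download([b"ab", b"cd"], played.append, path)
        with open(path, "rb") as saved_file:
            saved = saved_file.read()
    return played, saved
```

Both methods play the same chunks in the same order; only progressive download leaves the full file (`b"abcd"` in `demo`) on disk afterward, which is why on-demand content can be replayed locally.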

With the increasing popularity of battery-powered mobile devices such as tablets and smartphones, the development of digital media streaming has shifted toward formats that do not depend on Adobe Flash, which is known for relatively high resource usage that shortens a mobile device's battery life.

Streaming bandwidth and storage

A broadband speed of 2.5 Mbit/s or more is recommended for streaming movies, for example to an Apple TV, Google TV, or a Sony TV Blu-ray Disc Player, and 10 Mbit/s for high-definition content.[4]

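Streaming media storage size is calculated from the streaming bandwidth and length of the media using the following formula (for a single user and file):

$$\text{storage size (MiB)} = \frac{\text{length (s)} \times \text{bit rate (bit/s)}}{8 \times 1024 \times 1024}$$

Real-world example: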

One hour of video encoded at 300 kbit/s (a typical bit rate for broadband video as of 2005, usually encoded at a frame size of 320 × 240 pixels) requires:

- (3,600 s × 300,000 bit/s) / (8 × 1024 × 1024) ≈ 128.7 MiB of storage.
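The storage calculation translates directly into a small helper. This is a sketch: the function name `storage_mib` is illustrative, and it assumes a constant bit rate, so variable-bit-rate files will differ somewhat.

```python
def storage_mib(duration_s: float, bitrate_bps: float) -> float:
    """Storage for one encoded file: bits / 8 gives bytes, / 1024**2 gives MiB."""
    return duration_s * bitrate_bps / (8 * 1024 * 1024)


# One hour at 300 kbit/s, as in the example above.
print(f"{storage_mib(3_600, 300_000):.1f} MiB")  # 128.7 MiB
```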

If the file is stored on a server for on-demand streaming and the stream is viewed by 1,000 people at the same time using a unicast protocol, the requirement is:

- 300 kbit/s × 1,000 = 300,000 kbit/s = 300 Mbit/s of bandwidth

This is equivalent to around 135 GB per hour. Using a multicast protocol, the server sends out only a single stream that is common to all users, so such a stream would use only 300 kbit/s of serving bandwidth. See below for more information on these protocols.
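The unicast and multicast figures can be reproduced with a sketch like the following, assuming every viewer receives the same constant-bit-rate stream and ignoring protocol overhead. The function names are illustrative; decimal units are used (1 Mbit = 1,000 kbit, 1 GB = 1,000 MB), matching the 135 GB figure.

```python
def server_bandwidth_mbps(bitrate_kbps: float, viewers: int, multicast: bool) -> float:
    """Outgoing server bandwidth in Mbit/s.

    Unicast sends one copy of the stream per viewer; multicast sends a
    single copy and relies on the network to replicate it.
    """
    copies = 1 if multicast else viewers
    return bitrate_kbps * copies / 1_000


def gigabytes_per_hour(mbps: float) -> float:
    """Convert a sustained rate in Mbit/s to decimal gigabytes per hour."""
    return mbps * 3_600 / 8 / 1_000


print(server_bandwidth_mbps(300, 1_000, multicast=False))  # 300.0
print(gigabytes_per_hour(300.0))                           # 135.0
print(server_bandwidth_mbps(300, 1_000, multicast=True))   # 0.3
```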

The calculation for live streaming is similar.

Assume that the encoder outputs 500 kbit/s.

If the show lasts for 3 hours with 3,000 viewers, then the calculation is:

- Number of MiB transferred = encoder speed (bit/s) × number of seconds × number of viewers / (8 × 1024 × 1024)

- Number of MiB transferred = 500,000 bit/s × 3 × 3,600 s (3 hours) × 3,000 viewers / (8 × 1024 × 1024) ≈ 1,931,190 MiB
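The live-streaming total can be checked the same way. As in the calculation above, this sketch assumes every viewer receives a separate (unicast) copy and ignores protocol overhead; the function name `live_transfer_mib` is illustrative.

```python
def live_transfer_mib(encoder_bps: float, hours: float, viewers: int) -> float:
    """Total data sent to all viewers of a live show, in MiB."""
    seconds = hours * 3_600
    return encoder_bps * seconds * viewers / (8 * 1024 * 1024)


total = live_transfer_mib(500_000, 3, 3_000)
print(f"{total:,.0f} MiB")           # 1,931,190 MiB
print(f"{total / 1024**2:.2f} TiB")  # 1.84 TiB
```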

Codec, bitstream, transport, control

The audio stream is compressed using an audio codec such as MP3, Vorbis or AAC.

The video stream is compressed using a video codec such as H.264 or VP8.

Encoded audio and video streams are assembled in a container bitstream such as FLV, WebM, ASF or ISMA.

The bitstream is delivered from a streaming server to a streaming client using a transport protocol, such as MMS or RTP.

The streaming client may interact with the streaming server using a control protocol, such as MMS or RTSP.
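As a concrete illustration of a transport protocol, the sketch below packs and parses the 12-byte fixed header that RTP (RFC 3550) places in front of each media payload: the version, padding, extension, and CSRC-count bits; the marker bit and 7-bit payload type; a 16-bit sequence number; a 32-bit timestamp; and a 32-bit SSRC identifying the source. The helper names are hypothetical, and the sketch omits CSRC lists, header extensions, and padding. The sequence number is what lets a receiver detect lost or reordered packets, and the timestamp lets it schedule playback.

```python
import struct


def pack_rtp_header(payload_type: int, sequence: int, timestamp: int,
                    ssrc: int, marker: bool = False) -> bytes:
    """Build a 12-byte fixed RTP header (no CSRCs, no extension, no padding)."""
    version = 2
    first_byte = version << 6  # padding=0, extension=0, CSRC count=0
    second_byte = (int(marker) << 7) | (payload_type & 0x7F)
    return struct.pack("!BBHII", first_byte, second_byte,
                       sequence & 0xFFFF,        # 16-bit, wraps around
                       timestamp & 0xFFFFFFFF,   # 32-bit media clock
                       ssrc & 0xFFFFFFFF)        # 32-bit source identifier


def unpack_rtp_header(packet: bytes) -> dict:
    """Read back the fields of the fixed header at the start of a packet."""
    first, second, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    return {
        "version": first >> 6,
        "marker": bool(second >> 7),
        "payload_type": second & 0x7F,
        "sequence": seq,
        "timestamp": ts,
        "ssrc": ssrc,
    }
```

For example, `pack_rtp_header(96, 1, 90_000, 0x1234ABCD)` yields 12 bytes beginning with `0x80 0x60` (version 2, payload type 96, a value from the dynamic range). A real sender would append the encoded media after this header and send the result over UDP.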

Protocol issues

Designing a network protocol to support streaming media raises many issues, such as:

- Datagram protocols, such as the User Datagram Protocol (UDP), send the media stream as a series of small packets. This is simple and efficient; however, there is no mechanism within the protocol to guarantee delivery. It is up to the receiving application to detect loss or corruption and recover data using error correction techniques. If data is lost, the stream may suffer a dropout.

- The Real-time Streaming Protocol (RTSP), Real-time Transport Protocol (RTP) and the Real-time Transport Control Protocol (RTCP) were specifically designed to stream media over networks. RTSP runs over a variety of transport protocols, while the latter two are built on top of UDP.

- Another approach, which combines the advantages of using a standard web protocol with the ability to stream even live content, is HTTP adaptive bitrate streaming. It is based on HTTP progressive download, but unlike that approach it delivers the media as very small files, so that the transfer can be compared to the streaming of packets, much as with RTSP and RTP.[5]

- Reliable protocols, such as the Transmission Control Protocol (TCP), guarantee correct delivery of each bit in the media stream. However, they accomplish this with a system of timeouts and retries, which makes them more complex to implement. It also means that when there is data loss on the network, the media stream stalls while the protocol handlers detect the loss and retransmit the missing data. Clients can minimize this effect by buffering data for display. While delay due to buffering is acceptable in video-on-demand scenarios, users of interactive applications such as video conferencing will experience a loss of fidelity if the delay that buffering adds exceeds 200 ms.[6]

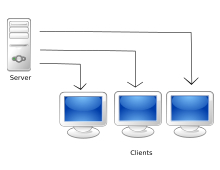

- Unicast protocols send a separate copy of the media stream from the server to each recipient. Unicast is the norm for most Internet connections, but does not scale well when many users want to view the same television program concurrently.

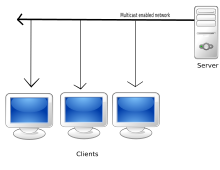

- Multicast protocols were developed to reduce the data replication (and consequent server/network loads) that occurs when many recipients receive unicast content streams independently. These protocols send a single stream from the source to a group of recipients. Depending on the network infrastructure and type, multicast transmission may or may not be feasible. One potential disadvantage of multicasting is the loss of video on demand functionality. Continuous streaming of radio or television material usually precludes the recipient's ability to control playback. However, this problem can be mitigated by elements such as caching servers, digital set-top boxes, and buffered media players.

- IP Multicast provides a means to send a single media stream to a group of recipients on a computer network. A multicast protocol, usually the Internet Group Management Protocol (IGMP), is used to manage delivery of multicast streams to the groups of recipients on a LAN. One of the challenges in deploying IP multicast is that routers and firewalls between LANs must allow the passage of packets destined to multicast groups. If the organization serving the content controls the network between server and recipients (e.g., educational, government, and corporate intranets), then routing protocols such as Protocol Independent Multicast can be used to deliver stream content to multiple local area network segments.

- Peer-to-peer (P2P) protocols arrange for prerecorded streams to be sent between computers. This prevents the server and its network connections from becoming a bottleneck. However, it raises technical, performance, quality, and business issues.
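HTTP adaptive bitrate streaming typically encodes the same content at several bit rates and lets the client choose, segment by segment, which version to request. The sketch below shows one simple throughput-based rule: pick the highest bit rate that fits within a fraction of the measured download throughput. Real players use more elaborate logic (often also weighing buffer level), and the ladder values, the 0.8 safety factor, and the function name `choose_rendition` are all illustrative assumptions.

```python
from typing import List


def choose_rendition(ladder_kbps: List[int], measured_kbps: float,
                     safety: float = 0.8) -> int:
    """Pick the highest offered bit rate within a fraction of measured throughput.

    ladder_kbps lists the bit rates available for each segment.
    Falls back to the lowest rendition when none fits the budget.
    """
    budget = measured_kbps * safety
    fitting = [rate for rate in ladder_kbps if rate <= budget]
    return max(fitting) if fitting else min(ladder_kbps)


LADDER = [300, 750, 1_500, 3_000, 6_000]  # hypothetical bit-rate ladder (kbit/s)

# Throughput measured while downloading successive segments (hypothetical values).
for throughput in [5_000, 2_000, 400, 9_000]:
    print(throughput, "->", choose_rendition(LADDER, throughput))
```

With these values the sketch selects 3,000, 1,500, 300, and 6,000 kbit/s in turn, stepping quality down when throughput drops and back up when it recovers. The safety factor leaves headroom so that a small dip in throughput does not immediately drain the playback buffer.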

Notes

Footnotes

Citations

1. "RealNetworks Inc". Funding Universe. Retrieved 2011-07-23.

2. Josh Lowensohn (2008). "YouTube to Offer Live Streaming This Year". Retrieved 2011-07-23.

3. Grant and Meadows (2009). Communication Technology Update and Fundamentals, 11th edition. p. 114.

4. Minimum requirements for Sony TV Blu-ray Disc Player, on an advertisement attached to a Netflix DVD.

5. Ch. Z. Patrikakis, N. Papaoulakis, Ch. Stefanoudaki, M. S. Nunes (2009). "Streaming content wars: Download and play strikes back". Presented at the Personalization in Media Delivery Platforms Workshop, Venice, Italy. pp. 218–226.

6. C. Krasic, K. Li, J. Walpole (2001). "The case for streaming multimedia with TCP". Lecture Notes in Computer Science, Springer. pp. 213–218.