Cg Programming/Vertex Transformations

One of the most important tasks of the vertex shader and the following stages in a programmable graphics pipeline is the transformation of vertices of primitives (e.g. triangles) from the original coordinates (e.g. those specified in a 3D modeling tool) to screen coordinates. While programmable vertex shaders allow for many ways of transforming vertices, some transformations are usually performed in the fixed-function stages after the vertex shader. When programming a vertex shader, it is therefore particularly important to understand which transformations have to be performed in the vertex shader. These transformations are usually specified as uniform parameters and applied to the input vertex positions (and normal vectors) by means of matrix-vector multiplications. In a vertex shader with an input argument vertex : POSITION, the standard transformation could be computed by this matrix-vector product:

```cg
mul(UNITY_MATRIX_MVP, vertex)
```

Unity's built-in uniform parameter UNITY_MATRIX_MVP specifies the standard vertex transformation as a 4x4 matrix. (In Unity, however, it is recommended to perform this transformation with the function UnityObjectToClipPos(vertex), which also works for stereo projection and projection to 360 images, etc.)

While this is straightforward for points and directions, it is less straightforward for normal vectors as discussed in Section “Applying Matrix Transformations”.

Here, we will first present an overview of the coordinate systems and the transformations between them and then discuss individual transformations.

Overview: The Camera Analogy

It is useful to think of the whole process of transforming vertices in terms of a camera analogy, as illustrated to the right. The steps and the corresponding vertex transformations are:

- positioning the model — modeling transformation

- positioning the camera — viewing transformation

- adjusting the zoom — projection transformation

- cropping the image — viewport transformation

The first three transformations are applied in the vertex shader. Then the perspective division (which might be considered part of the projection transformation) is automatically applied in the fixed-function stage after the vertex shader. The viewport transformation is also applied automatically in this fixed-function stage. The transformations in the fixed-function stages cannot be modified, but the other transformations can be replaced by kinds of transformations other than those described here. It is, however, useful to know the conventional transformations, since they allow us to make the best use of clipping and of perspective-correct interpolation of varying variables.

The following overview shows the sequence of vertex transformations between various coordinate systems and includes the matrices that represent the transformations:

| Coordinates / transformation | Vertex shader parameter or matrix |
|---|---|
| object/model coordinates | vertex input parameters with semantics (in particular the semantic POSITION) |
| ↓ modeling transformation | model matrix (in Unity: unity_ObjectToWorld) |
| world coordinates | |
| ↓ viewing transformation | view matrix (in Unity: UNITY_MATRIX_V) |
| view/eye coordinates | |
| ↓ projection transformation | projection matrix (in Unity: UNITY_MATRIX_P) |
| clip coordinates | vertex output parameter with semantic SV_POSITION |
| ↓ perspective division | division by the w coordinate |
| normalized device coordinates | |
| ↓ viewport transformation | |
| screen/window coordinates | |

Note that the modeling, viewing, and projection transformations are applied in the vertex shader. The perspective division and the viewport transformation are applied in the fixed-function stage after the vertex shader. The next sections discuss all these transformations in detail.

Modeling Transformation

The modeling transformation specifies the transformation from object coordinates (also called model coordinates or local coordinates) to a common world coordinate system. Object coordinates are usually specific to each object or model and are often specified in 3D modeling tools. On the other hand, world coordinates are a common coordinate system for all objects of a scene, including light sources, 3D audio sources, etc. Since different objects have different object coordinate systems, the modeling transformations are also different; i.e., a different modeling transformation has to be applied to each object.

Structure of the Model Matrix

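The modeling transformation can be represented by a 4×4 matrix, which we denote as the model matrix (in Unity: unity_ObjectToWorld). Its structure is:

$$\mathrm{M}_{\text{object}\to\text{world}} = \begin{bmatrix} a_{1,1} & a_{1,2} & a_{1,3} & t_1 \\ a_{2,1} & a_{2,2} & a_{2,3} & t_2 \\ a_{3,1} & a_{3,2} & a_{3,3} & t_3 \\ 0 & 0 & 0 & 1 \end{bmatrix} \quad \text{with } \mathrm{A} = \begin{bmatrix} a_{1,1} & a_{1,2} & a_{1,3} \\ a_{2,1} & a_{2,2} & a_{2,3} \\ a_{3,1} & a_{3,2} & a_{3,3} \end{bmatrix} \text{ and } \mathbf{t} = \begin{bmatrix} t_1 \\ t_2 \\ t_3 \end{bmatrix}$$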

$\mathrm{A}$ is a 3×3 matrix, which represents a linear transformation in 3D space. This includes any combination of rotations, scalings, and other less common linear transformations. $\mathbf{t}$ is a 3D vector, which represents a translation (i.e. displacement) in 3D space. $\mathrm{M}_{\text{object}\to\text{world}}$ combines $\mathrm{A}$ and $\mathbf{t}$ in one handy 4×4 matrix. Mathematically speaking, the model matrix represents an affine transformation: a linear transformation together with a translation. In order to make this work, all three-dimensional points are represented by four-dimensional vectors with the fourth coordinate equal to 1:

$$P = \begin{bmatrix} p_1 \\ p_2 \\ p_3 \\ 1 \end{bmatrix}$$

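When we multiply the matrix $\mathrm{M}_{\text{object}\to\text{world}}$ by such a point $P$, the combination of the three-dimensional linear transformation and the translation shows up in the result:

$$\mathrm{M}_{\text{object}\to\text{world}}\,P = \begin{bmatrix} a_{1,1} & a_{1,2} & a_{1,3} & t_1 \\ a_{2,1} & a_{2,2} & a_{2,3} & t_2 \\ a_{3,1} & a_{3,2} & a_{3,3} & t_3 \\ 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} p_1 \\ p_2 \\ p_3 \\ 1 \end{bmatrix} = \begin{bmatrix} a_{1,1} p_1 + a_{1,2} p_2 + a_{1,3} p_3 + t_1 \\ a_{2,1} p_1 + a_{2,2} p_2 + a_{2,3} p_3 + t_2 \\ a_{3,1} p_1 + a_{3,2} p_2 + a_{3,3} p_3 + t_3 \\ 1 \end{bmatrix}$$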

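Apart from the fourth coordinate (which is 1, as it should be for a point), the result is equal to

$$\mathrm{A} \begin{bmatrix} p_1 \\ p_2 \\ p_3 \end{bmatrix} + \begin{bmatrix} t_1 \\ t_2 \\ t_3 \end{bmatrix}$$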
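The following minimal C sketch makes this product concrete. C matches the OpenGL C API used later in this section. It assumes a hypothetical Mat4 struct stored row-major as m[row][col] and used with column vectors, as in the text. The name transform_point is illustrative and is not part of any library. It computes $\mathrm{A}\,(p_1, p_2, p_3)^T + \mathbf{t}$ directly instead of carrying the constant fourth coordinate.

```c
#include <assert.h>

/* Hypothetical 4x4 matrix type: m[row][col], used with column vectors,
   so a point P is transformed as M * P. */
typedef struct { double m[4][4]; } Mat4;

/* Applies the affine matrix M to the 3D point p, treated as the
   homogeneous point (p1, p2, p3, 1). Writes A * p + t to out. */
void transform_point(const Mat4 *M, const double p[3], double out[3])
{
    /* Only valid for affine matrices: the last row must be (0, 0, 0, 1),
       so the fourth coordinate of the result stays 1 and can be dropped. */
    assert(M->m[3][0] == 0.0 && M->m[3][1] == 0.0 &&
           M->m[3][2] == 0.0 && M->m[3][3] == 1.0);
    /* out is written while p is still being read, so they must differ. */
    assert(out != p);
    for (int i = 0; i < 3; i++) {
        /* Row i of A dotted with p, plus the translation component t_i. */
        out[i] = M->m[i][0] * p[0] + M->m[i][1] * p[1]
               + M->m[i][2] * p[2] + M->m[i][3];
    }
}
```

For example, a matrix with diagonal (2, 3, 4) and translation (1, 2, 3) maps the point (1, 1, 1) to (3, 5, 7).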

Accessing the Model Matrix in a Vertex Shader

The model matrix can be defined as a uniform parameter such that it is available in a vertex shader. However, it is usually combined with the matrix of the viewing transformation to form the modelview matrix, which is then set as a uniform parameter. In some APIs, the matrix is available as a built-in uniform parameter, e.g., unity_ObjectToWorld in Unity. (See also Section “Applying Matrix Transformations”.)

Computing the Model Matrix

Strictly speaking, Cg programmers don't have to worry about the computation of the model matrix, since it is provided to the vertex shader in the form of a uniform parameter. Render engines, scene graphs, and game engines usually compute it. In some cases, however, the model matrix has to be computed when developing graphics applications.

The model matrix is usually computed by combining 4×4 matrices of elementary transformations of objects, in particular translations, rotations, and scalings. Specifically, in the case of a hierarchical scene graph, the transformations of all parent groups (parent, grandparent etc.) of an object are combined to form the model matrix. Let's look at the most important elementary transformations and their matrices.

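The 4×4 matrix representing the translation by a vector $\mathbf{t} = (t_1, t_2, t_3)$ is:

$$\mathrm{M}_{\text{translation}} = \begin{bmatrix} 1 & 0 & 0 & t_1 \\ 0 & 1 & 0 & t_2 \\ 0 & 0 & 1 & t_3 \\ 0 & 0 & 0 & 1 \end{bmatrix}$$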

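The 4×4 matrix representing the scaling by a factor $s_x$ along the $x$ axis, $s_y$ along the $y$ axis, and $s_z$ along the $z$ axis is:

$$\mathrm{M}_{\text{scaling}} = \begin{bmatrix} s_x & 0 & 0 & 0 \\ 0 & s_y & 0 & 0 \\ 0 & 0 & s_z & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}$$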
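As an illustration, the two elementary matrices can be built in C as in the sketch below. It uses the same assumed row-major Mat4 struct with column vectors. The names mat4_identity, mat4_translation and mat4_scaling are hypothetical. The translation goes into the fourth column and the scaling factors go onto the diagonal.

```c
#include <assert.h>

/* Hypothetical 4x4 matrix type: m[row][col], used with column vectors. */
typedef struct { double m[4][4]; } Mat4;

/* Returns the 4x4 identity matrix. */
static Mat4 mat4_identity(void)
{
    Mat4 r = {{{1.0, 0.0, 0.0, 0.0},
               {0.0, 1.0, 0.0, 0.0},
               {0.0, 0.0, 1.0, 0.0},
               {0.0, 0.0, 0.0, 1.0}}};
    return r;
}

/* Translation by t = (t1, t2, t3): the identity with t in the fourth column. */
Mat4 mat4_translation(double t1, double t2, double t3)
{
    Mat4 r = mat4_identity();
    r.m[0][3] = t1;
    r.m[1][3] = t2;
    r.m[2][3] = t3;
    return r;
}

/* Scaling by sx, sy, sz along the x, y, z axes: the factors on the diagonal. */
Mat4 mat4_scaling(double sx, double sy, double sz)
{
    /* A zero factor would flatten the object and make the matrix
       non-invertible (e.g. when transforming normal vectors). */
    assert(sx != 0.0 && sy != 0.0 && sz != 0.0);
    Mat4 r = mat4_identity();
    r.m[0][0] = sx;
    r.m[1][1] = sy;
    r.m[2][2] = sz;
    return r;
}
```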

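The 4×4 matrix representing the rotation by an angle $\alpha$ about a normalized axis $(x, y, z)$ is:

$$\mathrm{M}_{\text{rotation}} = \begin{bmatrix} (1-\cos\alpha)\,x\,x + \cos\alpha & (1-\cos\alpha)\,x\,y - z\sin\alpha & (1-\cos\alpha)\,z\,x + y\sin\alpha & 0 \\ (1-\cos\alpha)\,x\,y + z\sin\alpha & (1-\cos\alpha)\,y\,y + \cos\alpha & (1-\cos\alpha)\,y\,z - x\sin\alpha & 0 \\ (1-\cos\alpha)\,z\,x - y\sin\alpha & (1-\cos\alpha)\,y\,z + x\sin\alpha & (1-\cos\alpha)\,z\,z + \cos\alpha & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}$$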

Special cases for rotations about particular axes can be easily derived. These are necessary, for example, to implement rotations for Euler angles. There are, however, multiple conventions for Euler angles, which won't be discussed here.

A normalized quaternion $(q_w, q_x, q_y, q_z)$ corresponds to a rotation by the angle $2\arccos(q_w)$. The direction of the rotation axis can be determined by normalizing the 3D vector $(q_x, q_y, q_z)$.

Further elementary transformations exist, but they are of less interest for the computation of the model matrix. The 4×4 matrices of these or other transformations are combined by matrix products. Suppose the matrices $\mathrm{M}_1$, $\mathrm{M}_2$, and $\mathrm{M}_3$ are applied to an object in this particular order. ($\mathrm{M}_1$ might represent the transformation from object coordinates to the coordinate system of the parent group; $\mathrm{M}_2$ the transformation from the parent group to the grandparent group; and $\mathrm{M}_3$ the transformation from the grandparent group to world coordinates.) Then the combined matrix product is:

$$\mathrm{M}_{\text{combined}} = \mathrm{M}_3\,\mathrm{M}_2\,\mathrm{M}_1$$

Note that the order of the matrix factors is important. Also note that this matrix product should be read from the right (where vectors are multiplied) to the left, i.e. $\mathrm{M}_1$ is applied first while $\mathrm{M}_3$ is applied last.
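The importance of the order can be checked with a small matrix product in C. This is a sketch with the same assumed row-major Mat4 struct and column vectors. The names mat4_mul and mat4_combine3 are hypothetical. Translating by (1, 0, 0) and then scaling by 2 moves the origin to (2, 0, 0). Scaling first and then translating moves it only to (1, 0, 0).

```c
#include <assert.h>

/* Hypothetical 4x4 matrix type: m[row][col], used with column vectors. */
typedef struct { double m[4][4]; } Mat4;

/* out = a * b. With column vectors, (a * b) * P applies b first, then a.
   out must not alias a or b, because it is written while they are read. */
void mat4_mul(Mat4 *out, const Mat4 *a, const Mat4 *b)
{
    assert(out != a && out != b);
    for (int i = 0; i < 4; i++) {
        for (int j = 0; j < 4; j++) {
            double sum = 0.0;
            for (int k = 0; k < 4; k++)
                sum += a->m[i][k] * b->m[k][j];
            out->m[i][j] = sum;
        }
    }
}

/* M1 is applied first and M3 last, so the combined matrix is M3 * M2 * M1. */
void mat4_combine3(Mat4 *out, const Mat4 *m1, const Mat4 *m2, const Mat4 *m3)
{
    Mat4 m21;
    mat4_mul(&m21, m2, m1);   /* M2 * M1 */
    mat4_mul(out, m3, &m21);  /* M3 * (M2 * M1) */
}
```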

Viewing Transformation

The viewing transformation corresponds to placing and orienting the camera (or the eye of an observer). However, the best way to think of the viewing transformation is that it transforms world coordinates into the view coordinate system (also: eye coordinate system) of a camera. This camera is placed at the origin of the coordinate system and points (by convention) along the negative $z$ axis in OpenGL and along the positive $z$ axis in Direct3D. It is also put on the $x$-$z$ plane, i.e. the up-direction is given by the positive $y$ axis.

Accessing the View Matrix in a Vertex Shader

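Similarly to the modeling transformation, the viewing transformation is represented by a 4×4 matrix, which is called the view matrix $\mathrm{M}_{\text{world}\to\text{view}}$. It can be defined as a uniform parameter for the vertex shader (in Unity: UNITY_MATRIX_V). However, it is usually combined with the model matrix $\mathrm{M}_{\text{object}\to\text{world}}$ to form the modelview matrix $\mathrm{M}_{\text{object}\to\text{view}}$ (in Unity: UNITY_MATRIX_MV). Since the model matrix is applied first, the correct combination is:

$$\mathrm{M}_{\text{object}\to\text{view}} = \mathrm{M}_{\text{world}\to\text{view}}\,\mathrm{M}_{\text{object}\to\text{world}}$$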

(See also Section “Applying Matrix Transformations”.)

Computing the View Matrix

Analogously to the model matrix, Cg programmers don't have to worry about the computation of the view matrix since it is provided to the vertex shader in the form of a uniform parameter. However, when developing graphics applications, it is sometimes necessary to compute the view matrix.

Here, we briefly summarize how the view matrix can be computed from the position $\mathbf{t}$ of the camera, the view direction $\mathbf{d}$, and a world-up vector $\mathbf{k}$ (all in world coordinates). We limit ourselves to the case of the right-handed coordinate system of OpenGL, where the camera points along the negative $z$ axis. (There are some sign changes for Direct3D.) The steps are straightforward:

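1. Compute (in world coordinates) the direction $\mathbf{z}$ of the $z$ axis of the view coordinate system as the negative normalized $\mathbf{d}$ vector:

$$\mathbf{z} = -\frac{\mathbf{d}}{|\mathbf{d}|}$$

2. Compute (again in world coordinates) the direction $\mathbf{x}$ of the $x$ axis of the view coordinate system by:

$$\mathbf{x} = \frac{\mathbf{d} \times \mathbf{k}}{|\mathbf{d} \times \mathbf{k}|}$$

3. Compute (still in world coordinates) the direction $\mathbf{y}$ of the $y$ axis of the view coordinate system:

$$\mathbf{y} = \mathbf{z} \times \mathbf{x}$$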

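Using $\mathbf{x}$, $\mathbf{y}$, $\mathbf{z}$, and $\mathbf{t}$, the inverse view matrix $\mathrm{M}_{\text{view}\to\text{world}}$ can be easily determined. This matrix maps the origin (0,0,0) to $\mathbf{t}$ and the unit vectors (1,0,0), (0,1,0), and (0,0,1) to $\mathbf{x}$, $\mathbf{y}$, and $\mathbf{z}$. Thus, the latter vectors have to be in the columns of the matrix $\mathrm{M}_{\text{view}\to\text{world}}$:

$$\mathrm{M}_{\text{view}\to\text{world}} = \begin{bmatrix} x_1 & y_1 & z_1 & t_1 \\ x_2 & y_2 & z_2 & t_2 \\ x_3 & y_3 & z_3 & t_3 \\ 0 & 0 & 0 & 1 \end{bmatrix}$$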

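However, we require the matrix $\mathrm{M}_{\text{world}\to\text{view}}$; thus, we have to compute the inverse of the matrix $\mathrm{M}_{\text{view}\to\text{world}}$. Note that $\mathrm{M}_{\text{view}\to\text{world}}$ has the form

$$\mathrm{M}_{\text{view}\to\text{world}} = \begin{bmatrix} \mathrm{R} & \mathbf{t} \\ \mathbf{0}^T & 1 \end{bmatrix}$$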

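with a 3×3 matrix $\mathrm{R}$ and a 3D vector $\mathbf{t}$. The inverse of such a matrix is:

$$\mathrm{M}_{\text{view}\to\text{world}}^{-1} = \mathrm{M}_{\text{world}\to\text{view}} = \begin{bmatrix} \mathrm{R}^{-1} & -\mathrm{R}^{-1}\mathbf{t} \\ \mathbf{0}^T & 1 \end{bmatrix}$$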

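Since in this particular case the matrix $\mathrm{R}$ is orthogonal (because its column vectors are normalized and orthogonal to each other), the inverse of $\mathrm{R}$ is just its transpose, i.e. the fourth step is to compute:

$$\mathrm{M}_{\text{world}\to\text{view}} = \begin{bmatrix} \mathrm{R}^T & -\mathrm{R}^T\mathbf{t} \\ \mathbf{0}^T & 1 \end{bmatrix} \quad \text{with } \mathrm{R} = \begin{bmatrix} x_1 & y_1 & z_1 \\ x_2 & y_2 & z_2 \\ x_3 & y_3 & z_3 \end{bmatrix}$$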

While the derivation of this result required some knowledge of linear algebra, the resulting computation only requires basic vector and matrix operations and can be easily programmed in any common programming language.
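For instance, the fourth step could be written in C as in the following sketch. It uses the same assumed row-major Mat4 struct, and the names dot3 and view_matrix are hypothetical. Because $\mathrm{R}^T$ has the axes $\mathbf{x}$, $\mathbf{y}$, $\mathbf{z}$ as its rows, row $i$ of the upper-left block is simply axis $i$. The fourth column is $-\mathrm{R}^T\mathbf{t} = -(\mathbf{x}\cdot\mathbf{t}, \mathbf{y}\cdot\mathbf{t}, \mathbf{z}\cdot\mathbf{t})$, so no general matrix inversion is needed.

```c
#include <assert.h>
#include <math.h>

/* Hypothetical 4x4 matrix type: m[row][col], used with column vectors. */
typedef struct { double m[4][4]; } Mat4;

static double dot3(const double a[3], const double b[3])
{
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

/* M_world->view from the camera position t and the camera axes x, y, z
   (all in world coordinates), using R^-1 = R^T for orthonormal axes. */
Mat4 view_matrix(const double x[3], const double y[3], const double z[3],
                 const double t[3])
{
    /* R^T equals R^-1 only if the axes are unit length and orthogonal. */
    assert(fabs(dot3(x, x) - 1.0) < 1e-9 && fabs(dot3(y, y) - 1.0) < 1e-9 &&
           fabs(dot3(z, z) - 1.0) < 1e-9);
    assert(fabs(dot3(x, y)) < 1e-9 && fabs(dot3(y, z)) < 1e-9 &&
           fabs(dot3(z, x)) < 1e-9);
    const double *axes[3] = {x, y, z};
    Mat4 v;
    for (int i = 0; i < 3; i++) {
        for (int j = 0; j < 3; j++)
            v.m[i][j] = axes[i][j];        /* row i of R^T is axis i */
        v.m[i][3] = -dot3(axes[i], t);     /* component i of -R^T t */
    }
    v.m[3][0] = 0.0;
    v.m[3][1] = 0.0;
    v.m[3][2] = 0.0;
    v.m[3][3] = 1.0;
    return v;
}
```

As a check, the resulting matrix maps the camera position $\mathbf{t}$ to the origin. For a camera at (0, 0, 5) looking along the world $+x$ axis, it maps a point one unit in front of the camera to $(0, 0, -1)$ in view coordinates.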

Projection Transformation and Perspective Division

First of all, the projection transformations determine the kind of projection, e.g. perspective or orthographic. Perspective projection corresponds to linear perspective with foreshortening, while orthographic projection is an orthogonal projection without foreshortening. The foreshortening is actually accomplished by the perspective division; however, all the parameters controlling the perspective projection are set in the projection transformation.

Technically speaking, the projection transformation transforms view coordinates to clip coordinates. (All parts of primitives that are outside the visible part of the scene are clipped away in clip coordinates.) It should be the last transformation that is applied to a vertex in a vertex shader before the vertex is returned in the output parameter with the semantic SV_POSITION. These clip coordinates are then transformed to normalized device coordinates by the perspective division, which is just a division of all coordinates by the fourth coordinate. (Normalized device coordinates are so called because their values are between -1 and +1 for all points in the visible part of the scene.)
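The perspective division itself is simple arithmetic. The GPU performs it automatically, so the following C function (a hypothetical name) only illustrates the computation, for example when checking projection matrices on the CPU.

```c
#include <assert.h>

/* Perspective division: clip coordinates (x, y, z, w) ->
   normalized device coordinates (x/w, y/w, z/w). */
void perspective_divide(const double clip[4], double ndc[3])
{
    /* Points inside the view frustum have w > 0; clipping removes the
       other parts of primitives before this division happens. */
    assert(clip[3] > 0.0);
    ndc[0] = clip[0] / clip[3];
    ndc[1] = clip[1] / clip[3];
    ndc[2] = clip[2] / clip[3];
}
```

For example, the clip coordinates (2, 4, -6, 2) become the normalized device coordinates (1, 2, -3). That point lies outside the visible range of -1 to +1 and would have been clipped.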

Accessing the Projection Matrix in a Vertex Shader

Similarly to the modeling transformation and the viewing transformation, the projection transformation is represented by a 4×4 matrix, which is called the projection matrix $\mathrm{M}_{\text{projection}}$. It is usually defined as a uniform parameter for the vertex shader (in Unity: UNITY_MATRIX_P).

Computing the Projection Matrix

Analogously to the modelview matrix, Cg programmers don't have to worry about the computation of the projection matrix. However, when developing applications, it is sometimes necessary to compute the projection matrix.

Here, we present the projection matrices for three cases (all for the OpenGL convention with a camera pointing along the negative $z$ axis in view coordinates):

- standard perspective projection (corresponds to the OpenGL 2.x function gluPerspective)

- oblique perspective projection (corresponds to the OpenGL 2.x function glFrustum)

- orthographic projection (corresponds to the OpenGL 2.x function glOrtho)

The standard perspective projection is characterized by

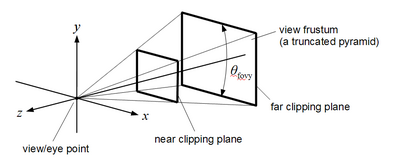

- an angle $\theta_{\text{fovy}}$ that specifies the field of view in the $y$ direction, as illustrated in the figure to the right,

- the distance $n$ to the near clipping plane and the distance $f$ to the far clipping plane, as illustrated in the next figure,

- the aspect ratio $a$ of the width to the height of a centered rectangle on the near clipping plane.

Together with the view point and the clipping planes, this centered rectangle defines the view frustum, i.e. the region of the 3D space that is visible for the specific projection transformation. All primitives and all parts of primitives that are outside of the view frustum are clipped away. The near and far clipping planes are necessary because depth values are stored with a finite precision; thus, it is not possible to cover an infinitely large view frustum.

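With the parameters $\theta_{\text{fovy}}$, $a$, $n$, and $f$, the projection matrix for the perspective projection is:

$$\mathrm{M}_{\text{projection}} = \begin{bmatrix} \frac{d}{a} & 0 & 0 & 0 \\ 0 & d & 0 & 0 \\ 0 & 0 & \frac{n+f}{n-f} & \frac{2nf}{n-f} \\ 0 & 0 & -1 & 0 \end{bmatrix} \quad \text{with } d = \frac{1}{\tan(\theta_{\text{fovy}}/2)}$$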

The oblique perspective projection is characterized by

- the same distances $n$ and $f$ to the clipping planes as in the case of the standard perspective projection,

- coordinates $r$ (right), $l$ (left), $t$ (top), and $b$ (bottom), as illustrated in the corresponding figure. These coordinates determine the position of the front rectangle of the view frustum; thus, more general view frustums (e.g. off-center ones) can be specified than with the aspect ratio $a$ and the field-of-view angle $\theta_{\text{fovy}}$.

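Given the parameters $l$, $r$, $b$, $t$, $n$, and $f$, the projection matrix for the oblique perspective projection is:

$$\mathrm{M}_{\text{projection}} = \begin{bmatrix} \frac{2n}{r-l} & 0 & \frac{r+l}{r-l} & 0 \\ 0 & \frac{2n}{t-b} & \frac{t+b}{t-b} & 0 \\ 0 & 0 & \frac{n+f}{n-f} & \frac{2nf}{n-f} \\ 0 & 0 & -1 & 0 \end{bmatrix}$$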
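The oblique matrix can be sketched in C the same way. The code assumes the row-major Mat4 struct, and the function name frustum is hypothetical. Its parameter order follows glFrustum (left, right, bottom, top, near, far). The corners of the front rectangle, e.g. $(r, t, -n)$, map to the corners of the normalized device coordinate box, e.g. $(1, 1, -1)$, after the perspective division.

```c
#include <assert.h>

/* Hypothetical 4x4 matrix type: m[row][col], used with column vectors. */
typedef struct { double m[4][4]; } Mat4;

/* Oblique perspective projection (OpenGL convention, camera looks along -z).
   l, r, b, t: edges of the front rectangle on the near plane;
   n, f: distances to the near and far clipping planes. */
Mat4 frustum(double l, double r, double b, double t, double n, double f)
{
    assert(r != l && t != b && n > 0.0 && f > n);
    Mat4 p = {{
        {2.0 * n / (r - l), 0.0,               (r + l) / (r - l), 0.0},
        {0.0,               2.0 * n / (t - b), (t + b) / (t - b), 0.0},
        {0.0,               0.0,               (n + f) / (n - f), 2.0 * n * f / (n - f)},
        {0.0,               0.0,               -1.0,              0.0}
    }};
    return p;
}
```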

An orthographic projection without foreshortening is illustrated in the figure to the right. The parameters are the same as in the case of the oblique perspective projection; however, the view frustum (more precisely, the view volume) is now simply a box instead of a truncated pyramid.

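With the parameters $l$, $r$, $b$, $t$, $n$, and $f$, the projection matrix for the orthographic projection is:

$$\mathrm{M}_{\text{projection}} = \begin{bmatrix} \frac{2}{r-l} & 0 & 0 & -\frac{r+l}{r-l} \\ 0 & \frac{2}{t-b} & 0 & -\frac{t+b}{t-b} \\ 0 & 0 & \frac{-2}{f-n} & -\frac{f+n}{f-n} \\ 0 & 0 & 0 & 1 \end{bmatrix}$$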
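A C sketch of the orthographic matrix follows the same pattern. It assumes the row-major Mat4 struct and a hypothetical function name ortho, with parameters in the order of glOrtho. Since the last row is (0, 0, 0, 1), the fourth coordinate stays 1 and the perspective division has no effect. This is why there is no foreshortening.

```c
#include <assert.h>

/* Hypothetical 4x4 matrix type: m[row][col], used with column vectors. */
typedef struct { double m[4][4]; } Mat4;

/* Orthographic projection (OpenGL convention, camera looks along -z).
   l, r, b, t: sides of the view box; n, f: near and far distances. */
Mat4 ortho(double l, double r, double b, double t, double n, double f)
{
    assert(r != l && t != b && f != n);
    Mat4 p = {{
        {2.0 / (r - l), 0.0,           0.0,            -(r + l) / (r - l)},
        {0.0,           2.0 / (t - b), 0.0,            -(t + b) / (t - b)},
        {0.0,           0.0,           -2.0 / (f - n), -(f + n) / (f - n)},
        {0.0,           0.0,           0.0,            1.0}
    }};
    return p;
}
```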

Viewport Transformation

The projection transformation maps view coordinates to clip coordinates. These are then mapped to normalized device coordinates by the perspective division by the fourth component of the clip coordinates. In normalized device coordinates (ndc), the view volume is always a box centered around the origin, with the coordinates inside the box between -1 and +1. This box is then mapped to screen coordinates (also called window coordinates) by the viewport transformation, as illustrated in the corresponding figure. The parameters for this mapping are the coordinates $s_x$ and $s_y$ of the lower left corner of the viewport (the rectangle of the screen that is rendered), its width $w_s$ and height $h_s$, and the depths $n_s$ and $f_s$ of the near and far clipping planes. (These depths are between 0 and 1.) In OpenGL and OpenGL ES, these parameters are set with two functions:

```c
glViewport(GLint s_x, GLint s_y, GLsizei w_s, GLsizei h_s);
glDepthRangef(GLclampf n_s, GLclampf f_s);
```

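The matrix of the viewport transformation isn't very important since it is applied automatically in a fixed-function stage. However, here it is for the sake of completeness:

$$\begin{bmatrix} \frac{w_s}{2} & 0 & 0 & s_x + \frac{w_s}{2} \\ 0 & \frac{h_s}{2} & 0 & s_y + \frac{h_s}{2} \\ 0 & 0 & \frac{f_s - n_s}{2} & \frac{n_s + f_s}{2} \\ 0 & 0 & 0 & 1 \end{bmatrix}$$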
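Written out per component, the viewport matrix is a scale plus an offset. The C sketch below (hypothetical function name) illustrates what the fixed-function stage computes, using the parameters $s_x$, $s_y$, $w_s$, $h_s$, $n_s$, $f_s$ from glViewport and glDepthRangef. The corner (-1, -1, -1) of the normalized device coordinate box lands at $(s_x, s_y, n_s)$, and the corner (1, 1, 1) lands at $(s_x + w_s, s_y + h_s, f_s)$.

```c
#include <assert.h>

/* Viewport transformation: normalized device coordinates (each in [-1, 1])
   -> window coordinates. (sx, sy): lower left corner of the viewport;
   ws, hs: its width and height; ns, fs: depths of the near and far planes. */
void viewport_transform(const double ndc[3], double sx, double sy,
                        double ws, double hs, double ns, double fs,
                        double win[3])
{
    assert(ws > 0.0 && hs > 0.0);
    win[0] = ws / 2.0 * ndc[0] + sx + ws / 2.0;
    win[1] = hs / 2.0 * ndc[1] + sy + hs / 2.0;
    win[2] = (fs - ns) / 2.0 * ndc[2] + (ns + fs) / 2.0;
}
```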

Further reading

The conventional vertex transformations are also described in less detail in Chapter 4 of Nvidia's Cg Tutorial.

The conventional OpenGL transformations are described in full detail in Section 2.12 of the “OpenGL 4.1 Compatibility Profile Specification” available at the Khronos OpenGL web site. A more accessible description of the vertex transformations is given in Chapter 3 (on viewing) of the book “OpenGL Programming Guide” by Dave Shreiner published by Addison-Wesley. (An older edition is available online).

![{\displaystyle \mathrm {M} _{{\text{object}}\to {\text{world}}}=\left[{\begin{matrix}a_{1,1}&a_{1,2}&a_{1,3}&t_{1}\\a_{2,1}&a_{2,2}&a_{2,3}&t_{2}\\a_{3,1}&a_{3,2}&a_{3,3}&t_{3}\\0&0&0&1\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/74f8aaab560e5cb2f74f328599b57b54638f29f6)

![{\displaystyle {\text{ with }}\mathrm {A} =\left[{\begin{matrix}a_{1,1}&a_{1,2}&a_{1,3}\\a_{2,1}&a_{2,2}&a_{2,3}\\a_{3,1}&a_{3,2}&a_{3,3}\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/dcff6fb39a87563940e5b11f2cd33c100e98d8de)

![{\displaystyle {\text{ and }}\mathbf {t} =\left[{\begin{matrix}t_{1}\\t_{2}\\t_{3}\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/1762d98b5711974550f359f7b06264db7c931360)

![{\displaystyle P=\left[{\begin{matrix}p_{1}\\p_{2}\\p_{3}\\1\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/fa4c63dd467c6eb272d3b11d2dcb27c641be56bf)

![{\displaystyle \mathrm {M} _{{\text{object}}\to {\text{world}}}\;P=\left[{\begin{matrix}a_{1,1}&a_{1,2}&a_{1,3}&t_{1}\\a_{2,1}&a_{2,2}&a_{2,3}&t_{2}\\a_{3,1}&a_{3,2}&a_{3,3}&t_{3}\\0&0&0&1\end{matrix}}\right]\left[{\begin{matrix}p_{1}\\p_{2}\\p_{3}\\1\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/13d11dfa872a62f11d49f0b7594bea2e57f36ab1)

![{\displaystyle =\left[{\begin{matrix}a_{1,1}p_{1}+a_{1,2}p_{2}+a_{1,3}p_{3}+t_{1}\\a_{2,1}p_{1}+a_{2,2}p_{2}+a_{2,3}p_{3}+t_{2}\\a_{3,1}p_{1}+a_{3,2}p_{2}+a_{3,3}p_{3}+t_{3}\\1\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0f96ff85224b8f3fb35ccd73c31b54bd69cca25d)

![{\displaystyle \mathrm {A} \left[{\begin{matrix}p_{1}\\p_{2}\\p_{3}\end{matrix}}\right]+\left[{\begin{matrix}t_{1}\\t_{2}\\t_{3}\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/ff6335e75528b3f090325320d6db5f829863e54e)

![{\displaystyle \mathrm {M} _{\text{translation}}=\left[{\begin{matrix}1&0&0&t_{1}\\0&1&0&t_{2}\\0&0&1&t_{3}\\0&0&0&1\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a9ccb86cb24fb7ce0bf772f83efbb7391c312146)

![{\displaystyle \mathrm {M} _{\text{scaling}}=\left[{\begin{matrix}s_{x}&0&0&0\\0&s_{y}&0&0\\0&0&s_{z}&0\\0&0&0&1\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/b366a6c227cafdd36ec11f98948bc514b42ef014)

![{\displaystyle \mathrm {M} _{\text{rotation}}=\left[{\begin{matrix}(1-\cos \alpha )x\,x+\cos \alpha &(1-\cos \alpha )x\,y-z\sin \alpha &(1-\cos \alpha )z\,x+y\sin \alpha &0\\(1-\cos \alpha )x\,y+z\sin \alpha &(1-\cos \alpha )y\,y+\cos \alpha &(1-\cos \alpha )y\,z-x\sin \alpha &0\\(1-\cos \alpha )z\,x-y\sin \alpha &(1-\cos \alpha )y\,z+x\sin \alpha &(1-\cos \alpha )z\,z+\cos \alpha &0\\0&0&0&1\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/68f40eec0dda33d6ffef4c0c25b0586470d62fef)

![{\displaystyle \mathrm {M} _{{\text{view}}\to {\text{world}}}=\left[{\begin{matrix}x_{1}&y_{1}&z_{1}&t_{1}\\x_{2}&y_{2}&z_{2}&t_{2}\\x_{3}&y_{3}&z_{3}&t_{3}\\0&0&0&1\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/3e00ec26b5506a084ce548aef7c370c0097ed34a)

![{\displaystyle \mathrm {M} _{{\text{view}}\to {\text{world}}}=\left[{\begin{matrix}\mathrm {R} &\mathbf {t} \\\mathbf {0} ^{T}&1\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/bcd0a52a1646832fca2af268dd812f7a7cbbc5ca)

![{\displaystyle \mathrm {M} _{{\text{view}}\to {\text{world}}}^{-1}=\mathrm {M} _{{\text{world}}\to {\text{view}}}=\left[{\begin{matrix}\mathrm {R} ^{-1}&-\mathrm {R} ^{-1}\mathbf {t} \\\mathbf {0} ^{T}&1\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/24280cf60fc5e0c97a0623ca0dea8a080d80b017)

![{\displaystyle \mathrm {M} _{{\text{world}}\to {\text{view}}}=\left[{\begin{matrix}\mathrm {R} ^{T}&-\mathrm {R} ^{T}\mathbf {t} \\\mathbf {0} ^{T}&1\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/c67a2e4ff6d60e39d428d0cac5ab3266f5c1b586)

![{\displaystyle {\text{with }}\mathrm {R} =\left[{\begin{matrix}x_{1}&y_{1}&z_{1}\\x_{2}&y_{2}&z_{2}\\x_{3}&y_{3}&z_{3}\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/ba6f143978631c3e9f3414b755a11e4429c16ee3)

![{\displaystyle \mathrm {M} _{\text{projection}}=\left[{\begin{matrix}{\frac {d}{a}}&0&0&0\\0&d&0&0\\0&0&{\frac {n+f}{n-f}}&{\frac {2nf}{n-f}}\\0&0&-1&0\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/43afae7ea0ce408c5a1a479a265177d988a0fb1c)

![{\displaystyle \mathrm {M} _{\text{projection}}=\left[{\begin{matrix}{\frac {2n}{r-l}}&0&{\frac {r+l}{r-l}}&0\\0&{\frac {2n}{t-b}}&{\frac {t+b}{t-b}}&0\\0&0&{\frac {n+f}{n-f}}&{\frac {2nf}{n-f}}\\0&0&-1&0\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/43a44af0b15159b3378c0d628f98e88f6fa82c86)

![{\displaystyle \mathrm {M} _{\text{projection}}=\left[{\begin{matrix}{\frac {2}{r-l}}&0&0&-{\frac {r+l}{r-l}}\\0&{\frac {2}{t-b}}&0&-{\frac {t+b}{t-b}}\\0&0&{\frac {-2}{f-n}}&-{\frac {f+n}{f-n}}\\0&0&0&1\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f7345d54b1caa48a201493b48d21f86f2a2321ac)

![{\displaystyle \left[{\begin{matrix}{\frac {w_{s}}{2}}&0&0&s_{x}+{\frac {w_{s}}{2}}\\0&{\frac {h_{s}}{2}}&0&s_{y}+{\frac {h_{s}}{2}}\\0&0&{\frac {f_{s}-n_{s}}{2}}&{\frac {n_{s}+f_{s}}{2}}\\0&0&0&1\end{matrix}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/2dccc455d0d455f5128c324abe28d96a952a728d)