Calculus/Approximating Values of Functions

Although a value often has an exact representation described by some function, it is frequently more useful to have an approximation of that value, especially in real-world contexts. For example, a construction worker might need a room to be feet long. However, this value is not useful because most rulers do not have a measurement for . Instead, workers need an approximation of the length to be able to construct a room that is feet long.

Some numbers were, and still are, very hard to approximate, but calculus makes the task easier. The subfield of numerical analysis studies the algorithms used to approximate numbers, including but not limited to the residual (how far the approximation is from the true value), the level of decimal precision, and the number of times a procedure must be repeated to reach a certain level of precision.

This section is not a replacement for a course in numerical analysis (not even close), but it will introduce efficient algorithms that approximate values to surprising levels of accuracy.

Section 2.4 already introduced the Bisection Method, an algorithm that approximates a root of a function and whose justification rests on calculus. Using calculus to approximate values should therefore not be very surprising.

Linear Approximation


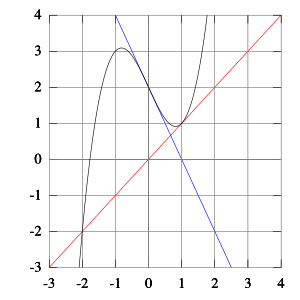

Recall one of the definitions of the derivative: it is the slope of the tangent line at a point, $(c, f(c))$, of the function $f$. Thinking about the local behavior of the function around $x = c$, the tangent line can be a good approximation of the value (refer to Figure 1) if $\Delta x$ is small and $\Delta y$ is small.

If $f(c)$ is a known value for the differentiable and continuous function $f$, the derivative at that point is $f'(c)$, and $x_0$ is a point of the interval $[\alpha, c]$ lying a small distance $\Delta x = x_0 - c$ from $c$, then the value of $f(x_0)$ is approximated by the following equation:

$$f(x_0) \approx f(c) + f'(c)(x_0 - c) \qquad (1)$$

Justification: Notice the tangent line at $x = c$ for some differentiable function $f$ is given by the following equation:

$$L(x) = f(c) + f'(c)(x - c) \qquad (2)$$

where $L(x)$ is the equation of the tangent line.

If we are trying to obtain $f(x_0)$ (the true value) through the tangent line, and the distance $|x_0 - c|$ is small, then $L(x_0) \approx f(x_0)$. Therefore,

$$f(x_0) \approx f(c) + f'(c)(x_0 - c).$$

Notice that for this technique to be used, it needs to be the case that

- $f$ is differentiable at $c$ and continuous in $[\alpha, c]$.

- $\Delta x$ is small and $\Delta y$ is small. Otherwise, some very strange approximations may appear.

- $f$ is monotonic in $[\alpha, c]$. (You will learn this more comprehensively in Section 6.2.)

If any one of these conditions is false, then this technique will either not work or will not be very useful.
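The tangent-line estimate described above can be sketched in a few lines of Python. This is a minimal illustration, not part of the original text: the helper name `linear_approx` and the choice of estimating $\sqrt{4.1}$ from the known value $\sqrt{4} = 2$ are assumptions made only for the example.

```python
import math

def linear_approx(f, df, c, x0):
    """Tangent-line estimate of f(x0) built from the known point (c, f(c))."""
    return f(c) + df(c) * (x0 - c)

# Hypothetical example: estimate sqrt(4.1) from the known value sqrt(4) = 2.
f = math.sqrt
df = lambda x: 1.0 / (2.0 * math.sqrt(x))  # derivative of sqrt(x)

estimate = linear_approx(f, df, 4.0, 4.1)
error = abs(estimate - math.sqrt(4.1))     # residual against the true value
print(estimate, error)
```

Only the values $f(c)$ and $f'(c)$ are needed to form the estimate; the call to `math.sqrt(4.1)` is there solely to measure how far off the estimate is.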

Example 3.17.1: Approximating

Let . The exact value of . The tangent line equation at is given by Let . Suppose . Then, . Therefore, .

The benefit of linear approximation is that, instead of working with a function that is hard to evaluate, we estimate its value using a linear function, arguably the easiest calculation we will ever have to perform, assuming the value of the derivative is easy to find.

Determining over- or underestimation

Notice that for any approximation we obtain using a tangent line approximation (the same as a linear approximation), there exists a remainder term that makes it equal to the true value of the function. That is,

$$f(x_0) = f(c) + f'(c)(x_0 - c) + R \qquad (3)$$

where $R$ is the remainder term. This, unfortunately, does not give us a precise estimate of the residual, especially if we cannot find the exact value of the term. While there is a technique to determine an upper bound of the residual for this type of estimate, it will be covered much later in Section 6.11.

The best solution we have for now is determining whether the estimate is below or above the true value, which can be done with the following technique.

- If $f$ is concave down in the interval between $c$ and $x_0$, the approximation will be an overestimate.

- If $f$ is concave up in the interval between $c$ and $x_0$, the approximation will be an underestimate.

Justification: Suppose is a twice differentiable function in and .

- Case 1(A): Let and for . Then, the tangent line, at has positive slope and (see the bottom function of Figure 1).

- Case 1(B): Let and for . Then, the tangent line, at has positive slope and .

- Case 2(A): Let and for . Then, the tangent line, at has negative slope and .

- Case 2(B): Let and for . Then, the tangent line, at has negative slope and (see the bottom function of Figure 1).

Since a concave down function satisfies $L(x) \ge f(x)$ no matter the slope of the tangent line $L$, and a concave up function satisfies $L(x) \le f(x)$ no matter the slope of the tangent line, both rules are justified.
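The concavity rule can be checked numerically. The sketch below is only an illustration under assumed inputs: $\sqrt{x}$ (concave down for $x > 0$) and $e^x$ (concave up everywhere) are hypothetical test functions chosen here, and `tangent_estimate` is a helper name introduced for the example.

```python
import math

def tangent_estimate(f, df, c, x0):
    """Value at x0 of the tangent line to f drawn at x = c."""
    return f(c) + df(c) * (x0 - c)

# sqrt is concave down on (0, inf): its second derivative -1/(4 x^(3/2)) is negative.
sqrt_est = tangent_estimate(math.sqrt, lambda x: 0.5 / math.sqrt(x), 4.0, 4.1)

# exp is concave up everywhere: its second derivative e^x is positive.
exp_est = tangent_estimate(math.exp, math.exp, 0.0, 0.1)

sqrt_is_overestimate = sqrt_est > math.sqrt(4.1)
exp_is_underestimate = exp_est < math.exp(0.1)
print(sqrt_is_overestimate, exp_is_underestimate)
```

Both flags come out true, matching the rule: the tangent line lies above a concave down graph and below a concave up graph.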

Example 3.17.2: Is an over- or underestimate?

Let . The function is concave down for all because

The last implication is justified because (as an exercise, show this yourself). If is monotonically decreasing and bounded (which it is; you can show this multiple ways), then we can be certain that the inequality shown is correct. Because is certainly concave down from to , is an overestimate of the true value.

Issues with Linear Approximation

While linear (or tangent line) approximation is a powerful and easy tool for approximating functions, it does have its issues. These issues were alluded to when the technique was introduced. Each example below highlights why this tool is not always useful.

Example 3.17.3: Approximate $\sqrt[3]{0.01}$ using Tangent Line Approximation

Let $f(x) = \sqrt[3]{x} = x^{1/3}$. Since we are approximating $\sqrt[3]{0.01}$, we may use the tangent line approximation at $x = 0$ to obtain an approximation at $x = 0.01$. Unfortunately, $f'(0) = \lim_{x \to 0} \dfrac{\sqrt[3]{x}}{x}$ does not converge to a finite value. This means that the tangent line is either vertical or does not exist. Since the derivative does not exist at $x = 0$, it is impossible to obtain an accurate approximation using the linear method.
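The failure of the derivative of the cube root at zero can be seen numerically. The following sketch, which is an added illustration rather than part of the original example, evaluates the difference quotient $\dfrac{\sqrt[3]{0 + h} - \sqrt[3]{0}}{h}$ for a few shrinking step sizes; the helper `cbrt` and the chosen values of $h$ are assumptions.

```python
def cbrt(x):
    """Real cube root, valid for negative x as well."""
    magnitude = abs(x) ** (1.0 / 3.0)
    return magnitude if x >= 0 else -magnitude

# Difference quotients (f(0 + h) - f(0)) / h for shrinking h.
steps = [1e-2, 1e-4, 1e-6]
quotients = [(cbrt(h) - cbrt(0.0)) / h for h in steps]
print(quotients)
```

The quotients keep growing as $h$ shrinks instead of settling toward a finite slope, which is why no usable tangent line exists at $x = 0$.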

Example 3.17.4: Approximate using Tangent Line Approximation

Let . Since we are approximating , and is a small difference in , we may use the tangent line approximation at to obtain an approximation at . The tangent line equation at is given by Let . Suppose . Then, . Therefore, . However, this approximation is far from the actual value. It has an error of within the actual value of the function at . The reason for this large error is subtle. While the derivative does exist at , the function is not monotonic on $[-1, -0.8]$: the function both decreases and increases there. Recall from your reading that a function is monotonically decreasing on $[a, b]$ if and only if for any $x_1, x_2 \in [a, b]$ with $x_1 < x_2$, $f(x_1) \ge f(x_2)$. However, there exists a counterexample for above, which becomes apparent if you graph the function. Hence, it would be irresponsible to use a tangent line to approximate the value of .

Because you will learn this more comprehensively in Section 6.2, assume that all exercises use a monotonic function. This example is simply meant to illustrate a common pitfall of this method.

Newton-Raphson Method

While tangent line approximations are very helpful, they tend to be useful only when you know the value of the function at a nearby point. If no such nearby value is known, it is very difficult to obtain a precise estimate of the desired value.

The Newton-Raphson method, introduced in Section 3.13, is a useful method to determine the zeros of a function, whether polynomial, transcendental, irrational, exponential, etc. However, the Newton-Raphson method can also be used to approximate values of specific functions.

Suppose $k$ is the value of interest. Let $x = k$. There exists some function $f$ so that $f(k) = 0$. If an approximation of $k$ is desired, then iteratively find $x_{n+1}$ as follows:

$$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)} \qquad (4)$$

If you read Section 3.13, then this equation should be known and already justified to you.
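As a minimal sketch of the iteration, the snippet below approximates $\sqrt{2}$ by treating it as a root of $f(x) = x^2 - 2$. The target value, the initial guess of 1.5, the iteration count, and the function name `newton_raphson` are all assumptions chosen for illustration; they are not taken from the examples in this section.

```python
def newton_raphson(f, df, x0, iterations):
    """Apply x_{n+1} = x_n - f(x_n) / f'(x_n) a fixed number of times."""
    x = x0
    for _ in range(iterations):
        x = x - f(x) / df(x)
    return x

# Hypothetical target: sqrt(2) is the positive root of f(x) = x^2 - 2.
f = lambda x: x * x - 2.0
df = lambda x: 2.0 * x

root = newton_raphson(f, df, 1.5, 5)
print(root)
```

Note that the update never evaluates a square root: only multiplication, subtraction, and division are needed, which is what makes the method practical for approximating such values by hand.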

Example 3.17.5: Approximate .

Let . We need to manipulate this equation so that we may obtain . From this, let . Since is a root of the equation, we can use the Newton-Raphson method to approximate . First, we choose an initial guess. Since , and , is a good initial guess. Before we can begin, we need the derivative of the function, which can be easily shown to be . Now we begin by finding the root. For convenience, we will stop here. However, the value we have obtained is already correct to four decimal places.

Example 3.17.6: Approximate .

Let . We need to manipulate this equation so that we may obtain . Keep in mind to eliminate as many square roots as possible so that our job is easier. Notice this equation has four possible roots, but we only care about one of them. Looking back: Notice that , so we choose .

Notice that means that for . Finally, notice that cannot be simplified. We are going to have to work with these values. (Keep in mind that even without calculators, many people in the past used slide rules to work with these ugly values. We have the benefit of an electronic computer at our fingertips as opposed to an analog one.) Now we begin by finding the root. For convenience, we will stop here. However, the value we have obtained is already correct to six decimal places.

Failure Analysis


Of course, as we know from Section 3.13, the Newton-Raphson method is not perfect and will fail in some instances. One obvious instance is when the derivative at an iterate is zero. Because the derivative is in the denominator of the update formula, the next iterate cannot be computed once that occurs. However, there are other failure modes.

Starting Point Enters a Cycle

For some functions, some starting points may enter an infinite cycle, preventing convergence. Let

$$f(x) = x^3 - 2x + 2$$

and take $0$ as the starting point. The first iteration produces $1$ and the second iteration returns to $0$, so the sequence will alternate between the two without converging to a root (see Figure 2). The real solution of this equation is $x \approx -1.76929$. In those instances, one should select another starting point.
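A cycle of this kind is easy to reproduce. The sketch below assumes the standard textbook instance $f(x) = x^3 - 2x + 2$ with starting point $0$; the helper name `newton_iterates` and the alternative starting point $-2$ are choices made here for illustration.

```python
def newton_iterates(f, df, x0, n):
    """Return the list [x_0, x_1, ..., x_n] of Newton-Raphson iterates."""
    xs = [x0]
    for _ in range(n):
        x = xs[-1]
        xs.append(x - f(x) / df(x))
    return xs

f = lambda x: x ** 3 - 2 * x + 2
df = lambda x: 3 * x ** 2 - 2

cycle = newton_iterates(f, df, 0.0, 6)      # bounces between 0 and 1 forever
rescued = newton_iterates(f, df, -2.0, 8)   # a different start settles on the real root
print(cycle)
print(rescued[-1])
```

Starting from $-2$ instead of $0$ avoids the cycle, which illustrates the advice to select another starting point.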

Derivative does not exist at root

A simple example of a function where Newton's method diverges is trying to find the cube root of zero. The cube root is continuous and infinitely differentiable, except for $x = 0$, where its derivative is undefined:

$$f(x) = \sqrt[3]{x}.$$

For any iteration point $x_n$, the next iteration point will be:

$$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)} = x_n - \frac{x_n^{1/3}}{\frac{1}{3}x_n^{-2/3}} = x_n - 3x_n = -2x_n.$$

The algorithm overshoots the solution and lands on the other side of the $y$-axis, farther away than it initially was; applying Newton's method actually doubles the distance from the solution at each iteration.

In fact, the iterations diverge to infinity for every $f(x) = |x|^{\alpha}$, where $0 < \alpha < \tfrac{1}{2}$. In the limiting case of $\alpha = \tfrac{1}{2}$, the iterations will alternate indefinitely between points $x_0$ and $-x_0$, so they do not converge in this case either.
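The doubling behavior can be observed directly. This sketch applies Newton's method to the real cube root; the starting point of 0.1 and the helper names `cbrt` and `dcbrt` are assumptions introduced for the illustration.

```python
import math

def cbrt(x):
    """Real cube root, keeping the sign of x."""
    return math.copysign(abs(x) ** (1.0 / 3.0), x)

def dcbrt(x):
    """Derivative of the cube root, (1/3) |x|^(-2/3); undefined at x = 0."""
    return (1.0 / 3.0) * abs(x) ** (-2.0 / 3.0)

x = 0.1            # hypothetical starting point close to the root at 0
xs = [x]
for _ in range(5):
    x = x - cbrt(x) / dcbrt(x)   # Newton-Raphson update
    xs.append(x)
print(xs)
```

Each iterate has the opposite sign and roughly twice the magnitude of the previous one, so the sequence moves away from the root at $0$ rather than toward it.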

Discontinuous Derivative

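If the derivative is not continuous at the root, then convergence may fail to occur in any neighborhood of the root. Consider the function

$$f(x) = \begin{cases} 0 & \text{if } x = 0, \\ x + x^2 \sin\left(\dfrac{2}{x}\right) & \text{if } x \neq 0. \end{cases}$$

Its derivative is

$$f'(x) = \begin{cases} 1 & \text{if } x = 0, \\ 1 + 2x \sin\left(\dfrac{2}{x}\right) - 2\cos\left(\dfrac{2}{x}\right) & \text{if } x \neq 0. \end{cases}$$

Within any neighborhood of the root, this derivative keeps changing sign as $x$ approaches $0$ from the right (or from the left) while $f(x) \ge x - x^2 > 0$ for $0 < x < 1$.

Thus, $f(x)/f'(x)$ is unbounded near the root, and Newton's method will diverge almost everywhere in any neighborhood of it, even though:

- the function is differentiable (and thus continuous) everywhere;

- the derivative at the root is nonzero;

- $f$ is infinitely differentiable except at the root; and

- the derivative is bounded in a neighborhood of the root (unlike $f(x)/f'(x)$).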
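The sign changes of the derivative can be sampled numerically. The sketch below assumes the standard example $f(x) = x + x^2 \sin(2/x)$ with $f(0) = 0$, whose derivative away from zero is $1 + 2x\sin(2/x) - 2\cos(2/x)$; the sample points are chosen here so that $\cos(2/x)$ equals $1$ or $-1$.

```python
import math

def fprime(x):
    """Derivative of x + x^2 sin(2/x), with the value 1 at x = 0."""
    if x == 0:
        return 1.0
    return 1.0 + 2.0 * x * math.sin(2.0 / x) - 2.0 * math.cos(2.0 / x)

# At x = 1/(k*pi) we have 2/x = 2k*pi, so cos(2/x) = 1 and f'(x) is near -1.
negative_samples = [fprime(1.0 / (k * math.pi)) for k in range(1, 6)]

# At x = 2/((2k+1)*pi) we have 2/x = (2k+1)*pi, so cos(2/x) = -1 and f'(x) is near 3.
positive_samples = [fprime(2.0 / ((2 * k + 1) * math.pi)) for k in range(1, 6)]

print(negative_samples)
print(positive_samples)
```

Both families of sample points crowd toward $0$ as $k$ grows, so every neighborhood of the root contains points where the derivative is negative and points where it is positive, with a zero of the derivative in between.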

Euler's Method

As the examples below show, Euler's method does not converge to an approximation as quickly as Newton's method. There are a number of reasons for this, but Euler's method remains useful in spite of this issue, as the examples will showcase.

Example 3.17.7: Approximate .

Let . We need to find a differential equation . Notice that we will do something in the last step which will be important for approximating . The last step shown here is very important. We need to approximate . However, if we are trying to approximate , that cannot be done if we need to use the square root to do so. Instead, since and Euler's method gives us new -values to work with, we can get an approximation. We next need to choose an initial point, . This is the only point at which we use the actual value from the function . In this instance, since we want , we will choose . From there, we can choose the step size, which can be arbitrary. Here, we will choose As can be seen here, the approximation for is . This has an absolute error of , which is pretty good for most contexts. If this were for designing a box, for example, then this would be an acceptable margin of error.

While the margin of error appears to be large compared to the local linear approximation of (with an absolute error of ), keep in mind that our step size is considerably larger than the one shown in Example 3.17.1. If we had chosen a smaller step size, then the approximation would be more accurate (if only a little more cumbersome). For instance, with a step size of , the absolute error would be approximately .
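The effect of the step size can be sketched in Python. The setup here is an assumption for illustration, not the example above: it approximates $\sqrt{5}$ by noting that $y = \sqrt{x}$ satisfies $\dfrac{dy}{dx} = \dfrac{1}{2y}$, starting from the known point $(4, 2)$. The function name `euler` and both step sizes are hypothetical choices.

```python
import math

def euler(dy_dx, x0, y0, h, steps):
    """Advance y' = dy_dx(x, y) from (x0, y0) using `steps` Euler steps of size h."""
    x, y = x0, y0
    for _ in range(steps):
        y += h * dy_dx(x, y)   # follow the current slope for one step
        x += h
    return y

# y = sqrt(x) satisfies y' = 1 / (2y), so no square root is needed in the update.
slope = lambda x, y: 1.0 / (2.0 * y)

coarse = euler(slope, 4.0, 2.0, 0.25, 4)     # 4 steps of size 0.25
fine = euler(slope, 4.0, 2.0, 0.025, 40)     # 40 steps of size 0.025

coarse_error = abs(coarse - math.sqrt(5.0))
fine_error = abs(fine - math.sqrt(5.0))
print(coarse_error, fine_error)
```

Writing the slope in terms of $y$ rather than $\sqrt{x}$ is what lets each step reuse the previous approximation, and the smaller step size gives the smaller error at the cost of more iterations.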

![{\displaystyle \left[\alpha ,c\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/726706c3a0d704c73b14de9870efc7b0887f1c18)

![{\displaystyle x_{0}\in \left[\alpha ,c\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0ac083804e04a47eed50ad2e6a6dd83b5e7727a2)

![{\displaystyle {\sqrt[{3}]{0.01}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a7ae7406069685b769e3e766e1ff48c327b53777)

![{\displaystyle f(x)={\sqrt[{3}]{x}}=x^{1/3}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/7414d427019b409754f6dd97f10b66ad34d197c6)

![{\displaystyle f^{\prime }(0)=\lim _{x\to 0}{\dfrac {\sqrt[{3}]{x}}{x}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0e19176072715b9d5fe98a7744c58afb2f49b604)

![{\displaystyle \left[-1,-0.8\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/cf7362e16de06f3383c7f2666cceb0dab91cbf9e)

![{\displaystyle x_{1},x_{2}\in \left[a,b\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a6c18397bc1a9a0255326fac14c4b869c1fea9d8)

![{\displaystyle f(x)={\sqrt[{3}]{x}}.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0d0572ba8a8d137d46b3aee3602d7d5b99b549ee)