CPU Design

Preface

This book is about how a CPU and all its components may be designed. My CPU is an ordinary Von Neumann machine, which means that it executes its instructions sequentially. I have actually managed to design a CPU using a Xilinx CPLD and am on the verge of designing a follow-up with a Xilinx FPGA instead. The reason for mentioning this is that the only programming language I understand is gate-CAD, and Xilinx has a fantastic program called ECS which makes this possible. The only problem is that while I can program pure gate logic, I cannot program the ROM functions needed for the Instruction Register (IR), which consists of microcode realizing all instructions. These are realized in six 27C512 EPROMs. I do think that this is possible using Verilog/VHDL, but I understand nothing about these languages and fear that it is hard to combine ECS with Verilog/VHDL, so my plan for the follow-up is to still keep the IR external. I am using an old architecture, which means the use of something called an Accumulator (AC), where data is temporarily stored and manipulated, for example by shifting. With this book I will try to explain the parts you need in a CPU.

The Chosen CPU Architecture

The modules are introduced briefly here and explained in more detail later.

FA: Full Adder, it is the heart of all additions (subtraction is done by adding the two's complement).

CCR: Condition Code Register, indicates the outcome of FA operations, which set different flags in the CCR.

SR: Shift Register, I use two SRs to be able to shift in both directions and I call them the Accumulator (AC).

SP: Stack Pointer register, this register keeps track of push/pull operations; the stack is an additional way to temporarily store data.

PC: Program Counter, it points out which address to use and is a fundamental component in a CPU.

AR: Address Register, because the CPU uses a data bus that is only 8 bits wide while the address bus is 16 bits wide, two bytes are needed to read and manipulate an address.

AND: bitwise AND

OR: bitwise OR

XOR: bitwise XOR

IRR: Instruction Register Register, this register reads the OP-code coming in from the program memory on the data bus and thereby sets the address for the Instruction Register (IR), which is the real brain of the CPU because it realizes each instruction/OP-code by pulling a lot of enables. The data width of my IR is 43 bits (IRR+BR+IRC together form the IR address).

BR: Branch Register, this register fetches the N and Z flags from the CCR and thereby determines what to do with a branch instruction (preceded by a compare of CMP type).

IRC: Instruction Register Counter, this counter steps through the addresses that are needed to realize an instruction. I have limited it to 16 steps.

D: Internal Data Bus

A: Internal ALU Bus, this second bus simplifies some data manipulations

OE: All boxes labeled OE are three-state buffers; each puts its value on the line when enabled and rests in high-impedance mode otherwise.

LD: All boxes labeled LD are registers which store data for at least one clock cycle (CP).

Accumulator (AC)

This picture shows how an accumulator may be built. Accumulators A and B are just shift registers, where A is used to shift data to the right (ROR) and B to shift to the left (ROL). I do think that there are shift registers that can shift in both directions, but I haven't found any and use this approach instead. I use three buses here (D/A/B), but this is not how I have designed my CPU, and at the moment I do not really understand why I drew three buses. SA_A stands for Shift Around accumulator A, which means that the bit shifted out to the right is put back into the left end of the shift register. Ain_A is the bit to be shifted into the shift register, and LDA (Load accumulator A) is the command that loads the shift register from the D-bus. The different En signals are enables that transfer the data to the buses; when disabled, the output is three-state (high impedance).

Shift Register

These registers are rather wrongly named Accumulator A/B above; they are shift registers of the type shown in this picture. I use two of these in my CPU to be able to shift in both directions. Data is always loaded into both shift registers (see the architecture), but depending on which way you want to shift, only that shift register puts its data on the internal bus. Normally you cannot buy an SR-flip/flop and have to resort to a JK-flip/flop instead, probably because the input condition 11 is not allowed in an SR-flip/flop but is allowed in a JK-flip/flop (where it toggles the data). It is however possible to design an SR-flip/flop with the aid of a D-flip/flop, and I think that this is what I have done using a pre-defined D-flip/flop from the Xilinx library. I actually tried to design my own discrete positive edge-triggered SR-flip/flop in ECS using ordinary NAND gates, but this proved impossible in spite of very nice help from the Xilinx forum. So I use pre-defined flip/flops.

Xn are the parallel inputs, Qn are the parallel outputs, LD (LDA/LDB) loads the parallel data and x is the serial data input (Ain/Bin). When LD is low and En (Enable) is high, serial data is shifted; when LD is high and En is low, parallel data is loaded. I have named En ROR/ROL, that is Rotate Right and Rotate Left.

This works fine when micro coding because you just set what you want while keeping all other signals low: if you want to shift you set En high and LD stays low, and if you want a parallel load you set LD high while En stays low.
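
The load/shift behaviour described above can be sketched as a small behavioural model in Python. This is only an illustration, not the ECS schematic: the class name, the `shift_right` parameter and the choice to hold the value for any other LD/En combination are assumptions made for the example.

```python
class ShiftRegister:
    """Behavioural sketch of a parallel-load shift register (names are hypothetical)."""

    def __init__(self, width=8):
        self.width = width
        self.q = 0  # parallel outputs Qn packed into one integer

    def clock(self, ld, en, x_parallel=0, serial_in=0, shift_right=True):
        """Model one positive clock edge and return the new Qn value."""
        mask = (1 << self.width) - 1
        if ld and not en:
            # LD high, En low: parallel load of Xn
            self.q = x_parallel & mask
        elif en and not ld:
            # LD low, En high: shift one step, serial_in enters at the free end
            if shift_right:
                self.q = (self.q >> 1) | ((serial_in & 1) << (self.width - 1))
            else:
                self.q = ((self.q << 1) & mask) | (serial_in & 1)
        # Assumption: every other LD/En combination holds the stored value
        return self.q


def rotate_right(reg):
    """'Shift around': the bit shifted out on the right re-enters on the left."""
    return reg.clock(ld=0, en=1, serial_in=reg.q & 1, shift_right=True)
```

Loading 10010011b and rotating right once gives 11001001b, because the 1 shifted out on the right re-enters on the left.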

P and R stand for asynchronous Preset and Reset, active low.

Counters

For the Program Counter (PC), the Stack Pointer (SP) and the Instruction Register Counter (IRC) we need a counter. The SP also has to be an up/down counter while the other two only need to count up, but it complicates things not to use the same up/down counter for all of them, so that is what I have done.

PC and IRC thus always count up. The SP, however, is a special counter that points out RAM addresses for the push and pull instructions (PSHA/PULA), which use the so-called stack where you store intermediate data. When you have data that you want to store temporarily and read back later, you use the stack, and the SP keeps track of it.

The counter can parallel load the Xn data by pulling load (LD) high; the output (Qn) changes at the positive edge of the clock pulse (CP). When load is low and enable (EN) and up/down' (U/D') are high the counter counts up, while if U/D' is low the counter counts down.
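
A minimal Python sketch of this counter behaviour is given here. The class and parameter names are hypothetical, and the wrap-around at the ends of the range is an assumption that the text does not state.

```python
class UpDownCounter:
    """Behavioural sketch of a loadable up/down counter (names are hypothetical)."""

    def __init__(self, width=16):
        self.mask = (1 << width) - 1
        self.q = 0

    def clock(self, ld=0, en=0, up=1, x=0):
        """Model one positive edge of CP and return the new Qn value."""
        if ld:
            # LD high: parallel load of Xn
            self.q = x & self.mask
        elif en:
            # LD low, EN high: U/D' high counts up, U/D' low counts down
            step = 1 if up else -1
            self.q = (self.q + step) & self.mask  # assumed to wrap around
        # LD and EN both low: the value is held
        return self.q
```

With this model the PC and the IRC would always be clocked with `up=1`, while the SP uses both directions.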

I use two clocks, which I have called CP and E, where E is an inverted CP. This makes it possible to set up the counters and such with E before the positive edge of CP comes. This is very important when programming the micro instructions in the Instruction Register (IR).

P and R are asynchronous Preset and Reset, active low.

Instruction Register Counter (IRC)

I have called this counter the Instruction Register Counter (IRC). It counts the steps needed to realize each instruction in microcode. I have limited it to 16 steps, so the instruction must be realized within a maximum of 16 clock pulses (CP). Each step is actually an address with a data width of 41 bits.

Program Counter (PC)

This counter is called the Program Counter (PC) and is the heart of the CPU because it actually holds the addresses for the memories and input/output (I/O). It always counts up, so U/D' is high. It may be set to a new value/address by using load (LD) as described above. When enable (En) is set, the PC counts up with CP.

Stack Pointer Counter (SP)

This counter is called the Stack Pointer (SP). It points out the address in a small memory area where data is temporarily stored using the instructions PSHA/PULA (push A onto stack/pull A from stack). PSHA stores the accumulator A data on the stack at the address the SP is pointing to and then decreases the SP one step. If you want to read back the data you use the instruction PULA, and the pointer increases one step before reading. The stack normally resides close to the bottom of the memory map and has a rather small memory area, which in my case is only 256 bytes large (0000h-00FFh).
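
The push/pull order described above (store then decrement, increment then read) can be illustrated with a short Python sketch. The function names are hypothetical, and keeping the SP inside 0000h-00FFh by masking is an assumption made for the example.

```python
def psha(ram, sp, a):
    """PSHA: store A at the address SP points to, then decrease SP one step."""
    ram[sp] = a & 0xFF
    return (sp - 1) & 0xFF  # assumption: SP stays inside 0000h-00FFh


def pula(ram, sp):
    """PULA: increase SP one step, then read the byte it points to."""
    sp = (sp + 1) & 0xFF
    return ram[sp], sp


# Usage: push two bytes and pull them back in reverse order
ram = [0] * 256
sp = 0xFF                 # value set at Power On Reset
sp = psha(ram, sp, 0x12)
sp = psha(ram, sp, 0x34)
last, sp = pula(ram, sp)  # last == 0x34, the most recently pushed byte
```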

Registers

Registers are frequently used in a CPU. I for instance use registers for the address part of the Instruction Register (IR) which I call the IRR (Instruction Register Register) which is a part of the IR address built by IRR+BR+IRC where IRR is 8 bits wide, BR (Branch Register) is 4 bits wide and IRC (IR Counter) is 4 bits wide.

Registers are used to temporarily store data, usually for only a clock pulse (CP) long.

Xn are parallel data loaded into the register when load (LD) is high (and CP goes high); the Xn data is then stored in the register (or flip/flops) and presented on the outputs (Qn).

P and R are asynchronous Preset and Reset, active low.

Condition Code Register (CCR)

The Condition Code Register (CCR) reads the outcome of the Full Adder (FA) operations. It recognizes, for instance, if the result of an operation is negative (setting the N-flag to one) or zero (setting the Z-flag to one). It also tells if the operation caused a so-called overflow (setting the V-flag to one). It even senses whether an incoming operand is negative or not. Without the CCR, branches would be impossible.

Instruction Register Register (IRR)

The Instruction Register Register (IRR) is the first part of the Instruction Register (IR). It reads the incoming OP-code, which arrives from the program memory via the Data Bus, on the internal D-bus. The IRR is a vital part of the IR, and I have called it IRR for lack of imagination.

Branch Register (BR)

The Branch Register (BR) is the second part of the Instruction Register (IR). It fetches two flags from the Condition Code Register (CCR) called N and Z, which tell whether the result of the Full Adder (FA) operation (preceded by a compare, CMP) is Negative or Zero. If Z=1 the result is zero, and if N=1 the result is negative.

We may write this as

NZ=00: The result was not negative and not zero (i.e. positive)

NZ=01: The result was not negative but zero (i.e. zero)

NZ=10: The result was negative but not zero (i.e. negative)

NZ=11: The result was both negative and zero (cannot happen)
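
The four NZ cases can be reproduced with a few lines of Python. The sketch assumes that the compare subtracts the operand from accumulator A and takes N from bit 7 of the 8-bit result; the function name is hypothetical.

```python
def compare_flags(a, operand):
    """Return (N, Z) for an 8-bit compare, modelled as A minus operand."""
    result = (a - operand) & 0xFF      # 8-bit result as it leaves the adder
    n = (result >> 7) & 1              # N: highest bit of the result
    z = 1 if result == 0 else 0        # Z: all result bits are zero
    return n, z


# A zero result has bit 7 cleared, so NZ=11 never shows up
all_cases = {compare_flags(a, b) for a in range(256) for b in range(256)}
```

Here `all_cases` only ever contains (0, 0), (0, 1) and (1, 0), which matches the remark that NZ=11 cannot happen.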

Instruction Register (IR)

The Instruction Register (IR) consists of three parts: the Instruction Register Register (IRR), the Branch Register (BR) and the Instruction Register Counter (IRC), 16 bits wide in total. These modules, which are all registers except for the IRC which is a counter, make up the address for the Instruction Register (IR). This address then selects up to 41 data bits, which are used sequentially inside the CPU to pull the different enables, loads and such that realize the different instructions. All these data bits are critical, but two are especially important: the Ready and Branch bits. Ready signals when an instruction is finished and Branch signals when there is a branch. When an op-code is a branch, data must be fetched from the Condition Code Register (CCR) so that the IR knows what to do.
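
How the three parts combine into one 16-bit microcode address can be sketched as follows. The bit order (IRR in the upper byte, then BR, then IRC) is an assumption made for illustration; the text only gives the widths of 8, 4 and 4 bits. The function names and the example op-code value are hypothetical.

```python
def ir_address(irr, br, irc):
    """Pack IRR (8 bits), BR (4 bits) and IRC (4 bits) into a 16-bit address.

    Assumed layout: IRR in bits 15..8, BR in bits 7..4, IRC in bits 3..0.
    """
    return ((irr & 0xFF) << 8) | ((br & 0x0F) << 4) | (irc & 0x0F)


def step_addresses(irr, br):
    """The 16 microcode addresses the IRC steps through for one instruction."""
    return [ir_address(irr, br, step) for step in range(16)]


# Usage with a made-up op-code value
addresses = step_addresses(0xA6, 0x0)  # 0xA600 .. 0xA60F
```

A 16-bit address matches the 16 address lines of a 27C512 (64K x 8), and six such EPROMs in parallel give 48 data bits, enough for the microcode word.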

Address Register (AR)

The Address Register (AR) sets the address either from the Program Counter (PC) or internally (Extended Addressing mode). I use one register for each byte because the Data Bus (DB) is only 8 bits wide while the Address Bus (AB) is 16 bits wide. The bytes are actually loaded in sequence, which means that the address is not valid until both bytes are loaded. During this single clock pulse (CP) the AR addresses "wildly", but this doesn't matter because a read or write is only done when the address is complete. Perhaps an additional register after the AR, loaded with both the high byte (HB) and the low byte (LB), would have been neater.

Data Bus (DB)

The Data Bus (DB) is a bidirectional bus that is kind of hard to implement. I thought I had verified that it works in both directions, but I have not. Since I can do JMP, I do however know that data is coming in from the data bus, because the jump address is correctly read from the program memory (and thus the DB) and loaded into the Program Counter. The architecture above shows rather exactly how the Data Bus is implemented. It uses two three-state buffers connected in anti-parallel: high R/W' enables data to be read into the CPU, low R/W' enables data to be written to external memories or I/O. There is also an important special signal, which I have called D_REL. This signal releases the internal D-bus so that it may be used to circulate data internally.

Full Adder (FA)

The Full Adder (FA) I use is depicted in this picture, for the output/sum we have according to the Karnaugh Diagram

and for the carry generation we have

This expression is however a fix because if ci is high it is enough if either xi or yi is high to generate carry, it doesn't matter if both xi and yi are high in that case.
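
Since the expressions from the pictures are not reproduced in the text, here is the usual textbook form of a full adder as a Python sketch, which may differ in notation from the picture. It also checks the remark about the carry: once ci is high it makes no difference whether the carry term uses "either xi or yi" (OR) or "exactly one of them" (XOR), because the case where both are high is already covered by the xi AND yi term. The function names are hypothetical.

```python
def full_adder(x, y, c):
    """One-bit full adder: returns (sum, carry_out)."""
    s = x ^ y ^ c                      # sum: odd number of ones
    c_out = (x & y) | (c & (x ^ y))    # carry: both inputs, or carry-in plus one input
    return s, c_out


def carry_with_or(x, y, c):
    """Alternative carry expression using OR instead of XOR."""
    return (x & y) | (c & (x | y))


def ripple_add(a, b, c_in=0, width=8):
    """Chain full adders from the lowest bit upwards: returns (result, carry_out)."""
    result, carry = 0, c_in
    for i in range(width):
        s, carry = full_adder((a >> i) & 1, (b >> i) & 1, carry)
        result |= s << i
    return result, carry
```

For example, `ripple_add(0x28, 0xF8)` returns `(0x20, 1)`: the 8-bit sum is 20h and a carry comes out of the top bit.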

The FA is used all the time in a CPU; there are few instructions that don't use the FA. Logical instructions like AND/OR/XOR, along with stack and subroutine instructions and load/store (LDA/STA) of the accumulator value, are about the only ones I can think of. Most instructions use the FA, for instance branches, increment (INCA), decrement (DECA) and of course addition/subtraction.

In a CPU we do however not only want to add, we also want to subtract. For this there is an interesting and useful invention called two's complement: by adding the two's complement of a number, we actually get a subtraction!

So we do not need an additional Full Subtractor (FS) but can add the two's complement of the number to subtract.

The branch offsets are written in two's complement, which makes it possible for the Program Counter to jump backwards.

The two's complement of a number is created by inverting all the bits and adding one. It is actually a circle of numbers: if we simplify to a data width of 4 bits, the positive numbers go from 0000b to 0111b and the negative ones go from 1000b to 1111b, where 1111b equals -1.

The highest bit still indicates a negative number (as in a sign-and-magnitude representation), but here the negative numbers run in the opposite direction: when the positive number increases up to 7h and rolls over to 8h, the number becomes negative with the value -8, and the absolute value then decreases as the number approaches Fh, which is -1.
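
The 4-bit number circle can be tried out with a short Python sketch. The function names are hypothetical; the default width of 4 bits follows the simplified example in the text.

```python
def twos_complement(value, width=4):
    """Invert all bits and add one, staying within the given width."""
    mask = (1 << width) - 1
    return ((value ^ mask) + 1) & mask


def to_signed(value, width=4):
    """Interpret a bit pattern as a signed two's complement number."""
    value &= (1 << width) - 1
    if value >> (width - 1):           # highest bit set means negative
        return value - (1 << width)
    return value


# Subtraction by addition: 5 - 3 computed as 5 + (two's complement of 3)
difference = (5 + twos_complement(3)) & 0xF
```

Here `twos_complement(1)` gives Fh (-1), `to_signed(0x8)` gives -8, and `difference` comes out as 2.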

Carry generation is delayed by two tpd (propagation delay per gate) per bit, which for my 8 bits of data means a total delay of 16 tpd. This is important for how fast my CPU can run. Since the FA must finish and tpd is of the order of 5 ns, the maximum clock speed is (using a symmetrical clock, i.e. two "tpd") about 6 MHz; my CPU cannot run faster than this. There are techniques called "carry acceleration" (carry look-ahead), but I do not care about them here.
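
The 6 MHz figure can be checked with a small calculation. The sketch assumes one reading of the text: the carry chain (16 tpd) must settle within half a period of a symmetrical clock, so the full period is twice the carry delay. The function and parameter names are hypothetical.

```python
def max_clock_mhz(tpd_ns=5.0, bits=8, gate_delays_per_bit=2):
    """Estimate the maximum clock frequency from the ripple-carry delay."""
    carry_delay_ns = gate_delays_per_bit * bits * tpd_ns  # 2 * 8 * 5 ns = 80 ns
    period_ns = 2 * carry_delay_ns                        # symmetrical clock: 160 ns
    return 1000.0 / period_ns                             # period in ns -> frequency in MHz


estimate = max_clock_mhz()  # 6.25, i.e. roughly the 6 MHz quoted in the text
```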

The chosen instructions (CPU Mnemonics)

This picture shows the chosen instructions. I have decided to skip the two instructions at the bottom, partly because the Stack Pointer (SP) is initialized at start-up (POR, or Power On Reset) and there is no need to change it (POR sets it to 00FFh). The OP-codes are stolen from the HCS08, and the idea was not to have to design my own compiler; it is however possible to program in pure machine code, which is my aim. I have chosen as few instructions as possible to keep my CPU simple (also called RISC, as in Reduced Instruction Set Computer).

The addressing modes I use in my CPU are Immediate, Extended and Inherent. Immediate means that data is read directly from the external program memory; this is denoted "dd" in the picture. Extended means that data is read from RAM or I/O; this kind of data may be called variables and is denoted "hhll" to show that the operand is two bytes long (h as in high byte and l as in low byte), because the value resides at a 16-bit address. Inherent means that no operand is needed; an example is INCA, which only increments the value in accumulator A.

There is actually another "addressing mode" which is kind of special because only branches use it. It is denoted "rr" in the picture and is a relative number in two's complement which sets the jump of the Program Counter (PC) whenever we wish the CPU to jump somewhere according to a condition.
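
The difference between the operand formats (none, dd, hhll and rr) can be sketched in Python as below. The function name, the mode strings and the convention that `pc` points at the first byte after the OP-code are assumptions for this illustration, not the microcode itself.

```python
def fetch_operand(memory, pc, mode):
    """Return (operand value, address of the next OP-code) for one addressing mode.

    `pc` is assumed to point at the first byte after the OP-code.
    """
    if mode == "inherent":
        return None, pc                         # no operand bytes at all
    if mode == "immediate":
        return memory[pc], pc + 1               # dd: the data byte itself
    if mode == "extended":
        address = (memory[pc] << 8) | memory[pc + 1]   # hhll: 16-bit address
        return memory[address], pc + 2          # the data lives at that address
    if mode == "relative":
        rr = memory[pc]                         # rr: two's complement offset
        return (rr - 0x100 if rr & 0x80 else rr), pc + 1
    raise ValueError(f"unknown addressing mode: {mode}")
```

With a memory that holds 40h and 00h as operand bytes, the extended mode returns whatever is stored at address 4000h, while the relative mode turns the byte F8h into the offset -8.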

Branch Jumps

This picture shows how relative branch jumps are done. If the PC for instance stands at 28h and the offset (rr) is F8h (inverting and adding 1 gives -8), the addition PC+offset in two's complement yields 20h, which means that the PC jumps to 20h. Here we can also see that a carry is generated when the first "nibble" is added (so the addition must be done using ADC, ADd with Carry). If the PC stands at 26h and rr is +3, the jump address for the PC is 29h, but here the V-flag is set. In this simplified case the overflow flag has to do with the lower nibble value being larger than 7h; in the normal 8-bit case it means that the signed result exceeds 7Fh (+127). If the PC stands at 26h and we add -8 we end up at 1Eh. I cannot see any problem with this but have implemented two EP-signals, ADD_00 and ADD_FF, to use depending on whether rr is positive or negative. I think I have thought too much, because normal two's complement addition with rr is shown to work in the picture.
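
The three examples can be checked with a Python sketch that adds the offset bytewise, the way an 8-bit adder would. It rests on an assumption about what ADD_00 and ADD_FF are for: here they are taken to supply 00h or FFh as the high-byte addend (sign extension of rr) together with the carry from the low byte. The function name is hypothetical.

```python
def branch_target(pc, rr):
    """Add an 8-bit two's complement offset rr to a 16-bit PC, one byte at a time."""
    low = (pc & 0xFF) + (rr & 0xFF)              # low byte addition
    carry = low >> 8                             # carry into the high byte (ADC)
    high_addend = 0xFF if rr & 0x80 else 0x00    # assumed role of ADD_FF / ADD_00
    high = ((pc >> 8) & 0xFF) + high_addend + carry
    return ((high & 0xFF) << 8) | (low & 0xFF)
```

For the examples in the text, `branch_target(0x0028, 0xF8)` gives 0020h, `branch_target(0x0026, 0x03)` gives 0029h and `branch_target(0x0026, 0xF8)` gives 001Eh. The high-byte addend only starts to matter when the jump crosses a 256-byte boundary: `branch_target(0x0102, 0xF8)` gives 00FAh.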

Two's complement is very interesting. If you visualize a circle with 0h at the top, the positive numbers propagate clockwise (CW) and stop at 7h. On the other side of 0h we have Fh, which is -1, and the negative numbers then grow in magnitude moving counter-clockwise (CCW) around the circle down to 8h, which is -8. Looking at the circle as a clock, positive numbers increase with time while the magnitudes of the negative numbers decrease with time.

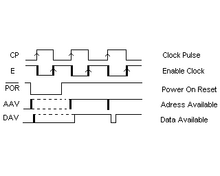

CPU Timing

This picture shows the timing of my CPU. The E-clock is the inversion of the CP-clock but it is slightly delayed (normally <10 ns, the propagation delay tpd). In practice I regard the E-clock as an EP-pulse, because it controls the addresses of the Instruction Register (IR) and thus enables data for one full clock period (T(CP)). During the EP the data is enabled, pulling all data "pins", but nothing really happens before CP goes high, which is in the middle of the EP. The data during the EP is thus stable when CP comes. Data Available (DAV) is just a way to express that when an address is set there is a small delay (tpd) before data is available. I circumvent this by using an E-clock that is shifted by half the CP period, giving a margin of T(CP)/2, which is enough if the CP frequency isn't too high.

Micro Coding

This picture shows how micro coding is done. Each instruction in the Instruction Register (IR) has an address width of 16 bits and a data width of 43 bits. Each data bit enables or disables one of the loads (LD) of the internal registers or one of the output enables (OE) of the buffers, or controls a key signal such as whether the stack pointer (SP) should increment or decrement. Each line is set up by the E-clock, and CP executes at the line border. Below I have renamed these signals EP-pulse and EC-clock, which stand for E-clock Pulse (EP) and Execute Clock (EC). This is very important to keep in mind while micro coding.

Reset (RST) is an internal instruction that is executed at reset only. Its op-code (in the IRR) equals 00h, which is what the IRR register is set to at POR, and everything starts from there. At POR, the PC is initialized to FFFEh (pointing out the HB part of the start address). By setting LD_AR_HB and LD_AR_LB high, this address is loaded into the AR at the next positive edge of the EC-clock and becomes the actual address (FFFEh). In the next row R/W' is set to read and LD_PC_HB is set, so that when the EC-clock comes the HB of the address to jump to is read from FFFEh and loaded into the PC_HB register. At the same time PC_EN is set so that the PC increases to point at the next reset byte (PC_LB). When the next EC-clock comes, the AR is updated with the new address (FFFFh), where the LB of the jump address resides. In this row I set LD_PC_LB and enable read. Now the PC_HB and PC_LB registers together hold the correct jump address where the actual program begins. All we need to do now is to transfer the jump address to the Address Register (AR), which I do by pulling LD_AR_HB and LD_AR_LB high in the last row.
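
The reset sequence can be followed step by step in a small Python sketch. This is a strongly simplified model: it ignores the clock phases and treats each row as one assignment. The function name and the example start address used in the comment are hypothetical.

```python
def reset_sequence(memory):
    """Walk through the reset rows and return the final (PC, AR) pair."""
    pc = 0xFFFE                  # POR value of the Program Counter
    ar = pc                      # LD_AR_HB + LD_AR_LB: AR now addresses FFFEh
    pc_hb = memory[ar]           # read with LD_PC_HB: high byte of the start address
    pc = (pc + 1) & 0xFFFF       # PC_EN: step to the next reset byte
    ar = pc                      # AR is updated to FFFFh
    pc_lb = memory[ar]           # read with LD_PC_LB: low byte of the start address
    pc = (pc_hb << 8) | pc_lb    # PC_HB and PC_LB together hold the jump address
    ar = pc                      # last row: transfer the jump address to the AR
    return pc, ar


# Usage with a made-up reset vector pointing at 8000h
start_pc, start_ar = reset_sequence({0xFFFE: 0x80, 0xFFFF: 0x00})
```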

There is a lot to think of while micro coding, and I am contemplating inverting R/W' since most accesses are reads. This would also mean that R'/W is actually the same as OE', which is what the memories want, and the micro coding is simplified (using as few 1's as possible). CS_I/O will of course also have to be revised.

Below I show the micro coding of a few very important instructions, without these types of instructions a CPU is pointless.

LDA Micro Coding

LDA stands for LoaD Accumulator A, and the accumulator is where the most interesting things happen. There are two modes for LDA: it can read directly from the program memory in the so-called Immediate mode (LDA#), or it can read the data from an address in the so-called Extended mode (LDA$). The micro coding is of course different for the two.

STA Micro Coding

STA stands for STore accumulator A. This instruction can only store data at a specified address (i.e. RAM or I/O), which is commonly known as Extended mode.

BEQ Micro Coding

BEQ stands for Branch if EQual. This type of instruction is very important. Without branch instructions not much can be done in a CPU because branches are a way to make the PC jump to where you want it to jump according to a condition. If you for instance have a condition where you wish the CPU to repeat a part of the code you just wait until the Z-flag is set and as long as it isn't the instructions are repeated (i.e as long as the operation is not zero).

This instruction does not work; I have tried it. So don't look too hard at my schematic, other than perhaps to recognize the basics. Below I give a more pedagogical example of the same instruction, which I think may work.

Simplified BEQ Micro Coding

I have put some effort into understanding how the BEQ branch may be implemented. I have made this version more pedagogical: many bits could be set at the same time, but this way I can describe each step. Still, it is a version I can actually use, because the number of steps is exactly the number of steps the four-bit IRC (Instruction Register Counter) can handle. When it comes to micro coding I have decided that a better name for the CP-clock is EC-clock, or Execute Clock, because each row border downwards in my schematics means an execution by EC. The rows themselves use the E-clock, which I have decided to call the EP-pulse instead: inside the IR it is actually a pulse with the duration of a full CP period, each row just shows what is to happen, and EC comes in the middle of the EP, while the E-clock itself is only an inverted CP-clock. In other words, EC clocks data when it is valid, because all OE/LD signals and such have a propagation delay which must be waited out.

For all branches the status of the NZ-flags must be read before anything can be done. In this case the NZ-flags must indicate Z=1 for Zero and all other combinations must be disregarded. However, all the other combinations (except NZ=11, which can not happen) must be taken care of and just increase the Program Counter (PC) so it stands at the next OP-code/instruction.

My version of BEQ Micro Coding

Here I will invert R/W' so that it does not have to be a one almost all the time. Then I will try to reduce the number of IRC steps, because many things can actually be enabled at the same time. Perhaps we can call it "look ahead". I am not aiming at a fast CPU, I just wish it to work, but if I can reduce the number of IRC steps I will be happy.

Memory Map

This picture shows my Memory Map, that is, how I have used the available 64 kB (65,536 bytes) of address space for the different memories and I/O. It also shows rather exactly how I have encoded the different Chip Selects (CS). X-TAL (CP) and Reset have however been revised. For CP I use three clocks: one is my "DC-Clock" using an SR-latch with a switch, and the others are a 1 Hz automatic clock using a Schmitt inverter and my naive 1 MHz Schmitt clock. So far I have only tried the other two.

Motherboard

Some kind of motherboard must be used to test a CPU. I have used ordinary LEDs arranged in nibbles, with red for the high nibble and green for the low nibble, to make the hex code easier to read. I also use two external memories, where one is the program memory (ROM) and the other is a RAM (work memory) to temporarily store data. I also use one single address, within an address space of 16 kB, to read and write I/O data. In other words, my motherboard/computer will not have a mouse but will just interact with a keyboard and a display, which at this prototype stage consist only of two HEX-switches as a primitive keyboard and a primitive display where all data is indicated by my LEDs.

First motherboard

This old motherboard is the motherboard of my first CPU, which uses a Xilinx CPLD. It is drawn in great detail because the motherboard is wire-wrapped and there is no help from a CAD program.

New motherboard

This new motherboard is designed for my new Xilinx FPGA version. It is not drawn in as much detail because I plan to use a CAD program, probably not Eagle, which I have used up to now, because my free Eagle cannot handle a PCB as large as I need. My plan is to use KiCAD instead. In practice, the I/O keyboard (KB) and display (DSP) will swap positions, because it is simpler to "hand-clock" if the switch is closest to you.

New Version

I have decided to take this in baby steps. I will only copy my first motherboard and add a possibility to output-disable the EPROMs, with the purpose of perhaps (observe) incorporating the EPROMs inside the Spartan. The I/O possibilities will be omitted and I will just aim at verifying the instructions. If (observe) I manage to verify all 33 instructions, I will design a new motherboard to be able to build my own computer. All signals from the 5 V external memories (and other parts) will have 100 ohm in series to the Spartan (but I am a bit uncertain if this is enough; I think it depends on the current capability of the external ICs).

CPU Test Circuit

This picture shows a test circuit for our CPU. A binary value is set with the two HEX-switches (MSB uppermost). While this is done the value is shown by the leftmost LEDs. When you have set the value you want, you press the momentary switch SW1. The value is then clocked into the LED array second from the left. This value is read by the CPU whenever it has time, and it is presented to the CPU by the HC374, which is a parallel-loadable register (an octal D-type flip/flop). If we call this register IC2, the value in IC2 propagates to the data bus (DB) when Chip Select (CS) and R/W' are high. If R/W' is low, the value to write propagates to the lower register (IC3), which is always enabled since it just listens to the data bus. With the memory map configured as above, we can write and read at the same address (for instance $4000): a read gets the value from the HEX-switches and a write lights the LEDs of our primitive display. This means that no mouse can be used, only a keyboard and some kind of display.

We have also put LED indicators on both the address bus (AB) and the data bus (DB) as well as on CS and R/W' (indicating both high and low for R/W'). To make any use of this you do however have to be an expert on hex code (all LEDs are grouped in fours, using red for the high nibble and green for the low nibble, to simplify somewhat).

There is however an academic problem with how the address is set up in extended addressing mode, because the address is set up one byte at a time. However, the micro instruction finishes after this happens, so only in theory do you get wild addressing where the address is incorrect during one clock cycle.

CPU Adapter

KLD_F is my special kind of PCB adapter for my motherboard. The nice thing with this approach is that you are actually able to use whatever CPU you want (as long as it is 8x16, that is an 8-bit data bus and a 16-bit address bus). All you need is an adapter PCB for your particular CPU. I have designed a PCB adapter for my Spartan FPGA, whose footprint is called PQ208 (i.e. it has 208 pins). The problem is the availability of my Spartan, which is hard to find. Good things change all the time, so the concept of being able to use whatever footprint there is is nice. I am actually planning to design two more adapter PCBs: the first, KLD_D, for processors of the DIL type (such as the MC6809) and the other, KLD_H, for PQ44 (HCS08). There might even be a version for BGA.

Gate Technology

If we count the three-state gate we have seven different kinds of logic gates, and I describe them all below using TTL (Transistor Transistor Logic), although ordinary DTL (Diode Transistor Logic) would have been somewhat more pedagogical. Both the truth table and the gate symbol are shown. All gate symbols follow the European standard.

The NOT gate (Inverter)

This picture shows a NOT gate. When the input is high the output is low and vice versa.

The NAND gate

This picture shows a NAND gate. It is low when all inputs are high but otherwise high. Everything in a computer, except a Hard Drive (HD) or some permanent memory, may be built with NAND gates only!

The OR gate

This picture shows an OR gate. This gate is high when at least one input is high and otherwise low. The symbol >1 is not fully correct because it should read >=1 which however is hard to fit into the symbol.

The AND gate

This picture shows an AND gate. This gate is high when all inputs are high and low otherwise.

The NOR gate

This picture shows a NOR gate. This gate is high when all inputs are low and low otherwise. Everything in a computer, except a Hard Drive (HD) or some permanent memory, may be built with NOR gates only!

The XOR gate

This picture shows an XOR gate. This gate is high when the inputs are high/low or low/high and low otherwise. This means that a high on one input and a low on the other gives a high output, and all other combinations give a low output. I have used the above symbols to display this gate. It would be interesting to see a discrete version.
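
As an illustration of the claim in the NAND section that everything may be built from NAND gates, the following Python sketch builds NOT, AND, OR and XOR from a single `nand` function. The XOR uses the well-known four-NAND arrangement; this is a logic-level sketch, not a transistor-level (discrete) circuit, and the function names are hypothetical.

```python
def nand(a, b):
    """Two-input NAND on the values 0 and 1."""
    return 1 - (a & b)


def not_(a):
    return nand(a, a)                  # both inputs tied together


def and_(a, b):
    return not_(nand(a, b))            # NAND followed by an inverter


def or_(a, b):
    return nand(not_(a), not_(b))      # De Morgan: a OR b = NOT(NOT a AND NOT b)


def xor_(a, b):
    n1 = nand(a, b)                    # shared first gate
    return nand(nand(a, n1), nand(b, n1))   # four NAND gates in total
```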

The Three-State gate

This picture shows the three-state gate. This gate is, like the NOT gate, high when the input is low and vice versa. The signal OE (Output Enable) must however be high for this. When OE is low the output is high impedance, and in this condition another signal may be applied to the output line. This is essential for bus systems like those inside (or outside) a CPU.

Flip-Flop Technology

Below I show how to build flip/flops and some key elements.

Hazard Generators

The picture shows how to generate the hazards used to edge-trigger flip/flops. The hazards are generated based on the propagation delay (tpd) of the gate. The circuit to the left generates a negative hazard when the input signal goes high. With a stable low input the NAND output is high (01 at the NAND pins). When the input goes high, the inverter output stays high for a very short time (tpd), so the NAND inputs are momentarily 11, which gives the hazard. The circuit to the right works in the same way but gives a positive hazard.

The hazard may be too short to, for instance, set a counter at POR (Power On Reset). If the pulse is too short, a capacitor may be fitted to the inverter output to generate a longer hazard. It is also possible to connect an odd number of inverters in series to get a longer hazard.
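The negative hazard of the left circuit can be illustrated with a small discrete-time model. This is only a sketch under simplifying assumptions of my own: time advances in steps of one tpd, the inverter chain delays by `delay` steps, and the NAND gate itself is treated as ideal (no delay).

```python
def negative_hazard(inp, delay=1):
    """Output of NAND(input, delayed NOT(input)) for a list of 0/1 samples.

    delay is the inverter delay in time steps (1 step = 1 tpd); an odd
    number of inverters in series corresponds to a larger delay.
    """
    out = []
    for t, x in enumerate(inp):
        past = inp[max(t - delay, 0)]   # what the inverter chain still "sees"
        inv = 1 - past                  # delayed, inverted input
        out.append(0 if (x and inv) else 1)
    return out

step = [0, 0, 1, 1, 1, 1, 1, 1]
print(negative_hazard(step, delay=1))   # low for one step after the rising edge
print(negative_hazard(step, delay=3))   # three inverters: low for three steps
```

The width of the low pulse equals the inverter delay, which is why a capacitor or extra inverters stretch the hazard.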

Contact Bounce Eliminator (SR-Latch)

This picture shows a pair of cross-coupled NAND gates. Together they form a memory cell in the form of an SR flip/flop (observe that the inputs are inverted). Suppose SW is in the lower position; Q' is then guaranteed high and Q is low because both inputs of the upper NAND gate are high. When SW then switches position, Q goes high and Q' goes low because both inputs of the lower NAND gate are high. While SW is in the air the former state is held (due to S'R'=11), so the state changes only once. This means that contact bounces are eliminated.

This setup is very effective if you wish to connect a switch to a processor. You do not have to use filters or special software routines to filter the input signal. The drawback is that the switch needs to be a double-throw type, which rules out ordinary simple push buttons.
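The debouncing behaviour can be sketched with two NAND functions. The position names and the helper functions below are my own; the mapping assumes, as in the description above, that the lower switch position pulls R' low and the upper position pulls S' low.

```python
def nand(a: int, b: int) -> int:
    return 0 if (a and b) else 1

def settle(s_n, r_n, q, q_n):
    """Iterate the cross-coupled NAND pair until the outputs are stable."""
    for _ in range(4):
        new_q = nand(s_n, q_n)
        new_q_n = nand(r_n, new_q)
        if (new_q, new_q_n) == (q, q_n):
            break
        q, q_n = new_q, new_q_n
    return q, q_n

# Switch position -> (S', R'); "air" means the contact touches neither side.
POSITIONS = {"low": (1, 0), "high": (0, 1), "air": (1, 1)}

def debounce(switch_trace, q=0, q_n=1):
    out = []
    for pos in switch_trace:
        s_n, r_n = POSITIONS[pos]
        q, q_n = settle(s_n, r_n, q, q_n)
        out.append(q)
    return out

# The contact bounces between "high" and "air" before it comes to rest.
bouncy = ["low", "air", "high", "air", "high", "air", "high"]
print(debounce(bouncy))   # Q changes state only once
```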

Edge Triggered D-Type Flip/Flop

This picture shows the architecture of a positive edge-triggered D-type flip/flop. Each time the positive edge of CP arrives (because it generates a hazard as described above) the value of the D input is transferred to the output (Q). You may remove the input inverter to get an SR flip/flop instead. This is the simplest memory element for digital circuits. The 74HC74 is a positive edge-triggered D-type flip/flop and may be designed in this way.

I tried to use this approach with Xilinx's fantastic program for gate CAD (called ECS), but it was unfortunately impossible, so all my SR flip/flops are pre-defined. I do however think that my approach is feasible.

Presetable D-Type Flip/Flop

This picture shows a positive edge-triggered D-type flip/flop with asynchronous Preset and Clear. It is thus possible to force the state of the flip/flop with the aid of R' and P' (active low). These signals must be released high before normal use, but they are a perfect way to "POR" the state of the flip/flop. POR means Power On Reset.

SR Flip/Flop

This picture shows an SR-type flip/flop and its truth table. It is a simplified version of the D-type flip/flop. The values within the truth table should be interpreted as the next state. SR=11 is not allowed.

D Flip/Flop

This picture shows a D-type flip/flop with its truth table. It is the type most frequently used in a CPU.

JK Flip/Flop

This picture shows a JK-type flip/flop and its truth table. The JK flip/flop is an extended version of the SR flip/flop and incorporates feedback. Its advantage over the SR flip/flop is that it is defined for all input combinations: at JK=11 it toggles. It is also available as a packaged IC, in contrast to the SR flip/flop, which I have not found.

T Flip/Flop

This picture shows a T flip/flop and its truth table. The T flip/flop divides the clock frequency (CP) by two while T is held high. When T is low, nothing happens. The T input is in fact the J and K inputs shorted together, and it controls whether the outputs should toggle or not. There is a simpler way to divide CP, which I show below.
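The four truth tables can be summarized as next-state functions, evaluated once per active clock edge. This is a sketch with function names of my own, based only on the behaviour described above (SR=11 forbidden, JK=11 toggles, T is J and K tied together).

```python
def sr_next(q: int, s: int, r: int) -> int:
    if s and r:
        raise ValueError("SR=11 is not allowed")
    if s:
        return 1
    if r:
        return 0
    return q                      # SR=00 holds the state

def d_next(q: int, d: int) -> int:
    return d                      # Q simply follows D at the clock edge

def jk_next(q: int, j: int, k: int) -> int:
    # Q+ = J*Q' + K'*Q, so JK=11 toggles instead of being forbidden
    return int((j and not q) or ((not k) and q))

def t_next(q: int, t: int) -> int:
    return jk_next(q, t, t)       # T is the shorted J and K inputs

q = 0
for _ in range(4):                # T held high: Q toggles on every clock edge
    q = t_next(q, 1)
    print(q)
```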

Frequency Divider

This picture shows how the clock frequency (CP) may be divided by two. It is built around a D-type flip/flop. The depicted flip/flop changes state each time CP goes high. This happens because Q' has been connected to the D input, so if Q is 1, D is presented with 0, which makes Q go low at the next positive edge of CP. Two positive edges of CP are thus needed for one period of Q, which gives half the CP frequency.
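A short simulation makes the halving visible. This is a minimal sketch with names of my own, in which the clock is given as a list of samples and the flip/flop reacts only to a 0-to-1 transition.

```python
def divide_by_two(clock, q=0):
    """D flip/flop with D wired to Q'; returns the Q trace for the clock samples."""
    trace = []
    previous = 0
    for cp in clock:
        if previous == 0 and cp == 1:   # positive edge of CP
            d = 1 - q                   # D is connected to Q'
            q = d
        previous = cp
        trace.append(q)
    return trace

cp = [0, 1, 0, 1, 0, 1, 0, 1]
print(cp)
print(divide_by_two(cp))   # one period of Q spans two periods of CP
```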

RAM Memory

This picture shows how a single cell in a RAM memory may be built. One bit of information is stored with the aid of an ordinary flip/flop (in this case a D-type flip/flop). This makes the memory very fast, but it also loses its information when the supply is removed. The cell is selected by the signal Address; data is read if R/W' (Read/Write) is high and written if R/W' is low. The whole memory cell is not shown: a couple of three-state gates are also needed because data in and data out share the same bus.

Miscellaneous Gate Technology

Here I show what you can do with different gate configurations.

Mono stable multivibrator

This picture shows a mono stable multivibrator. The word mono states that there is only one stable state. This circuit is triggered by a negative flank on the trigger input B' which makes the output Q go high for

seconds, which of course only is valid for the 4538 but the principle is the same for all circuits.

Astable multivibrator

This picture shows an astable multivibrator. It has no stable state so it changes state all the time. Usually I design my astable multivibrators with the aid of a single Schmitt Inverter (normally HC14). It can be shown that the frequency for this circuit is

but for a single 4093 Schmitt NAND I have calculated

when driven with Vdd=5V where the supply (Vdd) is crucial for exact frequency.

Multiplexer

This picture shows a multiplexer (MUX). The rings mean inversion, & means AND gate and >1 means OR gate. With the aid of the control signals ABC it is possible to select which input is active and propagates to the output. If the control signal for instance is 110 binary, input 6 is active. The 74HC251 is an 8-channel multiplexer.

Decoder

The picture shows a decoder or demultiplexer. It is built around a number of AND gates and a number of inverters. The decoder realizes all combinations of the input signal. If for instance ABC is 011, the AND gate number 3 goes high. 74HC138 is a 3-To-8 Line Decoder.
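The decoder and the multiplexer are closely related, which the following sketch tries to show. The function names are my own, and I assume that A is the most significant control bit, so that ABC=011 selects line 3 and ABC=110 selects input 6.

```python
def decode(a: int, b: int, c: int) -> list:
    """3-to-8 decoder: one AND gate per line, inverters where a 0 is wanted."""
    bits = (a, b, c)
    outputs = []
    for n in range(8):
        wanted = ((n >> 2) & 1, (n >> 1) & 1, n & 1)
        outputs.append(int(all(x == w for x, w in zip(bits, wanted))))
    return outputs

def mux(inputs, a: int, b: int, c: int) -> int:
    """8-channel MUX: decoder lines gate the inputs (AND), then one wide OR."""
    select = decode(a, b, c)
    return int(any(s and i for s, i in zip(select, inputs)))

print(decode(0, 1, 1))                          # only line 3 is high
print(mux([0, 0, 0, 0, 0, 0, 1, 0], 1, 1, 0))   # input 6 reaches the output
```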

Comparators

The need to compare two numbers is common in a CPU. If we suppose X=<x1, x2,...,xn> and Y=<y1, y2,...,yn>, the goal is to indicate either X=Y or one of the cases X>=Y, X>Y, X<=Y. We then note that X>=Y is the inversion of X<Y. Due to this reasoning we only have to consider two cases (since the variables also may be interchanged).

and

Basic Comparator

This picture shows a basic comparator, where xi=yi is realized with a simple XOR gate and an inverter. The output is then high when both signals are high or both are low, thus when they are equal.

X=Y

This picture shows a 4-bit realization of a comparator for X=Y. If the bits of any pair differ, their XOR gate generates a high signal. When comparing numbers it is thus enough to check whether any XOR gate has gone high, because this implies unequal numbers. If any XOR gate is high the NOR gate goes low, so a high NOR output means equality.

X<Y

This picture shows a comparator for X<Y. We start with the most significant position and observe that if x1'y1=1 then immediately X<Y. If instead w2=x1'+y1=1 (which means the combinations x1y1=00, 01 and 11, thus equal or less) and x2'y2=1, we also have X<Y. The signal wi thus means that the next position also has to be checked for xi<yi.
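Both comparators can be tested exhaustively for 4 bits. The sketch below follows the description above (XOR gates into a NOR for X=Y, and the chained terms x1'y1, w2·x2'y2, ... for X<Y); the function names and the bit-list representation with the most significant bit first are my own choices.

```python
def to_bits(n: int, width: int = 4) -> list:
    """Most significant bit first, so index 0 corresponds to x1."""
    return [(n >> (width - 1 - i)) & 1 for i in range(width)]

def equal(x, y) -> int:
    xors = [a ^ b for a, b in zip(x, y)]   # high where a bit pair differs
    return int(not any(xors))              # NOR of all XOR outputs

def less_than(x, y) -> int:
    w = 1          # product of all earlier w_i = x_i' + y_i
    result = 0
    for xi, yi in zip(x, y):
        result |= w & (1 - xi) & yi        # term w * x_i' * y_i
        w &= (1 - xi) | yi                 # pass on only if x_i <= y_i
    return result

print(equal(to_bits(9), to_bits(9)), less_than(to_bits(5), to_bits(9)))
```

Using "equal or less" for wi is enough because a strictly smaller bit at a higher position has already set the result.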

ALU Components

ALU stands for "Arithmetic Logic Unit" and is the part of a CPU which executes arithmetic calculations, especially addition and subtraction.

Basic Adder

This picture shows a basic adder in the form of a single XOR gate. The sum bit of two one-bit numbers is complete after passing through the XOR gate. Two things are however missing. The first is carry generation, a kind of overflow that occurs when both bits are high. It may be produced by an AND gate working on the same two bits, so that 1+1=0 with 1 as carry (due to the AND gate) for the next position. The second is carry handling: a full adder (FA) also takes in the carry generated by the lower bit addition and transfers its own carry to the next cell. I see it rather simply: if the lowest bits are both high, the bit-wise sum is zero but with a carry. That carry is added at the next higher position. If both bits there are high the position yields 10 binary (i.e. 2), and if a carry also has to be added the result is 11 binary (i.e. 3), because the bit-wise sum (including carry) is now 1. I'm a bit uncertain here, but this works. Perhaps you may see it as each addition actually being an XOR operation: if both bits are high their XOR gives a low output, and putting the carry and this output into a new XOR makes the signal high.
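The reasoning above can be checked with a small model. This is a sketch, not the author's schematic: the function names are mine, and the full adder is built in the common way from two basic adders (XOR plus AND) and an OR gate for the carry.

```python
def half_adder(x: int, y: int):
    """Basic adder: XOR gives the sum bit, AND gives the carry."""
    return x ^ y, x & y

def full_adder(x: int, y: int, c_in: int):
    s1, c1 = half_adder(x, y)       # add the two bits
    s, c2 = half_adder(s1, c_in)    # then add the incoming carry
    return s, c1 | c2               # at most one of c1, c2 can be high

def ripple_add(a: int, b: int, width: int = 8):
    """Chain full adders from bit 0 upwards; returns (sum, carry out)."""
    carry, result = 0, 0
    for i in range(width):
        s, carry = full_adder((a >> i) & 1, (b >> i) & 1, carry)
        result |= s << i
    return result, carry

print(full_adder(1, 1, 0))   # 1+1   = 10 binary: sum 0, carry 1
print(full_adder(1, 1, 1))   # 1+1+1 = 11 binary: sum 1, carry 1
print(ripple_add(0xFE, 0x03))
```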

Full Adder (FA)

I have described this above but show it here for continuity. Since I have already described it, I just give you the formulas for the sum and the carry generation (these signals come from the picture using the Karnaugh diagrams)

and

Full Subtractor (FS)

This picture shows a full subtractor (FS), its theory and realization. The numbers are supposed to be positive. If the numbers are

Y=<y1, y2,...,yn>

and

X=<x1, x2,...,xn>

Y is subtracted from X (D=X-Y).

I will not dig deeply into this because subtraction is normally done by adding the two's complement. The principle is however "clearly" shown in my picture, but I won't describe it because my English skills are not that good. I will however emphasize that the result is in two's complement and show you the formulas.

and

Permanent Memory (ROM)

A CPU needs to have some kind of permanent memory (ROM, Read Only Memory) from which it can fetch instructions. This ROM does not need to be programmable more than once. I use 6 EPROMs (27C512) for my instruction register (IR) and one for the program memory. Since they are EPROMs I can reprogram them if I want, which is what I need because I'm not so skilled.

Basic ROM

This picture shows a basic permanent memory (ROM) realized with the aid of AND gates and OR gates. X indicates the three-bit wide address bus, W indicates the word line that is selected, and b indicates the bits and thus the data bus. If the address is 010 (w2) the data is 0110.

ROM in NAND-NAND structure

This picture shows a ROM which may be called MROM, as in Mechanically Programmable Read Only Memory. Here diodes are used in the positions you want to set high. If the address again is 010, W2 is low and all diodes connected to W2 pull the inputs of their output inverters low, making those outputs high. We thus have the same situation as above, but here we can set whatever data we want using diodes. An 8-bit data output (bus) however requires a maximum of 256 diodes. So this type of ROM only suffices for narrow buses (or if data most frequently is low), but it works!

Programmable Logic Array (PLA)

This picture shows a PLA, which is a programmable permanent memory or PROM (Programmable Read Only Memory). The programming is very simple because diode functions are used in both the AND part and the OR part. For very small memories it is possible to program with diodes as shown. If we look at w0 we see that x1'x3=1 selects it as high; b1 and b2 are then high in the OR part, which only means that the data output is "OR-able" using diodes.

I think PLA is a smoother name for all types of programmable circuits such as CPLD and FPGA.

Implementation

An asymmetric 8x16-bit architecture (asymmetric meaning that the data bus and the address bus have different widths, here 8 and 16 bits) is considered optimal because programs can be written in a clear way (read two hexadecimal symbols for data and four for address) and it is easy to obtain peripheral circuits such as EPROM and RAM. There is also nothing preventing one from expanding to another asymmetric architecture such as 16x32. The only obstacle may be peripheral circuits. In any case, one can go far with the chosen architecture, typically a program of 32kB, which is more than just a demo. The only problem with an asymmetric architecture is that the processor becomes slightly more complex than necessary.

Advanced Architecture

This picture shows my first attempt at designing a CPU. This CPU also has an index register (X-Reg) implemented. Personally I can not see the real point of using an index register, so it has been removed in the simpler CPU below. Interestingly, an index register was not used until after 1949 (according to Wikipedia). The accumulator A (ACC_R + ACC_L) has however been implemented accurately according to my current knowledge. As far as I know an accumulator is three things: a parallel loadable register, a shift register and intermediate storage of data. The shift register function is used for multiplication using LSL (Logical Shift Left) or division using LSR (Logical Shift Right), where only multiplication and division by powers of two are possible (one shift to the left means a multiplication by two and one shift to the right means a division by two). MUL and DIV are thus realized in hardware in a normal CPU. The storing function using LDA (Load Accumulator A) is the most common; when the value needs to be written to RAM or I/O you run STA (Store Accumulator A).

Simple Architecture

In this simpler (and used) architecture we have removed the index register, removed the possibility to clear carry (C_CL), simplified the accumulator A to just two parallel loadable shift registers (SR_R and SR_L) and also simplified the stack pointer register (SP). The point of removing C_CL is that there will be two types of addition instructions. One is the normal ADD, which adds two integers disregarding carry (which is stored in CCR). The other is ADC (Add with Carry), which takes the carry of the former addition into account. So if you for instance add PC_LB to a relative (two's complement) branch offset, as in BEQ, and get a carry out, you can then add 0+C+PC_HB (by setting ADD_00+ADC) and get the new PC_HB out. The zero comes from the fact that the addition must be done relative to something (there are two "sides" of the adder). As a consequence of our simplification the simple instructions INCA (Increment accumulator A) and DECA (Decrement accumulator A) must be done using the full adder (FA). At micro instruction level this could go as follows. First we load accumulator A with, for instance, the value $FE. The value is then put onto the ALU bus (and is reachable on top of FA) with the aid of the signal ALU. Then ADD_01 (on the bottom of FA) is set high (and ADC is set low), which means that $01 is added to the value on the ALU bus (thus the value within accumulator A). At the next clock pulse (CP) accumulator A is loaded with the incremented value (here LDA is high and the result of the FA operation is in theory on the D-bus; an additional CP is required due to LD_FA). If we instead wish to decrement we can do it in a similar way but with ADD_FF (-1) instead. This results in a slower CPU, but the goal is to create a functioning system rather than a fast one.
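The INCA/DECA micro instruction sequence can be mimicked in a few lines. This is only a behavioural sketch: the constants mirror the signal names ADD_01 and ADD_FF from the text, while the function names and the way the buses are modelled as plain variables are my own simplifications.

```python
ADD_01 = 0x01   # constant placed on the bottom of FA for increment
ADD_FF = 0xFF   # -1 in two's complement, used for decrement

def fa_8bit(top: int, bottom: int, carry_in: int = 0) -> int:
    """8-bit full adder result; the carry out is simply dropped here."""
    return (top + bottom + carry_in) & 0xFF

def inca(acc: int) -> int:
    alu_bus = acc                    # signal ALU: accumulator onto the ALU bus
    d_bus = fa_8bit(alu_bus, ADD_01) # ADD_01 high, ADC low
    return d_bus                     # signal LDA: accumulator loaded from D-bus

def deca(acc: int) -> int:
    alu_bus = acc
    d_bus = fa_8bit(alu_bus, ADD_FF) # adding $FF equals subtracting 1
    return d_bus

print(hex(inca(0xFE)), hex(deca(0xFE)))
```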

CPU Rambling

Below I will ramble about how to design a CPU. I will use the current pictures even though they may not be relevant, some pictures I do however plan to change. The rambling will not be that accurate but only a translation of my Swedish book and thus perhaps of some fundamental theoretical use.

Architecture Rambling

When studying the simpler architecture you see that the E-clock is wrongly generated. It is only supposed to be delayed enough for data to be valid on the data bus (or output) after the memory has been addressed (this is called access time). Given how fast a 74HC is, four inverters in a row (typically 80ns tpd) are not enough to be able to use older memories (typically 150ns for a 27C256-15 EPROM). The solution could either be to let the E-clock be the (symmetrical) inversion of the CP-clock, which makes 3MHz the maximum frequency for the chosen EPROMs, or to let the E-clock be generated externally.

Here I add that the maximum frequency for the Spartan CPU depends on how the full adder (FA) is designed. Using no carry acceleration and only 8 bits of data, the propagation delay for the FA will be 16*tpd, and the Spartan has a tpd of only 4ns. If we use the trick of inverting the CP-clock to get the E-clock, the CP period has to be twice that, 32*tpd, yielding a maximum CP-clock of 1/(32*4ns)=8MHz. The chosen EPROMs limit it further, down to 3MHz (1/(2*150ns)). This is however mainly with regard to the external instruction register (6pcs of 27C512), which is planned to become integrated in the Spartan. As long as the external program memory is of the same type, maximum CP is 3MHz. The conclusion is that even if I use the fastest possible memories the maximum frequency is still only 8MHz (I see in my data sheet that the 27C512 is actually manufactured down to 100ns, but I believe there are even faster versions).
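The clock limits above are simple arithmetic and can be recomputed as follows. The helper name is mine, and the model only encodes the assumption stated in the text: with the E-clock as inverted CP, the slowest delay must fit within half a CP period.

```python
def max_clock_hz(delay_s: float) -> float:
    """Highest CP frequency when the given delay must fit in half a period."""
    return 1.0 / (2.0 * delay_s)

T_PD = 4e-9                 # Spartan gate delay quoted in the text
FA_DELAY = 16 * T_PD        # 8-bit full adder without carry acceleration

limits = {
    "full adder (16*tpd)": max_clock_hz(FA_DELAY),
    "EPROM, 150 ns access": max_clock_hz(150e-9),
    "EPROM, 100 ns access": max_clock_hz(100e-9),
}
for name, f in limits.items():
    print(f"{name}: {f / 1e6:.2f} MHz")

print(f"limiting value: {min(limits.values()) / 1e6:.2f} MHz")
```

The exact figures are about 7.8MHz for the adder and 3.3MHz for the 150ns EPROM, which the text rounds to 8MHz and 3MHz.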

The Condition Code Register (CCR) signals how an arithmetic operation has gone. It indicates with so-called flags whether the answer is zero (Z=1), negative (N=1), too large (C=1) or overflowed (V=1). The V-flag is the most cryptic since it handles the special case where both operands are positive but the answer is still negative (due to two's complement). Imagine for instance the number range -128 to +127 for a byte: if 30 is added to 100 the result is an overflow. In the beginning it was hard for me to understand two's complement, but nowadays I find it rather simple. The instruction NEGA for instance inverts the number in the accumulator and adds one, which gives us the two's complement. Except for overflow the number will be correct in both positive and negative form. The value of the number just depends on how you see it. Add for instance 2 (0010) to C (1100), which is -4 or 12. The answer is E, which is -2 or 14.
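The flag behaviour can be tried out with an 8-bit model. This is a sketch of the usual flag definitions, not a description of the actual CCR hardware: the function names are mine, and the V rule used here (both operands have the same sign but the result has the other sign) is the standard two's complement overflow test.

```python
def add8(a: int, b: int, carry_in: int = 0):
    """8-bit addition returning (result, flags)."""
    total = a + b + carry_in
    result = total & 0xFF
    flags = {
        "Z": int(result == 0),
        "N": result >> 7,                       # bit 7 is the sign bit
        "C": total >> 8,                        # carry out of bit 7
        "V": int(((a ^ result) & (b ^ result) & 0x80) != 0),
    }
    return result, flags

def nega(a: int) -> int:
    """Two's complement: invert all bits and add one."""
    return add8(a ^ 0xFF, 0x01)[0]

def as_signed(x: int) -> int:
    return x - 256 if x & 0x80 else x

print(add8(100, 30))            # 130 does not fit in -128..+127, so V=1
result, _ = add8(0x02, 0xFC)    # 8-bit version of 2 + (-4)
print(hex(result), as_signed(result))
print(as_signed(nega(5)))
```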

I also use an H-flag, but it isn't a real half-carry flag (which perhaps may be called a "nibble flag"). Instead it sniffs the polarity of the branch offset (which is always injected on top of FA). H is thus bit 7 (or a7) of the offset, telling us whether we should increment (a7=0) or decrement (a7=1) the high byte of the program counter (PC). Incrementing is done by putting PCHB on top of FA and adding 00h (ADD_00) with carry (from the former LB+offset addition). Here a7=0 indicates a positive offset. If a7 is set (offset negative) we add PCHB with carry and FFh (ADD_FF), making PCHB decrement one step.

I don't know if this works, but we can look at a couple of examples (using 4 bits only). If the offset is 3 and the incoming LB (LB') is 6, the new LB follows by simple addition, which gives 9 (or -7). Here a3=0, so it is a positive addition, and the new HB is HB+C+00 (where C comes from the addition of LB and offset). If we have PC'=0010 0110 (HBLB) and 3 is added to LB', LB becomes 1001. There is no carry (the sum does not exceed 15), so PC should become 0010 1001. We then have PC'=26h and PC=29h, and thus +3 due to the offset! If the offset is 8 (or -8) and LB' is 2, LB becomes 10 (or -6). Here a3=1, so it is a negative addition; HB' will then have to decrease, and the new HB is HB+C+FF, making HB' decrease one step (from for instance 2 to 1). It seems to me that when a3 is high, HB is always decreased one step. But say that you have PC'=0010 0010 (HBLB) and the offset is 1000: offset + LB' will be 1010 (-6), and if we now decrement HB (C=0) we wind up with 0001, so the new HBLB becomes PC=0001 1010, even though the low nibble on its own reads -6. The original HBLB is 22h (34), the new HBLB is 1Ah (26), and the difference 34-26 is actually 8! I am however a bit uncertain about the use of carry (C), but if you wish to add two numbers carry always has to be there; adding for instance Fh and 2h gives the result 1 + carry, where the carry needs to be taken care of.
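The two-step branch addition can be verified against ordinary signed arithmetic. The sketch below is my own model of the scheme described above, with hypothetical names: the low half is added to the offset first, and the high half is then added to the carry plus either 00 or FF depending on the sign bit of the offset (the H-flag).

```python
def branch(pc: int, offset: int, bits: int = 4) -> int:
    """New PC after a relative branch; pc is 2*bits wide, offset is bits wide."""
    mask = (1 << bits) - 1
    hb, lb = pc >> bits, pc & mask
    total = lb + (offset & mask)            # first pass through FA: LB + offset
    new_lb, carry = total & mask, total >> bits
    h = (offset >> (bits - 1)) & 1          # H-flag: sign bit of the offset
    add_const = mask if h else 0            # ADD_FF or ADD_00
    new_hb = (hb + carry + add_const) & mask  # second pass: HB + C + constant
    return (new_hb << bits) | new_lb

print(hex(branch(0x26, 0x3)))   # 26h + 3
print(hex(branch(0x22, 0x8)))   # 22h - 8
print(hex(branch(0x2F, 0xF)))   # 2Fh - 1: here the carry cancels the FF
```

In the last example a3 is high but HB is unchanged, because the carry from the low half and the added FF cancel each other. The carry is therefore essential for negative offsets as well.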

The Stack Pointer register (SP) is a parallel loadable up/down counter. It is preliminarily initialized to 00FF at RESET/POR. Its use is necessary for subroutines (JSR) and stack operations (PSHA). It waits for some kind of push/pull instruction like JSR (Jump to Subroutine), which initially stores the low byte of the return address (PC_LB) at address 00FF and PC_HB at 00FE.
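The push order described above can be modelled as follows. This is a sketch under assumptions of my own: SP is decremented after each push (which matches PC_LB landing at 00FF and PC_HB at 00FE), and the return path `rts` is a hypothetical mirror image that the text does not describe.

```python
class Stack:
    def __init__(self):
        self.ram = {}          # address -> byte, stands in for external RAM
        self.sp = 0x00FF       # value loaded at RESET/POR

    def push(self, byte: int) -> None:
        self.ram[self.sp] = byte & 0xFF
        self.sp = (self.sp - 1) & 0xFFFF   # count down after storing

    def pull(self) -> int:
        self.sp = (self.sp + 1) & 0xFFFF   # count up before reading
        return self.ram[self.sp]

def jsr(stack: Stack, return_address: int) -> None:
    stack.push(return_address & 0xFF)      # PC_LB ends up at 00FF
    stack.push(return_address >> 8)        # PC_HB ends up at 00FE

def rts(stack: Stack) -> int:
    hb = stack.pull()                      # assumed: pulled in reverse order
    lb = stack.pull()
    return (hb << 8) | lb

s = Stack()
jsr(s, 0x1234)
print({hex(k): hex(v) for k, v in s.ram.items()}, hex(s.sp))
print(hex(rts(s)), hex(s.sp))
```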

The Accumulator (SR_R+SR_L or AC) consists of two parallel loadable shift registers. It is divided in two parts to be able to shift to the right (division by powers of two) and to the left (multiplication by powers of two). A true accumulator should also be able to INC/DEC, which implies an up/down counting feature as well. Preliminarily we however solve INC/DEC by using the Full Adder (FA) instead. Since shifting in both directions is wanted, the accumulator must (in my world) consist of two separate shift registers. A memory function (L/R'+LD_D) has been implemented to remember which unit was used. There might be shift registers out there which can shift in both directions; I have however not found any. Finally, I think shifting of the accumulator value is not so important because the "accuracy" is bad; I just want the feature in my LSHR/LSHL instructions. With the aid of the signal STA the accumulator value is put onto the internal data bus (D), and with the aid of the signal ALU the value is put onto the ALU bus (A). All arithmetic and logical (AND, OR, XOR) operations thus have a special internal bus controlled by the accumulator. The logic function INV (or NOT) is hardwired to belong to the Full Adder (FA).

The Program Counter (PC) consists of a parallel loadable up-counter. It has been given two separate loading registers for the HB (high byte) and LB (low byte) of the new PC address. It also has two output three-state buffers which put the chosen PC byte onto the internal D-bus (they have to be in three-state when the D-bus is used for other things); this is used for calculations of branch jumps such as BEQ. After PC comes the address register (AR), which also consists of two bytes. These registers are loadable with HB and LB separately (because we use an asymmetric architecture). There is also a control signal (EXT as in extended) which means that the address bus (AB) temporarily may be taken over by an instruction such as STA $AAFF (as in STore accumulator A), which writes the value in A to that address in RAM (or I/O) and returns the control of the address bus to PC afterwards.

The Data Bus (DB) holds no registers. I think they are not needed, and a register would even destroy the data flow, since data is only available after t_acc (access time), that is, after the memory has been addressed; this moment is also called DAV (as in data valid). A register would mean another clock cycle to be waited out. Imagine now that the memory address has been clocked out on the positive edge of CP (Clock Pulse). At the same time we have an instruction register (IR) working with the E-clock (which is supposed to be a clock with its positive edge delayed at least t_acc, or CP/2 in my case). At the E-clock we may then read data securely (as long as CP/2>t_acc). As an example, PC is first stepped up, AR is then loaded with the new address (one clock cycle delayed) and the memory is addressed. After this CP edge there is a delay of t_acc before DAV, but the E-clock for the instruction register waits CP/2 before reading the data value.

The Instruction Register (IR) consists of four parts. These are two parallel loadable registers (IRR+BR), one counter (IRC) and a ROM memory where the chosen instructions are realized in the form of micro instructions. Into the Instruction Register Register (IRR) come all instructions in the form of an OP-code, which it always recognizes. One can wonder why, but it simply has to do with the start of the Von Neumann machine (i.e. a machine that executes instructions in sequence), where Reset must succeed and the OP-code in the program memory must fit the list of OP-codes in IR, besides the fact that you also must program correctly with the right number of operands for the OP-code. Our processor uses three different numbers of operands: 0, 1 and 2. An OP-code + operand makes an instruction. The OP-code is then part of the address for the Instruction Register (IR), in this case the high byte of the ROM. Into the Branch Register (BR) the N and Z flags are loaded at a branch instruction (by Branch as micro code), which becomes the next part of the IR address. With the way we have solved it, these inputs are guaranteed to be zero after Reset and then get their value from the NZ-flags whenever IR detects a branch. The NZ-flags are then reset when a branch has finished (by Ready as micro code). This way BR is always 00 as default. Now, if there is a branch, four combinations of NZ are possible and we need to take care of them all, except that NZ=11 can't happen because a number can't be both negative and zero (perhaps this must be taken care of too?). The Instruction Register Counter (IRC) has 16 steps. It steps through the sequential micro instructions by activating different signals such as LDA in my schematic above. All this is then (preliminarily) programmed in 6pcs of EPROM (here called ROM). As far as I understand these EPROMs can however be implemented in my Spartan FPGA, which also is my plan, but I will take it in baby steps.

Microinstruction Rambling

Here, there has been a struggle and it is probably far from correct. However, what strikes you when attempting to microprogram is that the instruction depth becomes quite large, meaning that all instructions require many more clock cycles than HCS08. For example, EOR $ requires fifteen clock cycles, while HCS08 solves it in four. We solve NEGA in 3 while HCS08 only needs 1, etc. So, we are building a slow processor. But it feels like it should be able to function and accomplish what we want. Mostly, the author hopes that we can program these micro-instructions in the CPLD so that we don't need an external 41-bit memory. It was terrible how the complexity skyrocketed when we decided to build something useful.

What worries the author again is the timing. We don't have sufficient control over what happens when the fetch instruction arrives. As it is now, the always-ending micro-instruction AR, which updates the address register, is supposed to move PC to the next instruction while enabling the reading of that instruction. But what about the clocking? If Ready finishes the realization of the instruction, can it also be made to load the next instruction (because if we do it correctly, there will ALWAYS be a new instruction waiting when Ready arrives)? We have the synchronization where the E-clock goes high in the middle of CP, which seems to give us the nice function that we can do two things at the same time since we can enable OE and clock in the result simultaneously because OE has time to put out its signals before CP comes half a clock cycle later. However, the author is very unsure about this.

One way to speed up the processor would be to add a temp register that is only used internally and that can output data on both the ALU bus and D bus. One problem is that we cannot change the address register (AR) until both bytes are fetched (in e.g. an ORA $ instruction). Therefore, we have needed to store HB in A, which has resulted in A having to be pushed away on the stack before storage and then pulled back when we have fetched the value and can perform the operation. With a temp register, we could speed up the processor by at least 30%. Another way to speed it up would be to make it possible to reach the "top" of the adder (FA) by introducing a couple of three-state registers. Always going through FA also gives us the bonus feature that all numbers can be CCR-checked (Z=1 for zero, etc.).

The branches have been a bit special to implement. When any of the four selected branch opcodes are loaded into the instruction register (IR), the flags N and Z will always be loaded immediately as Branch goes high. Therefore, we seemingly have four different states to decode. However, NZ=11 is not valid because N works on b7 and Z is NOR on all bits, so the number cannot be both negative and zero, which is why NZ=11 is ruled out. We are left with NZ=00, 01, and 10. NZ=00 means that the number is not negative and not zero, i.e., >0. NZ=01 means that the number is zero, and NZ=10 means that the number is <0. As an example, for BPL, we should perform the branch when NZ is 00, but exit and generate Ready for the other two cases. However, for BNE, we must perform the branch for two combinations (00 and 10) and exit only for 01.
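The branch decoding can be written as a small lookup table keyed on the NZ pair. This is a sketch: the BPL and BNE entries follow the text above, while the BEQ and BMI entries are my own assumption about the other two branch opcodes, which the text does not spell out.

```python
# Mnemonic -> set of (N, Z) combinations for which the branch is performed.
BRANCH_TAKEN = {
    "BPL": {(0, 0)},            # from the text: only NZ=00
    "BNE": {(0, 0), (1, 0)},    # from the text: everything except NZ=01
    "BEQ": {(0, 1)},            # assumption: branch on zero
    "BMI": {(1, 0)},            # assumption: branch on negative
}

def nz_flags(value: int):
    n = (value >> 7) & 1                 # N works on b7
    z = int((value & 0xFF) == 0)         # Z is the NOR of all bits
    return n, z

def take_branch(mnemonic: str, value: int) -> bool:
    """True when the branch is performed, False when Ready is generated."""
    return nz_flags(value) in BRANCH_TAKEN[mnemonic]

for value in (0x05, 0x00, 0x80):
    print(hex(value), nz_flags(value),
          {m: take_branch(m, value) for m in BRANCH_TAKEN})
```

Since N and Z are derived from the same byte, the combination NZ=11 never appears as a key.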

We will now try to explain some of the notes. AR means, as mentioned, that the address register is updated (with LD_AR_HB and LD_AR_LB). This must always be done when switching from Extended addressing (EXT) or stepped PC (EN_PC). We have assumed that the opcode is loaded correctly, so we always (except in the Inherent case) need to step forward to the first operand (PC+1, AR). We would like to clarify that our program counter (PC) always steps one byte at a time, and according to the memory configuration above, we have the convention that the highest address is downward. Therefore, PC always steps from a low address and downwards in the figure (although we always count up). After a reset, it starts with the first opcode, and in the most extreme case, it needs to step two more bytes to read all the operands. The storage in the PROM is, in accordance with PC's stepping direction, opcode (1 byte), operand 1 (1 byte), and operand 2 (1 byte), where operand 1 is always the high byte (HB) and operand 2 the low byte (LB). When allocation has been made from the outside, it has been indicated by a right arrow. Internal allocation, such as storage in the accumulator, has always been indicated by a left arrow. Anything that may be on the data bus has been labeled M (as in Memory).

MUL/DIV Rambling

I have not HW-implemented the instructions Multiply (MUL) or Divide (DIV), so my CPU can only add and subtract (using two's complement). I have chosen not to do this because it is rather complicated. A true CPU does however have to realize these instructions, and I have thought rather a lot about this. Right now I think that it may be done in another way.

Consider that numbers in exponential form may be multiplied by only adding the exponents; for division you just subtract the exponents. So if a number can be represented in exponential form it is easy to MUL/DIV.

Here I also have the idea that my 8 bits can only represent around +/-128, BUT if the byte is interpreted as an exponent, numbers as large as +/-E38 may be represented. The problem with my idea (which does not use a mantissa) is the resolution, because adjacent numbers will differ by a factor of 2 when my representation is planned to be

number = 2^bin

that is, without a mantissa in the CPU.

If bin is 128 the number is around E38 but the next smaller number is half of that (bin-1).

I have calculated that this may be useful. Let's say that you wish to represent 3: the closest 2^bin value is either 4 (bin=2) or 2 (bin=1), which yields these discrepancies

(4-3)/3 = +33%

or

(2-3)/3 = -33%

If we instead want to represent 48 (in the middle between 64 and 32), the closest bin is either 6 or 5, so the discrepancies are still +33%/-33% at most.
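The +33%/-33% figures can be checked numerically. The Python sketch below is only an illustration; the function names `bracketing_exponents` and `relative_error` are hypothetical helpers and have nothing to do with the CPU itself.

```python
import math

def bracketing_exponents(value):
    """Return the two integer exponents whose powers of two bracket value."""
    low = math.floor(math.log2(value))
    return low, low + 1

def relative_error(value, bin_exponent):
    """Relative error made when value is replaced by 2**bin_exponent."""
    return (2 ** bin_exponent - value) / value

# The two examples from the text: 3 and 48.
for value in (3, 48):
    low, high = bracketing_exponents(value)
    print(value, low, relative_error(value, low), high, relative_error(value, high))
```

Both 3 and 48 sit halfway between two powers of two on a linear scale, which is why they give the same relative error in both directions.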

For physics with rather large or small numbers it works perfectly. I, for instance, often add/subtract exponents and round the numbers to half a decade (3,16), so +/-33% is close to what I think is necessary (as long as we are not talking about private economics).

The problem is to convert the numbers before they are put into the CPU. This must be done manually to yield some kind of precision. My first formula

may be rearranged as

or

which means that the bin-value to put into the CPU is the one depicted, then MUL is done by easy addition and DIV is done by easy subtraction so that MUL/DIV does not have to be hardware implemented, at least that is my idea.

Of course, the output must be converted too, but you just have to raise two to the power of the output to get the number.

Using an ordinary 8-bit number in the CPU, it is possible to multiply and divide by simply shifting. In this case the 8-bit number is in the +128/-128 range only.

Shifting one step left means a multiplication by two, shifting one step right means a division by two; each shift corresponds to one power of two, so two shifts to the left make a multiplication by four, and so on.

Since the number range using only 8 bits is so small, this is rather academic regarding usefulness.

Still, the worst-case discrepancy arises if you, for instance, wish to MUL/DIV by 3, where the closest possibility is 4 or 2; the discrepancy is then +/-33% at most.
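Shifting as multiplication and division can be sketched in a few lines of Python. This is a minimal illustration that, as an assumption, treats the byte as unsigned and simply drops bits that leave the 8-bit range; `shift_left` and `shift_right` are hypothetical names.

```python
def shift_left(value, steps):
    """Multiply an 8-bit value by 2**steps; bits shifted out of the byte are lost."""
    return (value << steps) & 0xFF

def shift_right(value, steps):
    """Divide an unsigned 8-bit value by 2**steps, discarding the remainder."""
    return (value & 0xFF) >> steps

print(shift_left(5, 2))    # 5 * 4
print(shift_right(20, 1))  # 20 / 2
# Multiplying 5 by 3 is not possible with shifts alone; the nearest results
# are 5 * 2 and 5 * 4, which bracket the wanted 15.
print(shift_left(5, 1), shift_left(5, 2))
```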

I have come to the conclusion that my idea simply doesn't work. If we skip the mantissa of the decade number the exponent of the number will have to be multiplied with 1/log2 (3,32) and this number is impossible to hit with shifting (you'll get 2 or 4). Using linear representation (without a binary mantissa) you can't get closer than 3 and while we are talking exponents the discrepancy is too high.

We can look at an example, let's say that the first number is

and that the other number is

and we wish to multiply them.

With paper and pen it is simple because the result is just adding the exponents like

But if we wish to convert them to binary form with the CPU, the CPU must first multiply the exponents by 3,32, which cannot be done; the closest is 4 (shifting two steps to the left), so if we now add 44 and 60 we get

which equals approximately E31, this is 10000 times higher (and this value must also be converted to decade form at the output).

If we then use my method we can set the binary exponent linearly (but without decimals), 3,32*11=37 and 3,32*15=50, so that the number is approximately

which is rather close, but computing the binary exponent means multiplying the decade exponent by 3,32, and the only way of doing this with my current CPU is to do it manually; 3,32 can otherwise only be approximated by shifting, which obviously gives too large a discrepancy.
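The comparison between shifting (a factor of 4) and the proper factor 1/lg 2 (about 3.32, written 3,32 in the text) can be reproduced with the sketch below. The decade exponents 11 and 15 are taken from the products 44, 60, 37 and 50 quoted above; the function names are hypothetical, and the rounding is done here by Python rather than by the CPU.

```python
import math

FACTOR = 1 / math.log10(2)  # 1/lg 2, about 3.32

def binary_exponent_by_shift(decade_exponent):
    """Approximate the binary exponent by shifting two steps left (times 4)."""
    return decade_exponent << 2

def binary_exponent_rounded(decade_exponent):
    """Binary exponent using the proper factor, rounded to a whole number."""
    return round(decade_exponent * FACTOR)

e1, e2 = 11, 15
shift_sum = binary_exponent_by_shift(e1) + binary_exponent_by_shift(e2)
rounded_sum = binary_exponent_rounded(e1) + binary_exponent_rounded(e2)

# Print each binary exponent together with the decade exponent it corresponds to.
print(shift_sum, math.log10(2 ** shift_sum))
print(rounded_sum, math.log10(2 ** rounded_sum))
```

The exact product has the decade exponent 11 + 15 = 26, so the second line of output shows how close the manually converted exponents come, while the first shows how far off the shifted ones are.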

So this does not work.

On the other hand, if you look at an 8-bit CPU, how large a number can it handle if for instance the two operands are equal (and the operation is unsigned)?

The square root of 256 is only 16.

So operand values will (often) have to be as small as 16, which is kind of useless for a CPU.

I therefore conclude that the instructions MUL/DIV are not necessary.

Moreover, the most important instructions for a CPU are ADD/SUB, because these are the instructions the CPU itself depends on, for example when adding branch offsets for PC jumps.

I think (observe) that much can be done with a CPU in spite of lacking MUL/DIV.

I have decided to proceed with my idea, the formula is here repeated

which means that

A MUL (micro) instruction could look something like this (using two accumulators, which I don't have and will not get)

1) LDA #bin_1

2) LDB #bin_2

3) ADD (B+A->A)

4) STA $[bin_1+bin_2] (the address where the exponential sum is stored)

5) LSRA #2 (shifts A two steps to the right and thereby divide with four instead of 3,32, gives e')

6) STA $[e'] (e' out like 10^e')
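The six steps above can be traced in software. The Python sketch below is only a model of the listing, assuming 8-bit registers that wrap around on overflow; the function name `mul_micro_program` and the returned pair are hypothetical.

```python
def mul_micro_program(bin_1, bin_2):
    """Trace the six steps of the MUL listing with two 8-bit accumulators."""
    a = bin_1 & 0xFF      # 1) LDA #bin_1
    b = bin_2 & 0xFF      # 2) LDB #bin_2
    a = (a + b) & 0xFF    # 3) ADD (B+A->A), assumed to wrap at 8 bits
    stored_sum = a        # 4) STA $[bin_1+bin_2]
    a >>= 2               # 5) LSRA #2, divides by four instead of 3,32
    e_prime = a           # 6) STA $[e']
    return stored_sum, e_prime

# Binary exponents 44 and 60 are four times the decade exponents 11 and 15.
print(mul_micro_program(44, 60))
```

With bin values formed as four times the decade exponent, step 5 returns the sum of the decade exponents exactly; with bin values formed using 3,32 the shift in step 5 introduces the extra discrepancy.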

Point 4) can not be done exactly while bin is linear and without decimals, the discrepancy is maximum

for each number to be multiplied, for two numbers the discrepancy can thus be so large as 2 but this is the worst case. The discrepancy may be calculated like

and will always differ by the square root of two, but both numbers must be half a step away from a whole value for this "catastrophe" to occur. You can estimate the probability by saying that each number takes one of three values (low, middle, high), so the probability is 1/9, thus only some 10%. In the worst case the discrepancy when multiplying two numbers is two; point four thus gives the best tolerance (if the value is manually converted)

In point five I just shift two steps to the right to divide with 4 (it should be divided by 3,32) to yield e while setting m to 1, this gives an additional 1,6 in discrepancy like

so the output is a maximum 3,2 (2*1,6) off the correct value, for physics I use half a decade (3,16) as something to count with so this is not so bad as it looks like.

For simplicity I suggest that the bin value is calculated by multiplying e by four and putting that into the CPU. There will then be an additional discrepancy from the start, but the procedure of using a calculator for the numbers to be multiplied renders this idea kind of pointless, because this can be done more easily with paper and pen. A whole exponential number times four is easy to put into the CPU.

Except point 5, I think the discrepancy becomes

so it is better to use a calculator before putting the bin value into the CPU but remember that 2 is a worst case, if the numbers fit better the discrepancy is only 2,6 and there is a 10% risk of my worst maximum.

This is however before "shift-generation" of the output which gives an additional 1,6 if it is not done manually.

I want to have the instruction DIV (divide) too, here I need to subtract the exponents and the only way I can do that now is to add with two's complement. This means that MUL also will have to be of two's complement. I'm not sure how to do this yet. I've seen in my course literature that float numbers are actually represented using a separate sign bit, my plan is however to use two's complement, how I don't know.

While I cannot program values with micro-coding, MUL will have to be a separate program. Furthermore, if the CPU has to relay the outcome, the outcome must be in binary code.

If you for instance wish to add the outcome later on, the outcome must be in binary code (within the CPU range).

So if we define the value inside the CPU as "CPU" and the ADD value as "bin" while we wish to multiply and thus add exponents, we may write

Here we have that the representation of our add-value (bin) is 2^CPU which means that CPU holds the exponential version and while I use 8 bits the CPU value can be 8 (n) as maximum.

Now, if we multiply two numbers by adding two CPU exponential numbers we may say that this new number is CPU', so now bin'=2^CPU'

Here we need to create a table. CPU' can't be larger than 8 and we can represent linear n only, so we need to set the intermediate values to n without decimals, such as [0;1]=0, [>1;2]=1 and so on; the discrepancy for this is 2 at most.

The same thing happens at input, CPU can only have integer values. At the input CPU can be defined as

or

and we need a table for lg(bin), while my CPU cannot count.

So we need two tables such as

and preliminary

Actually lg2 may be eliminated if you use

which makes it possible to write

so we need a table for lg_2(bin) where bin is smaller than 255.

A program for this might look something like

MUL(bin_1, bin_2)

CPU=lg2(bin) [table needed]

ADD CPU(bin_1, bin_2)

Bin=2^CPU [table needed]

This can then be relayed further in the CPU because it is pure HEX code, that means that the result can be used later on.
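The program outline above can be sketched in Python for unsigned operands. This is only an illustration: the two tables are replaced by small functions, the logarithm is rounded up as in [0;1]=0, [>1;2]=1, and the 8-bit limit on the result is not enforced. The names `lg2_table`, `pow2_table` and `mul` are hypothetical.

```python
def lg2_table(bin_value):
    """Whole-number exponent for bin_value >= 1, rounded up: (1;2] -> 1, (2;4] -> 2."""
    if bin_value < 1:
        raise ValueError("bin_value must be at least 1")
    return (bin_value - 1).bit_length()

def pow2_table(cpu_value):
    """Bin = 2^CPU for a whole-number exponent."""
    return 1 << cpu_value

def mul(bin_1, bin_2):
    """Multiply by adding the exponents of the two operands."""
    cpu_sum = lg2_table(bin_1) + lg2_table(bin_2)
    return pow2_table(cpu_sum)

print(mul(4, 8))  # both operands are exact powers of two
print(mul(3, 5))  # both operands are rounded up before the addition
```

Operands that are exact powers of two come out right, while other operands are rounded up first, which is where the discrepancy comes from.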

My original idea however, substitutes a 10-based exponential number to a 2-based exponential number (where the 2-based number lacks a mantissa). Here we can multiply two 2-based numbers by simply adding the exponents. Moreover, rather large numbers can be represented (~E38). For adding we simply use the number with the largest exponent (and skip the other).

To be able to relay a number inside the CPU it does however have to be of pure 8-bit HEX-type, and it is not possible to write the number E38 in binary code, so the value can't be depicted outside the CPU.

If we stick to "128" we can manipulate and present it to the outside world, the only problem is that this number can't be larger than 128.

While we formally can represent E38 inside the CPU, we simply cannot use it outside the CPU. As long as we keep it inside the CPU it works, but outside it can only be represented as an exponent.

I have come to the conclusion that DIV cannot be realized with my idea. The reason is that I can only subtract by using two's complement, and that simply doesn't work here. I am contemplating the structure of float numbers, which seem to have a sign bit while the rest is always a positive number. This works fine for MUL, where the exponents just have to be added, but for DIV the exponents have to be subtracted, and I can't subtract numbers that are not in two's complement.

There may be a fix where the unsigned numbers are converted into two's complement, but since I use 8 bits, 7 bits are then left for the positive number (as in float), and the number representation in two's complement will only be around +/-64.

This is because we have a sign bit (b7), which leaves the two's complement of the rest as +/-64.

I don't know how, or if, it is possible to convert a positive number to two's complement, other than that if b6=1 the number is negative, so the number to use is the inversion of the number, plus 1?
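One possible way to move between the two representations is sketched below in Python. It assumes a full 8-bit byte with b7 as the sign bit, which is not necessarily the 7-bit layout considered above, and the function names are hypothetical. The conversion in both directions is the same "invert and add one" operation.

```python
def twos_complement_to_sign_magnitude(byte):
    """Split an 8-bit two's complement byte into (sign, magnitude)."""
    byte &= 0xFF
    if byte & 0x80:                     # b7 set: the number is negative
        return 1, ((~byte) + 1) & 0xFF  # invert all bits and add one
    return 0, byte

def sign_magnitude_to_twos_complement(sign, magnitude):
    """Build an 8-bit two's complement byte from (sign, magnitude)."""
    magnitude &= 0xFF
    if sign:
        return ((~magnitude) + 1) & 0xFF  # invert all bits and add one
    return magnitude

print(twos_complement_to_sign_magnitude(0xFD))        # 0xFD is -3
print(hex(sign_magnitude_to_twos_complement(1, 3)))   # back to 0xFD
```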

Also for both MUL and DIV I will have to use tables to convert

and

and these tables will be far from exact.

While I have come to the conclusion that DIV does not work using two's complement I have decided to make MUL/DIV using the same kind of number representation and that is two's complement initially and sign-magnitude during calculation and finally two's complement as output.

The reason for two's complement for "bin" is that my CPU uses it internally, so the numbers generated can be used later on inside the CPU; another reason is that I like positive and negative numbers. Actually Motorola seems to have hardware-implemented MUL/DIV in their super MC6809 with the use of unsigned numbers only, so in this aspect (only) my version is better.

Here comes first my algorithm for MUL (8 bit):

MUL(bin1, bin2)

b7=1=>NEG(bin)+1=abs, s=1 [8 bit]

b7=0=>bin=abs, s=0

s1 XOR s2=s [store]

s.abs=Number [sign magnitude, 8 bit]

Number'=Number AND 7Fh [7 bit, <64]

CPU=lg2 Number' [table, our exponent]

ADD CPU(Number1', Number2') [the exponents are added]

abs=2^{ADD} [table, our magnitude]

s=0=>out=abs [from the above stored s]

s=1=>out=NEG(abs)+1

out=7 bit two's complement

The drawback with this solution is partly that it only covers +/-64 as number range (which I however think may be increased with a multiplier, BUT the steps between the numbers will then be the multiplier), and partly that, since my CPU cannot count correctly and I have to use tables, the accuracy is poor. I have however calculated that the max discrepancy is 2.8, which is lower than my allowed 3,16 for half a decade doing physics calculations.

Here comes my DIV algorithm:

DIV(bin1, bin2)

b7=1=>NEG(bin)+1=abs, s=1 [8 bit]

b7=0=>bin=abs, s=0

s1 XOR s2=s [store]

s.abs=Number [sign magnitude, 8 bit]

Number'=Number AND 7Fh [7 bit, <64]

CPU=lg2 Number' [table, our exponent]

SUB CPU(Number1', Number2') [the exponents are subtracted]

abs=2^{SUB} [table, our magnitude]

s=0=>out=abs [from the above stored s]

s=1=>out=NEG(abs)+1

out=7 bit two's complement
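The MUL and DIV algorithms differ only in whether the exponents are added or subtracted, so both can be sketched as one Python function. This is a model under stated assumptions, not the CPU's implementation: the logarithm table is replaced by a rounded-up calculation, zero operands are rejected, a negative exponent (a quotient below 1) is returned as 0, and a result that does not fit in 7 bits raises an error. All names are hypothetical.

```python
def split_sign(byte):
    """b7=1 => NEG(bin)+1=abs, s=1; b7=0 => bin=abs, s=0."""
    byte &= 0xFF
    if byte & 0x80:
        return 1, ((~byte) + 1) & 0xFF
    return 0, byte

def lg2_table(number):
    """Rounded-up base-2 logarithm, standing in for the lookup table."""
    return (number - 1).bit_length()

def mul_div(bin1, bin2, divide=False):
    s1, abs1 = split_sign(bin1)
    s2, abs2 = split_sign(bin2)
    sign = s1 ^ s2                               # s1 XOR s2 = s
    number1, number2 = abs1 & 0x7F, abs2 & 0x7F  # Number' = Number AND 7Fh
    if number1 == 0 or number2 == 0:
        raise ValueError("zero operands are not covered by the tables")
    cpu1, cpu2 = lg2_table(number1), lg2_table(number2)
    exponent = cpu1 - cpu2 if divide else cpu1 + cpu2
    # Assumption: a negative exponent (quotient below 1) gives magnitude 0.
    magnitude = (1 << exponent) if exponent >= 0 else 0
    if magnitude > 0x7F:
        raise OverflowError("result does not fit in 7 bits")
    # s=0 => out=abs; s=1 => out=NEG(abs)+1
    return ((~magnitude) + 1) & 0xFF if sign else magnitude

print(hex(mul_div(0xFD, 5)))                  # -3 * 5
print(hex(mul_div(20, 0xFD, divide=True)))    # 20 / -3
```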

Here comes my first table (2-log of a binary number)

CPU=lg2 bin Table [7 bit]

bin=[(>0);1]->CPU=0

bin=[>1;2]->CPU=1

bin=[>2;4]->CPU=2

bin=[>4;8]->CPU=3

bin=[>8;16]->CPU=4

bin=[>16;32]->CPU=5

bin=[>32;64]->CPU=6 [2^6=64]

The discrepancy here is 2 while CPU is the exponent and 2^1=2, this is however a worst case

Here comes my second table (bin with CPU raised from two)

bin=2^{CPU} Table [7 bit]

CPU=0->bin=1

CPU=[>0;1]->bin=2

CPU=[>1;2]->bin=4

CPU=[>2;3]->bin=8

CPU=[>3;4]->bin=16

CPU=[>4;5]->bin=32

CPU=[>5;6]->bin=64

The discrepancy here is obviously 2 which of course is worst case.

Total maximum discrepancy is thus 4 (and not the 2.8 I earlier calculated). However, 4 is not so much larger than my allowed 3,16 so my idea still may be usable.
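The worst case can also be searched for by brute force. The Python sketch below builds the first table for bin values 1 to 64 and, as an assumption, extends the second table beyond 2^6 so that every sum of two exponents can be looked up; it then tries every pair of operands. The variable names are hypothetical.

```python
# First table: rounded-up 2-log of bin, for bin = 1..64.
LG2_TABLE = {n: (n - 1).bit_length() for n in range(1, 65)}
# Second table: 2^CPU, extended to CPU = 12 (assumption) to hold every sum.
POW2_TABLE = {cpu: 1 << cpu for cpu in range(0, 13)}

worst = 0.0
worst_pair = None
for a in range(1, 65):
    for b in range(1, 65):
        approx = POW2_TABLE[LG2_TABLE[a] + LG2_TABLE[b]]
        ratio = approx / (a * b)   # how many times too large the result is
        if ratio > worst:
            worst, worst_pair = ratio, (a, b)

print(worst_pair, worst)
```

In this sketch the second table is only ever indexed with whole numbers, so all of the discrepancy comes from rounding in the first table, and the total stays just below the factor of 4 stated above.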

New Idea

I think I now have another, simpler solution. The key is to create a factor of 3,32 (1/lg2) to both multiply and divide by. A binary exponent has to be divided by 3,32 to give the decade exponent; the decade exponent has to be multiplied by 3,32 to give the binary exponent. Since these are exponents, the error becomes rather large if we, for instance, shift two times to the left for a multiplication by four.

So what to do? We need to multiply and divide by rather exactly 3,32. The solution, my friends, may lie in two external analog multipliers/dividers; this way we don't need separate digital multipliers or dividers!

I have an I/O memory map of 16kB and my plan was to use one I/O address only within this area to be able to enter (keyboard) and show (display) values, reading and writing to the same address.

If I however wish to implement this new idea I need to use two more I/O addresses (one for the analog multiplier and one for the analog divider) and will have to reconfigure the I/O chip enable. A rather nice idea if you ask me, here's an example:

which means

where