User:Dirk Hünniger/gsl

Introduction

| This page is the introduction for the collection Wikibooks:Collections/GLSL Programming in Unity. It should not be referenced by other pages. |

About GLSL

GLSL (OpenGL Shading Language) is one of several commonly used shading languages for real-time rendering (other examples are Cg and HLSL). These shading languages are used to program shaders (i.e. relatively small programs) that are executed on a GPU (graphics processing unit), i.e. the processor of the graphics system of a computer, as opposed to the CPU (central processing unit) of a computer.

GPUs are massively parallel processors, which are extremely powerful. Most of today's real-time graphics in games and other interactive graphical applications would not be possible without GPUs. However, to take full advantage of the performance of GPUs, it is necessary to program them directly. This means that small programs (i.e. shaders) have to be written that can be executed by GPUs. The programming languages to write these shaders are shading languages. GLSL is one of them. In fact, it is the shading language of several 3D graphics APIs (application programming interfaces), namely OpenGL, OpenGL ES 2.x, and WebGL. Therefore, GLSL is commonly used in applications for desktop computers, mobile devices, and the web.

About this Wikibook

This wikibook was written with students in mind who like neither programming nor mathematics. The basic motivation for this book is the observation that students are much more motivated to learn programming environments, programming languages and APIs if they are working on specific projects. Such projects are usually developed on specific platforms; therefore, the approach of this book is to present GLSL within the game engine Unity.

Chapters 1 to 8 of the book consist of tutorials with working examples that produce certain effects. Note that these tutorials assume that you read them in the order in which they are presented, i.e. each tutorial will assume that you are familiar with the concepts and techniques introduced by previous tutorials. If you are new to GLSL or Unity you should at least read through the tutorials in Chapter 1, “Basics”.

More details about the OpenGL pipeline and GLSL syntax in general are included in an “Appendix on the OpenGL Pipeline and GLSL Syntax”. Readers who are not familiar with OpenGL or GLSL might want to at least skim this part since a basic understanding of the OpenGL pipeline and GLSL syntax is very useful for understanding the tutorials.

About GLSL in Unity

GLSL programming in the game engine Unity is considerably easier than GLSL programming for an OpenGL, OpenGL ES, or WebGL application. Import of meshes and images (i.e. textures) is supported by a graphical user interface; mipmaps and normal maps can be computed automatically; the most common vertex attributes and uniforms are predefined; OpenGL states can be set by very simple commands; etc.

A free version of Unity can be downloaded for Windows and MacOS at Unity's download page. All of the included tutorials work with the free version. Three points should be noted:

- First, Windows users have to use the command-line argument -force-opengl [1] when starting Unity in order to be able to use GLSL shaders, for example, by changing the Target setting in the properties of the desktop icon to: "C:\Program Files\Unity\Editor\Unity.exe" -force-opengl. (On MacOS X, OpenGL and therefore GLSL is used by default.) Note that GLSL shaders cannot be used in Unity applications running in a web browser on Windows.

- Secondly, this book assumes that readers are somewhat familiar with Unity. If this is not the case, readers should consult the first three sections of Unity's User Guide [2] (Unity Basics, Building Scenes, Asset Import and Creation).

- Furthermore, as of version 3.5, Unity supports a version of GLSL similar to version 1.0.x for OpenGL ES 2.0 (the specification is available at the “Khronos OpenGL ES API Registry”); however, Unity's shader documentation [3] focuses on shaders written in Unity's own “surface shader” format and in Cg/HLSL [4]. Very few details that are specific to GLSL shaders are documented [5]. Thus, this wikibook might also help to close some gaps in Unity's documentation. However, optimizations (see, for example, this blog) are usually not discussed.

Martin Kraus, August 2012

Minimal Shader

This tutorial covers the basic steps to create a minimal GLSL shader in Unity.

Starting Unity and Creating a New Project

After downloading and starting Unity (Windows users have to use the command-line argument -force-opengl), you might see an empty project. If not, you should create a new project by choosing File > New Project... from the menu. For this tutorial, you don't need to import any packages, but some of the more advanced tutorials require the scripts and skyboxes packages. After creating a new project on Windows, Unity might start without OpenGL support; thus, Windows users should always quit Unity and restart it (with the command-line argument -force-opengl) after creating a new project. Then you can open the new project with File > Open Project... from the menu.

If you are not familiar with Unity's Scene View, Hierarchy View, Project View and Inspector View, now would be a good time to read the first two (or three) sections (“Unity Basics” and “Building Scenes”) of the Unity User Guide.

Creating a Shader

Creating a GLSL shader is not complicated: In the Project View, click on Create and choose Shader. A new file named “NewShader” should appear in the Project View. Double-click it to open it (or right-click and choose Open). An editor with the default shader in Cg should appear. Delete all the text and copy & paste the following shader into this file:

```
Shader "GLSL basic shader" { // defines the name of the shader
   SubShader { // Unity chooses the subshader that fits the GPU best
      Pass { // some shaders require multiple passes
         GLSLPROGRAM // here begins the part in Unity's GLSL

         #ifdef VERTEX // here begins the vertex shader

         void main() // all vertex shaders define a main() function
         {
            gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
               // this line transforms the predefined attribute
               // gl_Vertex of type vec4 with the predefined
               // uniform gl_ModelViewProjectionMatrix of type mat4
               // and stores the result in the predefined output
               // variable gl_Position of type vec4.
         }

         #endif // here ends the definition of the vertex shader

         #ifdef FRAGMENT // here begins the fragment shader

         void main() // all fragment shaders define a main() function
         {
            gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0);
               // this fragment shader just sets the output color
               // to opaque red (red = 1.0, green = 0.0, blue = 0.0,
               // alpha = 1.0)
         }

         #endif // here ends the definition of the fragment shader

         ENDGLSL // here ends the part in GLSL
      }
   }
}
```

Save the shader (by clicking the save icon or choosing File > Save from the editor's menu).

Congratulations, you have just created a shader in Unity. If you want, you can rename the shader file in the Project View by clicking the name, typing a new name, and pressing Return. (After renaming, reopen the shader in the editor to make sure that you are editing the correct file.)

Unfortunately, there isn't anything to see until the shader is attached to a material.

Creating a Material and Attaching a Shader

To create a material, go back to Unity and create a new material by clicking Create in the Project View and choosing Material. A new material called “New Material” should appear in the Project View. (You can rename it just like the shader.) If it isn't selected, select it by clicking. Details about the material now appear in the Inspector View. In order to assign the shader to this material, you can either

- drag & drop the shader in the Project View over the material or

- select the material in the Project View and then in the Inspector View choose the shader (in this case “GLSL basic shader” as specified in the shader code above) from the drop-down list labeled Shader.

In either case, the Preview in the Inspector View of the material should now show a red sphere. If it doesn't and an error message is displayed at the bottom of the Unity window, you should reopen the shader and check in the editor whether the text is the same as given above. Windows users should make sure that OpenGL is supported by restarting Unity with the command-line argument -force-opengl.

Interactively Editing Shaders

This would be a good time to play with the shader; in particular, you can easily change the computed fragment color. Try neon green by opening the shader and replacing the fragment shader with this code:

```
#ifdef FRAGMENT

void main()
{
   gl_FragColor = vec4(0.6, 1.0, 0.0, 1.0);
      // red = 0.6, green = 1.0, blue = 0.0, alpha = 1.0
}

#endif
```

You have to save the code in the editor and activate the Unity window again to apply the new shader. If you select the material in the Project View, the sphere in the Inspector View should now be green. You could also try to modify the red, green, and blue components to find the warmest orange or the darkest blue. (Actually, there is a movie about finding the warmest orange and another about dark blue that is almost black.)
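Color pickers in image editors often report components as 8-bit integers between 0 and 255. The components in vec4(...), however, are floats between 0.0 and 1.0. The following minimal C sketch divides by 255 and prints a line that you could paste into the fragment shader. It is plain C run on the CPU, not shader code, and the names byte_to_component and print_frag_color are made up for this example.

```c
#include <stdio.h>

/* Hypothetical helper: maps an 8-bit color channel (0..255)
   to the floating-point range 0.0..1.0 used by gl_FragColor. */
float byte_to_component(unsigned char value)
{
    return value / 255.0f;
}

/* Hypothetical helper: prints a GLSL assignment for an opaque color
   that is given as 8-bit red, green, and blue values. */
void print_frag_color(unsigned char r, unsigned char g, unsigned char b)
{
    printf("gl_FragColor = vec4(%.3f, %.3f, %.3f, 1.0);\n",
           byte_to_component(r), byte_to_component(g),
           byte_to_component(b));
}
```

For example, print_frag_color(255, 128, 0) prints gl_FragColor = vec4(1.000, 0.502, 0.000, 1.0);, which is one candidate for a warm orange.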

You could also play with the vertex shader, e.g. try this vertex shader:

```
#ifdef VERTEX

void main()
{
   gl_Position = gl_ModelViewProjectionMatrix
      * (vec4(1.0, 0.1, 1.0, 1.0) * gl_Vertex);
}

#endif
```

This flattens any input geometry by multiplying the $y$ coordinate by $0.1$. (This is a component-wise vector product; for more information on vectors and matrices in GLSL, see the discussion in Section “Vector and Matrix Operations”.)
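The following small C sketch shows what the component-wise product does to a single vertex. The struct vec4 and the function vec4_mul are hypothetical stand-ins for GLSL's built-in vec4 type and its * operator between two vectors. They only mirror the arithmetic.

```c
/* Hypothetical stand-in for GLSL's vec4 type. */
typedef struct { float x, y, z, w; } vec4;

/* Component-wise product, like the GLSL expression a * b for two vec4s. */
vec4 vec4_mul(vec4 a, vec4 b)
{
    vec4 r = { a.x * b.x, a.y * b.y, a.z * b.z, a.w * b.w };
    return r;
}
```

With the factors (1.0, 0.1, 1.0, 1.0), a vertex at (0.5, 0.5, -0.5, 1.0) ends up at roughly (0.5, 0.05, -0.5, 1.0). Only the y coordinate is scaled, and w stays 1.0, which is why the factor for w should be 1.0.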

If the shader does not compile, Unity displays an error message at the bottom of the Unity window and displays the material as bright magenta. To see all error messages and warnings, select the shader in the Project View and read the messages in the Inspector View. These messages include line numbers, which you can display in the text editor by choosing View > Line Numbers from the text editor's menu. You could also open the Console View by choosing Window > Console from the menu, but it does not display all error messages; therefore, the crucial error is often not reported there.

Attaching a Material to a Game Object

We still have one important step to go: attaching the new material to a triangle mesh. To this end, create a sphere (which is one of the predefined game objects of Unity) by choosing GameObject > Create Other > Sphere from the menu. A sphere should appear in the Scene View and the label “Sphere” should appear in the Hierarchy View. (If it doesn't appear in the Scene View, click it in the Hierarchy View, move (without clicking) the mouse over the Scene View and press “f”. The sphere should now appear centered in the Scene View.)

To attach the material to the new sphere, you can:

- drag & drop the material from the Project View over the sphere in the Hierarchy View or

- drag & drop the material from the Project View over the sphere in the Scene View or

- select the sphere in the Hierarchy View, locate the Mesh Renderer component in the Inspector View (and open it by clicking the title if it isn't open), open the Materials setting of the Mesh Renderer by clicking it. Change the “Default-Diffuse” material to the new material by clicking the dotted circle icon to the right of the material name and choosing the new material from the pop-up window.

In any case, the sphere in the Scene View should now have the same color as the preview in the Inspector View of the material. Changing the shader should (after saving and switching to Unity) change the appearance of the sphere in the Scene View.

Saving Your Work in a Scene

There is one more thing: you should save your work in a “scene” (which often corresponds to a game level). Choose File > Save Scene (or File > Save Scene As...) and choose a file name in the “Assets” directory of your project. The scene file should then appear in the Project View and will be available the next time you open the project.

One More Note about Terminology

It might be good to clarify the terminology. In GLSL, a “shader” is either a vertex shader or a fragment shader. The combination of both is called a “program”.

Unfortunately, Unity refers to this kind of program as a “shader”, while in Unity a vertex shader is called a “vertex program” and a fragment shader is called a “fragment program”.

To make the confusion perfect, I'm going to use Unity's word “shader” for a GLSL program, i.e. the combination of a vertex and a fragment shader. However, I will use the GLSL terms “vertex shader” and “fragment shader” instead of “vertex program” and “fragment program”.

Summary

Congratulations, you have reached the end of this tutorial. A few of the things you have seen are:

- How to create a shader.

- How to define a GLSL vertex and fragment shader in Unity.

- How to create a material and attach a shader to the material.

- How to manipulate the output color gl_FragColor in the fragment shader.

- How to transform the input attribute gl_Vertex in the vertex shader.

- How to create a game object and attach a material to it.

Actually, this was quite a lot of stuff.

Further Reading

If you still want to know more

- about vertex and fragment shaders in general, you should read the description in Section “OpenGL ES 2.0 Pipeline”.

- about vertex transformations such as gl_ModelViewProjectionMatrix, you should read Section “Vertex Transformations”.

- about handling vectors (e.g. the vec4 type) and matrices in GLSL, you should read Section “Vector and Matrix Operations”.

- about how to apply vertex transformations such as gl_ModelViewProjectionMatrix, you should read Section “Applying Matrix Transformations”.

- about Unity's ShaderLab language for specifying shaders, you should read Unity's ShaderLab reference.

RGB Cube

This tutorial introduces varying variables. It is based on Section “Minimal Shader”.

In this tutorial we will write a shader to render an RGB cube similar to the one shown to the left. The color of each point on the surface is determined by its coordinates; i.e., a point at position $(x, y, z)$ has the color $(\text{red}, \text{green}, \text{blue}) = (x, y, z)$. For example, the point $(x, y, z) = (0, 0, 1)$ is mapped to the color $(\text{red}, \text{green}, \text{blue}) = (0, 0, 1)$, i.e. pure blue. (This is the blue corner in the lower right of the figure to the left.)

Preparations

Since we want to create an RGB cube, you first have to create a cube game object. As described in Section “Minimal Shader” for a sphere, you can create a cube game object by selecting GameObject > Create Other > Cube from the main menu. Continue with creating a material and a shader object and attaching the shader to the material and the material to the cube as described in Section “Minimal Shader”.

The Shader Code

Here is the shader code, which you should copy & paste into your shader object:

```
Shader "GLSL shader for RGB cube" {
   SubShader {
      Pass {
         GLSLPROGRAM

         #ifdef VERTEX // here begins the vertex shader

         varying vec4 position;
            // this is a varying variable in the vertex shader

         void main()
         {
            position = gl_Vertex + vec4(0.5, 0.5, 0.5, 0.0);
               // Here the vertex shader writes output data
               // to the varying variable. We add 0.5 to the
               // x, y, and z coordinates, because the
               // coordinates of the cube are between -0.5 and
               // 0.5 but we need them between 0.0 and 1.0.
            gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
         }

         #endif // here ends the vertex shader

         #ifdef FRAGMENT // here begins the fragment shader

         varying vec4 position;
            // this is a varying variable in the fragment shader

         void main()
         {
            gl_FragColor = position;
               // Here the fragment shader reads input data
               // from the varying variable. The red, green, blue,
               // and alpha components of the fragment color are
               // set to the values in the varying variable.
         }

         #endif // here ends the fragment shader

         ENDGLSL
      }
   }
}
```

If your cube is not colored correctly, check the console for error messages (by selecting Window > Console from the main menu), make sure you have saved the shader code, and check whether you have attached the shader object to the material object and the material object to the game object.

Varying Variables

The main task of our shader is to set the output fragment color (gl_FragColor) in the fragment shader to the position (gl_Vertex) that is available in the vertex shader. Actually, this is not quite true: the coordinates in gl_Vertex for Unity's default cube are between -0.5 and +0.5 while we would like to have color components between 0.0 and 1.0; thus, we need to add 0.5 to the x, y, and z component, which is done by this expression: gl_Vertex + vec4(0.5, 0.5, 0.5, 0.0).
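You can check the mapping from positions to colors by hand with a short C sketch. Here vec4 and cube_position_to_color are hypothetical names that only mirror the GLSL expression. Unity does not provide them.

```c
/* Hypothetical stand-in for GLSL's vec4 type. */
typedef struct { float x, y, z, w; } vec4;

/* Mirrors gl_Vertex + vec4(0.5, 0.5, 0.5, 0.0): object coordinates of
   Unity's default cube (-0.5..0.5) become color components (0.0..1.0).
   The w component is left unchanged, so w = 1.0 yields alpha = 1.0. */
vec4 cube_position_to_color(vec4 p)
{
    vec4 c = { p.x + 0.5f, p.y + 0.5f, p.z + 0.5f, p.w };
    return c;
}
```

For the corner at (-0.5, -0.5, 0.5) with w = 1.0, this gives (0.0, 0.0, 1.0, 1.0), i.e. the pure blue corner of the cube.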

The main problem, however, is: how do we get any value from the vertex shader to the fragment shader? It turns out that the only way to do this is to use varying variables (or varyings for short). Output of the vertex shader can be written to a varying variable and then it can be read as input by the fragment shader. This is exactly what we need.

To specify a varying variable, it has to be defined with the modifier varying (before the type) in the vertex and the fragment shader outside of any function; in our example: varying vec4 position;. And here comes the most important rule about varying variables:

| The type and name of a varying variable definition in the vertex shader has to match exactly the type and name of a varying variable definition in the fragment shader and vice versa. |

This is required to avoid ambiguous cases where the GLSL compiler cannot figure out which varying variable of the vertex shader should be matched to which varying variable of the fragment shader.

A Neat Trick for Varying Variables in Unity

The requirement that the definitions of varying variables in the vertex and fragment shader match each other often results in errors, for example if a programmer changes a type or name of a varying variable in the vertex shader but forgets to change it in the fragment shader. Fortunately, there is a nice trick in Unity that avoids the problem. Consider the following shader:

```
Shader "GLSL shader for RGB cube" {
   SubShader {
      Pass {
         GLSLPROGRAM // here begin the vertex and the fragment shader

         varying vec4 position;
            // this line is part of the vertex and the fragment shader

         #ifdef VERTEX
            // here begins the part that is only in the vertex shader

         void main()
         {
            position = gl_Vertex + vec4(0.5, 0.5, 0.5, 0.0);
            gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
         }

         #endif
            // here ends the part that is only in the vertex shader

         #ifdef FRAGMENT
            // here begins the part that is only in the fragment shader

         void main()
         {
            gl_FragColor = position;
         }

         #endif
            // here ends the part that is only in the fragment shader

         ENDGLSL // here end the vertex and the fragment shader
      }
   }
}
```

As the comments in this shader explain, the line #ifdef VERTEX doesn't actually mark the beginning of the vertex shader but the beginning of a part that is only in the vertex shader. Analogously, #ifdef FRAGMENT marks the beginning of a part that is only in the fragment shader. In fact, both shaders begin with the line GLSLPROGRAM. Therefore, any code between GLSLPROGRAM and the first #ifdef line will be shared by the vertex and the fragment shader. (If you are familiar with the C or C++ preprocessor, you might have guessed this already.)

This is perfect for definitions of varying variables because it means that we have to type the definition only once and it will be put into both the vertex and the fragment shader; thus, matching definitions are guaranteed! I.e. we have to type less and there is no way to produce compiler errors because of mismatches between the definitions of varying variables. (Of course, the cost is that we have to type all these #ifdef and #endif lines.)

Variations of this Shader

The RGB cube represents the set of available colors (i.e. the gamut of the display). Thus, it can also be used to show the effect of a color transformation. For example, a color-to-gray transformation could compute the mean of the red, green, and blue color components, i.e. $(\text{red} + \text{green} + \text{blue})/3$, and then put this value in all three color components of the fragment color to obtain a gray value of the same intensity. Instead of the mean, the relative luminance could also be used, which is $0.21\,\text{red} + 0.72\,\text{green} + 0.07\,\text{blue}$. Of course, any other color transformation (changing saturation, contrast, hue, etc.) is also applicable.
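Both gray transformations can be sketched in C as follows. This is a CPU-side illustration with the hypothetical type rgb and hypothetical function names. In the fragment shader, you would apply the same arithmetic to the varying position before assigning gl_FragColor. The luminance weights 0.21, 0.72, and 0.07 are rounded Rec. 709 coefficients, an assumption of this sketch.

```c
/* Hypothetical color type with components between 0.0 and 1.0. */
typedef struct { float r, g, b; } rgb;

/* Gray value as the mean of the red, green, and blue components. */
rgb gray_mean(rgb c)
{
    float m = (c.r + c.g + c.b) / 3.0f;
    rgb out = { m, m, m };
    return out;
}

/* Gray value from the relative luminance; the weights 0.21, 0.72, 0.07
   are assumed (rounded Rec. 709 coefficients). */
rgb gray_luminance(rgb c)
{
    float y = 0.21f * c.r + 0.72f * c.g + 0.07f * c.b;
    rgb out = { y, y, y };
    return out;
}
```

In GLSL, the luminance version could be written with the built-in dot() function as gl_FragColor = vec4(vec3(dot(position.rgb, vec3(0.21, 0.72, 0.07))), 1.0);. Note that pure green comes out much brighter than pure blue, while the mean treats them equally.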

Another variation of this shader could compute a CMY (cyan, magenta, yellow) cube: for position $(x, y, z)$, you could subtract from pure white an amount of red that is proportional to $x$ in order to produce cyan. Furthermore, you would subtract an amount of green in proportion to the $y$ component to produce magenta and also an amount of blue in proportion to $z$ to produce yellow.
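A CMY cube can be sketched the same way. This minimal C version makes two assumptions: x, y, and z have already been shifted to the range 0 to 1, and the amounts subtracted are exactly x, y, and z (a proportionality factor of 1). The type rgb and the function cmy_cube_color are hypothetical.

```c
/* Hypothetical color type with components between 0.0 and 1.0. */
typedef struct { float r, g, b; } rgb;

/* CMY cube: start from white and remove red, green, and blue in
   proportion to x, y, and z (assumed factor 1; inputs in 0..1). */
rgb cmy_cube_color(float x, float y, float z)
{
    rgb c = { 1.0f - x, 1.0f - y, 1.0f - z };
    return c;
}
```

The corner (1, 0, 0) becomes (0, 1, 1), i.e. cyan, and the corner (0, 0, 0) stays white. In the fragment shader, the same idea could be expressed as gl_FragColor = vec4(vec3(1.0) - position.xyz, 1.0);.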

If you really want to get fancy, you could compute an HSV (hue, saturation, value) cylinder. For $x$ and $z$ coordinates between -0.5 and +0.5, you can get an angle between 0 and 360° with 180.0+degrees(atan(z, x)) in GLSL and a distance between 0 and 1 from the axis with 2.0 * sqrt(x * x + z * z). The $y$ coordinate for Unity's built-in cylinder is between -1 and 1, which can be translated to a value between 0 and 1 by $(y + 1)/2$. The computation of RGB colors from HSV coordinates is described in the article on HSV in Wikipedia.

Interpolation of Varying Variables

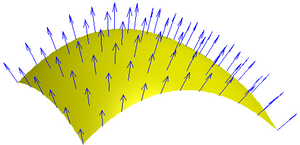

The story about varying variables is not quite over yet. If you select the cube game object, you will see in the Scene View that it consists of only 12 triangles and 8 vertices. Thus, the vertex shader might be called only eight times and only eight different outputs are written to the varying variable. However, there are many more colors on the cube. How did that happen?

The answer is implied by the name varying variables. They are called this way because they vary across a triangle. In fact, the vertex shader is only called for each vertex of each triangle. If the vertex shader writes different values to a varying variable for different vertices, the values are interpolated across the triangle. The fragment shader is then called for each pixel that is covered by the triangle and receives interpolated values of the varying variables. The details of this interpolation are described in Section “Rasterization”.

If you want to make sure that a fragment shader receives one exact, non-interpolated value from a vertex shader, you have to make sure that the vertex shader writes the same value to the varying variable for all vertices of a triangle.
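A small C sketch can illustrate both points. It blends the three values written by the vertex shader using barycentric weights, i.e. non-negative weights that sum to 1 and describe where the fragment lies within the triangle. This is plain linear interpolation; GPUs usually also correct for perspective, as discussed in Section “Rasterization”. The type vec4 and the function interpolate_varying are hypothetical.

```c
/* Hypothetical stand-in for GLSL's vec4 type. */
typedef struct { float x, y, z, w; } vec4;

/* Blends the varying values v0, v1, v2 written at the three vertices
   using barycentric weights w0 + w1 + w2 = 1 (linear interpolation
   only; perspective correction is omitted in this sketch). */
vec4 interpolate_varying(vec4 v0, vec4 v1, vec4 v2,
                         float w0, float w1, float w2)
{
    vec4 r = {
        w0 * v0.x + w1 * v1.x + w2 * v2.x,
        w0 * v0.y + w1 * v1.y + w2 * v2.y,
        w0 * v0.z + w1 * v1.z + w2 * v2.z,
        w0 * v0.w + w1 * v1.w + w2 * v2.w
    };
    return r;
}
```

With red, green, and blue at the three vertices, a fragment at the centroid (all weights 1/3) receives roughly (0.33, 0.33, 0.33, 1.0). If all three vertices carry the same value, every fragment receives that value (up to rounding) regardless of the weights.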

Summary

And this is the end of this tutorial. Congratulations! Among other things, you have seen:

- What an RGB cube is.

- What varying variables are good for and how to define them.

- How to make sure that a varying variable has the same name and type in the vertex shader and the fragment shader.

- How the values written to a varying variable by the vertex shader are interpolated across a triangle before they are received by the fragment shader.

Further Reading

If you want to know more

- about the data flow in and out of vertex and fragment shaders, you should read the description in Section “OpenGL ES 2.0 Pipeline”.

- about vector and matrix operations (e.g. the expression gl_Vertex + vec4(0.5, 0.5, 0.5, 0.0)), you should read Section “Vector and Matrix Operations”.

- about the interpolation of varying variables, you should read Section “Rasterization”.

- about Unity's official documentation of writing vertex shaders and fragment shaders in Unity's ShaderLab, you should read Unity's ShaderLab reference about “GLSL Shader Programs”.

Debugging of Shaders

This tutorial introduces attribute variables. It is based on Section “Minimal Shader” and Section “RGB Cube”.

This tutorial also introduces the main technique to debug shaders in Unity: false-color images, i.e. a value is visualized by setting one of the components of the fragment color to it. Then the intensity of that color component in the resulting image allows you to draw conclusions about the value in the shader. This might appear to be a very primitive debugging technique because it is a very primitive debugging technique. Unfortunately, there is no alternative in Unity.

Where Does the Vertex Data Come from?

In Section “RGB Cube” you have seen how the fragment shader gets its data from the vertex shader by means of varying variables. The question here is: where does the vertex shader get its data from? Within Unity, the answer is that the Mesh Renderer component of a game object sends all the data of the mesh of the game object to OpenGL in each frame. (This is often called a “draw call”. Note that each draw call has some performance overhead; thus, it is much more efficient to send one large mesh with one draw call to OpenGL than to send several smaller meshes with multiple draw calls.) This data usually consists of a long list of triangles, where each triangle is defined by three vertices and each vertex has certain attributes, including position. These attributes are made available in the vertex shader by means of attribute variables.

Built-in Attribute Variables and how to Visualize Them

In Unity, most of the standard attributes (position, color, surface normal, and texture coordinates) are built in, i.e. you need not (in fact must not) define them. The names of these built-in attributes are actually defined by the OpenGL “compatibility profile” because such built-in names are needed if you mix an OpenGL application that was written for the fixed-function pipeline with a (programmable) vertex shader. If you had to define them, the definitions (only in the vertex shader) would look like this:

```
attribute vec4 gl_Vertex; // position (in object coordinates,
   // i.e. local or model coordinates)
attribute vec4 gl_Color; // color (usually constant)
attribute vec3 gl_Normal; // surface normal vector
   // (in object coordinates; usually normalized to unit length)
attribute vec4 gl_MultiTexCoord0; // 0th set of texture coordinates
   // (a.k.a. “UV”; between 0 and 1)
attribute vec4 gl_MultiTexCoord1; // 1st set of texture coordinates
   // (a.k.a. “UV”; between 0 and 1)
...
```

There is only one attribute variable that is provided by Unity but has no standard name in OpenGL, namely the tangent vector, i.e. a vector that is orthogonal to the surface normal. You should define this variable yourself as an attribute variable of type vec4 with the specific name Tangent as shown in the following shader:

```
Shader "GLSL shader with all built-in attributes" {
   SubShader {
      Pass {
         GLSLPROGRAM

         #ifdef VERTEX

         varying vec4 color;

         attribute vec4 Tangent; // this attribute is specific to Unity

         void main()
         {
            color = gl_MultiTexCoord0; // set the varying variable

            // other possibilities to play with:

            // color = gl_Vertex;
            // color = gl_Color;
            // color = vec4(gl_Normal, 1.0);
            // color = gl_MultiTexCoord0;
            // color = gl_MultiTexCoord1;
            // color = Tangent;

            gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
         }

         #endif

         #ifdef FRAGMENT

         varying vec4 color;

         void main()
         {
            gl_FragColor = color; // set the output fragment color
         }

         #endif

         ENDGLSL
      }
   }
}
```

In Section “RGB Cube” we have already seen how to visualize the gl_Vertex coordinates by setting the fragment color to those values. In this example, the fragment color is set to gl_MultiTexCoord0 such that we can see what kind of texture coordinates Unity provides.

Note that only the first three components of Tangent represent the tangent direction. The scaling and the fourth component are set in a specific way, which is mainly useful for parallax mapping (see Section “Projection of Bumpy Surfaces”).

How to Interpret False-Color Images

When trying to understand the information in a false-color image, it is important to focus on one color component only. For example, if the standard attribute gl_MultiTexCoord0 for a sphere is written to the fragment color, then the red component of the fragment visualizes the x coordinate of gl_MultiTexCoord0, i.e. it doesn't matter whether the output color is maximum pure red or maximum yellow or maximum magenta; in all cases the red component is 1. On the other hand, it also doesn't matter for the red component whether the color is blue or green or cyan of any intensity because the red component is 0 in all cases. If you have never learned to focus solely on one color component, this is probably quite challenging; therefore, you might consider looking at only one color component at a time, for example, by using this line to set the varying in the vertex shader:

```
color = vec4(gl_MultiTexCoord0.x, 0.0, 0.0, 1.0);
```

This sets the red component of the varying variable to the x component of gl_MultiTexCoord0 but sets the green and blue components to 0 (and the alpha or opacity component to 1 but that doesn't matter in this shader).

If you focus on the red component or visualize only the red component, you should see that it increases from 0 to 1 as you go around the sphere and after 360° drops to 0 again. It actually behaves similarly to a longitude coordinate on the surface of a planet. (In terms of spherical coordinates, it corresponds to the azimuth.)

If the x component of gl_MultiTexCoord0 corresponds to the longitude, one would expect that the y component would correspond to the latitude (or the inclination in spherical coordinates). However, note that texture coordinates are always between 0 and 1; therefore, the value is 0 at the bottom (south pole) and 1 at the top (north pole). You can visualize the y component as green on its own with:

```
color = vec4(0.0, gl_MultiTexCoord0.y, 0.0, 1.0);
```

Texture coordinates are particularly nice to visualize because they are between 0 and 1 just like color components are. Almost as nice are coordinates of normalized vectors (i.e., vectors of length 1; for example, gl_Normal is usually normalized) because they are always between -1 and +1. To map this range to the range from 0 to 1, you add 1 to each component and divide all components by 2, e.g.:

```
color = vec4((gl_Normal + vec3(1.0, 1.0, 1.0)) / 2.0, 1.0);
```

Note that gl_Normal is a three-dimensional vector. Black corresponds then to the coordinate -1 and full intensity of one component to the coordinate +1.
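The same mapping can be written as a short C function for checking values on paper. The names vec3 and normal_to_color are hypothetical stand-ins for the GLSL type and expression.

```c
/* Hypothetical stand-in for GLSL's vec3 type. */
typedef struct { float x, y, z; } vec3;

/* Mirrors (gl_Normal + vec3(1.0, 1.0, 1.0)) / 2.0: maps each component
   from -1..+1 to 0..1 so that it can be shown as a color component. */
vec3 normal_to_color(vec3 n)
{
    vec3 c = { (n.x + 1.0f) / 2.0f, (n.y + 1.0f) / 2.0f, (n.z + 1.0f) / 2.0f };
    return c;
}
```

A normal pointing straight up, (0, 1, 0), becomes (0.5, 1.0, 0.5), a light green. A normal pointing in the negative x direction becomes (0.0, 0.5, 0.5).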

If the value that you want to visualize is in a range other than 0 to 1 or -1 to +1, you have to map it to the range from 0 to 1, which is the range of color components. If you don't know which values to expect, you just have to experiment. What helps here is that if you specify color components outside of the range 0 to 1, they are automatically clamped to this range, i.e. values less than 0 are set to 0 and values greater than 1 are set to 1. Thus, when the color component is 0 or 1, you know at least that the value is less than or greater than what you assumed, and then you can adapt the mapping iteratively until the color component is between 0 and 1.
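This iterative procedure amounts to a linear remapping followed by clamping. The following C sketch mimics it. The parameters lo and hi stand for your current guess of the value range, and clamp01 and debug_component are hypothetical names. In GLSL you could write clamp((value - lo) / (hi - lo), 0.0, 1.0) with the built-in clamp() function.

```c
/* Mimics the automatic clamping of color components to 0..1. */
float clamp01(float v)
{
    if (v < 0.0f) return 0.0f;
    if (v > 1.0f) return 1.0f;
    return v;
}

/* Linearly maps value from the guessed range [lo, hi] (assumes hi > lo)
   to 0..1 and clamps; a result of exactly 0 or 1 hints that the guess
   is too narrow on that side. */
float debug_component(float value, float lo, float hi)
{
    return clamp01((value - lo) / (hi - lo));
}
```

Suppose debug_component(value, 0.0f, 10.0f) returns exactly 1.0 everywhere. Then the value probably exceeds 10 and hi should be increased. A result of exactly 0.0 suggests lowering lo.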

Debugging Practice

In order to practice the debugging of shaders, this section includes some lines that produce black colors when the assignment to color in the vertex shader is replaced by each of them. Your task is to figure out for each line why the result is black. To this end, you should try to visualize any value that you are not absolutely sure about and map values less than 0 or greater than 1 to other ranges such that the values are visible and you have at least an idea in which range they are. Note that most of the functions and operators are documented in Section “Vector and Matrix Operations”.

color = gl_MultiTexCoord0 - vec4(1.5, 2.3, 1.1, 0.0);

color = vec4(gl_MultiTexCoord0.z);

color = gl_MultiTexCoord0 / tan(0.0);

The following lines require some knowledge about the dot and cross products:

color = dot(gl_Normal, vec3(Tangent)) * gl_MultiTexCoord0;

color = dot(cross(gl_Normal, vec3(Tangent)), gl_Normal) * gl_MultiTexCoord0;

color = vec4(cross(gl_Normal, gl_Normal), 1.0);

color = vec4(cross(gl_Normal, vec3(gl_Vertex)), 1.0); // only for a sphere!

Do the functions radians() and noise() always return black? What's that good for?

color = radians(gl_MultiTexCoord0);

color = noise4(gl_MultiTexCoord0);

Consult the documentation in the “OpenGL ES Shading Language 1.0.17 Specification” available at the “Khronos OpenGL ES API Registry” to figure out what radians() is good for and what the problem with noise4() is.

Special Variables in the Fragment Shader

Attributes are specific to vertices, i.e., they usually have different values for different vertices. There are similar variables for fragment shaders, i.e., variables that have different values for each fragment. However, they are different from attributes because they are not specified by a mesh (i.e. a list of triangles). They are also different from varyings because they are not set explicitly by the vertex shader.

Specifically, a four-dimensional vector gl_FragCoord is available containing the screen (or: window) coordinates of the fragment that is processed; see Section “Vertex Transformations” for the description of the screen coordinate system.

Moreover, a boolean variable gl_FrontFacing is provided that specifies whether the front face or the back face of a triangle is being rendered. Front faces usually face the “outside” of a model and back faces face the “inside” of a model; however, there is no clear outside or inside if the model is not a closed surface. Usually, the surface normal vectors point in the direction of the front face, but this is not required. In fact, front faces and back faces are specified by the order of the vertices in each triangle: if the vertices appear in counter-clockwise order on the screen, the front face is visible; if they appear in clockwise order, the back face is visible. An application is shown in Section “Cutaways”.

Summary

Congratulations, you have reached the end of this tutorial! We have seen:

- The list of built-in attributes in Unity: gl_Vertex, gl_Color, gl_Normal, gl_MultiTexCoord0, gl_MultiTexCoord1, and the special Tangent.

- How to visualize these attributes (or any other value) by setting components of the output fragment color.

- The two additional special variables that are available in fragment programs: gl_FragCoord and gl_FrontFacing.

Further Reading

If you still want to know more

- about the data flow in vertex and fragment shaders, you should read the description in Section “OpenGL ES 2.0 Pipeline”.

- about operations and functions for vectors, you should read Section “Vector and Matrix Operations”.

Shading in World Space

This tutorial introduces uniform variables. It is based on Section “Minimal Shader”, Section “RGB Cube”, and Section “Debugging of Shaders”.

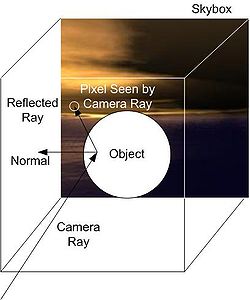

In this tutorial we will look at a shader that changes the fragment color depending on its position in the world. The concept is not too complicated; however, there are extremely important applications, e.g. shading with lights and environment maps. We will also have a look at shaders in the real world; i.e., what is necessary to enable non-programmers to use your shaders?

Transforming from Object to World Space

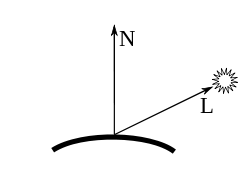

As mentioned in Section “Debugging of Shaders”, the attribute gl_Vertex specifies object coordinates, i.e. coordinates in the local object (or model) space of a mesh. The object space (or object coordinate system) is specific to each game object; however, all game objects are transformed into one common coordinate system — the world space.

If a game object is put directly into the world space, the object-to-world transformation is specified by the Transform component of the game object. To see it, select the object in the Scene View or the Hierarchy View and then find the Transform component in the Inspector View. There are parameters for “Position”, “Rotation” and “Scale” in the Transform component, which specify how vertices are transformed from object coordinates to world coordinates. (If a game object is part of a group of objects, which is shown in the Hierarchy View by means of indentation, then the Transform component only specifies the transformation from object coordinates of a game object to the object coordinates of the parent. In this case, the actual object-to-world transformation is given by the combination of the transformation of an object with the transformations of its parent, grandparent, etc.) The transformations of vertices by translations, rotations and scalings, as well as the combination of transformations and their representation as 4×4 matrices, are discussed in Section “Vertex Transformations”.
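To illustrate how Position, Rotation and Scale combine, here is a plain-Python sketch. It is not Unity's API, and every helper name is hypothetical. It assumes the usual order (scale first, then rotate, then translate), builds each model matrix as translation × rotation × scaling, and multiplies a parent's matrix from the left for a child object. To keep it short, it only rotates about the y axis, whereas a Transform can rotate about all three axes:

```python
import math

def matmul(a, b):
    """Product of two 4x4 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def transform(m, v):
    """Apply a 4x4 matrix to a homogeneous column vector (x, y, z, w)."""
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(4))

def translation(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]

def rotation_y(degrees):
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return [[c, 0, s, 0], [0, 1, 0, 0], [-s, 0, c, 0], [0, 0, 0, 1]]

def scaling(sx, sy, sz):
    return [[sx, 0, 0, 0], [0, sy, 0, 0], [0, 0, sz, 0], [0, 0, 0, 1]]

def trs(position, y_rotation_degrees, scale):
    """Scale first, then rotate, then translate (M = T * R * S)."""
    return matmul(translation(*position),
                  matmul(rotation_y(y_rotation_degrees), scaling(*scale)))

parent = trs((10.0, 0.0, 0.0), 0.0, (1.0, 1.0, 1.0))
child = trs((0.0, 2.0, 0.0), 90.0, (2.0, 2.0, 2.0))  # relative to the parent
object_to_world = matmul(parent, child)              # parent applied last

vertex = (1.0, 0.0, 0.0, 1.0)  # like gl_Vertex: w = 1 for points
print(transform(object_to_world, vertex))  # approximately (10.0, 2.0, -2.0, 1.0)
```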

Back to our example: the transformation from object space to world space is put into a 4×4 matrix, which is also known as “model matrix” (since this transformation is also known as “model transformation”). This matrix is available in the uniform variable unity_ObjectToWorld (in older versions of Unity it is called _Object2World), which is defined and used in the following shader:

```
Shader "GLSL shading in world space" {
   SubShader {
      Pass {
         GLSLPROGRAM

         uniform mat4 unity_ObjectToWorld;
            // definition of a Unity-specific uniform variable

         #ifdef VERTEX

         varying vec4 position_in_world_space;

         void main()
         {
            position_in_world_space = unity_ObjectToWorld * gl_Vertex;
               // transformation of gl_Vertex from object coordinates
               // to world coordinates;
            gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
         }

         #endif

         #ifdef FRAGMENT

         varying vec4 position_in_world_space;

         void main()
         {
            float dist = distance(position_in_world_space,
               vec4(0.0, 0.0, 0.0, 1.0));
               // computes the distance between the fragment position
               // and the origin (the 4th coordinate should always be
               // 1 for points).

            if (dist < 5.0)
            {
               gl_FragColor = vec4(0.0, 1.0, 0.0, 1.0);
                  // color near origin
            }
            else
            {
               gl_FragColor = vec4(0.3, 0.3, 0.3, 1.0);
                  // color far from origin
            }
         }

         #endif

         ENDGLSL
      }
   }
}
```

Note that this shader makes sure that the definition of the uniform is included in both the vertex and the fragment shader (although this particular fragment shader doesn't need it). This is similar to the definition of varyings discussed in Section “RGB Cube”.

Usually, an OpenGL application has to set the value of uniform variables; however, Unity takes care of always setting the correct value of predefined uniform variables such as unity_ObjectToWorld; thus, we don't have to worry about it.

This shader transforms the vertex position to world space and gives it to the fragment shader in a varying. For the fragment shader the varying variable contains the interpolated position of the fragment in world coordinates. Based on the distance of this position to the origin of the world coordinate system, one of two colors is set. Thus, if you move an object with this shader around in the editor it will turn green near the origin of the world coordinate system. Farther away from the origin it will turn dark grey.
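The per-fragment decision can be replayed on the CPU. The following Python sketch uses illustrative function and constant names that are not part of Unity, and it assumes Python 3.8 or later for math.dist. It computes the distance of a homogeneous world-space position to the origin, as distance() does in the fragment shader, and picks one of the two colors:

```python
import math

GREEN = (0.0, 1.0, 0.0, 1.0)
DARK_GRAY = (0.3, 0.3, 0.3, 1.0)

def fragment_color(position_in_world_space, radius=5.0):
    """Mimic the fragment shader: green within `radius` of the world origin,
    dark gray elsewhere. Points have w = 1, so the w components cancel in
    the distance, just as in the GLSL code."""
    origin = (0.0, 0.0, 0.0, 1.0)
    dist = math.dist(position_in_world_space, origin)
    return GREEN if dist < radius else DARK_GRAY

print(fragment_color((3.0, 0.0, 4.0, 1.0)))  # distance 5.0 is not < 5.0: dark gray
print(fragment_color((1.0, 2.0, 2.0, 1.0)))  # distance 3.0: green
```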

More Unity-Specific Uniforms

There are, in fact, several predefined uniform variables similar to unity_ObjectToWorld. Here is a short list (including unity_ObjectToWorld) of uniforms that appear in the shader code of several tutorials:

```glsl
// The following built-in uniforms (except _LightColor0 and
// _LightMatrix0) are also defined in "UnityCG.glslinc",
// i.e. one could #include "UnityCG.glslinc"
uniform vec4 _Time, _SinTime, _CosTime; // time values from Unity
uniform vec4 _ProjectionParams;
   // x = 1 or -1 (-1 if projection is flipped)
   // y = near plane; z = far plane; w = 1/far plane
uniform vec4 _ScreenParams;
   // x = width; y = height; z = 1 + 1/width; w = 1 + 1/height
uniform vec4 unity_Scale; // w = 1/scale; see unity_WorldToObject
uniform vec3 _WorldSpaceCameraPos;
uniform mat4 unity_ObjectToWorld; // model matrix
uniform mat4 unity_WorldToObject; // inverse model matrix
   // (all but the bottom-right element have to be scaled
   // with unity_Scale.w if scaling is important)
uniform vec4 _LightPositionRange; // xyz = pos, w = 1/range
uniform vec4 _WorldSpaceLightPos0;
   // position or direction of light source
uniform vec4 _LightColor0; // color of light source
uniform mat4 _LightMatrix0; // matrix to light space
```

As the comments suggest, instead of defining all these uniforms (except _LightColor0 and _LightMatrix0), you could also include the file UnityCG.glslinc. However, for some unknown reason _LightColor0 and _LightMatrix0 are not included in this file; thus, we have to define them separately:

```glsl
#include "UnityCG.glslinc"
uniform vec4 _LightColor0;
uniform mat4 _LightMatrix0;
```

Unity does not always update all of these uniforms. In particular, _WorldSpaceLightPos0, _LightColor0, and _LightMatrix0 are only set correctly for shader passes that are tagged appropriately, e.g. with Tags {"LightMode" = "ForwardBase"} as the first line in the Pass {...} block; see also Section “Diffuse Reflection”.

More OpenGL-Specific Uniforms

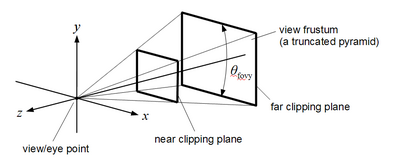

Another class of built-in uniforms is defined for the OpenGL compatibility profile, for example the mat4 matrix gl_ModelViewProjectionMatrix, which is equivalent to the matrix product gl_ProjectionMatrix * gl_ModelViewMatrix of two other built-in uniforms. The corresponding transformations are described in detail in Section “Vertex Transformations”.

As you can see in the shader above, these uniforms don't have to be defined; they are always available in GLSL shaders in Unity. If you had to define them, the definitions would look like this:

```glsl
uniform mat4 gl_ModelViewMatrix;
uniform mat4 gl_ProjectionMatrix;
uniform mat4 gl_ModelViewProjectionMatrix;
uniform mat4 gl_TextureMatrix[gl_MaxTextureCoords];
uniform mat3 gl_NormalMatrix;
   // transpose of the inverse of gl_ModelViewMatrix
uniform mat4 gl_ModelViewMatrixInverse;
uniform mat4 gl_ProjectionMatrixInverse;
uniform mat4 gl_ModelViewProjectionMatrixInverse;
uniform mat4 gl_TextureMatrixInverse[gl_MaxTextureCoords];
uniform mat4 gl_ModelViewMatrixTranspose;
uniform mat4 gl_ProjectionMatrixTranspose;
uniform mat4 gl_ModelViewProjectionMatrixTranspose;
uniform mat4 gl_TextureMatrixTranspose[gl_MaxTextureCoords];
uniform mat4 gl_ModelViewMatrixInverseTranspose;
uniform mat4 gl_ProjectionMatrixInverseTranspose;
uniform mat4 gl_ModelViewProjectionMatrixInverseTranspose;
uniform mat4 gl_TextureMatrixInverseTranspose[gl_MaxTextureCoords];

struct gl_LightModelParameters { vec4 ambient; };
uniform gl_LightModelParameters gl_LightModel;
...
```

In fact, the compatibility profile of OpenGL defines even more uniforms; see Chapter 7 of the “OpenGL Shading Language 4.10.6 Specification” available at Khronos' OpenGL page. Unity supports many of them but not all.

Some of these uniforms are arrays, e.g. gl_TextureMatrix. In fact, an array of matrices gl_TextureMatrix[0], gl_TextureMatrix[1], ..., gl_TextureMatrix[gl_MaxTextureCoords - 1] is available, where gl_MaxTextureCoords is a built-in integer constant.

Computing the View Matrix

Traditionally, many computations are done in view space, which is just a rotated and translated version of world space (see Section “Vertex Transformations” for the details). Therefore, OpenGL offers only the product of the model matrix $M_{\text{object}\to\text{world}}$ and the view matrix $M_{\text{world}\to\text{view}}$, i.e. the model-view matrix $M_{\text{object}\to\text{view}}$, which is available in the uniform gl_ModelViewMatrix. The view matrix on its own is not available, and Unity doesn't provide it either.

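However, unity_ObjectToWorld is just the model matrix and unity_WorldToObject is the inverse model matrix (except that all but the bottom-right element have to be scaled by unity_Scale.w). Thus, we can easily compute the view matrix. The mathematics looks like this:

$$M_{\text{world}\to\text{view}} = M_{\text{object}\to\text{view}}\,M_{\text{world}\to\text{object}} = M_{\text{object}\to\text{view}}\,M_{\text{object}\to\text{world}}^{-1}$$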

In other words, the view matrix is the product of the model-view matrix and the inverse model matrix (which is unity_WorldToObject * unity_Scale.w except for the bottom-right element, which is 1). Assuming that we have defined the uniforms unity_WorldToObject and unity_Scale, we can compute the view matrix this way in GLSL:

```glsl
mat4 modelMatrixInverse = unity_WorldToObject * unity_Scale.w;
modelMatrixInverse[3][3] = 1.0;
mat4 viewMatrix = gl_ModelViewMatrix * modelMatrixInverse;
```
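To see that multiplying the model-view matrix by the inverse model matrix leaves just the view matrix, here is a plain-Python sketch (not GLSL). It assumes a model matrix made only of a translation and a uniform scaling, so its inverse can be written down directly instead of being reconstructed from unity_WorldToObject and unity_Scale. The view matrix is an arbitrary rotation plus translation chosen for illustration, and all helper names are hypothetical:

```python
import math

def matmul(a, b):
    """Product of two 4x4 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def translation(tx, ty, tz):
    return [[1.0, 0.0, 0.0, tx], [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz], [0.0, 0.0, 0.0, 1.0]]

def uniform_scaling(s):
    return [[s, 0.0, 0.0, 0.0], [0.0, s, 0.0, 0.0],
            [0.0, 0.0, s, 0.0], [0.0, 0.0, 0.0, 1.0]]

def rotation_y(radians):
    c, s = math.cos(radians), math.sin(radians)
    return [[c, 0.0, s, 0.0], [0.0, 1.0, 0.0, 0.0],
            [-s, 0.0, c, 0.0], [0.0, 0.0, 0.0, 1.0]]

# Model matrix (object -> world): scale by 2, then move to (3, 0, -1).
model = matmul(translation(3.0, 0.0, -1.0), uniform_scaling(2.0))
# Its inverse (world -> object) undoes both steps in reverse order.
model_inverse = matmul(uniform_scaling(0.5), translation(-3.0, 0.0, 1.0))

# Hypothetical view matrix (world -> view) for illustration.
view = matmul(rotation_y(0.3), translation(0.0, -1.0, -5.0))

# What OpenGL provides: the model-view matrix (object -> view).
model_view = matmul(view, model)

# Recover the view matrix as in the GLSL code: model-view * inverse model.
recovered_view = matmul(model_view, model_inverse)

max_error = max(abs(recovered_view[i][j] - view[i][j])
                for i in range(4) for j in range(4))
print(max_error < 1e-12)  # True
```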

User-Specified Uniforms: Shader Properties

There is one more important type of uniform variable: uniforms that can be set by the user. In Unity, these are called shader properties. You can think of them as parameters of the shader. A shader without parameters is usually used only by its programmer because even the smallest necessary change requires some programming. On the other hand, a shader using parameters with descriptive names can be used by other people, even non-programmers, e.g. CG artists. Imagine you are in a game development team and a CG artist asks you to adapt your shader for each of 100 design iterations. It should be obvious that a few parameters, which even a CG artist can play with, might save you a lot of time. Also, imagine you want to sell your shader: parameters will often dramatically increase the value of your shader.

Since Unity's ShaderLab reference describes shader properties quite well, here is only an example of how to use them in our shader. We first declare the properties and then define uniforms of the same names and corresponding types.

```
Shader "GLSL shading in world space" {
   Properties {
      _Point ("a point in world space", Vector) = (0., 0., 0., 1.0)
      _DistanceNear ("threshold distance", Float) = 5.0
      _ColorNear ("color near to point", Color) = (0.0, 1.0, 0.0, 1.0)
      _ColorFar ("color far from point", Color) = (0.3, 0.3, 0.3, 1.0)
   }

   SubShader {
      Pass {
         GLSLPROGRAM

         // uniforms corresponding to properties
         uniform vec4 _Point;
         uniform float _DistanceNear;
         uniform vec4 _ColorNear;
         uniform vec4 _ColorFar;

         #include "UnityCG.glslinc"
            // defines unity_ObjectToWorld and unity_WorldToObject

         varying vec4 position_in_world_space;

         #ifdef VERTEX

         void main()
         {
            mat4 modelMatrix = unity_ObjectToWorld;

            position_in_world_space = modelMatrix * gl_Vertex;
            gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
         }

         #endif

         #ifdef FRAGMENT

         void main()
         {
            float dist = distance(position_in_world_space, _Point);

            if (dist < _DistanceNear)
            {
               gl_FragColor = _ColorNear;
            }
            else
            {
               gl_FragColor = _ColorFar;
            }
         }

         #endif

         ENDGLSL
      }
   }
}
```

With these parameters, a non-programmer can modify the effect of our shader. This is nice; however, the properties of the shader (and in fact uniforms in general) can also be set by scripts! For example, a JavaScript attached to the game object that is using the shader can set the properties with these lines:

```javascript
renderer.sharedMaterial.SetVector("_Point",
   Vector4(1.0, 0.0, 0.0, 1.0));
renderer.sharedMaterial.SetFloat("_DistanceNear", 10.0);
renderer.sharedMaterial.SetColor("_ColorNear",
   Color(1.0, 0.0, 0.0));
renderer.sharedMaterial.SetColor("_ColorFar",
   Color(1.0, 1.0, 1.0));
```

Use sharedMaterial if you want to change the parameters for all objects that use this material, and material if you want to change the parameters of only one object. With scripting you could, for example, set _Point to the position of another object (i.e. the position of its Transform component). In this way, you can specify a point just by moving another object around in the editor. In order to write such a script, select Create > JavaScript in the Project View and copy & paste this code:

```javascript
@script ExecuteInEditMode() // make sure to run in edit mode

var other : GameObject; // another user-specified object

function Update () // this function is called for every frame
{
   if (null != other) // has the user specified an object?
   {
      renderer.sharedMaterial.SetVector("_Point",
         other.transform.position); // set the shader property
         // _Point to the position of the other object
   }
}
```

Then, you should attach the script to the object with the shader and drag & drop another object to the other variable of the script in the Inspector View.
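The difference between sharedMaterial and material can be pictured with a toy model. In the following Python sketch, Material and Renderer are hypothetical stand-ins, not Unity classes. The sketch assumes the behavior described in Unity's documentation of Renderer.material: accessing material clones the shared material for that one renderer, which uses the clone from then on:

```python
import copy

class Material:
    """Hypothetical stand-in for a Unity material: a bag of shader properties."""
    def __init__(self, **properties):
        self.properties = dict(properties)

    def set(self, name, value):
        self.properties[name] = value

class Renderer:
    """Hypothetical stand-in for a renderer. `shared_material` may be shared
    with other renderers; `material` clones it on first access."""
    def __init__(self, shared_material):
        self.shared_material = shared_material
        self._owns_copy = False

    @property
    def material(self):
        if not self._owns_copy:
            self.shared_material = copy.deepcopy(self.shared_material)
            self._owns_copy = True
        return self.shared_material

asset = Material(_DistanceNear=5.0)
a, b = Renderer(asset), Renderer(asset)

a.shared_material.set("_DistanceNear", 10.0)          # affects both renderers
print(b.shared_material.properties["_DistanceNear"])  # 10.0

a.material.set("_DistanceNear", 2.0)                  # affects only renderer a
print(a.shared_material.properties["_DistanceNear"])  # 2.0
print(b.shared_material.properties["_DistanceNear"])  # 10.0
```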

Summary

Congratulations, you made it! (In case you wonder: yes, I'm also talking to myself here. ;) We discussed:

- How to transform a vertex into world coordinates.

- The most important Unity-specific uniforms that are supported by Unity.

- The most important OpenGL-specific uniforms that are supported by Unity.

- How to make a shader more useful and valuable by adding shader properties.

Further Reading

If you want to know more

- about vector and matrix operations (e.g. the distance() function), you should read Section “Vector and Matrix Operations”.

- about the standard vertex transformations, e.g. the model matrix and the view matrix, you should read Section “Vertex Transformations”.

- about the application of transformation matrices to points and directions, you should read Section “Applying Matrix Transformations”.

- about the specification of shader properties, you should read Unity's documentation about “ShaderLab syntax: Properties”.

Cutaways

This tutorial covers discarding fragments, determining whether the front face or back face is rendered, and front-face and back-face culling. This tutorial assumes that you are familiar with varying variables as discussed in Section “RGB Cube”.

The main theme of this tutorial is to cut away triangles or fragments even though they are part of a mesh that is being rendered. The two main reasons are: we want to look through a triangle or fragment (as in the case of the roof in the drawing to the left, which is only partly cut away), or we know that a triangle isn't visible anyway; thus, we can save some performance by not processing it. OpenGL supports these situations in several ways; we will discuss two of them.

Very Cheap Cutaways

The following shader is a very cheap way of cutting away parts of a mesh: all fragments are cut away that have a positive y coordinate in object coordinates (i.e. in the coordinate system in which it was modeled; see Section “Vertex Transformations” for details about coordinate systems). Here is the code:

```
Shader "GLSL shader using discard" {
   SubShader {
      Pass {
         Cull Off // turn off triangle culling, alternatives are:
         // Cull Back (or nothing): cull only back faces
         // Cull Front : cull only front faces

         GLSLPROGRAM

         varying vec4 position_in_object_coordinates;

         #ifdef VERTEX

         void main()
         {
            position_in_object_coordinates = gl_Vertex;
            gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
         }

         #endif

         #ifdef FRAGMENT

         void main()
         {
            if (position_in_object_coordinates.y > 0.0)
            {
               discard; // drop the fragment if y coordinate > 0
            }
            if (gl_FrontFacing) // are we looking at a front face?
            {
               gl_FragColor = vec4(0.0, 1.0, 0.0, 1.0); // yes: green
            }
            else
            {
               gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0); // no: red
            }
         }

         #endif

         ENDGLSL
      }
   }
}
```

When you apply this shader to any of the default objects, the shader will cut away half of them. This is a very cheap way of producing hemispheres or open cylinders.
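The logic of this fragment shader can be traced for individual fragments with a Python sketch. The name shade_fragment is hypothetical, and returning None stands in for discard, which writes nothing to the framebuffer:

```python
GREEN = (0.0, 1.0, 0.0, 1.0)
RED = (1.0, 0.0, 0.0, 1.0)

def shade_fragment(position_in_object_coordinates, front_facing):
    """Mimic the fragment shader: None for a discarded fragment,
    otherwise green for front faces and red for back faces."""
    x, y, z, w = position_in_object_coordinates
    if y > 0.0:
        return None  # corresponds to `discard;`
    return GREEN if front_facing else RED

print(shade_fragment((0.0, 0.4, 0.3, 1.0), True))    # None: upper half is cut away
print(shade_fragment((0.0, -0.4, 0.3, 1.0), True))   # lower half, outside: green
print(shade_fragment((0.0, -0.4, 0.3, 1.0), False))  # lower half, inside: red
```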

Discarding Fragments

Let's first focus on the discard instruction in the fragment shader. This instruction basically just discards the processed fragment. (This was called a fragment “kill” in earlier shading languages; I can understand that the fragments prefer the term “discard”.) Depending on the hardware, this can be quite an expensive technique in the sense that rendering might perform considerably worse as soon as there is one shader that includes a discard instruction (regardless of how many fragments are actually discarded, the mere presence of the instruction may result in the deactivation of some important optimizations). Therefore, you should avoid this instruction whenever possible, in particular when you run into performance problems.

One more note: the condition for the fragment discard includes only an object coordinate. The consequence is that you can rotate and move the object in any way and the cutaway part will always rotate and move with the object. You might want to check what cutting in world space looks like: change the vertex and fragment shader such that the world coordinate is used in the condition for the fragment discard. Tip: see Section “Shading in World Space” for how to transform the vertex into world space.

Better Cutaways

If you are not(!) familiar with scripting in Unity, you might try the following idea to improve the shader: change it such that fragments are discarded if the y coordinate is greater than some threshold variable. Then introduce a shader property to allow the user to control this threshold. Tip: see Section “Shading in World Space” for a discussion of shader properties.

If you are familiar with scripting in Unity, you could try this idea: write a script for an object that takes a reference to another sphere object and assigns (using renderer.sharedMaterial.SetMatrix()) the inverse model matrix (renderer.worldToLocalMatrix) of that sphere object to a mat4 uniform variable of the shader. In the shader, compute the position of the fragment in world coordinates and apply the inverse model matrix of the other sphere object to the fragment position. Now you have the position of the fragment in the local coordinate system of the other sphere object; here, it is easy to test whether the fragment is inside the sphere or not because in this coordinate system all spheres are centered around the origin with radius 0.5. Discard the fragment if it is inside the other sphere object. The resulting script and shader can cut away points from the surface of any object with the help of a cutting sphere that can be manipulated interactively in the editor like any other sphere.
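For the script-based assignment, the inside test in the fragment shader boils down to one matrix-vector product and one length comparison. The Python sketch below assumes, for simplicity, a cutting sphere that is only translated and uniformly scaled (no rotation), so its inverse model matrix can be written down directly. In Unity you would instead pass renderer.worldToLocalMatrix as described above. All function names are hypothetical:

```python
import math

def world_to_local_matrix(center, scale):
    """Hypothetical inverse model matrix of a cutting sphere that was only
    translated to `center` and uniformly scaled by `scale` (no rotation)."""
    cx, cy, cz = center
    inv = 1.0 / scale
    return [[inv, 0.0, 0.0, -cx * inv],
            [0.0, inv, 0.0, -cy * inv],
            [0.0, 0.0, inv, -cz * inv],
            [0.0, 0.0, 0.0, 1.0]]

def transform(m, v):
    """Apply a 4x4 matrix to a homogeneous column vector."""
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(4))

def inside_cutting_sphere(position_in_world_space, world_to_local):
    """True if the fragment should be discarded: in the sphere's local
    coordinates, the default sphere has radius 0.5 around the origin."""
    x, y, z, _ = transform(world_to_local, position_in_world_space)
    return math.sqrt(x * x + y * y + z * z) < 0.5

# A sphere at (2, 0, 0) scaled by 4 has radius 2 in world space.
cutter = world_to_local_matrix(center=(2.0, 0.0, 0.0), scale=4.0)
print(inside_cutting_sphere((3.0, 0.0, 0.0, 1.0), cutter))  # True: 1 unit from center
print(inside_cutting_sphere((5.0, 0.0, 0.0, 1.0), cutter))  # False: 3 units away
```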

Distinguishing between Front and Back Faces

A special boolean variable gl_FrontFacing is available in the fragment shader that specifies whether we are looking at the front face of a triangle. Usually, the front faces are facing the outside of a mesh and the back faces the inside. (Just as the surface normal vector usually points to the outside.) However, the actual way front and back faces are distinguished is the order of the vertices in a triangle: if the camera sees the vertices of a triangle in counter-clockwise order, it sees the front face. If it sees the vertices in clockwise order, it sees the back face.
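The sign of a triangle's signed area in screen coordinates tells whether the camera sees its vertices in counter-clockwise or clockwise order. Here is a minimal Python sketch. It assumes 2D screen coordinates with x to the right and y up, and OpenGL's default convention that counter-clockwise triangles are front-facing. The function names are hypothetical:

```python
def signed_area(p0, p1, p2):
    """Twice the signed area of a 2D triangle (x right, y up).
    Positive means the vertices are in counter-clockwise order."""
    (x0, y0), (x1, y1), (x2, y2) = p0, p1, p2
    return (x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0)

def is_front_facing(p0, p1, p2):
    """Counter-clockwise on screen means front face."""
    return signed_area(p0, p1, p2) > 0.0

print(is_front_facing((0.0, 0.0), (1.0, 0.0), (0.0, 1.0)))  # True: counter-clockwise
print(is_front_facing((0.0, 0.0), (0.0, 1.0), (1.0, 0.0)))  # False: clockwise
```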

Our fragment shader checks the variable gl_FrontFacing and assigns green to the output fragment color if gl_FrontFacing is true (i.e. the fragment is part of a front-facing triangle, i.e. it is facing the outside), and red if gl_FrontFacing is false (i.e. the fragment is part of a back-facing triangle, i.e. it is facing the inside). In fact, gl_FrontFacing allows you to render the two faces of a surface not only in different colors but in completely different styles.

Note that basing the definition of front and back faces on the order of vertices in a triangle can cause problems when vertices are mirrored, i.e. scaled with a negative factor. Unity tries to take care of these problems; thus, just specifying a negative scaling in the Transform component of the game object will usually not cause this problem. However, since Unity has no control over what we are doing in the vertex shader, we can still turn the inside out by multiplying one (or three) of the coordinates with -1, e.g. by assigning gl_Position this way in the vertex shader:

gl_Position = gl_ModelViewProjectionMatrix * vec4(-gl_Vertex.x, gl_Vertex.y, gl_Vertex.z, 1.0);

This just multiplies the x coordinate by -1. For a sphere, you might think that nothing happens, but it actually turns front faces into back faces and vice versa; thus, now the inside is green and the outside is red. (By the way, this problem also affects the surface normal vector.) Thus, be careful with mirrors!
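The signed area of a triangle shows why mirroring swaps front and back faces: negating one coordinate flips its sign. The Python sketch below is a simplification. It mirrors 2D screen positions directly instead of mirroring object coordinates before the projection, and the function names are hypothetical:

```python
def signed_area(p0, p1, p2):
    """Twice the signed area of a 2D triangle; positive = counter-clockwise."""
    (x0, y0), (x1, y1), (x2, y2) = p0, p1, p2
    return (x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0)

def mirror_x(p):
    """Multiply the x coordinate by -1, like -gl_Vertex.x in the vertex shader."""
    x, y = p
    return (-x, y)

triangle = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
mirrored = [mirror_x(p) for p in triangle]

print(signed_area(*triangle))  # 1.0: counter-clockwise, front face
print(signed_area(*mirrored))  # -1.0: clockwise, now the back face
```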

Culling of Front or Back Faces

Finally, the shader (more specifically the shader pass) includes the line Cull Off. This line has to come before GLSLPROGRAM because it is not in GLSL. In fact, it is the command of Unity's ShaderLab to turn off any triangle culling. This is necessary because by default back faces are culled away as if the line Cull Back was specified. You can also specify the culling of front faces with Cull Front. The reason why culling of back-facing triangles is active by default is that the inside of objects is usually invisible; thus, back-face culling can save quite some performance by not rasterizing these triangles, as explained next. Of course, we were able to see the inside with our shader because we have discarded some fragments; thus, we had to deactivate back-face culling.

How does culling work? Triangles and vertices are processed as usual. However, after the viewport transformation of the vertices to screen coordinates (see Section “Vertex Transformations”) the graphics processor determines whether the vertices of a triangle appear in counter-clockwise order or in clockwise order on the screen. Based on this test, each triangle is considered a front-facing or a back-facing triangle. If it is front-facing and culling is activated for front-facing triangles, it will be discarded, i.e., its processing stops and it is not rasterized. The same applies if it is back-facing and culling is activated for back-facing triangles. Otherwise, the triangle will be processed as usual.
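The culling decision can be summarized in a few lines. In this Python sketch, the strings "Back", "Front" and "Off" mirror the ShaderLab Cull settings, and the function names are hypothetical. The winding test uses the sign of the signed area in screen coordinates (y up, counter-clockwise = front face):

```python
def signed_area(p0, p1, p2):
    """Twice the signed area of a 2D triangle; positive = counter-clockwise."""
    (x0, y0), (x1, y1), (x2, y2) = p0, p1, p2
    return (x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0)

def is_culled(screen_triangle, cull_mode="Back"):
    """Decide whether a triangle is dropped before rasterization.
    "Back" is the default, like Cull Back in ShaderLab."""
    front_facing = signed_area(*screen_triangle) > 0.0
    if cull_mode == "Back":
        return not front_facing
    if cull_mode == "Front":
        return front_facing
    if cull_mode == "Off":
        return False
    raise ValueError("unknown cull mode: " + cull_mode)

ccw = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]  # front face
cw = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0)]   # back face
for mode in ("Back", "Front", "Off"):
    print(mode, is_culled(ccw, mode), is_culled(cw, mode))
# Back False True
# Front True False
# Off False False
```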

Summary

Congratulations, you have worked through another tutorial. (If you have tried one of the assignments: good job! I didn't yet.) We have looked at:

- How to discard fragments.

- How to render front-facing and back-facing triangles in different colors.

- How to deactivate the default culling of back faces.

- How to activate the culling of front faces.

Further Reading

If you still want to know more

- about the vertex transformations such as the model transformation from object to world coordinates or the viewport transformation to screen coordinates, you should read Section “Vertex Transformations”.

- about how to define shader properties, you should read Section “Shading in World Space”.

- about Unity's ShaderLab syntax for specifying culling, you should read Unity's ShaderLab reference about “Culling & Depth Testing”.

Transparency

This tutorial covers blending of fragments (i.e. compositing them) using GLSL shaders in Unity. It assumes that you are familiar with the concept of front and back faces as discussed in Section “Cutaways”.

More specifically, this tutorial is about rendering transparent objects, e.g. transparent glass, plastic, fabrics, etc. (More strictly speaking, these are actually semitransparent objects because they don't need to be perfectly transparent.) Transparent objects allow us to see through them; thus, their color “blends” with the color of whatever is behind them.

Blending

As mentioned in Section “OpenGL ES 2.0 Pipeline”, the fragment shader computes an RGBA color (i.e. red, green, blue, and alpha components in gl_FragColor) for each fragment (unless the fragment is discarded). The fragments are then processed as discussed in Section “Per-Fragment Operations”. One of the operations is the blending stage, which combines the color of the fragment (as specified in gl_FragColor), which is called the “source color”, with the color of the corresponding pixel that is already in the framebuffer, which is called the “destination color” (because the “destination” of the resulting blended color is the framebuffer).

Blending is a fixed-function stage, i.e. you can configure it but not program it. It is configured by specifying a blend equation. You can think of the blend equation as this definition of the resulting RGBA color:

vec4 result = SrcFactor * gl_FragColor + DstFactor * pixel_color;

where pixel_color is the RGBA color that is currently in the framebuffer and result is the blended result, i.e. the output of the blending stage. SrcFactor and DstFactor are configurable RGBA colors (of type vec4) that are multiplied component-wise with the fragment color and the pixel color. The values of SrcFactor and DstFactor are specified in Unity's ShaderLab syntax with this line:

Blend {code for SrcFactor} {code for DstFactor}

The most common codes for the two factors are summarized in the following table (more codes are mentioned in Unity's ShaderLab reference about blending):

| Code | Resulting Factor (SrcFactor or DstFactor) |
|---|---|
| One | vec4(1.0) |
| Zero | vec4(0.0) |
| SrcColor | gl_FragColor |
| SrcAlpha | vec4(gl_FragColor.a) |
| DstColor | pixel_color |
| DstAlpha | vec4(pixel_color.a) |
| OneMinusSrcColor | vec4(1.0) - gl_FragColor |
| OneMinusSrcAlpha | vec4(1.0 - gl_FragColor.a) |
| OneMinusDstColor | vec4(1.0) - pixel_color |
| OneMinusDstAlpha | vec4(1.0 - pixel_color.a) |

As discussed in Section “Vector and Matrix Operations”, vec4(1.0) is just a short way of writing vec4(1.0, 1.0, 1.0, 1.0). Also note that all components of all colors and factors in the blend equation are clamped between 0 and 1.
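To experiment with blend equations without a GPU, the table can be turned into a small Python model of the blending stage. This is a sketch, not Unity or OpenGL code. Colors are RGBA tuples, the blend codes are the ShaderLab names from the table, and inputs and result are clamped to [0, 1] as described above. The example uses an opacity of 0.25 only so that the printed numbers are exact in binary floating point:

```python
def clamp01(color):
    """Clamp every component to [0, 1]."""
    return tuple(min(max(x, 0.0), 1.0) for x in color)

def mul(a, b):
    """Component-wise product of two RGBA tuples."""
    return tuple(x * y for x, y in zip(a, b))

def add(a, b):
    """Component-wise sum of two RGBA tuples."""
    return tuple(x + y for x, y in zip(a, b))

def factor(code, src, dst):
    """RGBA factor for a ShaderLab blend code, following the table above."""
    factors = {
        "One": (1.0, 1.0, 1.0, 1.0),
        "Zero": (0.0, 0.0, 0.0, 0.0),
        "SrcColor": src,
        "SrcAlpha": (src[3],) * 4,
        "DstColor": dst,
        "DstAlpha": (dst[3],) * 4,
        "OneMinusSrcColor": tuple(1.0 - x for x in src),
        "OneMinusSrcAlpha": (1.0 - src[3],) * 4,
        "OneMinusDstColor": tuple(1.0 - x for x in dst),
        "OneMinusDstAlpha": (1.0 - dst[3],) * 4,
    }
    return factors[code]

def blend(src_code, dst_code, frag_color, pixel_color):
    """result = SrcFactor * gl_FragColor + DstFactor * pixel_color, clamped."""
    src = clamp01(frag_color)
    dst = clamp01(pixel_color)
    result = add(mul(factor(src_code, src, dst), src),
                 mul(factor(dst_code, src, dst), dst))
    return clamp01(result)

# Blend SrcAlpha OneMinusSrcAlpha: 25% opaque green over an opaque white pixel.
print(blend("SrcAlpha", "OneMinusSrcAlpha",
            (0.0, 1.0, 0.0, 0.25), (1.0, 1.0, 1.0, 1.0)))
# (0.75, 1.0, 0.75, 0.8125)
```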

Alpha Blending

One specific example for a blend equation is called “alpha blending”. In Unity, it is specified this way:

Blend SrcAlpha OneMinusSrcAlpha

which corresponds to:

vec4 result = vec4(gl_FragColor.a) * gl_FragColor + vec4(1.0 - gl_FragColor.a) * pixel_color;

This uses the alpha component of gl_FragColor as an opacity: the larger the alpha component (i.e. the more opaque the fragment color), the more of the fragment color is mixed into the result and the less of the pixel color in the framebuffer. A perfectly opaque fragment color (i.e. with an alpha component of 1) will completely replace the pixel color.

This blend equation is sometimes referred to as an “over” operation, i.e. “gl_FragColor over pixel_color”, since it corresponds to putting a layer of the fragment color with a specific opacity on top of the pixel color. (Think of a layer of colored glass or colored semitransparent plastic on top of something of another color.)

Due to the popularity of alpha blending, the alpha component of a color is often called opacity even if alpha blending is not employed. Moreover, note that in computer graphics a common formal definition of transparency is 1 − opacity.

Premultiplied Alpha Blending

There is an important variant of alpha blending: sometimes the color components of the fragment color have already been multiplied by its alpha component (“premultiplied alpha”). (You might think of it as a price that has VAT already included.) In this case, alpha should not be multiplied again (VAT should not be added again) and the correct blending is:

Blend One OneMinusSrcAlpha

which corresponds to:

vec4 result = vec4(1.0) * gl_FragColor + vec4(1.0 - gl_FragColor.a) * pixel_color;
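The VAT analogy can be checked numerically. The Python sketch below uses hypothetical function names. It compares the RGB components of straight alpha blending with those of premultiplied alpha blending, and shows what happens if a premultiplied color is blended with SrcAlpha OneMinusSrcAlpha, i.e. if alpha is applied twice:

```python
def alpha_blend(frag, pixel):
    """Blend SrcAlpha OneMinusSrcAlpha (straight, non-premultiplied alpha)."""
    a = frag[3]
    return tuple(a * f + (1.0 - a) * p for f, p in zip(frag, pixel))

def premultiplied_blend(frag, pixel):
    """Blend One OneMinusSrcAlpha (color already multiplied by alpha)."""
    a = frag[3]
    return tuple(f + (1.0 - a) * p for f, p in zip(frag, pixel))

def premultiply(color):
    r, g, b, a = color
    return (r * a, g * a, b * a, a)

straight = (0.0, 1.0, 0.0, 0.25)  # 25% opaque green
pixel = (1.0, 1.0, 1.0, 1.0)      # white framebuffer pixel

print(alpha_blend(straight, pixel)[:3])                       # (0.75, 1.0, 0.75)
print(premultiplied_blend(premultiply(straight), pixel)[:3])  # (0.75, 1.0, 0.75)
# Wrong: alpha applied twice ("VAT added again"), so the green is too dark.
print(alpha_blend(premultiply(straight), pixel)[:3])          # (0.75, 0.8125, 0.75)
```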

Additive Blending

Another example for a blending equation is:

Blend One One

This corresponds to:

vec4 result = vec4(1.0) * gl_FragColor + vec4(1.0) * pixel_color;

which just adds the fragment color to the color in the framebuffer. Note that the alpha component is not used at all; nonetheless, this blending equation is very useful for many kinds of transparent effects; for example, it is often used for particle systems when they represent fire or something else that is transparent and emits light. Additive blending is discussed in more detail in Section “Order-Independent Transparency”.
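Here is a minimal Python sketch of Blend One One (additive_blend is a hypothetical name). It accumulates three overlapping particles on a black background. Clamping makes the red component saturate at 1, and because all added colors are non-negative, the order of the particles does not change the result:

```python
def additive_blend(frag, pixel):
    """Blend One One: add the fragment color to the framebuffer color, then clamp."""
    return tuple(min(f + p, 1.0) for f, p in zip(frag, pixel))

background = (0.0, 0.0, 0.0, 1.0)
spark = (0.5, 0.25, 0.0, 1.0)  # an orange-ish particle

pixel = background
for _ in range(3):  # three overlapping particles
    pixel = additive_blend(spark, pixel)
print(pixel)  # (1.0, 0.75, 0.0, 1.0): red saturates at 1
```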

More examples of blend equations are given in Unity's ShaderLab reference about blending.

Shader Code

Here is a simple shader which uses alpha blending to render a green color with opacity 0.3:

Shader "GLSL shader using blending" {
   SubShader {
      Tags { "Queue" = "Transparent" }
         // draw after all opaque geometry has been drawn
      Pass {
         ZWrite Off // don't write to depth buffer
            // in order not to occlude other objects

         Blend SrcAlpha OneMinusSrcAlpha // use alpha blending

         GLSLPROGRAM

         #ifdef VERTEX

         void main()
         {
            gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
         }

         #endif

         #ifdef FRAGMENT

         void main()
         {
            gl_FragColor = vec4(0.0, 1.0, 0.0, 0.3);
               // the fourth component (alpha) is important:
               // this is semitransparent green
         }

         #endif

         ENDGLSL
      }
   }
}

Apart from the blend equation, which has been discussed above, there are only two lines that need more explanation: Tags { "Queue" = "Transparent" } and ZWrite Off.

ZWrite Off deactivates writing to the depth buffer. As explained in Section “Per-Fragment Operations”, the depth buffer stores the depth of the nearest fragment rendered so far, and the depth test discards any fragment that has a larger depth. For a transparent fragment, however, this is not what we want, since we can (at least potentially) see through it. Thus, transparent fragments should not occlude other fragments, and therefore writing to the depth buffer is deactivated. See also Unity's ShaderLab reference about culling and depth testing.
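The effect of ZWrite can be illustrated with a single-pixel C sketch. It assumes Unity's default depth comparison ZTest LEqual (a fragment passes if its depth is less than or equal to the stored depth); Pixel and depth_test are illustrative names only, and the color computation is omitted.

```c
#include <stdbool.h>

/* Sketch of the depth test for a single pixel, assuming Unity's default
 * ZTest LEqual. Pixel and depth_test are illustrative names only. */
typedef struct {
    float depth;          /* depth of the nearest fragment written so far */
    int fragments_drawn;  /* fragments that passed and would be blended */
} Pixel;

/* Returns true if the fragment passes the depth test. The stored depth is
 * only updated when zwrite is true (ZWrite On). */
bool depth_test(Pixel *p, float frag_depth, bool zwrite)
{
    if (frag_depth > p->depth)
        return false;           /* farther than the stored depth: discarded */
    if (zwrite)
        p->depth = frag_depth;  /* ZWrite On: later, farther fragments fail */
    p->fragments_drawn++;
    return true;
}
```

Consider a pixel cleared to depth 1.0 that receives a transparent fragment at depth 0.3 and then one at depth 0.6. With zwrite set to true, the second fragment is discarded although it should be visible through the first one; with zwrite set to false, both fragments are blended.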

The line Tags { "Queue" = "Transparent" } specifies that the meshes using this subshader are rendered after all the opaque meshes have been rendered. This is partly because we deactivate writing to the depth buffer: one consequence is that transparent fragments can be occluded by opaque fragments even though the opaque fragments are farther away. (Since a transparent fragment does not write its depth, an opaque fragment rendered later still passes the depth test and overwrites it.) To fix this problem, we first draw all opaque meshes (in Unity's “opaque queue”) before drawing all transparent meshes (in Unity's “transparent queue”). Whether a mesh is considered opaque or transparent depends on the tags of its subshader, as specified with the line Tags { "Queue" = "Transparent" }. More details about subshader tags are described in Unity's ShaderLab reference about subshader tags.
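The idea of render queues can be sketched as sorting draw calls by a queue number before rendering. This C sketch is a simplification under stated assumptions: the numbers follow Unity's named queues (Geometry = 2000 for opaque meshes, Transparent = 3000), while DrawCall and sort_draw_calls are illustrative names. Unity's actual renderer does considerably more than this.

```c
#include <stddef.h>
#include <stdlib.h>

/* Sketch of queue-based draw ordering. Queue numbers follow Unity's named
 * render queues (Geometry = 2000, Transparent = 3000); DrawCall and
 * sort_draw_calls are illustrative names only. */
typedef struct {
    const char *name;
    int queue;
} DrawCall;

/* qsort comparator: ascending queue number. */
static int by_queue(const void *x, const void *y)
{
    const DrawCall *a = x;
    const DrawCall *b = y;
    return (a->queue > b->queue) - (a->queue < b->queue);
}

/* Lower queues are rendered first, so all opaque meshes have written their
 * depth before any transparent mesh is blended on top of them. */
void sort_draw_calls(DrawCall *calls, size_t n)
{
    qsort(calls, n, sizeof calls[0], by_queue);
}
```

Given draw calls for "glass" (3000), "wall" (2000) and "floor" (2000), the two opaque meshes end up before the glass. The relative order of the two opaque meshes is unspecified here because qsort is not stable.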

It should be mentioned that this strategy of rendering transparent meshes with deactivated writing to the depth buffer does not always solve all problems. It works perfectly if the order in which fragments are blended does not matter; for example, if the fragment color is just added to the pixel color in the framebuffer, the order in which fragments are blended is not important; see Section “Order-Independent Transparency”. However, for other blending equations, e.g. alpha blending, the result will be different depending on the order in which fragments are blended. (If you look through almost opaque green glass at almost opaque red glass you will mainly see green, while you will mainly see red if you look through almost opaque red glass at almost opaque green glass. Similarly, blending almost opaque green color over almost opaque red color will be different from blending almost opaque red color over almost opaque green color.) In order to avoid artifacts, it is therefore advisable to use additive blending or (premultiplied) alpha blending with small opacities (in which case the destination factor DstFactor is close to 1 and therefore alpha blending is close to additive blending).
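The glass example can be checked numerically. The following C sketch assumes float RGB colors and an opacity passed separately; RGB, blend_over and blend_add are illustrative names. It compares alpha blending with additive blending (Blend SrcAlpha One) for two almost opaque layers.

```c
/* Sketch comparing alpha blending and additive blending for two almost
 * opaque layers, assuming float RGB colors. RGB, blend_over and blend_add
 * are illustrative names only. */
typedef struct { float r, g, b; } RGB;

/* Blend SrcAlpha OneMinusSrcAlpha with opacity alpha. */
RGB blend_over(RGB frag, float alpha, RGB pixel)
{
    RGB result = {
        alpha * frag.r + (1.0f - alpha) * pixel.r,
        alpha * frag.g + (1.0f - alpha) * pixel.g,
        alpha * frag.b + (1.0f - alpha) * pixel.b
    };
    return result;
}

/* Blend SrcAlpha One: DstFactor is One, so the pixel is never attenuated. */
RGB blend_add(RGB frag, float alpha, RGB pixel)
{
    RGB result = {
        alpha * frag.r + pixel.r,
        alpha * frag.g + pixel.g,
        alpha * frag.b + pixel.b
    };
    return result;
}
```

Over a black pixel with alpha 0.9, drawing red first and then green with blend_over gives about (0.09, 0.9, 0.0), i.e. mostly green, while the opposite order gives about (0.9, 0.09, 0.0), i.e. mostly red. With blend_add, both orders give (0.9, 0.9, 0.0).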

Including Back Faces

The previous shader works well with other objects, but it doesn't render the “inside” of the object. However, since we can see through the outside of a transparent object, we should also render the inside. As discussed in Section “Cutaways”, the inside can be rendered by deactivating culling with Cull Off. However, if we just deactivate culling, we might get into trouble: as discussed above, it often matters in which order transparent fragments are rendered, but without any culling, overlapping triangles from the inside and the outside might be rendered in an arbitrary order, which can lead to annoying rendering artifacts. Thus, we would like to make sure that the inside (which is usually farther away) is rendered before the outside. In Unity's ShaderLab this is achieved by specifying two passes, which are executed for the same mesh in the order in which they are defined:

Shader "GLSL shader using blending (including back faces)" {
   SubShader {
      Tags { "Queue" = "Transparent" }
         // draw after all opaque geometry has been drawn
      Pass {
         Cull Front // first pass renders only back faces
            // (the "inside")
         ZWrite Off // don't write to depth buffer
            // in order not to occlude other objects

         Blend SrcAlpha OneMinusSrcAlpha // use alpha blending

         GLSLPROGRAM

         #ifdef VERTEX

         void main()
         {
            gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
         }

         #endif

         #ifdef FRAGMENT

         void main()
         {
            gl_FragColor = vec4(1.0, 0.0, 0.0, 0.3);
               // the fourth component (alpha) is important:
               // this is semitransparent red
         }

         #endif

         ENDGLSL
      }

      Pass {
         Cull Back // second pass renders only front faces
            // (the "outside")
         ZWrite Off // don't write to depth buffer
            // in order not to occlude other objects

         Blend SrcAlpha OneMinusSrcAlpha
            // standard blend equation "source over destination"

         GLSLPROGRAM

         #ifdef VERTEX

         void main()
         {
            gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
         }

         #endif

         #ifdef FRAGMENT

         void main()
         {
            gl_FragColor = vec4(0.0, 1.0, 0.0, 0.3);
               // fourth component (alpha) is important:
               // this is semitransparent green
         }

         #endif

         ENDGLSL
      }
   }
}

In this shader, the first pass uses front-face culling (with Cull Front) to render the back faces (the inside) first. After that, the second pass uses back-face culling (with Cull Back) to render the front faces (the outside). This works perfectly for convex meshes (closed meshes without dents, e.g. spheres or cubes) and is often a good approximation for other meshes.

Summary

Congratulations, you made it through this tutorial! One interesting thing about rendering transparent objects is that it isn't just about blending but also requires knowledge about culling and the depth buffer. Specifically, we have looked at:

- What blending is and how it is specified in Unity.

- How a scene with transparent and opaque objects is rendered and how objects are classified as transparent or opaque in Unity.

- How to render the inside and outside of a transparent object, in particular how to specify two passes in Unity.

Further Reading

If you still want to know more

- about the OpenGL pipeline, you should read Section “OpenGL ES 2.0 Pipeline”.

- about per-fragment operations in the OpenGL pipeline (e.g. blending and the depth test), you should read Section “Per-Fragment Operations”.

- about front-face and back-face culling, you should read Section “Cutaways”.

- about how to specify culling and the depth buffer functionality in Unity, you should read Unity's ShaderLab reference about culling and depth testing.

- about how to specify blending in Unity, you should read Unity's ShaderLab reference about blending.

- about the render queues in Unity, you should read Unity's ShaderLab reference about subshader tags.

Order-Independent Transparency

This tutorial covers order-independent blending.

It continues the discussion in Section “Transparency” and solves some problems of standard transparency. If you haven't read that tutorial, you should read it first.

Order-Independent Blending

As noted in Section “Transparency”, the result of blending often (in particular for standard alpha blending) depends on the order in which triangles are rendered and therefore results in rendering artifacts if the triangles are not sorted from back to front (which they usually aren't). The term “order-independent transparency” describes various techniques to avoid this problem. One of these techniques is order-independent blending, i.e. the use of a blend equation that does not depend on the order in which triangles are rasterized. There are two basic possibilities: additive blending and multiplicative blending.

Additive Blending

The standard example of additive blending is a double exposure, as in the images in this section: colors are added such that it is impossible (or at least very hard) to say in which order the photos were taken. Additive blending can be characterized in terms of the blend equation introduced in Section “Transparency”:

vec4 result = SrcFactor * gl_FragColor + DstFactor * pixel_color;

where SrcFactor and DstFactor are determined by a line in Unity's ShaderLab syntax:

Blend {code for SrcFactor} {code for DstFactor}

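For additive blending, the code for DstFactor has to be One and the code for SrcFactor must not depend on the pixel color in the framebuffer; i.e., it can be One, SrcColor, SrcAlpha, OneMinusSrcColor, or OneMinusSrcAlpha. With these factors, each fragment simply adds a term to the pixel color that depends only on the fragment itself, and since addition is commutative, the order in which the fragments are blended does not matter.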
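The order independence can be checked by blending the same fragments in different orders. This C sketch assumes float RGBA colors and uses Blend SrcAlpha One as in the shader below; Color, blend_src_alpha_one and blend_sequence are illustrative names only.

```c
/* Sketch of additive blending with Blend SrcAlpha One for a sequence of
 * fragments, assuming float RGBA colors. Color, blend_src_alpha_one and
 * blend_sequence are illustrative names only. */
typedef struct { float r, g, b, a; } Color;

/* SrcFactor = SrcAlpha depends only on the fragment; DstFactor = One. */
Color blend_src_alpha_one(Color frag, Color pixel)
{
    Color result = {
        frag.a * frag.r + pixel.r,
        frag.a * frag.g + pixel.g,
        frag.a * frag.b + pixel.b,
        frag.a * frag.a + pixel.a
    };
    return result;
}

/* Blends n fragments into pixel in the order given by the index array. */
Color blend_sequence(const Color *frags, const int *order, int n, Color pixel)
{
    for (int i = 0; i < n; i++)
        pixel = blend_src_alpha_one(frags[order[i]], pixel);
    return pixel;
}
```

Whatever permutation is passed in order, the final color is the initial pixel color plus the sum of all SrcFactor * gl_FragColor terms, up to floating-point rounding.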

An example is:

Shader "GLSL shader using additive blending" {
   SubShader {
      Tags { "Queue" = "Transparent" }
         // draw after all opaque geometry has been drawn
      Pass {
         Cull Off // draw front and back faces
         ZWrite Off // don't write to depth buffer
            // in order not to occlude other objects
         Blend SrcAlpha One // additive blending

         GLSLPROGRAM

         #ifdef VERTEX

         void main()
         {
            gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
         }

         #endif

         #ifdef FRAGMENT

         void main()
         {
            gl_FragColor = vec4(1.0, 0.0, 0.0, 0.3);
         }

         #endif

         ENDGLSL
      }
   }
}

Multiplicative Blending

An example of multiplicative blending in photography is the use of multiple uniform grey filters: the order in which the filters are put onto a camera doesn't matter for the resulting attenuation of the image. In terms of the rasterization of triangles, the image corresponds to the contents of the framebuffer before the triangles are rasterized, while the filters correspond to the triangles.

When specifying multiplicative blending in Unity with the line

Blend {code for SrcFactor} {code for DstFactor}

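the code for SrcFactor has to be Zero and the code for DstFactor must depend on the fragment color; i.e., it can be SrcColor, SrcAlpha, OneMinusSrcColor, or OneMinusSrcAlpha. With these factors, each fragment multiplies the pixel color by a factor that depends only on the fragment itself, and since multiplication is commutative, the order in which the fragments are blended does not matter. A typical example of attenuating the background with the opacity specified by the alpha component of fragments uses OneMinusSrcAlpha as the code for DstFactor: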
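The grey-filter analogy can be reproduced with a short C sketch of Blend Zero OneMinusSrcAlpha. It assumes float RGBA colors; Color and blend_attenuate are illustrative names only.

```c
/* Sketch of multiplicative blending with Blend Zero OneMinusSrcAlpha,
 * assuming float RGBA colors. Color and blend_attenuate are illustrative
 * names only. */
typedef struct { float r, g, b, a; } Color;

/* SrcFactor = Zero: the fragment's color is ignored.
 * DstFactor = OneMinusSrcAlpha: only its alpha attenuates the pixel. */
Color blend_attenuate(Color frag, Color pixel)
{
    float dst = 1.0f - frag.a;
    Color result = { dst * pixel.r, dst * pixel.g, dst * pixel.b, dst * pixel.a };
    return result;
}
```

Two "filters" with alpha 0.5 and 0.75 scale a white pixel by 0.5 × 0.25 = 0.125 in either order, just like stacked grey filters on a camera.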

Shader "GLSL shader using multiplicative blending" {
   SubShader {
      Tags { "Queue" = "Transparent" }
         // draw after all opaque geometry has been drawn
      Pass {
         Cull Off // draw front and back faces
         ZWrite Off // don't write to depth buffer
            // in order not to occlude other objects
         Blend Zero OneMinusSrcAlpha // multiplicative blending
            // for attenuation by the fragment's alpha

         GLSLPROGRAM

         #ifdef VERTEX

         void main()
         {
            gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
         }

         #endif

         #ifdef FRAGMENT

         void main()
         {
            gl_FragColor = vec4(1.0, 0.0, 0.0, 0.3);
               // only A (alpha) is used
         }

         #endif

         ENDGLSL
      }
   }
}

Complete Shader Code

Finally, it makes good sense to combine multiplicative blending for the attenuation of the background and additive blending for the addition of colors of the triangles in one shader by combining the two passes that were presented above. This can be considered an approximation to alpha blending for small opacities, i.e. small values of alpha, if one ignores attenuation of colors of the triangle mesh by itself.
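How good the approximation is can be estimated with a C sketch that simulates both passes for several fragments covering one pixel and compares the result with ordinary alpha blending. It assumes float RGB colors and that all fragments belong to the same mesh (so pass 1 finishes before pass 2 starts); RGB, Fragment, two_pass and alpha_blend are illustrative names only.

```c
/* Sketch comparing the two-pass order-independent approach with ordinary
 * alpha blending for several fragments covering one pixel. RGB, Fragment,
 * two_pass and alpha_blend are illustrative names only. */
typedef struct { float r, g, b; } RGB;
typedef struct { RGB color; float alpha; } Fragment;

/* Pass 1 (Blend Zero OneMinusSrcAlpha) attenuates the background by every
 * fragment; pass 2 (Blend SrcAlpha One) adds every fragment's weighted
 * color. Neither loop depends on the order of the fragments. */
RGB two_pass(const Fragment *f, int n, RGB pixel)
{
    for (int i = 0; i < n; i++) {
        float k = 1.0f - f[i].alpha;
        pixel.r *= k;
        pixel.g *= k;
        pixel.b *= k;
    }
    for (int i = 0; i < n; i++) {
        pixel.r += f[i].alpha * f[i].color.r;
        pixel.g += f[i].alpha * f[i].color.g;
        pixel.b += f[i].alpha * f[i].color.b;
    }
    return pixel;
}

/* Reference: Blend SrcAlpha OneMinusSrcAlpha in array order. */
RGB alpha_blend(const Fragment *f, int n, RGB pixel)
{
    for (int i = 0; i < n; i++) {
        float a = f[i].alpha;
        pixel.r = a * f[i].color.r + (1.0f - a) * pixel.r;
        pixel.g = a * f[i].color.g + (1.0f - a) * pixel.g;
        pixel.b = a * f[i].color.b + (1.0f - a) * pixel.b;
    }
    return pixel;
}
```

For a single fragment, both functions compute the same color. For a red and then a green fragment, each with alpha 0.1, over white, two_pass gives about (0.91, 0.91, 0.81) and alpha_blend about (0.9, 0.91, 0.81). The difference of 0.01 = 0.1 × 0.1 in red is exactly the attenuation of the red fragment by the green one, i.e. the self-attenuation that the two-pass approach ignores; it shrinks quadratically with smaller alpha.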

Shader "GLSL shader using order-independent blending" {
   SubShader {
      Tags { "Queue" = "Transparent" }
         // draw after all opaque geometry has been drawn
      Pass {
         Cull Off // draw front and back faces
         ZWrite Off // don't write to depth buffer
            // in order not to occlude other objects
         Blend Zero OneMinusSrcAlpha // multiplicative blending
            // for attenuation by the fragment's alpha

         GLSLPROGRAM

         #ifdef VERTEX

         void main()
         {
            gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
         }

         #endif