Routing protocols and architectures/Print version

| This is the print version of Routing protocols and architectures You won't see this message or any elements not part of the book's content when you print or preview this page. |

The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/Routing_protocols_and_architectures

Forwarding and routing

Routing is the process which determines the 'best' path for a packet and sends it out toward the destination:

- routing algorithm: it is in charge of deciding the paths to take for incoming packets:

- it determines the destinations reachable by each node;

- it computes the best paths (according to certain criteria) in a cooperative way with the other nodes;

- it stores local information in each node;

- forwarding algorithm: it is in charge of taking the path decided for each incoming packet:

- it performs a lookup in the local information computed and stored by the routing algorithm;

- it sends the outgoing packet along the best path.

Routing protocols differentiate into two classes:

- Interior Gateway Protocol (IGP): it includes the protocols used in intra-domain routing (e.g. RIP, IGRP, OSPF) to propagate routing information inside an Autonomous System[1];

- Exterior Gateway Protocol (EGP): it includes the protocols used in inter-domain routing (e.g. BGP) to propagate routing information between Autonomous Systems.

According to the OSI model, routing is a feature proper to the network layer, but it can be implemented at different layers:

- routing is implemented altogether at the network layer by protocols such as IP, X.25 and OSI/Decnet;

- some of the routing algorithms are implemented at the data-link layer by protocols such as Frame Relay and ATM and by bridges in switched LANs.

Modern routers implement two tables:

- Routing Information Base (RIB): it is the classical routing table listing all the destinations reachable within the network;

- Forwarding Information Base (FIB): it is a routing table optimized to speed up packet forwarding:

- dedicated hardware: TCAMs are able to store bits whose possible values are 0, 1 and 'don't care' → the netmask is integrated in the network address itself: each bit in the aggregated part has value 'don't care';

- cache: the FIB only includes the last used destination addresses;

- additional information: output port, destination MAC address.

Forwarding algorithms[edit | edit source]

Routing by network address[edit | edit source]

- Each node is identified by a network address.

- Each packet contains the address of the destination node.

- Each node contains the list of the reachable destination addresses with their corresponding next hops.

When a packet comes, the node uses the destination address included in it as the 'key' in the forwarding table to find the next hop.

- Advantage

It is a simple and efficient algorithm because it is stateless: packet forwarding takes place regardless of the forwarding of other packets, that is the node once a packet is forwarded will forget about it.

- Disadvantage

It is not possible to select different routes for the same destination based on the kind of traffic for quality of service.

- Adoption

Connectionless protocols (such as IP) typically use this forwarding algorithm.

'Coloured path' technique[edit | edit source]

- Each path between two nodes is identified by a PathID ('color').

- Each path contains a label corresponding to the PathID of the path to follow.

- Each node contains the lists of PathIDs with their corresponding output ports.

When a packet comes, the node uses the label included in it as the 'key' in the forwarding table to find the output port.

- Advantage

Several paths towards the same destination are possible → it is possible to choose the best path based on the kind of traffic for quality of service.

- Disadvantage

The PathID is global:

- path 'colouring' must be coherent on all the nodes over the network;

- scalability: the number of possible paths between all the node pairs in the network is very big → a lot of bits are needed to encode each PathID, and it is hard to find an identifier which has not been used yet.

Label swapping[edit | edit source]

The forwarding table in each node contains the mapping between the labels of the input ports and the labels of the output ports, including entries like:

When a packet comes, the node uses the label included in it and the input port as the 'key' in the forwarding table to find the output port, and it replaces the current label in the packet with the output label.

- Advantages

- scalability: the PathID of the path to follow is not global, but the label is decided locally node by node, and it must be coherent only between the nodes to the link endpoints:

- labels are made up of less bits because they have to encode less paths;

- each node must know only the labels of the paths crossing it → the forwarding table is smaller;

- efficiency: label swapping is fast with respect to forwarding algorithms such as 'longest prefix matching' in IP.

- Adoption

Label swapping is used by:

- telecommunication-derived network technologies (e.g. X.25, Frame Relay, ATM): label swapping allows quality of service, a feature considered as important by the world of phone operators;

- MPLS: in the backbone paths are in a fairly limited number and quite stable because they are created not end-to-end but in the MPLS cloud, where the network topology changes less frequently and traffic is more regular with respect to edges.

Path setup[edit | edit source]

When a host wants to generate and send the first packet toward a destination, how does it ask for setup of a new path and which label should it use?

- Manual setup

Paths and their corresponding labels are manually set by the network administrator.

Disadvantages

- high risk of human configuration mistakes;

- no automatic re-routing in case of faults;

- not suitable for highly dynamic networks where users frequently ask for new paths.

- On-demand setup

A signaling phase for path setup, that is for preparing labels in every node, is required, after which the host learns the label to use and it can send the first packet toward the destination.

Advantages

Quality of service is simpler:

- it is possible to set up different paths based on the source asking for its setup (e.g. the rector can have a path privileged compared to the researcher);

- it is possible to include inside the signaling packet a piece of information specifying how much bandwidth to reserve for the path.

Disadvantages

- complexity: signaling is achieved through another forwarding technique (e.g. routing by network address) over a dedicated circuit → complexity increases because the network must now manage two different forwarding techniques;

- scalability: if the path is long and the number of nodes to cross is high, the signaling phase may last too long, especially if the communication sessions are quite short like in the network world.

Source routing[edit | edit source]

The sender host writes into the packet itself the whole path which must follow to arrive at the destination.

- Advantage

Internal routers in the network are extremely simple.

- Disadvantage

The sender host must know the network topology and must interact directly with the other hosts in order to be able to compute paths → this breaks the paradigm according to which end users should just send packets and the network is in charge of forwarding packets toward their destinations.

- Adoption

IPv4 and IPv6 contemplate an option affecting the path of packets.

Comparison[edit | edit source]

- Routing by network address

- + simplicity: no setup, no state

- + scalability (forwarding): no 'per-session' state (stateless)

- − efficiency: big packet header

- − scalability (routing): very big routing table

- − reliability: difficult to guarantee the service

- − multipath: it does not support multiple paths between two entities

- Label swapping

- + scalability (routing): reduced routing table

- + efficiency: small packet header

- + guarantee of service: possibility to guarantee the service (path booking)

- + multipath: multiple paths allowed between two entities

- − scalability (setup): processing of the packets for path setup (critical with 'short' sessions)

- − scalability (forwarding): 'per-session' state (needed for quality of service)

- − complexity: path setup (path setup process, ad-hoc forwarding for path setup packets)

- Source routing

- + efficiency (routers): intermediate systems are extremely simple

- − efficiency (sources): end systems should worry about computing paths

References[edit | edit source]

- ↑ An Autonomous System (AS) is generally the network under the control of an ISP.

Routing algorithms

| Routing algorithms presented in the following assume they work on a network based on routing by network address. |

A routing algorithm is a process of collaborative type in charge of deciding, in every intermediate node, the directions which must be used to reach destinations:

- it determines the destinations reachable by each node:

- it generates information about the reachability of local networks: the router informs its neighbor routers that the local network exists and it is reachable through it;

- it receives information about the reachability of remote networks: a neighbor router informs the router that the remote network exists and it is reachable through it;

- it propagates information about the reachability of remote networks: the router informs the other neighbor routers that the remote network exists and it is reachable through it;

- it computes optimal paths (next hops), in a cooperative way with the other nodes, according to certain criteria:

- a metric has to be established: a path may be the best one based on a metric but not based on another metric;

- criteria must be coherent among all the nodes in the network to avoid loops, black holes, etc.;

- the algorithm must operate automatically to avoid human errors in manual configuration and to favor scalability;

- it stores local information in the routing table of each node: a routing algorithm is not required to know the entire topology of the network, but it is only interested in building the correct routing table.

- Characteristics of an ideal routing algorithm

- simple to implement: less bugs, easy to understand, etc.;

- lightweight to execute: routers should spend as less resources as possible in running the algorithm because they have limited CPU and memory;

- optimal: the computed paths should be optimal according to the chosen metrics;

- stable: it should switch to another path just when there is a topology or a cost change to avoid route flapping, that is the frequent change of preferred routes with consequent excess of transient periods;

- fair: it should not favour any particular node or path;

- robust: it should be able to automatically adapt to topology or cost changes:

- fault detection: it should not rely on external components to detect a fault (e.g. a fault can not be detected at the physical layer if it occurs beyond a hub);

- auto-stabilization: in case of variations in the network it should converge to a solution without any external intervention (e.g. explicit manual configuration);

- byzantine robustness: it should recognize and isolate a neighbor node which is sending fake information, due to a fault or a malicious attack.

Internet does not implement byzantine robustness, but it is based on confidence → faults and malicious behaviours require human intervention.

- Classification of routing algorithms

- non-adaptive algorithms (static): they take decisions independently of how the network is:

- static routing (or fixed directory routing)

- random walk

- flooding, selective flooding

- adaptive algorithms (dynamic): they learn information about the network to better take decisions:

- centralized routing

- isolated routing: hot potato, backward learning

- distributed routing: Distance Vector, Link State

- hierarchical algorithms: they allow routing algorithms to scale up on wide infrastructures.

Metric[edit | edit source]

A metric is the measure of how good a path is, obtained by transforming a physical quantity (e.g. distance, transmission speed), or a combination of them, in numerical form (cost), in order to choose the least-cost path as the best path.

A best metric does not exist for all the kinds of traffic: for example bandwidth is suitable for file-transfer traffic, while transmission delay is suitable for real-time traffic. The choice of the metric can be determined from the 'Type of Service' (TOS) field in the IP packet.

- Issues

- (non-)optimization: the primary task of routers is to forward users' traffic, not to spend time in computing paths → it is better to prefer solutions which, even if they are not fully optimized, do not compromise the primary functionality of the network and do not manifest problems which can be perceived by the end user:

- complexity: the more criteria are combined, the more complex the algorithm becomes and the more computational resources at run-time it requires;

- stability: a metric based on the available bandwidth on the link is too unstable, because it depends on the instantaneous traffic load which is very variable in time, and may lead to route flapping;

- inconsistency: metrics adopted by nodes in the network must be coherent (for every packet) to avoid the risk of loops, that is packet 'bouncing' between two routers using different conflicting metrics.

Transients[edit | edit source]

Modern routing algorithms are always 'active': they exchange service messages all the time to detect faults autonomously. However, they do not change the routing table unless a status change is detected:

- topology changes: link fault, addition of a new destination;

- cost changes: for example a 100-Mbps link goes up to 1 Gbps.

Status changes result in transient phases: all the nodes in a distributed system can not be updated at the same time, because a variation is propagated throughout the network at a finite speed → during the transient, status information in the network may not be coherent: some nodes already have new information (e.g. the router detecting the fault), while other ones still have old information.

Not all status changes have the same impact on data traffic:

- positive status changes: the effect of the transient is limited because the network may just work temporarily in a sub-optimal condition:

- a path gets a better cost: some packets may keep following the old path now become less convenient;

- a new destination is added: the destination may appear unreachable due to black holes along the path towards it;

- negative status changes: the effect of the transient manifests itself more severely to the user because it interferes also with the traffic that should not be affected by the fault:

- a link fault occurs: not all routers have learnt that the old path is no longer available → the packet may start `bouncing' back and forth saturating the alternative link (routing loop);

- a path worsens its cost: not all routers have learnt that the old path is no longer convenient → analogous to the case of fault (routing loop).

In general, two common problems affect routing algorithms during the transient: black holes and routing loops.

Black holes[edit | edit source]

A black hole is defined as a router which, even if at least a path exists through which it could reach a certain destination, does not know any of them (yet).

- Effect

The effect on data traffic is limited to packets directed toward the concerned destination, which are dropped until the node updates its routing table and acquires information about how to reach it. Traffic directed to other destinations is not affected by the black hole at all.

Routing loops[edit | edit source]

A routing loop is defined as a cyclic path from the routing point of view: a router sends a packet on a link but, because of an inconsistency in routing tables, the router at the other endpoint of the link sends it back.

- Effect

Packets directed toward the concerned destination start 'bouncing' back and forth (bouncing effect) → the link gets saturated → also traffic directed to other destinations crossing that link is affected.

Backup route[edit | edit source]

Backup route is a concept mostly used in telephone networks based on a hierarchical organization: every exchange is connected to an upper-level exchange by a primary route, and to another upper-level exchange by a backup route → if a fault occurs in the primary route, the backup route is ready to come into operation without any transient period.

A data network is instead based on a meshed topology, where routers are interconnected in various ways → it is impossible to foresee all possible failures of the network to prearrange backup paths, but when a failure occurs a routing algorithm is preferable automatically computing on the fly an alternative path (even if the computational step requires a transient period).

Backup route in modern networks can still have applications:

- a company connected to the Internet via ADSL can keep its connectivity when the ADSL line drops by switching to a backup route via HiperLAN technology (wireless);

- the internet backbone is by now in the hands of telephone companies, which have modeled it according to criteria of telephone networks → its organization is hierarchical enough to allow backup routes to be prearranged.

Multipath routing[edit | edit source]

With routing by network address, all the packets toward a destination follow the same path, even if alternative paths are available → it would be preferable to make part of traffic follow an alternative path, even if more costly, not to saturate the path chosen by the algorithm.

Multipath routing, also known as 'load sharing', is a traffic engineering feature aiming at distributing traffic toward the same destination over multiple paths (when available), allowing multiple entries for each destination in the routing table, for a more efficient usage of network resources.

Unequal-cost multipath routing[edit | edit source]

An alternative path is used only if it has cost not too greater than cost of the least-cost primary path:

Traffic is distributed inversely proportionally to the cost of the routes. For example in this case:

the router may decide to send the packet with 66\% probability along the primary path and 33\% probability along the secondary path.

- Problem

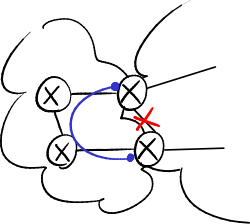

Given a packet, each router autonomously decides on which path to forward it → incoherent decisions between routers may make the packet enter a routing loop, and since the forwarding is usually session-based that packet will never exit the loop:

Equal-cost multipath routing[edit | edit source]

An alternative path is used only if it has cost exactly equal to cost of the primary path ():

Traffic is equally partitioned on both the paths (50%).

- Problems

If the first packet follows the slow path and the second packet follows the fast path, TCP mechanisms may cause overall performance to get worse:

- Problems caused by equal-cost multipath routing.

-

TCP reordering problem.

-

Transmission rate decrease.

- TCP reordering problem: packets may arrive out of sequence: the second packet arrives at the destination before the first packet → the process for sequence number reordering keeps busy the computational resources of the receiver;

- transmission rate decrease: if the acknowledgment packet (ACK) of the first packet arrives too late, the source thinks that the first packet went lost → when 3 duplicate (cumulative) ACKs have arrived and the timeout expires, TCP sliding-window mechanisms get into the action:

- fast retransmit: the source unnecessarily transmits again the packet → the packet gets duplicate;

- fast recovery: the source thinks that the network is congested → it slows down packet sending by limiting the transmission window: it sets the threshold value to half of the current value of the congestion window, and makes the congestion window restart from value 1 (that is only one packet at a time is sent and its ACK is awaited before sending the next packet).

Criteria[edit | edit source]

Real multipath routing implementations split traffic so that traffic toward a same destination follows the same path:

- flow-based: every transport-layer session is identified by the quintuple:

- <source IP address>

- <destination IP address>

- <transport-layer protocol type> (e.g. TCP)

- <source port>

- <destination port>

and the router stores a table mapping session identifiers with output ports:

- extracting the fields forming the session ID from the packet is onerous, due to the variety of supported packet formats (VLAN, MPLS...);

- information about transport-layer ports is unavailable in case of fragmented IP packets;

- searching the session ID table for the quintuple is onerous;

- often TCP connection shutdown is not 'graceful leaving', that is FIN and ACK packets are not sent → entries in the session ID tables are not cleared → it is required a thread performing sometimes a cleanup of old entries by looking at their timestamps;

- packet-based: the router sends the packets having even (either destination or source or both) IP address to a path, and odd IP address to the other path → the hashing operation is very fast.

- Problem

Traffic toward a same destination can not use both the paths at the same time → there are troubles in case of very big traffic toward a certain destination (e.g. a nightly backup between two servers).

Non-adaptive algorithms[edit | edit source]

Static routing[edit | edit source]

The network administrator manually configures on each router its routing table.

- Disadvantage

If a change in the network occurs, routing tables need to be manually updated.

- Application

Static routing is mainly used on routers at the network edges:

- edge routers are not allowed to propagate routing information toward the backbone: the core router stops all the advertisements coming from the edge, otherwise a user could advertise a network with the same address as an existing network (e.g. google.com) and redirect towards him a part of traffic directed to that network.

Since users can not advertise their own networks, how can they be reachable from outside? The ISP, which knows which addresses are used by the networks it sold to users, must configure the core router so that it advertises those networks to other routers even if they are not locally connected; - edge routers are not allowed to receive routing information from the backbone: an edge router is typically connected by a single link to a core router → a global default route is enough to reach the entire Internet.

Random walk[edit | edit source]

When a packet arrives, the router chooses a port randomly (but the one from which it was received) and sends it out on that port.

- Applications

It is useful when the probability that the packet reaches the destination is high:

- peer-to-peer (P2P) networks: for contents lookup;

- sensor network: sending messages should be a low-power operation.

Flooding[edit | edit source]

When a packet arrives, the router sends it out on all the ports (but the one from which it was received).

Packets may have a 'hop count' field to limit flooding to a network portion.

- Applications

- military applications: in case of attack the network could be damaged → it is critical that the packet arrives at destination, even at the cost of having a huge amount of duplicate traffic;

- Link State algorithm: each router when receiving the network map by a neighbor has to propagate it to the other neighbors:

A4. The Link State algorithm#Flooding algorithm.

A4. The Link State algorithm#Flooding algorithm.

Selective flooding[edit | edit source]

When a packet arrives, the router first checks if it has already received and flooded it in the past:

- old packet: it discards it;

- new packet: it stores and sends it out on all the ports (but the one from which it was received).

Each packet is recognized through the sender identifier (e.g. the source IP address) and the sequence number:

- if the sender disconnects from the network or shutdowns, when it connects again the sequence number will restart from the beginning → the router sees all the received packets as old packets;

- sequence numbers are encoded on a limited number of bits → the sequence number space should be chosen so as to minimize new packets wrongly recognized as old packets.

Sequence number spaces[edit | edit source]

- Linear space

It can be tolerable if selective flooding is used for few control messages:

- when the sequence number reaches the maximum possible value, an overflow error occurs;

- old packet: the sequence number is lower than the current one;

- new packet: the sequence number is greater than the current one.

- Circular space

It solves the sequence number space exhaustion problem, but it fails if a packet arrives with sequence number too far away from the current one:

- when the sequence number reaches the maximum possible value, the sequence number restarts from the minimum value;

- old packet: the sequence number is in the half preceding the current one;

- old packet: the sequence number is in the half following the current one.

- Lollipop space

The first half of the space is linear, the second half is circular.

Adaptive algorithms[edit | edit source]

Centralized routing[edit | edit source]

All routers are connected to a centralized control core called Routing Control Center (RCC): every router tells the RCC which its neighbors are, and the RCC uses this information to create the map of the network, compute routing tables and communicate them to all routers.

- Advantages

- performance: routers have not to have a high computational capacity, all focused on a single device;

- debugging: the network administrator can get the map of the whole network from a single device to check its correctness;

- maintenance: intelligence is focused on the control center → to update the algorithm just the control center has to be updated.

- Disadvantages

- fault tolerance: the control center is a single point of failure → a malfunction of the control center impacts on all the network;

- scalability: the more routers the network is made up of, the more the work for the control center increases → it is not suitable for wide networks such as Internet.

- Application

Similar principles are used in telephone networks.

Isolated routing[edit | edit source]

There is no control center, but all nodes are peer: each node decides its paths autonomously without exchanging information with other routers.

- Advantages and disadvantages

They are practically the opposite of the ones of the centralized routing.

Backward learning[edit | edit source]

Each node learns network information based on packet source addresses: ![]() Local Area Network design/A3. Repeaters and bridges#Trasparent bridge.

Local Area Network design/A3. Repeaters and bridges#Trasparent bridge.

- it works well only with 'loquacious' nodes;

- it is not easy to realize the need of switching to an alternative path when the best path becomes no longer available;

- it is not easy to detect the destinations become unreachable → a special timer is required to delete old entries.

Distributed routing[edit | edit source]

Distributed routing uses a 'peer' model: it takes the advantages of centralized routing and the ones of isolated routing:

- centralized routing: routers participate in exchange of information regarding connectivity;

- isolated routing: routers are equivalent and there is not a 'better' router.

- Applications

Modern routing protocols use two main distributed routing algorithms:

- Distance Vector: each node tells all its neighbors what it knows about the network;

- Link State: each node tells all the network what it knows about its neighbors.

Comparison[edit | edit source]

- Neighbors

- LS: needs the 'hello' protocol;

- DV: knows its neighbors through the DV itself.

- Routing table

DV and LS create the same routing table, just computed in different ways and with different duration (and behavior) of the transient:

- LS: routers cooperate to keep the map of the network up to date, then each of them computes its own spanning tree: each router knows the topology of the network, and knows the precise path to reach a destination;

- DV: routers cooperate to compute the routing table: each router knows only its neighbors, and it trusts them for determining the path towards the destination.

- Simplicity

- DV: single algorithm easy to implement;

- LS: incorporates many different components.

- Debug

Better in LS: each node has the map of the network.

- Memory consumption (in each node)

They may be considered equivalent:

- LS: each of the LSs has adjacencies (Dijkstra: );

- DV: each of the DVs has destinations (Bellman-Ford: ).

- Traffic

Better in LS: Neighbor Greeting packets are much smaller than DVs.

- Convergence

Better in LS: fault detection is faster because it is based on Neighbor Greeting packets sent with a high frequency.

The Distance Vector algorithm

The Distance Vector (DV) algorithm is based on distribution of information about the whole network within the neighborhood of the router.

Every router periodically generates a DV, that is a set of destination-cost pairs:

- destination: all the destinations known by the generating router (in real IP networks they are network addresses with netmask);

- cost: the cost of the path from the generating router to the destination.

The receiving router learns from each DV:

- reachable destinations: they are added to the ones already known locally;

- direction: those destinations are reachable through the generating router;

- cost: the one reported by the generating router plus the cost of the link between the receiving router and the generating router.

Each node stores all the DVs coming from its neighbors, and integrates them by selecting the best costs for every destination in order to build its routing table and its DV:

Basic algorithm[edit | edit source]

- main process:

- the DV is announced to adjacent routers;

- it waits for timeout;

- it comes back to step 1;

- upon receiving a new DV:

- the DV is saved into memory;

- the DV is merged with stored DVs;

- upon failure of a link (detected at the physical layer):

- all the DVs coming from that link are deleted;

- remaining DVs are merged;

- when a DV has not been received within timeout:

- the missing DV is deleted;

- remaining DVs are merged.

- Remarks

- reliability: timeouts avoid the use of link-up signals that may not be always available (e.g. if the failure occurs beyond a hub);

- efficiency: on a link failure, the router gets its new routing table without exchanging any DVs with its adjacent nodes;

- convergence speed: when a router changes its DV, it does not announce it until the next timeout of the main process (no triggered updates).

Triggered updates[edit | edit source]

A router can send its updated DV as soon as it updates its routing table, without waiting for the default timeout, to improve convergence time. It can announce either the entire DV or, like it is more frequent in real implementations, just the changed routes.

The triggered update does not reset the timeout of the main process, to avoid that routers start generating DVs at the same time (synchronization).

Count to infinity[edit | edit source]

A count to infinity is triggered when the cost to reach a destination, which is no longer reachable, is progressively increased to the infinity.

- Example

In the side figure, a failure on the link between A and B triggers a count to infinity:

- B detects the failure at the physical layer and deletes the DV from A, but C is not able to detect the failure at the physical layer;

- C announces to B it can reach A through a path of cost 2, which really was the old one crossing B;

- B updates the DV from C, appearing that A became reachable through an alternative path at cost 3 crossing C;

- B in turn sends its DV to C, which updates it and increase the cost to 4, and so on.

- Effect

B thinks it can reach A through C, while C thinks it can reach A through B → a packet which is directed to A starts bouncing between B and C (bouncing effect) saturating the link between B and C until its TTL goes down to 0.

- Cause

Unlike black hole and routing loop, count to infinity is a specific problem of the DV algorithm, due to the fact that the information included in the DV does not consider the network topology.

- Possible solutions

- threshold for infinity: upper bound to count to infinity;

- additional algorithms: they prevent count to infinity, but they make the protocol heavier and tend to reduce its reliability because they can not foresee all the possible failures:

- route poisoning: bad news is better than no news;

- split horizon: if C reaches destination A through B, it does not make sense for B to try to reach A through C;

- path hold down: let the rumors calm down waiting for the truth.

Threshold for infinity[edit | edit source]

A threshold value can be defined: when the cost reaches the threshold value, the destination is considered no longer reachable.

For example RIP has a threshold value equal to 16: more than 15 routers in a cascade can not be connected.

Protocols with complex metrics (e.g. IGRP) require a very high threshold value to consider differentiated costs: for example a metric based on bandwidth may result in a wide range of cost values.

If the bouncing effect takes place on a low-cost link, it is required too much time to increase costs up to the threshold value → two metrics at the same time can be used:

- a metric for path costs (e.g. based on link bandwidth);

- a metric for count to infinity (e.g. based on hop count).

When the metric used for count to infinity returns 'infinity', the destination is considered unreachable whatever the path cost is.

Route poisoning[edit | edit source]

The router which detected the failure propagates the destinations no longer reachable with cost equal to infinity → the other routers hear about the failure and in turn propagate the 'poisoned' information.

Split horizon[edit | edit source]

Every router differentiates DVs sent to its neighbors: in each DV it omits the destinations which are reachable through a path crossing the neighbor to which it is sending it → it does not trigger 'ghost' paths to appear toward a destination no longer reachable after sending obsolete information in the DV.

- Characteristics

- it avoids count to infinity between two nodes (except in case of particular loops);

- it improves convergence time of the DV algorithm;

- routers have to compute a different DV for each link.

Split horizon with poisoned reverse[edit | edit source]

In real implementations, the DV may be fragmented into multiple packets → if some entries in the DV are omitted, the receiving node does not know whether those entries were intentionally omitted by the split horizon mechanism or the packets where they were included went lost.

In split horizon with poisoned reverse, destinations instead of being omitted are transmitted anyway but 'poisoned' with infinite cost, so the receiving node is sure it has received all the packets composing the DV → this increases convergence time.

Path hold down[edit | edit source]

If the path toward a destination increases its cost, it is likely to trigger a count to infinity → that entry is 'frozen' for a specific period of time waiting for the rest of the network to find a possible alternative path, whereupon if no one is still announcing that destination it will be considered unreachable and its entry will be deleted.

DUAL[edit | edit source]

Diffusing Update Algorithm (DUAL) is an additional algorithm that aims at improving the scalability of the DV algorithm by guaranteeing the absence of routing loops even during the transient:

- positive status change: if any neighbor node announces an alternative path with a lower cost, it is immediately accepted because definitely it will not cause a routing loop;

- negative status change: if

- either the current next hop announces the increase of the current route (worsening announces by other neighbor nodes are ignored),

- or the router detects at the physical layer a fault on the link belonging to the current route

then the DUAL algorithm must be activated:

- selection of a feasible successor: another neighbor is selected only if it guarantees that the alternative path across it will not cause routing loops;

- diffusing process: if no feasible successors can be found, the node enters a sort of 'panic mode' and asks its neighbors for help, waiting for someone to report a feasible path toward that destination.

Selection of a feasible successor[edit | edit source]

If the current route is no longer available due to a negative status change, an alternative path is selected only if it can be proved that the new path does not create loops, that is if it is certain that the new next hop does not use the node itself to reach the destination.

A neighbor node is a feasible successor for router if and only if its distance toward destination is smaller than the distance that router had before the status change:

This guarantees that neighbor can reach destination by using a path that does not go through router : if path passed across , its cost could not be lower than the one of sub-path .

In case more than one feasible successor exists, neighbor is selected offering the least-cost path toward destination :

where:

- is the cost of the link between router and its neighbor ;

- is the distance between neighbor and destination .

The selected feasible successor is not guaranteed to be the neighbor which the best possible path toward the destination goes across. If the mechanism does not select the best neighbor, the latter will keep announcing the path which is really the best one without changing its cost → the router will recognize the existence of a new, better path which was not selected and adopt the new path (positive status change).

Diffusing process[edit | edit source]

If router can not find any feasible successor for the destination:

- it temporarily freezes the entry in its routing table related to the destination → packets keep taking the old path, which definitely is free of loops and at most is no longer able to lead to the destination;

- it enters an active state:

- it sends to each of its neighbors, but the next hop of the old path, a query message asking if it is able to find a path which is better than its old path and which is definitely free of loops;

- it waits for a reply message to be received from each of its neighbor;

- it chooses the best path exiting from the active state.

Each neighbor router receiving the query message from router sends back a reply message containing its DV related to a path across it:

- if router is not its next hop toward the destination, and therefore the cost of its path toward the destination has not been changed, then router reports that router can use that path;

- if router is its next hop toward the destination, then router should in turn set out to search for a new path, by either selecting a feasible successor or entering the active state too.

Advantages and disadvantages[edit | edit source]

- Advantages

- very easy to implement, and protocols based on the DV algorithm are simple to configure;

- it requires limited processing resources → cheap hardware in routers;

- suitable for small and stable networks with not too frequent negative status changes;

- the DUAL algorithm guarantees loop-free networks: no routing loops can occur, even in the transient (even though black holes are still tolerated).

- Disadvantages

- the algorithm has an exponential worst case and it has a normal behavior between and ;

- convergence may be rather slow, proportional to the slowest link and the slowest router in the network;

- difficult to understand and predict its behavior in big and complex networks: no node has a map of the network → it is difficult to detect possible routing loops;

- it may trigger routing loops due to particular changes in topology;

- additional techniques for improving its behavior make the protocol more complex, and they do not solve completely the problem of the missing topology knowledge anyway;

- the threshold 'infinity' limits the usage of this algorithm only to small networks (e.g. with few hops).

The Path Vector algorithm[edit | edit source]

The Path Vector (PV) algorithm adds information about the announced routes: also the path, that is the list of crossed nodes along it, is announced:

The list of crossed nodes allows to avoid the appearance of routing loops: the receiving node is able to detect that the announced route crosses it by observing the presence of its identifier in the list, discarding it instead of propagating it → paths crossing twice the same node can not form.

Path Vector is an intermediate algorithm between Distance Vector and Link State: it adds the strictly needed information about announced paths without having the complexity related to Link State where the whole network topology needs to be known.

- Application

The PV algorithm is used in inter-domain routing by the BGP protocol: ![]() B5. Border Gateway Protocol#Path Vector algorithm.

B5. Border Gateway Protocol#Path Vector algorithm.

The Link State algorithm

The Link State (LS) algorithm is based on distribution of information about the neighborhood of the router over the whole network. Each node can create the map of the network (the same for all nodes), from which the routing table has to be obtained.

Components[edit | edit source]

Neighbor Greetings[edit | edit source]

Neighbor Greetings are messages periodically exchanged between adjacent nodes to collect information about adjacencies. Each node:

- sends Neighbor Greetings to report its existence to its neighbors;

- receives Neighbor Greetings to learn which are its neighbors and the costs to reach them.

Neighbor Greetings implement fault detection based on a maximum number of consecutive Neighbor Greetings not received:

- fast: Neighbor Greetings can be sent with a high frequency (e.g. every 2 seconds) to recognize variations on adjacencies in a very short time:

- once they are received, they are not propagated but stop on the first hop → they do not saturate the network;

- they are packets of small size because they do not include information about nodes other than the generating node;

- they require a low overhead for routers, which are not forced to compute again their routing table whenever they receive one of them;

- reliable: it does not rely on the 'link-up' signal, unavailable in presence of hubs.

Link States[edit | edit source]

Each router generates a LS, which is a set of adjacency-cost pairs:

- adjacency: all the neighbors of the generating node;

- cost: the cost of the link between the generating router and its neighbor.

Each node stores all the LSs coming from all nodes in the network into the Link State Database, then it scans the list of all adjacencies and builds a graph by merging nodes (routers) with edges (links) in order to build the map of the network.

LS generation is mainly event-based: a LS is generated following a change in the local topology (= in the neighborhood of the router):

- the router has got a new neighbor;

- the cost to reach a neighbor has changed;

- the router has lost its connectivity to a neighbor previously reachable.

Event-based generation:

- allows a better utilization of the network: it does not consume bandwidth;

- it requires the 'hello' component, based on Neighbor Greetings, as the router can no longer use the periodic generation for detecting faults toward its neighbors.

In addition, routers implement also a periodic generation, with a very low frequency (in the order of tens of minutes):

- this increases reliability: if a LS for some reason goes lost, it can be sent again without having to wait for the next event;

- this allows to include an 'age' field: the entry related to a disappeared destination remains in the routing table and packets keep being sent to that destination until the piece of information, if not refreshed, ages enough that it can be deleted.

Flooding algorithm[edit | edit source]

Each LS must be sent in 'broadcast' to all the routers in the network, which must receive it unchanged → real protocols implement a sort of selective flooding, representing the only way to reach all routers with the same data and with minimum overhead. Broadcast is limited only to LSs, to avoid saturating the network.

LS propagation takes place at a high speed: unlike DVs, each router can immediately propagate the received LS and in a later time process it locally.

Real protocols implement a reliable mechanism for LS propagation: every LS must be confirmed 'hop by hop' by an acknowledgment, because the router must be sure that the LS sent to its neighbors has been received, also considering that LSs are generated with a low frequency.

Dijkstra's algorithm[edit | edit source]

After building the map of the network from its adjacency lists, each router is able to compute the spanning tree of the graph, that is the tree with least-cost paths having the node as a root, thanks to the Dijkstra algorithm: on every iteration all the links are considered connecting nodes already selected with nodes not yet selected, and the closest adjacent node is selected.

All nodes have the same Link State Database, but each node has a different routing tree to the destinations, because the obtained spanning tree changes as the chosen node as a root changes:

- better distribution of the traffic: reasonably there are no unused links (unlike Spanning Tree Protocol);

- obviously the routing tree must be consistent among the various nodes.

Adjacency bring-up[edit | edit source]

Bringing up adjacencies is required to synchronize Link State Databases of routers when a new adjacency is detected:

- a new node connects to the network: the adjacent node communicates to it all the LSs related to the network, to populate its Link State Database from which it will be able to compute its routing table;

- two partitioned subnetworks (e.g. due to a failure) are re-connected together: each of the two nodes at the link endpoints communicates to the other node all the LSs related to its subnetwork.

- Procedure

- a new adjacency is detected by the 'hello' protocol, which keeps adjacencies under control;

- synchronization is a point-to-point process, that is it affects only the two routers at the endpoints of the new link;

- the LSs which had previously been unknown are sent to other nodes in the network in flooding.

Behaviour over broadcast data-link-layer networks[edit | edit source]

The LS algorithms models the network as a set of point-to-point links → it suffers in presence of broadcast[1] data-link-layer networks (such as Ethernet), where any entity has direct access to any other entity on the same data link (shared bus), hence creating a full-mesh set of adjacencies ( nodes → point-to-point links).

The high number of adjacencies has a severe impact on the LS algorithm:

- computation problems: the convergence of the Dijkstra's algorithm depends on the number of links (), but the number of links explodes on broadcast networks;

- unnecessary overhead when propagating LSs: whenever a router needs to send its LS on the broadcast network, it has to generate LSs, one for every neighbor, even if it would be enough to send it only once over the shared channel to reach all its neighbors, then each neighbor will in turn propagate multiple times the received LS ();

- unnecessary overhead when bringing up adjacencies: whenever a new router is added to the broadcast network, it has to start bring-up phases, one for every neighbor, even if it would be enough to re-align the database just with one of them.

The solution is to transform the broadcast topology in a star topology, by adding a pseudo-node (NET): the network is considered an active component that will start advertising its adjacencies, becoming the center of a virtual star topology:

- one of the routers is 'promoted' which will be in charge of sending also those additional LSs on behalf of the broadcast network;

- all the other routers advertise an adjacency to that node only.

The virtual star topology is valid only for LS propagation and adjacency bring-up, while normal data traffic still uses the real broadcast topology:

- LS propagation: the generating node sends a LS to the pseudo-node, which sends it to the other nodes ();

- adjacency bring-up: the new node activates a bring-up phase only with the pseudo-node.

Advantages[edit | edit source]

- fast convergence:

- LSs are quickly propagated without any intermediate processing;

- every node has certain information because coming directly from the source;

- short duration of routing loops: they may happen during transient for a limited amount of time;

- debug simplicity: each node has a map of the network, and all nodes have identical databases → it is enough to query a single router to have the full map of the network in case of need;

- good scalability, although it is better not to have large domains (e.g. OSPF suggests not to have more than 200 routers in a single area).

References[edit | edit source]

- ↑ To be precise, on all the data-link-layer networks with multiple access (e.g. also on NBMA).

Hierarchical routing

Hierarchical routing allows to partition the network into autonomous routing domains. A routing domain is the portion of the network which is handled by the same instance of a routing protocol.

Routers belonging to a domain do not know the exact topology of another domain, but they only know the list of destinations included in it with their related costs (sometimes fictitious) → a good choice to reach them is to take the best exit path toward the target domain across a border router.

Every border router has visibility on both the domains which it interconnects:

- it is called egress router when the packet is exiting the domain;

- it is called ingress router when the packet is entering the domain.

Hierarchical routing introduces a new rule for handling the routes related to destinations which are outside the current domain:

- internal destinations: if the destination is inside the same routing domain, the routing information generated by the "internal" routing protocol has to be used;

- external destinations: if the destination is inside another routing domain, traffic has to be forwarded toward the closest egress router out from the current domain to the target domain, and then the latter will be in charge of delivering the packet to the destination by using its internal routing information.

The sub-path from the source to the closest egress router and the sub-path from here to the final destination taken individually are optimal, but the overall path which is their concatenation is not optimal: given a destination, the first part of the path (source-border router) is the same for all the destinations in the remote domain.

- Motivations

- interoperability: domains handled by different routing protocols can be interconnected;

- visibility: an ISP does not want to let a competitor know details about its network;

- scalability: a too wide portion of network can not be handled by a single instance of a routing protocol, but needs to be partitioned:

- memory: it excludes information about the precise topology of remote domains, reducing the amount of information which every router needs to keep in memory;

- summarization: it allows to announce a 'virtual' destination (with a conventional cost) grouping together several 'real' destinations (e.g. in IP networks multiple network addresses can be aggregated into a network address with a longer netmask);

- isolation: if inside a certain domain a failure occurs or a new link is added, route changes do not perturb other domains, that is routing tables in routers of remote domains stay unchanged → less transients, more stable network, quicker convergence.

- Implementations

Hierarchical routing can be implemented in two ways (not mutually exclusive):

- automatic: some protocols (such as OSPF, IS-IS) automatically partition the network into routing domains (called 'areas' in OSPF);

- manual: the redistribution process can be enabled on a border router to interconnect domains handled by even different routing protocols.

Partitioned domains[edit | edit source]

A domain becomes partitioned if starting from a border router it is no longer possible to reach all its internal destinations through paths always remaining within the domain itself.

In the example in the side figure, the packet sent by node A exits routing domain A as soon as possible, but once it has entered domain B it can not reach final destination H due to the link failure between the border router and node I. Really an alternative path exists leading to destination H across the other border router, but it can not be taken because the packet would be required to exit domain B and cross domain A.

Moreover, paths can be asymmetrical: the reply packet may take a path other than the one taken by the query packet, by going across a different border router → data may be received, but ACKs confirming they have been received may go lost.

- Solutions

- links inside every domain can be redounded to make it strongly connected, to avoid that a link failure could cause a domain to be partitioned;

- the OSPF protocol allows the manual configuration of a sort of virtual tunnel between two border routers called Virtual Link.

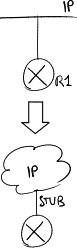

Redistribution[edit | edit source]

Redistribution is the software process, running on a border router, which allows to transfer routing information from a routing domain to another one.

In the example in the side figure, destinations learnt in a RIP domain can be injected into an OSPF domain and vice versa.

- Remarks

- The command for redistribution is unidirectional → it is possible to do a selective redistribution in one direction only (for example the ISP does not accept untrusted routes announced by the customer).

- Redistribution can be performed also among domains handled by instances of the same protocol.

- Routes learnt by the redistribution process can be marked as 'external routes' by the routing protocol.

Costs[edit | edit source]

Routers in a domain will know a broader set of destinations, even if some of them may have a 'wrong' (simplified) topology: in fact the redistribution process can

- either keep the cost of the original route, at most fixed by a coefficient,

- or set the cost to a conventional value when:

- the two protocols use different metrics: for example a cost learnt in 'hop count' can not be converted to another one using 'delays';

- multiple destinations with different costs are aggregated into a summarized route.

| Route source | Administrative distance | |

|---|---|---|

| connected interface | 0 | |

| static route | 1 | |

| dynamic route | external BGP | 20 |

| internal EIGRP | 90 | |

| IGRP | 100 | |

| OSPF | 110 | |

| RIP | 120 | |

When a destination is announced as reachable by both the domains, handled by routing protocols with different metrics, how can the border router compare costs to determine the best route toward that destination? Each routing protocol has an intrinsic cost pre-assigned by the device manufacturer → the router always chooses the protocol with the lowest intrinsic cost (even if the selected route could be not the best one).

Inter-domain routing

Inter-domain routing is in charge of deciding and propagating information about external routes among multiple interconnected ASes over the network.

Autonomous Systems[edit | edit source]

An Autonomous System (AS) is a set of IP networks that are under control of a set of entities that agree to present themselves as a unique entity, everyone adopting the same set of routing policies.

From the inter-domain routing point of view, Internet is organized into ASes: an AS represents an homogeneous administrative entity, generally an ISP, at the highest hierarchical level on the network. Each AS is uniquely identified by a 32-bit number (it was 16-bit in the past) assigned by IANA.

Each AS is completely independent: it can decide internal routing according to its own preferences, and IP packets are routed inside it according to internal rules. Each AS can have one or more internal routing domains served by IGP protocols: each domain can adopt its favourite IGP protocol, and thanks to redistribution it can exchange routing information with other domains.

A network being AS can keep under its control incoming and outgoing traffic thanks to routing policies, but is subject to a greater responsibility: routing is more difficult to configure, and possible configuration mistakes may affect traffic of other ASes.

For network portions who are going to become ASes, in the past some additional rules were enforced which nowadays have been relaxed:

- all the network has to be on the same administrative domain:

- nowadays the administrative entity of an AS does not necessarily coincides with the organization actually managing internally the network: for example, the network at the Politecnico di Torino, although being owned by the university and being under the control of bodies inside it, is one of the subnetworks inside the AS administered by the GARR research body, which is in charge of deciding long-distance interconnections toward other ASes;

- the network has to be of at least a given size:

- in recent years content providers have needed to have some ASes spread around the world of very small size: for example, Google owns some web servers in Italy which distribute custom content for the Italian audience (e.g. advertisements) and which, being closer to users, return more quickly search results acting as a cache (

B8. Content Delivery Networks) → if those web servers constitute themselves an AS, Google has control over the distribution of its content to Italian ISPs, and can make commercial agreements with the latter favouring some of them at the expense of other ones;

B8. Content Delivery Networks) → if those web servers constitute themselves an AS, Google has control over the distribution of its content to Italian ISPs, and can make commercial agreements with the latter favouring some of them at the expense of other ones;

- in recent years content providers have needed to have some ASes spread around the world of very small size: for example, Google owns some web servers in Italy which distribute custom content for the Italian audience (e.g. advertisements) and which, being closer to users, return more quickly search results acting as a cache (

- the AS has to be connected with at least two other ASes to guarantee, at least technically, transit across it for traffic from an AS to another one:

- a local ISP of small size (Tier 3) may buy by a national ISP of big size (Tier 2) the whole connectivity toward the Internet:

B4. Inter-domain routing: peering and transit in the Internet#Commercial agreements among ASes.

B4. Inter-domain routing: peering and transit in the Internet#Commercial agreements among ASes.

- a local ISP of small size (Tier 3) may buy by a national ISP of big size (Tier 2) the whole connectivity toward the Internet:

EGP protocol class[edit | edit source]

A single border router put between ASes belonging to different ISPs arises some issues:

- who owns it? who configures it?

- who is responsible in case of failure?

- how to prevent an ISP from collecting information about a competitor's network?

The solution is to use two border routers, each one administered by either of the two ISPs, separated by a sort of intermediate 'free zone' handled by a third routing protocol instance of type Exterior Gateway Protocol (EGP).

Through an EGP protocol, every border router at the border of an AS exchanges external routing information with other border routers:

- it propagates to other ASes information about destinations which are inside its AS;

- it propagates to other ASes information about destinations which are inside other ASes but can be reached through its AS.

EGP protocols differentiate from IGP protocols especially for support to routing policies reflecting commercial agreements among ASes.

EGP protocols[edit | edit source]

- static routing: configuration of routers by hand:

- this is the best "algorithm" to implement complex policies and to have the complete control over network paths;

- no control traffic is needed: information about destinations is avoided to be exchanged;

- it does not react to topological changes;

- it is easy to introduce inconsistencies;

- Exterior Gateway Protocol (EGP)[1]: it was the first protocol completely dedicated to routing among domains, but currently nobody uses it because it provides just information about reachability and not about distance:

- if the reachability of a destination is advertised across multiple paths, the least-cost best path can not be chosen;

- if the reachability of a destination is advertised across multiple paths, all routers are not guaranteed that will choose a coherent path → this can be used only in networks without closed paths where no loop can form;

- Border Gateway Protocol (BGP): it is the only EGP protocol which has been adopted in the whole Internet at the expense of other EGP protocols: all border routers in the whole network of interconnected ASes must adopt the same EGP protocol for exchanging external routes, because if two ASes would choose to use different EGP protocols, their border routers could not communicate one with each other (

B5. Border Gateway Protocol);

B5. Border Gateway Protocol); - Inter-Domain Routing Protocol (IDRP): it was created as an evolution to BGP in order to support OSI addressing, but currently nobody uses it because:

- it is made up of rather complex parts;

- since then improvements introduced by IDRP have been ported to the next versions of BGP;

- it is not compatible with BGP → its adoption by an AS would break interoperability with the rest of the network which is still using BGP.

Redistribution[edit | edit source]

On every border router a redistribution process is running from the IGP protocol inside the AS to the EGP protocol outside the AS and vice versa → routes are redistributed first from an AS to the intermediate area and then from here to the other AS:

- the IGP protocol learns external routes toward destinations which are in other ASes, and propagates them into the AS as internal routes;

- the EGP protocol learns internal routes toward destinations which are in the AS, and propagates them to other ASes as external routes.

Redistribution defines:

- which internal networks must be known to the outside world: private networks for example must not be propagated to other ASes;

- which external networks must be known inside the AS: the amount of announced routing information can be reduced by avoiding to include full details about external networks:

- announced addresses can be 'collapsed' into aggregate routes when they share part of their network prefixes;

- a single default route can be announced when the AS has a single exit point.

Redistribution must not introduce incoherences in routing:

- a routing loop may form if, for example, a route learnt in IGP and exported in EGP is then re-imported in IGP appearing as an external route;

- if a certain AS is reachable across multiple border routers of the same AS, these border routers need to agree in order to internally redistribute a single exit point for that route.

Often redistribution on a border router at the border of an AS is enabled in one way only from the IGP protocol to the EGP protocol: internal routes are exported to the external world, while external routes are replaced by a default route.

References[edit | edit source]

- ↑ The EGP protocol is one of the protocols belonging to the EGP protocol class.

Multicast routing

Multicast is the capability to transmit the same information to multiple end users without being forced to address the latter singly and without having, hence, the need to duplicate for each of them the information to spread.

Multicast routing is in charge of deciding and propagating information needed to forward multicast packets outside Local Area Networks among multiple interconnected multicast routers (mrouter) over the network:

- determining the existence of receivers on a particular LAN segment: in case no receivers exist, it does not make sense to forward those packets to the LAN → the networks which have no receivers are cut away from the tree (pruning);

- propagating the existence and location of receivers over the whole IP network: multicast routing should keep track of locations of the various receivers, creating a 'spanning tree', called distribution tree, so as to minimize costs and deliver packets to everyone;

- transmitting and forwarding data: transmitters generate packets with a particular multicast destination address, and mrouters forward them along the distribution tree up to receivers.

Multicast routing algorithms use two types of distribution tree:

- source-specific tree (RPB, TRPB, RPM, Link State): there is one tree for every sender → paths are optimal, but updates are more complex;

- shared tree (CBT): there is one tree for every multicast group, valid for all senders → updates are simpler, but paths are not optimal.

- Multicast routing algorithms

- selective flooding

- Distance Vector

- reverse path forwarding (RPF)

- reverse path broadcasting (RPB)

- truncated reverse path broadcasting (TRPB)

- reverse path multicasting (RPM)

- Link State

- core-based tree (CBT)

- hierarchical

Distance-Vector multicast routing[edit | edit source]

Reverse path forwarding[edit | edit source]

When a router receives a multicast packet, it sends it on all the other interfaces, provided that the one from which it has arrived is on the shortest path between the router and the source.

- Problems

- traffic: it loads the network unacceptably:

- no routing trees: on a LAN multiple copies of the same packet can transit if two routers attached to the LAN have the same minimum distance from the source;

- no pruning: the packet is always distributed on all links, without considering the fact that there could be no listeners;

- symmetric network: it considers the cost of the reverse path from the router to the source, which could be different from the cost of the path from the source to the router due to the presence of unidirectional links.

Reverse path broadcasting[edit | edit source]

A source (root node)-based distribution spanning tree is built, and packets reach all destinations going along branches of this tree:

- parent interface: the interface at the minimum distance toward the source, from which packets are received from upper levels;

- child interfaces: the other interfaces of the router, to which packets are sent toward subtrees (possible received packets are always discarded).

On a LAN a single copy of the same packet transits: among routers having child interfaces on the LAN, the router which has the lowest distance toward the source is elected as the designated router for that link (in case of equal cost, the interface with the lowest IP address is taken).

- Problems

- traffic: it loads the network unacceptably:

- no pruning: the packet is always distributed on all links, without considering the fact that there could be no listeners;

- symmetric network: it considers the cost of the reverse path from the router to the source, which could be different from the cost of the path from the source to the router due to the presence of unidirectional links.

Truncated reverse path broadcasting[edit | edit source]

Interested hosts send membership reports to subscribe to the multicast group → routers will send multicast packets only to interested hosts, and will delete from the tree the branches on which no membership reports have been received (pruning).

Unfortunately the distribution tree depends, besides on the source, even on the multicast group, resulting in reporting bandwidth and router memory requirements in the order of the total number of groups times the total number of possible sources → to reduce bandwidth and memory requirements, only leaf LANs which have no listeners are deleted from the tree: a leaf LAN is a network not used by any other router to reach the multicast source.

How to determine whether a certain LAN is a leaf LAN? In split horizon with poisoned reverse, the destinations reached through the link on which the announcement has been sent are put with distance equal to infinite: if at least a downstream router propagates the entry related to the concerned source with infinite distance, then that router is using that link as its shortest path to reach the source → that link is not a leaf, and then there could be further downstream leaf LANs with listeners.

- Problem

It is not possible to perform pruning of whole subtrees, but only leaf LANs are deleted → useless traffic travels on internal nodes in the tree.

Reverse path multicasting[edit | edit source]

It is possible to perform pruning of a whole subtree:

- the first packet sent by the source is propagated according to the TRPB algorithm;

- if the first packet reaches a router attached only to leaf LANs devoid of listeners for that group, the router sends a non-membership report (NMR) message to its parent router;

- if the parent router receives NMR messages from all its children, it in turn generates a NMR message toward its parent.

NMR messages have limited validity: when the timeout expires, the TRPB algorithms is adopted again. When in a pruned branch a listener is added to that group, the router sends to its parent node a membership report message to quickly enable the branch of the tree without waiting for the timeout.

- Problems

- periodic broadcast storms: they are due to the TRPB algorithm on every timeout expiration;

- scalability: it is critical, because each router should keep a lot of information for every (source, group) pair.

Link-State multicast routing[edit | edit source]

Thanks to the full map of the network built by a LS-like (unicast) routing protocol, each router is able to compute the distribution tree from every source toward every potential receiver.

'Flood and prune' is no longer needed, but every router is able to autonomously determine whether it is along the distribution tree:

- in a leaf LAN devoid of listeners, a host communicates to be interested in the group;

- the attached router sends in flooding a LS packet which announces the existence of a LAN with listeners and its location inside the network;

- the other nodes in the network store the LS packet and in turn propagate it in flooding to all the network;

- when the first transmission packet arrives at a router, before being able to forward it it needs to compute the shortest-path tree to know whether it is along the distribution tree and, if so, on which links it should be forward the packet;

- for the following packets this computation is no longer needed because the information will be found in the cache.

- Problems

- routing the first packet in a transmission may require quite a lot of time: each router needs to compute the shortest-path tree for the (source, group) pair;

- memory resources: each source has a distinct tree toward every destination → an entry for every active (source, group) pair is in the routing table;

- CPU resources: running the Dijkstra's algorithm to compute the routing tree is heavy for routers.

Multicast routing with core-based tree algorithm[edit | edit source]

The multicast distribution tree is unique for the whole multicast group and independent of the source (shared tree). The core router is the main router in the distribution tree.

- Tree building

- a host notifies its edge router (leaf router) which it wants to join the multicast group (as both receiver and transmitter);

- the edge router sends a Join Request message to the core router;

- intermediate routers receiving the Join Request message mark the interface from which the message has arrived as one of the interfaces to be used to forward multicast packets for that group;

- when the core router receives the Join Request message, also it marks that interface for forwarding and signaling stops.

In case the message reaches a router which is already belonging to the tree, signaling stops before reaching the core router, and a new branch is added to the previous tree.

- Data forwarding

- a group member simply sends the packet in multicast;

- the packet is forwarded first along the branch from the source to the core router, then on branches from the core router to other group members: every router receiving the packet, including the core router, sends it on all the interfaces belonging to that multicast group defined at the tree-building time (except for the one from which the packet has arrived).

- Advantage

- scalability: few state information in routers.

- Disadvantages

- usage of 'hard states': the core router is fixed, and no periodic refresh messages about the status of multicast groups is sent → little suitable for highly variable situations;

- the core router is a single point of failure (even though another router can be elected);

- the location of the core router heavily affects algorithm performance: the core router may become a bottleneck because all traffic crosses it;

- paths are not optimized: the distribution tree is not built based on the location of the source, but all group members can be sources.

Hierarchical multicast routing[edit | edit source]

Hierarchical algorithms are needed for inter-domain routing: the complexity of traditional algorithms (and state information to be kept) do not allow scalability to the whole Internet.

In general, routing policies come into play, and 'hosts' are replaced by 'domains':

- non-hierarchical routing: host X wants to receive groups A, B, C;

- hierarchical routing: domain Y wants to receive groups A, B, C.

Routing Information Protocol

Routing Information Protocol (RIP) is an intra-domain routing protocol based on the Distance Vector (DV) algorithm. RIP version 1 was defined in 1988 and was the first routing protocol used on the Internet.

RIP, depending on the implementation, includes split horizon, route poisoning and path hold down mechanisms to limit propagation of incorrect routing information.

RIP is suitable for small, stable and homogeneous networks:

- small: the metric is simply based on the hop count (each link has cost 1), but the 16-hop limit can not be exceeded → more than 15 routers in a cascade within a same RIP domain are not allowed;

- stable: status changes may trigger long-lasting transients;

- homogeneous:

- homogeneous links: costs on different links can not be differentiated based on bandwidth;

- homogeneous routers: every router needs to finish processing before producing its new DV → the transient duration is bound to performance of the slowest router.

Packet format[edit | edit source]

RIP packets have the following format:

| 8 | 16 | 32 | |

| Command | Version (1) | 0 | |

| Address Family Identifier | 0 | ||

| IP Address | |||

| 0 | |||

| 0 | |||

| Metric | |||

where the most significant fields are:

- Command field (1 byte): it specifies the type of message:

- 'Response' value: the packet is transporting a DV containing one or more addresses;

- 'Request' value: a router newly connected to the network is notifying its neighbors of its presence → neighbors will send back their DVs without having to wait the timeout, increasing the convergence speed;