Proteomics/Protein Chips/Algorithms

Algorithms:

Algorithms

[edit | edit source]Introduction

[edit | edit source]The immense amount of data that is generated from the protein chip must be analyzed. Looking at each data point would be too time consuming, so statistical methods are applied to the data set. The analysis consists of using statistical algorithms to find the similarity between the protein expressions. The grouping together of similar proteins is called data clustering. Data clustering is a powerful method when identifying an individual within a group.

Distance

[edit | edit source]The clusters are made by the similarity of the distance between two data elements. The elements that are closest together will be grouped in the same cluster. Depending on the data set a different distance function may be used. The most common one in published research studies is the Euclidean distance function. Euclidean distance is the most straight forward method. It can be compared to measuring the straight line distance between two points with a ruler. The Euclidean distance equation can be used on a n-dimensional data set. This makes it very versatile for research studies.

The n-dimensional Euclidean equation is:

:

pn = n-dimension of point p.

qn = n-dimension of point q.

Hierarchical Clustering

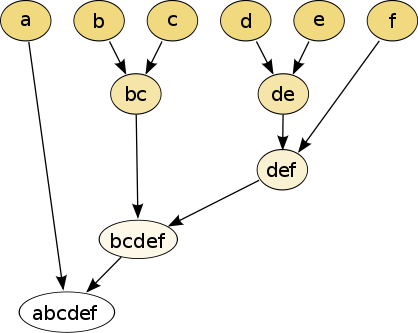

[edit | edit source]Hierarchical clustering is when previously defined clusters are used to determine new clusters. A hierarchical algorithm can be either an agglomerative algorithm or a divisive algorithm. An agglomerative algorithms starts with each data element as a separate cluster and then an algorithm used to sort them into larger clusters of the same similarity. A divisive algorithm works the exact opposite. It starts with the data set as one cluster and then divides the larger cluster into smaller ones.

Linkage

[edit | edit source]The term linkage refers distance between two points of two different clusters. The way that the distance is computed determines what type of linkage is used. All of the following types of linkages apply to hierarchical clustering.

Single linkage is the distance between the closet members of two different data clusters in hierarchical clustering.

Complete linkage is the opposite of single linkage in that the maximum distance between two members of two different clusters in calculated. This can be useful when compact clusters are needed.

Average linkage takes the average distance between the two clusters. It is also referred to as Unweighted Pair-Group Method using Arithmetic averages(UPGMA). This method is the most computational intense

Partitional Clustering

[edit | edit source]Partitional clustering finds the all the clusters at one time.

Self Organizing Maps

[edit | edit source]A self organizing map is (SOM) is best used when analyzing high dimensional data. A SOM can be used to reduce the dimensionality of the data so that it may be visualized. It consists of a training part and a mapping part. The training part builds the map using a sample input. The mapping part recognizes a new data vector and places it in the map according to the training algorithm. The SOM is made up of nodes that have a weight vector associated to them. The weight is assigned with small random values or it can be sampled using principal component eigenvectors. Using the eigenvectors will produce a better map because the weight would already have a good approximation. The weight vector has the same dimensionality of the input vector. A new data vector is placed by finding a node with the closest weight vector to it and assigning it the coordinates of that node to the vector. Euclidean distance is used to determine the closest neighbors. A SOM is best used for protein chip analysis if a particular protein is known and you want to see if there are any similar proteins to it.

An example of a self organizing map.

K-means Clustering

[edit | edit source]K-means clustering works by clustering n objects into k groups. The number of groups (k) has to be less than the number of objects(n) or else the algorithm will not work. The initial n values are divided into k sets using Lloyd’s algorithm, which iterates through the data set by sorting through common data points. The center of each of the newly formed cluster is then calculated and the algorithm is applied again. This process repeats until the centroids no longer switches clusters. Other types of algorithms can be used to iterate through the points besides Lloyd’s algorithm. Another type of clustering similar to K-means is fuzzy c-means. With a fuzzy c-mean cluster the points are based on the degree of likelihood that the point will be in a cluster. K-means clustering is appropriate to use if there is a specific number of protein groups you want to analyze on the protein chip.

An example of K-means clustering

Neural Network

[edit | edit source]A neural network can be used to determine relationships between data of finding patterns within the data set. It is called a neural network because it is related to the way the central nervous system in the human body works. Nodes or neurons are connected in the network. Different algorithms can be used to change the strength of the connections within the network. A cost function is employed depending on the data being studied. A neural network has to be trained in the same way a self organized map needs to be trained. This is called learning. There are set algorithms for different learning paradigms. Neural networks can be used in analyzing statistics, cognitive psychology, and artificial intelligence. The steps taken for a neural network is to first choose the model. The choice of model depends on the input data and what is being analyzed. Next a learning algorithm needs to be selected. The algorithm can be changed to reflect the data set. The robustness of the model can be tweaked based on the cost function and the learning algorithm. The neural network would be best used with protein chips when analyzing a new set of data without any prior information about it, a function can be inferred from the observed data. The high complexity of the data from the protein chips fits well into a neural network algorithm.

Reproducibility

[edit | edit source]Once the data from the protein chip has been gathered it needs to be analyzed. The most common method is to use a means of clustering to find relating expression patterns. These clustering methods will discard data points that do not fit within range of the algorithm. The problem is that by repeating analysis of the data, different data points may be discarded from each analysis. If a specific gene expression is being researched the data that most closely matches the expected results is kept.

A study was conducted on 37 microarray data sets and 8 simulated data sets. A low stability was achieved for all four clustering algorithms for the real data set. For the simulated datasets a larger data set was used. The results were same until samples were larger than n = 50. K-means and CLARA showed a better stability for large sample sizes (300-500).

Microarray Quality Control(MAQC) is an effort to establish a standard for microarray analysis.

References

[edit | edit source]1. Bhadeshia, H. K. Neural Networks in Materials Science. ISIJ International, Vol. 39 (1999), No. 10, pp, 966-979. Retrieved on 2008-04-20.

2. Chen, J. Hsueh, H. et al. Reproducibility of microarray data: a further analysis of microarray quality control (MAQC) data. BMC Bioinformatics. 2007; 8: 412. Published online 2007 October 25. doi: 10.1186/1471-2105-8-412.

3. Garge, N. Page, G. et al. Reproducible Clusters from Microarray Research: Whiter? BMC Bioinformatics. 2005; 6(Suppl 2):S10. Published online 2005 July 15. doi: 10.1186/1471-2105-6-S2-S10.

4. Intro to SOM by Teuvo Kohonen. SOM Toolbox. Retrieved on 2008-04-20.

5. Wikipedia.org. Cluster Analysis

6. Wikipedia.org. K-means Clustering

7. Wikipedia.org. Neural Network

8. Wikipedia.org. Self Organizing Map.