Probability/Random Variables

Random variable

Motivation

In many experiments, the sample space contains so many possible outcomes that we may want to work with a "summary variable" for those outcomes instead. For example, suppose a poll asks 100 different people whether they agree with a certain proposal. Then, to keep a complete record of the answers from those 100 people, we may first use a number to indicate each response:

- number "1" for "agree".

- number "0" for "disagree".

(For simplicity, we assume that only these two responses are available.) After that, to record which person gave which response, we use a vector of 100 numbers. For example, $(1,0,0,1,\dotsc,1)$, $(0,1,1,0,\dotsc,0)$, etc. Since each coordinate of the vector is either "0" or "1", there are $2^{100}$ different vectors in total in the sample space (denoted by $\Omega$)! Hence, it is very tedious and complicated to work with that many outcomes in the sample space $\Omega$. Instead, we are often only interested in how many "agree" and "disagree" responses there are, rather than in which person gave which response, since these counts determine whether the proposal is agreed to by a majority, and thus capture the essence of the poll.

Hence, it is more convenient to define a variable $X$ which gives the number of "1"s among the 100 coordinates of every outcome in the sample space $\Omega$. Then, $X$ can only take 101 possible values: 0,1,2,...,100, which is far fewer than the number of outcomes in the original sample space.

Through this, we can change the original experiment to a new experiment, where the variable $X$ takes one of the 101 possible values according to certain probabilities. For this new experiment, the sample space becomes $\{0,1,2,\dotsc,100\}$.

During the above process of defining the variable $X$ (called a random variable), we have actually (implicitly) defined a function $X:\Omega\to\{0,1,2,\dotsc,100\}$ where the domain is the original sample space $\Omega$, and the range is $\{0,1,2,\dotsc,100\}$. Usually, we take the codomain of the random variable to be the set of all real numbers $\mathbb{R}$. That is, we define the random variable $X:\Omega\to\mathbb{R}$ by $X(\omega)=\text{number of "1"s in }\omega$ for every $\omega\in\Omega$.

Definition

To define a random variable formally, we need the concept of a measurable function:

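Definition. (Measurable function) Let $(\Omega,\mathcal{F})$ and $(\Omega',\mathcal{F}')$ be measurable spaces (that is, $\mathcal{F}$ and $\mathcal{F}'$ are $\sigma$-algebras of $\Omega$ and $\Omega'$ respectively). A function $f:\Omega\to\Omega'$ is ($(\mathcal{F},\mathcal{F}')$-)measurable if for every $B\in\mathcal{F}'$, the pre-image of $B$ under $f$, $f^{-1}(B)=\{\omega\in\Omega:f(\omega)\in B\}$, belongs to $\mathcal{F}$.

Remark.

- If $f$ is $(\mathcal{F},\mathcal{F}')$-measurable, then we may also write $f:(\Omega,\mathcal{F})\to(\Omega',\mathcal{F}')$ to emphasize the dependency on the $\sigma$-algebras $\mathcal{F}$ and $\mathcal{F}'$.

- We only consider the pre-image of a set $B$ in the $\sigma$-algebra $\mathcal{F}'$ since only the sets in $\mathcal{F}'$ are "well-behaved", and hence they are "of interest". Then, the measurability of $f$ ensures that the pre-image $f^{-1}(B)$ is also "well-behaved" (it belongs to $\mathcal{F}$).

- So, a measurable function preserves the "well-behavedness" of a set in some sense.

- It also turns out that using the pre-image (instead of the image) in the definition is more useful.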

Definition. (Random variable)

Let $(\Omega,\mathcal{F},\mathbb{P})$ be a probability space. A random variable is an $(\mathcal{F},\mathcal{B})$-measurable function $X:\Omega\to\mathbb{R}$.

Remark.

- Usually, a capital letter is used to represent a random variable, and the corresponding small letter is used to represent the realized value (i.e., the numerical value mapped from a sample point) of the random variable. For example, we say that a realized value of the random variable $X$ is $x$.

- The $\sigma$-algebra $\mathcal{B}$ is the Borel $\sigma$-algebra on $\mathbb{R}$. We will not discuss its definition in detail here.

- Since $X$ is $(\mathcal{F},\mathcal{B})$-measurable, we have for every set $B\in\mathcal{B}$, the pre-image $X^{-1}(B)\in\mathcal{F}$.

- It is common to use $\{X\in B\}$ to denote $X^{-1}(B)$. Furthermore, we use $\{X\le x\}$, $\{X=x\}$, etc. to denote $X^{-1}((-\infty,x])$, $X^{-1}(\{x\})$, etc.

- We require the random variable $X$ to be $(\mathcal{F},\mathcal{B})$-measurable, so that the probability $\mathbb{P}(\{X\in B\})$ (often written $\mathbb{P}(X\in B)$ instead) is defined for every $B\in\mathcal{B}$. (The domain of the probability measure $\mathbb{P}$ is $\mathcal{F}$, and $\{X\in B\}\in\mathcal{F}$ because of the $(\mathcal{F},\mathcal{B})$-measurability of the random variable $X$.)

- Generally, most functions (that are supposed to be random variables) we can think of are $(\mathcal{F},\mathcal{B})$-measurable. Hence, we will just assume without proof that the random variables constructed here are $(\mathcal{F},\mathcal{B})$-measurable, and thus are actually valid.

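By defining a random variable $X$ from a probability space $(\Omega,\mathcal{F},\mathbb{P})$, we actually induce a new probability space $(\mathcal{X},\mathcal{F}_X,\mathbb{P}_X)$ where

- The induced sample space $\mathcal{X}$ is the range of the random variable $X$: $\mathcal{X}=\{X(\omega):\omega\in\Omega\}$.

- The induced event space $\mathcal{F}_X$ is a $\sigma$-algebra of $\mathcal{X}$. (Here, we follow our previous convention: $\mathcal{F}_X=\mathcal{P}(\mathcal{X})$ when $\mathcal{X}$ is countable.)

- The induced probability measure $\mathbb{P}_X:\mathcal{F}_X\to[0,1]$ is defined by $\mathbb{P}_X(B)=\mathbb{P}(X\in B)=\mathbb{P}(\{\omega\in\Omega:X(\omega)\in B\})$ for every $B\in\mathcal{F}_X$.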

It turns out the induced probability measure satisfies all the probability axioms:

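Example. Prove that the induced probability measure $\mathbb{P}_X$ satisfies all the probability axioms, and hence is valid.

Proof. Nonnegativity: For every event $B\in\mathcal{F}_X$, $\mathbb{P}_X(B)=\mathbb{P}(X\in B)\ge 0$. Unitarity: We have $\mathbb{P}_X(\mathcal{X})=\mathbb{P}(X\in\mathcal{X})=\mathbb{P}(\Omega)=1$. Particularly, we always have $X(\omega)\in\mathcal{X}$ for every $\omega\in\Omega$ by the construction of $\mathcal{X}$ ($\mathcal{X}$ is the range of the random variable $X$).

Countable additivity: For every infinite sequence of pairwise disjoint events $B_1,B_2,\dotsc$ (every event involved belongs to $\mathcal{F}_X$), the pre-images $\{X\in B_1\},\{X\in B_2\},\dotsc$ are also pairwise disjoint, so $\mathbb{P}_X\left(\bigcup_{i=1}^{\infty}B_i\right)=\mathbb{P}\left(\bigcup_{i=1}^{\infty}\{X\in B_i\}\right)=\sum_{i=1}^{\infty}\mathbb{P}(X\in B_i)=\sum_{i=1}^{\infty}\mathbb{P}_X(B_i)$.

After proving this result, it follows that all properties of probability measures discussed previously also apply to the induced probability measure $\mathbb{P}_X$. Hence, we can use the properties of probability measures to calculate the probability $\mathbb{P}_X(B)$, and hence $\mathbb{P}(X\in B)$, for every set $B\in\mathcal{F}_X$. More generally, to calculate the probability $\mathbb{P}(X\in B)$ for every $B\in\mathcal{B}$ ($B$ does not necessarily belong to $\mathcal{F}_X$), we notice that $\{X\in B\}=\{X\in B\cap\mathcal{X}\}$, and it turns out that $B\cap\mathcal{X}\in\mathcal{F}_X$. Hence, we can calculate $\mathbb{P}(X\in B)$ by considering $\mathbb{P}_X(B\cap\mathcal{X})$.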

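Example. Suppose we toss a fair coin twice. Then, the sample space can be expressed by $\Omega=\{HH,HT,TH,TT\}$. Now, we define the random variable $X$ to be the number of heads obtained in the tosses of a sample point (this means $X$ maps every sample point in the sample space to the number of heads obtained in that sample point). Then, we have $X(HH)=2$, $X(HT)=X(TH)=1$, and $X(TT)=0$. Thus, $\mathcal{X}=\{0,1,2\}$. Hence, we have $\mathbb{P}(X=0)=\mathbb{P}(\{TT\})=\frac14$, $\mathbb{P}(X=1)=\mathbb{P}(\{HT,TH\})=\frac12$, and $\mathbb{P}(X=2)=\mathbb{P}(\{HH\})=\frac14$. (The four outcomes in the sample space should be equally likely.) (It is common to write $\mathbb{P}(X=x)$ instead of $\mathbb{P}(\{X=x\})$, $\mathbb{P}(X\le x)$ instead of $\mathbb{P}(\{X\le x\})$, etc.)

Exercise. Suppose we toss a fair coin three times, and define the random variable $X$ to be the number of heads obtained in the tosses of a sample point. Then, $\mathcal{X}=\{0,1,2,3\}$. Calculate the probability $\mathbb{P}(X=x)$ for every $x\in\mathcal{X}$. Hence, calculate the probability $\mathbb{P}(X\le x)$ for every $x\in\mathcal{X}$. (Hint: We can write $\mathbb{P}(X\le x)=\mathbb{P}(X\in(-\infty,x])$. Now, consider $\mathbb{P}_X((-\infty,x]\cap\mathcal{X})$.)

Solution. First, we have $\{X=0\}=\{TTT\}$, $\{X=1\}=\{HTT,THT,TTH\}$, $\{X=2\}=\{HHT,HTH,THH\}$, and $\{X=3\}=\{HHH\}$. It follows that we have $\mathbb{P}(X=0)=\frac18$, $\mathbb{P}(X=1)=\frac38$, $\mathbb{P}(X=2)=\frac38$, and $\mathbb{P}(X=3)=\frac18$. Since $\mathbb{P}(X\le x)=\mathbb{P}_X((-\infty,x]\cap\mathcal{X})=\mathbb{P}_X(\{0,1,\dotsc,x\})=\sum_{y=0}^{x}\mathbb{P}_X(\{y\})=\sum_{y=0}^{x}\mathbb{P}(X=y)$, it follows that we have $\mathbb{P}(X\le 0)=\frac18$, $\mathbb{P}(X\le 1)=\frac12$, $\mathbb{P}(X\le 2)=\frac78$, and $\mathbb{P}(X\le 3)=1$.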
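The counting in this exercise can be checked by listing the sample space directly. The following is only a minimal sketch in Python; the names `omega`, `X`, `pmf`, and `cdf` are chosen here for illustration and are not part of the text.

```python
from fractions import Fraction
from itertools import product

# Sample space for three tosses of a fair coin: 8 equally likely outcomes.
omega = list(product("HT", repeat=3))

def X(outcome):
    """Random variable: number of heads in one outcome."""
    return outcome.count("H")

# Induced pmf: P(X = x) = (number of outcomes mapped to x) / |omega|.
pmf = {}
for outcome in omega:
    pmf[X(outcome)] = pmf.get(X(outcome), 0) + Fraction(1, len(omega))

# P(X <= x) is the sum of P(X = y) over all y <= x in the range of X.
cdf = {x: sum(p for y, p in pmf.items() if y <= x) for x in sorted(pmf)}

print(pmf)
print(cdf)
```

Using exact fractions avoids rounding, so the values can be compared directly with a hand calculation.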

Sometimes, even when it is infeasible to list out all sample points in the sample space, we can still determine the probabilities related to the random variable.

Example. Consider the example about the poll discussed in the motivation part. We define the random variable $X$ to give the number of "1"s. Here, we assume that every sample point in the sample space is equally likely. Show that $\mathbb{P}(X=x)=\binom{100}{x}\big/2^{100}$ for every $x\in\{0,1,2,\dotsc,100\}$.

Proof. Since there are $\binom{100}{x}$ sample points that contain $x$ "1"s (regard this as placing $x$ indistinguishable "1"s into 100 distinguishable cells), and each of the $2^{100}$ sample points is equally likely, the result follows.
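The counting argument can be checked by brute force on a smaller poll, where listing every outcome is still feasible. This is a sketch under the assumption of a hypothetical poll size `n = 10` (the 100-person sample space is far too large to enumerate); `counts` and `pmf_poll` are illustrative names.

```python
from itertools import product
from math import comb

n = 10  # hypothetical small poll size, so that all 2**n outcomes can be listed

# counts[x] = number of outcomes (0/1 vectors of length n) with exactly x ones.
counts = [0] * (n + 1)
for outcome in product((0, 1), repeat=n):
    counts[sum(outcome)] += 1

# The enumeration agrees with the binomial coefficient from the counting argument.
assert counts == [comb(n, x) for x in range(n + 1)]

def pmf_poll(x, n=100):
    """P(X = x) for n equally likely 0/1 responses, without enumeration."""
    return comb(n, x) / 2**n

print(pmf_poll(50))  # probability of exactly 50 "agree" among 100
```

The formula is what makes the 100-person case tractable: `pmf_poll` never touches the sample space itself.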

A special kind of random variable that is quite useful is the indicator random variable, which is a special case of indicator function:

Definition. (Indicator function)

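The indicator function of a subset $A$ of a set $S$ is a function $\mathbf{1}_A:S\to\{0,1\}$ defined by $\mathbf{1}_A(x)=\begin{cases}1,&x\in A;\\0,&x\notin A.\end{cases}$

Remark.

- Special case: we can regard the indicator function as a random variable by modifying it a little bit:

- Let $(\Omega,\mathcal{F},\mathbb{P})$ be a probability space, and $A\in\mathcal{F}$ be an event. Then, the indicator random variable of the event $A$ is $\mathbf{1}_A:\Omega\to\mathbb{R}$ (here, we change the codomain to $\mathbb{R}$ to match the definition of random variable) defined by $\mathbf{1}_A(\omega)=\begin{cases}1,&\omega\in A;\\0,&\omega\notin A.\end{cases}$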

Example. Suppose we randomly select a citizen from a certain city, and record the annual income (in the currency used in that city) of that citizen. In this case, we can define the sample space as $\Omega=[0,\infty)$. Assume that the city has a taxation policy such that the annual income is taxable if it exceeds 10000 (in the same currency as $\omega$). Now, we let $X$ be the taxable income of the recorded annual income. To be more precise, $X$ is defined by $X(\omega)=\begin{cases}\omega,&\omega>10000;\\0,&\omega\le 10000\end{cases}$ for every $\omega\in\Omega$. That is, $X(\omega)=\omega\,\mathbf{1}_{(10000,\infty)}(\omega)$, denoted by $\omega\,\mathbf{1}\{\omega>10000\}$, for every $\omega\in\Omega$.

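Example. Suppose we roll two distinguishable dice, and define $X$ to be the sum of the numbers obtained in an outcome from the roll. Then, the sample space is $\Omega=\{(i,j):i,j\in\{1,2,\dotsc,6\}\}$. Here, we can see that the range of $X$ is $\mathcal{X}=\{2,3,\dotsc,12\}$. Calculate $\mathbb{P}(X=x)$ for every $x\in\mathcal{X}$.

Solution. Notice that there are 1,2,3,4,5,6,5,4,3,2,1 sample points in the sample space where $X=2,3,4,5,6,7,8,9,10,11,12$ respectively. Since the 36 sample points are equally likely, we have $\mathbb{P}(X=x)=\frac{6-|x-7|}{36}$ for every $x\in\mathcal{X}$, that is, the probabilities are $\frac{1}{36},\frac{2}{36},\frac{3}{36},\frac{4}{36},\frac{5}{36},\frac{6}{36},\frac{5}{36},\frac{4}{36},\frac{3}{36},\frac{2}{36},\frac{1}{36}$ respectively.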
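The count of sample points for each sum can be reproduced by enumerating all 36 rolls. A minimal Python sketch follows; `ways` and `pmf_sum` are names introduced here for illustration.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

faces = range(1, 7)

# ways[s] = number of ordered pairs (i, j) of faces with i + j = s.
ways = Counter(i + j for i, j in product(faces, repeat=2))

# Each of the 36 ordered pairs is equally likely.
pmf_sum = {s: Fraction(c, 36) for s, c in sorted(ways.items())}

for s, p in pmf_sum.items():
    print(s, ways[s], p)
```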

Cumulative distribution function

For every random variable $X$, there is a function associated with it, called the cumulative distribution function (cdf) of $X$:

Definition.

(Cumulative distribution function) The cumulative distribution function (cdf) of a random variable $X$, denoted by $F_X$ (or $F$), is $F_X(x)=\mathbb{P}(X\le x)$ for every $x\in\mathbb{R}$.

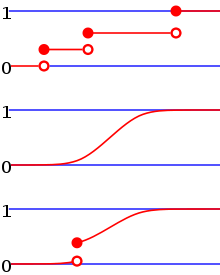

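Example. Consider a previous exercise where we toss a fair coin three times, and the random variable $X$ is defined to be the number of heads obtained in the tosses of a sample point. We have calculated $\mathbb{P}(X\le x)$ for every $x\in\mathcal{X}=\{0,1,2,3\}$. So, the cdf of the random variable $X$ is given by $F(x)=\begin{cases}0,&x<0;\\1/8,&0\le x<1;\\1/2,&1\le x<2;\\7/8,&2\le x<3;\\1,&x\ge 3.\end{cases}$ Graphically, the cdf is a step function with a jump at every $x\in\mathcal{X}$, where the size of the jump is $\mathbb{P}(X=x)$.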

We can see from the cdf in the example above that the cdf is not necessarily continuous. There are several discontinuities at the jump points. But we can notice that at each jump point the cdf takes the value at the top of the jump, by the definition of cdf (the inequality involved includes also the equality). Loosely speaking, this suggests that the cdf is right-continuous. However, the cdf is not left-continuous in general.
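The behaviour at the jump points can be checked numerically. The sketch below rebuilds the cdf of the three-toss example from its probabilities and evaluates it slightly to the left of, exactly at, and slightly to the right of each jump; the names `pmf3`, `F`, and `eps` are illustrative.

```python
from fractions import Fraction

# P(X = x) for the number of heads in three tosses of a fair coin.
pmf3 = {0: Fraction(1, 8), 1: Fraction(3, 8), 2: Fraction(3, 8), 3: Fraction(1, 8)}

def F(x):
    """cdf: total probability placed on points y with y <= x."""
    return sum(p for y, p in pmf3.items() if y <= x)

eps = Fraction(1, 10**6)
for x in pmf3:
    # Value just left of the jump, at the jump, and just right of the jump.
    print(x, F(x - eps), F(x), F(x + eps))
```

At every jump point the value at the point agrees with the value just to its right but not with the value just to its left, which is the right-continuity described above.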

In the following, we will discuss three defining properties of cdf.

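Theorem. (Defining properties of cdf) A function $F:\mathbb{R}\to\mathbb{R}$ is the cdf of a random variable $X$ if and only if

(i) $0\le F(x)\le 1$ for each real number $x$, with $\lim_{x\to-\infty}F(x)=0$ and $\lim_{x\to\infty}F(x)=1$.

(ii) $F$ is nondecreasing.

(iii) $F$ is right-continuous.

Proof. Only if part ($F$ is a cdf $\Rightarrow$ these three properties):

(i) The bounds follow from the axioms of probability since $F(x)$ is defined to be a probability. The two limits follow from the continuity of probability, since the events $\{X\le x\}$ decrease to $\varnothing$ as $x\to-\infty$ and increase to $\Omega$ as $x\to\infty$.

(ii) If $x\le y$, then $\{X\le x\}\subseteq\{X\le y\}$, so $F(x)=\mathbb{P}(X\le x)\le\mathbb{P}(X\le y)=F(y)$.

(iii) Fix an arbitrary positive sequence $(\epsilon_n)$ with $\epsilon_n\downarrow 0$. Define $A_n=\{X\le x+\epsilon_n\}$ for each positive integer $n$. It follows that $A_1\supseteq A_2\supseteq\dotsb$. Then, $\bigcap_{n=1}^{\infty}A_n=\{X\le x\}$. It follows from the continuity of probability that $F(x+\epsilon_n)=\mathbb{P}(A_n)\to\mathbb{P}(X\le x)=F(x)$ as $n\to\infty$, for each such sequence $(\epsilon_n)$. That is, $\lim_{\epsilon\to 0^+}F(x+\epsilon)=F(x)$, which is the definition of right-continuity.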

The if part is more complicated, and the following is optional. Outline:

- Draw an arbitrary curve $F$ satisfying the three properties.

- Throw a fair coin infinitely many times.

- Encode each result into a binary number, e.g. $HTHH\dotsc\mapsto 0.1011\dotsc_2$ (writing "1" for a head and "0" for a tail).

- Transform each binary number to a decimal number, e.g. $0.1011_2=0.6875$. Then, the decimal number is a random variable $U\in[0,1]$.

- Use this decimal number as the input of the inverse function of the arbitrarily drawn curve, and we get a value, which is also a random variable, say $X=F^{-1}(U)$.

- Then, $F$ is the cdf of the random variable $X$ obtained by throwing a fair coin infinitely many times.
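The outline above can be imitated numerically. The following is only a sketch, under two assumptions that are not part of the outline: the infinite sequence of tosses is truncated to 53 tosses (the precision of a floating-point number), and the "drawn curve" is taken to be $1-e^{-x}$ for $x\ge 0$, whose inverse has a closed form. The function names are illustrative.

```python
import math
import random

def uniform_from_coin_flips(rng, n_flips=53):
    """Read n_flips fair coin tosses as the binary digits 0.b1 b2 b3 ..."""
    u = 0.0
    for k in range(1, n_flips + 1):
        bit = rng.getrandbits(1)  # 1 = head, 0 = tail
        u += bit * 2.0 ** (-k)
    return u

def F_inverse(u):
    """Inverse of the assumed curve F(x) = 1 - exp(-x), x >= 0."""
    return -math.log(1.0 - u)

rng = random.Random(0)  # fixed seed so that the run is reproducible
samples = [F_inverse(uniform_from_coin_flips(rng)) for _ in range(20000)]

# Fraction of generated values not exceeding x0, to compare with F(x0).
x0 = 1.0
empirical = sum(s <= x0 for s in samples) / len(samples)
print(empirical, 1.0 - math.exp(-x0))
```

The two printed numbers should be close, which illustrates (but does not prove) that the generated values have the drawn curve as their cdf.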

Sometimes, we are only interested in the values $x$ at which the random variable $X$ carries positive probability or positive density, which are more 'important'.

Roughly speaking, these values are the elements of the support of $X$, which is defined in the following.

Definition. (Support of random variable) The support of a random variable $X$, $\operatorname{supp}(X)$, is the smallest closed set $S$ such that $\mathbb{P}(X\in S)=1$.

Remark.

- E.g. a closed interval is a closed set.

- Closedness will not be emphasized in this book.

- Practically, $\operatorname{supp}(X)=\{x\in\mathbb{R}:f(x)>0\}$ (strictly speaking, the closure of this set, which is the smallest closed set containing it).

- $f$ is the probability mass function for discrete random variables;

- $f$ is the probability density function for continuous random variables.

- The terms mentioned above will be defined later.

Example. If then , since and this set is the smallest set among all sets satisfying this requirement.

Remark. etc. also satisfy the requirement, but they are not the smallest set.

Discrete random variables

Definition. (Discrete random variables) If $\operatorname{supp}(X)$ is countable (i.e. 'enumerable' or 'listable'), then the random variable $X$ is a discrete random variable.

Example. Let $X$ be the number of successes among $n$ Bernoulli trials. Then, $X$ is a discrete random variable, since $\operatorname{supp}(X)=\{0,1,2,\dotsc,n\}$, which is countable.

On the other hand, if we let $Y$ be the temperature on the Celsius scale, $Y$ is not discrete, since $\operatorname{supp}(Y)=[-273.15,\,1.417\times 10^{32}]$ (from absolute zero to the Planck temperature), which is not countable.

Often, for a discrete random variable, we are interested in the probability that the random variable takes a specific value. So, we have a function that gives the corresponding probability for each specific value taken, namely the probability mass function.

Definition.

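(Probability mass function) Let $X$ be a discrete random variable. The probability mass function (pmf) of $X$ is $f(x)=\mathbb{P}(X=x)$ for every $x\in\mathbb{R}$.

Remark.

- Alternative names include mass function and probability function.

- If the random variable $X$ is discrete, then $\operatorname{supp}(X)=\{x\in\mathbb{R}:f(x)>0\}$ (in the cases considered here, this set is closed).

- The cdf of the random variable $X$ is $F(x)=\sum_{y\in\operatorname{supp}(X),\,y\le x}f(y)$. It follows that the sum of the values of the pmf at each $x$ inside the support equals one.

- The cdf of a discrete random variable $X$ is a step function with jumps at the points in $\operatorname{supp}(X)$, and the size of each jump defines the pmf of $X$ at the corresponding point in $\operatorname{supp}(X)$.

Example. Suppose we throw a fair six-faced die one time. Let $X$ be the number facing up. Then, the pmf of $X$ is $f(x)=\frac16\,\mathbf{1}\{x\in\{1,2,3,4,5,6\}\}$.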
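The relationship between the pmf and the cdf of a discrete random variable can be illustrated with the fair die. In this sketch the cdf is built by summing the pmf, and the pmf is then recovered from the jump sizes of the cdf; `pmf_die`, `cdf_die`, and `jumps` are illustrative names, and the left limit at an integer point is evaluated half a unit to the left, which works only because the support consists of integers.

```python
from fractions import Fraction

support = range(1, 7)
pmf_die = {x: Fraction(1, 6) for x in support}

def cdf_die(x):
    """F(x): sum of the pmf over support points y with y <= x."""
    return sum((p for y, p in pmf_die.items() if y <= x), Fraction(0))

# Jump of the cdf at x: F(x) minus the value just to the left of x.
jumps = {x: cdf_die(x) - cdf_die(x - Fraction(1, 2)) for x in support}

print([cdf_die(x) for x in support])
print(jumps == pmf_die)
```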

Continuous random variables

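Suppose $X$ is a discrete random variable. Partitioning a set $S$ into small disjoint intervals $[x_1,x_1+\Delta x_1],\dotsc$ gives $\mathbb{P}(X\in S)=\mathbb{P}\left(X\in\bigcup_i[x_i,x_i+\Delta x_i]\right)=\sum_i\mathbb{P}\big(X\in[x_i,x_i+\Delta x_i]\big)=\sum_i\underbrace{\frac{\mathbb{P}\big(X\in[x_i,x_i+\Delta x_i]\big)}{\Delta x_i}}_{\text{probability per unit}}\cdot\Delta x_i.$ In particular, the probability per unit can be interpreted as the density of the probability of $X$ over the interval. (The higher the density, the more probability is distributed (or allocated) to that interval.)

Taking the limit, $\lim_{\Delta x_i\to 0}\sum_i\underbrace{\frac{\mathbb{P}\big(X\in[x_i,x_i+\Delta x_i]\big)}{\Delta x_i}}_{\text{density}}\cdot\Delta x_i=\int_S\underbrace{f(x)}_{\text{density}}\,dx,$ in which, intuitively and non-rigorously, $f(x)\,dx$ can be interpreted as the probability over the 'infinitesimal' interval $[x,x+dx]$, i.e. $\mathbb{P}(X\in[x,x+dx])$, and $f(x)$ can be interpreted as the density of the probability over the 'infinitesimal' interval, i.e. $\frac{\mathbb{P}(X\in[x,x+dx])}{dx}$.

These motivate the following definition.

Definition. (Continuous random variable) A random variable $X$ is continuous if $\mathbb{P}(X\in S)=\int_S f(x)\,dx$ for each (measurable) set $S$ and for some nonnegative function $f$.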

Remark.

- The function $f$ is called the probability density function (pdf), density function, or (rarely) probability function.

- If $X$ is continuous, then the probability of each single value is zero, i.e. $\mathbb{P}(X=x)=0$ for each real number $x$.

- This can be seen by setting $S=\{x\}$: then $\mathbb{P}(X=x)=\int_x^x f(u)\,du=0$ (the dummy variable is changed).

- By setting $S=(-\infty,x]$, the cdf is $F(x)=\mathbb{P}\big(X\in(-\infty,x]\big)=\int_{-\infty}^{x}f(u)\,du$.

- Measurability will not be emphasized. The sets encountered in this book are all measurable.

- $\int_S f(x)\,dx$ is the area under the pdf over $S$, which represents probability (which is obtained by integrating the density function over the set $S$).
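The "area under the pdf" interpretation can be illustrated with a Riemann sum. The sketch below assumes, purely for illustration, the density $e^{-x}$ for $x\ge 0$ (and 0 otherwise), for which the exact probability of an interval is known in closed form; `pdf` and `prob_interval` are hypothetical names.

```python
import math

def pdf(x):
    """Assumed density for illustration: exp(-x) for x >= 0, else 0."""
    return math.exp(-x) if x >= 0 else 0.0

def prob_interval(a, b, n=100_000):
    """Midpoint Riemann sum of the pdf over [a, b]."""
    dx = (b - a) / n
    return sum(pdf(a + (i + 0.5) * dx) for i in range(n)) * dx

p = prob_interval(0.5, 2.0)
exact = math.exp(-0.5) - math.exp(-2.0)
print(p, exact)

# An interval of zero width has zero area, so a single point gets probability 0.
p_point = prob_interval(1.0, 1.0)
print(p_point)
```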

The name continuous r.v. comes from the result that the cdf of this kind of r.v. is continuous.

Proposition. (Continuity of cdf of continuous random variable) If a random variable is continuous, its cdf is also continuous (not just right-continuous).

Proof. Since $F(x)=\int_{-\infty}^{x}f(u)\,du$ (an integral is continuous as a function of its upper limit), the cdf is continuous.

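Example. (Exponential distribution) The function $F(x)=(1-e^{-x})\,\mathbf{1}\{x\ge 0\}$ is a cdf of a continuous random variable since

- It is nonnegative.

- $0<e^{-x}\le 1$ for every $x\ge 0$. So, $F(x)\le 1$ for every real number $x$, with $F(x)=0$ for $x<0$ and $F(x)\to 1$ as $x\to\infty$.

- It is nondecreasing.

- It is right-continuous (and also continuous).

Proposition. (Finding pdf using cdf) If the cdf $F$ of a continuous random variable is differentiable, then the pdf is $f(x)=F'(x)$.

Proof. This follows from the fundamental theorem of calculus: $F'(x)=\frac{d}{dx}\int_{-\infty}^{x}f(u)\,du=f(x)$.

Remark. Since $F$ is nondecreasing, $F'(x)\ge 0$. This shows that $f$ is always nonnegative if $F$ is differentiable. It is a motivation for us to define the pdf to be nonnegative.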
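The relation between a differentiable cdf and its pdf can be checked numerically with a finite difference. This sketch assumes the cdf $1-e^{-x}$ for $x\ge 0$ (and 0 otherwise), whose derivative for $x>0$ is $e^{-x}$; `cdf` and `pdf_from_cdf` are illustrative names.

```python
import math

def cdf(x):
    """Assumed cdf for illustration: 1 - exp(-x) for x >= 0, else 0."""
    return 1.0 - math.exp(-x) if x >= 0 else 0.0

def pdf_from_cdf(x, h=1e-5):
    """Central difference approximation to the derivative of the cdf at x."""
    return (cdf(x + h) - cdf(x - h)) / (2 * h)

for x in (0.5, 1.0, 2.0):
    # The approximation should agree with exp(-x) and be nonnegative.
    print(x, pdf_from_cdf(x), math.exp(-x))
```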

Without further assumption, the pdf is not unique, i.e. a random variable may have multiple pdf's, since, e.g., we may change the value of the pdf at a single point outside its support to an arbitrary nonnegative real number (this does not affect the probabilities, since the integral of the pdf over a single point is zero regardless of the value there), and this gives another valid pdf for the random variable. To tackle this, we conventionally set $f(x)=0$ for each $x\notin\operatorname{supp}(X)$ to make the pdf unique, and to make calculations more convenient.

Example. (Uniform distribution) Given that is a pdf of a continuous random variable , the probability

Mixed random variables

After reading the previous two sections, you may think that a random variable must be either discrete or continuous. Actually, this is wrong: a random variable can be neither discrete nor continuous. An example of such a random variable is a mixed random variable, which is discussed in this section.

Theorem. (cdf decomposition) The cdf $F$ of each random variable $X$ can be decomposed as a sum of three components: $F(x)=\alpha F_d(x)+\beta F_c(x)+\gamma F_s(x)$ for some nonnegative constants $\alpha,\beta,\gamma$ such that $\alpha+\beta+\gamma=1$, in which $x$ is a real number, and $F_d,F_c,F_s$ are the cdf's of a discrete, a continuous, and a singular random variable respectively.

Remark.

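- If $\alpha>0$ and $\beta>0$, then $X$ is a mixed random variable.

- We will not discuss singular random variables in this book, since they are quite advanced.

- One interpretation of this formula is: $X$ behaves as a discrete random variable with probability $\alpha$, as a continuous random variable with probability $\beta$, and as a singular random variable with probability $\gamma$.

- If $X$ is a discrete (continuous) random variable, then $\alpha=1$ ($\beta=1$).

- We may also decompose the pdf similarly, but we have different ways to find the pdf of a discrete and of a continuous random variable from the corresponding cdf.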

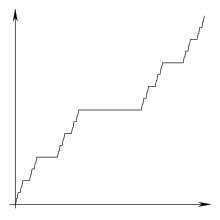

An example of singular random variable is the Cantor distribution function (sometimes known as Devil's Staircase), which is illustrated by the following graph. The graph pattern keeps repeating when you enlarge the graph.

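Example. Let $F_d$ be the cdf of a discrete random variable, and let $F_c$ be the cdf of a continuous random variable. Then, $F(x)=\frac12\big(F_d(x)+F_c(x)\big)$ is a cdf of a mixed random variable $X$, with probability $\frac12$ to be discrete and probability $\frac12$ to be continuous, since it is nonnegative, nondecreasing, right-continuous and $\lim_{x\to\infty}F(x)=\frac12\left[\lim_{x\to\infty}\big(F_d(x)+F_c(x)\big)\right]=\frac12(1+1)=1$.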
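A mixed random variable of this kind can be simulated by first choosing which component generates the value. The components below are hypothetical choices made only for this sketch (a point mass at 0 for the discrete part, and the uniform distribution on the interval from 0 to 1 for the continuous part), each used with probability one half; they are not taken from the example above.

```python
import random

def F_d(x):
    """cdf of a point mass at 0 (hypothetical discrete component)."""
    return 1.0 if x >= 0 else 0.0

def F_c(x):
    """cdf of the uniform distribution on [0, 1] (hypothetical continuous component)."""
    return min(max(x, 0.0), 1.0)

def F_mixed(x):
    """Equal-weight mixture of the two component cdfs."""
    return 0.5 * (F_d(x) + F_c(x))

rng = random.Random(1)  # fixed seed so that the run is reproducible

def sample():
    # A fair "coin" decides which component generates the value.
    return 0.0 if rng.random() < 0.5 else rng.random()

draws = [sample() for _ in range(20000)]
share_at_zero = sum(d == 0.0 for d in draws) / len(draws)

print(F_mixed(-1.0), F_mixed(0.0), F_mixed(0.5), F_mixed(2.0))
print(share_at_zero)  # should be close to the jump of F_mixed at 0
```

The mixed cdf has a single jump of size one half at 0 (the discrete part) and then rises continuously (the continuous part), so it is neither a pure step function nor continuous everywhere.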

Exercise. Consider the function . It is given that is a cdf of a random variable .

(a) Show that .

(b) Show that the pdf of is

(c) Show that the probability for to be continuous is .

(d) Show that is .

(e) Show that the events and are independent if .

(a) Since is a cdf, and when ,

(b) Since is a mixed random variable, for the discrete random variable part, the pdf is On the other hand, for the continuous random variable part, the pdf is Therefore, the pdf of is

(c) We can see that can be decomposed as follows: Thus, the probability for to be continuous is .

(d)

(e) If , . Thus, i.e. and are independent.
