Cyberbotics' Robot Curriculum/Advanced Programming Exercises

This chapter requires advanced knowledge in computer science. It will guide you through a selection of advanced topics by means of exercises all based on the e-puck robot. At this level, the subject becomes increasingly rich and complex, so we will not try to be exhaustive. Instead, we will focus on five hot topics to get an insight into today robotics. The first exercise is about position estimation using odometry. The second is about path planning with NF1 and potential fields. The third one is about pattern recognition using artificial neural networks and supervised learning. Eventually, we will speak of unsupervised learning with particle swarm optimization (PSO) and the simultaneous localization and mapping problem (SLAM) will be investigated.

Odometry [Advanced]

[edit | edit source]We saw in previous exercises that the e-puck robot has two DC motors and posses an encoder for each. These encoders count the number of steps done by the motors (1000 steps for one rotation of the wheel). By recording this information, the trajectory of the robot can be estimated. This is called odometry. In the following exercise we will learn odometry basics.

The major drawback of the above procedure is error accumulation. At each step (each time you take an encoder measurement), the position update will involve some error. This error accumulates over time and therefore renders accurate tracking over large distances impossible. Over short distances, however, odometry can provide extremely precise results. To get such results, it is crucial to calibrate the robot. Tiny differences in wheel diameter will result in important errors after a few meters, if they are not properly taken into account. Note that the calibration must be done for each robot and preferably on the surface on which it will be used later on. The procedure also applies to the simulated e-puck.

Some theory

[edit | edit source]For a differential drive robot, the position of the robot can be estimated by looking at the difference in the encoder values: and . With these values we can update the position of the robot to find its current position . This update needs to be done as often as possible to maximize the precision of the whole method. We express and . Thus we have and where b is the distance between the wheels. As a summary we have:

These equations are given in section 5.2.4 of [1]. You will find more information on error propagation in the same reference.

Calibrate your e-puck

[edit | edit source]Open the following world file:

.../worlds/advanced_odometry.wbt

This world has one robot and the odometry equations are already implemented. The module odometry (.h and .c) tracks the position of the robot, the module odometry_goto (.h and .c) is made to give the robot a destination and compute the needed motor speeds to lead it there.

The calibration procedure is adapted from [2] for the e-puck robot, its goal is to compute the following three parameters:

- Distance per increment for left wheel (left conversion factor)

- Distance per increment for right wheel (right conversion factor)

- Distance between the two wheels (axis length)

Those parameters can be estimated rather easily but we cannot measure them directly with enough precision. Thus we will measure 4 other parameters instead and do some math to get the values we need. Each of these measures is a step in the procedure:

- Increments per rotation is the number of increments per wheel rotation

- Axis wheel ratio is the ratio between the axis length and the mean wheel diameter

- Diameter left and diameter right are the two wheel diameters

- Scaling factor is self explanatory

Once we have those parameters we can compute the previous parameters as follows:

Step 1 - Increments per tour

[edit | edit source]The number of increments per tour can be found experimentally. In this test, the robot can accelerate, stabilize its speed and do a given number of motor increments. This value can be modified by the constant INCREMENT_TEST in advanced_odometry.c Of course, every change in the code must be followed by a build and a simulation revert.

![]() [P.1] Run the first step with key '1' with the

[P.1] Run the first step with key '1' with the INCREMENT_TEST value to 1000. Check that the wheel has done exactly one revolution by putting a marker on the wheel. If it is not the case, find a value such that the wheel does a perfect turn and check it by doing 10 turns instead of 1.

Step 2 - Axis wheel ratio

[edit | edit source]If you search in the documentation of the e-puck you will find that the wheel diameter should be 41mm for each wheel and that the distance between the wheels is 53mm which gives a ratio of 1.293. To verify this we will command the robot to rotate in place several times. To do a complete turn we will need 1000 * 1.293 = 1293 increments. The number of steps can be adjusted with the constant RATIO_TEST.

![]() [P.2] Run the second step with key '2' and adjust the value as befor to have one perfect turn. Then verify it with 10 turns or more. Once you have a good value do the math backward to find out what is the axis wheel ratio.

[P.2] Run the second step with key '2' and adjust the value as befor to have one perfect turn. Then verify it with 10 turns or more. Once you have a good value do the math backward to find out what is the axis wheel ratio.

Step 3 - Wheels diameters

[edit | edit source]To experimentally find out diameter differences between the two wheels, we will load our current odometry model onto the robot and then let it move around in your arena while keeping track of its position. At the end, we let the robot move back to where it thinks it started, and note the offset with the actual initial position. We will give four waypoints to the robots such that it will move along a square. By default the side of the square measures 0.2 m but if you have more space you can increase this size to get better results.

![]() [P.3] Enter the value for the number of increments per tour and the axis wheel ratio in the file

[P.3] Enter the value for the number of increments per tour and the axis wheel ratio in the file odometry.c. This will provide the accuracy we need to finish the calibration.

![]() [P.4] Run the third test with key '3', the robot will move and come back near its start position. If the square is not closed, as shown on the figure called "Open and closed square trajectories", increase the left diameter and decrease the right diameter in

[P.4] Run the third test with key '3', the robot will move and come back near its start position. If the square is not closed, as shown on the figure called "Open and closed square trajectories", increase the left diameter and decrease the right diameter in odometry.c. This means: and . If on the contrary the trajectory is too closed, as shown on the same figure, decrease the left diameter and increase the right diameter.

Step 4 - Scaling factor

[edit | edit source]The scaling factor is the easiest to find out, we just run the robot on a straight line for a given distance (0.3m in the code) and measure the actual covered distance.

![]() [P.5] Run the fourth test with key '4', the robot will move. Measure the covered distance and compute the scaling factor as follows: . Again, report this value in

[P.5] Run the fourth test with key '4', the robot will move. Measure the covered distance and compute the scaling factor as follows: . Again, report this value in odometry.c.

Your robot is now calibrated.

![]() [Q.1] We did a whole procedure to limit the error in the odometry process but where does this error come from ? What are the causes ?

[Q.1] We did a whole procedure to limit the error in the odometry process but where does this error come from ? What are the causes ?

Use the odometry

[edit | edit source]Now that we have a complete and calibrated odometry module we will use it. The commands to move the robot are the summarized in the table.

| Keyboard key | Action |

|---|---|

| 1 | Start step 1 of calibration |

| 2 | Start step 2 of calibration |

| 3 | Start step 3 of calibration |

| 4 | Start step 4 of calibration |

| UP | Increase robot speed |

| DOWN | Decrease robot speed |

| LEFT | Turn left |

| RIGHT | Turn right |

| S | Stop the robot |

| R | Reset the encoders |

![]() [P.6] Using odometry can you compute the surface of the blue hexagon ?

[P.6] Using odometry can you compute the surface of the blue hexagon ?

This was a simple use of odometry, but in the next exercises you will see that we heavily depend on odometry to have an idea of the robot's position. We also use it to move the robot precisely. Exercises that make use of odometry are Path Planning and SLAM.

Path planning [Advanced]

[edit | edit source]Overview

[edit | edit source]The goal of this exercise is to implement and experiment with two methods of path planning: potential field and NF1. We will compare them to find out what are the advantages of both methods and what needs to be taken care of while using them. Path planning is heavily used in industry where you need to move, for instance, a robotic arm with 6 degrees of freedom. The techniques used in mobile robotics, especially in 2D as we do, are much simpler.

Potential field

[edit | edit source]This exercise is based on the section 6.2.1.3 of [1]. The goal is to implement a simple potential field control for the e-puck through the provided world.

Theory

[edit | edit source]The idea behind potential field is to create a field (or gradient) across the map that will direct the robot to the goal. We consider the robot as a point under the influence of an artificial potential field . The robot moves by following the field, as would a ball rolling downhill. This is possible by giving the goal an attractive force (i.e. the minimum potential) and obstacles very high repulsive force (peaks in the field). On the figures, you see the resulting contour plot for a single triangular obstacle (in blue) and a goal (in red), the same setup is plotted as a 3D field on next picture.

The resulting force can be computed at each position

where denotes the gradient of at position :

We can then separate the potential and the force as two different parts, an attractive part and a repulsive one. We can then compute them separately:

Application

[edit | edit source]Open the following world file:

.../worlds/advanced_path_planning.wbt

![]() [P.1] Implement the attractive goal potential and force according to equations to in the controller

[P.1] Implement the attractive goal potential and force according to equations to in the controller advanced_path_planning_potential_field.c

![]() [P.2] Implement the repulsive obstacle potential and force according to equations to .

[P.2] Implement the repulsive obstacle potential and force according to equations to .

![]() [P.3] Implement the control by calling the functions you just implemented with your current position as argument and following the computed force. The missing code is clearly indicated in the provided structure.

[P.3] Implement the control by calling the functions you just implemented with your current position as argument and following the computed force. The missing code is clearly indicated in the provided structure.

![]() [P.4] Test your code both in simulation and on the real e-puck. For the run on the real e-puck you can print a copy of the A3 sheet given in

[P.4] Test your code both in simulation and on the real e-puck. For the run on the real e-puck you can print a copy of the A3 sheet given in .../path_planning_obstacle.pdf

![]() [Q.1] Do you notice any difference ? Which one ? And why do they happen ?

[Q.1] Do you notice any difference ? Which one ? And why do they happen ?

![]() [P.5] Test other functions for the potential (for example, a linear, instead of quadratic, attractive potential).

[P.5] Test other functions for the potential (for example, a linear, instead of quadratic, attractive potential).

![]() [Q.2] There are methods to improve the trajectory of the robot (extended potential field for instance), think of how you could do to have a shorter path.

[Q.2] There are methods to improve the trajectory of the robot (extended potential field for instance), think of how you could do to have a shorter path.

NF1

[edit | edit source]The NF1 algorithm, also known as Grassfire, is an alternative approach to path planning. It is described in section 6.2.1.2 and in figure 6.15 of [1]. This algorithm is already implemented in the provided code.

Theory

[edit | edit source]The algorithm could be explained this way:

- Divide the plan into simple geometric areas called cells. In our case we do this by creating a grid with square cells and we might change the resolution if necessary.

- Determine which cells are occupied (by obstacles) and which are free. Also find out in which cell is the start point and where is the goal.

- Find a path in the free cells to reach the goal from the start position. In NF1 this is done by assigning a distance to each cell, the goal has distance 0 and then each movement increases the distance to the goal. This assignment can be done recursively on the whole grid.

The result of this process can be seen on figure called "Cell decomposition and distance to goal with NF1". We get a discretized gradient which is similar to the potential field we had before.

Application

[edit | edit source]![]() [P.7] Go through the NF1 controller code controller

[P.7] Go through the NF1 controller code controller advanced_path_planning_NF1.c and run it in simulation and on the real e-puck.

![]() [Q.3] What do you notice ? Is the behavior optimal ? Notice how it is based on the motion control algorithm used in step 3 of the odometry calibration procedure: Step 3.

[Q.3] What do you notice ? Is the behavior optimal ? Notice how it is based on the motion control algorithm used in step 3 of the odometry calibration procedure: Step 3.

![]() [P.8] What happens if you increase the configuration values for the motion control algorithm (

[P.8] What happens if you increase the configuration values for the motion control algorithm (k_alpha, k_beta and k_rho) in file .../lib/odometry_goto.c ? How can you explain that ? (Hint: Webots is a physically realistic simulator)

![]() [P.9] Can you modify the motion control algorithm to have a smoother behavior?

[P.9] Can you modify the motion control algorithm to have a smoother behavior?

Comparison

[edit | edit source]Now we will compare the two approaches to path planning to highlight the advantages and drawback of each method.

![]() [P.10] Using the blank A3 world sheet given in file

[P.10] Using the blank A3 world sheet given in file .../path_planning_blank.pdf, design a world where potential field should fail but not NF1.

![]() [P.11] Enter the obstacle coordinates in both controllers and run them in simultation to confirm the prediction.

[P.11] Enter the obstacle coordinates in both controllers and run them in simultation to confirm the prediction.

![]() [Q.4] Based on these exercises, list the advantages and drawbacks of each methods.

[Q.4] Based on these exercises, list the advantages and drawbacks of each methods.

Pattern Recognition using the Backpropagation Algorithm [Advanced]

[edit | edit source]Robot learning is one of the most exciting topics in computer science and in robotics. The aim of this exercise is to show that your robot is able to learn. First, the basics of supervised machine learning will be introduced. Then, you will use in details an advanced technique to recognize patterns in the e-puck camera image: the backpropagation algorithm. This exercise is spread over two topics: image processing and artificial neural networks.

Description of the Exercise

[edit | edit source]Generally speaking, the pattern recognition into an image has many applications such as the optical character recognition (OCR) used in many scanners or the faces detection used in many webcams. In robotics, it can be useful to locate the robot in an environment.

The purpose of this exercise is simply to recognize some landmarks on the wall. Your e-puck should be able to move randomly in a maze (see the figure) and, each time its camera is refreshed, to recognize the landmarks that it sees. The figure called "4 landmarks to recognize" depicts the landmarks used in this exercise. Note that the camera values are complex to treat. First, there are a huge number of values to treat (here: 52*39*3=6084 integers between 0 and 255). Moreover, some noise surrounds these values.

Intuition about Pattern Recognition

[edit | edit source]![]() [Q.1] Think about the problem described in the previous section. Describe a method to detect a blue landmark in the camera image values, i.e., to find the x-y coordinates and the size of the landmark in the image. (Hints: There are maybe several landmarks in one image.)

[Q.1] Think about the problem described in the previous section. Describe a method to detect a blue landmark in the camera image values, i.e., to find the x-y coordinates and the size of the landmark in the image. (Hints: There are maybe several landmarks in one image.)

![]() [Q.2] Once the position and the size of the landmarks are known, describe a method to recognize them, i.e., to determine at which class of landmarks it belongs to (either l1, l2, l3 or l4).

[Q.2] Once the position and the size of the landmarks are known, describe a method to recognize them, i.e., to determine at which class of landmarks it belongs to (either l1, l2, l3 or l4).

![]() [Q.3] Is your recognizing method easy to maintain? (Hint: if you modify the shape of the landmarks in order to get a horizontal cross, a diagonal cross, a circle and a checkerboard, is your method directly applicable?)

[Q.3] Is your recognizing method easy to maintain? (Hint: if you modify the shape of the landmarks in order to get a horizontal cross, a diagonal cross, a circle and a checkerboard, is your method directly applicable?)

Machine Learning

[edit | edit source]You observed in the last section that pattern recognition is not trivial. Some results can be achieved by programming empirically. But the program is then specific to the given problem.

How about a way in which your robot would be able to learn any landmark? During the rest of the exercise, a method of supervised learning is introduced. Supervised learning is a subset of the machine learning topic. It is constituted mainly by a function which maps the inputs of the robot with its outputs. This function can be trained using a database filled by an expert. This database contains pairs of one input and the corresponding output as expected by the expert. In the method used in this exercise, this function is represented by an artificial neural network (ANN) and the backpropagation algorithm is used to train the function. These features will be introduced in the following sections.

Neurons

[edit | edit source]The purpose is to model the capacity of learning. Which better solution to take inspiration from the best example we know in term of intelligence: the brain. Long-past, the biologists try to understand the mechanism of the brain. To sum up their results briefly, the brain is composed of a huge number (about 10^{11}) of basics cells called neurons. These cells are specialized in the propagation of information (electrical impulses). Each of these neurons has on average 7000 connections (called synaptic connection) with other neurons. These connections are chemical. An adult has on average 10^{15} of these connections in its brain. This incredible network maps our senses to muscles, and enables us to think, to feel, to memorize information, etc. On the figure below you see a representation of a biological neuron. The electrical information from the other neurons comes via the synaptic connection. According to this signal, the terminal button excites another neuron.

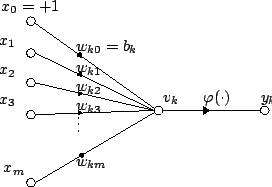

The next step is to model an artificial neuron. There exist several neuron models in the literature. But one thing is sure: the more biologically realistic the model is, the more complex it is. In this exercise, we will use a model often used in the literature: the McCulloch&Pitts neuron (see the figure). The explanation of the biological meaning of each term is out of the scope of this document, only the functioning of an artificial neuron is explained here. A neuron is modeled by several weighted inputs and a function to compute the output. The inputs (x vector) represents the synaptic connection by a floating-point value. The biological meaning of these values is the rate of the electrical peaks. They come either from another neuron or from the inputs of the system. The weights (w vector) balances the inputs. Thanks to these weights, a given input takes more or less importance to the output. You may have noticed on the picture that the first input is set to 1. Its corresponding weight is called the bias (b). The y output of the neuron is computed as a function of the weighted sum of the inputs . The function can be a sigmoid function .

A single neuron can already classify inputs. But it can only classify linearly separable inputs. To achieve better results artificial neurons are linked together like the neural network of our brain. The result is called an Artificial Neural Network (ANN).

The Backpropagation Algorithm

[edit | edit source]To train the ANN (finding the right weights) knowing the input and the expected output (supervised learning), there exist an algorithm called backpropagation. To apply it, a specific ANN shape is required. The ANN must be constructed by layer and each neuron of a layer has to be strictly linked with all the neurons of the previous and of the next layer. The algorithm is written in Algorithm 1 as reference. But, its explanation is out of the scope of this exercise. Nevertheless, information about this algorithm can be found on the Internet, [3] is a good starting point.

Backpropagation algorithm 1. Initialize the weights in the network (often randomly) 2. repeat * foreach example e in the training set do 1. O = neural-net-output(network, e) ; forward pass 2. T = teacher output for e 3. Calculate error (T - O) at the output units 4. Compute delta_wi for all weights from hidden layer to output layer ; backward pass 5. Compute delta_wi for all weights from input layer to hidden layer ; backward pass continued 6. Update the weights in the network * end 3. until all examples classified correctly or stopping criterion satisfied

The proposed Method

[edit | edit source]As shown in figure, the first step of the proposed method consists to extract the samples from the camera image and to preprocess them in order to directly send it to the ANN. Its results must be an array of floating values between 0 and 1 having a fixed size. So only the blue channel is kept and the resampled image is sampled into another resolution. The method to sample the image is the nearest neighbor interpolation. This method can be implemented quickly but the resulting samples are of very poor quality. However, for the landmarks used in this exercise, this method is sufficient.

The second step of the method is to send the values of the sampled image as input of an ANN (see figure). The input layer of the ANN has to have the same size as the sampled image pixels. The hidden layer of the ANN can have an arbitrary size. The last layer has the same size as the number of landmarks.

Train your Robot by yourself and test it

[edit | edit source]| Keyboard key | Action |

|---|---|

| 1 | Move the robot in front of the pattern 1 and select pattern 1 for learning |

| 2 | Move the robot in front of the pattern 2 and select pattern 2 for learning |

| 3 | Move the robot in front of the pattern 3 and select pattern 3 for learning |

| 4 | Move the robot in front of the pattern 4 and select pattern 4 for learning |

| UP | Select the next pattern for learning |

| DOWN | Select the previous pattern for learning |

| L | Enable (or disable) the learning mode |

| T | Enable (or disable) the testing mode |

| O | Load the weights stored in the weights.w file |

| S | Save the weights stored in the weights.w file |

| B | Stop the motors of the e-puck |

| R | The e-puck performs rotations |

| W | The e-puck walks randomly |

| D | The e-puck dances |

Open the following world file:

.../worlds/advanced_pattern_recognition.wbt

The table lists the commands of the exercise. The first group of commands enables to select a pattern to learn. The second one enables to learn the pattern selected (one backpropagation iteration on the ANN with the sampled image as input and the selected pattern as output) and to test a sampled image (to put it as input of the ANN and to observe its output). The third one enables to save and load the weights of the ANN. Finally, the last group enables to move the e-puck. The e-puck dance is particularly useful to add a noise during the training phase.

To train the e-puck, choose a pattern to learn, place the e-puck in front of the pattern and enable the learning mode.

To test the e-puck, place the e-puck anywhere and enable the testing mode.

![]() [P.1] Press the ”1” and the ”B” key strokes, then enable the learning mode thanks to the ”L” key stroke. Without moving the e-puck, press alternatively the ”1” to ”4” key strokes in order to train the other landmarks until the error on each of these landmarks is less than 10% (<0.1). You shall press randomly the numeric keys to achieve a fair evolution for each landmark, otherwise the weights might be biased towards one of the output. Then press the ”T” button for testing the results.

[P.1] Press the ”1” and the ”B” key strokes, then enable the learning mode thanks to the ”L” key stroke. Without moving the e-puck, press alternatively the ”1” to ”4” key strokes in order to train the other landmarks until the error on each of these landmarks is less than 10% (<0.1). You shall press randomly the numeric keys to achieve a fair evolution for each landmark, otherwise the weights might be biased towards one of the output. Then press the ”T” button for testing the results.

![]() [Q.4] Does your e-puck recognize well the landmarks when you place it thanks to the ”1” to ”4” key strokes? And what about this recognition when it moves randomly in the big board? What is the problem? How to correct it?

[Q.4] Does your e-puck recognize well the landmarks when you place it thanks to the ”1” to ”4” key strokes? And what about this recognition when it moves randomly in the big board? What is the problem? How to correct it?

![]() [P.2] Retry the e-puck training thanks to the previous results. (Hint: Revert the simulation in order to remove the weights of the ANN.)

[P.2] Retry the e-puck training thanks to the previous results. (Hint: Revert the simulation in order to remove the weights of the ANN.)

Parameters of the ANN

[edit | edit source]The ann.h file defines the structure of the ANN (size of the hidden layer, number of layers, etc.), the size of the sampled images and the learning rate.

![]() [P.3] What is the influence of the learning rate on the e-puck learning? What occurs if this rate is too high or too low?

[P.3] What is the influence of the learning rate on the e-puck learning? What occurs if this rate is too high or too low?

![]() [P.4] What is the influence of the hidden layer size?

[P.4] What is the influence of the hidden layer size?

![]() [P.5] Add at least one other hidden layer. What is its influence on the e-puck learning?

[P.5] Add at least one other hidden layer. What is its influence on the e-puck learning?

![]() [P.6] Improve the current image subsampling method. (Hint: There exist several methods to do interpolation. Search on the web: multivariate interpolation)

[P.6] Improve the current image subsampling method. (Hint: There exist several methods to do interpolation. Search on the web: multivariate interpolation)

![]() [P.7] Modify the resolution of the sample image. What is its influence on the e-puck learning?

[P.7] Modify the resolution of the sample image. What is its influence on the e-puck learning?

![]() [P.8] Replace the four initial landmarks by some other ones (ex: some icons you find on the Internet, your own creations, digits or the Rat's Life landmarks [4]). Modify the parameters of the ANN, of the backpropagation algorithm and of the image interpolation in order to recognize them with an error less than 3%.

[P.8] Replace the four initial landmarks by some other ones (ex: some icons you find on the Internet, your own creations, digits or the Rat's Life landmarks [4]). Modify the parameters of the ANN, of the backpropagation algorithm and of the image interpolation in order to recognize them with an error less than 3%.

Other Utilization of the ANN [Challenge]

[edit | edit source]![]() [Q.5] List some possible applications of an ANN and of the backpropagation algorithm on the e-puck without using the camera.

[Q.5] List some possible applications of an ANN and of the backpropagation algorithm on the e-puck without using the camera.

![]() [P.9] Implement one item of your list.

[P.9] Implement one item of your list.

Going Further

[edit | edit source]This exercise was inspired by papers about the OCR using the backpropagation algorithm, further information my be found in [3].

As a matter of fact, there exist other methods for the pattern recognition. The proposed method has the advantage to be biologically inspired and to be rather intuitive. This method can be extended in two ways: the utilization of more realistic neuron models and the utilization of some extension of the training algorithm. On the other hand, some completely different methods can be used such as statistically-based methods. The following Wikipedia page summarizes the existing solutions for supervised learning: Supervised learning

Unsupervised Learning using Particle Swarm Optimization (PSO) [Advanced]

[edit | edit source]In previous exercise we saw how to give a robot the capacity to learn something. This was done using an artificial neural network and the backpropagation algorithm, this was a supervised learning method. Now with PSO we will learn the basics of unsupervised learning. We want the robot to avoid obstacles as in Obstacle Avoidance Module with the Obstacle Avoidance Module but this time we will let it learn by itself. This technique is used when we don't have a teaching set, a set of input and their expected output, i.e., when good models do not exist and when the design space is too big (infinite) to be systematically searched. The role of the engineer is to specify the performance requirements and the problem encoding, the algorithm will do the rest.

Some insights about PSO

[edit | edit source]PSO is a relatively young but promising technique, it has been introduced by Eberhart and Kennedy in a 1995 paper [5]. Since then the algorithm has undergone many evolutions, more informations can be found in [6] and [7]. The key idea behind the algorithm is to explore a search space using a population of particles. Each of these particles represents a candidate solution and is characterized by a position and a velocity vector in the n-dimensional search space. Both vectors are randomly initialized and the particles are the flown through the search space. To evaluate the performance of a candidate solution we use a fitness function. This fitness function has to be designed specifically for the given problem, it will have to rank the performance of the solution. Typically we design a function returning values within the range [0,1] where 0 is the worst result and 1 is a perfect score. Each particle keeps track of its personal best solution called pbest and of the global best solution called gbest. Then at each iteration the velocity is changed (accelerated) toward its pbest and gbest. The flowchart shows the main PSO evolutionary loop.

Since we work in a multidimensional space we express the particles position as and the velocity as where i is the index of the particle and j represents the dimension in the search space. is then the personal best solution achieved by particle i and is the global best solution. The main equations are simple to understand and are expressed in this form:

We notice the function which generates a uniformly distributed random number between 0 and 1. We also notice three parameters that can be modified to tune the performance of the algorithm: , and .

Implementation

[edit | edit source]Open the following world file:

.../worlds/advanced_particle_swarm_optimization.wbt

In this exercise the goal is to give the ability to some robots to evolve on their own. To do that we use PSO and an artificial neural network (ANN, see Pattern Recognition using the Backpropagation Algorithm). Each robot will be running the same controller but with different weights for the ANN. PSO will act on the weights to evolve each controller, this means that the search space is a N-dimensional search space where N is the number of weights. The figure shows a representation of the network with one hidden layer and it's input and output.

![]() [Q.1] How could we specify that the robot should avoid obstacles as a fitness function ?

[Q.1] How could we specify that the robot should avoid obstacles as a fitness function ?

![]() [Q.2] Is the strategy “stay still while my sensors are not activated” successful with your function ? How to avoid this, i.e. how to encourage the robot to move fast ?

[Q.2] Is the strategy “stay still while my sensors are not activated” successful with your function ? How to avoid this, i.e. how to encourage the robot to move fast ?

![]() [Q.3] With your new function, what happens if the robot turns on itself at full speed ? If this strategy gives good results re-design your function to discourage this behavior.

[Q.3] With your new function, what happens if the robot turns on itself at full speed ? If this strategy gives good results re-design your function to discourage this behavior.

![]() [Q.4] Why do we use the previous speed as one of the input of the ANN ? Same question for the bias.

[Q.4] Why do we use the previous speed as one of the input of the ANN ? Same question for the bias.

If you didn't design a fitness function in the previous questions you can use the one proposed in [8] and used again in [9]. It is presented this way:

where is the average absolute wheel speed of both wheels, is the average difference between the wheel speed in absolute value and is the average value of the most active infrared sensor.

![]() [Q.5] Why is this fitness function adapted to each point of question 1-3 ?

[Q.5] Why is this fitness function adapted to each point of question 1-3 ?

![]() [P.1] Implement your fitness function or this function in the robot controller. (Hint: look for the TODO in the code)

[P.1] Implement your fitness function or this function in the robot controller. (Hint: look for the TODO in the code)

![]() [P.2] In the controller of the supervisor you have to set the number of run and their duration, good values are between 30 and 100 runs with a duration of at least 30 seconds. Now you can run the simulation a first time in fast mode... and get a coffee, learning is a long process.

[P.2] In the controller of the supervisor you have to set the number of run and their duration, good values are between 30 and 100 runs with a duration of at least 30 seconds. Now you can run the simulation a first time in fast mode... and get a coffee, learning is a long process.

![]() [Q.6] At the end of the simulation the simulation enters DEMO mode which means that all robots take the global best result as their controller. Analyse their behavior, is it a good obstacle avoidance algorithm ? Is any robot stuck after a while ? How could we improve the quality of the solution ?

[Q.6] At the end of the simulation the simulation enters DEMO mode which means that all robots take the global best result as their controller. Analyse their behavior, is it a good obstacle avoidance algorithm ? Is any robot stuck after a while ? How could we improve the quality of the solution ?

PSO modifications

[edit | edit source]We propose a way to improve the quality of the solution, we will modify the main PSO loop to give it more robustness against noise. In the step “Evaluate new particle position” we will also re-evaluate the personal best position for each particle. This helps to eliminate lucky runs were a bad solution gets a good fitness by chance. In the code this is done by evaluating all the personal best solutions together and it is done once each three run.

![]() [P.3] You can do this in the code of the supervisor, define

[P.3] You can do this in the code of the supervisor, define NOISE_RESISTANT to 1. Now build and run the simulation again.

![]() [Q.7] Is the resulting solution better ? Since we didn't change the number of run, we divide the number of evolution runs by enabling the noise resistant version. Why does this make sense ?

[Q.7] Is the resulting solution better ? Since we didn't change the number of run, we divide the number of evolution runs by enabling the noise resistant version. Why does this make sense ?

In the file pso.c you might have noticed the three parameters w, nw and pw. Those are the parameters used in the evolution function of PSO.

![]() [Q.8] What is the use of the w parameter ? What could happen if you set it to a value >1 ?

[Q.8] What is the use of the w parameter ? What could happen if you set it to a value >1 ?

Going further

[edit | edit source]To get further informations about PSO you can have a look at the paper proposed in this exercise [5], [6], [7], [8], [9].

Genetic Algorithms (GA) [Advanced]

[edit | edit source]Introduction

[edit | edit source]Genetic algorithms are optimization techniques invented by John Holland in the seventies and inspired by the Darwinian theory of evolution. Darwin’s theory is based on three basic principles, which are variation, heredity and selection. In nature, these three principles combine into a powerful optimization mechanism that maximizes the chances of survival of genes and explains the existence and the adaptation of complex life forms. The great idea of John Holland was to use the same Darwinian principles in computer simulations in order to solve engineering or mathematical problems. A genetic algorithm is a kind of artificial evolution of a population throughout a number of simulated generations. The population is made of candidate solutions to a domain specific problem. At every generation, the fitness of the candidates is evaluated with respect to one or several domain specific objectives. The best candidates are selected and allowed to reproduce; this is the selection principle. Reproduction generates new offspring based on the genetic material of two parents; this is the heredity principle. With a certain probability, the new offspring goes through mutations and therefore differs from its parents; this is the variation principle. Basic informations and pointers can be found on Wikipedia.

Simulation

[edit | edit source]Open the following world file:

.../worlds/advanced_genetic_algorithm.wbt

In this example you can observe a e-puck robot that pushes a load (small purple cylinder), while avoiding an obstacle (large brown cylinder). The simulation repeats the same action infinitely until the user pushes the O key. When the O key is pushed the supervisor starts the GA optimization. The GA fitness function is defined such as to push the load as far as possible (Euclidean distance) from its starting point. The genetic algorithm uses a population of 50 individuals selected by linear ranking. From one generation to the next, an elite composed of the 10% fittest individuals is preserved unchanged (cloned), this ensures that the very best individuals will not be lost. The remaining 90% of the population are reproduced sexually, using two-points crossover and mutations. The mutation probability of every gene is 0.06, which represents roughly one mutation per genome. The mutation amplitude is taken from a normal distribution with mean 0 and standard deviation 0.2. The GA optimization run for 50 generations, then the supervisor saves the genotype of the best individual in a file named "fittest.txt". When the simulation is restarted, this file is used to demonstrate the behavior of the best individual of the previous optimization.

Genotype Encoding

[edit | edit source]This simulation uses two different controllers; the supervisor controller runs the GA optimization, while the robot controller executes the robot's behavior. The robot's behavior is implemented as a direct sensor-to-actuator matrix that defines how much the speed of each wheel is influenced by every proximity sensor. The e-puck has 8 proximity sensors and 2 wheels, hence the sensor-to-actuator matrix is an 8*2 matrix. The genes are directly mapped to the sensor-to-actuator matrix, therefore each genotype has 16 genes. Each genes is coded as a double precision floating point number.

Questions

[edit | edit source]![]() [Q.1] How does the code specify that the load should be pushed far as possible ?

[Q.1] How does the code specify that the load should be pushed far as possible ?

![]() [Q.2] Does the robot needs all proximity sensors to solve this task ?

[Q.2] Does the robot needs all proximity sensors to solve this task ?

![]() [Q.3] How would you find out whether the crossover operation is more/less important than the mutation operation for this particular problem ?

[Q.3] How would you find out whether the crossover operation is more/less important than the mutation operation for this particular problem ?

![]() [P.1] Try to implement a fitness function that will teach the robot to do as many rotations as possible around the obstacle while pushing the load.

[P.1] Try to implement a fitness function that will teach the robot to do as many rotations as possible around the obstacle while pushing the load.

Bilateral Symmetry

[edit | edit source]The robot behavior used to push the load is completely left/right symmetrical. Hence it would be possible to encode only one half (left or right) of the matrix in each genotype. Then when the genotype is instantiated into a phenotype, the missing half of the matrix could be copied from the other part while respecting the robot's symmetry. By taking into account the bilateral symmetry, the number of genes can be divided by two, hence the search space becomes twice smaller and the GA will eventually converge more quickly to a solution.

![]() [P.2] Modify the supervisor and robot controller code to use bilateral symmetry for the genotype encoding ?

[P.2] Modify the supervisor and robot controller code to use bilateral symmetry for the genotype encoding ?

Going further *[Challenge]*

[edit | edit source]If you want to go further you can read papers on Evolutionary Robotics, good references are,[10][11] and.[12]

![]() [P.3] Try to modify this example such that the load would be pushed simultaneously by two e-puck robots instead of just one.

[P.3] Try to modify this example such that the load would be pushed simultaneously by two e-puck robots instead of just one.

SLAM [Advanced]

[edit | edit source]SLAM is the acronym for Simultaneous Localization And Mapping. The SLAM problem is for a mobile robot to concurrently estimate both a map of its environment and its position with respect to the map. It is a current research topic so we won't go in too many details but we will try to understand the key ideas behind this problem and give references for those who are interested..

Map building, first attempt

[edit | edit source]To illustrate the difficulty of this problem we will start with a naive approach and show its limitations. To do that, open the following world file:

.../worlds/advanced_slam.wbt

We will use a simple grid to model the map. The robot will start in the middle of the grid and will be able to modify it if it sees an obstacle. The grid is initialized to zero in each cell which means that it is initially empty. The robot will write a one in each cell where it finds an obstacle. Of course this will use the odometry to have an idea of the robot's position, we will reuse the same module as in exercise on odometry. Then we will display it on the screen so that we can examine it.

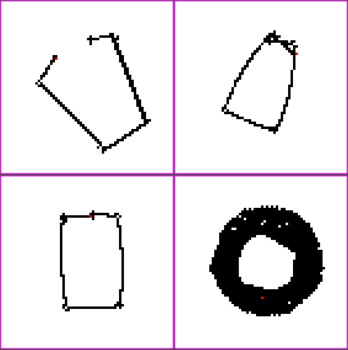

![]() [P.1] Run the simulation and notice the behavior of the robot, he is doing wall following. Now let it do a whole turn of this simplified arena, stop the simulation and look at the map displayed on the screen. The black points are obstacles while the white ones are free space, the red one is the robot current position.

[P.1] Run the simulation and notice the behavior of the robot, he is doing wall following. Now let it do a whole turn of this simplified arena, stop the simulation and look at the map displayed on the screen. The black points are obstacles while the white ones are free space, the red one is the robot current position.

![]() [Q.1] What can you notice about this map ? Look in particular at the width of the trace, at the point where the loop closes and in the corners.

[Q.1] What can you notice about this map ? Look in particular at the width of the trace, at the point where the loop closes and in the corners.

![]() [Q.2] What would happen in the map if you let the robot do 5 turns of the arena ? Check your prediction in simulation. Why is this result an issue ? Imagine what would happen with 10 or even 100 turns !

[Q.2] What would happen in the map if you let the robot do 5 turns of the arena ? Check your prediction in simulation. Why is this result an issue ? Imagine what would happen with 10 or even 100 turns !

On the picture you can see four of these runs plotted. The top row shows what you might obtain if your odometry is not perfectly calibrated, the loop is not closed or at least not properly. On the third image you see what is returned with a good calibration. It's better but even now this is not a perfect rectangle as the arena is. Finally the fourth picture shows the result of a very long run performed with the best calibration we found. Since the odometry cannot be perfect there will always be a small increase in the error during the run. On a long term this will cause a rotation of the plotted path and on a very long term (several minutes to an hour) we obtain this circle produced ba the rotation of the approximate rectangle.

This odometry error is a problem but if you think of it you will certainly come up with few other issues regarding this mapping technique. As a start in the reflexion we could stress the fact that a big matrix is very greedy in terms of memory. There exist several way to improve the map representation, starting with data structures, you might want to search for topological representation, line feature extraction or geometrically constrained SLAM.

Robot localization

[edit | edit source]This section is based on the laboratory D of the course Mobile Robots at EPFL. Now that we saw that map building is harder than it seems we will explore the localization problem. The robot is given a map and it must find out where it is located on the map. This is done by comparing the IR sensors measurements with the pre-established map and using odometry. The robot will have to integrate the sensor measurements over time since a single measurement is usually not sufficient to determine the position.

We can distinguish several localization problems so we will define some of them and limit ourselves to a single one. The first discrimination that must be done is static vs. dynamic environment, in our case the environment will be fixed and the robot only will move. Then the approach can be local or global, we can do only position tracking meaning we know where the robot is positioned and just have to follow it or localize it on the whole map “from scratch”. We will do a global localization example in this exercise. A third opposition is active vs. passive algorithm, while passive algorithm just process sensor data active ones will control the robot in order to minimize the position uncertainty, we will use a passive algorithm.

The base idea of our approach will be to assign to each cell of the grid a probability (or belief) and to use Bayes rule to update this probability distribution. Bayes rule states the following:

Since doesn't depend on we will only consider it as a normalizer and we can reformulate this rule as:

- is the prior probability distribution which gives the information we have about before incorporating the data

- is called the posterior probability distribution over and it is the information we have on knowing the data

- is usually called likelihood or generative model because it describes how state variables cause sensor measurements

We will now skip the Markov assumption and just give a bit of terminology before giving the algorithm:

- is the state of the robot and environment at instant . it will only include the robot position in our example but it could include much more (robot speed, location of moving objects in the environment, etc...)

- is the control action given before state . It will be the motor command.

- is the measurement data in state .

- is the map given to the robot, it takes no index since it does not vary

Any of these quantities can be expressed as a set, . We can now define the state transition probability which is the probabilistic law giving the evolution of state:

We can also define the measurement probability which is the probability to measure value knowing that we are in state :

Finally we have to define the belief which is the posterior probability of the state variable conditioned over the available data:

And if we compute it before including the latest measurement we call it the prediction:

Computing from is called the measurement update. We can now give the generic algorithm for Markov localization (which is just an adaptation of the Bayes rule to the mobile robot localization problem).

Markov localization(, , , ) for all do end for return

Now this algorithm can't be applied to our grid based map representation so we will give it under another form, as a discrete Bayes filter. This will allow us to implement the algorithm.

Discrete Bayes filter(, , ) for all do end for return

We can imagine that as replacing a curve by a histogram and it is exactly what happens if we think of it in on dimension, we have discretized the probability space. This algorithm is already implemented, you can use the same world file as for the mapping part but you will have to change the controller of the e-puck.

![]() [P.2] Look in the scene tree for the e-puck node and change the field controller to

[P.2] Look in the scene tree for the e-puck node and change the field controller to advanced_slam_2 instead of advanced_slam_1 and save the world file.

On the camera window we will plot the belief of the robot position as shades of red, the walls are shown in black and the most probable location is shown in cyan (there can be multiple best positions, especially at the beginning of the simulation). At the beginning the probability distribution is uniform over the whole map and it evolves over time according to the IR sensors measurements and to odometry information.

![]() [P.3] Run the simulation and let the robot localize itself. Why don't we have a single red point on the map but an area of higher values ?

[P.3] Run the simulation and let the robot localize itself. Why don't we have a single red point on the map but an area of higher values ?

![]() [Q.3] Can you explain why do we only have an small part of the former grid for this algorithm ?

[Q.3] Can you explain why do we only have an small part of the former grid for this algorithm ?

You might have noticed that the robot has only one possible position when it has localized itself. How is this possible since the rectangle is symmetric ? This is because we further simplified the algorithm. We just have to localize the robot in 2 dimensions instead of 3 . This means the robot knows its initial orientation and it removes the ambiguity.

![]() [Q.4] Read the code, do you understand how the belief is updated ?

[Q.4] Read the code, do you understand how the belief is updated ?

![]() [Q.5] Why do we apply a blur on the sensor model and on the odometry measurements ? What would happen if we didn't ?

[Q.5] Why do we apply a blur on the sensor model and on the odometry measurements ? What would happen if we didn't ?

![]() [P.4] Try to move the robot before it localizes itself (after only one step of simulation), the robot should of course be able to localize itself !

[P.4] Try to move the robot before it localizes itself (after only one step of simulation), the robot should of course be able to localize itself !

We have presented an algorithm to perform robot localization but there are many others ! If you are interested you can look at Monte-Carlo localization which is also based on the Bayes rule but represent the probability distributions by a set of particle instead of an histogram. Extended Kalman Filter (EKF) localization is also an interesting algorithm but it is slightly more complicated and if you want to go further you can have a look at Rao-Blackwellized filters.

Key challenges in SLAM

[edit | edit source]As you have seen with the two previous examples there are several challenges to overcome in order to solve the SLAM problem. We will list some of them here but many others might arise. We take in account the fact that the e-puck evolves in a fixed environment, the change in environment are irrelevant for us.

- The first problem we have seen is that the sensors are noisy. We know from exercise on odometry that the odometry precision decreases over time. This means that the incertitude over the position of the robot will increase. We have to find a way to lower this error at some point in the algorithm. But odometry is not the only sensor which generates noise, the IR sensors must be taken in account too. Then if we used the camera to do SLAM based on vision we would have to deal with the noise of the camera too.

- One solution to previous problem takes us to another problem, the correspondence problem which is maybe the hardest one. The problem is to determine if two measurement taken at a different point in time correspond to the same object in the physical world. This happens when the robot has made a cycle and it is again at the same point in space, this is also known as "closing the loop". If we can rely on the fact that two measurements are the same physical object we can dramatically diminish the incertitude on the robot position. This problem includes the localization process which is a challenge in itself.

- Another problem that might show up is the high dimensionality of the map. For a 2D grid map we already used 200×200 integer, imagine if we had to do the same with a 3D map over a whole building. This is the map representation problem. Since we also have a possibly unbounded space to explore memory and computational power might be issues too.

- A last challenge is to explore the map because the robot should not only build a map and localize itself but it should also explore its environment as completely as possible. This means explore the greatest surface in the least amount of time. This problem is related to navigation and path planning problem; it is commonly referred to as autonomous exploration.

Now you have an better idea of the challenges that we have to face before being able to do SLAM !

But SLAM does not limit to IR sensors, one could use the camera to perform vision based SLAM. We could dispose landmarks on the walls of the maze and reuse the artificial neural networks of exercise [sec:backprop] to recognize them and improve our algorithm. This could be done if one would like to participate to the Rat's Life contest. Many researchers also use laser range sensors to get precise data scans of a single position. We can also find work of SLAM in flying or underwater vehicles in 3D environments. Current research topics in SLAM include limiting the computational complexity, improving data association (between an observed landmark and the map) and environment representation.

Going further *[Challenge]*

[edit | edit source]If you want to go further you can read papers on SLAM, good references are [13] and.[14][15] presents a solution to the SLAM problem based on EKF.[16] presents SLAM with sparse sensing, you might also have a look at [17] which shows an advanced algorithm called FastSLAM. Other resources can be found on the internet, http://www.openslam.org/ provides a platform for SLAM researchers giving them the opportunity to publish their algorithms.

![]() [P.5] Implement a full SLAM algorithm in the Rat's Life framework.

[P.5] Implement a full SLAM algorithm in the Rat's Life framework.

Notes

[edit | edit source]- ↑ a b c R. Siegwart and I. R. Nourbakhsh. Introduction to Autonomous Mobile Robots. MIT Press, 2004

- ↑ Wikibooks contributors. Khepera III toolbox, odometry calibration, 2008. Khepera III Toolbox/Examples/odometry calibration

- ↑ a b W. Gerstner. Supervised learning for neural networks: A tutorial with java exercises, 1999

- ↑ Rat's Life. Rat's life - robot programming contest, 2008. http://www.ratslife.org

- ↑ a b R. Eberhart and J. Kennedy. A new optimizer using particle swarm theory. Micro Machine and Human Science, 1995. MHS '95., Proceedings of the Sixth International Symposium on, pages 39-43, Oct 1995

- ↑ a b Y. Shi and R. Eberhart. A modified particle swarm optimizer. Evolutionary Computation Proceedings, 1998. IEEE World Congress on Computational Intelligence., The 1998 IEEE International Conference on, pages 69-73, May 1998

- ↑ a b R. Poli, J. Kennedy, and T. Blackwell. Particle swarm optimization. Swarm Intelligence, 1(1):33-57, August 2007

- ↑ a b D. Floreano and F. Mondada. Evolution of homing navigation in a real mobile robot. Systems, Man, and Cybernetics, Part B, IEEE Transactions on, 26(3):396-407, June 1996

- ↑ a b J. Pugh, A. Martinoli, and Y. Zhang. Particle swarm optimization for unsupervised robotic learning. Swarm Intelligence Symposium, 2005. SIS 2005. Proceedings 2005 IEEE, pages 92-99, June 2005

- ↑ F. Mondada, D. Floreano (1995). Evolution of neural control structures: Some experiments on mobile robots. Robotics and Autonomous Systems, 16, 183-195.

- ↑ I. Harvey, P. Husbands, D. Cliff, A. Thompson, N. Jakobi (1997). Evolutionary Robotics: the Sussex Approach. In Robotics and Autonomous Systems, v. 20 (1997) pp. 205--224.

- ↑ Evolutionary Robotics by Stefano Nolfi and Dario Floreano. ISBN 0-262-14070-5

- ↑ H. Durrant-Whyte and T. Bailey. Simultaneous localization and mapping: part I. Robotics & Automation Magazine, IEEE, 13(2):99-110, June 2006

- ↑ T. Bailey and H. Durrant-Whyte. Simultaneous localization and mapping (slam): part II. Robotics & Automation Magazine, IEEE, 13(3):108-117, Sept. 2006

- ↑ M.W.M.G. Dissanayake, P. Newman, S. Clark, H.F. Durrant-Whyte, and M. Csorba. A solution to the simultaneous localization and map building (SLAM) problem. Robotics and Automation, IEEE Transactions on, 17(3):229-241, Jun 2001

- ↑ K.R. Beevers and W.H. Huang. Slam with sparse sensing. Robotics and Automation, 2006. ICRA 2006. Proceedings 2006 IEEE International Conference on, pages 2285-2290, May 2006

- ↑ M. Montemerlo, S. Thrun, D. Koller, and B. Wegbreit. FastSLAM: A factored solution to the simultaneous localization and mapping problem. In In Proceedings of the AAAI National Conference on Artificial Intelligence, pages 593-598. AAAI, 2002

![{\displaystyle p=\left[{\begin{array}{c}x\\y\\\theta \end{array}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/53309c5c81fd36ac2febb55da40ed1ab8d11aa07)

![{\displaystyle p'=p+\left[{\begin{array}{c}\Delta x\\\Delta y\\\Delta \theta \end{array}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/e465be54fffb66b05bed8b0c30dc3190a35b94d7)

![{\displaystyle p=f(x,y,\theta ,\Delta s_{r},\Delta s_{l})=\left[{\begin{array}{c}x\\y\\\theta \end{array}}\right]+\left[{\begin{array}{c}{\frac {\Delta s_{r}+\Delta s_{l}}{2}}\cdot cos(\theta +{\frac {\Delta s_{r}-\Delta s_{l}}{2b}})\\{\frac {\Delta s_{r}+\Delta s_{l}}{2}}\cdot sin(\theta +{\frac {\Delta s_{r}-\Delta s_{l}}{2b}})\\{\frac {\Delta s_{r}-\Delta s_{l}}{b}}\end{array}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/69ff98007523cbd5c740f83ff74745ec734cb059)

![{\displaystyle \nabla U(q)=\left[{\begin{array}{c}{\frac {\partial U}{\partial x}}\\{\frac {\partial U}{\partial y}}\end{array}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/7dc125df4683b886d8f5611d50cd9f1cdc8273da)