Assembly Language and Computer Organization/Introduction and Overview

This chapter introduces the basics of computer architecture and computer arithmetic.

What Computers Do

In order to explore how computers operate, we must first arrive at an explanation of what computers actually do. No doubt, you have some idea of what computers are capable of. After all, odds are high that you are reading this on a computer right now! If we tried to list all the functions of computers, we would come up with a staggeringly complex array of functions and features. Instead, what we need to do is peel back all the layers of functionality and see what's going on in the background.

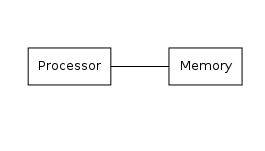

If we look at computers at their most abstract level, what they do is process information. We could represent this sort of abstract machine as some sort of memory and some sort of processor. The processor can read and write items in the memory according to some list of instructions, while the memory simply remembers the data it has been given. The list of instructions executed by the processor is known as a computer program. The details of how this arrangement is accomplished determine the nature of the computer itself. Using this definition, quite a wide range of devices can be classified as computers. Most of the devices we call "computers" are, in fact, universal computers. That is, if they were given an infinite amount of memory, they would be able to compute any computable function. The requirements for a universal computer are actually very simple. Basically, a machine is universal if it is able to do the following:

- Read a value from memory.

- Based on the read value, determine a new value to write to memory.

- Based on the read value, determine which instruction to execute next.

A machine following these rules is said to be "Turing Complete." A complete definition of Turing Completeness is beyond the scope of this book. If you are interested, please see the articles on Turing Machines and Turing Completeness.
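As a rough illustration of the three abilities listed above, here is a minimal Python sketch. The instruction format (an address plus a small table keyed by the value read), the `HALT` marker, and the name `run_rules` are all assumptions made for this example. With a fixed-size memory it is not a universal machine; it only shows the read, write, and branch steps working together.

```python
HALT = -1  # hypothetical marker meaning "there is no next instruction"

def run_rules(program, memory, max_steps=1000):
    """Run a list of (address, table) instructions against a list of cells."""
    pc = 0  # index of the instruction to execute next
    for _ in range(max_steps):
        if pc == HALT:
            break
        address, table = program[pc]
        value = memory[address]            # read a value from memory
        new_value, next_pc = table[value]  # the value read selects both outcomes
        memory[address] = new_value        # write the new value to memory
        pc = next_pc                       # choose which instruction runs next
    return memory

# Example program: add one to a 3-bit number stored lowest bit first.
# A 0 becomes 1 and the program stops; a 1 becomes 0 and the carry moves on.
increment = [
    (0, {0: (1, HALT), 1: (0, 1)}),
    (1, {0: (1, HALT), 1: (0, 2)}),
    (2, {0: (1, HALT), 1: (0, HALT)}),
]

print(run_rules(increment, [1, 1, 0]))  # 3 becomes 4; prints [0, 0, 1]
```

Every step in the loop depends only on the value just read, which is the property the list above describes.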

Trying to create programs in terms of the constraints of universal computers would be very difficult. It would be more convenient to create a list of more useful instructions such as "add", "subtract", "multiply", and "divide." The list of instructions that the processor can execute is called the machine's instruction set. This instruction set helps to define the behavior of the machine, and further define the nature of the programs which will run on the machine.

Another defining characteristic of the machine answers the question, "Where does the program get stored?" You'll note that in our diagram of the basic computer, we did not show where the program resides. This was done on purpose, as answering this question helps to define the different types of computers. Breaking down the components of the system further is the topic of the next section.

Computer Architecture

Another defining characteristic of computers is the logical layout of the system. This property is called the computer's architecture. Computer architecture describes how a machine is logically organized and how its instruction set is actually implemented. One of the most important architectural decisions made in designing a computer is how its memory is organized and how programs are loaded into the machine. In the early history of computers, there was a distinction between stored program and hardwired computers. For example, the first general-purpose electronic computer, the ENIAC, was programmed by wiring units of the machine to devices called function tables. Later machines, like the EDSAC, stored programs as data in program memory. Of course, stored programs proved to be much more convenient. Rewiring a machine simply takes too long compared to loading new data into the machine. In the current era of computing, almost all computers are stored program computers. In this book, we will be focusing on stored program computer architectures.

Having settled on storing programs as data, we now have to turn our attention to where, and how, programs are stored in memory. In the past, many different solutions to this problem have been proposed, but today there are two main high-level variants. These are the Von Neumann Architecture and the Harvard Architecture.

Von Neumann Architecture

The Von Neumann Architecture is, by far, the most common architecture in existence today. PCs, Macs, and even Android phones are examples of Von Neumann computers.

The Von Neumann Architecture is a stored program computer which consists of the following components:

- A CPU (Central Processing Unit) which executes program instructions.

- A Memory which stores both programs and data.

- Input and Output Devices

The defining characteristic of the Von Neumann Architecture is that both data and instructions are stored in the same memory device. Programs are stored as a collection of binary patterns which the CPU decodes in order to execute instructions. Of course, the data are stored as bit patterns in memory as well. The CPU can only distinguish between data and program code by the context in which it encounters the data in memory. The CPU in this architecture begins executing instructions at some memory location and continues to step through instructions. Anything it encounters while stepping through the program is treated as program instructions. When the CPU is issued instructions to read or write data in the memory, the CPU treats the numbers in memory as data. Of course, this also means that a Von Neumann machine is perfectly capable of modifying its own program.
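To make the shared-memory idea concrete, here is a small sketch of a toy machine in Python. The opcodes (`LOAD`, `ADD`, `STORE`, `HALT`), the two-cell instruction layout, the single accumulator, and the name `run_toy_machine` are all invented for this illustration and do not correspond to any real instruction set.

```python
# Hypothetical opcodes for a toy machine; each instruction is two cells:
# an opcode followed by a memory address.
HALT, LOAD, ADD, STORE = 0, 1, 2, 3

def run_toy_machine(memory, pc=0):
    """Fetch, decode, and execute instructions until HALT; return memory."""
    acc = 0  # a single accumulator register
    while True:
        opcode, operand = memory[pc], memory[pc + 1]  # cells fetched as code
        pc += 2
        if opcode == HALT:
            return memory
        elif opcode == LOAD:
            acc = memory[operand]      # the same memory, now read as data
        elif opcode == ADD:
            acc += memory[operand]
        elif opcode == STORE:
            memory[operand] = acc      # may target any cell, including code
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 2}")

# Addresses 0-7 hold the program; addresses 8-10 hold the data.
memory = [LOAD, 8, ADD, 9, STORE, 10, HALT, 0, 2, 3, 0]
print(run_toy_machine(memory)[10])  # 2 + 3; prints 5
```

Nothing in the list itself marks a cell as code or data. A cell is an instruction only because the program counter reached it, and a `STORE` aimed at addresses 0 through 7 would overwrite the program.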

Computer Arithmetic

Computers spend a great deal of their time working out simple arithmetic expressions. In fact, when we think of "computation," arithmetic is what immediately springs to mind. How computers deal with numbers is, therefore, an important part of the study of computer architecture. The first general-purpose electronic computer, ENIAC, represented numbers in much the same way that humans do, using decimal arithmetic. The problem with this approach is that each digit needs 10 distinguishable states (0 through 9), which greatly increases the complexity of the machines that operate on them. Representing and distinguishing 10 states requires very precise electronics. If we want a representation that is easy to build with electronics, the simplest and easiest states to distinguish are two: "on" and "off." This does not require nearly as much precision to detect and propagate, so it greatly simplifies the physical implementation of a machine. However, it does raise the question, "How do we do math with only two symbols?"

The answer to this is that we use the binary number system where these states map to 0 and 1. So we could think of 0 as being "off" and 1 being "on". This section explains how this system of numbers works and introduces convenient methods of representing these numbers.

Representation of Numbers and Basis

In order to understand binary, we first need to understand the nature of integers themselves. We will have to delve a little bit into the realm of number theory, but I promise it will be painless! We'll start off with a simple example. What do you see below?

$$145$$

In words, you may say that this is the number "one hundred and forty-five." However, this would not be accurate. What you are really seeing is a representation of this quantity. From grade school on up, you are taught a specific way of writing numbers down. This system is called the "base 10" or "decimal" number system. When you see "145" you are really seeing a convention where:

$$145 = 1 \times 100 + 4 \times 10 + 5 \times 1$$

Notice that each of these terms is a digit multiplied by a power of ten. So we could rewrite this as:

$$145 = 1 \times 10^2 + 4 \times 10^1 + 5 \times 10^0$$

Colloquially, we call the positions in a number the "Ones, Tens, and Hundreds" places. If we extend further, each position takes on a power of 10 one greater than the one before it. Thus we could build the sort of table which I am sure you have seen before.

| Thousands | Hundreds | Tens | Ones |
|---|---|---|---|
| $10^3$ | $10^2$ | $10^1$ | $10^0$ |

Of course, we can continue this on into infinity, adding new place values by simply incrementing the exponent. We could generalize this for the decimal system by saying that the value of a sequence of digits $d_{n-1} \dots d_1 d_0$ is expressed as a polynomial:

$$\sum_{i=0}^{n-1} d_i \times 10^i$$

Note that we can express this for any arbitrary number base. So we could generalize the above and say that for an arbitrary base $b$ the value of a sequence of digits $d_{n-1} \dots d_1 d_0$ is:

$$\sum_{i=0}^{n-1} d_i \times b^i$$

This opens the door to many possibilities for representing numbers. You can choose any base you like. The numeric base you are working in determines what symbols are used in representing numbers and how those symbols are interpreted. Generally speaking, for a base $b$ we must have $b$ symbols to represent it. For base 10, we have 10 symbols (0, 1, 2, 3, 4, 5, 6, 7, 8, 9). Notice that the highest valued symbol used in each place has the value $b - 1$. Hence we can come up with any base of numbers we choose, so long as we have a set of symbols to support it.
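The polynomial view of a digit sequence translates directly into a few lines of code. The sketch below is one possible way to evaluate it in Python; the function name `digits_to_value` and the choice to pass digits as a list, most significant first, are assumptions made for this example.

```python
def digits_to_value(digits, base):
    """Evaluate a digit sequence (most significant digit first) in a base."""
    if base < 2:
        raise ValueError("base must be at least 2")
    value = 0
    for d in digits:
        # Every digit must be one of the symbols 0 through base - 1.
        if not 0 <= d < base:
            raise ValueError(f"digit {d} is not valid in base {base}")
        # Multiplying the running total by the base shifts every earlier
        # digit up one place, so the result equals the sum of d_i * base**i.
        value = value * base + d
    return value

print(digits_to_value([1, 4, 5], 10))  # prints 145
print(digits_to_value([9, 1], 16))     # 9 * 16 + 1; prints 145
```

The same quantity has a different digit sequence in each base, which is the sense in which "145" is only one representation of it.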

Binary Numbers

We can now turn our attention to a number base which matches the two easily detectable states of "on" and "off". This is the binary, or base 2, number system. From the above observations, the binary number system consists only of the symbols (0, 1), and its "places" are defined as shown in the following table:

| $2^7$ | $2^6$ | $2^5$ | $2^4$ | $2^3$ | $2^2$ | $2^1$ | $2^0$ |
|---|---|---|---|---|---|---|---|
| 128 | 64 | 32 | 16 | 8 | 4 | 2 | 1 |

Just like in decimal, we can continue this on into infinity by simply increasing the exponent. I have left it off after 8 digits because this is the unit we most often work with in computers, as we shall see later. So then, suppose we had the following binary number:

How can we determine its value? Remember this follows the same rules as a decimal number, so working from left to right we can convert this number to decimal as:
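The left-to-right expansion can also be sketched in Python. The bit string `00101101` used here is an arbitrary illustrative value chosen for this example, not necessarily the number the text has in mind, and the function name `binary_expansion` is likewise hypothetical.

```python
def binary_expansion(bits):
    """Return the place values of the 1 bits in a binary string, and their sum."""
    if not bits or any(bit not in "01" for bit in bits):
        raise ValueError("expected a non-empty string of 0s and 1s")
    n = len(bits)
    # The leftmost bit sits in the 2**(n - 1) place, the rightmost in 2**0.
    terms = [2 ** (n - 1 - i) for i, bit in enumerate(bits) if bit == "1"]
    return terms, sum(terms)

terms, total = binary_expansion("00101101")
print(" + ".join(map(str, terms)), "=", total)  # prints 32 + 8 + 4 + 1 = 45
```

Places holding a 0 contribute nothing to the sum, so only the place values under the 1 bits appear in the result.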

Now that we've seen how to convert from binary (or really any number base) into decimal, you are probably wondering how we can go in the other direction. That is, how can we convert a decimal number into binary (or any arbitrary base)? The answer is quite simple. We can change from decimal to any number base by repeatedly dividing by the target base. The remainders of the divisions form the digits, from right to left, of the converted number. Proving why this works is left as an exercise to the reader. Let's test this out by converting our good friend, 145, into binary.

| Division | Quotient | Remainder |
|---|---|---|
| 145 ÷ 2 | 72 | 1 |
| 72 ÷ 2 | 36 | 0 |
| 36 ÷ 2 | 18 | 0 |
| 18 ÷ 2 | 9 | 0 |
| 9 ÷ 2 | 4 | 1 |
| 4 ÷ 2 | 2 | 0 |
| 2 ÷ 2 | 1 | 0 |
| 1 ÷ 2 | 0 | 1 |

Note that we can stop once the quotient is 0, as it will remain 0 from here on out. Thus, we can read the binary number by reading the remainders in reverse order:

$$145_{10} = 10010001_2$$
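The repeated-division procedure can be written as a short Python function. This is a sketch rather than a definitive implementation; the name `to_base` and the decision to return a list of digits are assumptions made for this example.

```python
def to_base(n, base=2):
    """Convert a non-negative integer to a list of digits in the given base."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if base < 2:
        raise ValueError("base must be at least 2")
    if n == 0:
        return [0]
    remainders = []
    while n > 0:                 # stop once the quotient reaches 0
        n, r = divmod(n, base)   # quotient feeds the next step; remainder is a digit
        remainders.append(r)
    return remainders[::-1]      # remainders come out right to left, so reverse

print(to_base(145))      # prints [1, 0, 0, 1, 0, 0, 0, 1]
print(to_base(145, 16))  # prints [9, 1]
```

As a cross-check, Python's built-in `format(145, "b")` produces the string `10010001`, which matches the digits returned by `to_base(145)`.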