x86 Disassembly/Print Version

The Wikibook of

Using C and Assembly Language

From Wikibooks: The Free Library

Introduction

What Is This Book About?

This book is about the disassembly of x86 machine code into human-readable assembly, and the decompilation of x86 assembly code into human-readable C or C++ source code. Some topics covered will be common to all computer architectures, not just x86-compatible machines.

What Will This Book Cover?

This book is going to look in-depth at the disassembly and decompilation of x86 machine code and assembly code. We are going to look at the way programs are made using assemblers and compilers, and examine the way that assembly code is made from C or C++ source code. Using this knowledge, we will try to reverse the process. By examining common structures, such as data and control structures, we can find patterns that enable us to disassemble and decompile programs quickly.

Who Is This Book For?

This book is for readers at the undergraduate level with experience programming in x86 Assembly and C or C++. This book is not designed to teach assembly language programming, C or C++ programming, or compiler/assembler theory.

What Are The Prerequisites?

The reader should have a thorough understanding of x86 Assembly, C Programming, and possibly C++ Programming. This book is intended to increase the reader's understanding of the relationship between x86 machine code, x86 Assembly Language, and the C Programming Language. If you are not very familiar with these topics, you may want to reread some of the above-mentioned books before continuing.

What is Disassembly?

Computer programs are written originally in a human readable code form, such as assembly language or a high-level language. These programs are then compiled into a binary format called machine code. This binary format is not directly readable or understandable by humans. Many programs -- such as malware, proprietary commercial programs, or very old legacy programs -- may not have the source code available to you.

Programs frequently perform tasks that need to be duplicated, or need to be made to interact with other programs. Without the source code and without adequate documentation, these tasks can be difficult to accomplish. This book outlines tools and techniques for attempting to convert the raw machine code of an executable file into equivalent code in assembly language and the high-level languages C and C++. With the high-level code to perform a particular task, several things become possible:

- Programs can be ported to new computer platforms, by compiling the source code in a different environment.

- The algorithm used by a program can be determined. This allows other programs to make use of the same algorithm, or for updated versions of a program to be rewritten without needing to track down old copies of the source code.

- Security holes and vulnerabilities can be identified and patched by users without needing access to the original source code.

- New interfaces can be implemented for old programs. New components can be built on top of old components to speed development time and reduce the need to rewrite large volumes of code.

- We can figure out what a piece of malware does. We hope this leads us to figuring out how to block its harmful effects. Unfortunately, some malware writers use self-modifying code techniques (polymorphic camouflage, XOR encryption, scrambling)[1], apparently to make it difficult to even detect that malware, much less disassemble it.

Disassembling code has a large number of practical uses. One of the positive side effects of it is that the reader will gain a better understanding of the relation between machine code, assembly language, and high-level languages. Having a good knowledge of these topics will help programmers to produce code that is more efficient and more secure.

References

Tools

Assemblers and Compilers

Assemblers

Assemblers are significantly simpler than compilers, and are often implemented to simply translate the assembly code to binary machine code via one-to-one correspondence. Assemblers rarely optimize beyond choosing the shortest form of an instruction or filling delay slots.

Because assembly is such a simple process, disassembly can often be just as simple. Assembly instructions and encoded machine instructions have a one-to-one correspondence, so each machine instruction maps to exactly one assembly instruction. However, disassembly has some other difficulties which cannot be accounted for using simple opcode lookups. We will introduce assemblers here, and talk about disassembly later.

Assembler Concepts

Assemblers, at the most basic level, translate assembly instructions into machine code with a one-to-one correspondence. They can also translate named variables into hard-coded memory addresses and labels into their relative code addresses.
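As a rough illustration of how a label becomes a relative code address, the sketch below encodes an x86 `jmp` in 32-bit mode. It also picks the shorter of the two encodings, which is about the only optimization most assemblers perform. The function name `encode_jmp` and its parameters are hypothetical and are not taken from any real assembler.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Encode a `jmp` located at address `from` that transfers control to `to`.
 * Writes the bytes into `out` and returns the encoded length (2 or 5).
 * Hypothetical helper for illustration; assumes 32-bit mode. */
size_t encode_jmp(uint32_t from, uint32_t to, uint8_t out[5])
{
    int32_t rel_short, rel_near;
    int i;

    assert(out != NULL);

    /* Displacements are measured from the address of the NEXT instruction. */
    rel_short = (int32_t)(to - (from + 2));     /* EB rel8 is 2 bytes long */
    if (rel_short >= -128 && rel_short <= 127) {
        out[0] = 0xEB;
        out[1] = (uint8_t)rel_short;
        return 2;
    }

    rel_near = (int32_t)(to - (from + 5));      /* E9 rel32 is 5 bytes long */
    out[0] = 0xE9;
    for (i = 0; i < 4; i++)                     /* little-endian displacement */
        out[1 + i] = (uint8_t)((uint32_t)rel_near >> (8 * i));
    return 5;
}
```

For example, a jump at address 0x1000 that targets itself encodes as the two bytes EB FE, because the displacement is measured from the end of the instruction rather than from its start.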

Assemblers, in general, do not perform code optimization. The machine code that comes out of an assembler is equivalent to the assembly instructions that go into the assembler. Some assemblers have high-level capabilities in the form of Macros.

Some information about the program is lost during the assembly process. First and foremost, program data is stored in the same raw binary format as the machine code instructions. This means that it can be difficult to determine which parts of the program are actually instructions. Notice that you can disassemble raw data, but the resultant assembly code will be nonsensical. Second, textual information from the assembly source code file, such as variable names, label names, and code comments are all destroyed during assembly. When you disassemble the code, the instructions will be the same, but all the other helpful information will be lost. The code will be accurate, but more difficult to read.
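A small sketch makes the first point concrete. In 32-bit x86 code the one-byte opcodes 0x50 through 0x57 encode `push` of a general-purpose register, and 0x50 through 0x53 are also the ASCII codes for the letters P, Q, R and S. The helper name `push_register` is hypothetical and used only for this illustration.

```c
#include <stddef.h>

/* 32-bit general-purpose registers in x86 encoding order. */
static const char *const REG32[8] = {
    "eax", "ecx", "edx", "ebx", "esp", "ebp", "esi", "edi"
};

/* If `byte` is a one-byte `push r32` opcode (0x50..0x57), return the name
 * of the register it pushes; otherwise return NULL. */
const char *push_register(unsigned char byte)
{
    if (byte < 0x50 || byte > 0x57)
        return NULL;
    return REG32[byte - 0x50];
}
```

Fed the bytes of the string "PQRS", the helper reports push eax, push ecx, push edx and push ebx: all valid instructions, but meaningless as a program.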

Compilers, as we will see later, cause even more information to be lost, and decompiling is often so difficult and convoluted as to become nearly impossible to do accurately.

Intel Syntax Assemblers

Because of the pervasiveness of Intel-based IA-32 microprocessors in the home PC market, the majority of assembly work done (and the majority of assembly work considered in this wikibook) is x86-based. Many of these assemblers (or new versions of them) can handle amd64/x86_64/EM64T code as well, although this wikibook will focus primarily on 32-bit (x86/IA-32) code examples.

MASM

MASM is Microsoft's assembler; the name is an abbreviation for "Macro Assembler." Many people read it as an acronym for "Microsoft Assembler," and the difference isn't a problem at all. MASM has a powerful macro facility, which lets it express both very low-level code and pseudo-high-level constructs. MASM 6.15 is currently available as a free download from Microsoft, and MASM 7.xx is currently available as part of the Microsoft platform DDK.

- MASM uses Intel Syntax.

- MASM is used by Microsoft to implement some low-level portions of its Windows Operating systems.

- MASM, contrary to popular belief, has been in constant development since 1980, and is upgraded on a needs-basis.

- MASM has always been made compatible by Microsoft to the current platform, and executable file types.

- MASM currently supports all Intel instruction sets, including SSE2.

Many users love MASM, but many more still dislike the fact that it isn't portable to other systems.

TASM

TASM, Borland's "Turbo Assembler," is a functional assembler from Borland that integrates seamlessly with Borland's other software development tools. Current release version is version 5.0. TASM syntax is very similar to MASM, although it has an "IDEAL" mode that many users prefer. TASM is not free.

NASM

NASM, the "Netwide Assembler," is a free, portable, and retargetable assembler that works on both Windows and Linux. It supports a variety of Windows and Linux executable file formats, and even outputs pure binary. NASM is not as "mature" as either MASM or TASM, but it is:

- more portable than MASM

- cheaper than TASM

- designed to be very user-friendly

NASM comes with its own disassembler ndisasm, and supports 64-bit (x86-64/x64/AMD64/Intel 64) CPUs.

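NASM was originally released under the LGPL; since version 2.07 it has been distributed under the simplified (2-clause) BSD license.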

FASM

FASM, the "Flat Assembler," is an open source assembler that supports the x86 and x86-64 architectures.

(x86) AT&T Syntax Assemblers

AT&T syntax for x86 microprocessor assembly code is not as common as Intel-syntax, but the GNU Assembler (GAS) uses it, and it is the de facto assembly standard on Unix and Unix-like operating systems.

GAS

The GNU Assembler (GAS) is the default back-end to the GNU Compiler Collection (GCC) suite. As such, GAS is as portable and retargetable as GCC is. However, GAS uses the AT&T syntax for its instructions by default, which some users find to be less readable than Intel syntax. Newer versions of GAS can be switched to Intel syntax with the directive ".intel_syntax noprefix".

GAS is developed specifically to be used as the GCC backend. Because GCC always feeds it syntactically correct code, GAS often has minimal error checking.

GAS is available as a part of either the GCC package or the GNU binutils package. [1]

Other Assemblers

HLA

HLA, short for "High Level Assembler" is a project spearheaded by Randall Hyde to create an assembler with high-level syntax. HLA works as a front-end to other assemblers such as FASM (the default), MASM, NASM, and GAS. HLA supports "common" assembly language instructions, but also implements a series of higher-level constructs such as loops, if-then-else branching, and functions. HLA comes complete with a comprehensive standard library.

Since HLA works as a front-end to another assembler, the programmer must have another assembler installed to assemble programs with HLA. HLA's output is therefore only as good as the underlying assembler, but the code is much easier for the developer to write. The high-level components of HLA may make programs less efficient, but that cost is often far outweighed by the ease of writing the code. HLA high-level syntax is very similar in many respects to Pascal, which in turn is itself similar in many respects to C, so many high-level programmers will immediately pick up many of the aspects of HLA.

Here is an example of some HLA code:

mov(src, dest); // C++ style comments

pop(eax);

push(ebp);

for(mov(0, ecx); ecx < 10; inc(ecx)) do

mul(ecx);

endfor;

Some disassemblers and debuggers can disassemble binary code into HLA-format, although none can faithfully recreate the HLA macros.

Compilers

A compiler is a program that converts instructions from one language into equivalent instructions in another language. There is a common misconception that a compiler always directly converts a high level language into machine language, but this isn't always the case. Many compilers convert code into assembly language, and a few even convert code from one high level language into another. Common examples of compiled languages are: C/C++, Fortran, Ada, and Visual Basic. The figure below shows the common compile-time steps to building a program using the C programming language. The compiler produces object files which are linked to form the final executable:

For the purposes of this book, we will only be considering the case of a compiler that converts C or C++ into assembly code or machine language. Some compilers, such as the Microsoft C compiler, compile C and C++ source code directly into machine code. GCC on the other hand compiles C and C++ into assembly language, and an assembler is used to convert that into the appropriate machine code. From the standpoint of a disassembler, it does not matter exactly how the original program was created. Notice also that it is not possible to exactly reproduce the C or C++ code used originally to create an executable. It is, however, possible to create code that compiles identically, or code that performs the same task.

C language statements do not share a one to one relationship with assembly language. Consider that the following C statements will typically all compile into the same assembly language code:

*arrayA = arrayB[x++];

*arrayA = arrayB[x]; x++;

arrayA[0] = arrayB[x++];

arrayA[0] = arrayB[x]; x++;
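One way to check this for yourself is to wrap each variant in its own function and compare the assembly your compiler emits for them. The function names below are made up for this sketch, and whether the output is byte-for-byte identical depends on the compiler and its optimization settings.

```c
/* Each function copies arrayB[x] into the first element of arrayA and
 * returns the incremented index. Only the spelling of the statement differs. */
int copy_v1(int *arrayA, const int *arrayB, int x)
{
    *arrayA = arrayB[x++];
    return x;
}

int copy_v2(int *arrayA, const int *arrayB, int x)
{
    *arrayA = arrayB[x]; x++;
    return x;
}

int copy_v3(int *arrayA, const int *arrayB, int x)
{
    arrayA[0] = arrayB[x++];
    return x;
}

int copy_v4(int *arrayA, const int *arrayB, int x)
{
    arrayA[0] = arrayB[x]; x++;
    return x;
}
```

All four functions have the same observable behavior, so a decompiler looking at the resulting machine code has no way to tell which spelling the original programmer used.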

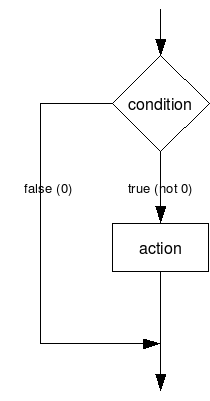

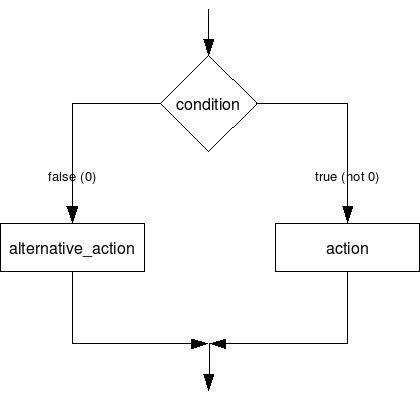

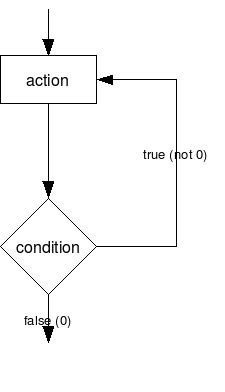

Also, consider how the following loop constructs perform identical tasks, and are likely to produce similar or even identical assembly language code:

for(;;) { ... }

while(1) { ... }

do { ... } while(1);
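The three forms can be filled in with the same body to make the equivalence easy to test. This is only a sketch with invented function names; each loop exits through the same `break`, so the surrounding loop keyword is the only difference.

```c
/* Count from 0 up to `limit` using three different "infinite loop" forms. */
int count_for(int limit)
{
    int n = 0;
    for (;;) {
        if (n >= limit)
            break;
        n++;
    }
    return n;
}

int count_while(int limit)
{
    int n = 0;
    while (1) {
        if (n >= limit)
            break;
        n++;
    }
    return n;
}

int count_do(int limit)
{
    int n = 0;
    do {
        if (n >= limit)
            break;
        n++;
    } while (1);
    return n;
}
```

Since all three return the same value for every input, a compiler is free to emit the same instructions for each, and the original loop keyword cannot be recovered from the binary.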

Common C/C++ Compilers

The purpose of this section is to list some of the most common C and C++ compilers in use for developing production-level software. There are a great many C compilers in the world, but the reverser doesn't need to consider all of them, especially when looking at professional software. This page will discuss each compiler's strengths and weaknesses, its availability (download sites or cost information), and it will also discuss how to generate an assembly listing file from each compiler.

Microsoft C Compiler

The Microsoft C compiler is available from Microsoft for free as part of the Windows Server 2003 SDK. It is the same compiler and library as is used in MS Visual Studio, but doesn't come with the fancy IDE. The MS C Compiler has a very good optimizing engine. It compiles C and C++, and has the option to compile C++ code into MSIL (the .NET bytecode).

Microsoft's compiler only supports Windows systems, and Intel-compatible 16/32/64 bit architectures.

The Microsoft C compiler is cl.exe and the linker is link.exe

Listing Files

In this wikibook, cl.exe is frequently used to produce assembly listing files of C source code. To produce an assembly listing file yourself, use the syntax:

cl.exe /Fa<assembly file name> <C source file>

The "/Fa" switch is the command-line option that tells the compiler to produce an assembly listing file.

For example, the following command line:

cl.exe /FaTest.asm Test.c

would produce an assembly listing file named "Test.asm" from the C source file "Test.c". Notice that there is no space between the /Fa switch and the name of the output file.

GNU C Compiler

The GNU C compiler is part of the GNU Compiler Collection (GCC) suite. This compiler is available for most systems and it is free software. Many people use it exclusively so that they can support many platforms with just one compiler to deal with. The GNU GCC Compiler is the de facto standard compiler for Linux and Unix systems. It is retargetable, allowing for many input languages (C, C++, Obj-C, Ada, Fortran, etc...), and supporting multiple target OSes and architectures. It optimizes well, but has a non-aggressive IA-32 code generation engine.

The GCC frontend program is “gcc” (“gcc.exe” on Windows) and the associated linker is “ld” (“ld.exe” on Windows). Windows cmd searches for the programs with “.exe” extensions automatically, so you don't need to type the filename extension.

Listing Files

To produce an assembly listing file in GCC, use the following command line syntax:

gcc -S /path/to/sourcefile.c

For example, the following command line:

gcc -S test.c

will produce an assembly listing file named “test.s”. Assembly listing files generated by GCC will be in GAS format. On x86 you can select the syntax with -masm=intel or -masm=att. GCC listing files are frequently not as well commented and laid-out as are the listing files for cl.exe.

You may add the `-g3` flag to include source-level debugging information, so that the listing carries source line-number directives. The -fno-asynchronous-unwind-tables flag can help eliminate some assembler directives (such as the .cfi_* unwind annotations) from the listing.

Intel C Compiler

This compiler is used only for x86, x86-64, and IA-64 code. It is available for both Windows and Linux. The Intel C compiler was written by the people who invented the original x86 architecture: Intel. Intel's development tools generate code that is tuned to run on Intel microprocessors, and is intended to squeeze every last ounce of speed from an application. AMD IA-32 compatible processors are not guaranteed to get the same speed boosts because they have different internal architectures.

Metrowerks CodeWarrior

This compiler is commonly used for classic MacOS and for embedded systems. If you try to reverse-engineer a piece of consumer electronics, you may encounter code generated by Metrowerks CodeWarrior.

Green Hills Software Compiler

This compiler is commonly used for embedded systems. If you attempt to reverse-engineer a piece of consumer electronics, you may encounter code generated by Green Hills C/C++.

Disassemblers and Decompilers

What is a Disassembler?

In essence, a disassembler is the exact opposite of an assembler. Where an assembler converts code written in an assembly language into binary machine code, a disassembler reverses the process and attempts to recreate the assembly code from the binary machine code.

Since most assembly languages have a one-to-one correspondence with underlying machine instructions, the process of disassembly is relatively straight-forward, and a basic disassembler can often be implemented simply by reading in bytes, and performing a table lookup. Of course, disassembly has its own problems and pitfalls, and they are covered later in this chapter.
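A minimal sketch of that table-lookup idea follows. It assumes 32-bit code and knows only a handful of one-byte opcodes that take no further operand bytes; the names `ONE_BYTE` and `disassemble` are hypothetical. A real x86 disassembler must also handle prefixes, ModR/M and SIB bytes, and immediates, which make instructions variable in length.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Mnemonics for a few one-byte IA-32 opcodes; every other entry is NULL. */
static const char *const ONE_BYTE[256] = {
    [0x40] = "inc eax",
    [0x48] = "dec eax",
    [0x55] = "push ebp",
    [0x5D] = "pop ebp",
    [0x90] = "nop",
    [0xC3] = "ret",
    [0xC9] = "leave",
    [0xCC] = "int3",
    [0xF4] = "hlt",
};

/* Decode `len` bytes of `code` into `out`, one instruction per line.
 * Returns the number of bytes decoded; decoding stops at the first opcode
 * that is not in the table or when `out` has no room left. */
size_t disassemble(const unsigned char *code, size_t len,
                   char *out, size_t out_size)
{
    size_t i, used = 0;

    assert(code != NULL && out != NULL && out_size > 0);
    out[0] = '\0';
    for (i = 0; i < len; i++) {
        const char *text = ONE_BYTE[code[i]];   /* the table lookup */
        size_t n;

        if (text == NULL)
            break;                              /* opcode not in the table */
        n = strlen(text);
        if (used + n + 2 > out_size)
            break;                              /* no room for text, '\n', '\0' */
        memcpy(out + used, text, n);
        out[used + n] = '\n';
        used += n + 1;
        out[used] = '\0';
    }
    return i;
}
```

Given the bytes 55 90 C9 C3, the sketch produces the lines push ebp, nop, leave and ret. Given 90 0F A2 it stops after nop, because 0F introduces a two-byte opcode that a single-byte table cannot describe.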

Many disassemblers have the option to output assembly language instructions in Intel, AT&T, or (occasionally) HLA syntax. Examples in this book will use Intel and AT&T syntax interchangeably. We will typically not use HLA syntax for code examples, but that may change in the future.

x86 Disassemblers

Here we are going to list some commonly available disassembler tools. Notice that there are professional disassemblers (which cost money for a license) and there are freeware/shareware disassemblers. Each disassembler will have different features, so it is up to you as the reader to determine which tools you prefer to use.

Online Disassemblers

- ODA

- is a free, web-based disassembler for a wide variety of architectures. You can use "Live View" to see how code is disassembled in real time, one byte at a time, or upload a file. The site is currently in beta release but will hopefully only get better with time.

- http://www.onlinedisassembler.com

Commercial Windows Disassemblers

- IDA Pro

- is a professional disassembler that is expensive, extremely powerful, and has a whole slew of features. The downside to IDA Pro is that it costs $515 US for the standard single-user edition. As such this wikibook will not consider IDA Pro specifically because the price tag is exclusionary. Freeware versions do exist; see below.

- (version 6.x) http://www.hex-rays.com/idapro/

- Relyze Desktop

- is an interactive software reverse engineering tool that lets you disassemble, decompile and diff x86, x64, ARM32 and ARM64 software.

- https://www.relyze.com/overview.html

- Hopper Disassembler

- is a reverse engineering tool for the Mac that lets you disassemble, decompile and debug 32/64-bit Intel Mac executables. It can also disassemble and decompile Windows executables.

- http://www.hopperapp.com

- OBJ2ASM

- is an object file disassembler for 16 and 32 bit x86 object files in Intel OMF, Microsoft COFF format, Linux ELF or Mac OS X Mach-O format.

- http://www.digitalmars.com/ctg/obj2asm.html

- PE Explorer

- is a disassembler that "focuses on ease of use, clarity and navigation." It isn't as feature-filled as IDA Pro and carries a smaller price tag to offset the missing functionality: $130

- http://www.heaventools.com/PE_Explorer_disassembler.htm

- W32DASM (Win32dasm)

- W32DASM was an excellent 16/32-bit disassembler for Windows, but it appears to be no longer developed. The latest version available is from 2003; the website went down and no replacement went up.

- http://www.softpedia.com/get/Programming/Debuggers-Decompilers-Dissasemblers/WDASM.shtml

- Binary Ninja

- Binary Ninja is a commercial, cross-platform (Linux, OS X, Windows) reverse engineering platform with aims to offer a similar feature set to IDA at a much cheaper price point. A precursor written in python is open source and available at https://github.com/Vector35/deprecated-binaryninja-python. Introductory pricing is $99 for student/non-commercial use, and $399 for commercial use.

- https://binary.ninja/

- Hiew

- x86-64 disassembler & assembler. Single license pricing is $19, and $199 with lifetime updates.

- hiew.ru

Commercial Freeware/Shareware Windows Disassemblers

- OllyDbg

- OllyDbg is one of the most popular disassemblers of recent years. It has a large community and a wide variety of plugins available. It emphasizes binary code analysis. It supports x86 instructions only (no x86_64 support for now, although it is on the way).

- http://www.ollydbg.de/ (official website)

- http://www.openrce.org/downloads/browse/OllyDbg_Plugins (plugins)

- http://www.ollydbg.de/odbg64.html (64 bit version)

Free Windows Disassemblers

- Capstone

- Capstone is an open source disassembly framework for multi-arch (including support for x86, x86_64) & multi-platform with advanced features.

- http://www.capstone-engine.org/

- Zydis

- Fast and lightweight x86/x86-64 decoder library. It does not offer disassembler features such as linear sweep or recursive disassembling.

- https://github.com/zyantific/zydis

- Objconv

- A command line disassembler supporting 16, 32, and 64 bit x86 code. Latest instruction set (SSE4, AVX, XOP, FMA, etc.), several object file formats, several assembly syntax dialects. Windows, Linux, BSD, Mac. Intelligent analysis.

- IDA 3.7

- A DOS GUI tool that behaves very much like IDA Pro, but is considerably more limited. It can disassemble code for the Z80, 6502, Intel 8051, Intel i860, and PDP-11 processors, as well as x86 instructions up to the 486.

- http://www.simtel.net/product.php (search for ida37fw)

- IDA Pro Freeware

- Behaves almost exactly like IDA Pro, but disassembles only Intel x86 opcodes and is Windows-only. It can disassemble instructions for those processors available as of 2003. Free for non-commercial use.

- (version 4.1) http://www.themel.com/idafree.zip

- (version 4.3) http://www.datarescue.be/idafreeware/freeida43.exe

- (version 5.0) https://www.scummvm.org/frs/extras/IDA/idafree50.exe

- (version 7.0) https://www.hex-rays.com/products/ida/support/download_freeware.shtml

- BORG Disassembler

- BORG is an excellent Win32 Disassembler with GUI.

- http://www.caesum.com/

- HT Editor

- An analyzing disassembler for Intel x86 instructions. The latest version runs as a console GUI program on Windows, but there are versions compiled for Linux as well.

- http://hte.sourceforge.net/

- diStorm64

- diStorm is an open source highly optimized stream disassembler library for 80x86 and AMD64.

- http://ragestorm.net/distorm/

- crudasm

- crudasm is an open source disassembler with a variety of options. It is a work in progress and is bundled with a partial decompiler.

- http://sourceforge.net/projects/crudasm9/

- BeaEngine

- BeaEngine is a complete disassembler library for IA-32 and intel64 architectures (coded in C and usable in various languages : C, Python, Delphi, PureBasic, WinDev, masm, fasm, nasm, GoAsm).

- https://github.com/BeaEngine/beaengine

- Visual DuxDebugger

- is a 64-bit debugger disassembler for Windows.

- http://www.duxcore.com/products.html

- BugDbg

- is a 64-bit user-land debugger designed to debug native 64-bit applications on Windows.

- http://www.pespin.com/

- DSMHELP

- Disassemble Help Library is a disassembler library with single line Epimorphic assembler. Supported instruction sets - Basic,System,SSE,SSE2,SSE3,SSSE3,SSE4,SSE4A,MMX,FPU,3DNOW,VMX,SVM,AVX,AVX2,BMI1,BMI2,F16C,FMA3,FMA4,XOP.

- http://dsmhelp.narod.ru/ (in Russian)

- ArkDasm

- is a 64-bit interactive disassembler and debugger for Windows. Supported processor: x64 architecture (Intel x64 and AMD64)

- http://www.arkdasm.com/

- SharpDisasm

- is a C# port of the udis86 x86 / x86-64 disassembler

- http://sharpdisasm.codeplex.com/

- CFF Explorer

- Special fields description and modification (.NET supported), utilities, rebuilder, hex editor, import adder, signature scanner, signature manager, extension support, scripting, disassembler, dependency walker etc.

- ntcore.com

- bddisasm

- fast, lightweight, x86/x64 instruction decoding library.

- github.com/bitdefender/bddisasm

Unix Disassemblers

Many of the Unix disassemblers, especially the open source ones, have been ported to other platforms, like Windows (mostly using MinGW or Cygwin). Some disassemblers, like otool (OS X), are platform-specific.

- Capstone

- Capstone is an open source disassembly framework for multi-arch (including support for x86, x86_64) & multi-platform (including Mac OSX, Linux, *BSD, Android, iOS, Solaris) with advanced features.

- http://www.capstone-engine.org/

- Bastard Disassembler

- The Bastard disassembler is a powerful, scriptable disassembler for Linux and FreeBSD.

- http://bastard.sourceforge.net/

- ndisasm

- NASM's disassembler for x86 and x86-64. Works on DOS, Windows, Linux, Mac OS X and various other systems.

- udis86

- Disassembler Library for x86 and x86-64

- http://udis86.sourceforge.net/

- Zydis

- Fast and lightweight x86/x86-64 disassembler library.

- https://github.com/zyantific/zydis

- Objconv

- See above.

- ciasdis

- The official name of ciasdis is computer_intelligence_assembler_disassembler. This Forth-based tool lets you incrementally and interactively build knowledge about a code body. It is unique in that all disassembled code can be re-assembled to the exact same code. Supported processors are the 8080, 6809, 8086, 80386, Pentium I and DEC Alpha. A scripting facility aids in analyzing ELF and MS-DOS headers and makes this tool extensible. The Pentium I ciasdis is available as a binary image; the others are in source form, loadable onto lina Forth, which is available from the same site.

- http://home.hccnet.nl/a.w.m.van.der.horst/ciasdis.html

- objdump

- comes standard, and is typically used for general inspection of binaries. Pay attention to the relocation option and the dynamic symbol table option.

- gdb

- comes standard, as a debugger, but is very often used for disassembly. If you have loose hex dump data that you wish to disassemble, simply enter it (interactively) over top of something else or compile it into a program as a string like so: char foo[] = {0x90, 0xcd, 0x80, 0x90, 0xcc, 0xf1, 0x90};

- lida linux interactive disassembler

- an interactive disassembler with some special functions like a crypto analyzer. Displays string data references, does code flow analysis, and does not rely on objdump. Utilizes the Bastard disassembly library for decoding single opcodes. The project was started in 2004 and remains dormant to this day.

- http://lida.sourceforge.net

- dissy

- This program is an interactive disassembler that uses objdump.

- http://code.google.com/p/dissy/

- EmilPRO

- A replacement for the deprecated dissy disassembler.

- http://github.com/SimonKagstrom/emilpro

- x86dis

- This program can be used to display binary streams such as the boot sector or other unstructured binary files.

- ldasm

- LDasm (Linux Disassembler) is a Perl/Tk-based GUI for objdump/binutils that tries to imitate the 'look and feel' of W32Dasm. It searches for cross-references (e.g. strings), converts the code from GAS to a MASM-like style, traces programs and much more. Comes along with PTrace, a process-flow-logger. Last updated in 2002, available from Tucows.

- http://www.tucows.com/preview/59983/LDasm

- llvm

- LLVM has two interfaces to its disassembler:

- llvm-objdump

- Mimics GNU objdump.

- llvm-mc

- See the LLVM blog. Example usage:

$ echo '1 2' | llvm-mc -disassemble -triple=x86_64-apple-darwin9

addl %eax, (%rdx)

$ echo '0x0f 0x1 0x9' | llvm-mc -disassemble -triple=x86_64-apple-darwin9

sidt (%rcx)

$ echo '0x0f 0xa2' | llvm-mc -disassemble -triple=x86_64-apple-darwin9

cpuid

$ echo '0xd9 0xff' | llvm-mc -disassemble -triple=i386-apple-darwin9

fcos

- otool

- OS X's object file displaying tool.

- edb

- A cross platform x86/x86-64 debugger.

- https://github.com/eteran/edb-debugger

- bddisasm

- fast, lightweight, x86/x64 instruction decoding library.

- github.com/bitdefender/bddisasm

- rasm2

- radare2 disassembler and assembler tool. Includes x86.nz library with support for x86/x86-64.

Disassembler Issues

As we have alluded to before, there are a number of issues and difficulties associated with the disassembly process. The two most important difficulties are the division between code and data, and the loss of text information.

Separating Code from Data

Since data and instructions are all stored in an executable as binary data, the obvious question arises: how can a disassembler tell code from data? Is any given byte a variable, or part of an instruction?

The problem wouldn't be as difficult if data were limited to the .data section (segment) of an executable (explained in a later chapter) and if executable code were limited to the .text (code) section, but this is often not the case. Data may be inserted directly into the code section (e.g. jump address tables, constant strings), and executable code may be stored in the data section (although new systems are working to prevent this for security reasons). AI programs and LISP or Forth compilers may not contain .text and .data sections to help decide, and may have code and data interspersed in a single section that is readable, writable and executable. Boot code may even require substantial effort to identify sections. A technique that is often used is to identify the entry point of an executable, and find all code reachable from there, recursively. This is known as "code crawling".
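The crawling idea can be sketched in a few lines. The decoder below is a toy that understands only four IA-32 opcodes (90 nop, C3 ret, EB rel8 jmp short, 74 rel8 je); the function name `crawl` and the `is_code` map are assumptions made for this illustration, and a real tool would need a full instruction decoder plus handling for calls and indirect jumps.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Mark every byte reachable from offset `entry` as code (is_code[i] = 1).
 * The caller must zero `is_code` first; bytes left at 0 were never reached
 * and are presumed to be data. */
void crawl(const uint8_t *image, size_t size, size_t entry, uint8_t *is_code)
{
    size_t pc = entry;

    assert(image != NULL && is_code != NULL);
    while (pc < size && !is_code[pc]) {
        uint8_t op = image[pc];

        if (op == 0x90) {                       /* nop: fall through */
            is_code[pc] = 1;
            pc += 1;
        } else if (op == 0xC3) {                /* ret: this path ends */
            is_code[pc] = 1;
            return;
        } else if ((op == 0xEB || op == 0x74) && pc + 1 < size) {
            /* rel8 is relative to the address of the next instruction */
            size_t target =
                (size_t)((ptrdiff_t)pc + 2 + (int8_t)image[pc + 1]);

            is_code[pc] = is_code[pc + 1] = 1;
            if (op == 0x74)                     /* je may also fall through */
                crawl(image, size, pc + 2, is_code);
            pc = target;                        /* follow the branch target */
        } else {
            return;                             /* unknown opcode: give up */
        }
    }
}
```

For the image EB 04 'D' 'A' 'T' 'A' 90 74 01 C3 C3, crawling from offset 0 marks everything except the four bytes of "DATA", which the initial jump skips over. A linear sweep from the first byte to the last would instead have decoded those bytes as inc esp, inc ecx, push esp and inc ecx.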

Many interactive disassemblers will give the user the option to render segments of code as either code or data, but non-interactive disassemblers will make the separation automatically. Disassemblers often will provide the instruction AND the corresponding hex data on the same line, shifting the burden for decisions about the nature of the code to the user. Some disassemblers (e.g. ciasdis) will allow you to specify rules about whether to disassemble as data or code and invent label names, based on the content of the object under scrutiny. Scripting your own "crawler" in this way is more efficient; for large programs interactive disassembling may be impractical to the point of being unfeasible.

The general problem of separating code from data in arbitrary executable programs is equivalent to the halting problem. As a consequence, it is not possible to write a disassembler that will correctly separate code and data for all possible input programs. Reverse engineering is full of such theoretical limitations; indeed, by Rice's theorem every non-trivial question about a program's behavior is undecidable (so compilers and many other tools that deal with programs in any form run into such limits as well). In practice, a combination of interactive and automatic analysis and perseverance can handle all but those programs specifically designed to thwart reverse engineering, for example by keeping code encrypted and decrypting it just prior to use, or by moving code around in memory.

Lost Information

User-defined textual identifiers, such as variable names, label names, and macros, are removed by the assembly process. They may still be present in generated object files, for use by tools like debuggers and relocating linkers, but the direct connection is lost and re-establishing that connection requires more than a mere disassembler. Small constants especially may have more than one possible name. Operating system calls (like DLLs in MS-Windows, or syscalls in Unices) may be reconstructed, as their names appear in a separate segment or are known beforehand. Many disassemblers allow the user to attach a name to a label or constant based on their understanding of the code. These identifiers, in addition to comments in the source file, help to make the code more readable to a human, and can also offer clues to the purpose of the code. Without these comments and identifiers, it is harder to understand the purpose of the source code, and it can be difficult to determine the algorithm being used by that code. When you combine this problem with the possibility that the code you are trying to read may, in reality, be data (as outlined above), then it can be even harder to determine what is going on. Another challenge is posed by modern optimising compilers; they inline small subroutines, then combine instructions over call and return boundaries. This loses valuable information about the way the program is structured.

Decompilers

Decompilers take the process of disassembly a step further and try to reproduce the code in a high-level language. Frequently, this high-level language is C, because C is simple and primitive enough to facilitate the decompilation process. Decompilation does have its drawbacks, because a lot of data and readability constructs are lost during the original compilation process and cannot be reproduced. Since the science of decompilation is still young, and results are "good" but not "great", this page will limit itself to a listing of decompilers and a general (but brief) discussion of the possibilities of decompilation. Unlike a disassembler, a decompiler generates code that does not require the reader to be familiar with the processor at hand. The decompiled code may even compile for a different processor, or give a reasonable starting point for reproducing the program on a different processor.

Decompilation: Is It Possible?

In the face of optimizing compilers, it is not uncommon to be asked "Is decompilation even possible?" To some degree, it usually is. Make no mistake, however: an optimizing compiler results in the irretrievable loss of information. An example is inlining, as explained above, where the called code is combined with its surroundings so that the places where the original subroutine was called cannot even be identified. A decompiler that reverses that process is comparable to an artificial intelligence program that recreates a poem in a different language. Perfectly operational decompilers are therefore a long way off. At most, current decompilers can serve as an aid to the reverse engineering process, leaving a lot of arduous work.

Common Decompilers

- Hex-Rays Decompiler

- Hex-Rays is a commercial decompiler, made as an extension to the popular IDA Pro disassembler. It is currently the only viable commercially available decompiler which produces usable results. It supports both the x86 and ARM architectures.

- http://www.hex-rays.com/products/decompiler/index.shtml

- ILSpy

- ILSpy is an open source .NET assembly browser and decompiler.

- https://github.com/icsharpcode/ILSpy

- DCC

- DCC is likely one of the oldest decompilers in existence, dating back over 20 years. It serves as a good historical and theoretical frame of reference for the decompilation process in general (Mirrors: [2][3]). As of 2015, DCC is an active project. Some of the latest changes include fixes for longstanding memory leaks and a more modern Qt5-based front-end.

- RetDec

- The Retargetable Decompiler is a freeware web decompiler that takes in ELF/PE/COFF binaries in Intel x86, ARM, MIPS, PIC32, and PowerPC architectures and outputs C or Python-like code, plus flow charts and control flow graphs. It puts a running time limit on each decompilation. It produces nice results in most cases.

- https://github.com/avast/retdec

- Reko

- Reko is a modular open-source decompiler supporting both an interactive GUI and a command-line interface. Its pluggable design supports decompilation of a variety of executable formats and processor architectures (8-, 16-, 32- and 64-bit architectures as of 2015). It also supports running unpacking scripts before actual decompilation. It performs global data and type analyses of the binary and yields its results in a subset of C++.

- http://sourceforge.net/projects/decompiler

- https://github.com/uxmal/reko

- C4Decompiler

- C4Decompiler is an interactive, static decompiler under development (Alpha in 2013). It performs global analysis of the binary and presents the resulting C source in a Windows GUI. Context menus support navigation, properties, cross references, C/Asm mixed view and manipulation of the decompile context (function ABI).

- http://www.c4decompiler.com

- Boomerang Decompiler Project

- Boomerang Decompiler is an attempt to make a powerful, retargetable decompiler. So far, it only decompiles into C with moderate success.

- http://boomerang.sourceforge.net/

- Reverse Engineering Compiler (REC)

- REC is a powerful "decompiler" that decompiles native assembly code into a C-like code representation. The code is half-way between assembly and C, but it is much more readable than the pure assembly is. Unfortunately the program appears to be rather unstable.

- http://www.backerstreet.com/rec/rec.htm

- ExeToC

- ExeToC decompiler is an interactive decompiler that boasted pretty good results in the past.

- http://sourceforge.net/projects/exetoc

- snowman

- Snowman is an open source native code to C/C++ decompiler. Supports ARM, x86, and x86-64 architectures. Reads ELF, Mach-O, and PE file formats. Reconstructs functions, their names and arguments, local and global variables, expressions, integer, pointer and structural types, all types of control-flow structures, including switch. Has a nice graphical user interface with one-click navigation between the assembler code and the reconstructed program. Has a command-line interface for batch processing.

- https://derevenets.com

- Ghidra

- Ghidra is a reverse engineering package that includes a decompiler. It was written by the NSA for internal work, and apparently released because they didn't want to have to re-train every new person they hired. It is written in Java.

A General view of Disassembling

8 bit CPU code

Most embedded CPUs are 8-bit CPUs.[1]

Normally, when a subroutine finishes, execution resumes at the address immediately following the call instruction.

However, assembly-language programmers occasionally use several different techniques that adjust the return address, making disassembly more difficult:

- jump tables,

- calculated jumps, and

- a parameter after the call instruction.

jump tables and other calculated jumps

On 8-bit CPUs, calculated jumps are often implemented by pushing a calculated "return" address to the stack, then jumping to that address using the "return" instruction. For example, the RTS Trick uses this technique to implement jump tables (branch table).
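The push-then-return dispatch described above can be modelled in a few lines. This is only a simulation under one assumption: the CPU behaves like the 6502, whose RTS pulls a 16-bit address from the stack and adds one, so the table stores each target minus one. The handler addresses and the names `rts_dispatch`, `handlers` and `table` are hypothetical.

```python
def rts_dispatch(table, index):
    """Simulate a 6502-style RTS-trick dispatch; returns the address execution continues at."""
    stack = []
    entry = table[index]                  # stored as (target - 1)
    stack.append((entry >> 8) & 0xFF)     # push high byte first
    stack.append(entry & 0xFF)            # then push low byte
    # RTS: pull low byte, pull high byte, then add one to form the new PC.
    low = stack.pop()
    high = stack.pop()
    return (((high << 8) | low) + 1) & 0xFFFF

handlers = [0xC000, 0xC123, 0xD0FF]            # hypothetical routine addresses
table = [(h - 1) & 0xFFFF for h in handlers]   # what the jump table would hold
```

For a disassembler the difficulty is visible here: the table holds values that are one less than any real entry point, and no call or jump instruction names the handlers directly.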

parameters after the call instruction

Instead of picking up their parameters off the stack or out of some fixed global address, some subroutines provide parameters in the addresses of memory that follow the instruction that called that subroutine. Subroutines that use this technique adjust the return address to skip over all the constant parameter data, then return to an address many bytes after the "call" instruction. One of the more famous programs that used this technique is the "Sweet 16" virtual machine.

The technique may make disassembly more difficult.

A simple example of this is the write() procedure implemented as follows:

    ; assume ds = cs, e.g. like in boot sector code
    start:
            call write          ; push message's address on top of stack
            db "Hello, world",0dh,0ah,00h
            ; return point
            ret                 ; back to DOS

    write proc near
            pop si              ; get string address
            mov ah,0eh          ; BIOS: write teletype
    w_loop:
            lodsb               ; read char at [ds:si] and increment si
            or al,al            ; is it 00h?
            jz short w_exit
            int 10h             ; write the character
            jmp w_loop          ; continue writing
    w_exit:
            jmp si
    write endp

    end start

A macro-assembler like TASM will then use a macro like this one:

    _write macro message
            call write
            db message
            db 0
    _write endm

From a human disassembler's point of view this is a nightmare, even though it is straightforward to read in the original assembly source. From the binary form alone there is no way to decide whether the bytes emitted by db should be interpreted as instructions or not. Decoded as instructions, they may contain various jumps into areas of real executable code, triggering analysis of code that should never be analysed and interfering with the analysis of the real code (e.g. disassembling the above code from 0000h or from 0001h will not give the same results at all).

However, a half-decent tool that lets the user specify rules, and that has heuristics for identifying text, will have little trouble.
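A rule of the kind mentioned above might look like the following sketch. It assumes the analyst has already told the tool that a particular 3-byte near call (`E8` with a 16-bit displacement) targets a routine that consumes a NUL-terminated string placed right after the call; the function name `skip_inline_string` and the byte image are made up for this example.

```python
def skip_inline_string(code, call_offset, call_length=3):
    """Return (text, resume_offset) for a NUL-terminated string stored right after a call."""
    start = call_offset + call_length     # first byte after the call instruction
    end = code.index(0, start)            # position of the 00h terminator
    return code[start:end], end + 1       # code resumes just past the terminator

# call write (E8 10 00), the message bytes, then ret (C3), mirroring the listing above.
image = bytes([0xE8, 0x10, 0x00]) + b"Hello, world\r\n\x00" + bytes([0xC3])
text, resume = skip_inline_string(image, 0)
```

With such a rule the tool can mark the skipped range as text and continue decoding at `resume`, instead of trying to interpret the message bytes as instructions.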

32 bit CPU code

Most 32-bit CPUs use the ARM instruction set.[1][2][3]

Typical ARM assembly code is a series of subroutines, with literal constants scattered between subroutines. The standard prolog and epilog for subroutines is pretty easy to recognize.

A brief list of disassemblers

- ciasdis "an assembler where the elements opcode, operands and modifiers are all objects, that are reusable for disassembly." For 8080 8086 80386 Alpha 6809 and should be usable for Pentium 68000 6502 8051.

- radare, the reverse engineering framework, includes open-source tools to disassemble code for many processors (including x86, ARM, PowerPC, m68k, etc.), for several virtual machines (including Java, MSIL, etc.), and for many platforms (including Linux, BSD, OSX, Windows, iPhoneOS, etc.).

- IDA, the Interactive Disassembler ( IDA Pro ) can disassemble code for a huge number of processors, including ARM Architecture (including Thumb and Thumb-2), ATMEL AVR, INTEL 8051, INTEL 80x86, MOS Technologies 6502, MC6809, MC6811, M68H12C, MSP430, PIC 12XX, PIC 14XX, PIC 18XX, PIC 16XXX, Zilog Z80, etc.

- objdump, part of the GNU binutils, can disassemble code for several processors and platforms. binutils is an important part of the toolchain as it provides the linker, assembler and other utilities (like objdump) to manipulate executables on the target platform, and is available for most popular platforms.

- For OS X/BSD systems, there is a rough equivalent called otool in the XCode kit.

- Disassemblers at Curlie lists a huge number of disassemblers

- Program transformation wiki: disassembly lists many highly recommended disassemblers

- search for "disassemble" at SourceForge shows many disassemblers for a variety of CPUs.

- Hopper is a disassembler that runs on OS X and disassembles 32/64-bit OS X and Windows binaries.

- The University of Queensland Binary Translator (UQBT) is a reusable, component-based binary-translation framework that supports CISC, RISC, and stack-based processors.

Further reading

- [1] Jim Turley. "The Two Percent Solution". 2002.

- [2] Mark Hachman. "ARM Cores Climb Into 3G Territory". 2002. "Although Intel and AMD receive the bulk of attention in the computing world, ARM’s embedded 32-bit architecture, ... has outsold all others."

- [3] Tom Krazit. "ARMed for the living room". 2006. "ARM licensed 1.6 billion cores [in 2005]".

- http://www.crackmes.de/ : reverse engineering challenges

- "A Challengers Handbook" by Caesum [4] has some tips on reverse engineering programs in JavaScript, Flash Actionscript (SWF), Java, etc.

- the Open Source Institute occasionally has reverse engineering challenges among its other brainteasers.[5]

- The Program Transformation wiki has a Reverse engineering and Re-engineering Roadmap, and discusses disassemblers, decompilers, and tools for translating programs from one high-level language to another high-level language.

- Other disassemblers with multi-platform support

Analysis Tools

Debuggers

Debuggers are programs that allow the user to execute a compiled program one step at a time. You can see what instructions are executed in which order, and which sections of the program are treated as code and which are treated as data. Debuggers allow you to analyze the program while it is running, to help you get a better picture of what it is doing.

Advanced debuggers often contain at least a rudimentary disassembler, and often hex-editing and reassembly features as well. Debuggers often allow the user to set breakpoints on instructions, function calls, and even memory locations.

A breakpoint is an instruction to the debugger that allows program execution to be halted when a certain condition is met. For instance, when a program accesses a certain variable, or calls a certain API function, the debugger can pause program execution.
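One common way for an x86 debugger to implement a breakpoint on an instruction is to overwrite the first byte of that instruction with the one-byte INT3 opcode (`CC`) and to restore the original byte later. The sketch below models only that patching step on a byte buffer; it does not run code or handle the resulting trap, and the `Breakpoints` class is a made-up illustration rather than any debugger's API.

```python
INT3 = 0xCC  # one-byte x86 breakpoint instruction

class Breakpoints:
    """Tracks software breakpoints patched into a mutable copy of code memory."""

    def __init__(self, memory):
        self.memory = bytearray(memory)
        self.saved = {}                   # address -> original byte

    def set(self, address):
        # Remember the original byte once, then patch in INT3.
        if address not in self.saved:
            self.saved[address] = self.memory[address]
            self.memory[address] = INT3

    def clear(self, address):
        # Put the original byte back and forget the breakpoint.
        self.memory[address] = self.saved.pop(address)

code = bytes([0x55, 0x89, 0xE5, 0x5D, 0xC3])  # push ebp / mov ebp, esp / pop ebp / ret
bp = Breakpoints(code)
bp.set(1)                                     # break on "mov ebp, esp"
patched = bytes(bp.memory)
bp.clear(1)
restored = bytes(bp.memory)
```

This also explains why a disassembly taken from the memory of a process under a debugger can show unexpected `int3` instructions.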

Windows Debuggers

- SoftICE

- A de facto standard for Windows debugging. SoftICE can be used for local kernel debugging, which is a feature that is very rare, and very valuable. SoftICE was taken off the market in April 2006.

- WinDbg

- WinDbg is a free piece of software from Microsoft that can be used for local user-mode debugging, or even remote kernel-mode debugging. WinDbg is not the same as the better-known Visual Studio Debugger, but comes with a nifty GUI nonetheless. Available in 32 and 64-bit versions.

- https://docs.microsoft.com/en-us/windows-hardware/drivers/debugger/debugger-download-tools

- IDA Pro

- The multi-processor, multi-OS, interactive disassembler, originally published by DataRescue and now by Hex-Rays.

- http://www.hex-rays.com/idapro/

- OllyDbg

- OllyDbg is a free and powerful Windows debugger with a built-in disassembly and assembly engine. Very useful for patching, disassembling, and debugging.

- http://www.ollydbg.de/

- x64dbg

- A set of 32 and 64 bit x86 debuggers. x64dbg is the spiritual successor to the discontinued OllyDbg.

- Immunity Debugger

- Immunity Debugger is a branch of OllyDbg v1.10, with built-in support for Python scripting and much more.

- http://immunityinc.com/products/debugger/index.html

Linux Debuggers

Many of the open source debuggers on Linux are cross-platform: they may be available on other Unix(-like) systems, or even on Windows. Some of them may give you a better experience than the older native debuggers on your system.

- gdb

- The GNU debugger, comes with any normal Linux install. It is quite powerful and even somewhat programmable, though the raw user interface is harsh.

- lldb

- LLVM's debugger.

- emacs

- The GNU editor, can be used as a front-end to gdb. This provides a powerful hex editor and allows full scripting in a LISP-like language.

- ddd

- The Data Display Debugger. It's another front-end to gdb. This provides graphical representations of data structures. For example, a linked list will look just like a textbook illustration.

- strace, ltrace, and xtrace

- Lets you run a program while watching the actions it performs. With strace, you get a log of all the system calls being made. With ltrace, you get a log of all the library calls being made. With xtrace, you get a log of some of the function calls being made.

- valgrind

- Executes a program under emulation, performing analysis according to one of its many plug-in modules. You can also write your own plug-in module. Newer versions of valgrind also support OS X.

- NLKD

- A kernel debugger.

- http://forge.novell.com/modules/xfmod/project/?nlkd

- edb

- A fully featured plugin-based debugger inspired by the famous OllyDbg. Project page

- KDbg

- A gdb front-end for KDE. http://kdbg.org

- RR0D

- A Ring-0 Debugger for Linux. RR0D Project Page

- Radare2

- A debugger and reversing framework.

- Winedbg

- Wine's debugger. Debugs Windows executables using wine.

Debuggers for Other Systems

- dbx

- The standard Unix debugger on systems derived from AT&T Unix. It is often part of an optional development toolkit package which comes at an extra price. It uses an interactive command line interface.

- ladebug

- An enhanced debugger on Tru64 Unix systems from HP (originally Digital Equipment Corporation) that handles advanced functionality like threads better than dbx.

- DTrace

- An advanced tool on Solaris that provides functions like profiling and many others on the entire system, including the kernel.

- mdb

- The Modular Debugger (MDB) is a new general purpose debugging tool for the Solaris Operating Environment. Its primary feature is its extensibility. The Solaris Modular Debugger Guide describes how to use MDB to debug complex software systems, with a particular emphasis on the facilities available for debugging the Solaris kernel and associated device drivers and modules. It also includes a complete reference for and discussion of the MDB language syntax, debugger features, and MDB Module Programming API.

Debugger Techniques

Setting Breakpoints

As previously mentioned in the section on disassemblers, a 6-line C program doing something as simple as outputting "Hello, World!" turns into massive amounts of assembly code. Most people don't want to sift through the entire mess to find out the information they want. It can be time consuming just to find the information one desires by just looking through the code. As an alternative, one can choose to set breakpoints to halt the program once it has reached a given point within the program's code.

For instance, let's say that in your program you consistently experience crashes after one particular event: immediately after closing a message box. You set breakpoints on all calls to MessageBoxA. You run your program with the breakpoints set, and it stops, ready to call MessageBoxA. Executing the code one instruction at a time thereafter (referred to as stepping), and watching the program stack, you see that a buffer overflow occurs soon after the call.

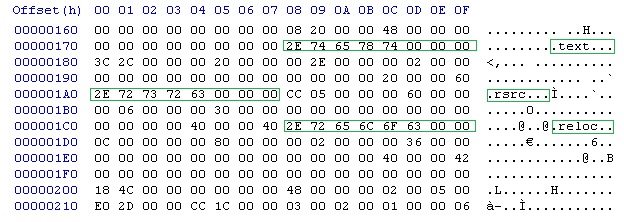

Hex Editors

Hex editors let you view and edit the raw bytes of a file directly, and are very useful for investigating the structure of proprietary closed-format data files. There are many hex editors in existence. This section will attempt to list some of the best, some of the most popular, or some of the most powerful.

- HxD (Freeware)

- For Windows. A fast and powerful free hex, disk and RAM editor

- http://mh-nexus.de/hxd/

- Freeware Hex Editor XVI32

- For Windows. A freeware hex editor.

- http://www.chmaas.handshake.de/delphi/freeware/xvi32/xvi32.htm

- wxHexEditor (Beta, For Windows and Linux, Free & Open Source)

- A fast hex editor designed for HUGE files and disk devices. It handles files up to exabyte sizes, allows size changes (insertions and deletions) without creating a temporary file, can view files in multiple panes, has a built-in disassembler, supports tags for (reverse) engineering big binaries or file systems, and can view files through XOR encryption.

- http://wxhexeditor.sourceforge.net/

- HHD Software Hex Editor Neo

- For Windows. A fast file, disk, and memory editor with built-in disassembler and file structure viewer.

- http://www.hhdsoftware.com/Family/hex-editor.html

- Catch22 HexEdit

- For Windows. This is a powerful hex editor with a slew of features. Has an excellent data structure viewer.

- http://www.catch22.net/software/hexedit.asp

- BreakPoint Hex Workshop

- For Windows. An excellent and powerful hex editor, although its usefulness is restricted by the fact that it is not free like some of the other options.

- http://www.bpsoft.com/

- Tiny Hexer

- Free and does statistics. For Windows.

- http://www.mirkes.de/files/

- frhed - free hex editor

- For Windows. Free and open source.

- http://www.kibria.de/frhed.html

- Cygnus Hex Editor

- For Windows. A very fast and easy-to-use hex editor, available in a 'Free Edition'.

- http://www.softcircuits.com/cygnus/fe/

- Hexprobe Hex Editor

- For Windows. A professional hex editor designed to include all the power to deal with hex data, particularly helpful in the areas of hex-byte editing and byte-pattern analysis.

- http://www.hexprobe.com/hexprobe/index.htm

- UltraEdit32

- For Windows. A hex editor/text editor, won "Application of the Year" at 2005 Shareware Industry Awards Conference.

- http://www.ultraedit.com/

- Hexinator (For Windows and Linux)

- lets you edit files of unlimited size (overwrite, insert, delete), displays text with dozens of text encodings, shows variables in little and big endian byte order.

- https://hexinator.com

- ICY Hexplorer

- For Windows. A lightweight free and open source hex file editor with some nifty features, such as pixel view, structures, and disassembling.

- http://hexplorer.sourceforge.net/

- WinHex

- For Windows. A powerful hex file and disk editor with advanced abilities for computer forensics and data recovery (used by governments and military).

- http://www.x-ways.net/index-m.html

- 010 Editor

- For Windows. A very powerful and fast hex editor with extensive support for data structures and scripting. Can be used to edit drives and processes.

- http://www.sweetscape.com/010editor/

- 1Fh

- For Windows. A free binary/hex editor which is very fast, even while working with large files. It's the only Windows hex editor that allows you to view files in byte code (all 256-characters).

- http://www.4neurons.com/1Fh/

- HexEdit

- For Windows. Available in open source and shareware versions. A powerful and easy to use binary file and disk editor.

- http://www.hexedit.com/

- HexToolkit

- For Windows. A free hex viewer specifically designed for reverse engineering file formats. Allows data to be viewed in various formats and includes an expression evaluator as well as a binary file comparison tool.

- http://www.binaryearth.net/HexToolkit

- FlexHex

- For Windows. Provides full support for NTFS files, which are based on a more complex model than FAT32 files. Specifically, FlexHex supports sparse files and alternate data streams of files on any NTFS volume. Can be used to edit OLE compound files, flash cards, and other types of physical drives.

- http://www.heaventools.com/flexhex-hex-editor.htm

- HT Editor

- For Windows. A file editor/viewer/analyzer for executables. Its goal is to combine the low-level functionality of a debugger and the usability of IDEs.

- http://hte.sourceforge.net/

- HexEdit

- For MacOS. A simple but reliable hex editor that lets you change highlight colours. There is also a port for Apple Classic users.

- http://hexedit.sourceforge.net/

- Hex Fiend

- For MacOS. A very simple hex editor, but incredibly powerful nonetheless. It's only 346 KB to download and takes files as big as 116 GB.

- http://ridiculousfish.com/hexfiend/

- ImHex

- For Windows, MacOS and Linux. Displays, decodes and analyzes binary data (+ printable ASCII chars) and allows editing of bytes. Includes a data inspector with various decodings (integers, floats, char/wchar, Unicode, dates, RGBA/RGB565 color...), search by hex bytes and string, hex diff, pattern matching, yara rules (for malware pattern detection), hash computations, graphical data statistics, disassemblers, and various extra tools from a "content store". Free and open-source, licensed under GPLv2.

- https://imhex.werwolv.net/

Linux Hex Editors only

- bvi

- A typical three-pane hex editor, with a vi-like interface.

- emacs

- Along with everything else, emacs also includes a hex editor.

- joe

- Joe's own editor now also supports hex editing.

- bless

- A very capable gtk based hex editor.

- xxd and any text editor

- Produce a hex dump with xxd, freely edit it in your favorite text editor, and then convert it back to a binary file with your changes included.

- GHex

- Hex editor for GNOME.

- http://directory.fsf.org/All_Packages_in_Directory/ghex.html

- Okteta

- The well-integrated hex editor from KDE since 4.1. Offers the traditional two-column layout, one with numeric values (binary, octal, decimal, hexadecimal) and one with characters (lots of charsets supported). Editing can be done in both columns, with unlimited undo/redo. Small set of tools (searching/replacing, strings, binary filter, and more).

- http://utils.kde.org/projects/okteta

- BEYE

- A viewer of binary files with built-in editor in binary, hexadecimal and disassembler modes. It uses native Intel syntax for disassembly. Highlight AVR/Java/Athlon64/Pentium 4/K7-Athlon disassembler, Russian codepages converter, full preview of formats - MZ, NE, PE, NLM, coff32, elf partial - a.out, LE, LX, PharLap; code navigator and more.

- http://beye.sourceforge.net/en/beye.html

- BIEW

- A viewer of binary files with built-in editor in binary, hexadecimal and disassembler modes. It uses native Intel syntax for disassembly. Highlight AVR/Java/Athlon64/Pentium 4/K7-Athlon disassembler, Russian codepages converter, full preview of formats - MZ, NE, PE, NLM, coff32, elf partial - a.out, LE, LX, PharLap; code navigator and more. (PROJECT RENAMED, see BEYE)

- http://biew.sourceforge.net/en/biew.html

- hview

- A curses-based hex editor designed to work with large (600+MB) files as quickly, and with as little overhead, as possible.

- http://web.archive.org/web/20010306001713/http://tdistortion.esmartdesign.com/Zips/hview.tgz

- HexCurse

- An ncurses-based hex editor written in C that currently supports hex and decimal address output, jumping to specified file locations, searching, ASCII and EBCDIC output, bolded modifications, an undo command, quick keyboard shortcuts, etc.

- http://www.jewfish.net/description.php?title=HexCurse

- hexedit

- View and edit files in hexadecimal or in ASCII.

- http://rigaux.org/hexedit.html

- Data Workshop

- An editor to view and modify binary data; provides different views which can be used to edit, analyze and export the binary data.

- http://www.dataworkshop.de/

- VCHE

- A hex editor which lets you see all 256 characters as found in video ROM, even control and extended ASCII characters; it uses the /dev/vcsa* devices to do so. It can also edit non-regular files, such as hard disks, floppies, CDROMs, ZIPs, RAM, and almost any device. It comes in an ncurses version and a raw version for people who work under X or remotely.

- http://www.grigna.com/diego/linux/vche/

- DHEX

- DHEX is just another Hexeditor with a Diff-mode for ncurses. It makes heavy use of colors and is themeable.

- http://www.dettus.net/dhex/

Other Tools for Windows

Resource Monitors

- SysInternals Freeware

- This page has a large number of excellent utilities, many of which are very useful to security experts, network administrators, and (most importantly to us) reversers. Specifically, check out Process Monitor, FileMon, RegMon, TCPView, and Process Explorer.

- https://docs.microsoft.com/en-us/sysinternals/

API Monitors

- SpyStudio Freeware

- Spy Studio is a tool to hook into Windows processes, log Windows API calls to DLLs, insert breakpoints and change parameters.

- http://www.nektra.com/products/spystudio/

- rohitab.com API Monitor

- API Monitor is free software that lets you monitor and control API calls made by applications and services. Features include detailed parameter information, structures, unions, enumerated/flag data types, call stack, call tree, breakpoints, custom DLLs, memory editor, call filtering, COM monitoring, 64-bit. Includes definitions for over 13,000 APIs and 1,300+ COM interfaces.

- http://www.rohitab.com/apimonitor

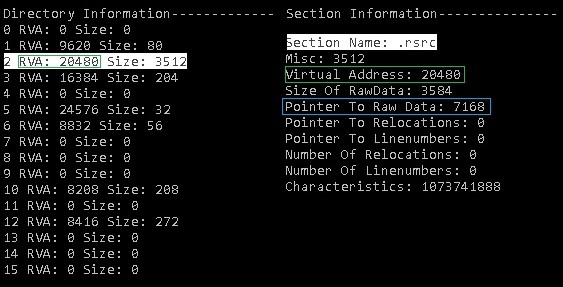

PE File Header dumpers

- Dumpbin

- Dumpbin is a program that used to be shipped with MS Visual Studio, but more recently its functionality has been incorporated into the Microsoft linker, link.exe. To access dumpbin, pass /dump as the first parameter to link.exe:

    link.exe /dump [options]

- It is frequently useful to create a batch file that handles this conversion:

    ::dumpbin.bat
    link.exe /dump %*

All examples in this wikibook that use dumpbin will call it in this manner.

- Here is a list of useful features of dumpbin [6]:

- dumpbin /EXPORTS displays a list of functions exported from a library

- dumpbin /IMPORTS displays a list of functions imported from other libraries

- dumpbin /HEADERS displays PE header information for the executable

- Depends

- Dependency Walker is a GUI tool which will allow you to see exports and imports of binaries. It ships with many Microsoft tools including MS Visual Studio.
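To give a feel for the kind of information a header dumper such as dumpbin /HEADERS reads, here is a minimal sketch that locates the PE signature and decodes the first three fields of the COFF file header. It is not how dumpbin is implemented; the function `parse_pe_header` and the synthetic image are assumptions made for this example, and real files carry many more fields.

```python
import struct

def parse_pe_header(data):
    """Return basic COFF header fields from the bytes of a PE file."""
    if data[:2] != b"MZ":
        raise ValueError("missing MZ signature")
    # The DOS header stores the offset of the PE signature at 0x3C (e_lfanew).
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\x00\x00":
        raise ValueError("missing PE signature")
    # Machine, NumberOfSections and TimeDateStamp follow the signature.
    machine, sections, timestamp = struct.unpack_from("<HHI", data, pe_offset + 4)
    return {"machine": machine, "sections": sections, "timestamp": timestamp}

# Synthetic 0x60-byte image built only for this example: a DOS header stub,
# then a COFF header claiming machine 0x014C (i386) and 3 sections.
image = bytearray(0x60)
image[0:2] = b"MZ"
struct.pack_into("<I", image, 0x3C, 0x40)
image[0x40:0x44] = b"PE\x00\x00"
struct.pack_into("<HHI", image, 0x44, 0x014C, 3, 0)
header = parse_pe_header(bytes(image))
```

On a real executable the same function could be fed the result of `open(path, "rb").read()`.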

GNU Tools

The GNU packages have been ported to many platforms including Windows.

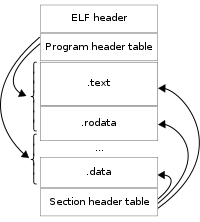

- GNU BinUtils

- The GNU BinUtils package contains several small utilities that are very useful in dealing with binary files. The most important programs in the package are objdump, the GAS assembler, and the GNU linker, although the reverser might find more use in addr2line, c++filt, nm, and readelf.

- http://www.gnu.org/software/binutils/

- objdump

- Dumps out information about an executable including symbols and assembly. It comes standard. It can be made to support non-native binary formats.

objdump -p displays the functions imported from other libraries, the functions exported, and miscellaneous file header information.

It is useful for checking DLL dependencies from the command line.

- readelf

- Like objdump but more specialized for ELF executables.

- size

- Lists the sizes of the segments.

- nm

- Lists the symbols in an ELF file.

- strings

- Prints the strings from a file.

- file

- Tells you what type of file it is.

- fold

- Folds the results of strings into something pageable.

- kill

- Can be used to halt a program with the SIGSTOP signal.

- strace

- Trace system calls and signals.
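Of the tools listed above, strings is simple enough to approximate in a few lines, which also shows what it actually looks for: runs of printable characters of some minimum length. This sketch handles ASCII only and uses a minimum length of 4 as an assumed default; `extract_strings` and the sample bytes are made up for this illustration and do not reproduce every option of the real tool.

```python
import re

def extract_strings(data, min_length=4):
    """Return runs of at least `min_length` printable ASCII bytes, like a basic `strings`."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_length)
    return [m.group().decode("ascii") for m in pattern.finditer(data)]

# Sample bytes made up for this example: short runs such as "ELF" and "abc" are skipped.
blob = b"\x7fELF\x02\x01\x00\x00/lib64/ld-linux-x86-64.so.2\x00\x01\x02abc\x00printf\x00"
found = extract_strings(blob)
```

Library paths and imported function names often surface this way even when a binary has been stripped of its symbol table.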

Other Tools for Linux

- oprofile

- Can be used to find out what functions and data segments are used.

- subterfugue

- A tool for playing odd tricks on an executable as it runs. The tool is scriptable in python. The user can write scripts to take action on events that occur, such as changing the arguments to system calls.

- http://subterfugue.org/

- lizard

- Lets you run a program backwards.

- http://lizard.sourceforge.net/

- dprobes

- Lets you work with both kernel and user code.

- biew

- Both a hex editor and a disassembler.

- ltrace

- Displays runtime library call information for dynamically linked executables.

- asmDIFF

- Searches for functions, instructions and memory pointers in different versions of same binary by using code metrics. Supports x86, x86_64 code in PE and ELF files.

- http://duschkumpane.org/index.php/asmdiff

XCode Tools

XCode contains some extra tools to be used under OS X with the Mach-O format. You can see more of them under /Applications/Xcode.app/Contents/Developer/usr/bin/.

- lipo

- Manages fat binaries with multiple architectures.

- otool

- Object file displaying tool; works somewhat like objdump and readelf.
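A fat binary of the kind lipo manages starts with a small big-endian table that says where each architecture's Mach-O slice lives. The sketch below reads that table for the 32-bit fat header layout only; `list_fat_archs`, the two-entry CPU name table, and the synthetic header (with made-up offsets and sizes) are assumptions for this example, not lipo's implementation.

```python
import struct

FAT_MAGIC = 0xCAFEBABE
CPU_NAMES = {0x01000007: "x86_64", 0x0100000C: "arm64"}  # small subset, for illustration

def list_fat_archs(data):
    """Return (cpu name, offset, size) for each slice in a 32-bit fat header."""
    magic, count = struct.unpack_from(">II", data, 0)
    if magic != FAT_MAGIC:
        raise ValueError("not a fat binary")
    archs = []
    for i in range(count):
        # Each entry: cputype, cpusubtype, offset, size, align (five 32-bit fields).
        cputype, _sub, offset, size, _align = struct.unpack_from(">IIIII", data, 8 + 20 * i)
        archs.append((CPU_NAMES.get(cputype, hex(cputype)), offset, size))
    return archs

# Synthetic header built for this example; the offsets and sizes are made up.
header = struct.pack(">II", FAT_MAGIC, 2)
header += struct.pack(">IIIII", 0x01000007, 3, 0x4000, 0x1000, 14)
header += struct.pack(">IIIII", 0x0100000C, 0, 0x8000, 0x1200, 14)
slices = list_fat_archs(header)
```

A disassembler has to pick one slice from this table before it can start decoding, since each slice contains code for a different processor.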

XCode also packs a lot of Unix tools, with many of them sharing the names (and functions) of the GNU tools. Other tools like nasm/ndisasm, lldb and GNU as can also be found.

Platforms

Microsoft Windows

The Windows operating system is a popular reverse engineering target for one simple reason: the OS itself (market share, known weaknesses), and most applications for it, are not Open Source or free. Most software on a Windows machine doesn't come bundled with its source code, and most pieces have inadequate, or non-existent documentation. Occasionally, the only way to know precisely what a piece of software does (or for that matter, to determine whether a given piece of software is malicious or legitimate) is to reverse it, and examine the results.

Windows Versions

Windows operating systems can be easily divided into 2 categories: Windows9x, and WindowsNT.

Windows 9x

The Windows9x kernel was originally written to span the 16-bit to 32-bit divide. Operating systems based on the 9x kernel are Windows 95, Windows 98, and Windows Me. Windows9x series operating systems are known to be prone to bugs and system instability. The OS itself was a 32-bit extension of MS-DOS, its predecessor. An important issue with the 9x line is that it was based around storing strings in the ANSI format rather than in Unicode.

Development on the Windows9x kernel ended with the release of Windows Me.

Windows NT

The WindowsNT kernel series was originally written as enterprise-level server and network software. WindowsNT stresses stability and security far more than Windows9x kernels did (although it can be debated whether that stress was good enough). It also handles all string operations internally in Unicode, giving more flexibility when using different languages. Operating systems based on the WindowsNT kernel are: Windows NT (versions 3.1, 3.5, 3.51 and 4.0), Windows 2000 (NT 5.0), Windows XP (NT 5.1), Windows Server 2003 (NT 5.2), Windows Vista (NT 6.0), Windows 7 (NT 6.1), Windows 8 (NT 6.2), Windows 8.1 (NT 6.3), and Windows 10 (NT 10.0).

The Microsoft Xbox and Xbox 360 also run a variant of NT, forked from Windows 2000. Most future Microsoft operating system products are based on NT in some shape or form.

Virtual Memory

Memory is organized into "pages" that are 4096 bytes by default. Pages not in current use by the system or any of the applications may be written to a special section on the hard disk known as the "paging file." Use of the paging file may increase performance on some systems, although high latency for I/O to the HDD can actually reduce performance in some instances.
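With 4096-byte pages, any virtual address splits into a page number and an offset within that page, which is handy when reading memory maps or working out how many pages a buffer touches. A small sketch of that arithmetic follows; the function names are made up for this example, and it assumes the default page size stated above.

```python
PAGE_SIZE = 4096

def split_address(address):
    """Split a virtual address into (page number, offset within page)."""
    return address // PAGE_SIZE, address % PAGE_SIZE

def pages_spanned(address, length):
    """Number of pages touched by `length` bytes starting at `address`."""
    first = address // PAGE_SIZE
    last = (address + length - 1) // PAGE_SIZE
    return last - first + 1

page, offset = split_address(0x00401234)
```

Because 4096 is 2 to the power 12, the offset is simply the low 12 bits of the address (the last three hex digits) and the page number is everything above them.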

32-bit Windows NT allows for a maximum of 4 GiB of virtual memory address space per process. This is divided into 2 GiB user memory and 2 GiB kernel memory by default.

In some 32-bit versions and editions, the operating system can be started with the /3GB switch, which divides this into 3 GiB user memory and 1 GiB kernel memory. Only 32-bit applications that are linked with the large-address-aware flag (the /LARGEADDRESSAWARE linker option) can use up to 3 GiB in this mode. The /3GB switch is not supported in 64-bit Windows, but 32-bit applications with the large-address-aware flag can access up to 4 GiB on 64-bit Windows. 64-bit applications are not restricted in this way.
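When reading a 32-bit disassembly or a crash dump, the split described above gives a quick way to tell whether a pointer refers to user or kernel memory. The following sketch assumes the boundaries sit exactly at 2 GiB (0x80000000) and 3 GiB (0xC0000000); real systems also reserve small guard regions near the boundary, which this simplification ignores. The function name is hypothetical.

```c
#include <stdint.h>

#define USER_LIMIT_DEFAULT 0x80000000u  /* 2 GiB user / 2 GiB kernel */
#define USER_LIMIT_3GB     0xC0000000u  /* 3 GiB user / 1 GiB kernel */

/* Returns 1 if the 32-bit virtual address falls in the user half of
 * the address space, 0 if it falls in the kernel half.
 * three_gb_mode is nonzero when the system was booted with /3GB. */
int is_user_address(uint32_t va, int three_gb_mode)
{
    uint32_t limit = three_gb_mode ? USER_LIMIT_3GB : USER_LIMIT_DEFAULT;
    return va < limit;
}
```

An address such as 0x90000000 is kernel memory on a default system but user memory under /3GB, so the same constant in a binary can mean different things depending on boot configuration.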

Starting with the Pentium Pro CPU, some 32-bit versions and editions can use Physical Address Extensions (the /PAE switch) to access physical memory above 4 GiB, up to 64 GiB. This memory can be used by 32-bit applications that are written to take advantage of it through the Address Windowing Extensions (AWE) API (e.g. some versions of 32-bit Microsoft SQL Server and 32-bit Microsoft Exchange Server). Special configuration is required, however.
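The 4 GiB and 64 GiB figures follow directly from the number of address bits. The sketch below assumes that PAE on these processors widens physical addresses from 32 to 36 bits; that width is background knowledge, not something stated above.

```c
#include <stdint.h>

/* Number of bytes reachable with the given number of address bits.
 * Assumption: address_bits is less than 64. */
uint64_t addressable_bytes(unsigned address_bits)
{
    return (uint64_t)1 << address_bits;
}

/* Convert a byte count to whole GiB (2^30 bytes). */
uint64_t bytes_to_gib(uint64_t bytes)
{
    return bytes >> 30;
}
```

With 32 bits the result is 4 GiB, and with 36 bits it is 64 GiB. Note that PAE enlarges only the physical address; each 32-bit process still sees a 4 GiB virtual address space.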

System Architecture

The Windows architecture is heavily layered. Function calls that a programmer makes may be redirected 3 times or more before any action is actually performed. Calling Win32 functions from a user-mode application therefore carries a non-negligible performance penalty. However, the upside is equally significant: code written against the higher levels of the Windows system is much easier to write. Complex operations that involve initializing multiple data structures and calling multiple sub-functions can be performed by calling only a single higher-level function.

The Win32 API comprises 3 modules: KERNEL32, USER32, and GDI32. KERNEL32 is layered on top of NTDLL, and most calls to KERNEL32 functions are simply redirected into NTDLL function calls. USER32 and GDI32 are both based on WIN32K (a kernel-mode module, responsible for the Windows "look and feel"), although USER32 also makes many calls to the more-primitive functions in GDI32. WIN32K and NTDLL both provide an interface to the Windows NT kernel, NTOSKRNL (see further below).

NTOSKRNL is also partially layered on HAL (Hardware Abstraction Layer), but this interaction will not be considered much in this book. The purpose of this layering is to allow processor variant issues (such as location of resources) to be made separate from the actual kernel itself. A slightly different system configuration thus requires just a different HAL module, rather than a completely different kernel module.

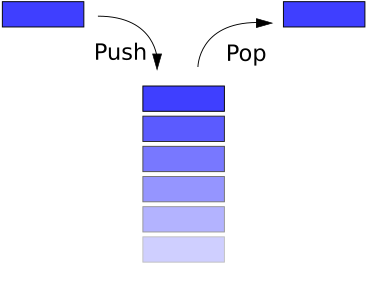

System calls and interrupts

After filtering through different layers of subroutines, most API calls require interaction with part of the operating system. Services are provided via 'software interrupts', traditionally through the "int 0x2e" instruction. This switches control of execution to the NT executive / kernel, where the request is handled. It should be pointed out here that the stack used in kernel mode is different from the user mode stack. This provides an added layer of protection between kernel and user. Once the function completes, control is returned back to the user application.

Both Intel and AMD provide an additional instruction to allow faster system calls: the "SYSENTER" instruction from Intel and the "SYSCALL" instruction from AMD.

Win32 API

Both WinNT and Win9x systems utilize the Win32 API. However, the WinNT version of the API has more functionality and security constructs, as well as Unicode support. Most of the Win32 API can be broken down into 3 separate components, each performing a separate task.

kernel32.dll

Kernel32.dll, home of the KERNEL subsystem, is where non-graphical functions are implemented. Some of the APIs located in KERNEL are: The Heap API, the Virtual Memory API, File I/O API, the Thread API, the System Object Manager, and other similar system services. Most of the functionality of kernel32.dll is implemented in ntdll.dll, but in undocumented functions. Microsoft prefers to publish documentation for kernel32 and guarantee that these APIs will remain unchanged, and then put most of the work in other libraries, which are then not documented.

gdi32.dll

gdi32.dll is the library that implements the GDI subsystem, where primitive graphical operations are performed. GDI diverts most of its calls into WIN32K, but it does contain a manager for GDI objects, such as pens, brushes and device contexts. The GDI object manager and the KERNEL object manager are completely separate.

user32.dll

The USER subsystem is located in the user32.dll library file. This subsystem controls the creation and manipulation of USER objects, which are common screen items such as windows, menus, and cursors. USER sets up the objects to be drawn, but performs the actual drawing by calling on GDI (which in turn makes many calls to WIN32K) or sometimes by calling WIN32K directly. USER utilizes the GDI Object Manager.

Native API

The native API, hereafter referred to as the NTDLL subsystem, is a series of undocumented API function calls that handle most of the work performed by KERNEL32. Microsoft does not guarantee that the native API will remain the same between different versions, as Windows developers modify the software. This creates the risk of native API calls being removed or changed without warning, breaking software that relies on them.

ntdll.dll

The NTDLL subsystem is located in ntdll.dll. This library contains many API functions that all follow a particular naming scheme. Each function has a prefix (Ldr, Nt, Zw, Csr, Dbg, etc.), and all the functions that share a prefix follow the same rules.

The "official" native API is usually limited only to functions whose prefix is Nt or Zw. These calls are in fact the same in user-mode: the relevant Export entries map to the same address in memory. However, in kernel-mode, the Zw* system call stubs set the previous mode to kernel-mode, ensuring that certain parameter validation routines are not performed. The origin of the prefix "Zw" is unclear; it is reported to have been chosen precisely because it has no significance at all[1].

In actual implementation, the system call stubs merely load two registers with values required to describe a native API call, and then execute a software interrupt (or the sysenter instruction).
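The flow described above can be modeled in plain C. This is only a toy simulation, not real stub code: it assumes the two registers are EAX (holding a system service number) and EDX (pointing at the caller's arguments), which matches commonly seen 32-bit stubs but is not stated in the text. The service numbers, the `svc_*` routines, and the error value are all invented for illustration.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical snapshot of the two registers at the moment of the
 * software interrupt (or sysenter). */
struct trap_regs {
    uint32_t eax;         /* assumed: system service number */
    const uint32_t *edx;  /* assumed: pointer to the argument block */
};

typedef int32_t (*service_fn)(const uint32_t *args);

/* Two made-up "kernel services" standing in for real ones. */
static int32_t svc_add(const uint32_t *args)
{
    return (int32_t)(args[0] + args[1]);
}

static int32_t svc_negate(const uint32_t *args)
{
    return -(int32_t)args[0];
}

/* The kernel side indexes a table with the service number. */
static const service_fn service_table[] = { svc_add, svc_negate };

#define MODEL_INVALID_SERVICE (-1)  /* placeholder, not a real NTSTATUS */

int32_t dispatch_trap(const struct trap_regs *r)
{
    size_t count = sizeof service_table / sizeof service_table[0];
    if (r->eax >= count)
        return MODEL_INVALID_SERVICE;
    return service_table[r->eax](r->edx);
}

/* The user-mode stub: load two registers, then trap. */
int32_t stub_call(uint32_t service_number, const uint32_t *args)
{
    struct trap_regs r;
    r.eax = service_number;
    r.edx = args;
    return dispatch_trap(&r);
}
```

When disassembling ntdll.dll, the practical consequence is that every Nt*/Zw* export looks nearly identical: a few instructions that differ mainly in one immediate constant, the service number.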

Most of the other prefixes are obscure, but the known ones are:

- Rtl stands for "Run Time Library", calls which help functionality at runtime (such as RtlAllocateHeap)

- Csr is for "Client Server Runtime", which represents the interface to the win32 subsystem located in csrss.exe

- Dbg functions are present to enable debugging routines and operations

- Ldr provides the ability to load, manipulate and retrieve data from DLLs and other module resources
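When skimming an export table, the prefixes listed above can be used to sort functions into rough groups. The sketch below is one hypothetical way to do that. It requires an uppercase letter after the prefix so that names which merely begin with the same letters are not misclassified; that rule is a heuristic of this example, not a documented convention.

```c
#include <ctype.h>
#include <stddef.h>
#include <string.h>

struct prefix_info {
    const char *prefix;
    const char *meaning;
};

/* Prefixes and meanings taken from the list above. */
static const struct prefix_info known_prefixes[] = {
    { "Rtl", "Run Time Library" },
    { "Csr", "Client Server Runtime" },
    { "Dbg", "debugging support" },
    { "Ldr", "loader" },
    { "Nt",  "native system call" },
    { "Zw",  "native system call" },
};

/* Returns a short description for the export's prefix, or NULL if
 * the name does not start with a known prefix followed by an
 * uppercase letter. */
const char *classify_export(const char *name)
{
    size_t i;
    size_t count = sizeof known_prefixes / sizeof known_prefixes[0];
    for (i = 0; i < count; i++) {
        size_t n = strlen(known_prefixes[i].prefix);
        if (strncmp(name, known_prefixes[i].prefix, n) == 0 &&
            isupper((unsigned char)name[n]))
            return known_prefixes[i].meaning;
    }
    return NULL;
}
```

For instance, `classify_export("RtlAllocateHeap")` yields "Run Time Library", while a C runtime export such as `memcpy` yields NULL.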

User Mode Versus Kernel Mode

Many functions, especially Run-time Library routines, are shared between ntdll.dll and ntoskrnl.exe. Most Native API functions, as well as other kernel-mode only functions exported from the kernel are useful for driver writers. As such, Microsoft provides documentation on many of the native API functions with the Microsoft Server 2003 Platform DDK. The DDK (Driver Development Kit) is available as a free download.

ntoskrnl.exe

This module is the Windows NT "Executive", providing all the functionality required by the native API, as well as the kernel itself, which is responsible for maintaining the machine state. By default, all interrupts and kernel calls are channeled through ntoskrnl in some way, making it the single most important program in Windows itself. Many of its functions are exported for use by device drivers, all of them with various prefixes in the same manner as NTDLL.

Win32K.sys

This module is the "Win32 Kernel" that sits on top of the lower-level, more primitive NTOSKRNL. WIN32K is responsible for the "look and feel" of Windows, and many portions of this code have remained largely unchanged since the Win9x versions. This module provides many of the specific instructions that cause USER and GDI to act the way they do. It is responsible for translating the API calls from the USER and GDI libraries into the pictures you see on the monitor.

Win64 API

With the advent of 64-bit processors, 64-bit software is a necessity. As a result, the Win64 API was created to utilize the new hardware. It is important to note that the format of many of the function calls is identical in Win32 and Win64, except for the size of pointers and of other data types that are specific to the 64-bit address space.
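The change in pointer size has a visible effect on structure layouts, which is worth keeping in mind when comparing 32-bit and 64-bit builds of the same program. The sketch below computes the field offsets of a hypothetical structure for a given pointer size, assuming natural alignment (each field aligned to its own size); the structure and helper names are invented for this example.

```c
#include <stddef.h>

struct layout {
    size_t flags_off;   /* offset of the 32-bit flags field */
    size_t ptr_off;     /* offset of the pointer field */
    size_t count_off;   /* offset of the 32-bit count field */
    size_t total_size;  /* size of the whole structure */
};

/* Round value up to the next multiple of alignment. */
static size_t align_up(size_t value, size_t alignment)
{
    return (value + alignment - 1) / alignment * alignment;
}

/* Lay out the hypothetical structure
 *     struct node { uint32_t flags; void *next; uint32_t count; };
 * for a target whose pointers are pointer_size bytes (4 or 8),
 * assuming natural alignment of every field. */
struct layout node_layout(size_t pointer_size)
{
    struct layout l;
    size_t off = 0;
    size_t max_align = pointer_size > 4 ? pointer_size : 4;

    l.flags_off = off;
    off += 4;
    off = align_up(off, pointer_size);  /* padding before the pointer */
    l.ptr_off = off;
    off += pointer_size;
    off = align_up(off, 4);
    l.count_off = off;
    off += 4;
    l.total_size = align_up(off, max_align);  /* tail padding */
    return l;
}
```

With 4-byte pointers the fields sit at offsets 0, 4 and 8 and the structure is 12 bytes. With 8-byte pointers they move to 0, 8 and 16 and the structure grows to 24 bytes, so the same source code produces different displacement constants in the disassembly.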

Windows Vista

Microsoft has released a new version of its Windows operating system, named "Windows Vista." Windows Vista may be better known by its development code-name "Longhorn." Microsoft claims that Vista has been written largely from the ground up, and therefore it can be assumed that there are fundamental differences between the Vista API and system architecture, and the APIs and architectures of previous Windows versions. Windows Vista was released January 30th, 2007.

Windows CE/Mobile, and other versions

Windows CE is the Microsoft offering for small devices. It largely uses the same Win32 API as the desktop systems, although it has a slightly different architecture. Some examples in this book may consider Windows CE.

"Non-Executable Memory"

Recent Windows service packs, starting with Windows XP Service Pack 2, implement a system known as "non-executable memory" (Data Execution Prevention, or DEP, in Microsoft's terminology), in which certain pages can be marked as "non-executable". The purpose of this system is to prevent some of the most common security holes by not allowing control to pass to code inserted into a memory buffer by an attacker. For instance, shellcode loaded into an overflowed text buffer cannot be executed, stopping the attack in its tracks. The effectiveness of this mechanism is yet to be seen, however.
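When reversing code that changes memory permissions, it helps to recognize the page protection constants at a glance. The sketch below redefines the basic protection values from the Windows headers so that it compiles on its own, and adds small predicates for them. The predicate names are hypothetical, and modifier bits above the low byte (such as guard-page flags) are ignored.

```c
#include <stdint.h>

/* Basic page protection values, mirroring the Windows headers. */
#define PAGE_NOACCESS          0x01u
#define PAGE_READONLY          0x02u
#define PAGE_READWRITE         0x04u
#define PAGE_WRITECOPY         0x08u
#define PAGE_EXECUTE           0x10u
#define PAGE_EXECUTE_READ      0x20u
#define PAGE_EXECUTE_READWRITE 0x40u
#define PAGE_EXECUTE_WRITECOPY 0x80u

/* All executable protections live in the upper nibble. */
int page_is_executable(uint32_t protect)
{
    return (protect & 0xF0u) != 0;
}

/* True for any protection that permits writing. */
int page_is_writable(uint32_t protect)
{
    return (protect & (PAGE_READWRITE | PAGE_WRITECOPY |
                       PAGE_EXECUTE_READWRITE |
                       PAGE_EXECUTE_WRITECOPY)) != 0;
}

/* Writable and executable at once: the combination that
 * non-executable memory is meant to make rare. */
int page_is_writable_and_executable(uint32_t protect)
{
    return page_is_executable(protect) && page_is_writable(protect);
}
```

A constant of 0x40 passed to a memory allocation or protection call is therefore a useful landmark: it often marks code that unpacks or generates instructions at run time.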

COM and Related Technologies

COM, and a whole slew of technologies that are either related to COM or are actually COM with a fancy name, is another factor to consider when reversing Windows binaries. COM, DCOM, COM+, ActiveX, OLE, MTS, and Windows DNA are all names for the same subject, or for subjects so similar that they may all be considered under the same heading. In short, COM is a method to export Object-Oriented Classes in a uniform, cross-platform and cross-language manner. In essence, COM is .NET, version 0 beta. Using COM, components written in many languages can export, import, instantiate, modify, and destroy objects defined in another file, most often a DLL. Although COM provides cross-platform (to some extent) and cross-language facilities, each COM object is compiled to a native binary, rather than to an intermediate format as in Java or .NET. As a result, COM does not require a virtual machine to execute such objects.

Due to the way that COM works, a lot of the methods and data structures exported by a COM component are difficult to perceive by simply inspecting the executable file. Matters are made worse if the creating programmer has used a library such as ATL to simplify their programming experience. Unfortunately for a reverse engineer, this reduces the contents of an executable into a "Sea of bits", with pointers and data structures everywhere.
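Much of the "sea of pointers" comes from the way COM objects are laid out in memory: the first field of an object is a pointer to a table of function pointers, and every method call goes through that table. The sketch below imitates this layout in plain C. It is a simplified model, not real COM: the `counter` type is invented, the interface query method that real COM interfaces place first in the table is omitted, and `release` does not free anything.

```c
#include <stddef.h>

struct counter;  /* hypothetical COM-style object */

/* Table of function pointers shared by all objects of the type. */
struct counter_vtbl {
    unsigned long (*add_ref)(struct counter *self);
    unsigned long (*release)(struct counter *self);
    int (*increment)(struct counter *self);
};

struct counter {
    const struct counter_vtbl *vtbl;  /* always the first field */
    unsigned long refs;               /* reference count */
    int value;
};

static unsigned long counter_add_ref(struct counter *self)
{
    return ++self->refs;
}

static unsigned long counter_release(struct counter *self)
{
    /* A real object would destroy itself when this reaches zero. */
    return --self->refs;
}

static int counter_increment(struct counter *self)
{
    return ++self->value;
}

static const struct counter_vtbl counter_vtable = {
    counter_add_ref,
    counter_release,
    counter_increment
};

/* Plays the role of a factory: wires the object to its table. */
void counter_init(struct counter *c)
{
    c->vtbl = &counter_vtable;
    c->refs = 1;
    c->value = 0;
}
```

A call such as `c.vtbl->increment(&c)` compiles to a load of the table pointer from the object, a load of the function pointer from a fixed offset in the table, and an indirect call. In a disassembly there is no symbol naming the target, only an offset, which is why recovering the table layout is usually the first step in reversing a COM component.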

Remote Procedure Calls (RPC)