Statistics/Numerical Methods/Numerics in Excel

The purpose of this paper is to evaluate the accuracy of MS Excel in terms of statistical procedures and to conclude whether the MS Excel should be used for (statistical) scientific purposes or not.

According to the literature, there are three main problematic areas for Excel if it is used for statistical calculations. These are

- probability distributions,

- univariate statistics, ANOVA and Estimations (both linear and non-linear)

- random number generation.

If the results of statistical packages are assessed, one should take into account that the acceptable accuracy of the results should be achieved in double precision (which means that a result is accepted as accurate if it possesses 15 accurate digits) given that the reliable algorithms are capable of delivering correct results in double precision, as well. If the reliable algorithms can not retrieve results in double precision, it is not fair to anticipate that the package (evaluated) should achieve double precision. Thus we can say that the correct way for evaluating the statistical packages is assessing the quality of underlying algorithm of statistical calculations rather than only counting the accurate digits of results. Besides, test problems must be reasonable which means they must be amenable to solution by known reliable algorithms. (McCullough & Wilson, 1999, S. 28)

In further sections, our judgement about the accuracy of MS Excel will base on certified values and tests. As basis we have Knüsel’s ELV software for probability distributions, StRD (Statistical Reference Datasets) for Univariate Statistics, ANOVA and Estimations and finally Marsaglia’s DIEHARD for Random Number Generation. Each of the tests and certified values will be explained in the corresponding sections.

Assessing Excel Results for Statistical Distributions[edit | edit source]

As we mentioned above our judgement about Excel’s calculations for probability distributions will base on Knüsel’s ELV Program which can compute probabilities and quantiles of some elementary statistical distributions. Using ELV, the upper and lower tail probabilities of all distributions are computed with six significant digits for probabilities as small as 10−100 and upper and lower quantiles are computed for all distributions for tail probabilities P with 10−12 ≤ P ≤ ½. (Knüsel, 2003, S.1)

In our benchmark Excel should display no inaccurate digits. If six digits are displayed, then all six digits should be correct. If the algorithm is only accurate to two digits, then only two digits should be displayed so as not to mislead the user (McCullough & Wilson, 2005, S. 1245)

In the following sub-sections the exact values in the tables are retrieved from Knüsel’s ELV software and the acceptable accuracy is in single precision, because even the best algorithms can not achieve 15 correct digits in most cases, if the probability distributions are issued.

Normal Distribution[edit | edit source]

- Excel Function:NORMDIST

- Parameters: mean = 0, variance = 1, x (critical value)

- Computes: the tail probability Pr X ≤ x, whereas X denotes a random variable with a standard normal distribution (with mean 0 and variance 1)

As we can see in table 1, Excel 97, 2000 and XP encounter problems and computes small probabilities in tail incorrectly (i.e for x = -8,3 or x = -8.2) However, this problem is fixed in Excel 2003 (Knüsel, 2005, S.446).

Inverse Normal Distribution[edit | edit source]

- Excel Function: NORMINV

- Parameters: mean = 0, variance = 1, p (probability for X < x)

- Computes: the x value (quantile)

X denotes a random variable with a standard normal distribution. In contrast to “NORMDIST” function issued in the last section, p is given and quantile is computed.

If used, Excel 97 prints out quantiles with 10 digits although none of these 10 digits may be correct if p is small. In Excel 2000 and XP, Microsoft tried to fix errors, although results are not sufficient (See table 2). However in Excel 2003 the problem is fixed entirely. (Knüsel, 2005, S.446)

Inverse Chi-Square Distribution[edit | edit source]

- Excel Function: CHIINV

- Parameters: p (probability for X > x), n (degrees of freedom)

- Computes: the x value (quantile)

X denotes a random variable with a chi-square distribution with n degrees of freedom.

Old Excel Versions: Although the old Excel versions show ten significant digits, only very few of them are accurate if p is small (See table 3). Even if p is not small, the accurate digits are not enough to say that Excel is sufficient for this distribution.

Excel 2003: Problem was fixed. (Knüsel, 2005, S.446)

Inverse F Distribution[edit | edit source]

- Excel Function: FINV

- Parameters: p (probability for X > x), n1, n2 (degrees of freedom)

- Computes: the x value (quantile)

X denotes a random variable with a F distribution with n1 and n2 degrees of freedom.

Old Excel Versions: Excel prints out x values with 7 or more significant digits although only one or two of these many digits are correct if p is small (See table 4).

Excel 2003: Problem fixed. (Knüsel, 2005, S.446)

Inverse t Distribution[edit | edit source]

- Excel Function: TINV

- Parameters: p (probability for |X| > x), n (degree of freedom)

- Computes: the x value (quantile)

X denotes a random variable with a t distribution with n degrees of freedom. Please note that the |X| value causes a 2 tailed computation. (lower tail & high tail)

Old Excel Versions: Excel prints out quantiles with 9 or more significant digits although only one or two of these many digits are correct if p is small (See table 5).

Excel 2003: Problem fixed. (Knüsel, 2005, S.446)

Poisson Distribution[edit | edit source]

- Excel Function: Poisson

- Parameters: λ (mean), k (number of cases)

- Computes: the tail probability Pr X ≤ k

X denotes a random variable with a Poisson distribution with given parameters.

Old Excel Versions: correctly computes very small probabilities but gives no result for central probabilities near the mean (in the range about 0.5). (See table 6)

Excel 2003: The central probabilities are fixed. However, inaccurate results in the tail. (See table 6)

The strange behaviour of Excel can be encountered for values λ150. (Knüsel, 1998, S.375) It fails even for probabilities in the central range between 0.01 and 0.99 and even for parameter values that cannot be judged as too extreme.

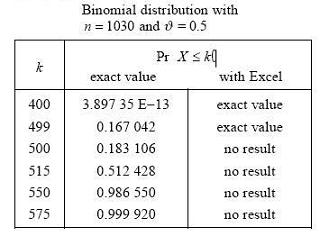

Binomial Distribution[edit | edit source]

- Excel Function: BINOMDIST

- Parameters: n (= number of trials) , υ(= probability for a success) , k(number of successes)

- Computes: the tail probability Pr X ≤ k

-X denotes a random variable with a binoamial distribution with given parameters

Old Excel Versions: As we see in table 7, old versions of Excel correctly computes very small probabilities but gives no result for central probabilities near the mean (same problem with Poisson distribuiton on old Excel versions)

Excel 2003: The central probabilities are fixed. However, inaccurate results in the tail. (Knüsel, 2005, S.446). (same problem with Poisson distribuiton on Excel 2003).

This strange behaviour of Excel can be encountered for values n > 1000. (Knüsel, 1998, S.375) It fails even for probabilities in the central range between 0.01 and 0.99 and even for parameter values that cannot be judged as too extreme.

Other problems[edit | edit source]

- Excel 97, 2000 and XP includes flaws by computing the hypergeometric distribution (HYPERGEOM). For some values (N > 1030) no result is retrieved. This is prevented on Excel 2003, but there is still no option to compute tail probabilities. So computation of Pr {X = k} is possible, but computation of Pr {X ≤ k} is not. (Knüsel, 2005, S.447)

- Function GAMMADIST for gamma distribution retrieves incorrect values on Excel 2003. (Knüsel, 2005, S.447-448)

- Also the function BETAINV for inverse beta distribution computes incorrect values on Excel 2003 (Knüsel, 2005, S. 448)

Assessing Excel Results for Univariate Statistics, ANOVA and Estimation (Linear & Non-Linear)[edit | edit source]

Our judgement about Excel’s calculations for univariate statistics, ANOVA and Estimation will base on StRD which is designed by Statistical Engineering Division of National Institute of Standards and Technology (NIST) to assist researchers in benchmarking statistical software packages explicitly. StRD has reference datasets (real-world and generated datasets) with certified computational results that enable the objective evaluation of statistical Software. It comprises four suites of numerical benchmarks for statistical software: univariate summary statistics, one way analysis of variance, linear regression and nonlinear regression and it includes several problems for each suite of tests. All problems have a difficulty level:low, average or high.

By assessing Excel results in this section we are going to use LRE (log relative error) which can be used as a score for accuracy of results of statistical packages. The number of correct digits in results can be calculated via log relative error. Please note that for double precision the computed LRE is in the range 0 - 15, because we can have max. 15 correct digits in double precision.

Formula LRE:

c: the correct answer (certified computational result) for a particular test problem

x: answer of Excel for the same problem

Univariate Statistics[edit | edit source]

- Excel Functions: - AVERAGE, STDEV, PEARSON (also CORREL)

- Computes (respectively): mean, standard deviation, correlation coefficient

Old Excel Versions: an unstable algorithm for calculation of the sample variance and the correlation coefficient is used. Even for the low difficulty problems (datasets with letter “l” in table 8) the old versions of Excel fail.

Excel 2003: Problem was fixed and the performance is acceptable. The accurate digits less than 15 don’t indicate an unsuccessful implementation because even the reliable algorithms can not retrieve 15 correct digits for these average and high difficulty problems (datasets with letters “a” and “h” in table 8) of StRD.

ONEWAY ANOVA[edit | edit source]

- Excel Function: Tools – Data Analysis – ANOVA: Single Factor (requires Analysis Toolpak)

- Computes: df, ss, ms, F-statistic

Since ANOVA produces many numerical results (such as df, ss, ms, F), here only the LRE for the final F-statistic is presented. Before assessing Excel’s performance one should consider that a reliable algorithm for one way Analysis of Variance can deliver 8-10 digits for the average difficulty problems and 4-5 digits for higher difficulty problems.

Old Excel Versions: Considering numerical solutions, delivering only a few digits of accuracy for difficult problems is not an evidence for bad software, but retrieving 0 accurate digits for average difficulty problems indicates bad software when calculating ANOVA. (McCullough & Wilson, 1999, S. 31). For that reason Excel versions prior than Excel 2003 has an acceptable performance only on low-difficulty problems. It retrieves zero accurate digits for difficult problems. Besides, negative results for “within group sum of squares” and “between group sum of squares” are the further indicators of a bad algorithm used for Excel. (See table 9)

Excel 2003: Problem was fixed (See table 9). The zero digits of accuracy for the Simon 9 test is no cause for concern, since this also occurs when reliable algorithms are employed. Therefore the performance is acceptable. (McCullough & Wilson, 2005, S. 1248)

Linear Regression[edit | edit source]

- Excel Function: LINEST

- Computes: All numerical results required by Linear Regression

Since LINEST produces many numerical results for linear regression, only the LRE for the coefficients and standard errors of coefficients are taken into account. Table 9 shows the lowest LRE values for each dataset as the weakest link in the chain in order to reflect the worst estimations (smallest -LRE and -LRE) made by Excel for each linear regression function.

Old Excel Versions: either doesn't check for near-singularity of the input matrix or checking it incorrectly, so the results for ill-conditioned Dataset “Filip (h)” include not a single correct digit. Actually, Excel should have refused the solution and commit a warning to user about the near singularity of data matrix. (McCullough & Wilson, 1999, S.32,33) . However, in this case, the user is mislead.

Excel 2003: Problem is fixed and Excel 2003 has an acceptable performance. (see table 10)

Non-Linear Regression[edit | edit source]

When solving nonlinear regression using Excel, it is possible to make choices about:

- method of derivative calculation: forward (default) or central numerical derivatives

- convergence tolerance (default=1.E-3)

- scaling (recentering) the variables

- method of solution (default – GRG2 quasi-Newton method)

Excel’s default parameters don’t always produce the best solutions always (like all other solvers). Therefore one needs to give different parameters and test the Excel-Solver for non-linear regression. In table 10 the columns A-B-C-D are combinations of different non-linear options. Because changing the 1st and 4th option doesn’t affect the result, only 2nd and 3rd parameters are changed for testing:

- A: Default estimation

- B: Convergence Tolerance = 1E -7

- C: Automatic Scaling

- D: Convergence Tolerance = 1E -7 & Automatic Scaling

In Table 11, the lowest LRE principle is applied to simplify the assessment. (like in linear reg.)

Results in table 11 are same for each Excel version (Excel 97, 2000, XP, 2003)

As we see in table 11, the non-linear option combination A produces 21 times, B 17 times, C 20 times and D 14 times “0” accurate digits. which indicates that the performance of Excel in this area is inadequate. Expecting to find all exact solutions for all problems with Excel is not fair, but if it is not able to find the result, it is expected to warn user and commit that the solution can not be calculated. Furthermore, one should emphasize that other statistical packages like SPSS, S-PLUS and SAS exhibit zero digit accuracy only few times (0 to 3) in these tests (McCullough & Wilson, 1999, S. 34).

Assessing Random Number Generator of Excel[edit | edit source]

Many statistical procedures employ random numbers and it is expected that the generated random numbers are really random. Only random number generators should be used that have solid theoretical properties. Additionally, statistical tests should be applied on samples generated and only generators whose output has successfully passed a battery of statistical tests should be used. (Gentle, 2003)

Based on the facts explained above we should assess the quality of Random Number Generation by:

- analysing the underlying algorithm for Random Number Generation.

- analysing the generators output stream. There are many alternatives to test the output of a RNG. One can evaluate the generated output using static tests in which the generation order is not important. These tests are goodness of fit tests. The second way of evaluating the output stream is running a dynamic test on generator, whereas the generation order of the numbers is important.

Excel’s RNG – Underlying algorithm[edit | edit source]

The objective of random number generation is to produce samples any given size that are indistinguishable from samples of the same size from a U(0,1) distribution. (Gentle, 2003) For this purpose there are different algorithms to use. Excel’s algorithm for random number generation is Wichmann–Hill algorithm. Wichmann–Hill is a useful RNG algorithm for common applications, but it is obsolete for modern needs (McCullough & Wilson, 2005, S. 1250). The formula for this random number generator is defined as follows:

Wichmann–Hill is a congruential generator which means that it is a recursive aritmethical RNG as we see in the formula above. It is a combination of three other linear congruential generator and requires three seeds: .

Period, in terms of random number generation, is the number of calls that can be made to the RNG before it begins to repeat. For that reason, having a long period is a quality measure for random number generators. It is essential that the period of the generator be larger than the number of random numbers to be used. Modern applications are increasingly demanding longer and longer sequences of random numbers (i.e for using in Monte-Carlo simulations) (Gentle, 2003)

The lowest acceptable period for a good RNG is and the period of Wichmann-Hill RNG is 6.95E+12 (≈ ). In addition to this unacceptable performance, Microsoft claims that the period of Wichmann-Hill RNG is 10E+13 Even if Excel’s RNG has a period of 10E+13, it is still not sufficient to be an acceptable random number generator because this value is also less than . (McCullough & Wilson, 2005, S. 1250)

Furthermore it is known that RNG of Excel produces negative values after the RNG executed many times. However a correct implementation of a Wichmann-Hill Random Number Generator should produce only values between 0 and 1. (McCullough & Wilson, 2005, S. 1249)

Excel’s RNG – The Output Stream[edit | edit source]

As we discussed above, it is not sufficient to discuss only the underlying algorithm of a random number generation. One needs also some tests on output stream of a random number generator while assessing the quality of this random number generator. So a Random Number Generator should produce output which passes some tests for randomness. Such a battery of tests, called DIEHARD, has been prepared by Marsaglia. A good RNG should pass almost all of the tests but as we can see in table 12 Excel can pass only 11 of them (7 failure), although Microsoft has declaired Wichmann–Hill Algorithm is implemented for Excel’s RNG. However, we know that Wichmann-Hill is able to pass 16 tests from DIEHARD (McCullough & Wilson, 1999, S. 35).

Due to reasons explained in previous and this section we can say that Excel’s performance is inadequate (because of period length, incorrect implementation Wichmann Hill Algorithm, which is already obsolete, DIEHARD test results)

Conclusion[edit | edit source]

Old versions of Excel (Excel 97, 2000, XP) :

- shows poor performance on following distributions: Normal, F, t, Chi Square, Binomial, Poisson, Hypergeometric

- retrieves inadequate results on following calculations: Univariate statistics, ANOVA, linear regression, non-linear regression

- has an unacceptable random number generator

For those reasons, we can say that use of Excel 97, 2000, XP for (statistical) scientific purposes should be avoided.

Although several bugs are fixed in Excel 2003, still use of Excel for (statistical) scientific purposes should be avoided because it:

- has a poor performance on following distributions: Binomial, Poisson, Gamma, Beta

- retrieves inadequate results for non-linear regression

- has an obsolete random number generator.

References[edit | edit source]

- Gentle J.E. (2003) Random number generation and Monte Carlo methods 2nd edition. New York Springer Verlag

- Knüsel, L. (2003) Computation of Statistical Distributions Documentation of the Program ELV Second Edition. http://www.stat.uni-muenchen.de/~knuesel/elv/elv_docu.pdf Retrieved [13 November 2005]

- Knüsel, L. (1998). On the Accuracy of the Statistical Distributions in Microsoft Excel 97. Computational Statistics and Data Analysis (CSDA), Vol. 26, 375-377.

- Knüsel, L. (2002). On the Reliability of Microsoft Excel XP for statistical purposes. Computational Statistics and Data Analysis (CSDA), Vol. 39, 109-110.

- Knüsel, L. (2005). On the Accuracy of Statistical Distributions in Microsoft Excel 2003. Computational Statistics and Data Analysis (CSDA), Vol. 48, 445-449.

- McCullough, B.D. & Wilson B. (2005). On the accuracy of statistical procedures in Microsoft Excel 2003. Computational Statistics & Data Analysis (CSDA), Vol. 49, 1244 – 1252.

- McCullough, B.D. & Wilson B. (1999). On the accuracy of statistical procedures in Microsoft Excel 97. Computational Statistics & Data Analysis (CSDA), Vol. 31, 27– 37.

- PC Magazin, April 6, 2004, p.71*