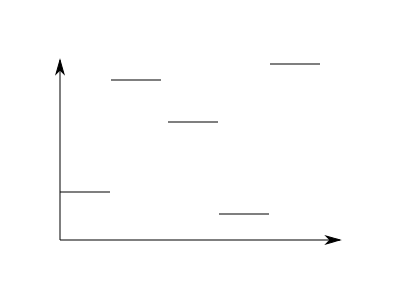

Before we dive deeply into the chapter, let's first motivate the notion of a test function. Let's consider two functions which are piecewise constant on the intervals $[0,1),[1,2),[2,3),[3,4),[4,5)$ and zero elsewhere, such as the two functions pictured above.

Let's call the left function $f_1$ and the right function $f_2$.

Of course we can easily see that the two functions are different; they differ on the interval $[4,5)$. However, suppose that our only way of finding out anything about either function is to evaluate the integrals

$$\int_{\mathbb{R}} \varphi(x) f_1(x)\,dx \quad\text{and}\quad \int_{\mathbb{R}} \varphi(x) f_2(x)\,dx$$

for functions $\varphi$ from a given set of functions $\mathcal{X}$.

We proceed by choosing $\mathcal{X}$ cleverly enough that five evaluations of each integral suffice to show that $f_1 \neq f_2$. To do so, we first introduce characteristic functions. Let $A \subseteq \mathbb{R}$ be any set. The *characteristic function* of $A$ is defined as

$$\chi_A(x) := \begin{cases} 1 & x \in A\\ 0 & x \notin A \end{cases}$$

With this definition, we choose the set of functions $\mathcal{X}$ as

$$\mathcal{X} := \{\chi_{[0,1)}, \chi_{[1,2)}, \chi_{[2,3)}, \chi_{[3,4)}, \chi_{[4,5)}\}.$$

It is easy to see (see exercise 1) that for $n \in \{1,2,3,4,5\}$ the expression

$$\int_{\mathbb{R}} \chi_{[n-1,n)}(x) f_1(x)\,dx$$

equals the value of $f_1$ on the interval $[n-1,n)$, and the same holds for $f_2$. Both functions are uniquely determined by their values on the intervals $[n-1,n)$, $n \in \{1,2,3,4,5\}$, because they are zero everywhere else. This gives the following equality test:

$$f_1 = f_2 \iff \forall \varphi \in \mathcal{X}: \int_{\mathbb{R}} \varphi(x) f_1(x)\,dx = \int_{\mathbb{R}} \varphi(x) f_2(x)\,dx$$

This obviously needs five evaluations of each integral, as $\#\mathcal{X} = 5$.
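The following Python sketch makes the equality test concrete. The function values of $f_1$ and $f_2$ are hypothetical and were not read off the plot. The integrals are approximated with a midpoint rule rather than computed exactly, and the helper names (`chi`, `piecewise_constant`, `integral`, `equal_by_testing`) are illustrative only.

```python
import math

# Hypothetical data: f2 differs from f1 only on [4, 5), as in the text.

def chi(a, b):
    """Characteristic function of the half-open interval [a, b)."""
    return lambda x: 1.0 if a <= x < b else 0.0


def piecewise_constant(values):
    """Function equal to values[k] on [k, k+1) for k = 0..4 and zero elsewhere."""
    def f(x):
        return values[math.floor(x)] if 0 <= x < len(values) else 0.0
    return f


def integral(h, a=-1.0, b=6.0, n=7_000):
    """Midpoint-rule approximation of the integral of h over [a, b]
    (h is assumed to vanish outside [a, b])."""
    dx = (b - a) / n
    return sum(h(a + (i + 0.5) * dx) for i in range(n)) * dx


f1 = piecewise_constant([1.0, 3.0, 2.0, 2.5, 1.0])
f2 = piecewise_constant([1.0, 3.0, 2.0, 2.5, 0.5])

# The set X of test functions chi_[n-1, n), n = 1, ..., 5, so #X = 5.
X = [chi(n - 1, n) for n in range(1, 6)]


def equal_by_testing(f, g, tol=1e-9):
    """Compare f and g only through the integrals of phi*f and phi*g for phi in X."""
    return all(
        abs(integral(lambda x: phi(x) * f(x)) - integral(lambda x: phi(x) * g(x))) < tol
        for phi in X
    )


print(equal_by_testing(f1, f1))  # True
print(equal_by_testing(f1, f2))  # False
```

Only the five integrals against the elements of $\mathcal{X}$ are used. This is exactly the information the equality test allows.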

Since we used the functions in $\mathcal{X}$ to test $f_1$ and $f_2$, we call them *test functions*. What we ask ourselves now is whether this notion generalises from functions like $f_1$ and $f_2$, which are piecewise constant on certain intervals and zero everywhere else, to continuous functions.

In order to write down the definition of a bump function more concisely, we need the following two definitions:

Now we are ready to give a brief definition of a bump function:

These two properties make the function really look like a bump, as the following example shows:

*Figure: the standard mollifier $\eta$ in dimension $d=1$.*

Example 3.4: The standard mollifier $\eta$, given by

$$\eta:\mathbb{R}^d\to\mathbb{R},\quad \eta(x) = \frac{1}{c}\begin{cases} e^{-\frac{1}{1-\|x\|^2}} & \text{if } \|x\| < 1\\ 0 & \text{if } \|x\| \geq 1 \end{cases},$$

where

$$c := \int_{B_1(0)} e^{-\frac{1}{1-\|x\|^2}}\,dx,$$

is a bump function (see exercise 2).
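The next sketch evaluates the standard mollifier numerically. It assumes $d = 1$, so that $B_1(0) = (-1,1)$, and approximates the constant $c$ with a midpoint rule. The names `bump_unnormalised`, `midpoint` and `C` are illustrative.

```python
import math

# Sketch for d = 1 only; c is approximated numerically, not computed exactly.

def bump_unnormalised(x):
    """exp(-1 / (1 - x^2)) for |x| < 1 and 0 otherwise."""
    if abs(x) < 1.0:
        return math.exp(-1.0 / (1.0 - x * x))
    return 0.0


def midpoint(h, a, b, n=20_000):
    """Midpoint-rule approximation of the integral of h over [a, b]."""
    dx = (b - a) / n
    return sum(h(a + (i + 0.5) * dx) for i in range(n)) * dx


# c = integral of the unnormalised bump over B_1(0) = (-1, 1).
C = midpoint(bump_unnormalised, -1.0, 1.0)


def eta(x):
    """Standard mollifier in one dimension."""
    return bump_unnormalised(x) / C


print(C)                          # the normalising constant c for d = 1
print(midpoint(eta, -1.0, 1.0))   # integral of eta, 1 up to rounding
print(eta(0.0), eta(0.99), eta(1.0))
```

The printed values show what makes $\eta$ look like a bump. It is largest at $0$, positive but tiny near the edge of the unit ball, and exactly $0$ from $|x| = 1$ on.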

As with bump functions, in order to write down the definition of Schwartz functions concisely, we first need two helpful definitions.

Now we are ready to define a Schwartz function.

Definition 3.7 :

We call $\phi:\mathbb{R}^d\to\mathbb{R}$ a *Schwartz function* iff the following two conditions are satisfied:

1. $\phi \in \mathcal{C}^\infty(\mathbb{R}^d)$
2. $\forall \alpha,\beta \in \mathbb{N}_0^d: \|x^\alpha \partial_\beta \phi\|_\infty < \infty$

By $x^\alpha \partial_\beta \phi$ we mean the function $x \mapsto x^\alpha \partial_\beta \phi(x)$.

*Figure: graph of $f(x,y) = e^{-x^2-y^2}$.*

Example 3.8 :

The function $f:\mathbb{R}^2\to\mathbb{R}$, $f(x,y) = e^{-x^2-y^2}$ is a Schwartz function.
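Example 3.8 can also be checked numerically. Since $f(x,y) = e^{-x^2}e^{-y^2}$, every quantity $x^\alpha \partial_\beta f$ factorises into a function of $x$ times a function of $y$. Its supremum over $\mathbb{R}^2$ is therefore the product of two one-dimensional suprema.

The sketch below makes several assumptions. It uses NumPy. It represents $\frac{d^k}{dx^k} e^{-x^2}$ as $p_k(x)\,e^{-x^2}$ with a polynomial $p_k$ built by the recursion $p_{k+1} = p_k' - 2x\,p_k$. It also evaluates on the finite grid $[-20, 20]$. A grid can only suggest finiteness; it does not prove it.

```python
import numpy as np
from numpy.polynomial import Polynomial

# Grid on which the suprema are estimated (an assumption: outside [-20, 20]
# the terms below are negligibly small because of the factor exp(-x^2)).
GRID = np.linspace(-20.0, 20.0, 400_001)


def derivative_factor(beta):
    """Polynomial p with (d/dx)^beta exp(-x^2) = p(x) * exp(-x^2)."""
    p = Polynomial([1.0])
    for _ in range(beta):
        # (p(x) e^{-x^2})' = (p'(x) - 2x p(x)) e^{-x^2}
        p = p.deriv() - Polynomial([0.0, 2.0]) * p
    return p


def sup_1d(alpha, beta):
    """Grid estimate of sup_x |x^alpha (d/dx)^beta exp(-x^2)|."""
    values = GRID**alpha * derivative_factor(beta)(GRID) * np.exp(-GRID**2)
    return float(np.max(np.abs(values)))


def sup_2d(alpha, beta):
    """Estimate of ||x^alpha d_beta f||_inf for multi-indices alpha, beta in N_0^2."""
    return sup_1d(alpha[0], beta[0]) * sup_1d(alpha[1], beta[1])


for alpha, beta in [((0, 0), (0, 0)), ((1, 0), (0, 1)), ((3, 2), (2, 1))]:
    print(alpha, beta, sup_2d(alpha, beta))
```

Every printed value is finite. The polynomial growth of $x^\alpha$ and of $p_\beta$ is beaten by the decay of $e^{-x^2}$, and this is the mechanism behind the example.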

Theorem 3.9 :

Every bump function is also a Schwartz function.

This means for example that the standard mollifier is a Schwartz function.

Proof :

Let $\varphi$ be a bump function. Then, by definition of a bump function, $\varphi \in \mathcal{C}^\infty(\mathbb{R}^d)$, so the first condition of definition 3.7 holds. Since $\operatorname{supp}\varphi$ is compact, we can choose $R>0$ such that $\operatorname{supp}\varphi \subseteq \overline{B_R(0)}$, as in $\mathbb{R}^d$ a set is compact if and only if it is closed and bounded. Further, for arbitrary $\alpha,\beta \in \mathbb{N}_0^d$ we have

$$\begin{aligned}
\|x^\alpha \partial_\beta \varphi\|_\infty &:= \sup_{x\in\mathbb{R}^d} |x^\alpha \partial_\beta \varphi(x)| && \\
&= \sup_{x\in\overline{B_R(0)}} |x^\alpha \partial_\beta \varphi(x)| && \operatorname{supp}\varphi \subseteq \overline{B_R(0)}\\
&= \sup_{x\in\overline{B_R(0)}} \left(|x^\alpha|\,|\partial_\beta \varphi(x)|\right) && \text{rules for absolute value}\\
&\leq \sup_{x\in\overline{B_R(0)}} \left(R^{|\alpha|}\,|\partial_\beta \varphi(x)|\right) && \forall i\in\{1,\ldots,d\},\ (x_1,\ldots,x_d)\in\overline{B_R(0)}: |x_i| \leq R\\
&< \infty && \text{Extreme value theorem}
\end{aligned}$$

$\Box$
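The key estimate in the proof above is $\sup |x^\alpha \partial_\beta \varphi| \leq R^{|\alpha|} \sup |\partial_\beta \varphi|$. The sketch below checks it numerically under three assumptions. The dimension is $d = 1$. The function $\varphi$ is the unnormalised standard mollifier, with $R = 1$. Only $\beta \in \{0, 1\}$ is tested, using the hand-computed derivative $\frac{d}{dx} e^{-1/(1-x^2)} = e^{-1/(1-x^2)} \cdot \frac{-2x}{(1-x^2)^2}$.

```python
import numpy as np

R = 1.0  # supp(phi) = [-1, 1] lies in the closed ball of radius 1
XS = np.linspace(-3.0, 3.0, 600_001)


def phi(x):
    """Unnormalised standard mollifier in one dimension (vectorised)."""
    out = np.zeros_like(x)
    inside = np.abs(x) < 1.0
    out[inside] = np.exp(-1.0 / (1.0 - x[inside] ** 2))
    return out


def phi_prime(x):
    """Derivative of phi: exp(-1/(1 - x^2)) * (-2x / (1 - x^2)^2) for |x| < 1."""
    out = np.zeros_like(x)
    inside = np.abs(x) < 1.0
    xi = x[inside]
    out[inside] = np.exp(-1.0 / (1.0 - xi**2)) * (-2.0 * xi / (1.0 - xi**2) ** 2)
    return out


def check_estimate(max_alpha=6):
    """Return (alpha, beta, sup|x^alpha d_beta phi|, R^alpha sup|d_beta phi|) rows."""
    rows = []
    for beta, values in ((0, phi(XS)), (1, phi_prime(XS))):
        sup_deriv = np.max(np.abs(values))
        for alpha in range(max_alpha + 1):
            lhs = np.max(np.abs(XS**alpha * values))
            rows.append((alpha, beta, lhs, R**alpha * sup_deriv))
    return rows


for alpha, beta, lhs, rhs in check_estimate():
    print(f"alpha={alpha} beta={beta}: {lhs:.4f} <= {rhs:.4f}")
```

The grid deliberately extends beyond the support. There the factor $x^\alpha$ is large, but it is multiplied by zero, which is why restricting the supremum to $\overline{B_R(0)}$ is harmless.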

Now we define what convergence of a sequence of bump (Schwartz) functions to a bump (Schwartz) function means.

Definition 3.11 :

We say that the sequence of Schwartz functions $(\phi_i)_{i\in\mathbb{N}}$ converges to $\phi$ iff the following condition is satisfied:

$$\forall \alpha,\beta \in \mathbb{N}_0^d: \|x^\alpha \partial_\beta \phi_i - x^\alpha \partial_\beta \phi\|_\infty \to 0,\quad i\to\infty$$

Theorem 3.12 :

Let $(\varphi_i)_{i\in\mathbb{N}}$ be a sequence of bump functions. If $\varphi_i \to \varphi$ with respect to the notion of convergence for bump functions, then also $\varphi_i \to \varphi$ with respect to the notion of convergence for Schwartz functions.

Proof :

Let $O \subseteq \mathbb{R}^d$ be open, and let $(\varphi_l)_{l\in\mathbb{N}}$ be a sequence in $\mathcal{D}(O)$ such that $\varphi_l \to \varphi \in \mathcal{D}(O)$ with respect to the notion of convergence of $\mathcal{D}(O)$. Let $K \subset \mathbb{R}^d$ be the compact set in which all the $\operatorname{supp}\varphi_l$ are contained. Then also $\operatorname{supp}\varphi \subseteq K$. Otherwise, since $K$ is closed, $\varphi$ would take a value $c \in \mathbb{R}\setminus\{0\}$ at some point outside $K$, where all $\varphi_l$ vanish. Then $\|\varphi_l - \varphi\|_\infty \geq |c|$ for all $l$, which contradicts $\varphi_l \to \varphi$.

In $\mathbb{R}^d$, compact sets are bounded, so we can find $R>0$ such that $K \subset B_R(0)$. Hence, for arbitrary $\alpha,\beta \in \mathbb{N}_0^d$,

$$\begin{aligned}
\|x^\alpha \partial_\beta \varphi_l - x^\alpha \partial_\beta \varphi\|_\infty &= \sup_{x\in\mathbb{R}^d} \left|x^\alpha \partial_\beta \varphi_l(x) - x^\alpha \partial_\beta \varphi(x)\right| && \text{definition of the supremum norm}\\
&= \sup_{x\in B_R(0)} \left|x^\alpha \partial_\beta \varphi_l(x) - x^\alpha \partial_\beta \varphi(x)\right| && \text{as } \operatorname{supp}\varphi_l, \operatorname{supp}\varphi \subseteq K \subset B_R(0)\\
&\leq R^{|\alpha|} \sup_{x\in B_R(0)} \left|\partial_\beta \varphi_l(x) - \partial_\beta \varphi(x)\right| && \forall i\in\{1,\ldots,d\},\ (x_1,\ldots,x_d)\in\overline{B_R(0)}: |x_i| \leq R\\
&= R^{|\alpha|} \sup_{x\in\mathbb{R}^d} \left|\partial_\beta \varphi_l(x) - \partial_\beta \varphi(x)\right| && \text{as } \operatorname{supp}\varphi_l, \operatorname{supp}\varphi \subseteq K \subset B_R(0)\\
&= R^{|\alpha|} \left\|\partial_\beta \varphi_l - \partial_\beta \varphi\right\|_\infty && \text{definition of the supremum norm}\\
&\to 0,\quad l\to\infty && \text{since } \varphi_l \to \varphi \text{ in } \mathcal{D}(O)
\end{aligned}$$

Therefore the sequence converges with respect to the notion of convergence for Schwartz functions.

$\Box$
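Theorem 3.12 can be illustrated with a concrete convergent sequence. The following sketch rests on several assumptions. It works in $d=1$ with the hypothetical sequence $\varphi_l(x) = \varphi(x - 1/l)$, where $\varphi$ is the unnormalised standard mollifier. All supports then lie in $K = [-1, 2] \subset B_R(0)$ with $R = 2.5$. Only $\beta = 0$ is checked, on a finite grid.

```python
import numpy as np

R = 2.5  # K = [-1, 2] lies in B_R(0)
XS = np.linspace(-3.0, 3.0, 600_001)


def phi(x):
    """Unnormalised standard mollifier in one dimension (vectorised)."""
    out = np.zeros_like(x)
    inside = np.abs(x) < 1.0
    out[inside] = np.exp(-1.0 / (1.0 - x[inside] ** 2))
    return out


def seminorm_of_difference(l, alpha):
    """Grid estimate of ||x^alpha (phi_l - phi)||_inf with phi_l(x) = phi(x - 1/l)."""
    diff = phi(XS - 1.0 / l) - phi(XS)
    return float(np.max(np.abs(XS**alpha * diff)))


for l in (1, 10, 100, 1000):
    row = [seminorm_of_difference(l, alpha) for alpha in range(4)]
    print(l, ["%.2e" % v for v in row])
```

Each column shrinks as $l$ grows, and each entry stays below $R^{|\alpha|}$ times the $\alpha = 0$ entry of its row. This is the inequality used in the proof.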

In this section, we want to show that we can test equality of continuous functions $f,g$ by evaluating the integrals

$$\int_{\mathbb{R}^d} f(x)\varphi(x)\,dx \quad\text{and}\quad \int_{\mathbb{R}^d} g(x)\varphi(x)\,dx$$

for all $\varphi \in \mathcal{D}(O)$. Consequently, evaluating these integrals for all $\varphi \in \mathcal{S}(\mathbb{R}^d)$ also suffices, as $\mathcal{D}(O) \subset \mathcal{S}(\mathbb{R}^d)$ by theorem 3.9.

Before we can show this, we need a modified mollifier that depends on a parameter, and two lemmas about that modified mollifier.

Definition 3.13 :

For $R \in \mathbb{R}_{>0}$, we define

$$\eta_R:\mathbb{R}^d\to\mathbb{R},\quad \eta_R(x) = \eta\left(\frac{x}{R}\right)\Big/R^d.$$

Lemma 3.14 :

Let $R \in \mathbb{R}_{>0}$. Then $\operatorname{supp}\eta_R = \overline{B_R(0)}$.

Proof :

From the definition of $\eta$ it follows that $\operatorname{supp}\eta = \overline{B_1(0)}$.

Further, for $R \in \mathbb{R}_{>0}$ we have

$$\begin{aligned}
\frac{x}{R} \in \overline{B_1(0)} &\Leftrightarrow \left\|\frac{x}{R}\right\| \leq 1\\
&\Leftrightarrow \|x\| \leq R\\
&\Leftrightarrow x \in \overline{B_R(0)}
\end{aligned}$$

Therefore, and since $x \in \operatorname{supp}\eta_R \Leftrightarrow \frac{x}{R} \in \operatorname{supp}\eta$, we have:

$$x \in \operatorname{supp}\eta_R \Leftrightarrow x \in \overline{B_R(0)}$$

$\Box$

In order to prove the next lemma, we need the following theorem from integration theory:

Theorem 3.15 : (Multi-dimensional integration by substitution)

If $O,U \subseteq \mathbb{R}^d$ are open, $\psi: U\to O$ is a diffeomorphism and $f: O\to\mathbb{R}$ is integrable, then

$$\int_O f(x)\,dx = \int_U f(\psi(x))\,|\det J_\psi(x)|\,dx.$$

We will omit the proof, as understanding it is not very important for understanding this wikibook.
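Here is a worked instance of theorem 3.15: the special case that the proof of lemma 3.16 relies on. Take $O = U = \mathbb{R}^d$ and the linear diffeomorphism $\psi(x) = Rx$ with $R > 0$. Its Jacobian matrix is $R$ times the identity matrix, so $|\det J_\psi(x)| = R^d$. For $f(y) = \eta(y/R)/R^d$ the theorem then gives

$$\int_{\mathbb{R}^d} \eta\left(\frac{y}{R}\right)\frac{1}{R^d}\,dy = \int_{\mathbb{R}^d} \eta\left(\frac{Rx}{R}\right)\frac{1}{R^d}\,R^d\,dx = \int_{\mathbb{R}^d} \eta(x)\,dx.$$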

Lemma 3.16 :

Let $R \in \mathbb{R}_{>0}$. Then

$$\int_{\mathbb{R}^d} \eta_R(x)\,dx = 1.$$

Proof :

$$\begin{aligned}
\int_{\mathbb{R}^d} \eta_R(x)\,dx &= \int_{\mathbb{R}^d} \eta\left(\frac{x}{R}\right)\Big/R^d\,dx && \text{Def. of } \eta_R\\
&= \int_{\mathbb{R}^d} \eta(x)\,dx && \text{integration by substitution using } x\mapsto Rx\\
&= \int_{B_1(0)} \eta(x)\,dx && \text{Def. of } \eta\\
&= \frac{\int_{B_1(0)} e^{-\frac{1}{1-\|x\|^2}}\,dx}{\int_{B_1(0)} e^{-\frac{1}{1-\|x\|^2}}\,dx} && \text{Def. of } \eta\\
&= 1
\end{aligned}$$

$\Box$
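Lemmas 3.14 and 3.16 can be checked numerically in one dimension. The sketch below assumes $d = 1$, so $\eta_R(x) = \eta(x/R)/R$. The constant $c$ is approximated with a midpoint rule, and the names are illustrative. By lemma 3.14 it suffices to integrate $\eta_R$ over $[-R, R]$.

```python
import math

# Sketch for d = 1; c is approximated numerically.

def bump_unnormalised(t):
    """exp(-1 / (1 - t^2)) for |t| < 1 and 0 otherwise."""
    return math.exp(-1.0 / (1.0 - t * t)) if abs(t) < 1.0 else 0.0


def midpoint(h, a, b, n=20_000):
    """Midpoint-rule approximation of the integral of h over [a, b]."""
    dx = (b - a) / n
    return sum(h(a + (i + 0.5) * dx) for i in range(n)) * dx


C = midpoint(bump_unnormalised, -1.0, 1.0)  # normalising constant c for d = 1


def eta(t):
    return bump_unnormalised(t) / C


def eta_R(x, R):
    """Definition 3.13 in one dimension: eta(x / R) / R."""
    return eta(x / R) / R


for R in (0.1, 1.0, 5.0):
    total = midpoint(lambda x: eta_R(x, R), -R, R)  # lemma 3.16: should be 1
    print(R, total, eta_R(0.999 * R, R) > 0.0, eta_R(1.001 * R, R) == 0.0)
```

Shrinking $R$ makes $\eta_R$ taller and narrower but leaves its integral at $1$. This is exactly the property the proof of theorem 3.17 uses.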

Now we are ready to prove the ‘testing’ property of test functions:

Theorem 3.17 :

Let $f,g:\mathbb{R}^d\to\mathbb{R}$ be continuous. If

$$\forall \varphi \in \mathcal{D}(O): \int_{\mathbb{R}^d} \varphi(x) f(x)\,dx = \int_{\mathbb{R}^d} \varphi(x) g(x)\,dx,$$

then $f = g$.

Proof :

Let $x \in \mathbb{R}^d$ be arbitrary, and let $\epsilon \in \mathbb{R}_{>0}$. Since $f$ is continuous, there exists a $\delta \in \mathbb{R}_{>0}$ such that

$$\forall y \in \overline{B_\delta(x)}: |f(x) - f(y)| < \epsilon.$$

Then we have

$$\begin{aligned}
\left|f(x) - \int_{\mathbb{R}^d} f(y)\eta_\delta(x-y)\,dy\right| &= \left|\int_{\mathbb{R}^d} (f(x)-f(y))\,\eta_\delta(x-y)\,dy\right| && \text{lemma 3.16}\\
&\leq \int_{\mathbb{R}^d} |f(x)-f(y)|\,\eta_\delta(x-y)\,dy && \text{triangle ineq. for the } \textstyle\int \text{ and } \eta_\delta \geq 0\\
&= \int_{\overline{B_\delta(x)}} |f(x)-f(y)|\,\eta_\delta(x-y)\,dy && \text{lemma 3.14}\\
&\leq \int_{\overline{B_\delta(x)}} \epsilon\,\eta_\delta(x-y)\,dy && \text{monotonicity of the } \textstyle\int\\
&\leq \epsilon && \text{lemma 3.16 and } \eta_\delta \geq 0
\end{aligned}$$

The same estimate holds for every $\delta' \in (0,\delta]$, since $\overline{B_{\delta'}(x)} \subseteq \overline{B_\delta(x)}$. Therefore,

$$\int_{\mathbb{R}^d} f(y)\eta_\delta(x-y)\,dy \to f(x),\quad \delta\to 0.$$

Analogously,

$$\int_{\mathbb{R}^d} g(y)\eta_\delta(x-y)\,dy \to g(x),\quad \delta\to 0.$$

By assumption, we have

$$\forall \delta \in \mathbb{R}_{>0}: \int_{\mathbb{R}^d} g(y)\eta_\delta(x-y)\,dy = \int_{\mathbb{R}^d} f(y)\eta_\delta(x-y)\,dy.$$

As limits in the reals are unique, it follows that $f(x) = g(x)$. Since $x \in \mathbb{R}^d$ was arbitrary, we obtain $f = g$.

$\Box$
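The heart of the proof of theorem 3.17 is that the mollified values $\int f(y)\eta_\delta(x-y)\,dy$ approach $f(x)$ as $\delta \to 0$. The sketch below shows this for two continuous functions chosen only for illustration: $f(y) = |y|$ at $x = 0$ and $f = \cos$ at $x = 0.3$. It assumes $d = 1$ and approximates all integrals with a midpoint rule. By lemma 3.14 the integrand vanishes outside $[x-\delta, x+\delta]$, so only that interval is integrated over.

```python
import math

# One-dimensional sketch; integrals are approximated numerically.

def bump_unnormalised(t):
    """exp(-1 / (1 - t^2)) for |t| < 1 and 0 otherwise."""
    return math.exp(-1.0 / (1.0 - t * t)) if abs(t) < 1.0 else 0.0


def midpoint(h, a, b, n=20_000):
    """Midpoint-rule approximation of the integral of h over [a, b]."""
    dx = (b - a) / n
    return sum(h(a + (i + 0.5) * dx) for i in range(n)) * dx


C = midpoint(bump_unnormalised, -1.0, 1.0)  # normalising constant c for d = 1


def mollify_at(f, x, delta):
    """Approximate int f(y) eta_delta(x - y) dy with eta_delta(z) = eta(z / delta) / delta."""
    integrand = lambda y: f(y) * bump_unnormalised((x - y) / delta) / (C * delta)
    return midpoint(integrand, x - delta, x + delta)


for delta in (1.0, 0.1, 0.01):
    err_abs = abs(mollify_at(abs, 0.0, delta) - abs(0.0))
    err_cos = abs(mollify_at(math.cos, 0.3, delta) - math.cos(0.3))
    print(delta, err_abs, err_cos)
```

For $f(y) = |y|$ we have $|f(0) - f(y)| \leq \delta$ on $\overline{B_\delta(0)}$. The proof therefore predicts an error of at most $\delta$, and the printed errors respect this bound.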

Remark 3.18 :

Let $f,g:\mathbb{R}^d\to\mathbb{R}$ be continuous. If

$$\forall \varphi \in \mathcal{S}(\mathbb{R}^d): \int_{\mathbb{R}^d} \varphi(x) f(x)\,dx = \int_{\mathbb{R}^d} \varphi(x) g(x)\,dx,$$

then $f = g$.

Proof :

This follows because all bump functions are Schwartz functions (theorem 3.9), so the hypothesis of theorem 3.17 is met.

$\Box$

Exercise 1: Let $b \in \mathbb{R}$ and let $f:\mathbb{R}\to\mathbb{R}$ be constant on the interval $[b-1,b)$. Prove that $\forall y \in [b-1,b): \int_{\mathbb{R}} \chi_{[b-1,b)}(x) f(x)\,dx = f(y)$.

Exercise 2: Prove that the standard mollifier as defined in example 3.4 is a bump function by proceeding as follows:

1. Prove that the function $x \mapsto \begin{cases} e^{-\frac{1}{x}} & x > 0\\ 0 & x \leq 0 \end{cases}$ is contained in $\mathcal{C}^\infty(\mathbb{R})$.
2. Prove that the function $x \mapsto 1 - \|x\|^2$ is contained in $\mathcal{C}^\infty(\mathbb{R}^d)$.
3. Conclude that $\eta \in \mathcal{C}^\infty(\mathbb{R}^d)$.
4. Prove that $\operatorname{supp}\eta$ is compact by calculating $\operatorname{supp}\eta$ explicitly.

Exercise 3: Let $O \subseteq \mathbb{R}^d$ be open, let $\varphi \in \mathcal{D}(O)$ and let $\phi \in \mathcal{S}(\mathbb{R}^d)$. Prove that if $\alpha,\beta \in \mathbb{N}_0^d$, then $\partial_\alpha \varphi \in \mathcal{D}(O)$ and $x^\alpha \partial_\beta \phi \in \mathcal{S}(\mathbb{R}^d)$.

Exercise 4: Let $O \subseteq \mathbb{R}^d$ be open, let $\varphi_1,\ldots,\varphi_n \in \mathcal{D}(O)$ be bump functions and let $c_1,\ldots,c_n \in \mathbb{R}$. Prove that $\sum_{j=1}^n c_j \varphi_j \in \mathcal{D}(O)$.

Exercise 5: Let $\phi_1,\ldots,\phi_n$ be Schwartz functions and let $c_1,\ldots,c_n \in \mathbb{R}$. Prove that $\sum_{j=1}^n c_j \phi_j$ is a Schwartz function.

Exercise 6: Let $\alpha \in \mathbb{N}_0^d$, let $p(x) := \sum_{\varsigma \leq \alpha} c_\varsigma x^\varsigma$ with $c_\varsigma \in \mathbb{R}$ be a polynomial, and let $\phi_l \to \phi$ in the sense of Schwartz functions. Prove that $p\phi_l \to p\phi$ in the sense of Schwartz functions.