Numerical Methods/Errors Introduction

When numerical methods or algorithms are used and computations are carried out with finite precision, approximation errors from rounding and truncation are introduced. It is important to have a notion of their nature and their order. A newly developed method is worthless without an error analysis. Nor does it make sense to use methods that introduce errors larger in magnitude than the effects to be measured or simulated. On the other hand, a method with very high accuracy might be computationally too expensive to justify the gain in accuracy.

Accuracy and Precision

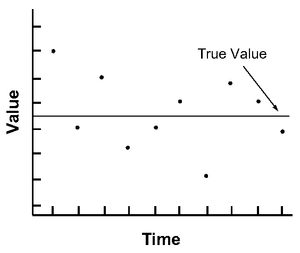

Measurements and calculations can be characterized with regard to their accuracy and precision. Accuracy refers to how closely a value agrees with the true value. Precision refers to how closely values agree with each other. The accompanying figures illustrate the difference between accuracy and precision. In the first figure, the given values (black dots) are more accurate, whereas in the second figure, the given values are more precise. The term error covers both the imprecision and the inaccuracy of a numerical computation.
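The distinction can be made concrete with a small sketch. The data sets, the reference value, and the helper names `bias` and `spread` below are hypothetical and chosen only for illustration: the distance of the mean from the true value stands in for accuracy, and the sample standard deviation stands in for precision.

```python
import statistics

true_value = 10.0  # hypothetical reference value

# Hypothetical readings: set_a scatters widely around the true value,
# set_b is tightly clustered but offset from it.
set_a = [9.0, 11.0, 9.5, 10.5]
set_b = [11.9, 12.0, 12.1, 12.0]

def bias(values, reference):
    """Distance of the mean from the reference (smaller means more accurate)."""
    return abs(statistics.mean(values) - reference)

def spread(values):
    """Sample standard deviation (smaller means more precise)."""
    return statistics.stdev(values)

for name, values in (("set_a", set_a), ("set_b", set_b)):
    print(name, "bias:", bias(values, true_value), "spread:", spread(values))
```

Under these assumptions `set_a` is the more accurate set (smaller bias) and `set_b` is the more precise one (smaller spread).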

Absolute Error

Absolute error is the magnitude of the difference between the true value $x$ and the approximate value $x_a$. The absolute error between the two values is defined as

$$\epsilon_{abs} = |x - x_a|$$

where $x$ denotes the exact value and $x_a$ denotes the approximation.
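As a minimal sketch of this definition, the following computes the absolute error of a true value x and an approximation x_a. The function name `absolute_error` and the choice of 22/7 as an approximation of π are illustrative assumptions, not part of the original text.

```python
import math

def absolute_error(x, x_a):
    """Magnitude of the difference between exact value x and approximation x_a."""
    return abs(x - x_a)

x = math.pi    # treated as the exact value for this illustration
x_a = 22 / 7   # a classical rational approximation of pi

print(absolute_error(x, x_a))  # about 1.26e-3
```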

Relative Error

The relative error of $x_a$ is the absolute error relative to the exact value. Look at it this way: if your measurement has an error of ± 1 inch, this seems to be a huge error when you measure something that is 3 inches long. However, when measuring distances on the order of miles, the same error is mostly negligible. The definition of the relative error is

$$\epsilon_{rel} = \frac{|x - x_a|}{|x|}$$

which requires $x \neq 0$.
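The inch example can be worked through numerically. This is a sketch only: the function name `relative_error` and the specific lengths (3 inches, and one mile taken as 63360 inches) are assumptions made for illustration.

```python
def relative_error(x, x_a):
    """Absolute error divided by the magnitude of the exact value x."""
    if x == 0:
        raise ValueError("relative error is undefined for an exact value of zero")
    return abs(x - x_a) / abs(x)

INCHES_PER_MILE = 63360

# The same 1 inch absolute error in two very different settings.
short_case = relative_error(3.0, 4.0)                               # 3 inch object
mile_case = relative_error(INCHES_PER_MILE, INCHES_PER_MILE + 1)    # 1 mile distance

print(short_case)  # about 0.33, i.e. roughly 33 %
print(mile_case)   # about 1.6e-5
```

The absolute error is identical in both cases; only the relative error shows that the first measurement is poor and the second is very good.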

Sources of Error

In a numerical computation, error may arise from either of the following sources:

- Truncation error

- Roundoff error

Truncation Error

The word 'truncate' means 'to shorten'. Truncation error is the error in a method that arises because a sequence of steps (finite or infinite) is truncated (shortened) to fewer steps. Such errors are essentially algorithmic errors, and we can predict the extent of the error that will occur in the method. For instance, if we approximate the sine function by the first two non-zero terms of its Taylor series, as in $\sin(x) \approx x - \frac{x^3}{6}$ for small $x$, the resulting error is a truncation error. It is present even with infinite-precision arithmetic, because it is caused by truncating the infinite Taylor series to form the algorithm.
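The sine example can be checked numerically. The sketch below compares the two-term Taylor approximation with `math.sin`, which is treated here as the exact value. The function name `sin_two_terms` and the sample points are illustrative assumptions. For these points the error stays below the first omitted term, $x^5/120$, which is the kind of prediction the text refers to.

```python
import math

def sin_two_terms(x):
    """First two non-zero Taylor terms of sin(x) about 0: x - x^3/6."""
    return x - x**3 / 6

def truncation_error(x):
    """Absolute difference between math.sin and the two-term approximation."""
    return abs(math.sin(x) - sin_two_terms(x))

for x in (0.1, 0.5, 1.0):
    bound = x**5 / 120  # magnitude of the first omitted Taylor term
    print(f"x={x}: error={truncation_error(x):.3e}, bound={bound:.3e}")
```

The error grows quickly as x moves away from zero, which is why the approximation is stated for small x only.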

Roundoff Error

Roundoff error occurs because a computing device cannot represent certain numbers exactly. Such numbers must be rounded to a nearby representable value, and how close that value is depends on the word size the device uses to represent numbers.
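A short sketch of roundoff in practice, assuming Python floats are IEEE 754 double-precision numbers (which is the case on common platforms): neither 0.1 nor 0.2 has an exact binary representation, so their sum is not exactly 0.3.

```python
import math
import sys

total = 0.1 + 0.2

print(total == 0.3)              # False: both operands were rounded on input
print(abs(total - 0.3))          # tiny but non-zero roundoff error
print(sys.float_info.epsilon)    # gap between 1.0 and the next larger float

# Comparing with a tolerance avoids being misled by roundoff.
print(math.isclose(total, 0.3))  # True
```

Because of this, floating-point results are usually compared with a tolerance rather than with exact equality.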