Foundations of Computer Science/Printable version


The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/Foundations_of_Computer_Science

Introduction

Have you ever wondered what computing is and how a computer works? What exactly is computer science? Why—beyond the obvious reasons—is it important? What do computer scientists do? What types of problems do they work on? What approaches do they use to solve those problems? How, in general, do computer scientists think?

Question 1: What do you think of when you hear "computer science?" Write a paragraph or list, or draw an image or diagram of what comes to mind.

Question 2: What are the parts of computer science that are most interesting or important to you currently? Why?

When you hear the term "computer science" perhaps you think of a specific computer. Or someone you know who works with computers. Or a particular computer use, say online games or social networks. There are many, many different aspects of computing and computer science.

There are a number of reasons why it is useful and important to know something about computer science. Computers affect many, many aspects of our lives in different ways. For many people, computers are playing or will play a significant role in the work they do, in their recreational pursuits, in how they communicate with others, in their education, in their health care, etc. Think about the many different ways you encounter computers and computing, either directly or indirectly, in your daily life.

What, more specifically, will this book cover? The foremost purpose of this text is to give you a greater understanding of the fundamentals of computer science: What is computer science, anyway? Is it the same as computer programming? What is a computer? For example, most people would agree that a "laptop computer" is a computer, as is a "tablet computer", but what about a smartphone? And how do computers work? For example, we can store not only numbers and text in computers, but also images, video files, and audio files; how do computers handle such disparate data? And what are some interesting and important subareas of computer science? For example, what is important to know about subareas such as computer graphics, networking, or databases? And why is any of this important? Isn't it sufficient for most people just to use computers, rather than have a deeper understanding of computers and computer science?

These are all fundamental questions about computing, and in this book we'll look at them and other questions. In summary, one purpose of this book is to provide an overview of computer science that not only exposes you to computer science fundamentals—such as how a computer works on a rudimentary level—but also explores why these fundamentals are important.

There are two parts of this overview that are particularly important: while the main theme is an overview of computer science, two essential subthemes are how mathematics is used in computer science and how computer science affects, and is affected by, society.

Both subthemes fit well in a book that gives an overview of computer science. Computer science relies heavily on mathematics (in fact, some colleges have computer science and mathematics programs in a joint department). Certain uses of mathematics in computer science are obvious, for example in computational tools such as spreadsheets, but there are also many less obvious ways that mathematics is essential to computer science. For example, at the lowest level in a computer, data (whether that data is numeric, text, audio, video, etc.) is all represented in binary, i.e., as strings of 0's and 1's. This means that to understand something very basic about computers you need to understand binary numbers and operations.

Computers also affect society in many ways, from the use of computer-generated imagery in films, to large government or commercial databases, to the multiple societal effects of the Internet. And society affects computers, for example through user behavior and through different types of regulation.

While mathematics and technology and society might seem too different to be included comfortably in the same book, there are actually many computer science topics that are useful to explore from both perspectives—in a sense, these different viewpoints are "two sides of the same coin." For example, one topic in the book is computer security. Mathematics plays a role in security, for example in encryption. And computer security also has many societal aspects, for example national security, infrastructure security, and individual security. Most of the topics in this text similarly have both mathematical underpinnings and societal aspects, and exploring these topics from both perspectives will result in a richer understanding.

What this book isn't

There are a number of different types of introductory computer science books. So, in addition to explaining what this text is, it is also useful to state what it is not.

This is not a programming book. Programming is a central activity in computer science, but it is not the whole of computer science. Because programming is important, we'll spend some time on it. However, because computer science is much more than programming, and because this is an overview book, that time will be only a small part of this work.

This is not a computer applications book. Many other books cover basic computer applications. For example, a popular choice is teaching how to use a word processor, a spreadsheet, a database management program, and presentation software. These and other applications are important parts of computer science, and so in this book you will get a chance to learn about some applications that might be new to you. However, like programming, using applications is only part of learning about computer science, and so application use will be only a small part of this book.

This is not a "computer literacy" or "computer fluency" book. There are a variety of definitions of computer literacy or computer fluency. For example, the Wikipedia definition, derived from a report from the U.S. Congress Office of Technology Assessment, is "the knowledge and ability to use computers and related technology efficiently, with a range of skills covering levels from elementary use to programming and advanced problem solving."[1] Parts of this book will involve using computers to gain a variety of skills. For example, you will do a variety of computer-related tasks such as performing web searches, constructing web pages, doing elementary computer programming, and working with databases. However, this is just one part, rather than the totality, of the text. So this book shares some characteristics of a computer literacy book, but overall it has a wider focus than that type of textbook.

This is not a "great ideas in computer science" book. One current trend in computer science introductory materials is to study computer science through its important, fundamental ideas.[2] And this book does cover some key ideas. For example, an early topic we'll study is how all data in computers, whether those data are numeric, text, video, or others, are represented within the computer as 0's and 1's. In general, the topics in the book are fundamental to computer science. However, this text also differs from a great ideas book. It is not focused solely on ideas, but explores broadly a number of computer-related issues, subtopics, and computer skills. Moreover, this book focuses more on mathematical thinking, and on technology and society, than a typical great ideas book would.

In addition to programming, applications, computer fluency, and great ideas, there are a number of other types of introductory computer science textbooks. Some survey a variety of computer science topics. Others focus on professional software development practices. Still others look at computing through a particular "lens" such as networks or computational biology. And so on. This book has some characteristics in common with these other approaches, but also has significant differences. In particular, the biggest difference is that this book blends an overview of computer science with a strong emphasis on mathematics, and on society and technology; this is a balance of emphases that has a number of advantages, but is not usually seen in introductory computer science courses.

What is this book about?

Both mathematical thinking and technology and society are significant parts of this book. Many textbooks present an introduction to computer science through programming, or through how computers work, or through some other aspect of computing. However, there is not a suitable text that combines an overview of computer science with both sufficient mathematical and sufficient society and technology emphases.

At first glance, it might seem odd that a book introducing computer science would deal with liberal education. What does computer science have to do with liberal education? Understanding computers well involves exploring them from a variety of different viewpoints. This includes understanding not only how computers work—including, for example, the mathematical underpinnings of computer science—but also how they affect, and are affected by, society. In summary, to have a good understanding of computers and computer science it is important to explore them from a variety of perspectives, including the perspectives embodied in liberal education.

Mathematical thinking

Question 3. What do you think of when you hear the word "mathematics?" Write a paragraph or list, or draw an image or diagram of what comes to mind.

Question 4. Based on your experience with computers, write a list of some places where mathematics is used in computing.

What do computers and mathematics have in common? Why is it appropriate for an overview of computer science book to require mathematical thinking?

Much of the use of mathematics in this book is applying mathematical ideas and operations to solve computer science problems. There are a number of important mathematical underpinnings of computer science, and so understanding computer science involves being able to solve mathematical problems involving these underpinnings. At the same time, the different uses of mathematics in this text exemplify characteristics of mathematics as a whole, and of the close tie between the fields of mathematics and computer science. For instance, the mathematics in the book illustrates the following:

- The reliance of many key ideas in computer science, such as data representation, on mathematics.

- The use of special mathematics- or logic-related notation and terminology in many parts of computer science.

- The ability to represent and work with many different types of data in the computer, and the related ability to represent and work with quantities in different representations using a variety of operations.

- The need for rigor in solving problems, analyzing situations, or specifying computational processes.

- The use of numbers and arithmetic in solving computational problems. However, rather than being simple arithmetic problems, these problems often have some special characteristics such as involving repeated operations, or involving extremely large or extremely small numbers.

- The existence of a variety of different algorithms for solving such diverse problems as pattern matching, counting specified values in a table of data, or finding the shortest path between two nodes in a graph.

Solving many of the problems in this book will involve doing some mathematics, and therefore manipulating mathematical or logical symbols. Here are a few examples:

- In exploring low-level logical operations you'll need to manipulate binary representation and logical operators.

- In studying the growth rate of algorithms you'll need to work with the O and Θ notations commonly used by computer scientists.

- In specifying computational processes you'll need to use "pseudocode" or a programming language. These share many notational characteristics with mathematical or logical symbols, especially when the computational processing involves a large number of numeric computations.

The level of mathematics in this book is introductory-level college mathematics. As such, the mathematics is not advanced, and there is no mathematical prerequisite for this book beyond the requirements needed for general college admission. At the same time, the mathematics in this book goes beyond high school mathematics even though many of the types of mathematics used in this text appear in some high school mathematics courses.

As an example, one appearance of mathematics in this book is binary (or base 2) representation. This is a topic that often appears in high school mathematics courses, and the basics of binary representation are not complicated. In this book we review such basics as how to convert numbers between decimal (base 10) and binary representation, and how to do simple operations such as adding two binary numbers. However, we also use binary representation in additional ways that underpin the workings of computers. Here are a few examples:

- We'll look at a few different ways to represent numbers in binary representation. For example, integers are often represented in binary not using the usual straightforward binary representation, but in "two's complement" form. So part of this book is learning not only about the "usual" binary representation, but also about these alternatives.

- We'll look at various issues with binary representation, such as the number of "bits" used, that are important in determining the range and precision of numbers used by computers.

- In addition to representing numbers, we will also look at how computers use binary representation to represent and operate on other types of data such as text, colors, and images.

- In addition to basic operations such as binary addition, we will also look at other operations on binary representations. For example, logical operations are important in masking colors in image processing, and in implementing arithmetic operations in low-level computer hardware.
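The first two ideas above can be illustrated with a minimal Python sketch. The function names `to_binary` and `twos_complement`, and the default width of 8 bits, are assumptions chosen for this illustration; they are not taken from the book.

```python
def to_binary(n):
    """Convert a non-negative integer to a binary string by repeated division by 2."""
    if n < 0:
        raise ValueError("to_binary expects a non-negative integer")
    if n == 0:
        return "0"
    bits = ""
    while n > 0:
        bits = str(n % 2) + bits  # the remainder is the next bit, right to left
        n //= 2
    return bits


def twos_complement(n, width=8):
    """Return the width-bit two's complement pattern of n (width is an assumed parameter)."""
    low, high = -(1 << (width - 1)), (1 << (width - 1)) - 1
    if not low <= n <= high:
        raise ValueError(f"{n} does not fit in {width} bits")
    # Negative values wrap around modulo 2**width.
    return format(n % (1 << width), f"0{width}b")


print(to_binary(13))        # 1101
print(twos_complement(5))   # 00000101
print(twos_complement(-5))  # 11111011
```

With 8 bits, two's complement covers the range -128 to 127, which shows how the number of bits limits the range of values a computer can represent.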

In summary, even though many of the mathematical topics in this book appear in high school mathematics, they go beyond the usual high school treatment of those topics in breadth or depth.

Technology and society

Question 5. What do you think of when you hear "technology and society?" Write a paragraph or list, or draw an image or diagram of what comes to mind.

Question 6. Based on your experience with computing, write a list of examples of how computing affects, and is affected by, society.

The topic of this book is computers and computing. Computers have affected society in numerous and diverse ways, some of which we'll explore in this book. And current and future computer applications will affect society in even more ways.

Through this book you should get an understanding of how computers work. This includes understanding the basics of computer hardware and computer software.

More broadly, however, computer science relies on results from other areas of science, engineering, and related fields. The most prominent example of this we will see in this text is various ways that mathematics is essential in computer science.

Technology affects society. However, it is not a one-way street. Society also affects technology. For example, society fosters technology by means such as government support for research. As another example, different individuals, businesses, and other organizations adopt and use technology in ways often not foreseen by the technology's creators.

In this book we'll look at a variety of instances of how society affects technology. These include government funding for the early Internet, Internet regulation, how business considerations affect computing products, and societal aspects of computer security.

In many topics in computers and society there are multiple stakeholders. These can include individual users, developers, companies (producers, consumers, and intermediaries), government bodies, professional organizations, and other types of organizations. These different stakeholders often have different views and different goals.

In this book we will often look at technology and society issues from numerous perspectives. Sometimes we will focus on a specific perspective or the role of a specific stakeholder. However, other times we will explore issues more broadly: Who are the stakeholders? What is their role in this issue? What are their goals?

One often hears conflicting views on computer and society issues. Computers are beneficial for society. Computers are harmful to society. The Internet is making it easier for people to communicate and is bringing people together. The Internet is making people more isolated. Computers and automation are robbing people of jobs. Computers and automation create jobs.[3]

In this book we'll often explore issues that are contentious and/or complicated. How do we avoid a superficial, one-sided understanding of such issues? How do we resolve conflicting claims about such issues?

Computing technology not only has had massive effects on society, but continues to affect society. Not a day goes by without some technological advance involving computing. In many ways the "computer revolution" is just beginning.

One goal of this book is that you'll learn enough about computing in general, about trends in computing, and about computing and society that you'll be able to evaluate new technology. Note that "evaluate" might mean different things in different contexts. For instance, it might mean giving an informed projection about whether a new computer product will be successful or not. Or it might mean predicting future computer advances in a certain area. Or it might mean analyzing whether a new computer application is likely to be more beneficial than harmful.

Additional questions for thought and discussion

Here are some additional introductory questions.

Question 7. How do you use computers? List the most important ways.

Question 8. Write down a list of movies in which computing plays a major role. For each movie, indicate whether computing is portrayed as beneficial, harmful, beneficial in some ways but harmful in others, or neutral.

Question 9. Do you think computers, on the whole, have more positive effects than negative ones, more negative ones than positive, or about equal positive and negative effects? Why?

Question 10. List some ways computers are beneficial to society. Then list some ways they are harmful.

Question 11. Suppose you were to write a novel, play, screenplay, etc. about some aspect of computers and society. Describe what the theme or themes of your work would be.

Question 12. What does "technology" mean? What are some important ways you use technology in your daily life?

Question 13. Suppose you had to write a short essay or short story entitled "Computers and Me." What would be some key points or themes in that work?

Question 14. Suppose you had to write a short essay or short story entitled "Technology and Me." What would be some key points or themes in that work?

Notes

- ↑ See Computer literacy at the English Wikipedia. Accessed May 20, 2015.

- ↑ For example, see Denning, Peter. "Great Principles of Computer Science". Retrieved May 20, 2015. This site organizes principles into seven categories: computation, communication, coordination, recollection, automation, evaluation, and design. There are a number of good ideas, insights, and frameworks in this and related approaches, and in fact many of the key ideas in this book will relate in some way to Denning's principles.

- ↑ See Putnam, Robert D. (August 2001). Bowling Alone: The Collapse and Revival of American Community. Simon and Schuster. ISBN 978-0-7432-0304-3. Retrieved 29 May 2015.

What is Computing


In this course, we try to focus on computing principles (big ideas) rather than computer technologies, which are tools and applications of the principles. Computing is defined by a set of principles or ideas, which underlie the myriad technologies built on them. Technologies can be complex and constantly evolving, but principles stay the same. In the second half of the course, we will study various technologies to demonstrate the power of computing and how principles are applied.

In addition to principles of computing and technologies there are practices of computing - what professionals do to advance computing.

The chart to the right illustrates the difference between principles of computing and practices of computing. Principles underlie technologies and practices. A consumer exploits the power of computing through the applications built for them for various tasks. We believe everyone needs to know the principles of computing because such principles are widely applicable. As professionals in the field of computing we need to know the two ends and everything in the middle - the practices (activities and skills that make computing useful and effective).

We will use the terms computing and computation interchangeably throughout the book.

Principles of Computing

Computing is fundamentally about information processes. One of the big ideas of computing is that information processes can be carried out purely mechanically via symbol manipulation. The agent that does the computing, whether a thinking human being or a machine (computer), does not matter. Toward the end of the book we will see this is true for all modern computers - digital computers manipulate two symbols (zero and one) blindly according to instructions.

An Analogy

The following analogy from the "Thinking as Computation" book [1] illustrates the idea. Imagine that we have the following table of symbols.

|   | a | b | c | d | e | f | g | h | i | j |
|---|---|---|---|---|---|---|---|---|---|---|
| a | aa | ab | ac | ad | ae | af | ag | ah | ai | aj |
| b | ab | ac | ad | ae | af | ag | ah | ai | aj | ba |
| c | ac | ad | ae | af | ag | ah | ai | aj | ba | bb |
| d | ad | ae | af | ag | ah | ai | aj | ba | bb | bc |
| e | ae | af | ag | ah | ai | aj | ba | bb | bc | bd |
| f | af | ag | ah | ai | aj | ba | bb | bc | bd | be |
| g | ag | ah | ai | aj | ba | bb | bc | bd | be | bf |
| h | ah | ai | aj | ba | bb | bc | bd | be | bf | bg |
| i | ai | aj | ba | bb | bc | bd | be | bf | bg | bh |
| j | aj | ba | bb | bc | bd | be | bf | bg | bh | bi |

The symbols can be any set of symbols; we pick letters from the English alphabet for simplicity. We can define a procedure P that takes two symbols ('a' through 'j') as the input and produces two symbols from the same set as the output. Internally, the procedure uses the first input symbol to find the row that starts with that symbol, then uses the second input symbol to find the column with that symbol at the top, and then returns the symbols at the intersection. It is not hard to imagine that such a table lookup procedure can be done purely mechanically (blindly) by a simple agent (e.g. a device or a machine). Of course a human being can do it, but this type of symbol manipulation requires no human intelligence. Two conclusions can be drawn from this thought experiment:

- Symbol manipulation can be done mechanically.

- The machine that performs the manipulation does not need to know the meaning of the symbols nor the purpose of the manipulation.
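The table lookup described above can be sketched in a few lines of Python. This is only an illustration: the names `SYMBOLS`, `SEQUENCE`, and `TABLE` are assumptions made for this sketch, and the table is generated by sliding a window over the ordered list of two-symbol entries rather than typed in by hand.

```python
SYMBOLS = "abcdefghij"

# The two-symbol entries in table order: aa, ab, ..., aj, ba, bb, ..., bj.
SEQUENCE = [first + second for first in "ab" for second in SYMBOLS]

# Row k of the table is the 10-entry window of SEQUENCE starting at position k.
TABLE = {
    row: dict(zip(SYMBOLS, SEQUENCE[k:k + 10]))
    for k, row in enumerate(SYMBOLS)
}


def P(first, second):
    """Find the row for `first`, the column for `second`, and return the entry there."""
    return TABLE[first][second]


print(P("d", "f"))  # ai
print(P("j", "j"))  # bi
```

Note that `P` never inspects what a symbol means; it only matches symbols against row and column labels.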

This procedure becomes meaningful once we know how to interpret the symbols. For example, if the symbols 'a' through 'j' represent the quantities 0 through 9 respectively, this procedure performs single decimal digit addition. For instance, P(d, f) = P(3, 5) = ai = 08, which is the correct result of 3+5. The following table is essentially the same as the previous one except that it uses symbols that are meaningful to humans.

|   | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 00 | 01 | 02 | 03 | 04 | 05 | 06 | 07 | 08 | 09 |
| 1 | 01 | 02 | 03 | 04 | 05 | 06 | 07 | 08 | 09 | 10 |
| 2 | 02 | 03 | 04 | 05 | 06 | 07 | 08 | 09 | 10 | 11 |
| 3 | 03 | 04 | 05 | 06 | 07 | 08 | 09 | 10 | 11 | 12 |
| 4 | 04 | 05 | 06 | 07 | 08 | 09 | 10 | 11 | 12 | 13 |
| 5 | 05 | 06 | 07 | 08 | 09 | 10 | 11 | 12 | 13 | 14 |
| 6 | 06 | 07 | 08 | 09 | 10 | 11 | 12 | 13 | 14 | 15 |
| 7 | 07 | 08 | 09 | 10 | 11 | 12 | 13 | 14 | 15 | 16 |
| 8 | 08 | 09 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 |
| 9 | 09 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 |

Now that we have a simple procedure P that can instruct a simple agent to add two single digit decimal numbers, we can devise a new procedure P1 that can add three single-digit decimal numbers as shown in the following chart.

The new procedure P1 employs three instances of procedure P to add three decimal digits and return two digits as the result. We can view the procedures as machines with inputs and outputs and the lines are pipes that allow the symbols to go from one place to another place. It is not hard to imagine that an agent that can carry out P can carry out P1 as P1 is entirely made up of P. Note that the dotted rectangle represents the new procedure P1 made up of instances of P and the answer given by P1 for the sample inputs is correct. Again the symbols used in the process can be any set of symbols because internally simple table lookups are performed.
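One way to wire three instances of P into P1 is sketched below in Python. The exact wiring is an assumption made for illustration and may differ from the chart: the first P adds two of the digits, the second adds the third digit to the first result's low digit, and the third combines the two carry digits.

```python
DIGITS = "0123456789"

# The two-digit entries of the addition table in order: 00, 01, ..., 19.
SEQUENCE = [first + second for first in "01" for second in DIGITS]
TABLE = {
    row: dict(zip(DIGITS, SEQUENCE[k:k + 10]))
    for k, row in enumerate(DIGITS)
}


def P(x, y):
    """Single-digit addition by table lookup; returns two digit symbols."""
    return TABLE[x][y]


def P1(x, y, z):
    """Add three digit symbols using three instances of P (assumed wiring)."""
    carry1, sum1 = P(x, y)           # first instance: x + y
    carry2, sum2 = P(sum1, z)        # second instance: low digit + z
    _, carry = P(carry1, carry2)     # third instance: combine the two carries
    return carry + sum2


print(P1("3", "5", "9"))  # 17
print(P1("9", "9", "9"))  # 27
```

Each carry is either 0 or 1, so the third lookup never produces a carry of its own and its first output symbol can be discarded.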

Now imagine that we could use P1 to construct more complex procedures, for example procedure P2 in the following chart.

P2 uses P1 to add two double-digit numbers. In fact, we can simply add more instances of P1 to the design to deal with numbers of any length.
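The chaining idea can be sketched in Python as follows. The wiring of `P1` and `P2`, and the helper name `add`, are assumptions for illustration; the sketch feeds the carry digit of each P1 into the next P1 to the left, starting with a carry of 0.

```python
DIGITS = "0123456789"
SEQUENCE = [first + second for first in "01" for second in DIGITS]
TABLE = {row: dict(zip(DIGITS, SEQUENCE[k:k + 10])) for k, row in enumerate(DIGITS)}


def P(x, y):
    """Single-digit addition by table lookup."""
    return TABLE[x][y]


def P1(x, y, z):
    """Add three digit symbols using three instances of P (assumed wiring)."""
    carry1, sum1 = P(x, y)
    carry2, sum2 = P(sum1, z)
    _, carry = P(carry1, carry2)
    return carry + sum2


def P2(a, b):
    """Add two double-digit numbers (two-character strings); returns three digits."""
    carry, ones = P1(a[1], b[1], "0")
    carry, tens = P1(a[0], b[0], carry)
    return carry + tens + ones


def add(a, b):
    """Hypothetical generalization: chain one P1 per digit position."""
    width = max(len(a), len(b))
    a, b = a.zfill(width), b.zfill(width)
    carry, digits = "0", ""
    for x, y in zip(reversed(a), reversed(b)):
        carry, digit = P1(x, y, carry)
        digits = digit + digits
    return carry + digits


print(P2("47", "85"))   # 132
print(add("999", "1"))  # 1000
```

The result always carries one extra leading digit, so adding small numbers yields a leading zero, for example `P2("12", "34")` returns `"046"`.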

By now we can make the following observations:

- Any machine that can perform P can also perform P1, P2, and so on.

- We have made procedures that perform seemingly intelligent activities by making them more complex and at the same time kept them doable by simple machines.

If we follow the same line of reasoning, it is not hard to imagine we can create increasingly more complex procedures to instruct the simple machine to do progressively more intelligent things, such as

- integer subtraction

- compare two integers (subtraction and check the sign of the result)

- integer multiplication (repeated addition)

- represent fractions using a pair of integers and do arithmetic on them

- use matrices of integers to represent systems of equations and solve them using matrix operations

- use systems of equations to model complex physical systems and perform numerical simulations of these systems
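Two of the items above can be sketched in Python to show how larger operations are assembled from simpler ones. The function names are hypothetical, and Python's built-in integer addition and subtraction stand in for the table-based procedures.

```python
def multiply(a, b):
    """Multiply two non-negative integers by repeated addition."""
    if a < 0 or b < 0:
        raise ValueError("this sketch handles non-negative integers only")
    total = 0
    for _ in range(b):
        total = total + a  # add a to the running total, b times
    return total


def compare(a, b):
    """Compare two integers by subtracting and checking the sign of the result."""
    difference = a - b
    if difference < 0:
        return -1  # a is smaller
    if difference > 0:
        return 1   # a is larger
    return 0       # equal


print(multiply(6, 7))  # 42
print(compare(3, 8))   # -1
```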

In summary, from this example we can see that simple symbolic operations can be assembled to form larger procedures to perform amazing activities through computational processes. Such activities are not limited to numerical calculations. If we can represent abstract ideas as symbols (as we represent abstract quantities as concrete numbers) and devise procedures to manipulate the symbols according to the relations among the ideas, we can model reasoning as computational processes. This is what computer science is fundamentally about - information processes with two essential components: representations and a sequence of rules for manipulation of the representations. Note that it has nothing to do with electronics or physics. The machine that carries out such processes does not need to know the meaning of the symbols or why the process yields correct results. The machine only needs to follow the procedures (a set of rules) blindly.

As an example, you can read about a mechanical computer (the difference engine) designed by Charles Babbage that can tabulate polynomial functions.

Another Analogy

Richard Feynman used a similar analogy (a file clerk) to explain how computers work from the inside out in his Computer Heuristics Lecture (1 hour 15 mins): http://www.youtube.com/watch?v=EKWGGDXe5MA

History

We have now learned that computing is, in essence, the manipulation of symbols. A computer's ability to perform amazing tasks depends on its ability to manipulate symbols according to well-defined rules. In fact, digital computers only manipulate two symbols - zeros and ones. The intelligence of computing lies in the design and implementation of the rules/programs.

Later, when we discuss computer machinery, we will see that computers are constructed using these principles.

You may wonder where these ideas come from. Many people in history made significant contributions to the ideas of computing and computers. Gottfried Leibniz (1646–1716), a German philosopher, is considered the first person to dream of reducing reasoning to calculation and building a machine capable of carrying out such calculations. He observed that in arithmetic we represent abstract quantities using symbols and manipulate the symbols according to rules to get useful results. He dreamed that we could represent abstract ideas using symbols and reason about those ideas through the same kind of concrete symbol manipulation we use in arithmetic, following the logical relationships between the ideas. Such manipulations give us correct results not because whoever does the manipulation is intelligent but because the rules of manipulation mirror the relationships between quantities and the logical relationships between ideas.

Because of Leibniz’s dream, now we have computer science and universal machines called computers. A computer is fundamentally a physical device that can manipulate symbols following very simple logic rules. Almost all computers are electronic because it happens to be cheaper and easier to build them that way. Computer science is fundamentally about the information process (dealing with abstract ideas) that takes place through symbol manipulation, which follows a recipe (a set of rules). Such recipes are also known as algorithms. No wonder so many computer programming books are called cookbooks :) In computer science we study how to represent information and how to design and apply algorithms to get meaningful results. There are usually many ways to perform the same task. Comparing algorithms for evaluation purposes is called algorithm (complexity) analysis. Communicating an algorithm (recipe) to a computer is called programming or software development. The languages we use for such communication are called computer programming languages. The artifacts of programming are computer programs or software. The engineering discipline we try to apply in the software development process to produce quality software is called software engineering. So computer science is more about problem solving than about computers. Computing science is probably a more appropriate name for this discipline.

Practices of Computing

Principles are fundamental ideas that permeate all aspects of computing. Practices are not principles, but they are useful to identify because they describe the central activities of computing professionals. Practices, sometimes called "know-hows", define someone's skill set and level of competency: beginner, competent, or expert. The four core practices of computing are identified in the Great Principles of Computing project:[2]

- Programming (including multilingual programming practice)

- Systems and systems thinking

- Modeling, validating, testing, and measuring

- Innovating

Programming is an integral part of computer science because it allows us to explore abstract ideas in computer science in concrete ways. It is also an exciting creative process, which brings a great deal of satisfaction when we can make computers do useful things. In this course we will program in a very high-level graphical programming environment to explore ideas in computer science.

Donald Knuth regards programming as being like composition: well-written programs are a pleasure for others, or yourself, to read. He believes that programming is triply rewarding:

- beautiful code (aesthetic)

- do useful work (humanitarian)

- get paid (economic)

Programming a computer is essentially teaching the computer how to do things. As we mentioned previously, computers are simple machines that strictly follow orders. For a computer to do the right task, the instructions in our program must be correct and logical. Programs that are executable on a computer are software, serving as the brain of the computer. Software with errors is called buggy software. Testing software on an actual computer can help catch many of the bugs in the software. Testing provides almost immediate feedback on the quality of our programs so that we can fix bugs and improve them. Because of this, we believe programming makes us better thinkers and learners. We will see why it is hard to prove the correctness of programs.

References

- Levesque, Hector J., Thinking as Computation, ISBN 9780262016995

- Denning, Peter, The Great Principles of Computing, http://denninginstitute.com/pjd/GP/GP-site/welcome.html

Information Representation

Introductory problem

Computers often represent colors as a red-green-blue (RGB) set of numbers, called a "triple", where each of the red, green, and blue components is an integer between 0 and 255. For example, the color (255, 0, 10) has full red, no green, and a small amount of blue. Write an algorithm that takes as input the RGB components for a color, and returns a message indicating the largest component or components. For example, if the input color is (100, 255, 0), the algorithm should output "Largest component(s): green". And if the input color is (255, 255, 255), then the algorithm should output "Largest component(s): red, green, blue".
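One possible way to approach this problem is sketched below in Python. This is only an illustration, not the intended solution: the function name `largest_components` and the choice to reject values outside 0 to 255 are our own assumptions.

```python
def largest_components(red, green, blue):
    """Return a message naming the largest RGB component(s)."""
    components = [("red", red), ("green", green), ("blue", blue)]

    # Each component must be an integer between 0 and 255 (assumed check).
    for name, value in components:
        if not 0 <= value <= 255:
            raise ValueError(f"{name} must be between 0 and 255, got {value}")

    # Find the largest value, then collect every component that reaches it.
    largest = max(value for _, value in components)
    names = [name for name, value in components if value == largest]
    return "Largest component(s): " + ", ".join(names)


print(largest_components(100, 255, 0))    # Largest component(s): green
print(largest_components(255, 255, 255))  # Largest component(s): red, green, blue
```

Collecting all names that match the maximum, rather than stopping at the first, is what handles ties such as (255, 255, 255).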

Overview of this chapter

One amazing aspect of computers is they can store so many different types of data. Of course computers can store numbers. But unlike simple calculators they can also store text, and they can store colors, and images, and audio, and video, and many other types of data. And not only can they store many different types, but they can also analyze them, and they can transmit them to other computers. This versatility is one reason why computers are so useful, and affect so many areas of our lives.

To understand computers and computer science, it is important to know something about how computers deal with different types of data. Let's return to colors. How are colors stored in a computer? The introductory problem states one way: as an RGB triple. This is not the only possible way. RGB is just one of many color systems. For example, sometimes colors are represented as an HSV triple: by hue, saturation, and value. However, RGB is the most common color representation in computer programs.

This leads to a deeper issue: how are numbers stored in a computer? And why is it important anyway that we understand how numbers, and other different types of data, are stored and processed in a computer? This chapter deals with these and related questions. In particular, we will look at the following:

- Why is this an important topic?

- How do computers represent numbers?

- How do computers represent text?

- How do computers represent other types of data such as images?

- What is the binary number system and why is it important in computer science?

- How do computers do basic operations such as addition and subtraction?

Goals

Upon completing this chapter, you should be able to do the following:

- Be able to explain how, on the lowest level, computers represent both numeric and text data, as well as other types of data such as color data.

- Be able to explain and use the basic terminology in this area: bit, byte, megabyte, RGB triple, ASCII, etc.

- Be able to convert numbers and text from one representation to another.

- Be able to convert integers from one representation to another, for example from decimal representation to two's complement representation.

- Be able to add and subtract numbers written in unsigned binary or in two's complement representation.

- Be able to explain how the number of bits used to represent data affects the range and precision of the representation.

- Be able to explain in general how computers represent different types of data such as images.

- Be able to do calculations involving amounts of memory or download times for certain datasets.

Data representation and mathematics

How is data representation related to liberal education and mathematics? As you might guess, there is a strong connection. Computers store all data in terms of binary (i.e., base 2) numbers. So to understand computers it is necessary to understand binary. Moreover, you need to understand not only binary basics, but also some of the complications such as the "two's complement" notation discussed below.

Binary representation is important not only because it is how computers represent data, but also because so much of computers and computing is based on it. For example, we will see it again in the chapter on machine organization.

Data representation and society and technology

The computer revolution. That is a phrase you often hear used to describe the many ways computers are affecting our lives. Another phrase you might hear is the digital revolution. What does the digital revolution mean?

Nowadays, many of our devices are digital. We have digital watches, digital phones, digital radio, digital TVs, etc. However, previously many devices were analog: "data ... represented by a continuously variable physical quantity".[1] Think, for example, of an old watch with second, minute, and hour hands that moved continuously (although very slowly for the minute and hour hands). Compare this with many modern-day watches that show a digital representation of the time such as 2:03:23.

This example highlights a key difference between analog and digital devices: analog devices rely on a continuous phenomenon and digital devices rely on a discrete one. As a second example of this difference, an analog radio receives audio radio broadcast signals which are transmitted as radio waves, while a digital radio receives signals which are streams of numbers.[2]

The digital revolution refers to the many digital devices, their uses, and their effects. These devices include not only computers, but also other devices or systems that play a major role in our lives, such as communication systems.

Because digital devices usually store numbers using the binary number system, a major theme in this chapter is binary representation of data. Binary is fundamental to computers and computer science: to understand how computers work, and how computer scientists think, you need to understand binary. The first part of this chapter therefore covers binary basics. The second part then builds on the first and explains how computers store different types of data.

Representation basics

Introduction

Computing is fundamentally about information processes. Each computation is a certain manipulation of symbols, which can be done purely mechanically (blindly). If we can represent information using symbols and know how to process the symbols and interpret the results, we can access valuable new information. In this section we will study information representation in computing.

The algorithms chapters discuss ways to describe a sequence of operations. Computer scientists use algorithms to specify behavior of computers. But for these algorithms to be useful they need data, and so computers need ways to represent data.[3]

Information is conveyed as the content of messages, which, when perceived by our senses and interpreted, cause certain mental responses. Information is always encoded into some form for transmission and interpretation. We deal with information all the time. For example, we receive information when we read a book, listen to a story, watch a movie, or dream a dream. We give information when we write an email, draw a picture, act in a show or give a speech. Information is abstract but it is conveyed through concrete media. For instance, a conversation on the phone communicates information but the information is represented by sound waves and electronic signals along the way.

Information is abstract/virtual and the media that carry the information must be concrete/physical. Therefore before any information can be processed or communicated it must be quantified/digitized: a process that turns information into (data) representations using symbols.

People have many ways to represent even a very simple number. For example, the number four can be represented as 4 or IV or |||| or 2 + 2, and so on. How do computers represent numbers? (Or text? Or audio files?)

The way computers represent and work with numbers is different from how we do. Since early computer history, the standard has been the binary number system. Computers "like" binary because it is extremely easy for them. However, binary is not easy for humans. While most of the time people do not need to be concerned with the internal representations that computers use, sometimes they do.

Why binary?

Suppose you and some friends are spending the weekend at a cabin. The group will travel in two separate cars, and you all agree that the first group to arrive will leave the front light on to make it easier for the later group. When the car you are in arrives at the cabin you will be able to tell by the light if your car arrived first. The light therefore encodes two possibilities: on (the other group has already arrived) or off (the other group hasn't arrived yet).

To convey more information you could use two lights. For example, both off could mean the first group hasn't arrived yet, the first light off and second on indicate the first group has arrived but left to get supplies, the first on and second off that the group arrived but left to go fishing, and both on that the group has arrived and hasn't left.

Note the two key ideas here. The first is that a light can only be on or off (we don't allow different levels of light, multiple colors, or other options): there are just two possibilities. The second is that if we want to represent more than two choices we can use more lights.

This "on or off" idea is a powerful one. There are two and only two distinct choices or states: on or off, 0 or 1, black or white, present or absent, large or small, rough or smooth, etc.—all of these are different ways of representing possibilities. One reason the two-choice idea is so powerful is it is easier to build objects—computers, cameras, CDs, and so on—where the data at the lowest level is in two possible states, either a 0 or a 1.[4]

In computer representation, a bit (i.e., a binary digit) can be a 0 or a 1. A collection of bits is called a bitstring. A bitstring that is 8 bits long is called a byte. Bits and bytes are important concepts in computer storage and data transmission, and later on we'll explain them further along with some related terminology and concepts. But first we will look at the basic question of how a computer represents numbers.

A brief historic aside

Claude Shannon is considered the father of information theory because he was the first person to study and build mathematical models for information and the communication of information. He also made many other significant contributions to computing. His seminal paper “A mathematical theory of communication” (1948) changed our view of information, laying the foundation for the information age. Shannon discovered that the fundamental unit of information is a yes or no answer to a question, or one bit with two distinct states, which can be represented by only two symbols. He also founded the design theory of digital computers/circuits by proving that propositions of Boolean algebra can be used to build a "logic machine" capable of carrying out general computation (manipulation of two types of symbols). Data, another term closely related to information, is an abstract concept of representations of information. We will use information representations and data interchangeably.

External and internal information representation

Information can be represented on different levels. It is helpful to separate information representations into two categories: external representation and internal representation. External representation is used for communication between humans and computers. Everything we see on a computer monitor or screen, whether it is text, image, or motion picture, is a representation of certain information. Computers also represent information externally using sound and other media, such as touch pads for the blind to read text.

Internally all modern computers represent information as bits. We can think of a bit as a digit with two possible values. Since a bit is the fundamental unit of information it is sufficient to represent all information. It is also the simplest representation because only two symbols are needed to represent two distinct values. This makes it easy to represent bits physically - any device capable of having two distinct states works, e.g. a toggle switch. We will see later that modern computer processors are made up of tiny switches called transistors.

Review of the decimal number system

When bits are put together into sequences they can represent numbers. We are familiar with representing quantities with numbers. Numbers are concrete symbols representing abstract quantities. With ten fingers, humans conveniently adopted the base ten (decimal) numbering system, which requires ten different symbols. We all know decimal representation and use it every day. For instance, the Arabic numerals use 0 through 9. Each digit is multiplied by a power of ten that depends on the position the digit is in.

So, for example, the number one hundred and twenty-four is $1 \times 10^2 + 2 \times 10^1 + 4 \times 10^0$. We can emphasize this by writing the powers of 10 over the digits in 124:

10^2  10^1  10^0
1     2     4

So if we take what we know about base 10 and apply it to base 2 we can figure out binary. But first recall that a bit is a binary digit and a byte is 8 bits. In this chapter most of the binary numbers we talk about will be one byte long.

(Computers actually use more than one byte to represent most numbers. For example, most numbers are represented using 32 bits (4 bytes) or 64 bits (8 bytes). The more bits, the more different values you can represent: a single bit permits 2 values, 2 bits give 4 values, 3 bits give 8 values, ..., 8 bits give 256 values, and in general n bits give $2^n$ values. However, when looking at binary examples we'll usually use 8-bit numbers to make the examples manageable.)
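To make the relationship between the number of bits and the number of values concrete, here is a small Python sketch. The function names `count_values` and `all_bitstrings` are our own, chosen only for illustration.

```python
from itertools import product


def count_values(n_bits):
    """Number of distinct values that n_bits bits can represent."""
    return 2 ** n_bits


def all_bitstrings(n_bits):
    """List every bitstring of the given length, in counting order."""
    return ["".join(bits) for bits in product("01", repeat=n_bits)]


for n in (1, 2, 3, 8):
    print(n, "bits ->", count_values(n), "values")

print(all_bitstrings(2))  # ['00', '01', '10', '11']
```

Listing the bitstrings explicitly shows why the count doubles with each extra bit: every existing pattern can be extended with either a 0 or a 1.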

This base ten system used for numbering is somewhat arbitrary. In fact, we commonly use other base systems to represent quantities of different nature: base 7 for days in a week, base 60 for minutes in an hour, 24 for hours in a day, 16 for ounces in a pound, and so on. It is not hard to imagine base 2 (two symbols) is the simplest base system, because with fewer than two symbols, we cannot represent change (and therefore no information).

Unsigned binary

When we talk about decimal, we deal with 10 digits, 0 through 9 (that's where the name decimal comes from). In binary we have only two digits, which is why it's called binary. The digits in binary are 0 and 1. You will never see any 2's or 3's, etc. If you do, something is wrong. A bit will always be a 0 or a 1.

Counting in binary proceeds as follows:

0 (decimal 0)

1 (decimal 1)

10 (decimal 2)

11 (decimal 3)

100 (decimal 4)

101 (decimal 5)

...

An old joke runs, "There are 10 types of people in the world. Those who understand binary and those who don't."

The next thing to think about is what values are possible in one byte. Let's write out the powers of two in a byte:

2^7  2^6  2^5  2^4  2^3  2^2  2^1  2^0
128  64   32   16   8    4    2    1

As an example, the binary number 10011001 is $128 + 16 + 8 + 1 = 153$ in decimal. Note that each of the 8 bits can be either a 0 or a 1. So there are two possibilities for the leftmost bit, two for the next bit, two for the bit after that, and so on: two choices for each of the 8 bits. Multiplying these possibilities together gives $2^8$, or 256, possibilities. In unsigned binary these possibilities represent the integers from 0 (all bits 0) to 255 (all bits 1).

All base systems work in the same way: the rightmost digit represents the quantity of the base raised to the zeroth power (recall that any nonzero number raised to the 0th power results in 1), and each digit to the left represents a quantity that is base times larger than the one represented by the digit immediately to the right. The binary number 1001 represents the quantity 9 in decimal, because the rightmost 1 represents $1 \times 2^0 = 1$, the zeroes contribute nothing at the $2^1$ and $2^2$ positions, and finally the leftmost one represents $1 \times 2^3 = 8$. When we use different base systems it is necessary to indicate the base as a subscript to avoid confusion. For example, we write $1001_2$ to indicate the number 1001 in binary (which represents the quantity 9 in decimal). The subscript 2 means "binary": it tells the reader that it does not represent a thousand and one in decimal. This example also shows us that representations have no intrinsic meaning. The same pattern of symbols, e.g. 1001, can represent different quantities depending on the way it is interpreted. There are many other ways to represent the quantity $9_{10}$ (remember: read this as "nine in base 10 / decimal"); for instance, the symbol 九 represents the same quantity in Chinese.

As the same quantity can be represented differently, we can often change the representation without changing the quantity it represents. As shown before, the binary representation $1001_2$ is equivalent to the decimal representation $9_{10}$: both represent exactly the same quantity. In studying computing we often need to convert between decimal representation, which we are most familiar with, and binary representation, which is used internally by computers.

Binary to decimal conversion

Converting the binary representation of a non-negative integer to its decimal representation is a straightforward process: summing up the quantities each binary digit represents yields the result.
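The summing process can be sketched in Python as follows. The function name `binary_to_decimal` is our own choice, and the input is assumed to be a string of 0s and 1s.

```python
def binary_to_decimal(bits):
    """Convert an unsigned binary string such as '1001' to an integer."""
    total = 0
    # Walk from the rightmost bit (position 0) to the leftmost.
    for position, bit in enumerate(reversed(bits)):
        if bit not in ("0", "1"):
            raise ValueError(f"not a binary digit: {bit!r}")
        if bit == "1":
            total += 2 ** position  # this bit contributes its power of 2
    return total


print(binary_to_decimal("1001"))      # 9
print(binary_to_decimal("10011001"))  # 153
```

Python's built-in `int(bits, 2)` performs the same conversion; the loop is written out here only to show each step.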

Decimal to binary conversion

One task you will need to do in this book, and which computer scientists often need to do, is to convert a decimal number to or from a binary number. The last subsection showed how to convert binary to decimal: take each power of 2 whose corresponding bit is a 1, and add those powers together.

Suppose we want to do a decimal to binary conversion. As an example, let's convert the decimal value 75 to binary. Here's one technique that relies on successive division by 2:

75/2  quotient=37  remainder=1
37/2  quotient=18  remainder=1
18/2  quotient=9   remainder=0
9/2   quotient=4   remainder=1
4/2   quotient=2   remainder=0
2/2   quotient=1   remainder=0
1/2   quotient=0   remainder=1

We then take the remainders bottom-to-top to get 1001011. Since we usually work with groups of 8 bits, if the result doesn't fill all eight bits, we add zeroes at the front until it does. So we end up with 01001011.
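The successive-division technique can be written as a short Python sketch. The function name `decimal_to_binary` and the `width` parameter (the number of bits to pad to) are our own assumptions for illustration.

```python
def decimal_to_binary(value, width=8):
    """Convert a non-negative integer to binary by repeated division by 2."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")

    remainders = []
    quotient = value
    while quotient > 0:
        quotient, remainder = divmod(quotient, 2)
        remainders.append(str(remainder))  # collected top-to-bottom

    # Read the remainders bottom-to-top, then pad with leading zeroes.
    bits = "".join(reversed(remainders)) or "0"
    return bits.zfill(width)


print(decimal_to_binary(75))  # 01001011
```

Note that `zfill` only pads; a value needing more than `width` bits is returned at its full length rather than being cut off.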

Binary mathematics

Addition of binary numbers

In addition to storing data, computers also need to do operations such as addition of data. How do we add numbers in binary representation?

Addition of bits has four simple rules, shown here as four vertical columns:

  0     0     1     1
+ 0   + 1   + 0   + 1
=====================
  0     1     1    10

Now if we have a binary number consisting of multiple bits we use these four rules, plus "carrying". Here's an example:

  00110101
+ 10101100
==========
  11100001

Here's the same example, but with the carried bits listed explicitly, i.e., a 0 if there is no carry, and a 1 if there is. When 1+1=10, the 0 is kept in that column's solution and the 1 is carried over to be added to the next column left.

  0111100
  00110101
+ 10101100
==========
  11100001

We can check binary operations by converting each number to decimal: with both binary and decimal we're doing the same operations on the same numbers, but with different representations. If the representations and operations are correct the results should be consistent. Let's look one more time at the example addition problem we just solved above. Converting $00110101_2$ to decimal produces $53_{10}$ (do the conversion on your own to verify its accuracy), and converting $10101100_2$ gives $172_{10}$. Adding these yields $225_{10}$, which, when converted back to binary, is indeed $11100001_2$.

But binary addition doesn't always work quite right:

  01110100
+ 10011111
==========
 100010011

Note there are 9 bits in the result, but there should only be 8 in a byte. Here is the sum in decimal:

  116
+ 159
=====
  275

Note that 275 is greater than 255, the maximum we can hold in an 8-bit number. This results in a condition called overflow. Overflow is not an issue if the computer can go to a 9-bit binary number; however, if the computer only has 8 bits set aside for the result, the sum does not fit, which means that a program might not run correctly or at all.
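The column-by-column addition with carrying, and the overflow condition, can be sketched in Python. The function name `add_bytes` is our own, and the sketch assumes both inputs are 8-character strings of 0s and 1s; it keeps only 8 result bits and reports separately whether a ninth bit was needed.

```python
def add_bytes(a_bits, b_bits):
    """Add two 8-bit binary strings; return (8-bit sum, overflow flag)."""
    carry = 0
    result = []
    # Work from the rightmost column to the leftmost, as on paper.
    for a, b in zip(reversed(a_bits), reversed(b_bits)):
        column = int(a) + int(b) + carry
        result.append(str(column % 2))  # bit kept in this column
        carry = column // 2             # bit carried to the next column
    # A carry out of the leftmost column means the sum needs a 9th bit.
    return "".join(reversed(result)), carry == 1


print(add_bytes("00110101", "10101100"))  # ('11100001', False)
print(add_bytes("01110100", "10011111"))  # ('00010011', True)
```

In the second call the true sum is 100010011 (decimal 275); only the low 8 bits fit, so the stored value would be 00010011 (decimal 19), which is why overflow can make a program misbehave.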

Subtraction of binary numbers

Once again, let's start by looking at single bits:

  0     0     1     1
- 0   - 1   - 0   - 1
=====================
  0    -1     1     0

Notice that in the -1 case, what we often want to do is get a 1 result and borrow. So let's apply this to an 8-bit problem:

  10011101
- 00100010
==========
  01111011

which is the same as (in base 10),

  157
-  34
=====
  123

Here's the binary subtraction again with the borrowing shown:

  1100010
  10011101
- 00100010
==========
  01111011

Most people find binary subtraction significantly harder than binary addition.
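Borrowing can be mechanized in much the same way as carrying. The following Python sketch is one way to do it; the function name `subtract_bytes` is our own, and both inputs are assumed to be 8-character strings of 0s and 1s.

```python
def subtract_bytes(a_bits, b_bits):
    """Subtract b_bits from a_bits; return (8-bit result, final borrow flag)."""
    borrow = 0
    result = []
    # Work from the rightmost column to the leftmost, as on paper.
    for a, b in zip(reversed(a_bits), reversed(b_bits)):
        column = int(a) - int(b) - borrow
        if column < 0:
            column += 2   # borrow 2 from the next column to the left
            borrow = 1
        else:
            borrow = 0
        result.append(str(column))
    # A borrow left over at the end means b was larger than a.
    return "".join(reversed(result)), borrow == 1


print(subtract_bytes("10011101", "00100010"))  # ('01111011', False)
```

A final borrow flag of `True` would mean the true answer is negative, which unsigned binary cannot represent.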

Other representations related to binary

You might have had questions about the binary representation in the last section. For example, what about negative numbers? What about numbers with a fractional part? Aren't all those 0's and 1's difficult for humans to work with? These are good questions. In this and a couple of other sections we'll look at a few other representations that are used in computer science and are related to binary.

Hexadecimal

Computers are good at binary. Humans aren't. Binary is hard for humans to write, hard to read, and hard to understand. But what if we want a number system that is easier to read but still is closely tied to binary in some way, to preserve some of the advantages of binary?

One possibility is hexadecimal, i.e., base 16. But using a base greater than 10 immediately presents a problem. Specifically, we run out of digits after 0 to 9 — we can't use 10, 11, or greater because those have multiple digits within them. So instead we use letters: A is 10, B is 11, C is 12, D is 13, E is 14, and F is 15. So the digits we're using are 0 through F instead of 0 through 9 in decimal, or instead of 0 and 1 in binary.

We also have to reexamine the value of each place. In hexadecimal, each place represents a power of 16. A two-digit hexadecimal number has a 16's place and a 1's place. For example, D8 has D in the 16's place, and 8 in the 1's place:

16^1  16^0   <- hexadecimal places showing powers of 16
16    1      <- value of these places in decimal (base 10)
D     8      <- our sample hexadecimal number

So the hexadecimal number D8 equals $13 \times 16 + 8 \times 1 = 216$ in decimal. Note that any two-digit hexadecimal number can represent the same amount of information as one byte of binary. (That's because the largest two-digit hex number is $\text{FF}_{16} = 15 \times 16 + 15 \times 1 = 255_{10}$, the same maximum as 8 bits of binary.) So hexadecimal is easier for us to read or write.

When working with a number, there are times when it isn't clear which representation is being used. For example, does 10 represent the number ten (so the representation is decimal), the number two (the representation is binary), the number sixteen (hexadecimal), or some other number? Often, the representation is clear from the context. However, when it isn't, we use a subscript to clarify which representation is being used, for example $10_{10}$ for decimal, versus $10_2$ for binary, versus $10_{16}$ for hexadecimal.

Hexadecimal numbers can have more hexadecimal digits than the two we've already seen. For example, consider $\text{FF0581A4}_{16}$, which uses the following powers of 16:

16^7  16^6  16^5  16^4  16^3  16^2  16^1  16^0
F     F     0     5     8     1     A     4

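So in decimal this is:

$$15 \times 16^7 + 15 \times 16^6 + 0 \times 16^5 + 5 \times 16^4 + 8 \times 16^3 + 1 \times 16^2 + 10 \times 16^1 + 4 \times 16^0 = 4{,}278{,}550{,}948$$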

Hexadecimal doesn't appear often, but it is used in some places, for example sometimes to represent memory addresses (you'll see this in a future chapter) or colors. Why is it useful in such cases? Consider a 24-bit RGB color with 8 bits each for red, green, and blue. Since 8 bits requires 2 hexadecimal digits, a 24-bit color needs 6 hexadecimal digits, rather than 24 bits. For example, FF0088 indicates a 24-bit color with a full red component, no green, and a mid-level blue.
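The link between a 6-digit hexadecimal color and its RGB triple can be illustrated with a short Python sketch. The function names `hex_color_to_rgb` and `rgb_to_hex_color` are our own, and the input is assumed to be exactly six hexadecimal digits with no prefix.

```python
def hex_color_to_rgb(hex_color):
    """Split a 6-digit hex color such as 'FF0088' into (red, green, blue)."""
    if len(hex_color) != 6:
        raise ValueError("expected exactly 6 hexadecimal digits")
    # Each pair of hex digits holds one 8-bit component.
    red = int(hex_color[0:2], 16)
    green = int(hex_color[2:4], 16)
    blue = int(hex_color[4:6], 16)
    return red, green, blue


def rgb_to_hex_color(red, green, blue):
    """Format three 0-255 components as a 6-digit uppercase hex color."""
    return f"{red:02X}{green:02X}{blue:02X}"


print(hex_color_to_rgb("FF0088"))     # (255, 0, 136)
print(rgb_to_hex_color(255, 0, 136))  # FF0088
```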

Now there are additional types of conversion problems:

- Decimal to hexadecimal

- Hexadecimal to decimal

- Binary to hexadecimal

- Hexadecimal to binary

Here are a couple of examples involving the last two of these.

Let's convert the binary number 00111100 to hexadecimal. To do this, break it into two 4-bit parts: 0011 and 1100. Now convert each part to decimal and get 3 and 12. The 3 is a hexadecimal digit, but 12 isn't. Instead recall that C is the hexadecimal representation for 12. So the hexadecimal representation for 00111100 is 3C.

Rather than going from binary to decimal (for each 4-bit segment) and then to hexadecimal digits, you could go from binary to hexadecimal directly.

Hexadecimal digits and their decimal and binary equivalents: first, base 16 (hexadecimal), then base 10 (decimal), then base 2 (binary).

16  10  2      <- bases
==============
0   0   0000
1   1   0001
2   2   0010
3   3   0011
4   4   0100
5   5   0101
6   6   0110
7   7   0111
8   8   1000
9   9   1001
A   10  1010
B   11  1011
C   12  1100
D   13  1101
E   14  1110
F   15  1111

Now let's convert the hexadecimal number D6 to binary. D is the hexadecimal representation for $13_{10}$, which is 1101 in binary. 6 in binary is 0110. Put these two parts together to get 11010110. Again we could skip the intermediate conversions by using the hexadecimal and binary columns above.
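Both conversions work one 4-bit group at a time, which the following Python sketch tries to make explicit. The names `HEX_DIGITS`, `binary_to_hex`, and `hex_to_binary` are our own, and the binary input is assumed to have a length that is a multiple of 4.

```python
HEX_DIGITS = "0123456789ABCDEF"  # index in this string = value of the digit


def binary_to_hex(bits):
    """Convert a binary string to hexadecimal, 4 bits per hex digit."""
    if len(bits) % 4 != 0:
        raise ValueError("pad the binary string to a multiple of 4 bits")
    digits = []
    for start in range(0, len(bits), 4):
        group = bits[start:start + 4]              # e.g. '1100'
        digits.append(HEX_DIGITS[int(group, 2)])   # e.g. 12 -> 'C'
    return "".join(digits)


def hex_to_binary(hex_digits):
    """Convert a hexadecimal string to binary, 4 bits per hex digit."""
    return "".join(
        format(HEX_DIGITS.index(digit), "04b") for digit in hex_digits.upper()
    )


print(binary_to_hex("00111100"))  # 3C
print(hex_to_binary("D6"))        # 11010110
```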

Text representation

A piece of text can be viewed as a stream of symbols, each of which can be represented (encoded) as a sequence of bits, resulting in a stream of bits for the text. Two common encoding schemes are ASCII and Unicode. ASCII is usually stored using one byte (8 bits) per symbol. Standard ASCII defines 128 ($2^7$) symbols, including the English alphabet (in both lower and upper cases), digits, and other commonly used symbols; 8-bit extensions of ASCII can represent up to 256 ($2^8$) different symbols. Unicode extends ASCII to represent a much larger number of symbols, using multiple bytes where needed. Unicode aims to cover the symbols of virtually all written languages, and much more.
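As a small illustration, the Python sketch below shows the bits behind a short piece of text. The function name `text_to_bits` is our own. The second example uses UTF-8, one widely used way of encoding Unicode as bytes, which is not discussed in this chapter and is included only to show a symbol that needs more than one byte.

```python
def text_to_bits(text, encoding="ascii"):
    """Show the encoded bytes of a piece of text as 8-bit groups."""
    return " ".join(format(byte, "08b") for byte in text.encode(encoding))


print(text_to_bits("Hi"))             # 01001000 01101001
print(len("é".encode("utf-8")))       # 2 bytes for this non-ASCII symbol
```

Here "H" is stored as the number 72 and "i" as 105, so the text becomes a stream of bits exactly as numbers do.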

Image, audio, and video files

Images, audio, and video are other types of data. How computers represent these types of data is fascinating but complex. For example, there are perceptual issues (e.g., what types of sounds can humans hear, and how does that affect how many numbers we need to store to reliably represent music?), size issues (as we'll see below, these types of data can result in large file sizes), standards issues (e.g., you might have heard of JPEG or GIF image formats), and other issues.

We won't be able to cover image, audio, and video representation in depth: the details are too complicated, and can get very sophisticated. For example, JPEG images can rely on an advanced mathematical technique called the discrete cosine transform. However, it is worth examining a few key high-level points about image, audio, and video files:

- Computers can represent not only basic numeric and text data, but also data such as music, images, and video.

- They do this by digitizing the data. At the lowest level the data is still represented in terms of bits, but there are higher-level representational constructs as well.

- There are numerous ways to encode such data, and so standard encoding techniques are useful.

- Audio, image, and video files can be large, which presents challenges in terms of storing, processing and transmitting these files. For this reason most encoding techniques use some sophisticated types of compression.

Images

A perceived image is the result of light beams physically coming into our eyes and triggering nerves to send signals to our brain. In computing, an image is simulated by a grid of dots (called pixels, for "picture element"), each of which has a particular color. This works because our eyes cannot tell the difference between the original image and the dot-based image if the resolution (number of dots used) is high enough. In fact, the computer screen itself uses such a grid of pixels to display images and text.

"The largest and most detailed photograph of our galaxy ever taken has been unveiled. The gigantic nine-gigapixel image captures more than 84 million stars at the core of the Milky Way. It was created with data gathered by the Visible and Infrared Survey Telescope for Astronomy (VISTA) at the European Southern Observatory's Paranal Observatory in Chile. If it was printed with the resolution of a newspaper it would stretch 30 feet long and 23 feet tall, the team behind it said, and has a resolution of 108,200 by 81,500 pixels."[5]

While this galaxy image is obviously an extreme example, it illustrates that images (even much smaller images) can take significant computer space. Here is a more mundane example. Suppose you have an image that is 1500 pixels wide, and 1000 pixels high. Each pixel is stored as a 24-bit color. How many bytes does it take to store this image?

This problem describes a straightforward but naive way to store the image: for each row, for each column, store the 24-bit color at that location. The answer is $1500 \times 1000$ pixels multiplied by 24 bits/pixel, divided by 8 bits per byte = 4.5 million bytes, or about 4.5MB.
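The same arithmetic can be checked with a few lines of Python. The function name `raw_image_bytes` is our own, and the sketch assumes the naive storage scheme described above, with no compression or metadata.

```python
def raw_image_bytes(width, height, bits_per_pixel=24):
    """Bytes needed to store every pixel of an uncompressed image."""
    total_bits = width * height * bits_per_pixel
    return total_bits // 8  # 8 bits per byte


print(raw_image_bytes(1500, 1000))  # 4500000
```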

Note the file size. If you store a number of photographs or other images you know that images, and especially collections of images, can take up considerable storage space. You might also know that most images do not take 4.5MB. And you have probably heard of some image storage formats such as JPEG or GIF.

Why are most image sizes tens or hundreds of kilobytes rather than megabytes? Most images are stored not in a direct format, but using some compression technique. For example, suppose you have a night image where the entire top half of the image is black ((0,0,0) in RGB). Rather than storing (0,0,0) as many times as there are pixels in the upper half of the image, it is more efficient to use some "shorthand." For example, rather than having a file that has thousands of 0's in it, you could have (0,0,0) plus a number indicating how many pixels at the start of the image (reading line by line from top to bottom) have color (0,0,0).

This leads to a compressed image: an image that contains all, or most, of the information in the original image, but in a more efficient representation. For example, if an original image would have taken 4MB, but the more efficient version takes 400KB, then the compression ratio is 4MB to 400KB, or about 10 to 1.
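The "shorthand" idea of storing a color once together with a count is commonly called run-length encoding. The Python sketch below is a minimal illustration of that idea only; real formats such as JPEG use much more sophisticated techniques. The function names and the tiny 8-pixel example are our own.

```python
def run_length_encode(pixels):
    """Collapse consecutive identical pixels into (color, count) pairs."""
    runs = []
    for pixel in pixels:
        if runs and runs[-1][0] == pixel:
            runs[-1][1] += 1          # same color as before: extend the run
        else:
            runs.append([pixel, 1])   # new color: start a new run
    return [(color, count) for color, count in runs]


def run_length_decode(runs):
    """Expand (color, count) pairs back into the original pixel list."""
    pixels = []
    for color, count in runs:
        pixels.extend([color] * count)
    return pixels


black, white = (0, 0, 0), (255, 255, 255)
row = [black] * 6 + [white] * 2

encoded = run_length_encode(row)
print(encoded)  # [((0, 0, 0), 6), ((255, 255, 255), 2)]
print(run_length_decode(encoded) == row)  # True
```

Because decoding restores every pixel exactly, this particular scheme loses no information; it simply pays off only when the image contains long runs of one color.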

Complicated compression standards, such as JPEG, use a variety of techniques to compress images. The techniques can be quite sophisticated.

How much can an image be compressed? It depends on a number of factors. For many images, a compression ratio of, say, 10:1 is possible, but this depends on the image and on its use. For example, one factor is how complicated an image is. An uncomplicated image (say, as an extreme example, if every pixel is black[6]), can be compressed a very large amount. Richer, more complicated images can be compressed less. However, even complicated images can usually be compressed at least somewhat.

Another consideration is how faithful the compressed image is to the original. For example, many users will trade some small discrepancies between the original image and the compressed image for a smaller file size, as long as those discrepancies are not easily noticeable. A compression scheme that doesn't lose any image information is called a lossless scheme. One that does is called lossy. Lossy compression will give better compression than lossless, but with some loss of fidelity.[7]

In addition, the encoding of an image includes other metadata, such as the size of the image, the encoding standard, and the date and time when it was created.

Video

It is not hard to imagine that videos can be encoded as series of image frames with synchronized audio tracks also encoded using bits.

Suppose you have a 10 minute video, 256 x 256 pixels, 24 bits per pixel, and 30 frames of the video per second. You use an encoding that stores all bits for each pixel for each frame in the video. What is the total file size? And suppose you have a 500 kilobit per second download connection; how long will it take to download the file?

This problem highlights some of the challenges of video files. Note the answer to the file size question is (256 × 256) pixels × 24 bits/pixel × 30 frames/second × 60 seconds/minute × 10 minutes = approximately 28 Gb (Gb means gigabits). This is about 28/8 = 3.5 gigabytes. With a 500 kilobit per second download rate, this will take 28 Gb / 500 Kbps, or about 56,000 seconds. This is over 15 hours, longer than many people would like to wait. And the time will only increase if the number of pixels per frame is larger (e.g., in a full screen display), the video length is longer, or the download speed is slower.
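The same calculation can be written as a short Python sketch. The function names are made up for illustration, and the code assumes decimal prefixes (1 kilobit = 1,000 bits, 1 gigabit = 1,000,000,000 bits), which is close enough for an estimate like this one.

```python
def raw_video_bits(width, height, bits_per_pixel, frames_per_second, seconds):
    """Total bits when every pixel of every frame is stored in full."""
    return width * height * bits_per_pixel * frames_per_second * seconds


def download_seconds(total_bits, bits_per_second):
    """Time needed to transfer total_bits at a constant rate."""
    return total_bits / bits_per_second


bits = raw_video_bits(256, 256, 24, 30, 10 * 60)
print(bits)                   # 28311552000 bits, about 28 Gb
print(bits / 8 / 1e9)         # about 3.5 (gigabytes)

seconds = download_seconds(bits, 500_000)   # 500 kilobits per second
print(seconds / 3600)         # a little under 16 hours
```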

So video file size can be an issue. However, it does not take 15 hours to download a ten minute video; as with image files, there are ways to decrease the file size and transmission time. For example, standards such as MPEG make use not only of image compression techniques to decrease the storage size of a single frame, but also take advantage of the fact that a scene in one frame is usually quite similar to the scene in the next frame. There's a wealth of information online about various compression techniques and standards, storage media, etc.[8]

Audio

It might seem, at first, that audio files shouldn't take anywhere near as much space as video. However, if you think about how complicated audio such as music can be, you probably won't be surprised that audio files can also be large.

Sound is essentially vibrations, or collections of sound waves travelling through the air. Humans can hear sound waves that have frequencies of between 20 and 20,000 cycles per second.[9] To avoid certain undesirable artifacts, audio files need to use a sample rate of at least twice the highest frequency. So, for example, for a CD music is usually sampled at 44,100 Hz, or 44,100 times per second.[10] And if you want a stereo effect, you need to sample on two channels. For each sample you want to store the amplitude using enough bits to give a faithful representation. CDs usually use 16 bits per sample. So a minute of music takes 44,100 samples/second × 16 bits/sample × 2 channels × 60 seconds/minute ÷ 8 bits/byte = about 10.5 MB per minute. This means a 4 minute song will take about 40 MB, and an hour of music will take about 630 MB, which is (very) roughly the amount of data a typical CD will hold.[11]

Note, however, that if you want to download a 40 MB song over a 1 Mbps connection, it will take 40 MB × 8 bits/byte ÷ 1 Mbps, which comes to about 320 seconds. This is not a long time, but it would be desirable if it could be shorter. So, not surprisingly, there are compression schemes that reduce this considerably. For example, there is an MPEG audio compression standard that will compress 4-minute songs to about 4 MB, a considerable reduction.[12]
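The audio figures in the last two paragraphs can be reproduced with the sketch below. The function name and default parameter values are illustrative assumptions that follow the CD numbers given in the text, and megabytes and megabits are taken to be decimal (one million bytes or bits).

```python
def cd_audio_bytes(seconds, sample_rate=44_100, bits_per_sample=16, channels=2):
    """Bytes needed for uncompressed audio of the given length."""
    total_bits = sample_rate * bits_per_sample * channels * seconds
    return total_bits // 8  # 8 bits per byte


print(cd_audio_bytes(60))        # 10584000, a bit over 10.5 million bytes per minute
print(cd_audio_bytes(4 * 60))    # 42336000, roughly 40 MB for a 4 minute song
print(cd_audio_bytes(60 * 60))   # 635040000, roughly 630 MB for an hour

# Downloading a 40 MB file over a 1 Mbps connection: convert bytes to bits first.
download_time = 40_000_000 * 8 / 1_000_000
print(download_time)             # 320.0 seconds
```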

Sizes and limits of representations

In the last section we saw that a page of text could take a few thousand bytes to store. Image files might take tens of thousands, hundreds of thousands, or even more bytes. Music files can take millions of bytes. Movie files can take billions. There are databases that consist of trillions or quadrillions of bytes of data.

Computer science has special terminology and notation for large numbers of bytes. Here is a table of memory amounts, their powers of two, and approximate American English words.

| Unit | Power of two | Approximate size |
| --- | --- | --- |
| 1 kilobyte (KB) | $2^{10}$ bytes | thousand bytes |
| 1 megabyte (MB) | $2^{20}$ bytes | million bytes |
| 1 gigabyte (GB) | $2^{30}$ bytes | billion bytes |
| 1 terabyte (TB) | $2^{40}$ bytes | trillion bytes |
| 1 petabyte (PB) | $2^{50}$ bytes | quadrillion bytes |
| 1 exabyte (EB) | $2^{60}$ bytes | quintillion bytes |

There are also names for still larger quantities of these types.[13]

Kilobytes, megabytes, and the other sizes are important enough for discussing file sizes, computer memory sizes, and so on, that you should know both the terminology and the abbreviations. One caution: file sizes are usually given in terms of bytes (or kilobytes, megabytes, etc.). However, some quantities in computer science are usually given in terms involving bits. For example, download speeds are often given in terms of bits per second. "Mbps" is an abbreviation for megabits (not megabytes) per second. Notice the 'b' in Mbps is lowercase, while the 'B' in MB (megabytes) is capitalized.

In the context of computer memory, the usual definition of kilobytes, megabytes, etc. is a power of two. For example, a kilobyte is $2^{10} = 1{,}024$ bytes, not a thousand. In some other situations, however, a kilobyte is defined to be exactly a thousand bytes. This can obviously be confusing. For the purposes of this book, the difference will usually not matter. That is, in most problems we do, an approximation will be close enough. So, for example, if we do a calculation and find a file takes 6,536 bytes, then you can say this is approximately 6.5 KB, unless the problem statement says otherwise.[14]
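To see how far apart the two definitions are, the sketch below compares each power of two with the corresponding "round" number and converts the 6,536-byte example both ways. The function name `percent_over_round_number` is made up for this illustration.

```python
def percent_over_round_number(power_of_two, power_of_ten):
    """How much larger 2**power_of_two is than 10**power_of_ten, in percent."""
    return (2 ** power_of_two / 10 ** power_of_ten - 1) * 100


for name, p2, p10 in [("kilobyte", 10, 3), ("megabyte", 20, 6),
                      ("gigabyte", 30, 9), ("terabyte", 40, 12)]:
    print(name, round(percent_over_round_number(p2, p10), 1))
# kilobyte 2.4
# megabyte 4.9
# gigabyte 7.4
# terabyte 10.0

file_size = 6536            # bytes
print(file_size / 1000)     # 6.536     (kilobyte = 1,000 bytes)
print(file_size / 2 ** 10)  # 6.3828125 (kilobyte = 1,024 bytes)
```

The gap grows with each larger unit, which is why the approximation is safest for small file sizes.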

All representations are limited in multiple ways. First, the number of different things we can represent is limited because the number of combinations of symbols we can use is always limited by the physical space available. For instance, if you were to represent a decimal number by writing it down on a piece of paper, the size of the paper and the size of the font limit how many digits you can put down. Similarly, in a computer the number of bits that can be stored physically is also limited. With three binary digits we can generate $2^3 = 8$ different representations/patterns, namely 000, 001, 010, 011, 100, 101, 110, and 111, which conventionally represent 0 through 7 respectively. Keep in mind representations do not have intrinsic meanings, so three bits can represent any eight different things. With n bits we can represent $2^n$ different things, because each bit can be either one or zero and $2^n$ is the total number of combinations we can get, which limits the amount of information we can represent.
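A short sketch makes the counting concrete by listing every pattern of n bits. The function name `bit_patterns` is an illustrative choice; `itertools.product` is part of Python's standard library.

```python
from itertools import product


def bit_patterns(n):
    """Return every string of n bits, in counting order."""
    return ["".join(bits) for bits in product("01", repeat=n)]


patterns = bit_patterns(3)
print(patterns)       # ['000', '001', '010', '011', '100', '101', '110', '111']
print(len(patterns))  # 8, which is 2**3

# The conventional reading of each pattern as a number:
print([int(p, 2) for p in patterns])  # [0, 1, 2, 3, 4, 5, 6, 7]

# Each extra bit doubles the number of patterns.
print([len(bit_patterns(n)) for n in range(1, 6)])  # [2, 4, 8, 16, 32]
```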

Another type of limit is due to the nature of the representations. For example, one third can never be represented precisely by a decimal format with a fractional part because there will be an infinite number of threes after the decimal point. Similarly, one third cannot be represented precisely in binary format either. In other words, it is impossible to represent one third as the sum of a finite list of powers of two. However, in a base-three numbering system one third can be represented precisely as $0.1_3$, because the one after the point represents a power of three: $3^{-1} = 1/3$.
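The sketch below generates the digits of one third after the point in base 10, base 2, and base 3. It uses exact fractions from Python's standard `fractions` module so that rounding does not hide the pattern; the function name `fractional_digits` is made up for the example.

```python
from fractions import Fraction


def fractional_digits(value, base, max_digits):
    """Digits after the point of a fraction 0 <= value < 1 in the given base.

    Returns (digits, terminated), where terminated is True if the expansion
    ended exactly within max_digits digits.
    """
    digits = []
    for _ in range(max_digits):
        if value == 0:
            break
        value *= base
        digit = int(value)   # the integer part is the next digit
        digits.append(digit)
        value -= digit       # keep only the fractional part
    return digits, value == 0


third = Fraction(1, 3)
print(fractional_digits(third, 10, 8))  # ([3, 3, 3, 3, 3, 3, 3, 3], False)
print(fractional_digits(third, 2, 8))   # ([0, 1, 0, 1, 0, 1, 0, 1], False)
print(fractional_digits(third, 3, 8))   # ([1], True)
```

In base 10 and base 2 the digits repeat without ever ending, while in base 3 the expansion stops after a single digit.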

Notes and references

- ↑ Analog at Wiktionary.

- ↑ Actually, it's more complicated than that because some devices, including some digital radios, intermix digital and analog. For example, a digital radio broadcast might start in digital form, i.e., as a stream of numbers, then be converted into and transmitted as radio waves, then received and converted back into digital form. Technically speaking the signal was modulated and demodulated. If you have a modem (modulator-demodulator) on your computer, it fulfills a similar function.

- ↑ Actually we need not only data, but a way to represent the algorithms within the computer as well. How computers store algorithm instructions is discussed in another chapter.

- ↑ Of course how a 0 or 1 is represented varies according to the device. For example, in a computer the common way to differentiate a 0 from a 1 is by electrical properties, such as using different voltage levels. In a fiber optic cable, the presence or absence of a light pulse can differentiate 0's from 1's. Optical storage devices can differentiate 0's and 1's by the presence or absence of small "dents" that affect the reflectivity of locations on the disk surface.

- ↑ [1]

- ↑ You might have seen modern art paintings where the entire work is a single color.

- ↑ See, for example, [2] for examples of the interplay between compression rate and image fidelity.

- ↑ For example, see [3] and the links there.

- ↑ This is just a rough estimate since there is much individual variation as well as other factors that affect this range.

- ↑ Hz, or Hertz, is a measurement of frequency. It appears in a variety of places in computer science, computer engineering, and related fields such as electrical engineering. For example, a computer monitor might have a refresh rate of 60Hz, meaning it is redrawn 60 times per second. It is also used in many other fields. As an example, in most modern day concert music, A above middle C is taken to be 440 Hz.

- ↑ See, for example, [4] for more information about how CDs work. In general, there is a wealth of web sites about audio files, formats, storage media, etc.

- ↑ Remember there is also an MPEG video compression standard. MPEG actually has a collection of standards: see Moving Picture Experts Group on Wikipedia.

- ↑ See, for example, binary prefixes.

- ↑ The difference between "round" numbers, such as a million, and powers of 2 is not as pronounced for smaller numbers of bytes as it is for larger. A kilobyte is $2^{10}$ bytes, which is only 2.4% more than a thousand. A megabyte is $2^{20}$ bytes, about 4.9% more than one million. A gigabyte is about 7.4% more bytes than a billion, and a terabyte is about 10.0% more bytes than a trillion. In most of the file size problems we do, we'll be interested in the approximate size, and being off by 2% or 5% or 10% won't matter. But of course there are real-world applications where it does matter, so when doing file size problems keep in mind we are doing approximations, not exact calculations.

Algorithms and Programs

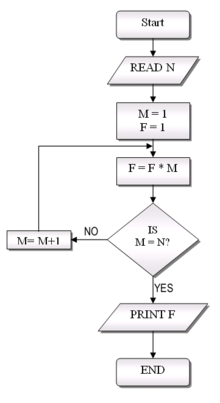

An algorithm can be defined as a set of steps used to solve a specific problem. For example, a cook may use a recipe when preparing a specific type of food. Similarly, in computer science, algorithms are the conceptual solutions used to create programs. It is important to distinguish an algorithm from a program. The implementation of an algorithm is known as a program.

Defining information processes

Computing is about information processes. Once information is represented concretely using different patterns of symbols, it can be processed to derive new information. We learned that computers use the binary system internally to represent everything as sequences of bits, that is, zeros and ones. Chapter 1 of the Blown to Bits book talks about the digital explosion of bits as the result of innovations in computing and technology, which enable us to turn information into bits and share them with unprecedented speed.

Creating information processes is the topic of this chapter. We will learn that information processes start with conceptual solutions to problems and then can be implemented (coded) in ways understandable to machines. The conceptual solutions are called algorithms and the executable implementations are called programs.

What is an algorithm?

Algorithm is a rather fancy name for a simple idea: a step-by-step solution to a problem. Avi Wigderson once said that the algorithm is a common language for nature, humans, and computers. The idea has been around for a long time. You are already familiar with many algorithms, such as tying your shoes, making coffee, sending an email, and cooking a dish according to a recipe. Algorithms in computing are designed for computers to follow. Imagine we have built a machine that can perform the single digit addition procedure described in chapter one. Recall that the procedure performs the addition using a simple table lookup. If we give the machine two digits and ask it to perform the operation, it gives two digits back as the answer. Of course the numbers in the inputs and the output have to be represented (encoded) properly. Even though the machine doesn't understand addition, it should be able to perform the addition correctly. However, the machine will not perform the addition unless it is instructed to do so. The command (with input values) that signals the machine to perform an addition is called an instruction. It is not hard to imagine using the addition procedure to create other, more complex procedures that can perform more impressive activities. Before we can create such procedures we must identify a problem and find a conceptual solution to it. Oftentimes the conceptual solution is one that can be carried out manually by a person. This conceptual solution is an algorithm.
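As a rough sketch of the imagined machine, the Python below adds two single digits purely by looking the answer up in a table. The names `ADDITION_TABLE` and `add_digits` are hypothetical, and Python's own `+` is used only as a shortcut to fill in the table once; the "machine" itself never does arithmetic.

```python
# The lookup table: for every pair of digits, the two-digit answer
# stored as (tens digit, ones digit). Built once, ahead of time.
ADDITION_TABLE = {
    (a, b): divmod(a + b, 10)
    for a in range(10)
    for b in range(10)
}


def add_digits(a, b):
    """The 'instruction': return the stored answer for digits a and b."""
    return ADDITION_TABLE[(a, b)]


print(add_digits(7, 8))     # (1, 5), meaning 15
print(add_digits(2, 3))     # (0, 5), meaning 05
print(len(ADDITION_TABLE))  # 100 entries, one per pair of digits
```

Calling `add_digits(7, 8)` plays the role of an instruction with its input values: nothing happens until the call is made, and the answer comes back as two digits.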

Why study algorithms?

Algorithms are a centerpiece of the computer science discipline. As we discussed in chapter one, computing can be done blindly or purely mechanically by a simple device. The intelligence of any computation (information process) lies in the algorithm that defines it.

For an algorithm to be useful, it must be correct (the steps must be logical and specific enough for machines to carry out) and efficient (it must finish in a reasonable amount of time). The correctness and efficiency of algorithms are two key issues in the study of algorithms.