Crowdsourcing/Two approaches to complex tasks

There is something fundamentally appealing about the notion that out of millions of heads can come information … larger than the sum of its parts. Imagine if the world’s people could write poetry or make music together; these are unbelievable ideas.—Mahzarin Banaji, quoted by the Quality Assurance Agency for Higher Education, 2010

Imagine you have a big, complicated task which can be broken down into many small steps. We will set in motion a machine – that is, a digital computer – to carry out the task. Here are two possible approaches.

Computer 1 works in sequence through its list of instructions. If it has to add two numbers, it grabs the two numbers from wherever they are stored, puts them through its adding machine, sends the answer to be stored and then clears its workspace to prepare for the next instruction. It only begins a step once the previous one is complete.

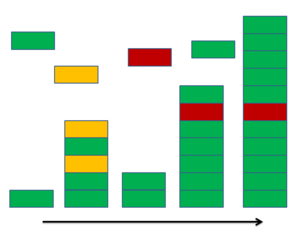

Computer 2 is completely different. Parts of Computer 2 are constantly breaking off and other things are constantly sticking to it. It thus exists in a state of near-equilibrium with its environment. However, it makes progress because the equilibrium is not exact. When part of its structure corresponds to part of the correct solution to its problem, Computer 2 becomes a little bit more stable. So over time it grows bigger and more complete, even though from minute to minute it is rapidly changing in a way that seems chaotic. Just under half the steps in the development of Computer 2 are reversals of previous steps.

Another difference with Computer 2 is that a constant proportion of its steps give incorrect answers. Asked for 1+1 it will most of the time say 2, but every now and again give 3 or 4. This is not as disastrous as it sounds, because the answers are so frequently erased and replaced, and correct answers are more stable and more likely to become part of the long-term structure. Still, there is no guarantee that every instruction is carried out correctly.
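The behaviour of Computer 2 can be imitated in a few lines of Python. This is only a toy sketch under stated assumptions: the function names and all of the probabilities (`p_error`, `p_drop_correct`, `p_drop_wrong`) are invented for illustration and are not taken from the text or from real chemistry. Each slot in the structure should hold the answer to 1+1. Pieces attach at random, occasionally with a wrong answer of 3 or 4, and pieces also break off at random, with wrong pieces breaking off more readily than correct ones.

```python
import random


def simulate_computer_2(target, steps, p_error=0.1,
                        p_drop_correct=0.2, p_drop_wrong=0.6, seed=0):
    """Grow a structure toward `target` by random attachment and detachment.

    All probabilities are illustrative assumptions, not measured values.
    """
    rng = random.Random(seed)
    structure = [None] * len(target)  # None marks an empty slot
    for _ in range(steps):
        i = rng.randrange(len(target))
        if structure[i] is None:
            # Attachment: usually the right piece, occasionally a wrong one.
            if rng.random() < p_error:
                structure[i] = target[i] + rng.choice([1, 2])
            else:
                structure[i] = target[i]
        else:
            # Detachment: wrong pieces are less stable than correct ones.
            is_correct = structure[i] == target[i]
            p_drop = p_drop_correct if is_correct else p_drop_wrong
            if rng.random() < p_drop:
                structure[i] = None
    return structure


def fraction_correct(structure, target):
    """Share of slots that currently hold the correct answer."""
    return sum(s == t for s, t in zip(structure, target)) / len(target)


if __name__ == "__main__":
    target = [2] * 200  # every slot should hold the answer to 1 + 1
    for steps in (0, 500, 5000, 20000):
        result = simulate_computer_2(target, steps)
        print(steps, fraction_correct(result, target))
```

No single step is guaranteed to be right, and many steps undo earlier ones, yet under these assumptions the share of correct slots tends to rise and then hover at a high level, because correct pieces stay attached for longer than wrong ones.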

Here is another difference: Computer 2 is more energy-efficient by a factor of a hundred or a thousand, according to the physicist Richard Feynman. What’s more, these two machines are things we encounter all the time. Computer 1 is a microprocessor of the type we have in our computers, our phones, our cars, and ever more everyday objects. Computer 2 is DNA.

The long-term sustainability of DNA is not in question. Microprocessors had to be brought into existence and need constant external power because of their relative inefficiency. By contrast, DNA just happened when certain molecules came together. DNA does not need to be plugged into the wall. Given enough time and the opportunity to make lots of mistakes, DNA has made things that seem highly designed for their environment, all without any kind of forward planning.

The DNA approach may be unacceptable when guarantees of reliability and quality are paramount. An astronaut getting ready for launch won’t be happy to be told that 90% of the rocket’s components are definitely working properly. Either everything has been checked and tested, or the rocket cannot be considered safe. So we would not use the DNA approach in building a rocket. Then again, not every task is like this. The different articles in an encyclopedia do not depend on each other in the same way as the parts of a rocket: mistakes in the art history articles do not ruin the usefulness of the articles about military history. The same holds for creating a multilingual dictionary, a database, or a museum catalogue: partial success gives partial utility, not zero utility.

We could say that the DNA approach works to create things that are organic. In practice, organic means:

- Modular: a failure of a part does not mean a failure of the whole

- Visible in quality: it is possible to evaluate the quality of a part, independently from the whole
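The contrast between the rocket and the encyclopedia can be put as a small calculation. The sketch below is illustrative only: the two function names and the idea of scoring utility between 0 and 1 are assumptions made for this example, not definitions from the text.

```python
def all_or_nothing_utility(parts_ok):
    """Rocket-like task: useful only if every part works."""
    if not parts_ok:
        raise ValueError("need at least one part")
    return 1.0 if all(parts_ok) else 0.0


def modular_utility(parts_ok):
    """Encyclopedia-like task: each working part is useful on its own."""
    if not parts_ok:
        raise ValueError("need at least one part")
    return sum(parts_ok) / len(parts_ok)


# Nine parts in ten are fine, one is faulty.
parts = [True] * 9 + [False]
rocket_score = all_or_nothing_utility(parts)
encyclopedia_score = modular_utility(parts)
```

With one faulty part in ten, the all-or-nothing score drops to zero while the modular score falls only in proportion to the damage, which is why the DNA approach suits the second kind of task and not the first.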

Open up an encyclopedia, a database or educational materials to the public (the “crowd”) for editing, and people may contribute, whether to demonstrate a skill, to promote altruistic goals, to educate themselves, or for other reasons. The same openness will also invite vandalism, hoaxes and other misbehaviour.

What makes crowdsourcing worth it is the net change over time. In a truly open system, it is not feasible to prevent vandalism entirely, but it is possible to structure the system so that negative contributions are outweighed by improvements. Achieving this is the challenge of successful crowdsourcing.

Summing up

It is not the best way to do everything, but crowdsourcing offers huge efficiency gains for certain kinds of large, complex tasks. Managing it requires a different way of thinking that accepts unpredictability, imperfection and diminished control. Crowdsourced effort is hard to control, but the absence of central control gives it its efficiency and strength.
