Concepts of Computer Graphics/Printable version


The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/Concepts_of_Computer_Graphics

Introduction

This Wikibook is concerned with explaining the concepts of computer graphics to a non-technical audience. Most books on computer graphics are written from the perspective of a programmer who is attempting to implement some system, be it a computer game, a ray-tracer, or an animation system.

In contrast, this Wikibook seeks to explain the concepts of computer graphics to someone who has no intention of implementing anything and only a basic level of math knowledge (and patience). Such a reader might be an artist who seeks to better understand the systems he uses to produce his art or video game content, someone who is attempting to get started in computer graphics but has no previous experience, or perhaps just a curious person who would like to know more about the graphics that increasingly make up the media we experience.

Since we are starting at the very beginning, our first step will be to define the problem that the techniques of computer graphics attempt to solve. So to begin our discussion, we shall make a first (clumsy) attempt at explaining the problem by saying that the main problem in computer graphics is how to create a desired image.

Given the number of different reasons people want to create images, a statement that encompasses all of computer graphics must necessarily be vague. In order to give a better sense of what we are trying to understand, we'll discuss some specific problems that computer graphics attempts to solve.

One of the most common uses for computer graphics is the creation of an image that looks like a photograph from real life, but portrays something we could not actually take a picture of. For example, we might want to create an animation for a movie that portrays an alien creature or location, or we might want to create an architectural rendering to get an idea of what the final building will look like. We'll call this type of computer graphics photo-realistic. Typically there is a significant amount of time available for creating these images, and so the techniques often attempt to capture the properties of lights and surfaces as accurately as possible, at the expense of time needed to compute the image.

Another common use for computer graphics is the creation of images very quickly so that they can be used in an interactive animation; the most familiar application of these algorithms is computer games. As a user controls their character, we want to create a series of images very quickly (ideally 30 per second or more) that respond to their inputs. We'll refer to this type of computer graphics as real-time, or interactive. We might want these images to look as realistic as possible, but the more time we can devote to computation, the better the results we can create. Thus, real-time computer graphics is usually concerned with techniques for creating the best-looking picture possible in the very short amount of time available between frames.

There are many other applications for computer graphics as well. We might want to automate, for convenience, the creation of a complex picture that we could otherwise make by hand, or we might want to create an image that is abstract or random in nature. In fact, many applications of computer graphics, such as medical imaging and visualization systems, fall somewhere between the two extremes presented above. However, for an introductory text it will suffice to present these two main approaches, whose techniques are mixed and matched in practice to achieve the desired results.

In the text that follows, we'll begin by attempting to understand the concept of pixels and how computers represent color, which together make up the output of computer graphics algorithms. After that, we'll consider how we represent the input data that computer graphics programs use: the 3D objects that get rendered. With these basics out of the way, we'll then delve into the techniques of photo-realistic and real-time rendering.

Output Space/Vectors

In two-dimensional graphics, the domain of graphic design, images and shapes are mainly represented in one of two ways: as vectors or as pixels. Think of vectors as outlines. Imagine a shape, such as that of a bottle. If you were to create an icon that looked like a simplified, one-color representation of the shape of a bottle, you would do so by drawing its outline, the outer edge of the bottle as seen from where you are looking at it. To draw it with vectors, you place points around that edge. Connecting the points with straight lines alone produces a jagged form that looks a bit like a paper cut-out made with scissors.

To create curved lines without needing an enormous number of points and lines, "off-curve points" are used: they create a tension on the line, pulling it in their direction for part of the line's trajectory. The basic points that always sit on the line are, in contrast, called "on-curve points". The most common kind of vector curve is the cubic Bézier curve, which can be recognised by the off-curve points (up to two, often drawn as "handles") that come out of each on-curve point. "Cubic" refers to the degree of the polynomial that describes each curved segment: a cubic segment is defined by four points, its two on-curve end points and the two off-curve points between them.
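To make the four-point structure concrete, here is a minimal Python sketch that evaluates one cubic Bézier segment using the standard Bernstein-polynomial form. The function name `cubic_bezier` and the sample coordinates are illustrative assumptions, not part of any particular drawing program.

```python
from typing import Tuple

Point = Tuple[float, float]

def cubic_bezier(p0: Point, c1: Point, c2: Point, p3: Point, t: float) -> Point:
    """Point on a cubic Bézier segment at parameter t in [0, 1].

    p0 and p3 are the on-curve end points; c1 and c2 are the off-curve
    control points that pull the curve towards themselves.
    """
    u = 1.0 - t
    # Degree-3 Bernstein weights; for any t they sum to 1.
    w0 = u * u * u
    w1 = 3 * u * u * t
    w2 = 3 * u * t * t
    w3 = t * t * t
    x = w0 * p0[0] + w1 * c1[0] + w2 * c2[0] + w3 * p3[0]
    y = w0 * p0[1] + w1 * c1[1] + w2 * c2[1] + w3 * p3[1]
    return (x, y)

# An arch from (0, 0) to (100, 0), pulled upwards by two off-curve points.
samples = [cubic_bezier((0, 0), (0, 50), (100, 50), (100, 0), i / 4) for i in range(5)]
print(samples[2])  # (50.0, 37.5): the top of the arch
```

Note that the curve passes through the on-curve points at t = 0 and t = 1 but never reaches the off-curve points; they only pull on it. Drawing programs typically sample many values of t, or subdivide the segment, to turn the smooth path into something a screen can show.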

A vector curve is often called a "path": it starts at one point and ends at another, and the line travels along the route in between.

Vector shapes can be geometric primitives, such as circles, squares or triangles, or complex shapes built up from curve points and corner points.

Such a line can be scaled infinitely, since a curve remains a mathematically exact curve at any size. But on its way from the mathematical innards of a computing device (a laptop, smartphone, gaming console or other) to a form we can see, every digital curve must be broken down into squares (or, in three dimensions, cubes). On screens, a curve is represented by the square pixels that make up the screen. In print, a similar process happens, but the dots are usually so fine that we cannot see them, as they are lost in the grain of the paper.
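The conversion from a mathematical shape to squares can be sketched in a few lines of Python. This hypothetical `rasterize_circle` function assumes the simplest possible rule: a pixel is filled if the centre of its square falls inside the circle. Real renderers often also shade the edge pixels partially (anti-aliasing), which is not shown here.

```python
def rasterize_circle(cx: float, cy: float, radius: float,
                     width: int, height: int) -> list[list[int]]:
    """Return a height x width grid of 0/1 pixels, with 1 wherever the
    centre of the pixel's square lies inside the circle."""
    grid = []
    for y in range(height):
        row = []
        for x in range(width):
            # Sample the exact mathematical shape at the centre of each square.
            dx = (x + 0.5) - cx
            dy = (y + 0.5) - cy
            row.append(1 if dx * dx + dy * dy <= radius * radius else 0)
        grid.append(row)
    return grid

for row in rasterize_circle(4, 4, 3, 8, 8):
    print("".join("#" if pixel else "." for pixel in row))
```

Calling it again with a larger grid and proportionally larger centre and radius redraws the same circle at a higher resolution. That is what a vector description allows and a finished bitmap does not: the bitmap only remembers the squares, not the circle.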

A pixel is one square in a mosaic, representing a single color or shade. The larger mosaic image is called a bitmap, literally a map of bits. The simplest form of bitmap image, an uncompressed two-color black-and-white mosaic, can be transmitted as a series of zeroes and ones, where the only additional information needed is the length of each row of pixels. If you shrink a large image, displayed on the screen as a mosaic of 2000 by 2000 squares, down to 3 by 3 squares, you get nine squares of color. Everything else is lost, and if you scale it up again, you just have the same nine squares, only larger.
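The claim that a black-and-white bitmap needs nothing but its bits and its row length can be checked with a short Python sketch. The helper names are hypothetical, and for readability the bits are kept in a text string; real file formats pack eight pixels into each byte.

```python
def encode_bitmap(rows: list[list[int]]) -> tuple[int, str]:
    """Flatten a black-and-white mosaic (1 = black, 0 = white) into a
    string of bits plus the row length needed to rebuild it."""
    width = len(rows[0])
    bits = "".join(str(pixel) for row in rows for pixel in row)
    return width, bits

def decode_bitmap(width: int, bits: str) -> list[list[int]]:
    """Cut the bit string back into rows of `width` pixels."""
    return [[int(b) for b in bits[i:i + width]] for i in range(0, len(bits), width)]

arrow = [
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [1, 0, 1, 0, 1],
    [0, 0, 1, 0, 0],
]
width, bits = encode_bitmap(arrow)
print(width, bits)  # 5 00100011101010100100
assert decode_bitmap(width, bits) == arrow
```

Without the width, the same twenty bits could just as well be read as four rows of five, five rows of four, or two rows of ten, so that single extra number is what turns the stream back into a picture.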

Graphic designers mostly talk about vectors when dealing with artwork, in particular logos. Vectors can be scaled infinitely, while a pixel representation is a fixed mosaic. Imagery that is reused in various sizes and locations, like icons, logos, and letter shapes (usually stored in font files), is best transmitted as vectors, since scaling up (or down) will not cause the problems it causes with bitmap imagery. A small bitmap logo taken from the header of a website will not look good printed on a banner the size of a house.

Fonts are almost exclusively vector-based, but with few exceptions they are converted into bitmaps before they are viewed. The most common exception is the plotter, a drawing or cutting device most often used to cut out the letters for road signs and other signage. A plotter can follow a vector path directly, including diagonally, instead of building the image out of rows as printers do.

Most graphic output devices, screens and printers, work much the same way typewriters did: lines are drawn from left to right, one after another down the page or screen. Old CRT screens literally scanned lines this way down the screen dozens of times a second, fast enough for the eye to see pictures rather than a single, amazingly fast dot and its trail of light.

In three-dimensional graphics, these concepts mostly still hold, but are expanded upon. 3D printing often involves building shapes out of voxels, which are the three-dimensional counterpart to pixels, as cubes are to squares. A basic 3D printer simply adds layer upon layer of material to build up an object, scanning lines the same way paper printers do, but building upwards into the air.

The 3D graphics commonly seen in computer games and visual effects are mathematical shapes much like vectors, but there are more ways of building such shapes. While the (mostly) hand-placed curve points of the Bézier curves in a logo or an illustration serve every purpose of a two-dimensional image, three-dimensional shapes range from simple to very complex, so many different methods exist to create them, and the major 3D authoring programs offer a variety of these input methods.

In the 1970s, vector-based computer graphics was commonly used to display lines, text, and symbols on CRT terminals. Even though vector terminals have mostly fallen out of use, the concepts of vector-based displays are present in most computer graphics APIs and formats, e.g., DirectX, AutoCAD's DWG format, and PostScript. The idea is that the designer specifies the line segments to be drawn in the output space (the screen), which frees him or her to think in terms of fundamental geometric primitives and leaves the conversion of the vectors into a raster image to the API.

A further extension of this idea is to think about 3D parts in space and time, as well as their feature history and level of detail. A 3D part is oriented in space, and its corresponding 2D projection is calculated as a collection of 2D vectors. This leads to the fundamental idea of CAD/CAM (computer-aided design and computer-aided manufacturing). Common CAD/CAM interchange standards are IGES and STEP.

Output Space/Images

The first thing we'd like to understand is what a digital image "is." Since this work is primarily concerned with the generation of digital images (and computers being digital, we have no other alternative within the field of computer graphics), understanding what they are will be essential to understanding the intuition behind many of the algorithms that follow. Most of those algorithms approach the complexity of computer graphics by working backwards from the final form of the digital image as a way to reduce the scope of the problem.

When you look around the world, light from the world comes into your eyeballs and is focused on an area on the back of your eye which is covered with millions of little cells. These cells are sensitive to various aspects of light, like color and brightness, and react with the light so that a signal is sent to your brain about which cell saw what sort of light, and your brain creates a complete picture from all these constituent pieces of information.

Unsurprisingly, we attempt to capture images by a similar, though man-made, process. A film camera acts very much like an eye, in that light is taken in through the lens and focused on a surface covered with millions of similarly light-sensitive elements. However, these elements are chemically treated grains in the film rather than retinal cells. When light comes into contact with the chemicals on the film, it causes a reaction that changes their structure, and in so doing records information about what kind of light came through. The film can later be processed to create a print that replicates those colors using the information on the film.

A digital camera works in very much the same way, except that instead of a chemically treated strip of film behind the lens, it uses an array of light-sensitive semiconductor elements. These elements convert the light they interact with into numbers that describe that light. Thus, we can consider a digital image to be a set of data that provides information about the light used to create a particular image.

In fact, since a digital image is really just a collection of numbers, the concept exists outside the realm of photography as well; in theory, any stream of numbers, properly formatted and in digital form, could be considered a digital image. The key technique of computer graphics is that of creating such a dataset deliberately, element by element, without the use of any photographic equipment or any actual light to base the image on.
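As an illustration of creating an image purely from numbers, the sketch below computes every pixel from its position alone and writes the result in the plain-text PPM format (part of the Netpbm family, which many image viewers can open). The gradient rule and the function names are assumptions made for this example.

```python
def make_gradient(width: int, height: int) -> list[list[tuple[int, int, int]]]:
    """Build an image from arithmetic alone: red grows from left to right,
    blue grows from top to bottom. Assumes width and height are at least 2."""
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            red = round(255 * x / (width - 1))
            blue = round(255 * y / (height - 1))
            row.append((red, 0, blue))
        image.append(row)
    return image

def to_ppm(image) -> str:
    """Serialise the pixel grid as a plain-text (P3) PPM image."""
    height, width = len(image), len(image[0])
    lines = ["P3", f"{width} {height}", "255"]  # magic number, size, max value
    for row in image:
        lines.append(" ".join(f"{r} {g} {b}" for r, g, b in row))
    return "\n".join(lines) + "\n"

ppm = to_ppm(make_gradient(4, 3))
print(ppm)
```

Saving the returned string to a file whose name ends in `.ppm` should give a tiny image that is black in the top-left corner, red towards the right and blue towards the bottom; no camera or light was involved at any point.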

Output Space/Colors

Color, one of the most natural elements of our daily existence, can be hard to describe. We recognize and name certain broad categories of color, like "blue" and "yellow," but it is very difficult to formalize the notion of color so that you could observe, say, a blouse of a given color and then describe it to a friend so that they would have exactly the same picture in mind.

Nonetheless, for computer graphics to work, we must be able to come up with just such a description. In fact, we must go further than that and create an encoding for colors so that we can represent them in digital form as numbers. Fortunately, it turns out that light lends itself to such an encoding. A full treatment of color, including the properties of light that it represents, the way the eye perceives it, and the range of different representations for it is not important at the moment (see the useful links below for more detailed information).

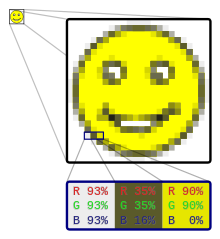

For our purposes, it will be adequate to note that most colors we need to deal with can be represented as a combination of red, green, and blue intensities. That is, we will represent a color as three values: the intensity of red light in the color, the intensity of green light, and the intensity of blue light. We call each of these component colors a channel, and we call this system of color representation the RGB color space (or just RGB).

If we devote one byte to each color channel, then we can represent 256 different levels of intensity for each component color. In this scheme, when a channel is at its maximum value (255), it contributes the brightest, most intense amount of that color we can represent, and when it is at its minimum (0), it contributes nothing to the color.

Colors other than the component colors themselves are represented by the right combination of component colors. For example, we can represent purple by setting the red and blue components to high levels while leaving the green component low or at zero.

A very important thing to understand in computer graphics is that when all three color components are equal, the resulting color is a shade of gray. Specifically, when all three components are at the maximum level (255, 255, 255), we perceive the combined color as white, and when all three are at the minimum level (0, 0, 0), we perceive it as black.
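A color in this scheme is just three small numbers. The Python sketch below stores one as a named triple; the class name `RGB` and the particular value chosen for purple are illustrative assumptions. It also shows the common `#RRGGBB` hexadecimal notation, which writes each one-byte channel as two hexadecimal digits.

```python
from typing import NamedTuple

class RGB(NamedTuple):
    """One color as three 8-bit channel intensities (0-255)."""
    red: int
    green: int
    blue: int

    def is_gray(self) -> bool:
        # Equal channels always give a neutral shade, from black to white.
        return self.red == self.green == self.blue

    def to_hex(self) -> str:
        # The familiar #RRGGBB notation: two hex digits per one-byte channel.
        return f"#{self.red:02X}{self.green:02X}{self.blue:02X}"

BLACK = RGB(0, 0, 0)
WHITE = RGB(255, 255, 255)
PURPLE = RGB(200, 0, 200)       # high red and blue, no green
MID_GRAY = RGB(128, 128, 128)

print(PURPLE.to_hex())          # #C800C8
print(MID_GRAY.is_gray())       # True
print(PURPLE.is_gray())         # False
```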

It is also worth noting that our choice of one byte per color channel is fairly arbitrary. Other systems of representation use fewer or more possible values per color channel. Three color channels of 256 possible values each give about 16.7 million colors (256 × 256 × 256 = 16,777,216), but even this is not always enough. For example, if we have to represent a smooth transition from black to bright red (due to shadow, let's say), we would find that having only 256 discrete levels between the two results in visible "bands" of color, if we are really concerned about image quality. Thus, computer graphics professionals in film production often allow tens of thousands of possible values for each of the three color channels. At the opposite end of the spectrum, to allow older computers to operate more efficiently, programmers would sometimes allow only 32 possible values for each of the red, green, and blue components. Even with only about 32,000 colors to choose from (32 × 32 × 32 = 32,768), surprisingly realistic images could be represented.
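The banding problem comes down to simple counting: a smooth ramp can never show more distinct shades than the channel has levels. The sketch below assumes a hypothetical black-to-red ramp 1920 pixels wide and plain rounding to the nearest level, and counts how many distinct values survive.

```python
def quantize(value: float, levels: int) -> int:
    """Map an intensity in [0.0, 1.0] to the nearest of `levels` steps."""
    return round(value * (levels - 1))

def distinct_steps(width: int, levels: int) -> int:
    """Count the different red values a smooth black-to-red ramp
    `width` pixels wide ends up with after quantisation."""
    return len({quantize(x / (width - 1), levels) for x in range(width)})

print(distinct_steps(1920, 256))    # 256: stripes roughly 7.5 pixels wide
print(distinct_steps(1920, 32))     # 32: stripes roughly 60 pixels wide
print(distinct_steps(1920, 65536))  # 1920: every pixel gets its own value
print(32 ** 3, 256 ** 3)            # 32768 16777216
```

With 32 levels, each shade has to cover a stripe dozens of pixels wide, which is why such ramps show visible bands; with tens of thousands of levels per channel, every pixel across the screen can receive its own value.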

Useful links:


Output Space/Representing The Digital Image

Now that we have covered the basics of how we represent images and colors, we can combine that information into a more detailed picture of how a digital image is represented. We noted before that a digital image is just an array of color values, and we now know how colors are represented as numbers in the RGB color space.

As you might expect, the digital representation of an image is simply an array of these RGB triples, arranged as a rectangular grid. If we are dealing with an image we wish to be 800 by 600 pixels, then we would have an array with 800 RGB color values across the width of the image and 600 RGB color values down its height.

It is convenient to refer to any given pixel by its (x, y) coordinates. In this coordinate system, (0, 0) is the upper-left-most pixel and (width - 1, height - 1) is the lower-right-most pixel, where width and height are the width and height of the image in pixels; the largest coordinates are one less than the size because counting starts at 0. Using the above example, width and height would be 800 and 600 respectively, so the lower-right pixel is (799, 599). Thus, as you move horizontally across the image the x values increase, while the y values increase as you move down the image, which can be counterintuitive at first.

This is a fairly arbitrary organization (in fact, in some image formats, (0, 0) is the lower-left-most pixel, as you might expect from the familiar Cartesian coordinate system), but it is the one we will assume throughout the book, and it is the most widely used organization in practice. There is a practical benefit to this organization from the perspective of a computer hardware designer, though the computer graphics programmer rarely sees it: a traditional CRT monitor displays an image by sweeping a beam left to right, top to bottom, across all the pixels on the screen. Thus, as the image is being sent to the monitor, it makes the most sense to send first the pixels that the monitor draws first.

It is also worth noting that it is easy to convert from one representation to another. For example, if you wish to think of the screen as having the familiar Cartesian coordinate system, you could simply write your code the way you like and then reverse the order of the rows later on, before the image is displayed on the screen. Another alternative would be to replace each y coordinate you wish to access by (height - 1 - y). Thus, when you try to access what you think of as the bottom row of the screen (y = 0), you will instead access y = 600 - 1 - 0 = 599, which is the bottom row of the screen as the computer monitor thinks of it. In the end, the pixels have to go in one direction or another, and this is a familiar and commonly used format.
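Both conversions fit in a line or two of Python. Note the `- 1`: because rows are numbered from 0, the last row of a 600-pixel-tall image is row 599. The function names are hypothetical, and the image is assumed to be stored as a list of rows.

```python
def flip_y(y: int, height: int) -> int:
    """Convert a row index between 'y grows downwards' (row 0 at the top)
    and 'y grows upwards' (row 0 at the bottom). It is its own inverse."""
    return height - 1 - y

def flip_rows(image: list) -> list:
    """Reverse the order of whole rows just before display."""
    return image[::-1]

HEIGHT = 600
print(flip_y(0, HEIGHT))    # 599: the bottom row, as the monitor numbers it
print(flip_y(599, HEIGHT))  # 0: the top row
```

Applying `flip_y` twice returns the original row, which is why the same function serves for converting in either direction.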

In the computer's memory, the image is laid out in this very direct fashion, as one contiguous block of memory. Each pixel is assigned enough space for the three numbers representing its color components, and the first pixel in the image, (0, 0), is the first pixel in the memory block. At the next higher memory address is the next pixel in the row, (1, 0), and so forth; after the last pixel of one row comes the first pixel of the next row. Eventually, the last pixel in the memory block is the one at position (width - 1, height - 1).

As an example, let us say that we have an image in memory which is 800 by 600 pixels, the first pixel of the image is at memory address 100, and each pixel (one RGB triple) takes up r bytes. Then the r bytes at address 100 are the pixel at (0, 0), the r bytes at address 100+r are the pixel at (1, 0), and the r bytes at address 100+2*r are the pixel at (2, 0). If we wish to access the pixel data for the pixel at (5, 5), we need to access the r bytes at 100+5*width*r+5*r. That is, we need to add to the base address the number of bytes in the first 5 rows (5*width*r), plus the number of bytes from the start of the row to that pixel (5*r). In general, we can access the pixel (x, y) in memory by accessing baseAddress+y*width*r+x*r. This formula is not important to remember, but it is important to understand that the image data in memory is not two-dimensional: it is one-dimensional, and we only give the data a two-dimensional organization by how we interpret it.
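The address arithmetic can be written as a small Python function and checked against the 800 by 600 example with base address 100. The sketch assumes 3 bytes per pixel (one byte per channel); the function name and the `bytearray` demonstration at the end are illustrative choices.

```python
def pixel_address(base_address: int, x: int, y: int,
                  width: int, bytes_per_pixel: int) -> int:
    """Address of pixel (x, y) in an image stored row by row as one block."""
    # Skip y complete rows, then x pixels within the current row.
    return base_address + y * width * bytes_per_pixel + x * bytes_per_pixel

print(pixel_address(100, 0, 0, 800, 3))  # 100
print(pixel_address(100, 2, 0, 800, 3))  # 106
print(pixel_address(100, 5, 5, 800, 3))  # 12115

# The same idea with real memory: a tiny 4 x 2 image in one flat buffer.
width, height, r = 4, 2, 3
buffer = bytearray(width * height * r)          # one-dimensional, all zeros (black)
offset = pixel_address(0, 1, 1, width, r)       # pixel (1, 1) starts at byte 15
buffer[offset:offset + r] = bytes((255, 0, 0))  # paint that pixel red
```

The buffer itself has no notion of rows or columns; only the formula used to compute `offset` gives it a two-dimensional meaning.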

Input Space

In the previous section, we described how we represent digital images in computers. With this understanding, we now know what final result we are trying to create. There are many interesting fields of computer graphics that generate digital images, but for the rest of the book we are going to focus on what we'll call the rendering problem: how do we take a digital representation of geometry and create a drawing of it in a digital image? Later chapters of the book deal with the cases where we want to create a very photorealistic image or a very quick rendering for interactive applications. For now, we are concerned with understanding how we represent and manipulate the geometry that we will try to render.

Input Space/Measuring Space

We immediately face a problem very similar to our problem when we were trying to understand how to represent color as numbers in digital form. Specifically, how do we represent a geometric object in digital form?

Most of us are familiar with the Cartesian coordinate system we are taught in school. The Cartesian coordinate system lets us denote the position of objects relative to two axes at right angles to each other. We then locate a point by its position along each of the two axes, where we consider the place where the axes cross to be the "zero" location. Because these axes are abstract ideas that extend infinitely in both directions, it is as if we have created a universal measuring stick for space (specifically, a two-dimensional plane in this case). It does not matter where we set the axes or in which direction they point (as long as they are at right angles to each other); we can still measure distances along each axis, and in so doing locate any given point.

Thus, if we are given a collection of points, our choice of where to place the Cartesian coordinate system axes is entirely unimportant: the relationships between the points, such as the distances between them, will be maintained.
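This can be checked numerically. The sketch below measures two points against the usual axes, then against axes that are both moved and rotated (but still at right angles), and compares the distance between the points in each case. The helper name `change_axes` and the sample numbers are assumptions for illustration.

```python
import math

def change_axes(point, origin, angle):
    """Coordinates of `point` measured against axes whose zero is at `origin`
    and which are rotated by `angle` radians, still at right angles."""
    dx, dy = point[0] - origin[0], point[1] - origin[1]
    c, s = math.cos(angle), math.sin(angle)
    # Project the offset onto the rotated x axis (c, s) and y axis (-s, c).
    return (dx * c + dy * s, -dx * s + dy * c)

a, b = (1.0, 2.0), (4.0, 6.0)
print(math.dist(a, b))  # 5.0

a2 = change_axes(a, origin=(10.0, -3.0), angle=0.7)
b2 = change_axes(b, origin=(10.0, -3.0), angle=0.7)
print(a2, b2)            # quite different coordinates...
print(math.dist(a2, b2)) # ...but the same distance, up to floating-point rounding
```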

Photorealism/What Your Eyes See

Simply put, your eyes see light. That is, the only information your eyes receive about the world in order to construct an image in your head is the photons that have bounced off of objects near you and into your eyes. So constructing a photo-realistic image is (almost) all about simulating lighting. When there is no light, there are no photons to interact with your eyes, and so obviously, you don't see anything.

In everyday life, there are light sources all around us to provide light, from lamps in our houses or in the streets, to the sun during the day and the moon (or perhaps just the stars) at night. Every light source has characteristics that we are familiar with; for example, the sun is much brighter than most other light sources we experience, and has light of a particular color (white), and occupies a position in the sky at various parts of the day, and so forth.

A light source emits photons (and our eyes receive them) at an astronomical rate; the number is so huge that it is difficult to relate it to numbers in our everyday experience. Each photon a light source emits travels in a straight line until it interacts with some matter. (As a matter of fact, the photons that enter your eyes when you look up at the stars at night left those stars years, centuries, or even thousands of years before, and have traveled across space in that time to reach your eye.) When a photon does interact with matter, several things might happen.

In the simplest case, a photon strikes matter and reflects off. In this case, the photon will typically carry information about the color of the object it struck and continue on in the reflected direction. The specifics of the color can depend on the circumstance. For example, if a white light reflects off a brown table, the photons that reach your eye will carry information about the brown color of the table, not the white light. If a red light reflects off of a white table, it will probably carry some information about the original color of the light and reach your eye a shade of red.
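Many renderers use a simple model for this: the reflected color is the light's color multiplied, channel by channel, by the fraction of each channel that the surface reflects. The sketch below assumes that simplified model and 0-255 channel values; the specific RGB value used for "brown" is an arbitrary choice.

```python
def reflect_color(light_rgb, surface_rgb):
    """Simplified reflection: each channel of the light is scaled by how much
    of that channel the surface reflects (both given as 0-255 values)."""
    return tuple(round(l * s / 255) for l, s in zip(light_rgb, surface_rgb))

WHITE_LIGHT = (255, 255, 255)
RED_LIGHT = (255, 0, 0)
BROWN_TABLE = (140, 90, 40)
WHITE_TABLE = (255, 255, 255)

print(reflect_color(WHITE_LIGHT, BROWN_TABLE))  # (140, 90, 40): we see brown
print(reflect_color(RED_LIGHT, WHITE_TABLE))    # (255, 0, 0): we see red
print(reflect_color(RED_LIGHT, BROWN_TABLE))    # (140, 0, 0): a dark red
```

The last line shows why colored lighting can change how an object looks: a surface can only reflect the channels that actually reach it.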

However, the photon does not always reflect off. What a photon does when it interacts with matter depends on a great many optical properties of the matter. For example, surfaces can have varying degrees of reflectiveness, specularity, opacity, and surface smoothness (amongst others). Photons that strike very reflective surfaces are much more likely to reflect off in a predictable direction (the direction you would expect from looking at a mirror at an angle), but if they strike a non-reflective surface, they're much more likely to scatter in various directions, or simply be absorbed, so that the photon does not continue on any further from the surface. Similarly, a photon that strikes a particularly transparent surface might not bounce off, but instead continue onwards, potentially having been diverted to a slightly different course (this change in original heading is called refraction, and it is manifested, for example, by the distortion you see when you stick a pen into a glass of water).
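For a perfectly reflective surface, the "mirror" direction has a standard formula: if d is the incoming direction and n is the unit-length surface normal (the direction the surface faces), the outgoing direction is d - 2(d·n)n. A minimal Python sketch, assuming directions are plain 3-tuples:

```python
def reflect(direction, normal):
    """Mirror `direction` about a surface with unit-length `normal`:
    r = d - 2 (d . n) n."""
    d_dot_n = sum(d * n for d, n in zip(direction, normal))
    return tuple(d - 2 * d_dot_n * n for d, n in zip(direction, normal))

# A photon travelling down and to the right hits a floor whose normal points up.
print(reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0)))  # (1.0, 1.0, 0.0)
```

Only the part of the motion perpendicular to the surface is reversed; the part along the surface carries on unchanged, which is the familiar "angle in equals angle out" behavior of a mirror.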

You might look at the room you are sitting in, with all its finely detailed surfaces, and many intricate objects, and numerous light sources, and imagine all of the photons zipping around the room, bouncing off of objects, through objects, or getting absorbed, or perhaps bouncing off of objects numerous times, all before finally reaching your eyes. The final picture your brain processes is constructed from a huge number of photons, all of which have taken a potentially complex path in their journey from the light source to your eyes.

The problem becomes apparent. We cannot hope, even with the futuristic computers of many years hence, to model all of these light interactions as individual photons leaving light sources and zipping around the scene before heading into our virtual camera lens. Instead, we must find more computationally tractable ways of approximating the situation we've described above. The most obvious technique for doing this is called raytracing.

Photorealism/The Idea Of Raytracing

As we saw in the previous chapter, it is impossible, practically speaking, to model the photons coming from light sources directly. There are simply too many photons necessary to generate all of the visual information we see, and most of the photons that leave a given light source probably do not even reach our eyes. When you add in the complexities of multiple light sources necessary to create a scene true to our experience, tracing each individual photon from the light source until it (potentially) reaches our virtual camera is simply not possible.

The approach we use instead is called raytracing. Raytracing is actually very simple conceptually, and makes our problem tractable. Here is the basic idea.

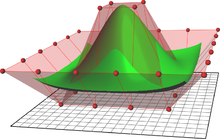

Our digital image is made up of a finite number of pixels. Since the pixels are the atomic element of the image, we are only concerned with what color each pixel should be. The idea of raytracing is to work backwards from the pixels of our virtual camera, going through each individual pixel and tracing an imaginary "ray" straight away from that pixel until we encounter an object (specifically, a particular point on that object).
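This first step, sending a ray out from each pixel and finding where it hits, can be sketched in Python. The sketch assumes a simple pinhole camera at the origin looking down the negative z axis, a 60-degree field of view, and a scene consisting of a single sphere; all of these, and the function names, are illustrative assumptions.

```python
import math

def primary_ray(px, py, width, height, fov_degrees=60.0):
    """Unit direction of the ray leaving a pinhole camera at the origin
    (looking down -z) through the centre of pixel (px, py); row 0 is the top."""
    aspect = width / height
    scale = math.tan(math.radians(fov_degrees) / 2)
    # Map the pixel centre onto an image plane one unit in front of the camera.
    x = (2 * (px + 0.5) / width - 1) * aspect * scale
    y = (1 - 2 * (py + 0.5) / height) * scale
    length = math.sqrt(x * x + y * y + 1)
    return (x / length, y / length, -1 / length)

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest point where it meets
    the sphere, or None if it misses (solves |o + t*d - c|^2 = radius^2)."""
    oc = [o - ce for o, ce in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    for t in (-b - math.sqrt(disc), -b + math.sqrt(disc)):
        if t > 0:  # only intersections in front of the camera count
            return t
    return None

# One ray per pixel of a tiny 16 x 8 image of a sphere 3 units away.
WIDTH, HEIGHT = 16, 8
for py in range(HEIGHT):
    row = ""
    for px in range(WIDTH):
        ray = primary_ray(px, py, WIDTH, HEIGHT)
        row += "#" if hit_sphere((0.0, 0.0, 0.0), ray, (0.0, 0.0, -3.0), 1.0) is not None else "."
    print(row)
```

Each `#` marks a pixel whose ray found the sphere. At this stage we only know where each ray landed, not what color the light there is; following the ray onwards from that point is what supplies the color.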

Once we find the intersection point in space that the ray hit, we reason that the light that would have hit this pixel contains all of the information about the light that was striking the object at this point, plus the effects of its interaction with that object. Thus, we can start a new ray at this location on the object and trace it backwards until we hit another object, and so on. Eventually, the ray may strike a light source (in practice, a ray can also leave the scene without hitting anything, so programs stop after a limited number of bounces). Once we have found this light source, we know the color of the light that left it, struck one object, bounced off, struck another, and so on all the way back to our camera's pixel. Thus, we now know what color to put in that pixel.
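The bouncing described here can be sketched as a loop. To keep it short, this Python sketch makes strong simplifying assumptions: the scene contains only spheres, every ordinary surface is a perfect tinted mirror (so each bounce has exactly one direction), light sources are spheres with an `emission` color, and the loop gives up after a hypothetical limit of `max_bounces`, or as soon as a ray leaves the scene without hitting anything. Production raytracers also handle rough surfaces, shadows, and many rays per pixel, none of which is shown here.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec = Tuple[float, float, float]

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

@dataclass
class Sphere:
    center: Vec
    radius: float
    color: Vec = (1.0, 1.0, 1.0)    # fraction of red, green, blue the surface reflects
    emission: Optional[Vec] = None  # set only for light sources

    def hit(self, origin: Vec, direction: Vec) -> Optional[float]:
        """Nearest positive distance to this sphere along a unit ray, or None."""
        oc = tuple(o - c for o, c in zip(origin, self.center))
        b = dot(direction, oc)
        disc = b * b - (dot(oc, oc) - self.radius ** 2)
        if disc < 0:
            return None
        for t in (-b - math.sqrt(disc), -b + math.sqrt(disc)):
            if t > 1e-6:  # ignore the point we are bouncing from
                return t
        return None

def trace(scene, origin: Vec, direction: Vec, max_bounces: int = 8) -> Vec:
    """Follow one ray backwards from the camera until it reaches a light."""
    throughput = (1.0, 1.0, 1.0)  # how much of each channel survives the bounces so far
    for _ in range(max_bounces):
        hits = [(s.hit(origin, direction), s) for s in scene]
        hits = [(t, s) for t, s in hits if t is not None]
        if not hits:
            return (0.0, 0.0, 0.0)  # the ray left the scene without finding light
        t, sphere = min(hits, key=lambda h: h[0])
        if sphere.emission is not None:
            # Found a light: its color, filtered by every surface on the way back.
            return tuple(a * e for a, e in zip(throughput, sphere.emission))
        point = tuple(o + t * d for o, d in zip(origin, direction))
        normal = tuple((p - c) / sphere.radius for p, c in zip(point, sphere.center))
        throughput = tuple(a * c for a, c in zip(throughput, sphere.color))
        # Perfect mirror bounce: r = d - 2 (d . n) n.
        d_n = dot(direction, normal)
        direction = tuple(d - 2 * d_n * n for d, n in zip(direction, normal))
        origin = point
    return (0.0, 0.0, 0.0)  # gave up after max_bounces bounces

scene = [
    Sphere(center=(0.0, 0.0, -3.0), radius=1.0, color=(0.9, 0.1, 0.1)),    # red mirror ball
    Sphere(center=(0.0, 0.0, 3.0), radius=1.0, emission=(1.0, 1.0, 1.0)),  # white light behind the camera
]
print(trace(scene, (0.0, 0.0, 0.0), (0.0, 0.0, -1.0)))  # (0.9, 0.1, 0.1)
print(trace(scene, (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)))   # (0.0, 0.0, 0.0)
```

The first ray hits the red mirror ball, bounces straight back past the camera, and reaches the white light, so the pixel ends up the ball's red. The second ray misses everything and the pixel stays black.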