Cognitive Science: An Introduction/Vision

Vision is the process of turning light into information for a cognitive system: an agent exploits light to learn about its surroundings. It is a very useful sense, and not just for human beings. Different kinds of eyes have independently evolved at least 40 times in the history of life on Earth.[1]

Photoreceptors

To do this, the agent needs something to detect light: photoreceptors. Even plants can react to light, as we can see in houseplants that grow toward the window. Sunflowers are even more dramatic, turning their flowers to face the sun over the course of the day. The way plants react to light is called phototropism. This system is very old, evolutionarily, and plants and humans use the same genes to detect light and dark.[2]

The Simplest Eye

The simplest "eyes" only detect brightness and darkness. You might think of this as one color. The simplest light-detection systems on Earth are probably those of green algae, which have organelles that can detect the presence of light and its intensity. These are called "eye-spots."[3]

Dichromats

Cats are dichromats, which means they have two different kinds of photoreceptors in their eyes.[4] In this respect they are like humans who have red-green colorblindness, a condition that affects about 8% of males and is due to a mutation on the X chromosome. Women usually have backup genes on their other X chromosome, so they are less affected by colorblindness.[5]

We can think of plants as being dichromats as well. They have receptors for blue light, and other receptors for red light. The blue light detectors are mainly used for phototropism. In only three hours you can measure a plant's reaction to even dim light, as was first demonstrated by Charles Darwin. The end of a plant's shoot detects light, and then sends a message down the midsection, changing the direction of the plant's growth.

Plants also have photoperiodism, which detects how much light has been taken in. This is useful for keeping track of what time of year it is. In a kind of primitive memory system, a plant keeps track of periods of darkness. The red light detectors even know what kind of red light is present, distinguishing the far-red light of dusk (the wavelengths of which are longer) from the bright red seen during the day.

Take irises, for example, which normally won't flower during a long night. If, in the middle of the night, you shine a bright red light on it, it will flower. But it won't if you quickly shine a far-red light on it right afterward. It remembers the last light it saw, and behaves appropriately.[6]
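The "remembers the last light it saw" behaviour described above can be pictured as a simple two-state switch. The following Python sketch is only an illustration under that assumption: the function name `will_flower` and the string labels are hypothetical, and a real plant's response depends on much more than the order of flashes.

```python
def will_flower(night_flashes):
    """Return True if an iris-like plant flowers after a long night.

    night_flashes: list of "red" / "far-red" strings, in the order the
    flashes were shone during the night.
    Assumption: only the LAST flash matters, and with no flash at all
    the long night prevents flowering.
    """
    state = "no-flower"  # default during a long, uninterrupted night
    for flash in night_flashes:
        if flash == "red":
            state = "flower"      # red light switches the signal on
        elif flash == "far-red":
            state = "no-flower"   # far-red light switches it back off
        else:
            raise ValueError(f"unknown flash colour: {flash}")
    return state == "flower"


print(will_flower([]))                  # long night, no flash
print(will_flower(["red"]))             # red flash in the night
print(will_flower(["red", "far-red"]))  # far-red right after the red
```

The point of the sketch is that the outcome depends on the final flash alone, not on how many flashes came before it.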

Trichromats

Humans are considered trichromats, meaning we have three different kinds of photoreceptors in our eyes. We can feel sunlight on our skin, but this is detecting only heat, not light. Bees are also trichromats, but their three kinds of photoreceptors respond to yellow, blue, and ultraviolet.[4]

Human trichromats discriminate about seven color bands in the visible spectrum, as seen in a rainbow. Those with red-green color blindness perceive about five. About 2% of human women are tetrachromats, and they can distinguish about 10 color bands.[7]

But humans also have two other special receptors that rarely get talked about. We detect shadow and light using receptors with rhodopsin, and we have a fifth light detector that uses cryptochrome to regulate our internal clocks.[8] Humans are nevertheless called trichromats because we use only three detectors for color. Cryptochrome captures some redundant information to be used for other purposes.

Higher-order Chromats

As we are seeing, different living things have varieties of light detectors. There is even a plant, called Arabidopsis, that has 11 different photoreceptors.[9]

The mantis shrimp has one of the most complex eyes ever discovered, with a full 16 different kinds of photoreceptors!

Vision in Non-Human Animals

As we have seen, vision takes on different forms in different creatures. This is important to keep in mind when we think about vision in general, abstracted away from human beings. Let's take the octopus, for example. It can change its colors rapidly in response to the environment. If it is sitting on a rock with a particular color pattern, its skin can change quickly to mimic that pattern for better camouflage. Octopuses do this by expanding and contracting colored cells in the skin. The strange thing about this is that octopuses, and, as far as we can tell, all cephalopods, are color blind!

More specifically, their eyes are colorblind. It turns out that the skin itself can detect colored light and automatically change color to match it--this happens even when the arm has been removed from the octopus. This means that the octopus skin has color vision but the eyes do not.[10]

Human Vision

Even the ancient Greeks argued over how vision works. Democritus thought that information went from objects to the eyes. But Plato had a different idea: that the human eye sent out power to scan objects. This was called extramission theory, and held for almost a thousand years![11]

Today we know that Democritus, with his intromission theory, was correct, or at least more correct than Plato. Light enters the eye and is focused by the lens. The focused light falls on the retina, a sheet of cells about the size of a passport photo. You have one retina in each eye, and each has about 131 million photoreceptor cells (that's 125 million rods and six million cones). If we think of the capacity of information representation in the retina as though it were a digital camera, we might say that it has the resolution of a 130 megapixel camera.[12]

Compared to other senses, vision is relatively slow. This is because the information goes through several layers of neurons, which modify and amplify the signal. This is why we don't see flicker on a computer screen, which flickers at about 60 cycles per second.[13]

When we look at the world, it appears to be a seamless whole. This makes it difficult to understand, and sometimes even difficult to believe, that our visual perceptual experience is created through a series of smaller perceptions of simpler things. But that's how it happens.

Vision is turning light into something useful. In our retinas we have photosensitive neurons. They increase their firing rate when photons (particles of light) hit them. At a first approximation, you can think of the first layer of neurons as a bunch of pixels, encoding some color at some location. But this is just the first layer. After that, they start to detect higher level patterns.

One of the first discoveries was that certain neurons detect lines at particular angles. That is, there would be a neuron that would increase its firing rate in the presence of a line at, say, 90 degrees, but not at other angles. As a shorthand, we'll say that the neuron "detects" lines at 90 degrees. David Hubel and Torsten Wiesel discovered this in 1958, studying the cat's visual system. They were exposing the cats to images, and recording the activity of single cells in the visual pathway from the eye to the brain. The images weren't doing much, but when they moved the slides sometimes the neuron would go crazy. It finally dawned on them that the neuron was responding to the edge of the slide, not the picture in it! This is one of many stories in science of accidental discovery.[14]

So what happens? Does the neuron increase firing only in the presence of lines at exactly 90 degrees? No. If the line is slightly off 90 degrees, the neuron will increase its firing rate to a lesser extent. The closer the line is to 90 degrees, the higher the firing rate. There are neurons for detecting lines of all orientations: some for 180 degrees, some for 30 degrees, etc. Each one has a particular "receptive field." With the information from all of these neurons firing, a cognitive system can tell the orientation of a line with a good degree of accuracy. Hubel and Wiesel found that the further "upstream" you go, away from the retina and toward the rest of the brain, the more complex the detections become. That is, lines are fairly simple, but those lines are used by higher neurons to detect even more complex patterns.
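To make the idea of graded orientation tuning concrete, here is a minimal Python sketch. It is not a model taken from Hubel and Wiesel: the bell-shaped tuning curve, the six preferred orientations, the maximum rate, the tuning width, and the "population vector" read-out are all assumptions chosen for illustration.

```python
import math

# Hypothetical preferred orientations (degrees) of six model neurons.
PREFERRED = [0, 30, 60, 90, 120, 150]


def angle_diff(a, b):
    """Smallest difference between two orientations (they repeat every 180 degrees)."""
    d = abs(a - b) % 180
    return min(d, 180 - d)


def firing_rate(preferred, stimulus, max_rate=50.0, width=20.0):
    """Bell-shaped tuning: the rate peaks at the preferred orientation and
    falls off smoothly as the line rotates away from it."""
    d = angle_diff(preferred, stimulus)
    return max_rate * math.exp(-(d ** 2) / (2 * width ** 2))


def decode(rates):
    """Estimate the line's orientation from all the firing rates together.
    Angles are doubled because orientation wraps around at 180 degrees."""
    x = sum(r * math.cos(math.radians(2 * p)) for r, p in zip(rates, PREFERRED))
    y = sum(r * math.sin(math.radians(2 * p)) for r, p in zip(rates, PREFERRED))
    return (math.degrees(math.atan2(y, x)) / 2) % 180


stimulus = 80  # a line 10 degrees away from vertical
rates = [firing_rate(p, stimulus) for p in PREFERRED]
for p, r in zip(PREFERRED, rates):
    print(f"neuron preferring {p:3d} degrees fires at {r:5.1f}")
print(f"decoded orientation: {decode(rates):.1f} degrees")
```

No single neuron in this sketch signals "80 degrees," yet the pattern of activity across the group is enough to recover the orientation closely.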

Eye Movement

Although vision sometimes can feel relatively passive, at least compared to touch, people move their heads and eyes to help understand their world. Your eyes are constantly moving. They dart around with saccades about three times every second, but we're largely unaware of this. While your eyes move like this, your brain suppresses the signal, which is why you don't see blurring. We don't even see the temporary blackout. But if you watch someone's eyes carefully, you can see these quick little movements.[15]

We also move our bodies and heads around to aid with depth perception, the subject of a section below.

Depth Perception

Knowing how far away things are is essential to getting a good, three-dimensional perception of our world. The visual system uses several cues to see how far away things are. You can use the mnemonic "SPOT-FM" to help remember them.

S: Size. We know how big things are, so we relate that to how big they look to get an idea of how far away things are. We know how big cars are, so when we see three of different sizes (in the image or on the retina), we get an idea of how far away they are.

P: Perspective. Things that are farther away tend to look smaller. That is, they take up less space on the retina when you look at them. In the image, we can see that cars that are farther away are smaller on the image.

O: Occlusion. When something is in front of another thing, it blocks it. In the picture, some cars are occluding others, giving us clues as to which ones are farther away.

T: Texture, shading, saturation. Because of the air that light passes through, distant objects are hazier, with less saturated colour and more blue.[16] We also use texture gradients. In the car picture, the shadows of the trees on the street are relatively uniform, but the shapes get smaller as the road goes into the distance. Also, we assume that brighter-lit things are facing upward, and their downward sides are in shadow. This helps us see surfaces as concave or convex (bumping away from us or toward us).

F: Focus. Muscles in your eye bend the lens to focus the image. Our minds know when we're focusing on something farther away based on what the muscles have to do to get it in focus. In the picture, the most distant, parked car is slightly out of focus. When one object requires a different focus than another, they are at different distances.

M: Multiple viewpoints. This is for stereo vision. We use binocular vision to help us understand distance. Because the eyes are slightly apart, each eye gets a different viewpoint. The mind uses these different views to infer depth. This is how 3D movies work. But for things very far away, stereo vision doesn't work as well, because the two eyes get almost the same image. The few inches between your eyes are negligible when looking at things farther away than, say, a football field's length (about 100 yards).[17] We can also get multiple viewpoints by moving the head. In fact, people with vision in only one eye subconsciously move their heads back and forth to simulate binocular vision!
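The claim that stereo vision fades with distance can be checked with a little geometry. The sketch below is an illustration rather than a model of the visual system: it assumes the eyes are 6.5 cm apart (a typical adult figure, not a value from the text) and computes the angle between the two eyes' lines of sight to a point straight ahead. The function name `vergence_angle_deg` is hypothetical.

```python
import math

EYE_SEPARATION_M = 0.065  # assumed distance between the eyes, about 6.5 cm


def vergence_angle_deg(distance_m, separation_m=EYE_SEPARATION_M):
    """Angle (in degrees) between the two eyes' lines of sight when both
    look at a point straight ahead at the given distance."""
    return math.degrees(2 * math.atan(separation_m / (2 * distance_m)))


# 91 m is roughly the 100 yards of a football field.
for distance in (0.5, 2, 10, 91):
    angle = vergence_angle_deg(distance)
    print(f"{distance:>5} m: lines of sight differ by {angle:.3f} degrees")
```

At arm's length the two viewpoints differ by several degrees, while at a football field's length the difference is a small fraction of a degree, which is why the two eyes then receive almost the same image.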

Motion Perception

The visual perception of motion seems to happen in the middle temporal area of the brain, often called MT. People who get damage to this part of the brain lose their ability to see motion. They can't tell what's moving, how fast, or in what direction. This is a rare disorder called akinetopsia, and for these patients, the world is experienced as a set of static images. Pouring coffee and crossing the street are difficult, and these patients often end up relying heavily on sound, much like a blind person might.[18]

Vocabulary

akinetopsia

receptive field

extramission theory

intromission theory

Visual Information Input

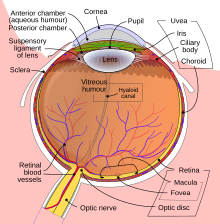

This section gives an overview of how light is interpreted once it strikes the retina. The structure of the eye, including its retina and optic nerves, prepares the incoming information for the perceptual system. As light enters the eye, the amount of light received is controlled by the pupil. The two major surfaces that control where the light will travel are the cornea and the intraocular lens. The cornea is the clear outer layer of the eye, and its shape affects how the light rays converge. The lens flexes to ensure that the light is focused properly at the back of the eye, a process known as accommodation.[19] After passing through the vitreous, the light finally arrives at the back of the eye and strikes the retina. The retina is part of the central nervous system. The retinal image the brain receives is inverted, and the brain reinterprets the image.

Within the optic chiasm, nerve fibres from half of each retina cross to the opposite side of the brain. This ensures that each half of the brain receives signals from both eyes. If each retina is divided into a lateral half and a medial half, the right side of the brain receives the medial half of the left eye and the lateral half of the right eye. Because of the inversion, the medial half of the retina corresponds to the temporal side of the image. This arrangement allows for binocular and stereoscopic vision (that is, depth perception), because there are two vantage points. The brain can use the differences between the two images as a cue to visual depth.[20]
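The crossing at the optic chiasm is easy to lose track of, so here is a small Python sketch of the routing rule described above. The function name `hemisphere_for` and the string labels are hypothetical, and the sketch ignores everything except which side of the brain each half of each retina projects to.

```python
def hemisphere_for(eye, retina_half):
    """Return the brain hemisphere ("left" or "right") that receives fibres
    from one half of one retina.

    eye: "left" or "right"
    retina_half: "lateral" (toward the temple) or "medial" (toward the nose)
    Rule: lateral fibres stay on their own side; medial fibres cross over
    at the optic chiasm.
    """
    if eye not in ("left", "right"):
        raise ValueError(f"unknown eye: {eye}")
    if retina_half == "lateral":
        return eye
    if retina_half == "medial":
        return "right" if eye == "left" else "left"
    raise ValueError(f"unknown retina half: {retina_half}")


for eye in ("left", "right"):
    for half in ("lateral", "medial"):
        print(f"{eye} eye, {half} half -> {hemisphere_for(eye, half)} hemisphere")
```

Running the loop shows each hemisphere receiving one half of the retina from each eye, which is what gives it two vantage points on the same part of the scene.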

Eye cells

The two major types of light-detecting retinal cells are known as rods and cones. There are approximately 150 million rods and 7 million cones. The cones are concentrated in the macula, at the centre of the retina, where they provide central vision. The fovea, located near the centre of the macula, has the highest concentration of cone cells. The rods are responsible for peripheral vision and for vision in low light. The difference in their ability to detect fluctuations of light is due to different photopigments.[21] In bright light, only the cones can detect light fluctuation. In dim light, the rods react. In intermediate light, or mesopic light, rods remain responsive to light changes and cones also function. The functioning of cones can be observed in the emergence of colour perception. Although the retina has ten different layers, the process of detecting light can be more easily broken down into four basic stages: photoreception, transmission to bipolar cells, transmission to ganglion cells and transmission to the optic nerve.

In their resting state, cells are depolarized. The intraretinal axons carry voltage-gated sodium channels.[22] The chemical responsible for keeping the Na+ channels open is known as cyclic guanosine monophosphate (cGMP). Photons entering the retinal area cause the chromophore of the protein rhodopsin to shift from the cis form to the trans form. Multiple G-proteins are activated. One significant G-protein, called transducin, binds to rhodopsin and begins the process known as the transduction cascade. A phosphodiesterase is activated, which degrades the cGMP; the Na+ channels normally kept open by cGMP close rapidly, causing a hyperpolarization of the cell. The highest depolarization occurs in dark conditions (-40 mV). Hyperpolarization occurs progressively as light is introduced to the rods and cones (-60 to -75 mV). The potential of these cells regulates the Ca2+ channels. Between -50 and -55 mV, the channels begin to open, thus “firing” the cell. These photoreceptor cells send signals to bipolar cells, horizontal cells and amacrine cells. Horizontal cells and amacrine cells exist through all retinal layers to varying degrees. Retinal bipolar cells are a type of neuron that transmits signals both directly and indirectly. Their graded potential is unique to the eye and differentiates them from other bipolar cells, which send impulses (the difference between a gradient and an “on/off” switch).

Horizontal cells provide inhibitory feedback to photoreceptors. They help the eyes adjust in order to see in both dim and bright light. There is a much greater density of horizontal cells towards the centre of the retina. Overfiring of horizontal cells has been linked to optical migraines.[23]

Amacrine cells are the least common type of cell in the deep retinal layers. They are responsible for the distribution of dopamine and serve to help regulate the ganglion cells and horizontal cells. Specifically, in horizontal cells, dopamine has been shown to reduce cell-to-cell couplings in the outer retina. Dopamine serves a role in regulating both retinal pigment epithelium and cAMP (cyclic adenosine monophosphate). Retinal pigment epithelium is responsible for absorbing light once it crosses the retina so that it does not continue to bounce within the eye[24].

The bipolar and ganglion cells form a system often referred to as the “centre-surround system.” In this model, the centre of a cell's receptive field and its surround can be weighted positively or negatively.[25] “On” centre cells have a positively weighted centre and a negatively weighted surround. “Off” centre cells have the reverse. Light can be present or absent on the centre and on the surround, which gives four possibilities for each type of cell, for a total of eight different responses.

For example, in an “on” centre cell, if there is light on the centre and no light on the surround, the ganglion cell fires rapidly. With no light on the centre and light on the surround, the cell does not fire. The “off” centre cell follows the same pattern, except that the regions where light must fall are reversed. This covers four of the eight combinations. The other four are simpler. When light strikes everywhere, both on and off centre cells fire slowly (at a reduced frequency). When there is no light anywhere on the cell, the cells do not fire at all.

An important point is that cells are not all given equal weighting for when they do or do not fire. The eye as a whole is geared toward contrast: the organization of the ganglion cells and the input they receive from the rods and cones lend themselves to a system that detects contrasts rather than absolute changes.[26] The brain “makes up” a large portion of the mental image we “see” in our head. Because the image is recreated within milliseconds, we do not realize that the mental image is reconstructed each time.

This system matters because there are roughly 100 times more photoreceptor cells than ganglion cells. In order to compress and summarize the huge amounts of information coming into the eye, the centre-surround system weighs information. This weighing is all done in parallel, as part of the first stage of processing incoming information.
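The eight centre-surround responses described above can be tabulated with a very small Python sketch. It follows the simplified description in this section, not a physiological model: the weights (+1.0 and -0.8), the 0-to-1 response scale, and the function name `ganglion_response` are assumptions made for illustration.

```python
def ganglion_response(cell_type, centre_lit, surround_lit):
    """Relative firing rate of a centre-surround cell (0 = silent, 1 = rapid).

    cell_type: "on" (excited by light on the centre) or "off" (the reverse)
    centre_lit, surround_lit: 1 if light falls on that region, else 0
    The weights are hypothetical; excitation slightly outweighs inhibition,
    so uniform light still produces a weak response.
    """
    excite, inhibit = 1.0, -0.8
    if cell_type == "on":
        w_centre, w_surround = excite, inhibit
    elif cell_type == "off":
        w_centre, w_surround = inhibit, excite
    else:
        raise ValueError(f"unknown cell type: {cell_type}")
    drive = w_centre * centre_lit + w_surround * surround_lit
    return max(0.0, drive)  # a firing rate cannot go below zero


for cell_type in ("on", "off"):
    for centre in (0, 1):
        for surround in (0, 1):
            rate = ganglion_response(cell_type, centre, surround)
            print(f"{cell_type}-centre, centre={centre}, surround={surround}: {rate:.1f}")
```

In this sketch the strongest responses occur when the centre and the surround differ, and uniform illumination gives only a weak response, which matches the emphasis on contrast over absolute light level.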

Blind spot

Each eye contains a blind spot at the point where the optic nerve leaves the retina, because that point has no photoreceptors. This blind spot is normally undetectable because the brain combines the images from both eyes. The blind spot can be found using techniques such as moving one’s fingers within the field of vision in a way that reveals there to be two images.[27]

Eye disease

The optic nerve is composed primarily of the axons of ganglion cells and is responsible for carrying all the information to the optic chiasm. The sensitivity of the optic nerve underlies the eye disease glaucoma, in which the optic nerve is compressed, damaging the ganglion cells and their axons and impairing vision.[28] Other diseases involve damage to the macula, which is known as macular degeneration.

References

1. Mayr, E. (2001). What Evolution Is. New York: Basic Books. Page 113.

2. Chamovitz, D. (2012). What a plant knows: A field guide to the senses. Scientific American: New York. Page 3.

3. Chamovitz, D. (2012). What a plant knows: A field guide to the senses. Scientific American: New York. Page 24.

4. Preston, E. (2014). How animals see the world. Nautilus, Summer, p. 14.

5. Mitchell, K. J. (2018). Innate: How the wiring of our brains shapes who we are. Princeton, NJ: Princeton University Press. Page 136.

6. Chamovitz, D. (2012). What a plant knows: A field guide to the senses. Scientific American: New York. Pages 12-20.

7. Mitchell, K. J. (2018). Innate: How the wiring of our brains shapes who we are. Princeton, NJ: Princeton University Press. Page 137.

8. Chamovitz, D. (2012). What a plant knows: A field guide to the senses. Scientific American: New York. Page 20.

9. Chamovitz, D. (2012). What a plant knows: A field guide to the senses. Scientific American: New York. Pages 22-23.

10. Godfrey-Smith, P. (2016). Other minds: The octopus, the sea, and the deep origins of consciousness. Farrar, Straus and Giroux. Page 119.

11. Groh, J. M. (2014). Making space: how the brain knows where things are. Harvard University Press. Page 9.

12. Chamovitz, D. (2012). What a plant knows: A field guide to the senses. Scientific American: New York. Page 11.

13. Groh, J. M. (2014). Making space: how the brain knows where things are. Harvard University Press. Pages 20-21.

14. Martinez-Conde, S. & Macknik, S. L. (2014). David Hubel's vision. Scientific American Mind, March/April, 6-8.

15. Groh, J. M. (2014). Making space: how the brain knows where things are. Cambridge, MA: Harvard University Press. Pages 162-163.

16. Groh, J. M. (2014). Making space: how the brain knows where things are. Harvard University Press. Page 44.

17. Groh, J. M. (2014). Making space: how the brain knows where things are. Harvard University Press. Page 43.

18. Groh, J. M. (2014). Making space: how the brain knows where things are. Harvard University Press. Pages 100-101.

19. Buetti, S., Cronin, D. A., Madison, A. M., Wang, Z., & Lleras, A. (2016). Towards a better understanding of parallel visual processing in human vision: Evidence for exhaustive analysis of visual information. Journal of Experimental Psychology: General, 145(6), 672–707. https://doi.org/10.1037/xge0000163

20. Sonkusare, S., Breakspear, M., & Guo, C. (2019). Naturalistic Stimuli in Neuroscience: Critically Acclaimed. Trends in Cognitive Sciences, 23(8), 699–714. https://doi.org/10.1016/j.tics.2019.05.004

21. Moser, T., Grabner, C. P., & Schmitz, F. (2020). Sensory processing at ribbon synapses in the retina and the cochlea. Physiological Reviews, 100(1), 103-144. doi:10.1152/physrev.00026.2018

22. Risner, M. L., McGrady, N. R., Pasini, S., Lambert, W. S., & Calkins, D. J. (2020). Elevated ocular pressure reduces voltage-gated sodium channel NaV1.2 protein expression in retinal ganglion cell axons. Experimental Eye Research, 190. doi:10.1016/j.exer.2019.107873

23. Yener, A. Ü., & Korucu, O. (2019). Quantitative analysis of the retinal nerve fiber layer, ganglion cell layer and optic disc parameters by the swept source optical coherence tomography in patients with migraine and patients with tension-type headache. Acta Neurologica Belgica, 119(4), 541-548. doi:10.1007/s13760-018-1041-6

24. Martucci, A., Cesareo, M., Pinazo-Durán, M., Di Pierro, M., Di Marino, M., Nucci, C., Mancino, R. (2020). Is there a relationship between dopamine and rhegmatogenous retinal detachment? Neural Regeneration Research, 15(2), 311-314. doi:10.4103/1673-5374.265559

25. Lisani, J.-L., Morel, J.-M., Petro, A.-B., & Sbert, C. (2020). Analyzing center/surround retinex. Information Sciences, 512, 741-759. doi:10.1016/j.ins.2019.10.009

26. Lisani, J.-L., Morel, J.-M., Petro, A.-B., & Sbert, C. (2020). Analyzing center/surround retinex. Information Sciences, 512, 741-759. doi:10.1016/j.ins.2019.10.009

27. Lee Masson, H., Bulthé, J., Op De Beeck, H. P., & Wallraven, C. (2016). Visual and Haptic Shape Processing in the Human Brain: Unisensory Processing, Multisensory Convergence, and Top-Down Influences. Cerebral Cortex, 26(8), 3402-3412. https://doi.org/10.1093/cercor/bhv170

28. Risner, M. L., McGrady, N. R., Pasini, S., Lambert, W. S., & Calkins, D. J. (2020). Elevated ocular pressure reduces voltage-gated sodium channel NaV1.2 protein expression in retinal ganglion cell axons. Experimental Eye Research, 190. doi:10.1016/j.exer.2019.107873