Applied Robotics/Printable version


The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/Applied_Robotics

Mechanisms and Actuation/DC Stepper Motor

Stepper Motor Basics

A stepper motor is a DC motor that consists of a polyphase coil stator and a permanent magnet rotor. These motors are designed to cog into discrete commanded positions and hold that position while current flows through the windings. Every stepper motor has a fixed number of steps per rotation, and the motor moves from one step to the next when the direction of the magnetic field applied by the stator changes. Continuous rotation is achieved by stepping the motor repeatedly with a short delay between steps; the shorter the delay, the higher the RPM.
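The relationship between step delay and speed can be made concrete with a short calculation. This is a minimal Python sketch assuming full-step operation; the 200 steps/revolution default is an illustrative value, not a property of any particular motor.

```python
# Sketch: convert between the delay between full steps and shaft speed.
# steps_per_rev = 200 (1.8 degrees per step) is only an example default;
# check your motor's datasheet for its actual step count.

def rpm_from_delay(step_delay_s, steps_per_rev=200):
    """Shaft speed in RPM when one full step is taken every step_delay_s seconds."""
    steps_per_second = 1.0 / step_delay_s
    return steps_per_second * 60.0 / steps_per_rev


def delay_from_rpm(rpm, steps_per_rev=200):
    """Delay between steps (in seconds) needed to reach the requested RPM."""
    return 60.0 / (rpm * steps_per_rev)


if __name__ == "__main__":
    print(rpm_from_delay(0.005))  # 5 ms between steps
    print(delay_from_rpm(120))
```

Halving the delay doubles the speed, which is why very short delays quickly push a stepper into the low-torque, missed-step region described next.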

Stepper motors work well in simple open-loop position control applications that need high torque at low RPM. Stepper torque drops sharply as speed increases, and if the interval between steps is too short, the rotor's inertia can prevent it from keeping up, causing missed steps.

Controlling a stepper motor requires a state machine that commutates the motor (changes the direction of its magnetic field) and waits a set time between steps to control motor velocity. This is typically done using a timer on a microcontroller and 4 I/O lines that control the coil currents, and therefore the magnetic field direction.

Unipolar stepper motors use center-tapped coils and switching elements that only conduct current in one direction; the field direction is reversed by energizing the opposite half of a coil rather than by reversing the current. Because only half of each winding is used at a time, these motors need twice the winding length of a comparable bipolar stepper and are therefore less powerful for their weight. Unipolar motors typically have two center-tapped phases.

Bipolar stepper motors use a single coil per phase and require the control electronics to reverse the current through each coil, typically with one H-bridge per phase. Unipolar motors can also be driven as bipolar motors by leaving the center tap of each phase disconnected. Bipolar motors typically have two phases.

Unipolar Stepper Driver

An efficient unipolar stepper driver can be built from 4 N-channel MOSFETs controlled by microcontroller I/O pins. Each coil center tap is connected to the motor's rated positive supply voltage, each end of the phase coils is connected to the drain of a different MOSFET, and the MOSFET sources are connected to ground. Each step is made by turning on one MOSFET at a time. Note: if the MOSFETs are driven directly from microcontroller I/O pins, it is critically important that they are logic-level FETs that turn fully on with a 5 V gate drive.

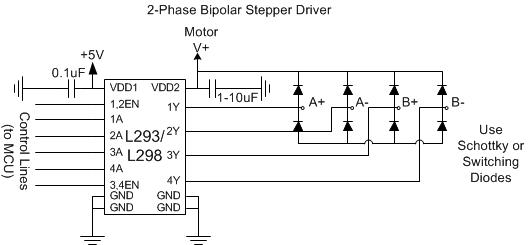

Bipolar Stepper Driver

A bipolar stepper driver can be built using a 4-channel high-current driver IC such as the L293, SN754410, or L298. Each output should have a pair of fast-acting catch diodes to protect the driver IC from the flyback voltages produced by the motor inductance during switching. Each phase of the stepper motor is connected to two of the output drivers, forming two H-bridges that can drive current in either direction through the two phases. In the standard control scheme only one phase is active at a time, so the driver enable lines must be switched on and off in addition to the direction inputs.
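To see how "enable plus direction" turns into the bytes written to an output port, here is a minimal Python sketch. The bit assignments are an assumption chosen to match the STEP0-STEP3 constants in this chapter's AVR example (phase A inputs on bits 7 and 6 with enable on bit 3; phase B inputs on bits 5 and 4 with enable on bit 2); `phase_bits` and `port_byte` are hypothetical helper names.

```python
# Sketch: compute the output byte for two H-bridges (one per phase) driven by
# an L293/SN754410-style IC. The bit assignments below are an assumption chosen
# to match the STEP0-STEP3 constants in this chapter's AVR example:
#   phase A: inputs on bits 7 (+) and 6 (-), enable on bit 3
#   phase B: inputs on bits 5 (+) and 4 (-), enable on bit 2

PHASE_PINS = {
    "A": {"pos": 7, "neg": 6, "en": 3},
    "B": {"pos": 5, "neg": 4, "en": 2},
}


def phase_bits(phase, current):
    """Bits for one phase; current is +1 or -1 (direction) or 0 (phase disabled)."""
    pins = PHASE_PINS[phase]
    if current == 0:
        return 0  # enable low: this H-bridge is off and the coil is unpowered
    # Drive one input high and leave the other low to set the current direction
    drive = pins["pos"] if current > 0 else pins["neg"]
    return (1 << pins["en"]) | (1 << drive)


def port_byte(a_current, b_current):
    """Combine phase A and phase B settings into a single output byte."""
    return phase_bits("A", a_current) | phase_bits("B", b_current)


if __name__ == "__main__":
    for a, b in [(1, 0), (0, 1), (-1, 0), (0, -1)]:
        print(a, b, format(port_byte(a, b), "08b"))
```

Keeping the pin map in one table makes it easy to adapt the sketch if your driver is wired to different port bits.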

Stepper Control Code

Stepper control can be done on an AVR microcontroller using either a delay statement (good for simple testing, but poor for accurate timing and program efficiency) or a timer/counter and an interrupt (slightly more complicated, but much more efficient). The following code shows how to use a timer/counter on an ATmega device together with a simple state machine and I/O lines. Reversing direction simply means stepping through the sequence in the opposite order. This code can be used to control an L29x-type motor driver for a bipolar stepper or a pull-down driver for a unipolar stepper.

The basic step sequence is as follows for both types of two-phase stepper motor.

| Step | Phase A current | Phase B current |
|---|---|---|
| Step 1 | + | 0 |
| Step 2 | 0 | + |
| Step 3 | - | 0 |
| Step 4 | 0 | - |
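The table above is a four-state machine: each tick moves one row forward or backward and wraps around at the ends. A minimal Python sketch follows (names such as `next_state` and `run` are illustrative). Python's `%` always returns a non-negative result for a positive modulus; in C, by contrast, `%` applied to a negative left operand yields a negative result, so a C implementation stepping in reverse needs extra care.

```python
# Sketch of the full-step sequence in the table above as a small state machine.
# Each state is (phase A current, phase B current): +1, -1, or 0 (off).

STEP_SEQUENCE = [(+1, 0), (0, +1), (-1, 0), (0, -1)]


def next_state(state, direction):
    """Advance by direction (+1 forward, -1 reverse), wrapping within 0-3."""
    # Python's % returns a non-negative result for a positive modulus,
    # so stepping backwards from state 0 wraps to state 3.
    return (state + direction) % len(STEP_SEQUENCE)


def run(steps, direction, state=0):
    """Return the phase currents applied for each of the next `steps` steps."""
    applied = []
    for _ in range(steps):
        state = next_state(state, direction)
        applied.append(STEP_SEQUENCE[state])
    return applied


if __name__ == "__main__":
    print(run(4, +1))  # forward: steps 2, 3, 4, 1
    print(run(4, -1))  # reverse: steps 4, 3, 2, 1
```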

/**************************************************
 *
 * AVR Microcontroller Stepper Control Example
 * Cody Hyman <hymanc@onid.orst.edu>
 *
 **************************************************/
#include <avr/io.h>
#include <avr/interrupt.h>

#define STEP0 0b10001000 // Phase A enabled, positive current
#define STEP1 0b00100100 // Phase B enabled, positive current
#define STEP2 0b01001000 // Phase A enabled, negative current
#define STEP3 0b00010100 // Phase B enabled, negative current
#define STEPPER_MASK 0xF0 // Upper 4 bits for DIR
#define STEPPER_EN_MASK 0x0C // Bits 2 and 3 for EN
#define STEPPER_ALL_MASK (STEPPER_MASK | STEPPER_EN_MASK)
#define STEPPER_PORT PORTC // Stepper I/O port
#define STEPPER_DDR DDRC // Stepper data direction register
#define DIRECTION_FWD 1
#define DIRECTION_REV -1

volatile int8_t stepper_state = 0; // Current step state (0-3)
volatile int8_t step_direction = DIRECTION_FWD; // Step direction (+1 or -1)

/* Initialize the stepper I/O and timer */
void initialize_stepper(void)
{
    STEPPER_DDR |= STEPPER_ALL_MASK; // Configure stepper pins as outputs
    STEPPER_PORT = (STEPPER_PORT & ~STEPPER_ALL_MASK) | STEP0; // Step to initial position
    stepper_state = 0;
    step_direction = DIRECTION_FWD; // Set initial direction to forward
    // Timer 3: CTC mode with ICR3 as TOP (WGM33:0 = 12), clock = CLK/256
    TCCR3B = (1<<WGM33)|(1<<WGM32)|(1<<CS32);
    ICR3 = 0xFFFF; // Default to maximum delay
    // In this CTC mode, reaching TOP sets the input capture flag (ICF3),
    // not the overflow flag, so the input capture interrupt provides the step tick
    ETIMSK |= (1<<TICIE3);
    sei(); // Enable global interrupts
}

/* Adjusts the step delay (in timer ticks) */
void set_step_delay(uint16_t delay)
{
    ICR3 = delay; // Set the new TOP value
}

/* Step handler: advance one step in the given direction (+1 or -1) */
void step(int8_t direction)
{
    // Masking with 0x03 wraps 3+1 to 0 and 0-1 to 3 (C's % would give -1 here)
    stepper_state = (stepper_state + direction) & 0x03;
    STEPPER_PORT &= ~(STEPPER_ALL_MASK); // Clear all stepper outputs
    // Apply the output pattern for the new state
    switch(stepper_state)
    {
        case 0: STEPPER_PORT |= STEP0; break;
        case 1: STEPPER_PORT |= STEP1; break;
        case 2: STEPPER_PORT |= STEP2; break;
        case 3: STEPPER_PORT |= STEP3; break;
    }
}

/* Timer 3 TOP (input capture flag) interrupt service routine */
ISR(TIMER3_CAPT_vect)
{
    step(step_direction); // Run step handler
}

/* Main */
int main(void)
{
    initialize_stepper();
    while(1)
    {
        // Nothing to do here, the interrupt handles motion control
    }
    return 0;
}
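The example starts at the maximum step period. To choose a TOP value for a desired step rate, note that in CTC mode the timer period is (TOP + 1) * prescaler / f_CPU seconds. This Python sketch assumes a 16 MHz clock (the stepper code does not state its clock frequency) and the CLK/256 prescaler selected by CS32 above; `top_for_interval` and `interval_for_top` are hypothetical helper names.

```python
# Sketch: choose the Timer 3 TOP value (ICR3) for a desired step interval.
# Assumptions: 16 MHz CPU clock (not stated in the example code) and the
# CLK/256 prescaler. In CTC mode the timer period is (TOP + 1) * prescaler / f_cpu.

F_CPU = 16000000
PRESCALER = 256


def top_for_interval(step_interval_s, f_cpu=F_CPU, prescaler=PRESCALER):
    """Return the 16-bit TOP value closest to the requested step interval."""
    ticks = round(step_interval_s * f_cpu / prescaler)
    if not 1 <= ticks <= 0x10000:
        raise ValueError("interval out of range for a 16-bit timer")
    return ticks - 1


def interval_for_top(top, f_cpu=F_CPU, prescaler=PRESCALER):
    """Return the actual step interval in seconds for a given TOP value."""
    return (top + 1) * prescaler / f_cpu


if __name__ == "__main__":
    top = top_for_interval(0.01)      # 10 ms per step
    print(top, interval_for_top(top))
    print(interval_for_top(0xFFFF))   # slowest possible rate at CLK/256
```

The value returned by `top_for_interval` is what you would pass to `set_step_delay()`; intervals longer than about one second at CLK/256 would need a larger prescaler.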

Off the Shelf Stepper Solutions

As an alternative to building your own stepper driver/controller, a number of low-cost off-the-shelf products exist for controlling small steppers. Texas Instruments, Allegro, STMicroelectronics, and ON Semiconductor all make dedicated microstepping driver ICs that use a simplified logic interface and provide finer-grained stepper control than the basic scheme outlined above. Hobbyist vendors such as SparkFun and Pololu carry breakout boards for these driver ICs.

Sensors and Perception/Open CV

What is OpenCV

OpenCV is a powerful, free, open-source computer vision library maintained by Willow Garage that is widely used in image processing and recognition applications. It is a framework containing routines for image storage and retrieval, video capture, image processing, computer vision algorithms, GUI and windowing, and many other advanced features. From basic image thresholding to 3D stereo vision, OpenCV usually has a solution for most computer vision needs. OpenCV currently supports many platforms (Linux, BSD, Windows, and Mac OS X) and provides bindings for the C, C++, and Python languages. OpenCV is notably faster than MATLAB at image processing in most cases, but it requires you to build your own application around the library. OpenCV is also available as a ROS package for the Robot Operating System. More information is available at the OpenCV website.

How to Install/Build OpenCV for C/C++

Windows: On Windows, OpenCV can be installed for either the Microsoft Visual C++ compiler or MinGW. The VC++ package is the easiest to install and is available as a precompiled binary: http://sourceforge.net/projects/opencvlibrary/files/opencv-win/2.4.4/OpenCV-2.4.4.exe/download. This install requires Microsoft Visual Studio, which is available as a free download from Microsoft (Express Edition): http://www.microsoft.com/visualstudio/eng/products/visual-studio-express-for-windows-desktop

The full version of MS Visual Studio is available through student DreamSpark accounts, accessible from the College of Engineering T.E.A.C.H. webpage.

Linux, Android, Mac OS X, and non-VC++ Windows compilers: Most other supported platforms require building OpenCV from source. Full instructions and ways to obtain the source code can be found in the official OpenCV install guide.

Sensors and Perception/Open CV/Basic OpenCV Tutorial

This page explains the basics of using OpenCV for computer vision in Python.

Installation

On Ubuntu (and other Debian-based distributions), you can install all the necessary packages for OpenCV, Python, and the OpenCV-Python bindings by running:

sudo apt-get install python-opencv

This installs a somewhat outdated version of OpenCV (2.3.1), but it should be sufficient for what you need in this class. If you don't use a Debian-based distribution, consult your package manager or search online.

Simple Image Capture

This is a very simple OpenCV program. It opens your video camera, reads an image, displays it, and repeats until you press a key. It's a good test to verify that your OpenCV setup is functional:

#!/usr/bin/python
import cv2

# Open video device
capture1 = cv2.VideoCapture(0)

while True:
    ret, img = capture1.read() # Read an image
    if not ret: # Stop if no frame could be captured
        break
    cv2.imshow("ImageWindow", img) # Display the image
    if (cv2.waitKey(2) >= 0): # If the user presses a key, exit while loop
        break

cv2.destroyAllWindows() # Close window
capture1.release() # Release the video device opened above

Template OpenCV Program

This program implements the base functionality of any OpenCV application:

#!/usr/bin/python
#
# ENGR421 -- Applied Robotics, Spring 2013
# OpenCV Python Demo
# Taj Morton <mortont@onid.orst.edu>
#
import sys
import cv2
import time
import numpy
import os

##
# Opens a video capture device and requests a resolution of 800x600
# at 30 FPS (the camera may not support every setting).
##
def open_camera(cam_id = 0):
    cap = cv2.VideoCapture(cam_id)
    cap.set(cv2.cv.CV_CAP_PROP_FRAME_HEIGHT, 600)
    cap.set(cv2.cv.CV_CAP_PROP_FRAME_WIDTH, 800)
    cap.set(cv2.cv.CV_CAP_PROP_FPS, 30)
    return cap

##
# Gets a frame from an open video device, or returns None
# if the capture could not be made.
##
def get_frame(device):
    ret, img = device.read()
    if not ret: # failed to capture
        sys.stderr.write("Error capturing from video device.\n")
        return None
    return img

##
# Closes all OpenCV windows and releases the video capture device
# (the object returned by open_camera()) before exit.
##
def cleanup(device):
    cv2.destroyAllWindows()
    device.release()

##
# Creates a new RGB image of the specified size, initially
# filled with black.
##
def new_rgb_image(width, height):
    image = numpy.zeros( (height, width, 3), numpy.uint8)
    return image

########### Main Program ###########
if __name__ == "__main__":
    # Camera ID to read video from (numbered from 0)
    camera_id = 0
    dev = open_camera(camera_id) # open the camera as a video capture device
    while True:
        img_orig = get_frame(dev) # Get a frame from the camera
        if img_orig is not None: # if we did get an image
            cv2.imshow("video", img_orig) # display the image in a window named "video"
        else: # if we failed to capture (camera disconnected?), then quit
            break
        if (cv2.waitKey(2) >= 0): # If the user presses any key, exit the loop
            break
    cleanup(dev) # close video device and windows before we exit

Image Thresholding

A common way to simplify a computer vision problem is to convert the image into a black-and-white ("binary") image. The threshold used to decide which pixels become black and which become white determines which features are ignored and which ones are important to your algorithm. Many thresholding algorithms expect a grayscale input image. The following functions take an image, convert it to grayscale, and then convert it to a binary image at the specified threshold. Be sure to include the functions from the template program (above) as well:

##
# Converts a color camera frame to grayscale, where each pixel
# now represents the intensity of the original image.
# OpenCV stores camera frames in BGR channel order, so BGR2GRAY is used.
##
def rgb_to_gray(img):
    return cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

##
# Converts an image into a binary image at the specified threshold.
# All pixels with a value <= threshold become 0, while
# pixels > threshold become 255.
##
def do_threshold(image, threshold = 170):
    (thresh, im_bw) = cv2.threshold(image, threshold, 255, cv2.THRESH_BINARY)
    return (thresh, im_bw)

#####################################################
# If you have captured a frame from your camera like in the template program above,
# you can create a binary image from it as follows:
img_gray = rgb_to_gray(img_orig) # Convert img_orig from the video camera to grayscale

# Convert the grayscale image to a binary image with a threshold value of 220. Any pixel with an
# intensity <= 220 will be black (0), while any pixel with an intensity > 220 will be white (255):
(thresh, img_threshold) = do_threshold(img_gray, 220)

cv2.imshow("Grayscale", img_gray)
cv2.imshow("Threshold", img_threshold)
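What `cv2.threshold(..., cv2.THRESH_BINARY)` does to each pixel can be shown in a few lines of plain Python on a list-of-lists "image". This is an illustration of the rule only (`binary_threshold` is a hypothetical name); real code should keep calling OpenCV, which is far faster.

```python
# Sketch of the THRESH_BINARY rule on a list-of-lists grayscale "image".
# For illustration only; use cv2.threshold() on real frames.

def binary_threshold(gray, thresh, maxval=255):
    """Pixels > thresh become maxval; all other pixels become 0."""
    return [[maxval if px > thresh else 0 for px in row] for row in gray]


if __name__ == "__main__":
    gray = [[10, 220, 221],
            [255, 0, 230]]
    print(binary_threshold(gray, 220))
```

Note that a pixel exactly equal to the threshold (220 here) becomes black, matching the "<= threshold becomes 0" behaviour described in the comments above.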

Contour Detection

A simple object detection technique is contour finding, which finds the outlines of shapes in an image. OpenCV can return different information about the contours it finds, including outer contours only, full hierarchies, and simple lists. See the findContours() function in the OpenCV documentation for more information (http://docs.opencv.org/modules/imgproc/doc/structural_analysis_and_shape_descriptors.html#findcontours).

In this example, we find the outermost contours of each shape (the outline of the pucks), and return a shape-approximation of the object:

##
# Finds the outer contours of a binary image and returns a shape-approximation
# of them. Because only the outer contours are retrieved, the hierarchy
# returned by OpenCV is not needed and is discarded.
# In OpenCV 2.x, findContours() modifies its input image, so a copy is passed.
##
def find_contours(image):
    (contours, hierarchy) = cv2.findContours(image.copy(), mode=cv2.cv.CV_RETR_EXTERNAL, method=cv2.cv.CV_CHAIN_APPROX_SIMPLE)
    return contours

#####################################################
# If you have created a binary image as above and stored it in "img_threshold",
# the following code will find the contours of your image:
contours = find_contours(img_threshold)

# Here, we create a new RGB image to display our results on
results_image = new_rgb_image(img_threshold.shape[1], img_threshold.shape[0])
cv2.drawContours(results_image, contours, -1, cv2.cv.RGB(255,0,0), 2)

# Display Results
cv2.imshow("results", results_image)

Finding Centroids of Contours

Once you have found your contours, you may want to find the center of each shape. OpenCV can do this by computing the moments (http://en.wikipedia.org/wiki/Image_moment) of each contour; the centroid is (m10/m00, m01/m00), where m00 is the contour's area. The following functions can be used to find and display the centroids of a list of contours:

##
# Finds the centroids of a list of contours returned by
# the find_contours (or cv2.findContours) function.
# The centroid is (m10/m00, m01/m00). If the area moment m00 of a contour
# is 0, its centroid cannot be computed and the contour is skipped. Therefore
# the number of centroids returned by this function may be smaller than
# the number of contours passed in.
#
# The return value from this function is a list of (x,y) tuples, where each
# (x,y) tuple denotes the center of a contour.
##
def find_centers(contours):
    centers = []
    for contour in contours:
        moments = cv2.moments(contour, True)
        # Skip contours with zero area to prevent division by zero.
        if moments['m00'] == 0:
            continue
        center = (moments['m10']/moments['m00'], moments['m01']/moments['m00'])
        # Convert the floating point contour center into integers so that
        # we can display it later.
        center = tuple(int(round(x)) for x in center)
        centers.append(center)
    return centers

##
# Draws circles on an image from a list of (x,y) tuples
# (like those returned from find_centers()). Circles are
# drawn with a radius of 20 px and a line width of 2 px.
##
def draw_centers(centers, image):
    for center in centers:
        cv2.circle(image, tuple(center), 20, cv2.cv.RGB(0,255,255), 2)

#####################################################
# If you have computed the contours of your binary image as above
# and created an RGB image to show your algorithm's output, this will
# draw circles around each detected contour:
centers = find_centers(contours)
cv2.drawContours(results_image, contours, -1, cv2.cv.RGB(255,0,0), 2)
draw_centers(centers, results_image)
cv2.imshow("results", results_image)
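The centroid formula is easiest to see on a binary pixel grid. This plain-Python sketch (`grid_centroid` is a hypothetical name) sums pixel coordinates to get m00, m10, and m01 and divides. It is for illustration only: cv2.moments() on a contour integrates over the contour polygon rather than counting pixels, so its results can differ slightly.

```python
# Plain-Python illustration of centroid = (m10/m00, m01/m00) on a binary grid.
# For real images use cv2.moments(); this version counts pixels instead of
# integrating over the contour polygon.

def grid_centroid(binary):
    """Return the (x, y) centroid of the nonzero pixels, or None if there are none."""
    m00 = m10 = m01 = 0
    for y, row in enumerate(binary):
        for x, pixel in enumerate(row):
            if pixel:
                m00 += 1  # area (number of set pixels)
                m10 += x  # sum of x coordinates
                m01 += y  # sum of y coordinates
    if m00 == 0:
        return None  # empty image: avoid dividing by zero
    return (m10 / m00, m01 / m00)


if __name__ == "__main__":
    image = [[0] * 6 for _ in range(6)]
    for y in range(2, 5):
        for x in range(1, 4):
            image[y][x] = 1  # 3x3 square covering x = 1..3, y = 2..4
    print(grid_centroid(image))
```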

Image Transforms

Because your cameras are (likely) not mounted directly above the center of the field, you will want to perform a perspective transform on the incoming video frames to correct for the skew in the image. Generally, this is done by choosing 4 points with known locations on a rectangle and finding the matrix that rotates/skews the image so that the 4 points selected on the original image land in their correct locations. In this example, the user clicks on the 4 corners of the board during initialization, in the order top-left, bottom-left, top-right, bottom-right. A more advanced algorithm could use the fiducials (http://en.wikipedia.org/wiki/Fiduciary_marker) located on the 4 corners of the board.

# Global variable containing the 4 points selected by the user in the corners of the board
corner_point_list = []

##
# This function is called by OpenCV when the user clicks
# anywhere in a window displaying an image.
##
def mouse_click_callback(event, x, y, flags, param):
    if event == cv2.EVENT_LBUTTONDOWN:
        print("Click at (%d,%d)" % (x,y))
        corner_point_list.append( (x,y) )

##
# Computes a perspective transform matrix by capturing a single
# frame from a video source and displaying it to the user for
# corner selection.
#
# Parameters:
# * dev: Video Device (from open_camera())
# * board_size: A tuple/list with 2 elements containing the width and height (respectively) of the gameboard (in arbitrary units, like inches)
# * dpi: Scaling factor for elements of board_size
# * calib_file: Optional. If specified, the perspective transform matrix is saved under this filename.
#   This file can be loaded later to bypass the calibration step (assuming nothing has moved).
#
# Returns the 3x3 transform matrix, or None if calibration failed or was cancelled.
##
def get_transform_matrix(dev, board_size, dpi, calib_file = None):
    # Read a frame from the video device
    img = get_frame(dev)
    if img is None:
        return None
    # Display image to user
    cv2.imshow("Calibrate", img)
    # Register the mouse callback on this window. When
    # the user clicks anywhere in the "Calibrate" window,
    # the function mouse_click_callback() is called (defined above)
    cv2.setMouseCallback("Calibrate", mouse_click_callback)
    # Wait until the user has selected 4 points
    while True:
        # If the user has selected all 4 points, exit loop.
        if (len(corner_point_list) >= 4):
            print("Got 4 points: " + str(corner_point_list))
            break
        # If the user hits a key, exit loop, otherwise remain.
        if (cv2.waitKey(10) >= 0):
            break
    # Close the calibration window:
    cv2.destroyWindow("Calibrate")
    # If the user selected 4 points
    if (len(corner_point_list) >= 4):
        # Do calibration
        # src is a list of 4 points on the original image selected by the user
        # in the order [TOP_LEFT, BOTTOM_LEFT, TOP_RIGHT, BOTTOM_RIGHT]
        # (only the first 4 clicks are used)
        src = numpy.array(corner_point_list[:4], numpy.float32)
        # dest is a list of where these 4 points should be located on the
        # rectangular board (in the same order):
        dest = numpy.array( [ (0, 0), (0, board_size[1]*dpi), (board_size[0]*dpi, 0), (board_size[0]*dpi, board_size[1]*dpi) ], numpy.float32)
        # Calculate the perspective transform matrix
        trans = cv2.getPerspectiveTransform(src, dest)
        # If we were given a calibration filename, save this matrix to a file
        if calib_file:
            numpy.savetxt(calib_file, trans)
        return trans
    else:
        return None

#####################################################
### Calibration Example ###
if __name__ == "__main__":
    cam_id = 0
    dev = open_camera(cam_id)
    # The size of the board in inches, measured between the two
    # robot boundaries:
    board_size = [22.3125, 45]
    # Number of pixels to display per inch in the final transformed image. This
    # was selected somewhat arbitrarily (I chose 17 because it fit on my screen):
    dpi = 17
    # Calculate the perspective transform matrix
    transform = get_transform_matrix(dev, board_size, dpi)
    if transform is None:
        # Calibration failed or was cancelled: release the camera and quit
        dev.release()
        raise SystemExit("Calibration cancelled")
    # Size (in pixels) of the transformed image
    transform_size = (int(board_size[0]*dpi), int(board_size[1]*dpi))
    while True:
        img_orig = get_frame(dev)
        if img_orig is not None: # if we did get an image
            # Show the original (untransformed) image
            cv2.imshow("video", img_orig)
            # Apply the transformation matrix to skew the image and display it
            img = cv2.warpPerspective(img_orig, transform, dsize=transform_size)
            cv2.imshow("warped", img)
        else: # if we failed to capture (camera disconnected?), then quit
            break
        if (cv2.waitKey(2) >= 0):
            break
    # Close all windows and release the video device before exit
    cv2.destroyAllWindows()
    dev.release()
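cv2.getPerspectiveTransform() finds the 3x3 matrix that maps the four clicked corners onto the corners of the rectangle. The plain-Python sketch below shows the underlying math under a common formulation: fix the bottom-right matrix entry to 1, rearrange each point correspondence into two linear equations, and solve the resulting 8x8 system. It is an illustration, not OpenCV's implementation; the helper names are hypothetical, and the point order follows the TL, BL, TR, BR convention used in get_transform_matrix().

```python
# Plain-Python sketch of the math behind a 4-point perspective transform.
# For illustration only; use cv2.getPerspectiveTransform() in real code.

def solve_linear(a, b):
    """Solve a*x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / m[r][r]
    return x


def perspective_matrix(src, dst):
    """3x3 matrix H (with H[2][2] = 1) mapping each src point onto its dst point."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), rearranged to be linear
        a.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        b.append(u)
        # v = (h3*x + h4*y + h5) / (h6*x + h7*y + 1), rearranged the same way
        a.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_matrix(matrix, point):
    """Map one (x, y) point through a 3x3 perspective matrix."""
    x, y = point
    w = matrix[2][0] * x + matrix[2][1] * y + matrix[2][2]
    u = (matrix[0][0] * x + matrix[0][1] * y + matrix[0][2]) / w
    v = (matrix[1][0] * x + matrix[1][1] * y + matrix[1][2]) / w
    return (u, v)


if __name__ == "__main__":
    # Corners clicked on a skewed image, in the order TL, BL, TR, BR
    src = [(10, 10), (0, 100), (90, 20), (100, 110)]
    # Where those corners belong on a 50 x 100 pixel top-down view
    dst = [(0, 0), (0, 100), (50, 0), (50, 100)]
    H = perspective_matrix(src, dst)
    for p in src:
        print(p, "->", apply_matrix(H, p))
```

The division by `w` is what makes this a perspective (rather than affine) transform: points farther from the camera are compressed differently from nearby ones.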

Final Puck Detector Application

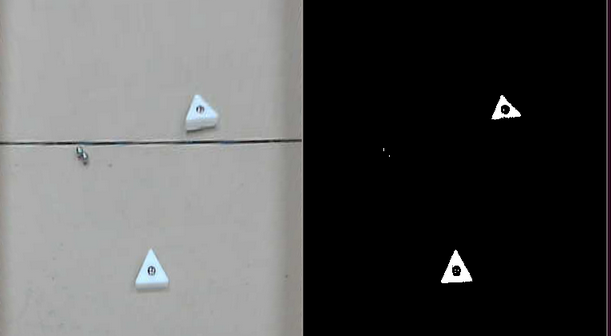

Putting all of the above together, we can find pucks and their centers from a video stream. The complete source code can be downloaded from http://classes.engr.oregonstate.edu/engr/spring2013/engr421-001/code/opencv/puck_detector.py.

From left to right: original image; image after perspective transform; binary image (thresholding); contours (red) and centroids (blue) of the image.

Changing Camera Settings on Linux

If you have a camera whose settings need adjusting before it works well on Linux, try the "uvcdynctrl" tool. To get the Logitech webcams to work, I had to change their exposure setting to keep them from continuously adjusting themselves. Unfortunately, OpenCV can't control all aspects of webcams, so an external tool is needed. Here's an example of using "uvcdynctrl" to list the available devices and controls, and to query and set control values:

# Available Devices

$ uvcdynctrl --list

Listing available devices:

video1 Camera

Media controller device /dev/media1 doesn't exist

ERROR: Unable to list device entities: Invalid device or device cannot be opened. (Code: 5)

video0 Integrated Camera

Media controller device /dev/media0 doesn't exist

ERROR: Unable to list device entities: Invalid device or device cannot be opened. (Code: 5)

# Available Controls

$ uvcdynctrl -d video1 --clist

Listing available controls for device video1:

Hue

Exposure

Gain

# Get a value

$ uvcdynctrl -d video1 -g 'Exposure'

60

# Set a Value

$ uvcdynctrl -d video1 -s 'Exposure' -- 30

$ uvcdynctrl -d video1 -g 'Exposure'

30

Microcontrollers/Getting Started

Basics of Microcontrollers

A microcontroller is a programmable integrated circuit containing a microprocessor, memory, and I/O peripherals for general purpose control in embedded applications.

Typical Peripherals

Timer/Counters

Most microcontrollers contain timer/counter peripherals that increment a counter register on each cycle of an input clock (internally or externally generated). These peripherals are used for numerous purposes, including program timing, timing external signals (e.g., encoders or pulse widths), and generating PWM signals.
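Timing an external signal usually means reading the counter twice and taking the difference, which must account for the counter wrapping around. This is a minimal Python sketch of that arithmetic; the 16 MHz clock, prescaler of 8, and helper names are assumptions for illustration, and it assumes the pulse is shorter than one full timer period.

```python
# Sketch: turn two readings of a free-running 16-bit timer/counter into a
# pulse width. Assumptions (for illustration only): 16 MHz clock, prescaler 8,
# and the pulse is shorter than one full timer period, so the counter wrapped
# at most once between the two readings.

TIMER_MODULUS = 1 << 16  # a 16-bit counter wraps from 0xFFFF back to 0


def elapsed_ticks(start, end):
    """Ticks from start to end, correct even if the counter wrapped once."""
    return (end - start) % TIMER_MODULUS


def ticks_to_seconds(ticks, f_clk=16000000, prescaler=8):
    """Each tick lasts prescaler / f_clk seconds."""
    return ticks * prescaler / f_clk


if __name__ == "__main__":
    ticks = elapsed_ticks(65000, 500)  # counter wrapped during the pulse
    print(ticks, ticks_to_seconds(ticks))
```

On the microcontroller itself, storing both readings in 16-bit unsigned variables and subtracting gives the same wrapped result automatically.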

Analog-to-Digital Converters

Microcontrollers often contain analog-to-digital converters (ADCs) to read analog voltages. An ADC converts a voltage, on command or periodically, into a digital code that the processor can read. Some microcontroller ADCs contain an internal reference voltage, while others may require an external reference to operate.
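Turning an ADC code back into a voltage only requires the reference voltage and the resolution. This Python sketch assumes the single-ended relationship ADC = Vin * 2^bits / Vref given in AVR datasheets; the 10-bit resolution and 5 V reference are example defaults, and `adc_to_voltage` is a hypothetical name.

```python
# Sketch: convert a raw ADC result to a voltage. Assumes the single-ended AVR
# relationship ADC = Vin * 2**bits / Vref; the 10-bit resolution and 5 V
# reference are example defaults, not requirements.

def adc_to_voltage(code, vref=5.0, bits=10):
    """Return the input voltage corresponding to an ADC code."""
    full_scale = 1 << bits
    if not 0 <= code < full_scale:
        raise ValueError("ADC code out of range for %d bits" % bits)
    return code * vref / full_scale


if __name__ == "__main__":
    print(adc_to_voltage(512))              # mid-scale with a 5 V reference
    print(adc_to_voltage(1023, vref=2.56))  # near full scale with a 2.56 V reference
```

Because the result scales directly with Vref, any error in the reference voltage shows up as a proportional error in every reading.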

Digital-to-Analog Converters

Serial Transceivers (USARTs)

Serial Peripheral Interfaces

I2C Interfaces

Clock/Timing Control

Real Time Clocks

USB Host/Node Controllers

Interrupt Controllers

Common Microcontroller Families

Microcontrollers/Serial Communication

Serial Communication Basics

Serial communication is a means of sending data between devices one bit at a time. It requires only a few wires and I/O pins per device. Most serial devices send and receive byte-oriented data (8 bits per transaction) and have dedicated transmit and receive lines (full-duplex). Serial communication is typically handled by dedicated hardware peripherals known as USARTs (Universal Synchronous-Asynchronous Receiver-Transmitters) that handle the low-level formatting, shifting, and recovery of data.

Most ATmega series AVR microcontrollers have 1-2 USARTs available. Some modern microcontrollers have 5-7 separate USARTs.

Serial Frame Structure

Serial transactions are broken up into units called frames. Each frame consists of the payload data being sent along with a fixed number of extra bits that "frame" or format the data. Most USARTs, including the AVR USARTs, are compatible with the frame structure described below.

The serial line idles at a logic-high level until a transmission starts. Each transmission begins with a single logic-low bit known as the start bit. After the start bit, the payload data is shifted out, typically least-significant bit first. After the last data bit, an optional parity bit is sent. The parity bit is set to 0 or 1 depending on the number of logic-1 bits in the data, and the receiver can use it to detect an erroneous transmission in which one of the bits was flipped. Finally, the serial line returns to logic high for a configurable period of 1 or 2 bit times; these are known as stop bits. AVR USARTs can send frames with 5-9 data bits, even, odd, or no parity, and 1 or 2 stop bits. In the frame above, a logic-high signal corresponds to a '0' while a logic-low signal corresponds to a '1'.
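The frame layout described above can be generated programmatically, which is a handy way to check a logic-analyzer capture. This Python sketch writes each bit as a value, with 1 for logic high and 0 for logic low following the idle-high convention (start bit 0, stop bits 1); `frame_bits` is a hypothetical name.

```python
# Sketch: list the bits of one asynchronous serial frame in transmit order.
# Bits are written as values (1 = logic high, 0 = logic low) following the
# idle-high convention: start bit 0, data LSB first, optional parity bit,
# then stop bits at 1.

def frame_bits(byte, data_bits=8, parity=None, stop_bits=1):
    """parity may be None, "even", or "odd"; stop_bits may be 1 or 2."""
    if not 5 <= data_bits <= 9:
        raise ValueError("data_bits must be 5-9")
    if stop_bits not in (1, 2):
        raise ValueError("stop_bits must be 1 or 2")
    data = [(byte >> i) & 1 for i in range(data_bits)]  # least significant bit first
    bits = [0] + data                                    # start bit, then data
    ones = sum(data)
    if parity == "even":
        bits.append(ones % 2)      # makes the total count of 1s even
    elif parity == "odd":
        bits.append(1 - ones % 2)  # makes the total count of 1s odd
    elif parity is not None:
        raise ValueError("parity must be None, 'even', or 'odd'")
    bits += [1] * stop_bits        # line returns high for the stop bit(s)
    return bits


if __name__ == "__main__":
    print(frame_bits(0xAA))
    print(frame_bits(0x01, parity="even", stop_bits=2))
```

A common "8N1" frame (8 data bits, no parity, 1 stop bit) is therefore 10 bit times long, so only 80% of the raw bit rate carries payload.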

Synchronous vs. Asynchronous Serial

Serial communication with a USART can be done either synchronously (data with a dedicated clock signal) or asynchronously (data without a dedicated clock signal). A synchronous serial line requires extra wiring for the clock signal, which is often unnecessary at typical serial data rates. Most standard serial communication, especially between a PC and a microcontroller, is done asynchronously.

Baud Rate

The baud rate specifies the rate at which bits are sent, in bits per second; it is the reciprocal of the bit time in seconds (for example, 9600 baud corresponds to a bit time of about 104 µs). Setting the baud rate correctly for asynchronous serial is very important because the receiver must recover the timing of the incoming data from a predetermined clock frequency. Common baud rates available on a PC are 1.2k, 2.4k, 4.8k, 9.6k, 19.2k, 38.4k, 57.6k, 115.2k, 230.4k, 460.8k, and 921.6k.
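On an AVR the baud rate is set by an integer register (UBRR), so the achieved rate is usually slightly off from the requested one. This Python sketch uses the asynchronous-mode formulas from the AVR datasheets, UBRR = f_osc / (16 * baud) - 1 in normal mode and f_osc / (8 * baud) - 1 with the U2X double-speed bit set; the helper names are hypothetical.

```python
# Sketch of the AVR asynchronous baud rate calculation.
# Normal mode:        UBRR = f_osc / (16 * baud) - 1
# Double speed (U2X): UBRR = f_osc / (8 * baud) - 1

def ubrr_value(f_osc, baud, double_speed=False):
    """Nearest integer UBRR setting for the requested baud rate."""
    divisor = 8 if double_speed else 16
    return round(f_osc / (divisor * baud)) - 1


def actual_baud(f_osc, ubrr, double_speed=False):
    """Baud rate the USART really produces for a given UBRR setting."""
    divisor = 8 if double_speed else 16
    return f_osc / (divisor * (ubrr + 1))


def baud_error_percent(f_osc, baud, double_speed=False):
    """Percentage difference between the achieved and requested baud rates."""
    ubrr = ubrr_value(f_osc, baud, double_speed)
    return (actual_baud(f_osc, ubrr, double_speed) / baud - 1.0) * 100.0


if __name__ == "__main__":
    for u2x in (False, True):
        ubrr = ubrr_value(16000000, 115200, u2x)
        print(u2x, ubrr, round(baud_error_percent(16000000, 115200, u2x), 2))
```

At 16 MHz and 115200 baud this gives UBRR = 8 with about -3.5% error in normal mode, or UBRR = 16 with about +2.1% error in double-speed mode, which is why the double-speed setting is usually preferred at that rate.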

Logic Levels

Many different standard serial logic levels are in use. Most PC interfaces use RS-232 serial, which specifies logic levels between -15 V and -3 V and between +3 V and +15 V, with idle-low lines. AVR USARTs use CMOS logic levels between 0 and 5 V and have idle-high serial lines that are typically compatible with standard 3.3 V or 5 V logic outputs. Devices must use matching serial logic levels, so a level shifter may be required between them.

Texas Instruments manufactures RS-232 to CMOS/TTL level shifters. These devices have a built-in charge pump that generates the RS-232 voltage levels and require only a few external components. An example of these chips is the MAX232 (http://www.ti.com/product/max232).

Setting up a USART on an ATmega Microcontroller

The following example shows how to set up a serial port on an ATmega128 using USART0 as an asynchronous serial device operating at 115200 baud, with a 16 MHz clock. The program initializes USART0 and sends the byte 0xAA on its transmitter. It then continuously checks its receiver for new data and sets PB0 high if it receives the byte 0xAA. Connecting the Tx and Rx lines together gives a quick loopback test and demonstrates basic USART concepts.

/**************************************************
 *
 * AVR Microcontroller USART0 Example
 * Cody Hyman <hymanc@onid.orst.edu>
 *
 **************************************************/
#include <avr/io.h>

/* Initialize USART0: ~115.2 kbps, 8 data bits, no parity, 1 stop bit (16 MHz clock) */
void init_usart(void)
{
    // Double-speed mode (U2X0) with UBRR = 16 gives 117.6 kbps (+2.1% error);
    // normal mode with UBRR = 8 would give 111.1 kbps (-3.5% error).
    UCSR0A = (1<<U2X0);
    UBRR0H = 0; // Initialize the baud rate registers
    UBRR0L = 16;
    UCSR0C = (1<<UCSZ00)|(1<<UCSZ01); // Set the USART data size to 8 bits
    UCSR0B = (1<<RXEN0)|(1<<TXEN0); // Enable the receiver and transmitter
    DDRE |= (1<<1); // Set the Tx line (PE1/TXD0) to be an output
}

/* Blocking single byte transmit on USART0 */
void transmit_usart0(uint8_t byte)
{
    // Wait until the transmit data register is empty (UDRE0 set).
    // TXC0 is never set before the first transmission, so waiting on it would hang.
    while(!(UCSR0A & (1<<UDRE0)));
    UDR0 = byte; // Start next transmission by writing to UDR0
}

/* Checks the USART0 Rx complete status flag */
uint8_t usart_has_rx_data(void)
{
    return (UCSR0A & (1<<RXC0)) != 0; // Return the RX complete status flag
}

/* Reads data from the USART receive buffer */
uint8_t receive_usart0(void)
{
    uint8_t ret_val = UDR0; // Read data register
    return ret_val; // Return read value
}

int main(void)
{
    init_usart(); // Run USART initialization routine
    DDRB |= (1<<0); // Initialize ATmega128 board LED 0
    uint8_t received_data = 0; // Receive data buffer
    transmit_usart0(0xAA); // Transmit 0xAA
    while(1) // Main loop
    {
        if(usart_has_rx_data()) // Check for received data
        {
            received_data = receive_usart0(); // If yes, get the data
            if(received_data == 0xAA) // Check the data (comparison, not assignment)
                PORTB |= (1<<0); // If it matches, turn on LED
            else
                PORTB &= ~(1<<0); // Else turn off LED
        }
    }
    return 0;
}

USB - Serial Bridges

Most modern PCs do not have a dedicated RS-232 serial port, so an alternative must be used. Many vendors sell low-cost USB adapters for RS-232 as well as TTL or LVTTL levels. Many of these devices require drivers, so follow all manufacturer setup instructions.

Plugable USB to RS-232 Adapter

FTDI UMFT230XB USB to 3.3V Serial Adapter

FTDI TTL-232R-5V-PCB USB to 5V (or 3.3V) Serial Adapter

On Windows machines

Serial adapters typically enumerate as a COMx port. Check your device manager to determine the COM port number.

On Linux machines

Serial adapters typically enumerate as ttyUSBx devices. Check your /dev/ directory for ttyUSB devices to determine the tty device number.

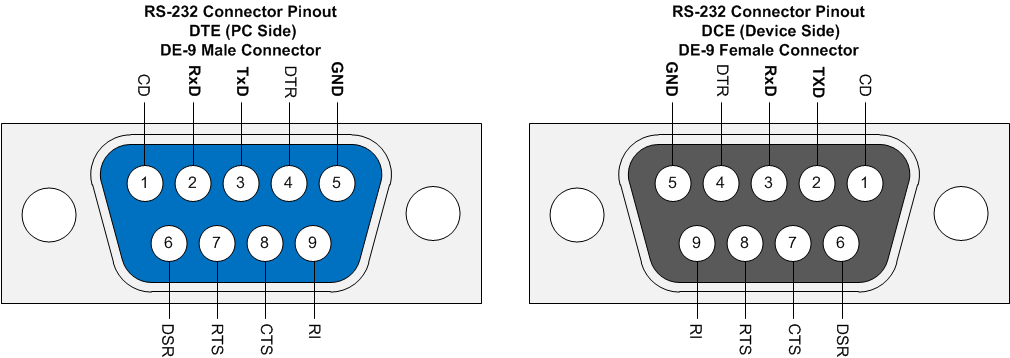

RS-232 Pinouts

The following pinouts are commonly found on standard RS-232 DE-9 connectors. The male DTE pinout corresponds to the pins on the PC or serial adapter side. The female DCE pinout corresponds to the end device, such as a microcontroller board with an RS-232 port. RS-232 also specifies a number of other flow control lines that were commonly used with old serial modems; however, in most cases only the TxD/RxD and ground lines are needed.

Common Errors

Common errors include wiring the Tx/Rx pins of the DCE-side connector incorrectly, or accidentally using an RS-232 crossover (null-modem) cable instead of a straight-through cable. Check these first if your RS-232 communication does not work.

Serial Terminal Software

A variety of serial terminal programs exist that allow quick sending and receiving of serial data. These are useful for debugging and testing serial devices.

Windows Serial Programs

Realterm - A very capable GUI-based serial terminal - http://realterm.sourceforge.net/

Linux Serial Programs

Minicom - A very popular terminal-based serial interface program - Debian package name "minicom"

Cutecom - A GUI-based serial terminal program - Debian package name "cutecom"

Python Serial Module (PySerial)

PySerial is an easy-to-use, cross-platform library for serial communication from a PC. It handles serial port management and sends and receives data over a COM port on Windows or a tty device on Linux. Current PySerial releases support Python 3, which should be preferred now that Python 2.7 has reached end of life. PySerial and the accompanying documentation can be found at http://pyserial.sourceforge.net/

Microcontrollers/Reading Sensors

Reading Digital Inputs

One-bit digital sensors are the simplest type of input to read on a microcontroller. Most microcontroller pins can be used as general-purpose I/O, which allows many such sensors to be connected. Examples include limit switches, IR proximity detectors, high-gain photodetectors (light sensors), and pushbuttons.

Binary on/off sensors such as switches are easy to integrate: a pull-up or pull-down resistor on the switch output holds the pin at a defined logic level while the switch is open.

To read a sensor on an AVR microcontroller, two registers must be used to handle pin setup and reading. These two registers are:

- DDRx

- PINx

where x indicates a particular I/O port (e.g. A, B, C, D).

The DDR (Data Direction Register) configures each pin of a port as an input or an output. A 1 in a bit position configures the corresponding pin as an output, and a 0 configures it as an input.

The PIN register is used to read the digital state of all 8 pins on a port; each bit reads 1 if its pin is at logic high and 0 if it is at logic low. Note that this is not the PORTx register: PORTx is used for setting output values, while PINx is used for reading inputs.

To use a digital input, you must configure the pin as an input, and then read the appropriate bit from the PINx register. The simplest way to extract a single bit from PINx is to use a bitmask.

DDRA = 0b00000000; // All PORTA pins configured as inputs

if (PINA & 0b00000100) {

// if PA2 is high

}

else {

// if PA2 is low

}

if ((PINA & 0b00001000) == 0) {

// if PA3 is low

}

In these examples, the bitmask removes the state of all other pins on the port from the conditional. As with digital outputs, you can use AVR-GCC macros to make your code easier to read:

if ((PINA & (1<<PIN3)) == 0) {

// if PA3 is low

}
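The masking logic does not depend on the hardware, so it can be checked on a PC by passing an ordinary byte in place of a PINx snapshot. The helper names below are hypothetical; on the AVR you would call them as, for example, `pin_is_high(PINA, 2)`. The second helper assumes a common wiring in which a pull-up resistor holds the pin high and the switch connects it to ground, so a pressed switch reads 0.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical helper: true if bit `bit` (0-7) of a PINx snapshot is high. */
static bool pin_is_high(uint8_t pin_reg, uint8_t bit)
{
    return (pin_reg & (uint8_t)(1u << bit)) != 0; /* mask off all other pins */
}

/* Hypothetical helper for a switch wired with a pull-up resistor to Vcc
 * and the switch to ground: the pin reads 0 while the switch is pressed. */
static bool switch_pressed_active_low(uint8_t pin_reg, uint8_t bit)
{
    return !pin_is_high(pin_reg, bit);
}
```

avr-libc also provides `bit_is_set()` and `bit_is_clear()` macros that perform the same test directly on a register.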

Reading Analog Inputs

Many sensors measure a continuous signal, such as the position of a knob, the light level in a room, or the distance of an object from the sensor. Often, the output of these sensors is a voltage that is linearly related to the measured quantity. To interface with these sensors, you must use an Analog-to-Digital Converter (ADC). Most microcontrollers include an ADC, typically shared among several input pins through a multiplexer. An ADC converts the voltage into an integer whose range depends on the resolution of the ADC. Most ADCs are 8- or 10-bit, meaning they use 8 or 10 bits to represent the entire input range (from 0 to Vcc). A 10-bit ADC discretizes the analog voltage into 2^10 = 1024 values, ranging from 0 (0 V) to 1023 (Vcc).
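To turn a reading back into a voltage, the AVR datasheets give the single-ended conversion result as ADC = Vin * 1024 / Vref, so Vin = ADC * Vref / 1024. The minimal sketch below works in integer millivolts (the function name is hypothetical). It also shows that a full-scale reading of 1023 actually sits one step, about 4.9 mV with a 5 V reference, below Vref.

```c
#include <stdint.h>

/* Hypothetical helper: convert a 10-bit ADC result (0..1023) to millivolts,
 * given the reference voltage in millivolts (e.g. 5000 for Vcc = 5 V). */
static uint16_t adc_to_millivolts(uint16_t adc, uint16_t vref_mv)
{
    /* 32-bit intermediate: 1023 * 5000 does not fit in 16 bits. */
    return (uint16_t)(((uint32_t)adc * vref_mv) / 1024u);
}
```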

AVR ADCs require several steps to configure and read an analog signal. In addition, only certain pins on the microcontroller can be used as analog inputs. Refer to your microcontroller's datasheet to find which pins can be used with the ADC (they are typically labeled ADCn, where n is a number). The registers involved with ADCs are:

- DDRx: Configures the pin as an input

- ADMUX: Selects the ADC input being read

- ADCSRA: ADC Control and Status Register A

- ADCL: Low 8 bits of the ADC conversion

- ADCH: High bits of the ADC conversion

Because ADC pins can also be used as digital inputs/outputs, they must be configured as inputs to be read by the ADC. The ADMUX register selects which ADC channel is converted; for single-ended inputs, its MUX bits are simply a number from 0 to N, where N is the highest-numbered ADC pin on the MCU. ADCSRA (ADC Control and Status Register A) is used to start a conversion and to check when it has completed. ADCL and ADCH contain the result of the conversion.
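Writing a plain channel number to ADMUX, as in `ADMUX = 0` below, also clears the reference-selection (REFS) and ADLAR bits that share the register. When switching channels in a running program, a read-modify-write that touches only the MUX bits avoids that. This sketch assumes the ATmega128/AT90USB64 layout (REFS1, REFS0, ADLAR, MUX4..MUX0 from bit 7 down to bit 0); other AVRs have fewer MUX bits, so check the mask against your datasheet. The helper and mask names are hypothetical.

```c
#include <stdint.h>

/* Assumed ADMUX layout: REFS1 REFS0 ADLAR MUX4 MUX3 MUX2 MUX1 MUX0. */
#define ADMUX_MUX_MASK 0x1Fu

/* Hypothetical helper: return a new ADMUX value that selects `channel`
 * while keeping the reference (REFS) and ADLAR bits unchanged. */
static uint8_t admux_select_channel(uint8_t admux, uint8_t channel)
{
    return (uint8_t)((admux & ~ADMUX_MUX_MASK) | (channel & ADMUX_MUX_MASK));
}
```

On the AVR this would be used as `ADMUX = admux_select_channel(ADMUX, 1);` before starting a conversion on ADC1.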

// Configuration:

DDRF &= ~(1<<PIN0); // Configure PF0 as an input (connected to ADC0 on AT90USB64)

// Reading ADC:

ADMUX = 0; // Read ADC0 (this also sets REFS1:0 = 00, selecting the external AREF pin as the reference)

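ADCSRA |= (1<<ADEN) | (1<<ADSC) | (1<<ADPS0); // Enable ADC (ADEN), start a conversion (ADSC), clock/2 prescaler (ADPS0): fast, but at typical clock speeds above the 50-200 kHz ADC clock recommended for full 10-bit accuracy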

while (ADCSRA & (1<<ADSC)); // Wait for conversion to complete: ADSC reads as 1 until it finishes (ADIF would stay set after the first conversion unless cleared)

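uint8_t low = ADCL; // ADCL must be read first; this locks the result until ADCH is read

uint16_t value = low | (ADCH<<8); // Combine low and high portions of result (separate statements fix the read order)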
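The example above uses the /2 prescaler for speed. The AVR datasheets state that the ADC needs a clock between 50 kHz and 200 kHz for full 10-bit resolution, so at typical CPU clock rates a larger prescaler is usually needed. The hypothetical helper below picks the smallest prescaler that keeps the ADC clock at or below 200 kHz and returns the matching ADPS2:0 value (1 selects /2, 7 selects /128), or -1 if even /128 is too fast.

```c
#include <stdint.h>

/* Hypothetical helper: return the ADPS2:0 value for the smallest prescaler
 * (2, 4, ..., 128) that brings the ADC clock to 200 kHz or below, or -1. */
static int adc_prescaler_bits(uint32_t f_cpu)
{
    for (int bits = 1; bits <= 7; bits++) {
        uint32_t adc_clock = f_cpu >> bits; /* f_cpu / 2^bits */
        if (adc_clock <= 200000UL)
            return bits;
    }
    return -1; /* clock too fast for any prescaler setting */
}
```

At initialization, after checking the result is not -1, it can be OR'ed into the low three bits of ADCSRA, e.g. `ADCSRA |= (1<<ADEN) | adc_prescaler_bits(F_CPU);`. For a 16 MHz part this selects /128, giving a 125 kHz ADC clock.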