Structured Query Language/Print version


The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/Structured_Query_Language

About the Book

It's a Translation and a Guide

This Wikibook introduces the programming language SQL as defined by ISO/IEC. Like most standards publications, the standard is fairly technical and neither easy to read nor easy to understand. Therefore there is a demand for a text explaining the key features of the language. That is what this Wikibook strives to do: we want to present an easily readable and understandable introduction for everyone interested in the topic.

Manuals and white papers from database vendors are mainly focused on the technical aspects of their product. As they want to set themselves apart from each other, they tend to emphasize those aspects which go beyond the SQL standard and the products of other vendors. This is contrary to the Wikibook's approach: we want to emphasize the common aspects.

The main audience of this Wikibook is people who want to learn the language, either as beginners or as persons with existing knowledge and some degree of experience looking for a recapitulation.

What this Wikibook is not

First of all, the Wikibook is not a reference manual for the syntax of standard SQL or any of its implementations. Reference manuals usually consist of definitions and explanations for those definitions. By contrast, the Wikibook tries to present concepts and basic commands through textual descriptions and examples. Of course, some syntax will be demonstrated. On some pages, there are additional hints about small differences between the standard and particular implementations.

The Wikibook is also not a complete tutorial. First, its focus is the standard and not any concrete implementation. When learning a computer language it is necessary to work with it and experience it personally. Hence, a concrete implementation is needed, and most of them differ more or less from the standard. Second, the Wikibook is far from reflecting the complete standard: the central part of the standard alone consists of about 18 MB of text on more than 1,400 pages. But you can use the Wikibook as a companion for learning SQL.

How to proceed

For everyone new to SQL, it will be necessary to study the chapters and pages from beginning to end. For persons who have some experience with SQL or are interested in a specific aspect, it is possible to navigate directly to any page.

Knowledge about any other computer language is not necessary, but it will be helpful.

The Wikibook consists of descriptions, definitions, and examples. It should be read with care. Furthermore, it is absolutely necessary to do some experiments with data and data structures personally. Hence, access to a concrete database system, where you can do read-only and read-write tests, is necessary. For those tests, you can use our example database or individually defined tables and data.

Conventions

The elements of the language SQL are case-insensitive, e.g., it makes no difference whether you write SELECT ..., Select ..., select ... or any combination of upper and lower case characters like SeLecT. For readability reasons, the Wikibook uses the convention that all language keywords are written in upper case letters and all names of user objects, e.g., table and column names, are written in lower case letters.

We will write short SQL commands on a single line.

SELECT street FROM address WHERE city = 'Duckburg';

For longer commands spanning multiple lines we use a tabular format.

SELECT street

FROM address

WHERE city IN ('Duckburg', 'Gotham City', 'Hobbs Lane');
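The case-insensitivity of keywords can be tried out directly. The following is a minimal sketch that assumes Python's built-in sqlite3 module and an in-memory SQLite database; the table layout and the sample row are invented for illustration and are not part of the book's example database.

```python
import sqlite3

# In-memory SQLite database; the table mirrors the 'address' example above.
# The sample row is invented for this sketch.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE address (street VARCHAR(50), city VARCHAR(50))")
conn.execute("INSERT INTO address VALUES ('Main Street 1', 'Duckburg')")

# The same query written with three different keyword spellings.
variants = [
    "SELECT street FROM address WHERE city = 'Duckburg'",
    "select street from address where city = 'Duckburg'",
    "SeLecT street FrOm address wHeRe city = 'Duckburg'",
]
results = [conn.execute(sql).fetchall() for sql in variants]
print(results)

# Character string values are a different matter: with SQLite's default
# collation they are compared case-sensitively, so this finds nothing.
no_match = conn.execute(
    "SELECT street FROM address WHERE city = 'DUCKBURG'"
).fetchall()
print(no_match)
```

All three spellings return the same row. How string values are compared depends on the collation of the concrete system, so the last query may behave differently elsewhere.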

Database Management Systems (DBMS)

Historical Context

One of the original purposes of computer applications was storing large amounts of data on mass storage devices and retrieving them at a later point in time. Over time user requirements increased to include not only sequential access but also random access to data records, concurrent access by parallel (writing) processes, recovery after hardware and software failures, high performance, scalability, etc. In the 1970s and 1980s, the science and computer industries developed techniques to fulfill those requests.

What makes up a Database Management System?

The basic building blocks for efficient data storage, and therefore for all Database Management Systems (DBMS), are implementations of fast read and write access algorithms for data located in main memory and on mass storage devices, such as routines for B-trees, the Index Sequential Access Method (ISAM), and other indexing techniques, as well as buffering of dirty and non-dirty blocks. These algorithms are not unique to DBMS. They also apply to file systems, some programming languages, operating systems, application servers, and much more.

In addition to providing these routines, a DBMS guarantees compliance with the ACID paradigm. This compliance means that in a multi-user environment all changes to data within one transaction are:

- Atomic: all changes take place or none.

- Consistent: changes transform the database from one valid state to another valid state.

- Isolated: transactions of different users working at the same time will not affect each other.

- Durable: the database retains committed changes even if the system crashes afterward.
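Atomicity can be observed with a small experiment. The sketch below assumes Python's built-in sqlite3 module and an in-memory SQLite database; the account table, its CHECK constraint, and the amounts are hypothetical. A transfer consists of two UPDATE statements, and because the second one fails, the first one is undone as well.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical table: a balance must never become negative.
conn.execute(
    "CREATE TABLE account (id INTEGER PRIMARY KEY, "
    "balance INTEGER CHECK (balance >= 0))"
)
conn.execute("INSERT INTO account VALUES (1, 100), (2, 50)")
conn.commit()

# Transfer 150 from account 1 to account 2 as ONE transaction.
try:
    with conn:  # commits on success, rolls back if an exception occurs
        conn.execute("UPDATE account SET balance = balance + 150 WHERE id = 2")
        # Account 1 holds only 100, so this statement violates the CHECK.
        conn.execute("UPDATE account SET balance = balance - 150 WHERE id = 1")
except sqlite3.IntegrityError:
    pass  # the whole transfer has been rolled back

balances = conn.execute("SELECT id, balance FROM account ORDER BY id").fetchall()
print(balances)
```

Both balances are unchanged afterward: either all changes of the transaction take place or none.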

Classification of DBMS Design

A distinction between the following generations of DBMS design and implementation can be made:

- Hierarchical DBMS: Data structures are designed in a hierarchical parent/child model where every child has exactly one parent (except the root structure, which has no parent). The result is that the data is modeled and stored as a tree. Child rows are physically stored directly after the owning parent row, so there is no need to store the parent's ID or something like it within the child row (XML takes a similar approach). If an application processes data in exactly this hierarchical way, it is speedy and efficient. But if it is necessary to process data in a sequence that deviates from this order, access is less efficient. Furthermore, hierarchical DBMSs do not support the modeling of n:m relationships. Another drawback is that there is no possibility to navigate directly to data stored in lower levels. You must first navigate through the given hierarchy before reaching that data.

- The best-known hierarchical DBMS is IMS from IBM.

- Network DBMS: The network model designs data structures as a complex network with links from one or more parent nodes to one or more child nodes. Even cycles are possible. There is no need for a single root node. In general, the terms parent node and child node lose their hierarchical meaning and may be referred to as link source and link destination. Since those links are realized as physical links within the database, applications that follow the links show good performance.

- Relational DBMS: The relational model designs data structures as relations (tables) with attributes (columns) and the relationship between those relations. Definitions in this model are expressed in a purely declarative way, not predetermining any implementation issues like links from one relation to another or a certain sequence of rows in the database. Relationships are based purely upon content. At runtime, all linking and joining is done by evaluating the actual data values, e.g.:

... WHERE employee.department_id = department.id ...

The consequence is that, except for explicit foreign keys, the notions of parent/child or owner/member carry no meaning. Relationships in this model do not have any direction.

- The relational model and SQL are based on the mathematical theory of relational algebra.

- During the 1980s and 1990s, proprietary and open-source DBMSs based on the relational design paradigm established themselves as market leaders.

- Object-oriented DBMS: Nowadays, most applications are written in an object-oriented programming language (OOP). If, in such cases, the underlying DBMS belongs to the class of relational DBMS, the so-called object-relational impedance mismatch arises. That is to say, in contrast to the application language, pure relational DBMS (prDBMS) does not support central concepts of OOP:

- Type system: OOPs do not only know primitive data types. As a central concept of their language, they offer the facility to define classes with complex internal structures. The classes are built on primitive types, system classes, and references to other classes or to the same class. prDBMS knows only predefined types. Second, prDBMS insists on first normal form, which means that attributes must be scalar. In OOPs they may be sets, lists or arrays of the desired type.

- Inheritance: Classes of OOPs may inherit attributes and methods from their superclass. This concept is not known to prDBMS.

- Polymorphism: The runtime system can decide via late binding which one of a group of methods with the same name and parameter types will be called. This concept is not known by prDBMS.

- Encapsulation: Data and access methods to data are stored within the same class. It is not possible to access the data directly - the only way is using the access methods of the class. This concept is not known to prDBMS.

- Object-oriented DBMS are designed to overcome the gap between prDBMS and OOP. At their peak, they reached a weak market position in the mid and late 1990s. Afterward, some of their concepts were incorporated into the SQL standard as well as rDBMS implementations.

- NoSQL: The term NoSQL stands for the emerging group of DBMS which differs from others in central concepts:

- They do not necessarily support all aspects of the ACID paradigm.

- The data need not be structured according to any schema.

- Their goal is to support fault-tolerance, distributed data, and vast volume, see also: CAP theorem.

- Implementations differ widely in storing techniques: you can see key-value stores, document-oriented databases, graph-oriented databases, and more.

- They typically do not offer a standard SQL interface.

- Some products: MongoDB, Firebase Realtime Database (Google), Cloud Firestore (Google)

- NewSQL: This class of DBMS seeks to provide the same scalable performance as NoSQL systems while maintaining the ACID paradigm, the relational model, and the SQL interface. They try to reach scalability by eschewing heavyweight recovery or concurrency control.

Relational DBMS (rDBMS)

The Theory

A relational DBMS is an implementation of data stores according to the design rules of the relational model. This approach allows operations on the data according to the relational algebra like projections, selections, joins, set operations (union, difference, intersection, ...), and more. Together with Boolean algebra (and, or, not, exists, ...) and other mathematical concepts, relational algebra builds up a complete mathematical system with basic operations, complex operations, and transformation rules between the operations. Neither a DBA nor an application programmer needs to know the relational algebra. But it is helpful to know that your rDBMS is based on this mathematical foundation - and that it has the freedom to transform queries into several forms.

The Data Model

The relational model designs data structures as relations (tables) with attributes (columns) and the relationship between those relations. The information about one entity of the real world is stored within one row of a table. However, the term one entity of the real world must be used with care. It may be that our intellect identifies a machine like a single airplane in this vein. Depending on the information requirements, it may be sufficient to put all of the information into one row of a table airplane. But in many cases, it is necessary to break the entity into its pieces and model the pieces as discrete entities, including the relationship to the whole thing. If, for example, information about every single seat within the airplane is needed, a second table seat and some way of joining seats to airplanes will be required.

This way of breaking up information about real entities into a complex data model depends highly on the information requirements of the business concept. Additionally, there are some formal requirements independent of any application: the resulting data model should conform to a so-called normal form. Normally these data models consist of a great number of tables and relationships between them. Such models will not predetermine their use by applications; they are strictly descriptive and will not restrict access to the data in any way.

Some more Basics

Operations within databases must have the ability to act not only on single rows, but also on sets of rows. Relational algebra offers this possibility. Therefore languages based on relational algebra, e.g., SQL, offer a powerful syntax to manipulate lots of data within one single command.

As operations within relational algebra may be replaced by different but logically equivalent operations, a language based on relational algebra should not predetermine how its syntax is mapped to operations (the execution plan). The language should describe what should be done and not how to do it. Note: This choice of operations does not concern the use or neglect of indices.

As described before, the relational model tends to break up objects into sub-objects. In this and in other cases it is often necessary to collect associated information from a bunch of tables into one information unit. How is this possible without links between participating tables and rows? The answer is: All joining is done based on the values which are actually stored in the attributes. The rDBMS must make its own decisions about how to reach all concerned rows: whether to read all potentially affected rows and ignore those which are irrelevant (full table scan), or to use some kind of index and read only those which match the criteria. This value-based approach allows even the use of operators other than the equal-operator, e.g.:

SELECT * FROM gift JOIN box ON gift.extent < box.extent;

This command will join all "gift" records to all "box" records with a larger "extent" (whatever "extent" means).
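To see the value-based join at work, here is a minimal sketch that assumes Python's built-in sqlite3 module and an in-memory SQLite database. The column layout and the sample rows are invented; only the JOIN condition is taken from the text.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical sample data: 'extent' is just a number here.
conn.executescript("""
    CREATE TABLE gift (name VARCHAR(20), extent INTEGER);
    CREATE TABLE box  (name VARCHAR(20), extent INTEGER);
    INSERT INTO gift VALUES ('book', 3), ('globe', 7);
    INSERT INTO box  VALUES ('small', 5), ('large', 10);
""")

# No link between the tables is stored anywhere; rows are paired
# purely by comparing the stored values.
pairs = conn.execute("""
    SELECT gift.name, box.name
    FROM   gift JOIN box ON gift.extent < box.extent
    ORDER  BY gift.name, box.name
""").fetchall()
print(pairs)
```

The book fits into both boxes, the globe only into the large one, so three pairs are returned.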

SQL: A Language for Working with rDBMS

History

As outlined above, rDBMS acts on the data with operations of relational algebra like projections, selections, joins, set operations (union, except and intersect) and more. The operations of relational algebra are denoted in a mathematical language that is highly formal and hard to understand for end-users and - possibly also - for many software engineers. Therefore, rDBMS offers a layer above relational algebra that is easy to understand but can be mapped to the underlying relational operations. Since the 1970s, we have seen some languages doing this job; one of them was SQL - another example was QUEL. In the early 1980s (after a rename from its original name SEQUEL due to trademark problems), SQL achieved market dominance. And in 1986, SQL was standardized for the first time. The current version is SQL 2023.

Characteristics

The tokens and syntax of SQL are modeled on English common speech to keep the access barrier as small as possible. An SQL command like UPDATE employee SET salary = 2000 WHERE id = 511; is not far away from the sentence "Change employee's salary to 2000 for the employee with id 511."

The keywords of SQL can be expressed in any combination of upper and lower case characters, i.e. the keywords are case insensitive. It makes no difference whether UPDATE, update, Update, UpDate, or any other combination of upper and lower case characters is written in SQL code.

Next, SQL is a descriptive language, not a procedural one. It does not prescribe all aspects of the relational operations (which operation, their order, ...), which are generated from the given SQL statement. The rDBMS has the freedom to generate more than one execution plan from a statement. It may compare several generated execution plans with each other and run the one it thinks is best in a given situation. Additionally, the programmer is freed from considering all the details of data access, e.g.: Which one of a set of WHERE criteria should be evaluated first if they are combined with AND?
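Many systems let you inspect the plan they have chosen. The following sketch assumes Python's built-in sqlite3 module and SQLite's own EXPLAIN QUERY PLAN command, which is implementation-specific and not part of the standard; the table and index names are hypothetical. The SELECT statement stays exactly the same, yet the chosen plan changes once an index exists.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employee (id INTEGER PRIMARY KEY, "
    "name VARCHAR(50), salary INTEGER)"
)

query = "SELECT salary FROM employee WHERE name = 'Smith'"

def plan(sql):
    """Return the human-readable steps of SQLite's plan for a statement."""
    # The description is the last column of every EXPLAIN QUERY PLAN row.
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

plan_before = plan(query)  # without an index: a scan of the whole table

# CREATE INDEX is offered by most systems but is not part of the standard.
conn.execute("CREATE INDEX employee_name_idx ON employee (name)")

plan_after = plan(query)   # same statement, now answered via the index

print(plan_before)
print(plan_after)
```

The exact wording of the plan steps differs between SQLite versions, so no output is shown here. The point is that the statement describes what is wanted, and the system decides how to get it.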

Despite the above simplifications, SQL is very powerful. It allows the manipulation of a set of data records with a single statement. UPDATE employee SET salary = salary * 1.1 WHERE salary < 2000; will affect all employee records with a current salary smaller than 2000. Potentially, there may be thousands of those records, only a few, or even zero. The operation may also depend on data already present in the database; the clause SET salary = salary * 1.1 leads to an increase of the salaries by 10%, which may be 120 for one employee and 150 for another one.

The designers of SQL tried to define the language elements orthogonally to each other. Among other things, this refers to the fact that any language element may be used in all positions of a statement where the result of that element may be used directly. E.g.: If you have a function power(), which takes two numbers and returns another number, you can use this function in all positions where numbers are allowed. The following statements are syntactically correct (if you have defined the function power() ) - and lead to the same resulting rows.
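The effect of such a set-based UPDATE can be checked with a few rows. This is a minimal sketch assuming Python's built-in sqlite3 module and an in-memory SQLite database; the three salaries are invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employee (id INTEGER PRIMARY KEY, salary REAL)")
conn.executemany(
    "INSERT INTO employee VALUES (?, ?)",
    [(1, 1200.0), (2, 1500.0), (3, 2500.0)],  # invented sample salaries
)

# One statement changes every row that matches the WHERE clause.
cursor = conn.execute(
    "UPDATE employee SET salary = salary * 1.1 WHERE salary < 2000"
)
affected = cursor.rowcount  # number of rows changed by the statement

# Round to two decimals to hide floating-point noise from the multiplication.
salaries = [
    round(s, 2)
    for (s,) in conn.execute("SELECT salary FROM employee ORDER BY id")
]
print(affected, salaries)
```

Two rows are changed, by 120 and by 150 respectively, while the third row does not match the criterion and is left alone.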

SELECT salary FROM employee WHERE salary < 2048;

SELECT salary FROM employee WHERE salary < power(2, 11);

SELECT power(salary, 1) FROM employee WHERE salary < 2048;
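The three statements can be compared in a small experiment. The sketch assumes Python's built-in sqlite3 module; because a function power() is not guaranteed to exist in every SQLite build, it is registered here as a user-defined function. The employee rows are invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Register power() ourselves so the example does not depend on the
# optional math functions of the SQLite build in use.
conn.create_function("power", 2, lambda base, exponent: base ** exponent)

conn.execute("CREATE TABLE employee (id INTEGER PRIMARY KEY, salary INTEGER)")
conn.executemany(
    "INSERT INTO employee VALUES (?, ?)",
    [(1, 1500), (2, 2047), (3, 2048), (4, 3000)],  # invented sample rows
)

queries = [
    "SELECT salary FROM employee WHERE salary < 2048",
    "SELECT salary FROM employee WHERE salary < power(2, 11)",
    "SELECT power(salary, 1) FROM employee WHERE salary < 2048",
]
# Sort the values so the comparison does not depend on row order.
results = [sorted(value for (value,) in conn.execute(q)) for q in queries]
print(results)
```

Each of the three result lists contains the salaries 1500 and 2047.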

Another example of orthogonality is the use of subqueries within UPDATE, INSERT, DELETE, or inside another SELECT statement.

However, SQL is not free of redundancy. Often there are several possible formulations to express the same situation.

SELECT salary FROM employee WHERE salary < 2048;

SELECT salary FROM employee WHERE NOT salary >= 2048;

SELECT salary FROM employee WHERE salary BETWEEN 0 AND 2047; -- 'BETWEEN' includes edges; assumes non-negative integer salaries

This is a very simple example. In complex statements, there may be the choice between joins, subqueries, and the EXISTS predicate.
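The choice between a join, a subquery, and EXISTS can be illustrated as follows. This is a sketch under assumptions: it uses Python's built-in sqlite3 module, and the tables department and employee with their rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE department (id INTEGER PRIMARY KEY, name VARCHAR(30));
    CREATE TABLE employee   (id INTEGER PRIMARY KEY, name VARCHAR(30),
                             department_id INTEGER);
    INSERT INTO department VALUES (1, 'Sales'), (2, 'Research');
    INSERT INTO employee   VALUES (10, 'Ann', 1), (11, 'Bob', 2), (12, 'Cy', 1);
""")

# Three formulations of "names of all employees in the Sales department".
formulations = {
    "join": """
        SELECT e.name
        FROM   employee e JOIN department d ON e.department_id = d.id
        WHERE  d.name = 'Sales'
        ORDER  BY e.name""",
    "subquery": """
        SELECT name
        FROM   employee
        WHERE  department_id IN (SELECT id FROM department WHERE name = 'Sales')
        ORDER  BY name""",
    "exists": """
        SELECT e.name
        FROM   employee e
        WHERE  EXISTS (SELECT 1 FROM department d
                       WHERE  d.id = e.department_id AND d.name = 'Sales')
        ORDER  BY e.name""",
}
answers = {label: conn.execute(sql).fetchall() for label, sql in formulations.items()}
print(answers)
```

All three formulations return Ann and Cy. Which one reads best, or runs fastest on a given system, is a separate question.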

Fundamentals

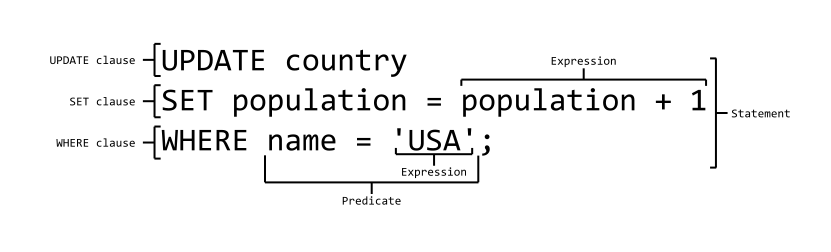

Core SQL consists of statements. Statements consist of keywords, operators, values, names of system- and user-objects or functions. Statements are concluded by a semicolon. In the statement SELECT salary FROM employee WHERE id < 100; the tokens SELECT, FROM and WHERE are keywords. salary, employee, and id are object names, the "<" sign is an operator, and "100" is a value.

The SQL standard arranges statements into nine groups:

- "The main classes of SQL-statements are:

- SQL-schema statements; these may have a persistent effect on the set of schemas.

- SQL-data statements; some of these, the SQL-data change statements, may have a persistent effect on SQL data.

- SQL-transaction statements; except for the <commit statement>, these, and the following classes, have no effects that persist when an SQL-session is terminated.

- SQL-control statements.

- SQL-connection statements.

- SQL-session statements.

- SQL-diagnostics statements.

- SQL-dynamic statements.

- SQL embedded exception declaration."

This detailed grouping is unusual in everyday speech. A typical alternative is to organize SQL statements into the following groups:

- Data Definition Language (DDL): Managing the structure of database objects (CREATE/ALTER/DROP tables, views, columns, ...).

- Data Query Language (DQL): Retrieval of data with the statement SELECT. This group has only one statement.

- Data Manipulation Language (DML): Changing of data with the statements INSERT, UPDATE, MERGE, DELETE, COMMIT, ROLLBACK, and SAVEPOINT.

- Data Control Language (DCL): Managing access rights (GRANT, REVOKE).

Turing completeness

Core SQL, as described above, is not Turing complete. It lacks conditional branches, variables, and subroutines. But the standard, as well as most implementations, offers an extension to fulfill the demand for Turing completeness. In 'Part 4: Persistent Stored Modules (SQL/PSM)' of the standard, there are definitions for IF-, CASE-, LOOP-, assignment- and other statements. The existing implementations of this part have different names, different syntax, and also a different scope of operation: PL/SQL in Oracle, SQL/PL in DB2, Transact-SQL (T-SQL) in SQL Server and Sybase, PL/pgSQL in Postgres, and simply 'stored procedures' in MySQL.

SQL: The Standard ISO IEC 9075 and various Implementations

Benefit of Standardization

Like most other standards, the primary purpose of SQL is portability. Usually, software designers and application developers structure and solve problems in layers. Every abstraction level is realized in its own component or sub-component: presentation to end-user, business logic, data access, data storage, network, and operating system demands are typical representatives of such components. They are organized as a stack and every layer offers an interface to the upper layers to use its functionality. If one of those components is realized by two different providers and both offer the same interface (as an API, Web-Service, language specification, ...), it is possible to exchange them without changing the layers which are based on them. In essence, the software industry needs stable interfaces at the top of essential layers to avoid dependence on a single provider. SQL acts as such an interface to relational database systems.

If an application uses only those SQL commands which are defined within standard SQL, it should be possible to exchange the underlying rDBMS with a different one without changing the source code of the application. In practice, this is a hard job, because concrete implementations offer numerous additional features and software engineers love to use them.

A second aspect is the conservation of know-how. A student who learns SQL is in a position to develop applications that are based on an arbitrary database system. The situation is comparable with any other popular programming language. Someone who learns Java or C# can develop applications of any kind running on a lot of different hardware systems and even different hardware architectures.

Limits

Database systems consist of many components. Access to the data is an essential element but not the only component. Other components include throughput optimization, physical design, backup, distributed databases, replication, and 24x7 availability. Standard SQL is focused mainly on data access and ignores typical DBA tasks. Even the CREATE INDEX statement, a widely used optimization strategy, is not part of the standard. Nevertheless, the standard fills thousands of pages. But most of the DBA's daily work is highly specific to each concrete implementation and must be done differently when switching to a different rDBMS. Mainly application developers benefit from SQL.

The Standardization Process

The standardization process is organized in two levels. The first level acts in a national context. Interested companies, universities and persons of one country work within their national standardization organization like ANSI, Deutsches Institut für Normung (DIN) or British Standards Institution (BSI), where every member has one vote. The second level is the international stage. The national organizations are members of ISO, respectively IEC. In case of SQL there is a common committee of ISO and IEC named Joint Technical Committee ISO/IEC JTC 1, Information technology, Subcommittee SC 32, Data management and interchange, where every national body has one vote. This committee approves the standard under the name ISO/IEC 9075-n:yyyy, where n is the part number and yyyy is the year of publication. The ten parts of the standard are described in short here.

If the committee releases a new version, this may concern only some of the ten parts. So it is possible that the yyyy denomination differs from part to part. Core SQL is defined mainly by the second part: ISO/IEC 9075-2:yyyy Part 2: Foundation (SQL/Foundation) - but it also contains some features of other parts.

Note: The API JDBC is part of Java SE and Java EE but not part of the SQL standard.

A second, closely related standard complements the standard: ISO/IEC 13249-n:yyyy SQL Multimedia and Application Packages, which is developed by the same organizations and committee. This publication defines interfaces and packages based on SQL. They focus on particular kinds of applications: text, pictures, data mining, and spatial data applications.

Verification of Conformance to the Standard

Until 1996 the National Institute of Standards and Technology (NIST) certified the compliance of the SQL implementation of rDBMS with the SQL standard. Since NIST abandoned this work, vendors nowadays self-certify the compliance of their products. They must declare the degree of conformance in a special appendix of their documentation. This documentation may be voluminous as the standard defines not only a set of base features - called Core SQL:yyyy - but also a lot of additional features an implementation may conform to or not.

Implementations

To fulfill their clients' demands, all major vendors of rDBMS offer - among other data access ways - the language SQL within their product. The implementations cover Core SQL, a bunch of additional standardized features, and a huge number of additional, not standardized features. The access to standardized features may use the regular syntax or an implementation-specific syntax. In essence, SQL is the clamp holding everything together, but typically there are a lot of detours around the official language.

Language Elements

SQL consists of statements that start with a keyword like SELECT, DELETE or CREATE and terminate with a semicolon. Their elements are case-insensitive except for fixed character string values like 'Mr. Brown'.

- Clauses: Statements are subdivided into clauses. The most popular one is the WHERE clause.

- Predicates: Predicates specify conditions that can be evaluated to a boolean value. E.g.: a boolean comparison, BETWEEN, LIKE, IS NULL, IN, SOME/ANY, ALL, EXISTS.

- Expressions: Expressions are numeric or string values by themselves, or the result of arithmetic or concatenation operators, or the result of functions.

- Object names: Names of database objects like tables, views, columns, functions.

- Values: Numeric or string values.

- Arithmetic operators: The plus sign, minus sign, asterisk and solidus (+, -, * and /) specify addition, subtraction, multiplication, and division.

- Concatenation operator: The '||' sign specifies the concatenation of character strings.

- Comparison operators: The equals operator, not equals operator, less than operator, greater than operator, less than or equals operator, greater than or equals operator ( =, <>, <, >, <=, >= ) compare values and expressions.

- Boolean operators: AND, OR, NOT combine boolean values.

Learning by Doing

When learning SQL (or any other programming language), it is not sufficient to read books or listen to lectures. It is absolutely necessary to do exercises, both prescribed exercises and self-devised tests. In the case of SQL, one needs access to a DBMS installation where one can create tables, store, retrieve, and delete data, and so on.

This page offers hints and links to some popular DBMS. In most cases, one can download the system for test purposes or use a free community edition. Some of them offer an online version so that there is no need for any local installation. Instead, such systems can be used in the cloud.

Often, but not always, a DBMS consists of more than the pure database engine. To be able to formulate SQL commands easily, we additionally need interactive access to the database engine. Different client programs and IDEs provide this. They offer interactive access, and in many cases, they are part of the downloads. (In some cases, there are several different clients from the same producer.) At the same time, there are client programs and IDEs from other companies or organizations which offer only interactive access but no DBMS. Such clients often support a lot of different DBMS.

Derby

Firebird

IBM DB2

http://www-01.ibm.com/software/data/db2/linux-unix-windows/

IBM Informix

http://www-01.ibm.com/software/data/informix/

MariaDB

MS SQL Server

http://www.microsoft.com/en/server-cloud/products/sql-server/default.aspx

MySQL

DBMS: http://dev.mysql.com/downloads/

IDE for administration and SQL-tests: http://dev.mysql.com/downloads/workbench/

Oracle

The Oracle database engine is available in 4 editions: Enterprise Edition (EE), Standard Edition (SE), Standard Edition One (SE One), and Express Edition (XE). The last-mentioned is the community edition and is sufficient for this course. http://www.oracle.com/technetwork/database/enterprise-edition/downloads/index.html.

SQL-Developer is an IDE with an Eclipse-like look-and-feel and offers access to the database engine. http://www.oracle.com/technetwork/developer-tools/sql-developer/overview/

In the context of Oracle's application builder APEX (APplication EXpress), there is a cloud solution consisting of a database engine plus APEX. https://apex.oracle.com/. Among a lot of other things, it offers an SQL workshop where everybody can execute their own SQL commands for testing purposes. On the other hand, APEX can be downloaded separately and installed into any of the above editions except for the Express Edition.

PostgreSQL

SQLite

Online Access

SQL Fiddle offers online access to the following implementations:

MySQL, PostgreSQL, MS SQL Server, Oracle, and SQLite.

Snippets

This page offers SQL examples concerning different topics. You can copy/paste the examples according to your needs.

Create Table

Data Types

--

-- Frequently used data types and simple constraints

CREATE TABLE t_standard (

-- column name data type default nullable/constraint

id DECIMAL PRIMARY KEY, -- some prefer the name: 'sid'

col_1 VARCHAR(50) DEFAULT 'n/a' NOT NULL, -- string with variable length. Oracle: 'VARCHAR2'

col_2 CHAR(10), -- string with fixed length

col_3 DECIMAL(10,2) DEFAULT 0.0, -- 8 digits before and 2 after the decimal. Signed.

col_4 NUMERIC(10,2) DEFAULT 0.0, -- same as col_3

col_5 INTEGER,

col_6 BIGINT -- Oracle: use 'NUMBER(n)', n up to 38

);

-- Data types with temporal aspects

CREATE TABLE t_temporal (

-- column name data type default nullable/constraint

id DECIMAL PRIMARY KEY,

col_1 DATE, -- Oracle: contains day and time, seconds without decimal

col_2 TIME, -- Oracle: use 'DATE' and pick time-part

col_3 TIMESTAMP, -- Including decimal for seconds

col_4 TIMESTAMP WITH TIME ZONE, -- MySQL: no time zone

col_5 INTERVAL YEAR TO MONTH,

col_6 INTERVAL DAY TO SECOND

);

CREATE TABLE t_misc (

-- column name data type default nullable/constraint

id DECIMAL PRIMARY KEY,

col_1 CLOB, -- very long string (MySQL: LONGTEXT)

col_2 BLOB, -- binary, e.g.: Word document or mp3-stream

col_3 FLOAT(6), -- example: two-thirds (2/3).

col_4 REAL,

col_5 DOUBLE PRECISION,

col_6 BOOLEAN, -- Oracle: not supported in releases before 23

col_7 XML -- Oracle: 'XMLType'

);

Constraints

--

-- Denominate all constraints with an expressive name, eg.: abbreviations for

-- table name (unique across all tables in your schema), column name, constraint type, running number.

--

CREATE TABLE myExampleTable (

id DECIMAL,

col_1 DECIMAL(1), -- only 1 (signed) digit

col_2 VARCHAR(50),

col_3 VARCHAR(90),

CONSTRAINT example_pk PRIMARY KEY (id),

CONSTRAINT example_uniq UNIQUE (col_2),

CONSTRAINT example_fk FOREIGN KEY (col_1) REFERENCES person(id),

CONSTRAINT example_col_1_nn CHECK (col_1 IS NOT NULL),

CONSTRAINT example_col_1_check CHECK (col_1 >=0 AND col_1 < 6),

CONSTRAINT example_col_2_nn CHECK (col_2 IS NOT NULL),

CONSTRAINT example_check_1 CHECK (LENGTH(col_2) > 3),

CONSTRAINT example_check_2 CHECK (LENGTH(col_2) < LENGTH(col_3))

);

Foreign Key

--

-- Reference to a different (or the same) table. This creates 1:m or n:m relationships.

CREATE TABLE t_hierarchie (

id DECIMAL,

part_name VARCHAR(50),

super_part_id DECIMAL, -- ID of the part which contains this part

CONSTRAINT hier_pk PRIMARY KEY (id),

-- In this special case the foreign key refers to the same table

CONSTRAINT hier_fk FOREIGN KEY (super_part_id) REFERENCES t_hierarchie(id)

);

-- -----------------------------------------------

-- n:m relationships

-- -----------------------------------------------

CREATE TABLE t1 (

id DECIMAL,

name VARCHAR(50),

-- ...

CONSTRAINT t1_pk PRIMARY KEY (id)

);

CREATE TABLE t2 (

id DECIMAL,

name VARCHAR(50),

-- ...

CONSTRAINT t2_pk PRIMARY KEY (id)

);

CREATE TABLE t1_t2 (

id DECIMAL,

t1_id DECIMAL,

t2_id DECIMAL,

CONSTRAINT t1_t2_pk PRIMARY KEY (id), -- also this table should have its own Primary Key

CONSTRAINT t1_t2_unique UNIQUE (t1_id, t2_id), -- every link should occur only once

CONSTRAINT t1_t2_fk_1 FOREIGN KEY (t1_id) REFERENCES t1(id),

CONSTRAINT t1_t2_fk_2 FOREIGN KEY (t2_id) REFERENCES t2(id)

);

-- -----------------------------------------------------------------------------------

-- ON DELETE / ON UPDATE / DEFERRABLE

-- -----------------------------------------------------------------------------------

-- DELETE and UPDATE behaviour for child tables (see first example)

-- Oracle: Only DELETE [CASCADE | SET NULL] is possible. Default is NO ACTION, but this cannot be

-- specified explicitly - just omit the phrase.

CONSTRAINT hier_fk FOREIGN KEY (super_part_id) REFERENCES t_hierarchie(id)

ON DELETE CASCADE -- or: NO ACTION (the default), RESTRICT, SET NULL, SET DEFAULT

ON UPDATE CASCADE -- or: NO ACTION (the default), RESTRICT, SET NULL, SET DEFAULT

-- Initial stage: immediate vs. deferred, [not] deferrable

-- MySQL: DEFERRABLE is not supported

CONSTRAINT t1_t2_fk_1 FOREIGN KEY (t1_id) REFERENCES t1(id)

INITIALLY IMMEDIATE DEFERRABLE

-- Change constraint characteristics at a later stage

SET CONSTRAINTS hier_fk DEFERRED; -- or: IMMEDIATE
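The effect of ON DELETE CASCADE can be observed in a small experiment. The following is only a sketch: it assumes Python's built-in sqlite3 module with an in-memory SQLite database as the concrete implementation, and the part names are invented sample data. SQLite checks Foreign Keys only after PRAGMA foreign_keys = ON; other systems differ in such details.

```python
import sqlite3

# Assumption: SQLite (in-memory) serves as the concrete implementation.
con = sqlite3.connect(":memory:")
con.execute("PRAGMA foreign_keys = ON")  # SQLite enforces Foreign Keys only on request

con.execute("""
    CREATE TABLE t_hierarchie (
        id            DECIMAL,
        part_name     VARCHAR(50),
        super_part_id DECIMAL,
        CONSTRAINT hier_pk PRIMARY KEY (id),
        CONSTRAINT hier_fk FOREIGN KEY (super_part_id) REFERENCES t_hierarchie(id)
            ON DELETE CASCADE
    )
""")

# Invented sample data: a car contains an engine, the engine contains a piston.
con.executemany(
    "INSERT INTO t_hierarchie (id, part_name, super_part_id) VALUES (?, ?, ?)",
    [(1, "car", None), (2, "engine", 1), (3, "piston", 2), (4, "bicycle", None)],
)

# Deleting the car also removes every part which directly or indirectly belongs to it.
con.execute("DELETE FROM t_hierarchie WHERE id = 1")
remaining = [row[0] for row in con.execute("SELECT part_name FROM t_hierarchie ORDER BY id")]
print(remaining)
```

Without the ON DELETE CASCADE phrase, the same DELETE would be rejected because the engine still refers to the car.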

Alter Table

Concerning columns.

-- Add a column (plus some column constraints). Oracle: The key word 'COLUMN' is not allowed.

ALTER TABLE t1 ADD COLUMN col_1 VARCHAR(100) CHECK (LENGTH(col_1) > 5);

-- Change a column's characteristics. (Some implementations use different key words like 'MODIFY'.)

ALTER TABLE t1 ALTER COLUMN col_1 SET DATA TYPE NUMERIC;

ALTER TABLE t1 ALTER COLUMN col_1 SET DEFAULT -1;

ALTER TABLE t1 ALTER COLUMN col_1 SET NOT NULL;

ALTER TABLE t1 ALTER COLUMN col_1 DROP NOT NULL;

-- Drop a column. Oracle: The key word 'COLUMN' is mandatory.

ALTER TABLE t1 DROP COLUMN col_2;

Concerning complete table.

--

ALTER TABLE t1 ADD CONSTRAINT t1_col_1_uniq UNIQUE (col_1);

ALTER TABLE t1 ADD CONSTRAINT t1_col_2_fk FOREIGN KEY (col_2) REFERENCES person (id);

-- Change definitions. Some implementations use different key words like 'MODIFY'.

ALTER TABLE t1 ALTER CONSTRAINT t1_col_1_uniq UNIQUE (col_1);

-- Drop a constraint. You need to know its name. Not supported by MySQL, there is only a 'DROP FOREIGN KEY'.

ALTER TABLE t1 DROP CONSTRAINT t1_col_1_uniq;

-- As an extension to the SQL standard, some implementations offer an ENABLE / DISABLE command for constraints.

Drop Table

--

-- All data and the complete table structure including indices are thrown away.

-- No column name. No WHERE clause. No trigger is fired. Considers Foreign Keys. Very fast.

DROP TABLE t1;

Select

Basic Syntax

--

-- Overall structure: SELECT / FROM / WHERE / GROUP BY / HAVING / ORDER BY

-- constants, column values, operators, functions

SELECT 'ID: ', id, col_1 + col_2, sqrt(col_2)

FROM t1

-- precedence within WHERE: functions, comparisons, NOT, AND, OR

WHERE col_1 > 100

AND NOT MOD(col_2, 10) = 0

OR col_3 < col_1

ORDER BY col_4 DESC, col_5; -- sort ascending (the default) or descending

-- number of rows, number of not-null-values

SELECT COUNT(*), COUNT(col_1) FROM t1;

-- predefined functions

SELECT COUNT(col_1), MAX(col_1), MIN(col_1), AVG(col_1), SUM(col_1) FROM t1;

-- UNIQUE values only

SELECT DISTINCT col_1 FROM t1;

-- In the next example col_1 may have duplicates. Only the combination of col_1 plus col_2 is unique.

SELECT DISTINCT col_1, col_2 FROM t1;
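The precedence rule for AND and OR mentioned in the comment above is easy to get wrong, so here is a small experiment. It is a sketch under assumptions: Python's built-in sqlite3 module with an in-memory SQLite database, four invented rows, and the % operator in place of MOD() because not every SQLite build ships the MOD function.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE t1 (id INTEGER PRIMARY KEY, col_1 INTEGER, col_2 INTEGER, col_3 INTEGER)")
con.executemany(
    "INSERT INTO t1 (id, col_1, col_2, col_3) VALUES (?, ?, ?, ?)",
    [(1, 200, 15, 500), (2, 200, 20, 500), (3, 50, 20, 10), (4, 50, 20, 500)],
)

def ids(where_clause):
    """Return the ids of all rows which satisfy the given WHERE clause."""
    query = "SELECT id FROM t1 WHERE " + where_clause + " ORDER BY id"
    return [row[0] for row in con.execute(query)]

# Without parentheses AND is evaluated before OR ...
implicit = ids("col_1 > 100 AND NOT col_2 % 10 = 0 OR col_3 < col_1")
# ... which is the same as grouping the AND part explicitly.
and_first = ids("(col_1 > 100 AND NOT col_2 % 10 = 0) OR col_3 < col_1")
# Parentheses around the OR part lead to a different result.
or_first = ids("col_1 > 100 AND (NOT col_2 % 10 = 0 OR col_3 < col_1)")

print(implicit, and_first, or_first)
```

When in doubt, explicit parentheses make the intention visible to the reader as well as to the DBMS.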

Case

--

-- CASE expression with conditions on exactly ONE column

SELECT id,

CASE contact_type -- ONE column name

WHEN 'fixed line' THEN 'Phone'

WHEN 'mobile' THEN 'Phone'

ELSE 'Not a telephone number'

END,

contact_value

FROM contact;

-- CASE expression with conditions on ANY column

SELECT id,

CASE -- NO column name

WHEN contact_type IN ('fixed line', 'mobile') THEN 'Phone'

WHEN id = 4 THEN 'ICQ'

ELSE 'Something else'

END,

contact_value

FROM contact;

Grouping

--

SELECT product_group, count(*) AS cnt

FROM sales

WHERE region = 'west' -- additional restrictions are possible but not necessary

GROUP BY product_group -- 'product_group' is the criterion which creates groups

HAVING COUNT(*) > 1000 -- restriction to groups with more than 1000 sales per group

ORDER BY cnt;

-- Attention: in the next example, col_2 is not part of the GROUP BY criterion. Therefore it cannot be displayed.

SELECT col_1, col_2

FROM t1

GROUP BY col_1;

-- We must accumulate all col_2-values of each group to ONE value, eg:

SELECT col_1, sum(col_2), min(col_2)

FROM t1

GROUP BY col_1;
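The interplay of WHERE, GROUP BY, HAVING and ORDER BY can be tried out with a handful of rows. The sketch below assumes Python's built-in sqlite3 module and an in-memory SQLite database; the sales rows are invented, and the HAVING threshold is lowered from 1000 to 1 to fit the tiny data set.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, product_group VARCHAR(50), region VARCHAR(50))")
con.executemany(
    "INSERT INTO sales (product_group, region) VALUES (?, ?)",
    [("A", "west"), ("A", "west"), ("A", "east"),
     ("B", "west"),
     ("C", "west"), ("C", "west"), ("C", "west")],
)

groups = con.execute("""
    SELECT product_group, COUNT(*) AS cnt
    FROM   sales
    WHERE  region = 'west'      -- 1. restrict the rows
    GROUP BY product_group      -- 2. build the groups
    HAVING COUNT(*) > 1         -- 3. restrict the groups
    ORDER BY cnt                -- 4. sort the remaining groups
""").fetchall()

print(groups)
```

Group B disappears because only one of its rows survives the WHERE clause, and the 'east' row of group A is not counted.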

Join

--

-- Inner join: Only persons together with their contacts.

-- Ignores all persons without contacts and all contacts without persons

SELECT *

FROM person p

JOIN contact c ON p.id = c.person_id;

-- Left outer join: ALL persons. Ignores contacts without persons

SELECT *

FROM person p

LEFT JOIN contact c ON p.id = c.person_id;

-- Right outer join: ALL contacts. Ignores persons without contacts

SELECT *

FROM person p

RIGHT JOIN contact c ON p.id = c.person_id;

-- Full outer join: ALL persons. ALL contacts.

SELECT *

FROM person p

FULL JOIN contact c ON p.id = c.person_id;

-- Cartesian product (missing ON keyword): be careful! Standard SQL spells this CROSS JOIN.

SELECT COUNT(*)

FROM person p

JOIN contact c;
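How the join types differ becomes obvious when we count the resulting rows. This is a sketch with assumptions: Python's built-in sqlite3 module, an in-memory SQLite database and invented rows. RIGHT and FULL joins are left out because older SQLite versions do not support them.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE person (id INTEGER PRIMARY KEY, lastname VARCHAR(50))")
con.execute("CREATE TABLE contact (id INTEGER PRIMARY KEY, person_id INTEGER, contact_value VARCHAR(50))")
con.executemany("INSERT INTO person (id, lastname) VALUES (?, ?)",
                [(1, "Goldstein"), (2, "Burton"), (3, "Hamilton")])
# Goldstein has two contacts, Burton one, Hamilton none.
con.executemany("INSERT INTO contact (id, person_id, contact_value) VALUES (?, ?, ?)",
                [(1, 1, "555-0100"), (2, 1, "larry@example.org"), (3, 2, "555-0101")])

def count(from_clause):
    """Count the rows which the given FROM clause produces."""
    return con.execute("SELECT COUNT(*) FROM " + from_clause).fetchone()[0]

inner_rows = count("person p JOIN contact c ON p.id = c.person_id")       # persons with contacts
left_rows = count("person p LEFT JOIN contact c ON p.id = c.person_id")   # plus Hamilton
cross_rows = count("person p CROSS JOIN contact c")                       # every combination

print(inner_rows, left_rows, cross_rows)
```

The Cartesian product grows with the product of both row counts, which is why it is rarely what we want on large tables.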

Subquery

--

-- Subquery within SELECT clause

SELECT id,

lastname,

weight,

(SELECT avg(weight) FROM person) -- the subquery

FROM person;

-- Subquery within WHERE clause

SELECT id,

lastname,

weight

FROM person

WHERE weight < (SELECT avg(weight) FROM person) -- the subquery

;

-- CORRELATED subquery within SELECT clause

SELECT id,

(SELECT status_name FROM status st WHERE st.id = sa.state)

FROM sales sa;

-- CORRELATED subquery retrieving the highest version within each booking_number

SELECT *

FROM booking b

WHERE version =

(SELECT MAX(version) FROM booking sq WHERE sq.booking_number = b.booking_number)

;

Set operations

--

-- UNION

SELECT firstname -- first SELECT command

FROM person

UNION -- push both intermediate results together to one result

SELECT lastname -- second SELECT command

FROM person;

-- Default behaviour is: 'UNION DISTINCT'. 'UNION ALL' must be explicitly specified, if duplicate values shall be retained.

-- INTERSECT: resulting values must be in BOTH intermediate results

SELECT firstname FROM person

INTERSECT

SELECT lastname FROM person;

-- EXCEPT: resulting values must be in the first but not in the second intermediate result

SELECT firstname FROM person

EXCEPT -- Oracle uses 'MINUS'. MySQL does not support EXCEPT.

SELECT lastname FROM person;
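The four set operations can be compared side by side. The sketch assumes Python's built-in sqlite3 module with an in-memory SQLite database and three invented persons whose first and last names partly coincide.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE person (id INTEGER PRIMARY KEY, firstname VARCHAR(50), lastname VARCHAR(50))")
con.executemany("INSERT INTO person (id, firstname, lastname) VALUES (?, ?, ?)",
                [(1, "James", "Walker"), (2, "Lisa", "James"), (3, "Walker", "Hamilton")])

def names(set_operator):
    """Combine firstnames and lastnames with the given set operator."""
    query = ("SELECT firstname FROM person " + set_operator +
             " SELECT lastname FROM person ORDER BY 1")
    return [row[0] for row in con.execute(query)]

union = names("UNION")          # duplicates are removed
union_all = names("UNION ALL")  # duplicates are retained
intersect = names("INTERSECT")  # values occurring in both results
except_ = names("EXCEPT")       # firstnames which are nobody's lastname

print(union, union_all, intersect, except_)
```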

Rollup/Cube

-- Additional sum per group and sub-group

SELECT SUM(col_x), ...

FROM ...

GROUP BY ROLLUP (producer, model); -- the MySQL syntax is: GROUP BY producer, model WITH ROLLUP

-- Additional sum per EVERY combination of the grouping columns

SELECT SUM(col_x), ...

FROM ...

GROUP BY CUBE (producer, model); -- not supported by MySQL

Window functions

-- The frame's boundaries

SELECT id,

emp_name,

dep_name,

FIRST_VALUE(id) OVER (PARTITION BY dep_name ORDER BY id) AS frame_first_row,

LAST_VALUE(id) OVER (PARTITION BY dep_name ORDER BY id) AS frame_last_row,

COUNT(*) OVER (PARTITION BY dep_name ORDER BY id) AS frame_count,

LAG(id) OVER (PARTITION BY dep_name ORDER BY id) AS prev_row,

LEAD(id) OVER (PARTITION BY dep_name ORDER BY id) AS next_row

FROM employee;

-- The moving average

SELECT id, dep_name, salary,

AVG(salary) OVER (PARTITION BY dep_name ORDER BY salary

ROWS BETWEEN 2 PRECEDING AND CURRENT ROW) AS avg_over_1or2or3_rows

FROM employee;
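The frame of a window function is easier to understand with concrete numbers. The sketch below assumes Python's built-in sqlite3 module, an in-memory SQLite database of version 3.25 or newer (older versions lack window functions) and four invented employees.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE employee (id INTEGER PRIMARY KEY, dep_name VARCHAR(50), salary INTEGER)")
con.executemany("INSERT INTO employee (id, dep_name, salary) VALUES (?, ?, ?)",
                [(1, "A", 100), (2, "A", 200), (3, "A", 300), (4, "B", 400)])

rows = con.execute("""
    SELECT id,
           LAST_VALUE(id) OVER (PARTITION BY dep_name ORDER BY id) AS frame_last_row,
           COUNT(*)       OVER (PARTITION BY dep_name ORDER BY id) AS frame_count,
           AVG(salary)    OVER (PARTITION BY dep_name ORDER BY salary
                                ROWS BETWEEN 2 PRECEDING AND CURRENT ROW) AS moving_avg
    FROM   employee
    ORDER BY id
""").fetchall()

for row in rows:
    print(row)
```

With an ORDER BY inside OVER and no explicit frame, the frame ends at the current row. That is why frame_last_row equals the row's own id here and frame_count grows row by row within each department.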

Recursions

-- The 'with clause' consists of three parts:

-- First: arbitrary name of an intermediate table and its columns

WITH intermediate_table (id, firstname, lastname) AS

(

-- Second: starting row (or rows)

SELECT id, firstname, lastname

FROM family_tree

WHERE firstname = 'Karl'

AND lastname = 'Miller'

UNION ALL

-- Third: Definition of the rule for querying the next level. In most cases this is done with a join operation.

SELECT f.id, f.firstname, f.lastname

FROM intermediate_table i

JOIN family_tree f ON f.father_id = i.id

)

-- After the 'with clause': depth first / breadth first

-- SEARCH BREADTH FIRST BY firstname SET sequence_number (default behaviour)

-- SEARCH DEPTH FIRST BY firstname SET sequence_number

-- The final SELECT

SELECT * FROM intermediate_table;

-- Hints: Oracle supports the syntax of the SQL standard since version 11.2.

-- MySQL supports recursive queries since version 8.0; older versions need procedural workarounds.
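The recursive 'with clause' can be traced on a tiny family tree. This is a sketch with assumptions: Python's built-in sqlite3 module, an in-memory SQLite database and five invented persons. The keyword RECURSIVE is written out, and the SEARCH clause is omitted because SQLite does not offer it.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("""
    CREATE TABLE family_tree (
        id        INTEGER PRIMARY KEY,
        firstname VARCHAR(50),
        lastname  VARCHAR(50),
        father_id INTEGER
    )
""")
con.executemany(
    "INSERT INTO family_tree (id, firstname, lastname, father_id) VALUES (?, ?, ?, ?)",
    [(1, "Karl", "Miller", None), (2, "Liesel", "Miller", 1), (3, "Hans", "Miller", 1),
     (4, "Emil", "Miller", 3), (5, "Otto", "Schmidt", None)],
)

descendants = con.execute("""
    WITH RECURSIVE intermediate_table (id, firstname, lastname) AS
    (
        -- starting row
        SELECT id, firstname, lastname
        FROM   family_tree
        WHERE  firstname = 'Karl' AND lastname = 'Miller'
      UNION ALL
        -- rule for the next level: children of the rows found so far
        SELECT f.id, f.firstname, f.lastname
        FROM   intermediate_table i
        JOIN   family_tree f ON f.father_id = i.id
    )
    SELECT firstname FROM intermediate_table ORDER BY id
""").fetchall()

print([row[0] for row in descendants])
```

Karl, his two children and his grandchild are found; Otto Schmidt is not part of the tree and stays out of the result.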

Insert

--

-- fixed list of values/rows

INSERT INTO t1 (id, col_1, col_2) VALUES (6, 46, 'abc');

INSERT INTO t1 (id, col_1, col_2) VALUES (7, 47, 'abc7'),

(8, 48, 'abc8'),

(9, 49, 'abc9');

COMMIT;

-- subselect: leads to 0, 1 or more new rows

INSERT INTO t1 (id, col_1, col_2)

SELECT id, col_x, col_y

FROM t2

WHERE col_y > 100;

COMMIT;

-- dynamic values

INSERT INTO t1 (id, col_1, col_2) VALUES (16, CURRENT_DATE, 'abc');

COMMIT;

INSERT INTO t1 (id, col_1, col_2)

SELECT id,

CASE

WHEN col_x < 40 THEN col_x + 10

ELSE col_x + 5

END,

col_y

FROM t2

WHERE col_y > 100;

COMMIT;

Update

--

-- basic syntax

UPDATE t1

SET col_1 = 'Jimmy Walker',

col_2 = 4711

WHERE id = 5;

-- raise value of col_2 by factor 2; no WHERE ==> all rows!

UPDATE t1 SET col_2 = col_2 * 2;

-- non-correlated subquery leads to one single evaluation of the subquery

UPDATE t1 SET col_2 = (SELECT max(id) FROM t1);

-- correlated subquery leads to one evaluation of subquery for EVERY affected row of outer query

UPDATE t1 SET col_2 = (SELECT col_2 FROM t2 WHERE t1.id = t2.id);

-- Subquery in WHERE clause

UPDATE article

SET col_1 = 'topseller'

WHERE id IN

(SELECT article_id

FROM sales

GROUP BY article_id

HAVING COUNT(*) > 1000

);
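One consequence of the correlated subquery shown above deserves a closer look: as the outer UPDATE has no WHERE clause, rows of t1 without a counterpart in t2 are updated too, and they receive the NULL value. The sketch assumes Python's built-in sqlite3 module, an in-memory SQLite database and invented rows.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE t1 (id INTEGER PRIMARY KEY, col_2 INTEGER)")
con.execute("CREATE TABLE t2 (id INTEGER PRIMARY KEY, col_2 INTEGER)")
con.executemany("INSERT INTO t1 (id, col_2) VALUES (?, ?)", [(1, 10), (2, 20), (3, 30)])
con.executemany("INSERT INTO t2 (id, col_2) VALUES (?, ?)", [(1, 100), (2, 200)])  # no row with id 3

# The subquery is evaluated once for each of the three rows of t1.
con.execute("UPDATE t1 SET col_2 = (SELECT col_2 FROM t2 WHERE t1.id = t2.id)")
unrestricted = con.execute("SELECT id, col_2 FROM t1 ORDER BY id").fetchall()

print(unrestricted)  # the row with id 3 now holds NULL (None in Python)
```

If such rows shall keep their old value, the outer command needs its own restriction, for example WHERE id IN (SELECT id FROM t2).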

Merge

--

-- INSERT / UPDATE depending on any criterion, in this case: the two columns 'id'

MERGE INTO hobby_shadow t -- the target table

USING (SELECT id, hobbyname, remark

FROM hobby

WHERE id < 8) s -- the source

ON (t.id = s.id) -- the 'match criterion'

WHEN MATCHED THEN

UPDATE SET remark = concat(s.remark, ' Merge / Update')

WHEN NOT MATCHED THEN

INSERT (id, hobbyname, remark) VALUES (s.id, s.hobbyname, concat(s.remark, ' Merge / Insert'))

;

-- Independent from the number of affected rows there is only ONE round trip between client and DBMS

Delete

--

-- Basic syntax

DELETE FROM t1 WHERE id = 5; -- no column name behind 'DELETE' key word because the complete row will be deleted

-- no hit is OK

DELETE FROM t1 WHERE id != id;

-- subquery

DELETE FROM person_hobby

WHERE person_id IN

(SELECT id

FROM person

WHERE lastname = 'Goldstein'

);

Truncate

--

-- TRUNCATE deletes ALL rows (WHERE clause is not possible). The table structure remains.

-- No trigger actions will be fired. Foreign Keys are considered. Much faster than DELETE.

TRUNCATE TABLE t1;

Create a simple Table

More than a Spreadsheet

Let's start with a simple example. Suppose we want to collect information about people - their name, place of birth and some more items. In the beginning we might consider collecting this data in a simple spreadsheet. But what if we grow to a successful company and have to handle millions of those data items? Could a spreadsheet deal with this huge amount of information? Could several employees or programs simultaneously insert new data, delete or change it? Of course not. And this is one of the noteworthy advantages of a Database Management System (DBMS) over a spreadsheet program: we can imagine the structure of a table as a simple spreadsheet - but the access to it is internally organized in a way that huge amounts of data can be accessed by a lot of users at the same time.

In summary, it can be said that one can imagine a table as a spreadsheet optimized for bulk data and concurrent access.

Conceive the Structure

To keep control and to ensure good performance, tables are subject to a few strict rules. Every table column has a fixed name, and the values of each column must be of the same data type. Furthermore, it is highly recommended - though not compulsory - that each row can be identified by a unique value. The column in which this identifying value resides is called the Primary Key. In this Wikibook, we always name it id. But everybody is free to choose a different name. Furthermore, we may use the concatenation of more than one column as the Primary Key.

At the beginning we have to decide the following questions:

- What data about persons (in this first example) do we want to save? Of course, there is a lot of information about persons (e.g., eye color, zodiacal sign, ...), but every application needs only some of them. We have to decide which ones are of interest in our concrete context.

- What names do we assign to the selected data? Each identified datum goes into a column of the table, which needs to have a name.

- Of what type are the data? All data values within one column must be of the same kind. We cannot put an arbitrary string into a column of data type DATE.

In our example, we decide to save the first name, last name, date and place of birth, social security number, and the person's weight. Obviously, the date of birth is of data type DATE, the weight is a number, and all others are some kind of strings. For strings, there is a distinction between those that have a fixed length and those in which the length usually varies greatly from row to row. The former is named CHAR(<n>), where <n> is the fixed length, and the others VARCHAR(<n>), where <n> is the maximum length.

Fasten Decisions

The decisions previously taken must be expressed in a machine-understandable language. This language is SQL, which acts as the interface between end-users - or special programs - and the DBMS.

-- comment lines start with two consecutive minus signs '--'

CREATE TABLE person (

-- define columns (name / type / default value / nullable)

id DECIMAL NOT NULL,

firstname VARCHAR(50) NOT NULL,

lastname VARCHAR(50) NOT NULL,

date_of_birth DATE,

place_of_birth VARCHAR(50),

ssn CHAR(11),

weight DECIMAL DEFAULT 0 NOT NULL,

-- select one of the defined columns as the Primary Key and

-- guess a meaningful name for the Primary Key constraint: 'person_pk' may be a good choice

CONSTRAINT person_pk PRIMARY KEY (id)

);

We choose person as the name of the table, which consists of seven columns. The id column is assigned the role of the Primary Key. We can store exclusively digits in the columns id and weight, strings of a length up to 50 characters in firstname, lastname and place_of_birth, dates in date_of_birth and a string of exactly eleven characters in ssn. The phrase NOT NULL is part of the definition of id, firstname, lastname and weight. This means that in every row, there must be a value for those four columns. Storing no value in any of those columns is not possible - but the 8-character-string 'no value' or the digit '0' are allowed because they are values. Or to say it the other way round: it is possible to omit the values of date_of_birth, place_of_birth and ssn.

The definition of a Primary Key is called a 'constraint' (later on, we will get to know more kinds of constraints). Every constraint should have a name - it's person_pk in this example.
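The effect of NOT NULL and DEFAULT can be verified with two INSERT commands. The following is only a sketch: it assumes Python's built-in sqlite3 module and an in-memory SQLite database as the concrete implementation. Other systems report the violation with different error messages.

```python
import sqlite3

# Assumption: SQLite (in-memory) serves as the concrete implementation.
con = sqlite3.connect(":memory:")
con.execute("""
    CREATE TABLE person (
        id             DECIMAL           NOT NULL,
        firstname      VARCHAR(50)       NOT NULL,
        lastname       VARCHAR(50)       NOT NULL,
        date_of_birth  DATE,
        place_of_birth VARCHAR(50),
        ssn            CHAR(11),
        weight         DECIMAL DEFAULT 0 NOT NULL,
        CONSTRAINT person_pk PRIMARY KEY (id)
    )
""")

# Omitting the nullable columns and 'weight' is allowed; weight receives its default.
con.execute("INSERT INTO person (id, firstname, lastname) VALUES (1, 'Larry', 'Goldstein')")
row = con.execute("SELECT date_of_birth, ssn, weight FROM person WHERE id = 1").fetchone()
print(row)

# Omitting a NOT NULL column without a default value (here: lastname) is rejected.
try:
    con.execute("INSERT INTO person (id, firstname) VALUES (2, 'Tom')")
    rejected = False
except sqlite3.IntegrityError as err:
    rejected = True
    print("rejected:", err)
```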

The Result

After execution of the above 'CREATE TABLE' command, the DBMS has created an object that one can imagine similar to the following Wiki-table:

id firstname lastname date_of_birth place_of_birth ssn weight

This Wiki-table shows 4 lines. The first line represents the names of the columns - not values! The following 3 lines are for demonstration purposes only. But in the database table, there is currently no single row! It is completely empty, no rows at all, no values at all! The only thing that exists in the database is the structure of the table.

Back to Start

Maybe we want to delete the table one day. To do so, we can use the DROP command. It removes the table totally: all data and the complete structure are thrown away.

DROP TABLE person;

Don't confuse the DROP command with the DELETE command, which we present on the next page. The DELETE command removes only rows - possibly all of them. However, the table itself, which holds the definition of the structure, is retained.
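The difference between the two commands can be made visible in a few lines. This is a sketch which assumes Python's built-in sqlite3 module and an in-memory SQLite database; the person table is shortened to two columns for brevity.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("""
    CREATE TABLE person (
        id       DECIMAL     NOT NULL,
        lastname VARCHAR(50) NOT NULL,
        CONSTRAINT person_pk PRIMARY KEY (id)
    )
""")
con.execute("INSERT INTO person (id, lastname) VALUES (1, 'Goldstein')")

# DELETE removes the rows; the empty table is still there and can be queried.
con.execute("DELETE FROM person")
rows_after_delete = con.execute("SELECT COUNT(*) FROM person").fetchone()[0]

# DROP removes the table itself; a later SELECT fails.
con.execute("DROP TABLE person")
try:
    con.execute("SELECT COUNT(*) FROM person")
    table_exists = True
except sqlite3.OperationalError:
    table_exists = False

print(rows_after_delete, table_exists)
```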

Handle Data

As shown on the previous page, we now have an empty table named person. What can we do with such a table? Just use it like a bag! Store things in it, look into it to check the existence of things, modify things in it or throw things out of it. These are the four essential operations which concern data in tables:

- INSERT: put some data into the table

- SELECT: retrieve data from the table

- UPDATE: modify data, which exists in the table

- DELETE: remove data from the table.

For each of these four operations, there is a SQL command. It starts with a keyword and runs up to a terminating semicolon. This rule applies to all SQL commands: They are introduced by a keyword and terminated by a semicolon. In the middle, there may be more keywords as well as object names and values.

Store new Data with INSERT Command

When storing new data in rows of a table, we must name all affected objects and values: the table name (there may be a lot of tables within the database), the column names and the values. All this is embedded within some keywords so that the SQL compiler can recognize the tokens and their meaning. In general, the syntax for a simple INSERT is

INSERT INTO <tablename> (<list_of_columnnames>)

VALUES (<list_of_values>);

Here is an example

-- put one row

INSERT INTO person (id, firstname, lastname, date_of_birth, place_of_birth, ssn, weight)

VALUES (1, 'Larry', 'Goldstein', date'1970-11-20', 'Dallas', '078-05-1120', 95);

-- confirm the INSERT command

COMMIT;

When the DBMS recognizes the keywords INSERT INTO and VALUES, it knows what to do: it creates a new row in the table and puts the given values into the named columns. In the above example, the command is followed by a second one: COMMIT confirms the INSERT operation as well as the other writing operations UPDATE and DELETE. (We will learn much more about COMMIT and its counterpart ROLLBACK in a later chapter.)

- A short comment about the format of the value for date_of_birth: There is no unique format for dates honored all over the world. People use different formats depending on their cultural habits. For our purpose, we decide to represent dates in the hierarchical format defined in ISO 8601. It is possible that your local database installation uses a different format so that you are forced to either modify our examples or to modify the default date format of your database installation.

Now we will put some more rows into our table. To do so, we use a variation of the above syntax. It is possible to omit the list of column names if the list of values correlates precisely with the number, order, and data type of the columns used in the original CREATE TABLE statement.

- Hint: The practice of omitting the list of column names is not recommended for real applications! Table structures change over time, e.g. someone may add new columns to the table. In this case, unexpected side effects may occur in applications.

-- put four rows

INSERT INTO person VALUES (2, 'Tom', 'Burton', date'1980-01-22', 'Birmingham', '078-05-1121', 75);

INSERT INTO person VALUES (3, 'Lisa', 'Hamilton', date'1975-12-30', 'Mumbai', '078-05-1122', 56);

INSERT INTO person VALUES (4, 'Debora', 'Patterson', date'2011-06-01', 'Shanghai', '078-05-1123', 11);

INSERT INTO person VALUES (5, 'James', 'de Winter', date'1975-12-23', 'San Francisco', '078-05-1124', 75);

COMMIT;
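The hint about omitting the list of column names can be illustrated by simulating a later change of the table structure. The sketch assumes Python's built-in sqlite3 module and an in-memory SQLite database; the shortened person table and the column nickname are invented for this illustration. Other systems may react differently, but the risk is the same.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE person (id DECIMAL NOT NULL, firstname VARCHAR(50), lastname VARCHAR(50))")

# Works as long as the value list matches the table definition exactly.
con.execute("INSERT INTO person VALUES (1, 'Larry', 'Goldstein')")

# Later on, someone extends the table (hypothetical column).
con.execute("ALTER TABLE person ADD COLUMN nickname VARCHAR(50)")

# The unchanged command without column names now fails ...
try:
    con.execute("INSERT INTO person VALUES (2, 'Tom', 'Burton')")
    short_form_ok = True
except sqlite3.OperationalError as err:
    short_form_ok = False
    print("failed:", err)

# ... whereas the command with an explicit column list keeps working.
con.execute("INSERT INTO person (id, firstname, lastname) VALUES (2, 'Tom', 'Burton')")
row_count = con.execute("SELECT COUNT(*) FROM person").fetchone()[0]
print(short_form_ok, row_count)
```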

Retrieve Data with SELECT Command

Now our table should contain five rows. Can we be sure about that? How can we check whether everything worked well and the rows and values really exist? To do so, we need a command which shows us the actual content of the table. It is the SELECT command with the following general syntax

SELECT <list_of_columnnames>

FROM <tablename>

WHERE <search_condition>

ORDER BY <order_by_clause>;

As with the INSERT command, you may omit some parts. The simplest example is

SELECT *

FROM person;

The asterisk character '*' indicates 'all columns'. In the result, the DBMS should deliver all five rows, each with the seven values we used previously with the INSERT command.

In the following examples, we add the currently missing clauses of the general syntax - one after the other.

Add a list of some or all columnnames

SELECT firstname, lastname

FROM person;

The DBMS should deliver the two columns firstname and lastname of all five rows.

Add a search condition

SELECT id, firstname, lastname

FROM person

WHERE id > 2;

The DBMS should deliver the three columns id, firstname and lastname of three rows.

Add a sort instruction

SELECT id, firstname, lastname, date_of_birth

FROM person

WHERE id > 2

ORDER BY date_of_birth;

The DBMS should deliver the four columns id, firstname, lastname and date_of_birth of three rows in the ascending order of date_of_birth.
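The expected result of the last query can be checked as follows. This is a sketch under assumptions: Python's built-in sqlite3 module, an in-memory SQLite database and a person table reduced to four columns. The dates are passed as ISO 8601 strings because SQLite does not know the date'...' literal; such strings sort in chronological order.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("""
    CREATE TABLE person (
        id            DECIMAL     NOT NULL,
        firstname     VARCHAR(50) NOT NULL,
        lastname      VARCHAR(50) NOT NULL,
        date_of_birth DATE,
        CONSTRAINT person_pk PRIMARY KEY (id)
    )
""")
con.executemany(
    "INSERT INTO person (id, firstname, lastname, date_of_birth) VALUES (?, ?, ?, ?)",
    [(1, "Larry", "Goldstein", "1970-11-20"),
     (2, "Tom", "Burton", "1980-01-22"),
     (3, "Lisa", "Hamilton", "1975-12-30"),
     (4, "Debora", "Patterson", "2011-06-01"),
     (5, "James", "de Winter", "1975-12-23")],
)

result = con.execute("""
    SELECT id, firstname, lastname, date_of_birth
    FROM   person
    WHERE  id > 2
    ORDER BY date_of_birth
""").fetchall()

for row in result:
    print(row)
```

Three rows are delivered, starting with the oldest person among them.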

Modify Data with UPDATE Command

If we want to change the values of some columns in some rows we can do so by using the UPDATE command. The general syntax for a simple UPDATE is:

UPDATE <tablename>

SET <columnname> = <value>,

<columnname> = <value>,

...

WHERE <search_condition>;

Values are assigned to the named columns. Unmentioned columns remain unchanged. The search_condition acts in the same way as in the SELECT command. It restricts the coverage of the command to the rows that satisfy the criteria. If the WHERE keyword and the search_condition are omitted, all rows of the table are affected. It is possible to specify search_conditions that hit no rows. In this case, no rows are updated - and no error or exception occurs.
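How many rows an UPDATE command actually hits can be observed from a client program. The sketch below assumes Python's built-in sqlite3 module, whose cursor attribute rowcount reports the number of affected rows, an in-memory SQLite database and three invented rows.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE person (id DECIMAL NOT NULL, firstname VARCHAR(50) NOT NULL)")
con.executemany("INSERT INTO person (id, firstname) VALUES (?, ?)",
                [(1, "Larry"), (2, "Tom"), (3, "Lisa")])

# The search condition hits no row: nothing happens, and no exception is raised.
no_hit = con.execute("UPDATE person SET firstname = 'Nobody' WHERE id = 99").rowcount

# The search condition hits exactly one row.
one_hit = con.execute("UPDATE person SET firstname = 'Lawrence' WHERE id = 1").rowcount

# No WHERE clause: all rows of the table are affected.
all_rows = con.execute("UPDATE person SET firstname = 'Unknown'").rowcount

print(no_hit, one_hit, all_rows)
```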

Change one column of one row

UPDATE person

SET firstname = 'James Walker'

WHERE id = 5;

COMMIT;

The first name of Mr. de Winter changes to James Walker, whereas all his other values remain unchanged. Also, all other rows remain unchanged. Please verify this with a SELECT command.

Change one column of multiple rows

UPDATE person

SET firstname = 'Unknown'

WHERE date_of_birth < date'2000-01-01';

COMMIT;

The <search_condition> isn't restricted to the Primary Key column. We can specify any other column. And the comparison operator isn't restricted to the equal sign. We can use different operators - they solely have to match the data type of the column.

In this example, we change the firstname of four rows with a single command. If there is a table with millions of rows we can change all of them using one single command.

Change two columns of one row

-- Please note the additional comma

UPDATE person

SET firstname = 'Jimmy Walker',

lastname = 'de la Crux'

WHERE id = 5;

COMMIT;

The two values are changed with one single command.

Remove data with DELETE Command

The DELETE command removes complete rows from the table. As the rows are removed as a whole, there is no need to specify any columnname. The semantics of the <search_condition> is the same as with SELECT and UPDATE.

DELETE

FROM <tablename>

WHERE <search_condition>;

Delete one row

DELETE

FROM person

WHERE id = 5;

COMMIT;

The row with id 5 (originally James de Winter) is removed from the table.

Delete many rows

DELETE

FROM person;

COMMIT;

All remaining rows are deleted as we have omitted the <search_condition>. The table is empty, but it still exists.

No rows affected

DELETE

FROM person

WHERE id = 99;

COMMIT;

This command will remove no row as there is no row with id equal to 99. But the syntax and the execution within the DBMS are still perfect. No exception is thrown. The command terminates without any error message or error code.

Summary

The INSERT and DELETE commands affect rows in their entirety. INSERT puts a complete new row into a table (unmentioned columns receive their default value or remain empty), and DELETE removes entire rows. In contrast, SELECT and UPDATE affect only those columns that are mentioned in the command; unmentioned columns are unaffected.

The INSERT command (in the simple version of this page) has no <search_condition> and therefore handles exactly one row. The three other commands may affect zero, one, or more rows depending on the evaluation of their <search_condition>.

Example Database Structure

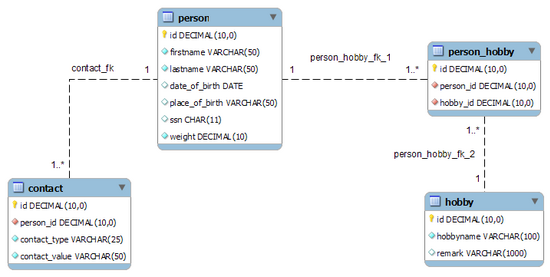

First of all, a database is a collection of data. These data are organized in tables as shown in the example person. In addition, there are many other kinds of objects in the DBMS: views, functions, procedures, indices, rights and many others. Initially we focus on tables and present four of them. They serve as the foundation for our Wikibook. Other kinds of objects will be presented later.

We try to keep everything as simple as possible. Nevertheless, this minimalistic set of four tables demonstrates a 1:n as well as a n:m relationship.

person

The person table holds information about fictitious persons; see: Create a simple Table.

-- comment lines start with two consecutive minus signs '--'

CREATE TABLE person (

-- define columns (name / type / default value / nullable)

id DECIMAL NOT NULL,

firstname VARCHAR(50) NOT NULL,

lastname VARCHAR(50) NOT NULL,

date_of_birth DATE,

place_of_birth VARCHAR(50),

ssn CHAR(11),

weight DECIMAL DEFAULT 0 NOT NULL,

-- select one of the defined columns as the Primary Key and

-- guess a meaningful name for the Primary Key constraint: 'person_pk' may be a good choice

CONSTRAINT person_pk PRIMARY KEY (id)

);

contact

The contact table holds information about the contact data of some persons. One could consider storing this contact information in additional columns of the person table: one column for email, one for icq, and so on. We decided against it for some serious reasons.

- Missing values: Many people lack most of those contact values, or we don't know them. As a result, the table would look like a sparse matrix.

- Multiplicities: Other people have more than one email address or multiple phone numbers. Shall we define a lot of columns email_1, email_2, ... ? What is the upper limit? Standard SQL does not offer something like an 'array of values' for columns (some implementations do).

- Future Extensions: Someday, there will be one or more contact types that are unknown today. Then we have to modify the table.

We can deal with all these situations in an uncomplicated way, when the contact data goes to its own table. The only special thing is bringing persons together with their contact data. This task will be managed by the column person_id of table contact. It holds the same value as the Primary Key of the allocated person.

In general terms, we have one information unit (person) to which potentially multiple information units of the same type (contact) belong. We call this togetherness a relationship - in this case a 1:m relationship (also known as a one-to-many relationship). Whenever we encounter such a situation, we store the values that may occur more than once in a separate table together with the id of the first table.

CREATE TABLE contact (

-- define columns (name / type / default value / nullable)

id DECIMAL NOT NULL,

person_id DECIMAL NOT NULL,

-- use a default value, if contact_type is omitted

contact_type VARCHAR(25) DEFAULT 'email' NOT NULL,

contact_value VARCHAR(50) NOT NULL,

-- select one of the defined columns as the Primary Key

CONSTRAINT contact_pk PRIMARY KEY (id),

-- define Foreign Key relation between column person_id and column id of table person

CONSTRAINT contact_fk FOREIGN KEY (person_id) REFERENCES person(id),

-- more constraint(s)

CONSTRAINT contact_check CHECK (contact_type IN ('fixed line', 'mobile', 'email', 'icq', 'skype'))

);
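How the constraints of the contact table guard the 1:m relationship can be tried out with a few INSERT commands. This is a sketch with assumptions: Python's built-in sqlite3 module, an in-memory SQLite database (which checks Foreign Keys only after PRAGMA foreign_keys = ON), a person table shortened to two columns, and invented contact values.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("PRAGMA foreign_keys = ON")  # SQLite enforces Foreign Keys only on request
con.execute("""
    CREATE TABLE person (
        id       DECIMAL     NOT NULL,
        lastname VARCHAR(50) NOT NULL,
        CONSTRAINT person_pk PRIMARY KEY (id)
    )
""")
con.execute("""
    CREATE TABLE contact (
        id            DECIMAL     NOT NULL,
        person_id     DECIMAL     NOT NULL,
        contact_type  VARCHAR(25) DEFAULT 'email' NOT NULL,
        contact_value VARCHAR(50) NOT NULL,
        CONSTRAINT contact_pk    PRIMARY KEY (id),
        CONSTRAINT contact_fk    FOREIGN KEY (person_id) REFERENCES person(id),
        CONSTRAINT contact_check CHECK (contact_type IN ('fixed line', 'mobile', 'email', 'icq', 'skype'))
    )
""")
con.execute("INSERT INTO person (id, lastname) VALUES (1, 'Goldstein')")

# Two contacts for the same person: this is the 1:m relationship.
con.execute("INSERT INTO contact (id, person_id, contact_value) VALUES (1, 1, 'larry@example.org')")
con.execute("INSERT INTO contact (id, person_id, contact_type, contact_value) VALUES (2, 1, 'mobile', '555-0100')")

def is_rejected(statement):
    """Return True if the DBMS refuses the statement because of a constraint."""
    try:
        con.execute(statement)
        return False
    except sqlite3.IntegrityError:
        return True

# There is no person with id 99: the Foreign Key constraint refuses the row.
fk_violation = is_rejected(
    "INSERT INTO contact (id, person_id, contact_value) VALUES (3, 99, 'x@example.org')")
# 'fax' is not in the list of allowed contact types: the CHECK constraint refuses the row.
check_violation = is_rejected(
    "INSERT INTO contact (id, person_id, contact_type, contact_value) VALUES (4, 1, 'fax', '555-0101')")

types = [row[0] for row in con.execute("SELECT contact_type FROM contact WHERE person_id = 1 ORDER BY id")]
print(fk_violation, check_violation, types)
```

The first contact was stored without a contact_type and therefore received the default value 'email'.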

hobby

People usually pursue one or more hobbies. Concerning multiplicity, we have the same problems as before with contact. So we need a separate table for hobbies.

CREATE TABLE hobby (

-- define columns (name / type / default value / nullable)

id DECIMAL NOT NULL,

hobbyname VARCHAR(100) NOT NULL,

remark VARCHAR(1000),

-- select one of the defined columns as the Primary Key

CONSTRAINT hobby_pk PRIMARY KEY (id),

-- forbid duplicate recording of a hobby

CONSTRAINT hobby_unique UNIQUE (hobbyname)

);

You may have noticed that there is no column for the corresponding person. Why is that? With hobbies, we have an additional problem: It's not just that one person pursues multiple hobbies. At the same time, multiple persons pursue the same hobby.

We call this kind of togetherness an n:m relationship. It can be designed by creating a third table between the two original tables. The third table holds the ids of the first and second table. So one can decide which person pursues which hobby. In our example, this 'table-in-the-middle' is person_hobby and will be defined next.

person_hobby

CREATE TABLE person_hobby (

-- define columns (name / type / default value / nullable)

id DECIMAL NOT NULL,

person_id DECIMAL NOT NULL,

hobby_id DECIMAL NOT NULL,

-- Also this table has its own Primary Key!

CONSTRAINT person_hobby_pk PRIMARY KEY (id),

-- define Foreign Key relation between column person_id and column id of table person

CONSTRAINT person_hobby_fk_1 FOREIGN KEY (person_id) REFERENCES person(id),

-- define Foreign Key relation between column hobby_id and column id of table hobby

CONSTRAINT person_hobby_fk_2 FOREIGN KEY (hobby_id) REFERENCES hobby(id)

);

Every row of the table holds one id from person and one from hobby. This is the technique by which the information about persons and hobbies is joined together.
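To see the 'table-in-the-middle' at work, we can resolve the n:m relationship with two joins. The sketch assumes Python's built-in sqlite3 module and an in-memory SQLite database; the tables are shortened, and the persons, hobbies and their links are invented sample data.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
    CREATE TABLE person (
        id        DECIMAL     NOT NULL,
        firstname VARCHAR(50) NOT NULL,
        CONSTRAINT person_pk PRIMARY KEY (id)
    );
    CREATE TABLE hobby (
        id        DECIMAL      NOT NULL,
        hobbyname VARCHAR(100) NOT NULL,
        CONSTRAINT hobby_pk     PRIMARY KEY (id),
        CONSTRAINT hobby_unique UNIQUE (hobbyname)
    );
    CREATE TABLE person_hobby (
        id        DECIMAL NOT NULL,
        person_id DECIMAL NOT NULL,
        hobby_id  DECIMAL NOT NULL,
        CONSTRAINT person_hobby_pk   PRIMARY KEY (id),
        CONSTRAINT person_hobby_fk_1 FOREIGN KEY (person_id) REFERENCES person(id),
        CONSTRAINT person_hobby_fk_2 FOREIGN KEY (hobby_id)  REFERENCES hobby(id)
    );
    INSERT INTO person (id, firstname) VALUES (1, 'Larry');
    INSERT INTO person (id, firstname) VALUES (2, 'Tom');
    INSERT INTO hobby (id, hobbyname) VALUES (1, 'Painting');
    INSERT INTO hobby (id, hobbyname) VALUES (2, 'Fishing');
    -- Larry pursues both hobbies, Tom only fishing
    INSERT INTO person_hobby (id, person_id, hobby_id) VALUES (1, 1, 1);
    INSERT INTO person_hobby (id, person_id, hobby_id) VALUES (2, 1, 2);
    INSERT INTO person_hobby (id, person_id, hobby_id) VALUES (3, 2, 2);
""")

pairs = con.execute("""
    SELECT p.firstname, h.hobbyname
    FROM   person p
    JOIN   person_hobby ph ON ph.person_id = p.id
    JOIN   hobby h         ON h.id = ph.hobby_id
    ORDER BY p.firstname, h.hobbyname
""").fetchall()

print(pairs)
```

Each hobby is stored only once in hobby, although 'Fishing' shows up for two persons in the result.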

Visualisation of the Structure

After execution of the above commands, your database should contain four tables (without any data). The tables and their relationship to each other may be visualized in a so-called Entity Relationship Diagram. On the left side there is the 1:n relationship between person and contact and on the right side the n:m relationship between person and hobby with its 'table-in-the-middle' person_hobby.

Example Database Data

An rDBMS offers different ways to put data into its storage: from CSV files, Excel files, or product-specific binary files, via several APIs, through special gateways to other databases or database systems, and by some other techniques. So there is a wide range of non-standardized possibilities to bring data into our system. Because we are speaking about SQL, we use the standardized INSERT command to do the job. It is available on all systems.

We use only a small amount of data because we want to keep things simple. Sometimes one needs a high number of rows to do performance tests. For this purpose, we show a special INSERT command at the end of this page, which exponentially inflates your table.

person

--

-- After we have done a lot of tests we may want to reset the data to its original version.

-- To do so, use the DELETE command. But be aware of Foreign Keys: you may be forced to delete

-- persons at the very end - with DELETE it's just the opposite sequence of tables in comparison to INSERTs.

-- Be careful and don't confuse DELETE with DROP !!

--

-- DELETE FROM person_hobby;

-- DELETE FROM hobby;

-- DELETE FROM contact;

-- DELETE FROM person;

-- COMMIT;

INSERT INTO person VALUES (1, 'Larry', 'Goldstein', DATE'1970-11-20', 'Dallas', '078-05-1120', 95);

INSERT INTO person VALUES (2, 'Tom', 'Burton', DATE'1977-01-22', 'Birmingham', '078-05-1121', 75);

INSERT INTO person VALUES (3, 'Lisa', 'Hamilton', DATE'1975-12-23', 'Richland', '078-05-1122', 56);

INSERT INTO person VALUES (4, 'Kim', 'Goldstein', DATE'2011-06-01', 'Shanghai', '078-05-1123', 11);

INSERT INTO person VALUES (5, 'James', 'de Winter', DATE'1975-12-23', 'San Francisco', '078-05-1124', 75);

INSERT INTO person VALUES (6, 'Elias', 'Baker', DATE'1939-10-03', 'San Francisco', '078-05-1125', 55);

INSERT INTO person VALUES (7, 'Yorgos', 'Stefanos', DATE'1975-12-23', 'Athens', '078-05-1126', 64);

INSERT INTO person VALUES (8, 'John', 'de Winter', DATE'1977-01-22', 'San Francisco', '078-05-1127', 77);

INSERT INTO person VALUES (9, 'Richie', 'Rich', DATE'1975-12-23', 'Richland', '078-05-1128', 90);

INSERT INTO person VALUES (10, 'Victor', 'de Winter', DATE'1979-02-28', 'San Francisco', '078-05-1129', 78);

COMMIT;

Please note that the format of DATEs may depend on your local environment. Furthermore, SQLite does not accept the DATE'...' literal used above; there, the string is converted with the date() function instead.

-- SQLite syntax

INSERT INTO person VALUES (1, 'Larry', 'Goldstein', DATE('1970-11-20'), 'Dallas', '078-05-1120', 95);

...

contact

-- DELETE FROM contact;

-- COMMIT;

INSERT INTO contact VALUES (1, 1, 'fixed line', '555-0100');

INSERT INTO contact VALUES (2, 1, 'email', 'larry.goldstein@acme.xx');

INSERT INTO contact VALUES (3, 1, 'email', 'lg@my_company.xx');

INSERT INTO contact VALUES (4, 1, 'icq', '12111');

INSERT INTO contact VALUES (5, 4, 'fixed line', '5550101');

INSERT INTO contact VALUES (6, 4, 'mobile', '10123444444');

INSERT INTO contact VALUES (7, 5, 'email', 'james.dewinter@acme.xx');

INSERT INTO contact VALUES (8, 7, 'fixed line', '+30000000000000');

INSERT INTO contact VALUES (9, 7, 'mobile', '+30695100000000');

COMMIT;

hobby

-- DELETE FROM hobby;

-- COMMIT;

INSERT INTO hobby VALUES (1, 'Painting',

'Applying paint, pigment, color or other medium to a surface.');

INSERT INTO hobby VALUES (2, 'Fishing',

'Catching fishes.');

INSERT INTO hobby VALUES (3, 'Underwater Diving',

'Going underwater with or without breathing apparatus (scuba diving / breath-holding).');

INSERT INTO hobby VALUES (4, 'Chess',

'Two players have 16 figures each. They move them on an eight-by-eight grid according to special rules.');

INSERT INTO hobby VALUES (5, 'Literature', 'Reading books.');

INSERT INTO hobby VALUES (6, 'Yoga',

'A physical, mental, and spiritual practices which originated in ancient India.');

INSERT INTO hobby VALUES (7, 'Stamp collecting',

'Collecting of post stamps and related objects.');

INSERT INTO hobby VALUES (8, 'Astronomy',

'Observing astronomical objects such as moons, planets, stars, nebulae, and galaxies.');

INSERT INTO hobby VALUES (9, 'Microscopy',

'Observing very small objects using a microscope.');

COMMIT;

person_hobby

-- DELETE FROM person_hobby;

-- COMMIT;

INSERT INTO person_hobby VALUES (1, 1, 1);

INSERT INTO person_hobby VALUES (2, 1, 4);

INSERT INTO person_hobby VALUES (3, 1, 5);

INSERT INTO person_hobby VALUES (4, 5, 2);

INSERT INTO person_hobby VALUES (5, 5, 3);

INSERT INTO person_hobby VALUES (6, 7, 8);

INSERT INTO person_hobby VALUES (7, 4, 4);

INSERT INTO person_hobby VALUES (8, 9, 8);

INSERT INTO person_hobby VALUES (9, 9, 9);

COMMIT;

Grow up

For realistic performance tests, we need a vast amount of data. The small number of rows in our example database does not meet this criterion. How can we generate test data and store it in a table? There are different possibilities: FOR loops in a procedure, (pseudo-) recursive calls, importing external data in a system-specific fashion, and some more.

Because we are dealing with SQL, we introduce an INSERT command that is portable across all rDBMS. Although it has a simple syntax, it is very powerful. With every execution, it doubles the number of rows. Suppose there is 1 row in a table. After the first execution, there will be a second row in the table. At first glance, this sounds boring. But after 10 executions there are more than a thousand rows, after 20 executions there are more than a million, and we suspect that only a few installations can execute it more than 30 times.

INSERT INTO person (id, firstname, lastname, weight)

SELECT id + (select max(id) from person), firstname, lastname, weight

FROM person;

COMMIT;

The command is an INSERT in combination with a (Sub-)SELECT. The SELECT retrieves all rows of the table because there is no WHERE clause. This is the reason for the doubling. The mandatory columns firstname and lastname remain unchanged. We ignore optional columns. Only the primary key id is computed. The new value is the sum of the old value and the highest id that existed when the command started.

Some more remarks:

- max(id) is determined only once per execution! This illustrates an essential aspect of rDBMS: At a conceptual level, the database has a particular state before the execution of a command and a new state after its execution. Commands are atomic operations moving the database from one state to another: they run entirely or not at all! Both the outer SELECT and the inner SELECT with max(id) act on the initial state. They never see the result or an intermediate result of the INSERT. Otherwise, the INSERT would never end.

- If we wish to observe the process of growing, we can add a column to the table to store max(id) with each iteration.

- The computation of the new id may be omitted if the DBMS supports AUTOINCREMENT columns.

- For performance tests, it may be helpful to store some random data in one or more columns.
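The doubling can be observed with a short sketch. It assumes Python's built-in sqlite3 module with an in-memory database as a test bed and a person table reduced to the four columns used by the command; the starting point is a single row, as in the thought experiment above.

```python
import sqlite3

con = sqlite3.connect(":memory:")
# Assumption: a simplified person table with only the columns the INSERT touches.
con.execute("""
    CREATE TABLE person (
        id        DECIMAL     NOT NULL,
        firstname VARCHAR(50) NOT NULL,
        lastname  VARCHAR(50) NOT NULL,
        weight    DECIMAL(5,2),
        CONSTRAINT person_pk PRIMARY KEY (id))
""")
con.execute("INSERT INTO person VALUES (1, 'Larry', 'Goldstein', 95)")
con.commit()

counts = []
for _ in range(10):
    # The same command as in the text; every execution doubles the table.
    con.execute("""
        INSERT INTO person (id, firstname, lastname, weight)
        SELECT id + (SELECT max(id) FROM person), firstname, lastname, weight
        FROM   person
    """)
    con.commit()
    counts.append(con.execute("SELECT count(*) FROM person").fetchone()[0])

highest_id = con.execute("SELECT max(id) FROM person").fetchone()[0]
print(counts)      # row count after each of the ten executions
print(highest_id)  # ids stay unique, so the highest id equals the row count
```

Because the primary key constraint is never violated, the run also confirms that max(id) is taken from the state before the INSERT: every new id lies above all ids that existed when the command started.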

SELECT: Fundamentals

The SELECT command retrieves data from one or more tables or views. It generally consists of the following language elements:

SELECT <things_to_be_displayed> -- the so called 'Projection' - mostly a list of columnnames

FROM <tablename> -- table or view names and their aliases

WHERE <where_clause> -- the so called 'Restriction' or 'search condition'

GROUP BY <group_by_clause>

HAVING <having_clause>

ORDER BY <order_by_clause>

OFFSET <offset_clause>

FETCH <fetch_first_or_next_clause>;

With the exception of the first two elements, all others are optional. The elements must appear in the order shown. At certain places within the command, a new SELECT command may start, and this nesting can be repeated recursively.

Projection (specify resulting columns)

In the projection part of the SELECT command, you specify a list of columns, operations working on columns, functions, fixed values, or new SELECT commands.

-- C/Java style comments are possible within SQL commands

SELECT id, /* the name of a column */

concat(firstname, lastname), /* the concat() function */

weight + 5, /* the add operation */

'kg' /* a value */

FROM person;

The DBMS will retrieve ten rows, each of which consists of four columns.

We can mix the sequence of columns in any order or retrieve them several times.

SELECT id, lastname, lastname, 'weighs', weight, 'kg'

FROM person;

The asterisk '*' is an abbreviation for the list of all columns.

SELECT * FROM person;

For numeric columns, we can apply the usual numeric operators +, -, * and /. There are also many predefined functions depending on the data type: power, sqrt, modulo, string functions, date functions.

Uniqueness via keyword DISTINCT

The keyword DISTINCT reduces the result to unique rows: all resulting rows that would be identical are collapsed into one row. In other words: duplicates are eliminated - just like in set theory.

-- retrieves ten rows

SELECT lastname

FROM person;

-- retrieves only seven rows. Duplicate values are thrown away.

SELECT DISTINCT lastname

FROM person;

-- Hint:

-- The keyword 'DISTINCT' refers to the entirety of the resulting rows, which you can imagine as

-- the concatenation of all columns. It follows directly after the SELECT keyword.

-- The following query leads to ten rows, although several persons share the same lastname.

SELECT DISTINCT lastname, firstname

FROM person;

-- again only seven rows

SELECT DISTINCT lastname, lastname

FROM person;
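The row counts claimed in the comments above can be checked with a small sketch. It assumes Python's built-in sqlite3 module and an in-memory database as a test bed; the person table is reduced to id, firstname and lastname, filled with the names from the example data.

```python
import sqlite3

con = sqlite3.connect(":memory:")
# Assumption: only the three columns relevant for the DISTINCT examples.
con.execute("CREATE TABLE person (id DECIMAL NOT NULL, firstname VARCHAR(50), lastname VARCHAR(50))")
people = [
    (1, "Larry", "Goldstein"), (2, "Tom", "Burton"), (3, "Lisa", "Hamilton"),
    (4, "Kim", "Goldstein"), (5, "James", "de Winter"), (6, "Elias", "Baker"),
    (7, "Yorgos", "Stefanos"), (8, "John", "de Winter"), (9, "Richie", "Rich"),
    (10, "Victor", "de Winter"),
]
con.executemany("INSERT INTO person VALUES (?, ?, ?)", people)

def row_count(query):
    """Run a query and return the number of rows it delivers."""
    return len(con.execute(query).fetchall())

plain    = row_count("SELECT lastname FROM person")
distinct = row_count("SELECT DISTINCT lastname FROM person")
pairs    = row_count("SELECT DISTINCT lastname, firstname FROM person")
doubled  = row_count("SELECT DISTINCT lastname, lastname FROM person")
print(plain, distinct, pairs, doubled)
```

The third query still delivers ten rows because no two persons share both lastname and firstname; DISTINCT compares whole rows, not single columns.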

Aliases for Column names

Sometimes we want to give resulting columns more descriptive names. We can do so by choosing an alias within the projection. This alias is the new name within the result set. GUIs show the alias as the column label.

-- The keyword 'AS' is optional

SELECT lastname AS family_name, weight AS weight_in_kg

FROM person;

Functions

There are predefined functions for use in projections (and at some other positions). The most frequently used are:

- count(<columnname>|'*'): Counts the number of resulting rows. With a column name, only rows where this column is not NULL are counted.

- max(<columnname>): The highest value in <columnname> of the result set. Also applicable to strings.

- min(<columnname>): The lowest value in <columnname> of the result set. Also applicable to strings.

- sum(<columnname>): The sum of all values in a numeric column.

- avg(<columnname>): The average of a numeric column.

- concat(<columnname_1>, <columnname_2>): The concatenation of two columns. Alternatively the function may be expressed by the '||' operator: <columnname_1> || <columnname_2>

Standard SQL and every DBMS offer many more functions.
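The functions listed above can be tried against the example data with the following sketch. It assumes Python's built-in sqlite3 module and an in-memory database as a test bed, with the person table reduced to four columns. The '||' operator is used for the concatenation because not every SQLite version provides concat().

```python
import sqlite3

con = sqlite3.connect(":memory:")
# Assumption: a simplified person table holding names and weights of the example data.
con.execute("CREATE TABLE person (id DECIMAL NOT NULL, firstname VARCHAR(50), "
            "lastname VARCHAR(50), weight DECIMAL(5,2))")
people = [
    (1, "Larry", "Goldstein", 95), (2, "Tom", "Burton", 75), (3, "Lisa", "Hamilton", 56),
    (4, "Kim", "Goldstein", 11), (5, "James", "de Winter", 75), (6, "Elias", "Baker", 55),
    (7, "Yorgos", "Stefanos", 64), (8, "John", "de Winter", 77), (9, "Richie", "Rich", 90),
    (10, "Victor", "de Winter", 78),
]
con.executemany("INSERT INTO person VALUES (?, ?, ?, ?)", people)

# Aggregate functions on a numeric column: one result row for the whole table.
n, heaviest, lightest, total, average = con.execute(
    "SELECT count(*), max(weight), min(weight), sum(weight), avg(weight) FROM person"
).fetchone()
print(n, heaviest, lightest, total, average)

# min() and max() on strings follow the collation of the column.
first_lastname, last_lastname = con.execute(
    "SELECT min(lastname), max(lastname) FROM person"
).fetchone()
print(first_lastname, last_lastname)

# Concatenation with the '||' operator.
full_name = con.execute(
    "SELECT firstname || ' ' || lastname FROM person WHERE id = 1"
).fetchone()[0]
print(full_name)
```

In this test bed, max(lastname) yields 'de Winter' because the default comparison is byte-wise and lowercase letters sort after uppercase ones; other systems or collations may order the names differently.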